metadata

language:

- zh

tags:

- chatglm

- pytorch

- zh

- Text2Text-Generation

license: apache-2.0

widget:

- text: |-

对下面中文拼写纠错:

少先队员因该为老人让坐。

答:

Chinese Spelling Correction LoRA Model

ChatGLM3-6B LoRA model for Chinese error correction

Example prediction from shibing624/chatglm3-6b-csc-chinese-lora on the CSC test set:

| prefix | input_text | target_text | pred |
|---|---|---|---|
| 对下面文本纠错: | 少先队员因该为老人让坐。 | 少先队员应该为老人让座。 | 少先队员应该为老人让座。 |

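On the CSC test set, the generated corrections are highly accurate. Because the model is built on ChatGLM3-6B, its output is often a pleasant surprise: beyond fixing errors, it can also polish and rewrite sentences.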
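The card does not say which metric stands behind the accuracy claim. As one possible way to check it on your own data, the sketch below computes sentence-level exact match between the target_text and pred columns. The function name exact_match_accuracy is hypothetical and is not part of pycorrector.

```python
from typing import Iterable, Tuple


def exact_match_accuracy(pairs: Iterable[Tuple[str, str]]) -> float:
    """Return the fraction of (target, pred) pairs that match exactly."""
    pairs = list(pairs)
    if not pairs:
        return 0.0
    hits = sum(1 for target, pred in pairs if target.strip() == pred.strip())
    return hits / len(pairs)


# The single row shown in the table above: (target_text, pred).
rows = [("少先队员应该为老人让座。", "少先队员应该为老人让座。")]
print(exact_match_accuracy(rows))  # 1.0
```

Since the model sometimes polishes or rewrites a sentence, exact match counts such outputs as misses even when they are acceptable, so treat the number as a lower bound.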

Usage

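This model is open-sourced as part of the pycorrector project (textgen), which supports both the native ChatGLM model and LoRA fine-tuned models. Call it as follows: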

Install package:

pip install -U pycorrector

from pycorrector.gpt.gpt_model import GptModel

model = GptModel("chatglm", "THUDM/chatglm3-6b", peft_name="shibing624/chatglm3-6b-csc-chinese-lora")

r = model.predict(["对下面文本纠错:\n少先队员因该为老人让坐。"])

print(r) # ['少先队员应该为老人让座。']
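The prompt passed to predict is the instruction prefix, a newline, and the sentence to correct. A small helper for building such prompts in bulk might look like the sketch below; build_prompts and DEFAULT_PREFIX are hypothetical names introduced here for illustration, not pycorrector APIs.

```python
DEFAULT_PREFIX = "对下面文本纠错:"  # prefix used in the pycorrector example above


def build_prompts(sentences, prefix=DEFAULT_PREFIX):
    """Join the instruction prefix and each sentence with a newline."""
    return [f"{prefix}\n{s.strip()}" for s in sentences]


prompts = build_prompts(["少先队员因该为老人让坐。"])
print(prompts)
# With the model loaded as shown above (needs the weights and a GPU):
# r = model.predict(prompts)
```

The prefix is a parameter because this card uses two wordings, 对下面文本纠错: and 对下面中文拼写纠错:, and you may want to compare them.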

Usage (HuggingFace Transformers)

Without pycorrector, you can use the model like this:

Load the base model, apply the LoRA adapter, then pass each prompt to the model to get the corrected sentence.

Install package:

pip install transformers peft

from peft import PeftModel

from transformers import AutoModel, AutoTokenizer

model = AutoModel.from_pretrained("THUDM/chatglm3-6b", trust_remote_code=True, device_map='auto')

model = PeftModel.from_pretrained(model, "shibing624/chatglm3-6b-csc-chinese-lora")

model = model.half().cuda() # fp16

tokenizer = AutoTokenizer.from_pretrained("THUDM/chatglm3-6b", trust_remote_code=True)

sents = ['对下面中文拼写纠错:\n少先队员因该为老人让坐。',

'对下面中文拼写纠错:\n下个星期,我跟我朋唷打算去法国玩儿。']

for s in sents:

    # model.chat returns (response, history); keep only the response text

    response, _ = model.chat(tokenizer, s, max_length=128, eos_token_id=tokenizer.eos_token_id)

    print(response)

output:

少先队员应该为老人让座。

下个星期,我跟我朋友打算去法国玩儿。
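The model returns only the corrected sentence, not the positions of the errors. If you need those, one option is to diff the input against the output. The sketch below uses Python's standard difflib; list_edits is a hypothetical helper, not something provided by the model or by pycorrector.

```python
from difflib import SequenceMatcher


def list_edits(source: str, corrected: str):
    """Return (position, wrong, right) for every span that differs."""
    edits = []
    matcher = SequenceMatcher(None, source, corrected, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            edits.append((i1, source[i1:i2], corrected[j1:j2]))
    return edits


print(list_edits("少先队员因该为老人让坐。", "少先队员应该为老人让座。"))
# [(4, '因', '应'), (10, '坐', '座')]
```

Positions are character offsets into the input sentence. When the model rewrites a sentence instead of making character substitutions, the diff will contain longer spans, insertions, or deletions.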

Model files:

chatglm3-6b-csc-chinese-lora

├── adapter_config.json

└── adapter_model.bin
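If you download the adapter to a local directory, a quick check that both files listed above are present can save a confusing load error later. This is a minimal sketch; missing_adapter_files is a hypothetical helper and the directory path is whatever you downloaded to.

```python
from pathlib import Path

# File names taken from the tree above.
EXPECTED_FILES = ("adapter_config.json", "adapter_model.bin")


def missing_adapter_files(adapter_dir):
    """Return the expected adapter files that are absent from adapter_dir."""
    root = Path(adapter_dir)
    return [name for name in EXPECTED_FILES if not (root / name).is_file()]
```

An empty list means both files were found.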

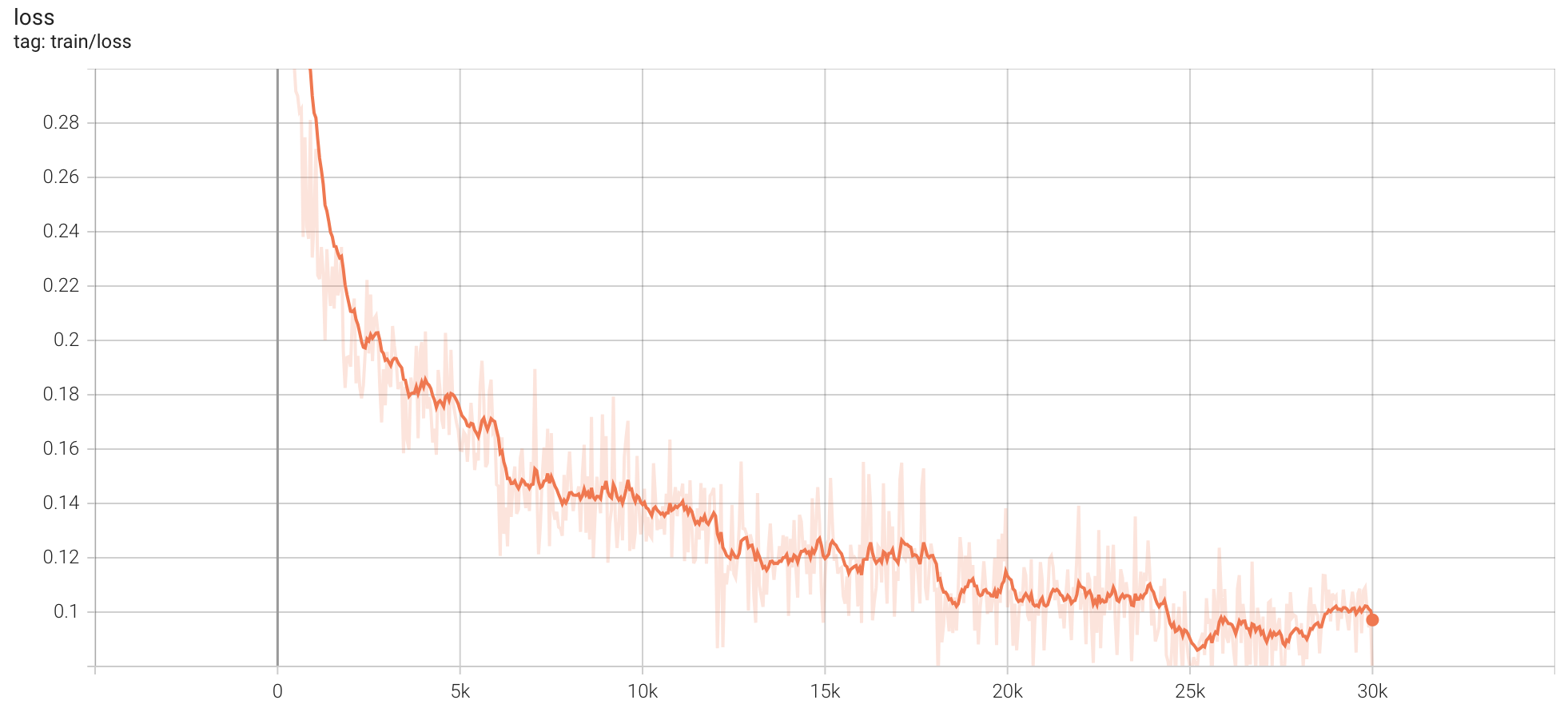

Training parameters:

- num_epochs: 5

- per_device_train_batch_size: 6

- learning_rate: 2e-05

- best steps: 25100

- train_loss: 0.0834

- lr_scheduler_type: linear

- base model: THUDM/chatglm3-6b

- warmup_steps: 50

- save_strategy: steps

- save_steps: 500

- save_total_limit: 10

- bf16: false

- fp16: true

- optim: adamw_torch

- ddp_find_unused_parameters: false

- gradient_checkpointing: true

- max_seq_length: 512

- max_length: 512

- prompt_template_name: vicuna

- hardware: 6 × V100 32GB, trained for 48 hours
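To make the schedule settings above concrete, the sketch below computes the learning rate under a linear scheduler with warmup, plus the global batch size. Two things are assumptions: total_steps is a placeholder, because the card reports only the best step (25100) and not the total step count, and the batch arithmetic assumes no gradient accumulation, since none is listed.

```python
def linear_lr(step, base_lr=2e-5, warmup_steps=50, total_steps=30_000):
    """Linear warmup to base_lr, then linear decay to zero at total_steps.

    total_steps=30_000 is a hypothetical value, not taken from the card.
    """
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Global batch size, assuming no gradient accumulation (none is listed above).
num_gpus, per_device_batch = 6, 6
global_batch = num_gpus * per_device_batch
print(global_batch)        # 36
print(linear_lr(25))       # halfway through warmup
print(linear_lr(25_100))   # learning rate at the reported best step
```

With warmup_steps at 50, the warmup phase is negligible next to the length of the run, so the learning rate decays almost linearly from 2e-05 for nearly the whole of training.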

Training datasets

The training set includes the following data:

- Chinese spelling correction dataset: https://huggingface.co/datasets/shibing624/CSC

- Chinese grammar correction dataset: https://github.com/shibing624/pycorrector/tree/llm/examples/data/grammar

- General GPT-4 Q&A dataset: https://huggingface.co/datasets/shibing624/sharegpt_gpt4

To train a GPT model, see https://github.com/shibing624/pycorrector
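If you prepare your own fine-tuning data, each correction pair has to be turned into an instruction-style sample. The sketch below shows one way to do that. It rests on two unverified assumptions: that CSC records carry original_text and correct_text fields, and that the trainer accepts a ShareGPT-style conversations layout. Check the dataset card and the pycorrector repository before relying on either. to_conversation is a hypothetical helper.

```python
def to_conversation(record, prefix="对下面文本纠错:"):
    """Turn one CSC record into a human/gpt conversation pair.

    The field names and the output layout are assumptions, not confirmed
    by this model card.
    """
    return {
        "conversations": [
            {"from": "human", "value": f"{prefix}\n{record['original_text']}"},
            {"from": "gpt", "value": record["correct_text"]},
        ]
    }


# Hypothetical record shaped like the assumed CSC schema.
sample = {
    "original_text": "少先队员因该为老人让坐。",
    "correct_text": "少先队员应该为老人让座。",
}
print(to_conversation(sample))
```

Using the same prefix at training and inference time keeps the prompt the model sees consistent.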

Citation

@software{pycorrector,

author = {Ming Xu},

title = {pycorrector: Text Error Correction Tool},

year = {2023},

url = {https://github.com/shibing624/pycorrector},

}