---
license: mit
tags:
- vision
- image-segmentation
datasets:
- scene_parse_150
widget:
- src: https://huggingface.co/datasets/shi-labs/oneformer_demo/blob/main/ade20k.jpeg
  example_title: House
- src: https://huggingface.co/datasets/shi-labs/oneformer_demo/blob/main/demo_2.jpg
  example_title: Airplane
- src: https://huggingface.co/datasets/shi-labs/oneformer_demo/blob/main/coco.jpeg
  example_title: Person
---

# OneFormer

OneFormer model trained on the ADE20k dataset (tiny-sized version, Swin backbone). It was introduced in the paper [OneFormer: One Transformer to Rule Universal Image Segmentation](https://arxiv.org/abs/2211.06220) by Jain et al. and first released in [this repository](https://github.com/SHI-Labs/OneFormer).

## Model description

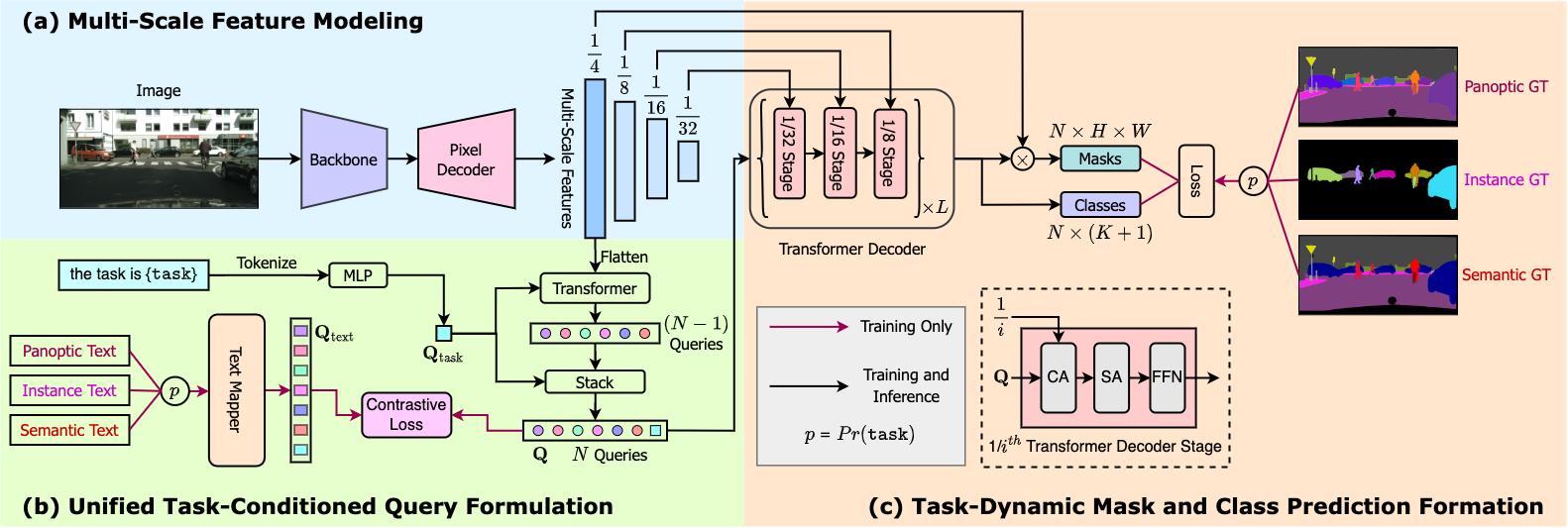

OneFormer is the first multi-task universal image segmentation framework. It needs to be trained only once, with a single universal architecture, a single model, and a single dataset, to outperform existing specialized models across semantic, instance, and panoptic segmentation tasks. OneFormer uses a task token to condition the model on the task in focus, which makes the architecture task-guided during training and task-dynamic during inference, all with a single model.
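The task-token idea can be illustrated with a small sketch. Assuming the text template described in the paper ("the task is {task}"), the helper below builds one conditioning prompt per sample, and a second helper draws a task uniformly at random per training sample, as the paper describes for task-guided training. Both `build_task_prompts` and `sample_training_tasks` are hypothetical illustrations, not part of the `transformers` API; `OneFormerProcessor` handles task inputs itself when you pass `task_inputs`.

```python
import random

SUPPORTED_TASKS = ("semantic", "instance", "panoptic")


def build_task_prompts(tasks):
    """Turn task names into text prompts for the task token (hypothetical helper)."""
    prompts = []
    for task in tasks:
        if task not in SUPPORTED_TASKS:
            raise ValueError(f"unsupported task {task!r}; expected one of {SUPPORTED_TASKS}")
        # Assumed template from the paper: only the task word changes, so the
        # same weights receive a different conditioning signal for each task.
        prompts.append(f"the task is {task}")
    return prompts


def sample_training_tasks(batch_size, rng):
    """Draw one task per training sample, uniformly at random (hypothetical helper)."""
    return [rng.choice(SUPPORTED_TASKS) for _ in range(batch_size)]


if __name__ == "__main__":
    # Inference: the caller picks the task. Training: the task is sampled.
    print(build_task_prompts(["semantic", "panoptic"]))
    print(build_task_prompts(sample_training_tasks(4, random.Random(0))))
```

At inference time the caller chooses the task explicitly, which is what makes a single checkpoint task-dynamic.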

## Intended uses & limitations

You can use this checkpoint for semantic, instance, and panoptic segmentation. See the [model hub](https://huggingface.co/models?search=oneformer) for versions fine-tuned on other datasets.
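Because one checkpoint serves all three tasks, only the task input and the post-processing method change between runs. The sketch below loops over the tasks under that assumption. `segment_all_tasks` is a hypothetical helper; `processor` and `model` are the `OneFormerProcessor` and `OneFormerForUniversalSegmentation` objects loaded as in the usage example, and the post-processing method is looked up by name (`post_process_{task}_segmentation`). For real inference you may want to wrap the model call in `torch.no_grad()`.

```python
def segment_all_tasks(processor, model, image, tasks=("semantic", "instance", "panoptic")):
    """Run a single OneFormer checkpoint on several tasks (hypothetical helper)."""
    # PIL reports (width, height); the post-processing methods expect (height, width)
    target_sizes = [image.size[::-1]]
    results = {}
    for task in tasks:
        # Only the task input differs; the weights are shared across tasks
        inputs = processor(images=image, task_inputs=[task], return_tensors="pt")
        outputs = model(**inputs)
        # Pick the matching post-processing method, e.g. post_process_panoptic_segmentation
        post_process = getattr(processor, f"post_process_{task}_segmentation")
        results[task] = post_process(outputs, target_sizes=target_sizes)[0]
    return results
```

The semantic entry is a per-pixel class map, while the instance and panoptic entries are dictionaries that include a `"segmentation"` map.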

### How to use

Here is how to use this model:

```python
import requests
import torch
from PIL import Image
from transformers import OneFormerProcessor, OneFormerForUniversalSegmentation

# Use the /resolve/ URL so requests fetches the raw image instead of the HTML "blob" page
url = "https://huggingface.co/datasets/shi-labs/oneformer_demo/resolve/main/ade20k.jpeg"
image = Image.open(requests.get(url, stream=True).raw)

# Load a single model for all three tasks
processor = OneFormerProcessor.from_pretrained("shi-labs/oneformer_ade20k_swin_tiny")
model = OneFormerForUniversalSegmentation.from_pretrained("shi-labs/oneformer_ade20k_swin_tiny")

# PIL reports (width, height); the post-processing methods expect (height, width)
target_sizes = [image.size[::-1]]

# Semantic segmentation
semantic_inputs = processor(images=image, task_inputs=["semantic"], return_tensors="pt")
with torch.no_grad():
    semantic_outputs = model(**semantic_inputs)
# pass through the processor for post-processing
predicted_semantic_map = processor.post_process_semantic_segmentation(semantic_outputs, target_sizes=target_sizes)[0]

# Instance segmentation
instance_inputs = processor(images=image, task_inputs=["instance"], return_tensors="pt")
with torch.no_grad():
    instance_outputs = model(**instance_inputs)
# pass through the processor for post-processing
predicted_instance_map = processor.post_process_instance_segmentation(instance_outputs, target_sizes=target_sizes)[0]["segmentation"]

# Panoptic segmentation
panoptic_inputs = processor(images=image, task_inputs=["panoptic"], return_tensors="pt")
with torch.no_grad():
    panoptic_outputs = model(**panoptic_inputs)
# pass through the processor for post-processing
predicted_panoptic_map = processor.post_process_panoptic_segmentation(panoptic_outputs, target_sizes=target_sizes)[0]["segmentation"]
```
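To inspect the semantic result, it can help to count how many pixels fall into each class. The sketch below assumes the map has been converted to nested lists (for example with `predicted_semantic_map.tolist()`) and that class names come from `model.config.id2label`; `summarize_semantic_map` itself is a hypothetical helper.

```python
from collections import Counter


def summarize_semantic_map(semantic_map, id2label):
    """Count pixels per class in a 2-D map of class ids (hypothetical helper)."""
    counts = Counter(class_id for row in semantic_map for class_id in row)
    # Largest classes first; fall back to the raw id if a label is missing
    return [(id2label.get(class_id, str(class_id)), n) for class_id, n in counts.most_common()]


if __name__ == "__main__":
    toy_map = [[0, 0, 1], [0, 2, 2]]
    print(summarize_semantic_map(toy_map, {0: "wall", 1: "sky", 2: "floor"}))
```

With the real model output, a call would look like `summarize_semantic_map(predicted_semantic_map.tolist(), model.config.id2label)`.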

For more examples, please refer to the [documentation](https://huggingface.co/docs/transformers/master/en/model_doc/oneformer).
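The panoptic post-processing returns more than the `"segmentation"` map used above. Assuming each result also carries a `"segments_info"` list whose entries have `"id"`, `"label_id"`, and `"score"` keys, the hypothetical helper below lists segments by confidence. To use it, keep the whole result dictionary (`processor.post_process_panoptic_segmentation(...)[0]`) rather than indexing `["segmentation"]` immediately.

```python
def describe_segments(segments_info, id2label):
    """List (segment id, class name, score) tuples, most confident first (hypothetical helper)."""
    described = []
    for seg in segments_info:
        # Map the class id to a readable name, falling back to the raw id
        name = id2label.get(seg["label_id"], str(seg["label_id"]))
        described.append((seg["id"], name, seg["score"]))
    return sorted(described, key=lambda item: item[2], reverse=True)


if __name__ == "__main__":
    toy_info = [
        {"id": 1, "label_id": 0, "score": 0.9},
        {"id": 2, "label_id": 2, "score": 0.95},
    ]
    print(describe_segments(toy_info, {0: "wall", 2: "floor"}))
```

The segment ids refer to the values in the panoptic `"segmentation"` map, so each tuple can be matched to its pixels.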

### Citation

```bibtex
@article{jain2022oneformer,
  title={{OneFormer: One Transformer to Rule Universal Image Segmentation}},
  author={Jitesh Jain and Jiachen Li and MangTik Chiu and Ali Hassani and Nikita Orlov and Humphrey Shi},
  journal={arXiv},
  year={2022}
}
```
