---
license: apache-2.0
language:
- en
tags:
- Diffusion Transformer
- Image Editing
- Image To Image
- Scepter
- ACE
---

ACE: All-round Creator and Editor Following Instructions via Diffusion Transformer

Tongyi Lab, Alibaba Group

Paper | Project Page | Code

ACE is a unified foundational model framework that supports a wide range of visual generation tasks. By defining the Condition Unit (CU) to unify multi-modal inputs across different tasks, and by incorporating long-context CUs, we introduce historical contextual information into visual generation tasks, paving the way for ChatGPT-like dialog systems in visual generation.
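To make the CU idea more concrete, here is a minimal Python sketch built on our own assumptions. We treat a CU as one text instruction paired with a list of input images and optional masks, and a long-context CU as a window of previous CUs followed by the current one. `ConditionUnit`, `long_context` and the `max_turns` window are hypothetical names used only for illustration. They are not the ACE codebase's API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ConditionUnit:
    """Hypothetical CU: one text instruction plus the visual inputs it refers to."""
    instruction: str
    images: List[str] = field(default_factory=list)           # image paths or ids
    masks: List[Optional[str]] = field(default_factory=list)  # None means "no mask"

    def __post_init__(self) -> None:
        # If any masks are given, there must be exactly one per image.
        if self.masks and len(self.masks) != len(self.images):
            raise ValueError("masks must be empty or match images one-to-one")


def long_context(history: List[ConditionUnit],
                 current: ConditionUnit,
                 max_turns: int = 3) -> List[ConditionUnit]:
    """Hypothetical long-context CU: the last `max_turns` CUs, then the current one."""
    recent = history[-max_turns:] if max_turns > 0 else []
    return recent + [current]
```

A multi-turn edit then becomes a growing list of CUs. The window keeps the context bounded, which is one plausible way to carry dialog history into each generation step.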


📢 News

- [2024.9.30] Released the ACE paper on arXiv.
- [2024.10.31] Released the ACE checkpoint on ModelScope and Hugging Face.
- [2024.11.1] Launched an online demo on Hugging Face.
- [2024.11.20] Released the ACE-0.6b-1024px model, which significantly improves image generation quality over ACE-0.6b-512px.

🚀 Installation

Clone the repository and install the required packages with pip:

```shell
git clone https://github.com/ali-vilab/ACE.git
cd ACE
pip install -r requirements.txt
```

🔥 ACE Models

🖼 Model Performance Visualization

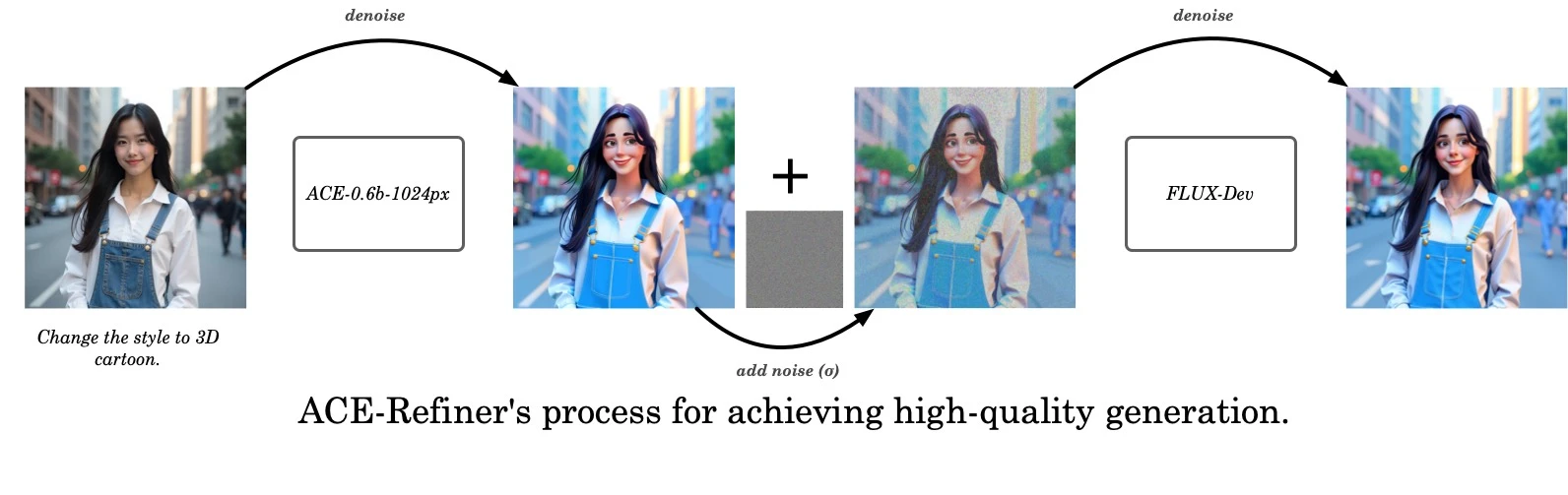

ACE currently has 0.6B parameters, and this scale limits its image generation quality. FLUX.1-Dev, by contrast, has a significant advantage in text-to-image generation quality. With SDEdit, we can leverage the generative capabilities of FLUX to further enhance the images produced by ACE. Based on these considerations, we designed the ACE-Refiner pipeline, as shown in the diagram below.

As shown in the figure below, a high refinement strength σ causes the refined image to lose fidelity to the original ACE output. A low σ, on the other hand, brings little improvement in image quality. Users can therefore trade off fidelity to the generated result against image quality according to their own needs.
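The trade-off can be illustrated with a minimal SDEdit-style sketch. It assumes the refiner follows the rectified-flow convention used by FLUX. Under that convention, a clean latent x0 is mixed with Gaussian noise as x_t = (1 - σ)·x0 + σ·ε, and only the last σ fraction of the sampling schedule is run. The function `sdedit_start` and the plain-list latents are illustrative assumptions. This sketch does not specify how ACE-Refiner maps `REFINER_SCALE` onto σ.

```python
import random
from typing import List, Tuple


def sdedit_start(x0: List[float], strength: float, num_steps: int,
                 seed: int = 0) -> Tuple[List[float], int]:
    """Noise a clean latent to level `strength` (sigma) and count the steps to run.

    Assumes the rectified-flow convention x_t = (1 - t) * x0 + t * noise with
    t = strength; this is an illustration, not ACE-Refiner's actual code.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must lie in [0, 1]")
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    # Higher strength keeps less of x0, so the result depends more on the refiner.
    x_t = [(1.0 - strength) * a + strength * n for a, n in zip(x0, noise)]
    # Only the final `strength` fraction of the sampling schedule is denoised.
    steps_to_run = round(strength * num_steps)
    return x_t, steps_to_run
```

At σ = 0 the latent is returned unchanged and no refinement steps run. At σ = 1 the ACE output is replaced entirely by noise, so the result depends only on the FLUX prior. This is the fidelity loss described above.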

Users can set the value of "REFINER_SCALE" in the configuration file config/inference_config/models/ace_0.6b_1024_refiner.yaml.
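To change `REFINER_SCALE` programmatically, a small helper like the one below can update it in a loaded config. This sketch assumes the YAML has already been parsed into nested dicts and lists, for example with PyYAML's `yaml.safe_load`. We make no assumption about where the key is nested, so the hypothetical `set_nested_key` function updates every occurrence and reports how many it changed.

```python
from typing import Any


def set_nested_key(cfg: Any, key: str, value: Any) -> int:
    """Set every occurrence of `key` in nested dicts/lists; return how many were set."""
    count = 0
    if isinstance(cfg, dict):
        for k, v in cfg.items():
            if k == key:
                # Reassigning an existing key does not resize the dict, so this is safe.
                cfg[k] = value
                count += 1
            else:
                count += set_nested_key(v, key, value)
    elif isinstance(cfg, list):
        for item in cfg:
            count += set_nested_key(item, key, value)
    return count
```

For example, you could load the file with `yaml.safe_load`, call `set_nested_key(cfg, "REFINER_SCALE", 0.5)` (0.5 is an arbitrary illustrative value), and write the result back with `yaml.safe_dump`. PyYAML does not preserve comments when it rewrites a file.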

We recommend using the advanced options in the web UI demo to verify the effect.

We compared the generation and editing performance of different models on several tasks, as shown below.

🔥 Training

We offer a demonstration training YAML that enables the end-to-end training of ACE using a toy dataset. For a comprehensive overview of the hyperparameter configurations, please consult config/ace_0.6b_512_train.yaml.

Prepare datasets

The dataset class in modules/data/dataset/dataset.py is designed to facilitate end-to-end training with an open-source toy dataset.

Download the dataset zip file from ModelScope, then extract its contents into the cache/datasets/ directory.
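You can also script the extraction step with Python's standard `zipfile` module. In this sketch, the archive path is a placeholder for the file you downloaded from ModelScope, and `extract_dataset` is a hypothetical helper. Only the `cache/datasets/` destination comes from this guide.

```python
import zipfile
from pathlib import Path
from typing import List


def extract_dataset(zip_path: str, dest: str = "cache/datasets") -> List[str]:
    """Extract a downloaded dataset archive into `dest`; return the member names."""
    dest_dir = Path(dest)
    dest_dir.mkdir(parents=True, exist_ok=True)  # create cache/datasets/ if missing
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(dest_dir)
        return zf.namelist()
```

Run it from the repository root so that the relative `cache/datasets` path resolves to the location the training config expects.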

Should you wish to prepare your own datasets, we recommend consulting modules/data/dataset/dataset.py for detailed guidance on the required data format.

Prepare initial weight

The ACE checkpoint has been uploaded to both ModelScope and Hugging Face.

The provided training YAML configuration uses the ModelScope URL as the default checkpoint URL. To switch to Hugging Face, modify the PRETRAINED_MODEL value in the YAML file by replacing the prefix "ms://iic" with "hf://scepter-studio".
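The prefix swap is a plain string substitution, as sketched below. `ms_to_hf` and `rewrite_pretrained_model` are hypothetical helpers. Only the two prefixes come from this guide, and any path after the prefix is a placeholder rather than the real checkpoint location. The YAML rewrite changes only lines that begin with `PRETRAINED_MODEL:`, which assumes the key appears in that form.

```python
MS_PREFIX = "ms://iic"
HF_PREFIX = "hf://scepter-studio"


def ms_to_hf(url: str) -> str:
    """Swap the ModelScope prefix for the Hugging Face one, keeping the rest intact."""
    if not url.startswith(MS_PREFIX):
        raise ValueError(f"expected a URL starting with {MS_PREFIX!r}, got {url!r}")
    return HF_PREFIX + url[len(MS_PREFIX):]


def rewrite_pretrained_model(yaml_text: str) -> str:
    """Apply the swap only on PRETRAINED_MODEL lines of a YAML file's text."""
    out = []
    for line in yaml_text.splitlines(keepends=True):
        if line.lstrip().startswith("PRETRAINED_MODEL:"):
            line = line.replace(MS_PREFIX, HF_PREFIX, 1)
        out.append(line)
    return "".join(out)
```

Editing the text directly, rather than round-tripping through a YAML parser, leaves comments and formatting in the config untouched.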

Start training

Start the training procedure with one of the following commands:

```shell
# ACE-0.6B-512px
PYTHONPATH=. python tools/run_train.py --cfg config/ace_0.6b_512_train.yaml

# ACE-0.6B-1024px
PYTHONPATH=. python tools/run_train.py --cfg config/ace_0.6b_1024_train.yaml
```
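To launch the same runs from Python, for example inside a job scheduler, a sketch like the following builds the equivalent command. The two config paths come from the commands above. `train_command` is a hypothetical helper, and it uses `sys.executable` in place of `python` so that the current interpreter is reused.

```python
import os
import sys
from typing import Dict, List, Tuple

# Config paths taken from the training commands above.
TRAIN_CONFIGS = {
    512: "config/ace_0.6b_512_train.yaml",
    1024: "config/ace_0.6b_1024_train.yaml",
}


def train_command(resolution: int) -> Tuple[List[str], Dict[str, str]]:
    """Return (argv, env) equivalent to `PYTHONPATH=. python tools/run_train.py --cfg ...`."""
    if resolution not in TRAIN_CONFIGS:
        raise ValueError(f"no training config for {resolution}px; "
                         f"choose one of {sorted(TRAIN_CONFIGS)}")
    argv = [sys.executable, "tools/run_train.py", "--cfg", TRAIN_CONFIGS[resolution]]
    env = dict(os.environ, PYTHONPATH=".")  # mirrors the PYTHONPATH=. prefix
    return argv, env
```

Run the result from the repository root with `subprocess.run(argv, env=env, check=True)`. Like the `PYTHONPATH=.` shell prefix, the environment override replaces any existing `PYTHONPATH` value.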

🚀 Inference

We provide a simple inference demo that lets users generate and edit images by following text instructions:

```shell
PYTHONPATH=. python tools/run_inference.py --cfg config/inference_config/models/ace_0.6b_512.yaml --instruction "make the boy cry, his eyes filled with tears" --seed 199999 --input_image examples/input_images/example0.webp
```
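When you want to try several instructions or seeds, it can help to build the commands programmatically. The sketch below reuses the flags from the command above (`--cfg`, `--instruction`, `--seed`, `--input_image`). `inference_commands` and `run_all` are hypothetical helpers, and any instruction other than the one above is an illustrative placeholder. Passing each command as an argument list avoids shell quoting problems with instructions that contain commas or quotes.

```python
import os
import subprocess
import sys
from typing import List, Tuple

DEFAULT_CFG = "config/inference_config/models/ace_0.6b_512.yaml"


def inference_commands(jobs: List[Tuple[str, int]], input_image: str,
                       cfg: str = DEFAULT_CFG) -> List[List[str]]:
    """Build one run_inference.py argv per (instruction, seed) pair."""
    return [
        [sys.executable, "tools/run_inference.py", "--cfg", cfg,
         "--instruction", instruction, "--seed", str(seed),
         "--input_image", input_image]
        for instruction, seed in jobs
    ]


def run_all(commands: List[List[str]]) -> None:
    """Run each command from the repository root with PYTHONPATH=. set."""
    env = dict(os.environ, PYTHONPATH=".")
    for cmd in commands:
        subprocess.run(cmd, env=env, check=True)
```

Usage: `run_all(inference_commands([("make the boy cry, his eyes filled with tears", 199999)], "examples/input_images/example0.webp"))` reproduces the single command above.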

We recommend running the bundled examples for quick testing. The following command runs example inference and saves the results in examples/output_images/:

```shell
PYTHONPATH=. python tools/run_inference.py --cfg config/inference_config/models/ace_0.6b_512.yaml
```

📝 Citation

```bibtex
@article{han2024ace,
  title={ACE: All-round Creator and Editor Following Instructions via Diffusion Transformer},
  author={Han, Zhen and Jiang, Zeyinzi and Pan, Yulin and Zhang, Jingfeng and Mao, Chaojie and Xie, Chenwei and Liu, Yu and Zhou, Jingren},
  journal={arXiv preprint arXiv:2410.00086},
  year={2024}
}
```