language:

- de

pipeline_tag: text-generation

library_name: transformers

tags:

- bloom

- LLM

inference: false

widget:

- text: TODO

IGEL: Instruction-tuned German large Language Model for Text

IGEL is an LLM family developed for German. The first version of IGEL is built on top of BigScience BLOOM, adapted to German by Malte Ostendorff. IGEL is designed to provide accurate and reliable language understanding capabilities for a wide range of natural language understanding tasks, including sentiment analysis, language translation, and question answering.

You can try out the model at igel-playground.

The IGEL family currently includes the instruction-tuned model instruct-igel-001; chat-igel-001 is coming soon.

Model Description

LoRA-tuned BLOOM-CLP German (6.4B parameters) with merged weights.
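
As a rough illustration of what "merged weights" means, the sketch below folds a low-rank LoRA update into a base weight matrix using the standard LoRA formulation W' = W + (alpha / r) * B A. The matrices, rank, and alpha value are toy numbers chosen for illustration; they are assumptions and not the hyperparameters used to train instruct-igel-001, which this card does not state.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(w, lora_a, lora_b, alpha, r):
    """Return W + (alpha / r) * (B @ A), the merged weight matrix.

    w:      base weight, shape (out, in)
    lora_a: LoRA down-projection A, shape (r, in)
    lora_b: LoRA up-projection B, shape (out, r)
    """
    scale = alpha / r
    delta = matmul(lora_b, lora_a)  # low-rank update, shape (out, in)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


# Toy example (illustrative values only): 2x2 base weight, rank-1 adapter.
base = [[1.0, 0.0], [0.0, 1.0]]
A = [[3.0, 4.0]]    # shape (1, 2)
B = [[1.0], [2.0]]  # shape (2, 1)
merged = merge_lora(base, A, B, alpha=2, r=1)
```

After merging, the adapter matrices are no longer needed at inference time, so the model loads and runs like an ordinary checkpoint.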

Training data

instruct-igel-001 is trained on naively translated instruction datasets, without much post-processing.

Known limitations

instruct-igel-001 also exhibits several common deficiencies of language models, including hallucination, toxicity, and stereotypes.

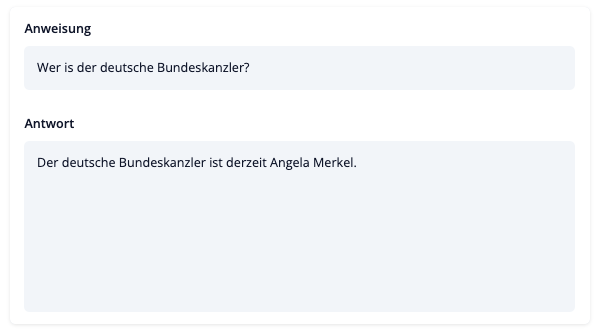

For example, in the following figure, instruct-igel-001 wrongly says that the cancelor of Germany is Angela Merkel.

Training procedure

coming soon

How to use

You can test the model in this LLM playground.

coming soon
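
A minimal sketch of local inference with the transformers library, assuming the merged weights can be loaded as a standard causal language model. The repository id below is a hypothetical placeholder, and the plain-text prompt is an assumption: this card does not document the instruction template the model was trained with.

```python
# Hypothetical repository id; replace it with the actual model repository.
MODEL_ID = "your-namespace/instruct-igel-001"


def generate(prompt: str, model_id: str = MODEL_ID, max_new_tokens: int = 128) -> str:
    """Generate a continuation for `prompt` with a causal LM checkpoint."""
    # Imported lazily so this module can be loaded without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    # Tokenize the prompt and return PyTorch tensors.
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # The decoded text includes the prompt followed by the generated tokens.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate("Wer ist der Bundeskanzler von Deutschland?")` downloads the checkpoint on first use; a 6.4B-parameter model needs a correspondingly large amount of memory, so a GPU or reduced-precision loading is likely required in practice.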