Less-to-More Generalization: Unlocking More Controllability by In-Context Generation

Abstract

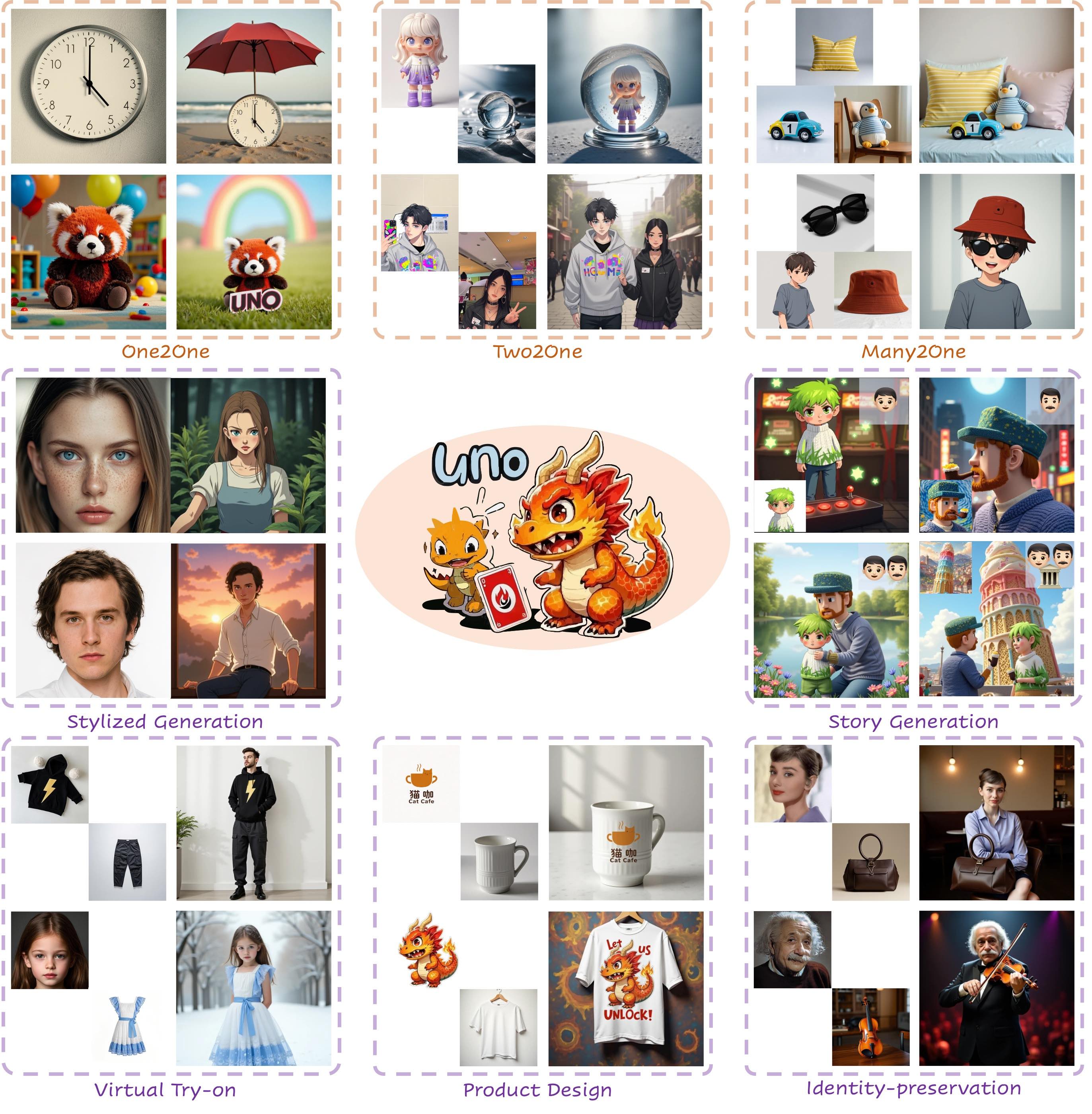

Although subject-driven generation has been extensively explored in image generation due to its wide applications, it still has challenges in data scalability and subject expansibility. For the first challenge, moving from curating single-subject datasets to multiple-subject ones and scaling them is particularly difficult. For the second, most recent methods center on single-subject generation, making it hard to apply when dealing with multi-subject scenarios. In this study, we propose a highly-consistent data synthesis pipeline to tackle this challenge. This pipeline harnesses the intrinsic in-context generation capabilities of diffusion transformers and generates high-consistency multi-subject paired data. Additionally, we introduce UNO, which consists of progressive cross-modal alignment and universal rotary position embedding. It is a multi-image conditioned subject-to-image model iteratively trained from a text-to-image model. Extensive experiments show that our method can achieve high consistency while ensuring controllability in both single-subject and multi-subject driven generation.

Community

🔥🔥 We introduce UNO, a universal framework that evolves from single-subject to multi-subject customization. UNO demonstrates strong generalization capabilities and is capable of unifying diverse tasks under one model.

🚄 code link: https://github.com/bytedance/UNO

🚀 project page: https://bytedance.github.io/UNO/

🌟 huggingface space: https://huggingface.co/spaces/bytedance-research/UNO-FLUX

👀 model checkpoint: https://huggingface.co/bytedance-research/UNO

This is an automated message from the Librarian Bot. I found the following papers similar to this paper.

The following papers were recommended by the Semantic Scholar API

- RealGeneral: Unifying Visual Generation via Temporal In-Context Learning with Video Models (2025)

- Personalize Anything for Free with Diffusion Transformer (2025)

- EasyControl: Adding Efficient and Flexible Control for Diffusion Transformer (2025)

- CINEMA: Coherent Multi-Subject Video Generation via MLLM-Based Guidance (2025)

- UniCombine: Unified Multi-Conditional Combination with Diffusion Transformer (2025)

- SkyReels-A2: Compose Anything in Video Diffusion Transformers (2025)

- Proxy-Tuning: Tailoring Multimodal Autoregressive Models for Subject-Driven Image Generation (2025)

Please give a thumbs up to this comment if you found it helpful!

If you want recommendations for any Paper on Hugging Face checkout this Space

You can directly ask Librarian Bot for paper recommendations by tagging it in a comment:

@librarian-bot

recommend

Have this person light a match as shown in the picture.

Models citing this paper 1

Datasets citing this paper 0

No dataset linking this paper