---
license: apache-2.0
datasets:
- Yirany/UniMM-Chat
- HaoyeZhang/RLHF-V-Dataset
language:
- en
library_name: transformers
---

# Model Card for RLHF-V

[Project Page](https://rlhf-v.github.io/) | [GitHub](https://github.com/RLHF-V/RLHF-V) | [Demo](http://120.92.209.146:8081/) | [Paper](https://arxiv.org/abs/2312.00849)

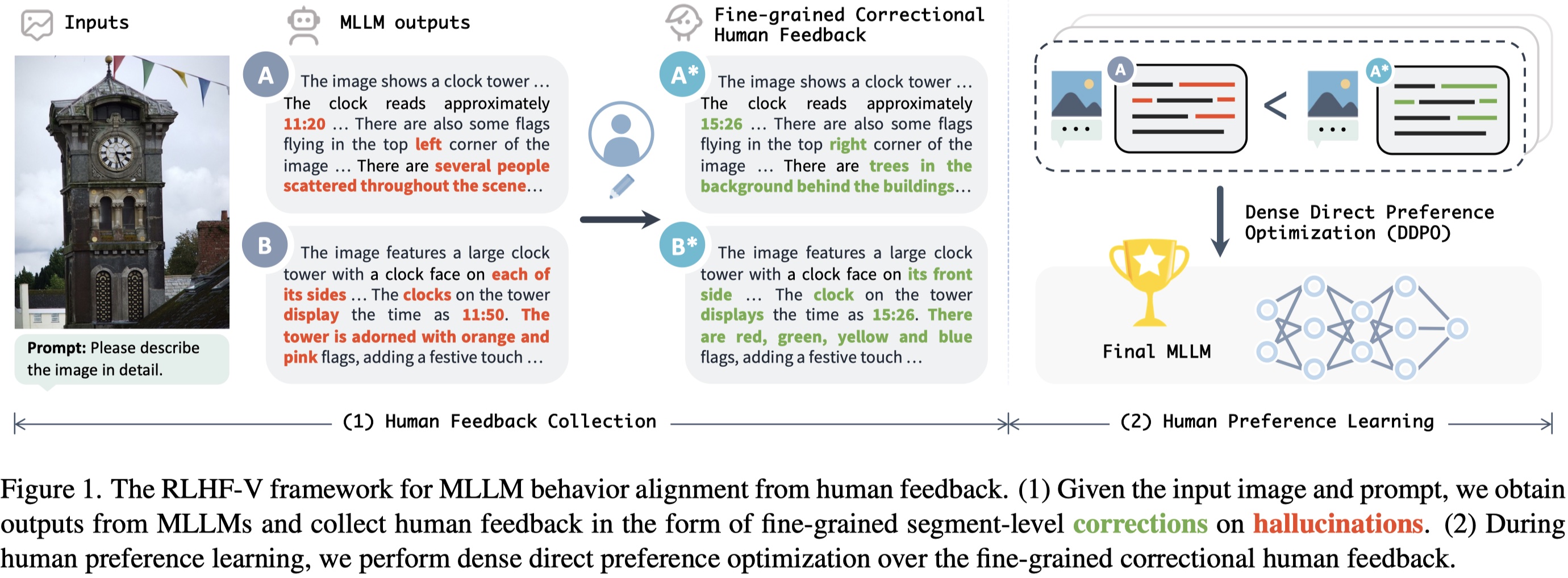

RLHF-V is an open-source multimodal large language model with the **lowest hallucination rate** on both long-form instructions and short-form questions.

RLHF-V is trained on [RLHF-V-Dataset](https://huggingface.co/datasets/HaoyeZhang/RLHF-V-Dataset), which contains **fine-grained segment-level human corrections** on diverse instructions. The base model is trained on [UniMM-Chat](https://huggingface.co/datasets/Yirany/UniMM-Chat), a high-quality, knowledge-intensive SFT dataset. We introduce a new method, **Dense Direct Preference Optimization (DDPO)**, that makes better use of the fine-grained annotations.

For more details, please refer to our [paper](https://arxiv.org/abs/2312.00849).
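
As a rough illustration of how a DPO-style objective could give extra weight to human-corrected segments, the sketch below scores a response with a weighted, length-normalised log-probability and plugs that score into the standard DPO loss. It is an assumption-laden sketch, not the released training code: the function names, the `gamma` segment weight, the `beta` temperature, and the exact normalisation are all illustrative choices, and the paper remains the authoritative description of DDPO.

```python
import math
from typing import Sequence


def weighted_sequence_logp(
    token_logps: Sequence[float],
    corrected_mask: Sequence[bool],
    gamma: float = 2.0,  # hypothetical weight for corrected tokens
) -> float:
    """Weighted average of token log-probs.

    Tokens inside a human-corrected segment count `gamma` times,
    all other tokens count once (assumed weighting scheme).
    """
    if len(token_logps) != len(corrected_mask):
        raise ValueError("token_logps and corrected_mask must have equal length")
    weights = [gamma if corrected else 1.0 for corrected in corrected_mask]
    norm = sum(weights)
    if norm == 0:
        raise ValueError("response must contain at least one token")
    return sum(w * lp for w, lp in zip(weights, token_logps)) / norm


def dpo_loss(
    policy_chosen: float,
    policy_rejected: float,
    ref_chosen: float,
    ref_rejected: float,
    beta: float = 0.5,  # hypothetical temperature
) -> float:
    """Standard DPO loss, -log(sigmoid(margin)), on sequence-level scores."""
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # Numerically stable softplus(-margin).
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))
```

In this sketch, each of the four scores passed to `dpo_loss` would come from `weighted_sequence_logp`, applied to the corrected (chosen) and original (rejected) responses under the policy and reference models, so that the corrected segments dominate the preference margin.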

## Model Details

### Model Description

- **Trained from model:** Vicuna-13B
- **Trained on data:** [RLHF-V-Dataset](https://huggingface.co/datasets/HaoyeZhang/RLHF-V-Dataset)

### Model Sources

- **Project Page:** https://rlhf-v.github.io
- **GitHub Repository:** https://github.com/RLHF-V/RLHF-V
- **Demo:** http://120.92.209.146:8081
- **Paper:** https://arxiv.org/abs/2312.00849

## Performance

Low hallucination rate while being informative:

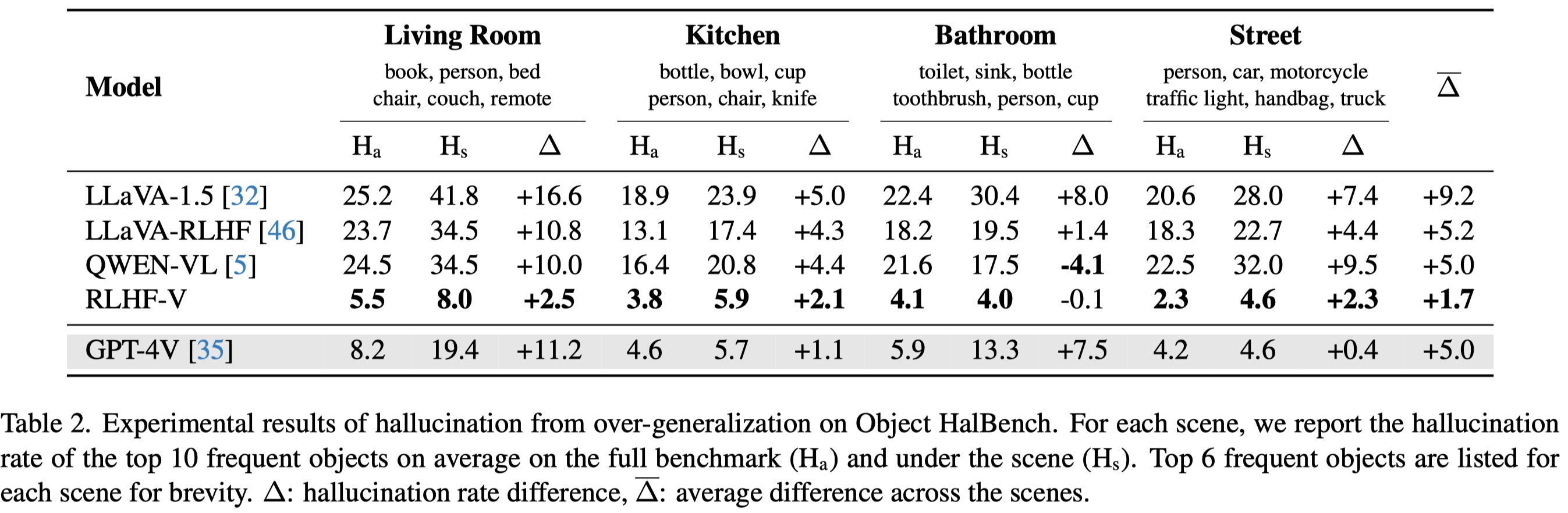

More resistant to over-generalization, even compared to GPT-4V:

## Citation

If you find RLHF-V useful in your work, please cite it with:

```
@article{yu2023rlhf,
  title={Rlhf-v: Towards trustworthy mllms via behavior alignment from fine-grained correctional human feedback},
  author={Yu, Tianyu and Yao, Yuan and Zhang, Haoye and He, Taiwen and Han, Yifeng and Cui, Ganqu and Hu, Jinyi and Liu, Zhiyuan and Zheng, Hai-Tao and Sun, Maosong and others},
  journal={arXiv preprint arXiv:2312.00849},
  year={2023}
}
```