---
language:
- en
library_name: peft
pipeline_tag: text-generation
tags:
- medical
license: cc-by-nc-4.0
---

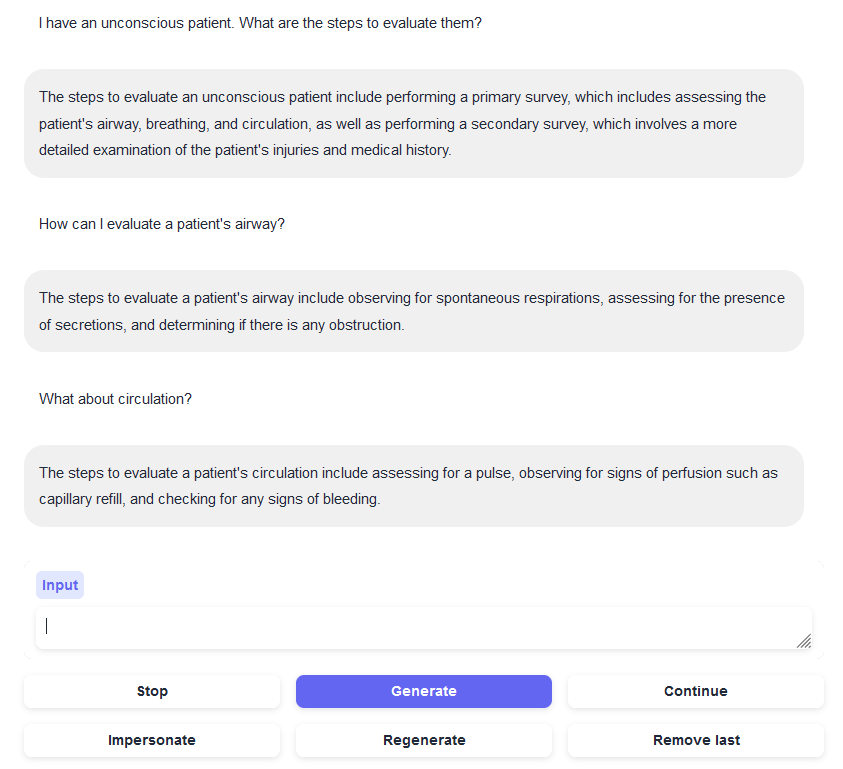

# MedFalcon v2 40b LoRA - Final

## Model Description

This is a model release at `1 epoch`. For evaluation use only! Limitations:

* Do not use to treat patients! Treat AI content as if you wrote it!

### Architecture

`nmitchko/medfalcon-v2-40b-lora` is a LoRA adapter for a large language model, fine-tuned specifically for medical domain tasks.
It is based on [`Falcon-40b`](https://huggingface.co/tiiuae/falcon-40b) at 40 billion parameters.

The primary goal of this model is to improve question-answering and medical dialogue tasks.
It was trained using [LoRA](https://arxiv.org/abs/2106.09685), specifically [QLoRA](https://github.com/artidoro/qlora), to reduce memory footprint.

See Training Parameters for more info. This LoRA supports 4-bit and 8-bit modes.
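As a rough illustration of why the quantized modes matter, the sketch below estimates the memory needed for the base model weights alone at different precisions. It assumes exactly 40 billion parameters (the figure quoted above) and ignores activations, the KV cache, the adapter weights, and quantization overhead, so real usage will be higher. The function name `weight_memory_gib` is hypothetical.

```python
def weight_memory_gib(num_params: int, bits_per_param: int) -> float:
    """Approximate memory for the model weights only, in GiB."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 2**30


# Assumption: the "40 billion parameters" quoted in this card, taken literally.
NUM_PARAMS = 40_000_000_000

for bits in (16, 8, 4):
    gib = weight_memory_gib(NUM_PARAMS, bits)
    print(f"{bits:>2}-bit weights: ~{gib:.1f} GiB")
```

Under these assumptions the weights take roughly 75 GiB at 16-bit, 37 GiB at 8-bit, and 19 GiB at 4-bit.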

### Requirements

```
bitsandbytes>=0.39.0
peft
transformers
```

Steps to load this model:

1. Load base model using transformers
2. Apply LoRA using peft

```python
# Load the Falcon-40b base model, apply the MedFalcon LoRA, and generate text.
from transformers import AutoTokenizer, AutoModelForCausalLM
import transformers
import torch
from peft import PeftModel

base_model_id = "tiiuae/falcon-40b"
lora_id = "nmitchko/medfalcon-v2-40b-lora"

# If you want 8 or 4 bit set the appropriate flags
load_8bit = True

tokenizer = AutoTokenizer.from_pretrained(base_model_id)

# Step 1: load the base model (device placement is decided here, at load time)
model = AutoModelForCausalLM.from_pretrained(
    base_model_id,
    load_in_8bit=load_8bit,
    torch_dtype=torch.float16,
    trust_remote_code=True,
    device_map="auto",
)

# Step 2: apply the LoRA weights on top of the base model
model = PeftModel.from_pretrained(model, lora_id)

# The model is already loaded, so the pipeline only needs the model and tokenizer
pipeline = transformers.pipeline(
    "text-generation",
    model=model,
    tokenizer=tokenizer,
)

sequences = pipeline(
    "What does the drug ceftriaxone do?\nDoctor:",
    max_length=200,
    do_sample=True,
    top_k=40,
    num_return_sequences=1,
    eos_token_id=tokenizer.eos_token_id,
)

for seq in sequences:
    print(f"Result: {seq['generated_text']}")
```
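For the 4-bit mode, one possible approach is to pass a `BitsAndBytesConfig` as `quantization_config` instead of the `load_in_8bit` flag. The sketch below is an assumption about how this could be wired up, not the author's tested recipe: the helper names `quantization_kwargs` and `load_quantized` are hypothetical, and the NF4 and double-quantization settings are borrowed from common QLoRA setups rather than from this card. It also assumes a `transformers` version recent enough to support 4-bit loading.

```python
def quantization_kwargs(bits: int) -> dict:
    """Keyword arguments for transformers.BitsAndBytesConfig (4- or 8-bit)."""
    if bits == 8:
        return {"load_in_8bit": True}
    if bits == 4:
        return {
            "load_in_4bit": True,
            "bnb_4bit_quant_type": "nf4",       # assumption: NF4, as in QLoRA
            "bnb_4bit_use_double_quant": True,  # assumption: not stated in the card
        }
    raise ValueError("bits must be 4 or 8")


def load_quantized(base_id: str, lora_id: str, bits: int = 4):
    """Load the base model quantized, then apply the LoRA adapter."""
    # Heavy imports are kept local so quantization_kwargs works without them.
    import torch
    from peft import PeftModel
    from transformers import AutoModelForCausalLM, BitsAndBytesConfig

    kwargs = quantization_kwargs(bits)
    if bits == 4:
        # Compute dtype for the de-quantized matmuls (assumption: float16)
        kwargs["bnb_4bit_compute_dtype"] = torch.float16

    model = AutoModelForCausalLM.from_pretrained(
        base_id,
        quantization_config=BitsAndBytesConfig(**kwargs),
        device_map="auto",
        trust_remote_code=True,
    )
    return PeftModel.from_pretrained(model, lora_id)
```

Calling `load_quantized("tiiuae/falcon-40b", "nmitchko/medfalcon-v2-40b-lora", bits=4)` downloads the full base model, so it is best tried on a machine with enough disk space and GPU memory.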

## Training Parameters

The model was trained for 1 epoch on a custom, unreleased dataset named `medconcat`.
`medconcat` contains only human-generated content and weighs in at over 100MiB of raw text.

| Item          | Amount | Units |
|---------------|--------|-------|
| LoRA Rank     | 64     | ~     |
| LoRA Alpha    | 16     | ~     |
| Learning Rate | 1e-4   | SI    |
| Dropout       | 5      | %     |
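To make the rank and alpha values concrete: in the standard LoRA formulation the low-rank update is multiplied by `alpha / rank`, and each adapted weight matrix of shape `d_out x d_in` gains `rank * (d_in + d_out)` trainable parameters. The sketch below works this out for the values in the table. The 8192 x 8192 layer size is an illustrative assumption, not a statement about which Falcon layers were adapted, and the function names are hypothetical.

```python
def lora_scaling(alpha: float, rank: int) -> float:
    """Factor applied to the low-rank update B @ A in standard LoRA."""
    return alpha / rank


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters added by one adapter: A is (rank x d_in), B is (d_out x rank)."""
    return rank * (d_in + d_out)


RANK, ALPHA = 64, 16   # values from the table above
D_IN = D_OUT = 8192    # assumption: illustrative layer size only

scale = lora_scaling(ALPHA, RANK)
added = lora_trainable_params(D_IN, D_OUT, RANK)
full = D_IN * D_OUT

print(f"scaling factor: {scale}")
print(f"adapter params per layer: {added:,} ({added / full:.2%} of the full matrix)")
```

With rank 64 and alpha 16 the scaling factor is 0.25, and under the assumed layer size each adapter adds 1,048,576 parameters.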