metadata

license: apache-2.0

datasets:

- BeIR/nq

- embedding-data/PAQ_pairs

- sentence-transformers/msmarco-hard-negatives

- leminda-ai/s2orc_small

- lucadiliello/triviaqa

- pietrolesci/agnews

- mteb/amazon_reviews_multi

- multiIR/ccnews2016-8multi

- eli5

- gooaq

- quora

- lucadiliello/searchqa

- flax-sentence-embeddings/stackexchange_math_jsonl

- yahoo_answers_qa

- EdinburghNLP/xsum

- wikihow

- rajpurkar/squad_v2

- nixiesearch/amazon-esci

- osunlp/Mind2Web

- derek-thomas/dataset-creator-askreddit

language:

- en

nixie-querygen-v2

A Mistral-7B-v0.1 model fine-tuned for the query generation task. Main use cases:

- synthetic query generation for downstream embedding fine-tuning tasks, when you have only documents and no queries or labels. This can be done with the nixietune toolkit; see the nixietune.qgen.generate recipe.

- synthetic dataset expansion for further embedding training, when you DO have query-document pairs, but only a few. You can fine-tune nixie-querygen-v2 on the existing pairs and then expand your document corpus with synthetic queries (which are still based on your few real ones). See the nixietune.qgen.train recipe.

Training data

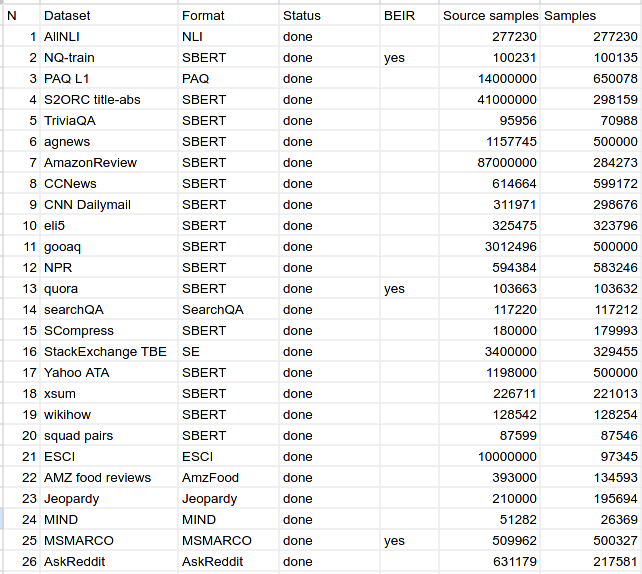

We used 200k query-document pairs sampled randomly from a diverse set of IR datasets:

Flavours

This repo has multiple versions of the model:

- model-*.safetensors: PyTorch FP16 checkpoint, suitable for downstream fine-tuning

- ggml-model-f16.gguf: GGUF F16 non-quantized llama-cpp checkpoint, for CPU inference

- ggml-model-q4.gguf: GGUF Q4_0 quantized llama-cpp checkpoint, for fast (and less precise) CPU inference.

Prompt formats

The model accepts the following prompt format:

<document text> [short|medium|long]? [question|regular]? query:

Some notes on format:

- the [short|medium|long] and [question|regular] fragments are optional and can be skipped.

- the prompt suffix query: has no trailing space, be careful.
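The format above can be assembled programmatically. Below is a minimal Python sketch; the helper name build_prompt and its arguments are hypothetical and not part of nixietune. It only joins the document text, the optional fragments, and the query: suffix with single spaces, so no trailing space is left after the suffix.

```python
from typing import Optional

# Allowed values for the two optional fragments, taken from the prompt format above.
LENGTHS = ("short", "medium", "long")
STYLES = ("question", "regular")


def build_prompt(document: str,
                 length: Optional[str] = None,
                 style: Optional[str] = None) -> str:
    """Build a prompt string: document, optional fragments, then 'query:'.

    Hypothetical helper for illustration only.
    """
    if length is not None and length not in LENGTHS:
        raise ValueError(f"length must be one of {LENGTHS}, got {length!r}")
    if style is not None and style not in STYLES:
        raise ValueError(f"style must be one of {STYLES}, got {style!r}")

    parts = [document.strip()]
    if length is not None:
        parts.append(length)
    if style is not None:
        parts.append(style)
    # The suffix is appended last, so the prompt never ends with a space.
    parts.append("query:")
    return " ".join(parts)


if __name__ == "__main__":
    print(build_prompt("git lfs track will begin tracking a new file.",
                       length="short", style="regular"))
```

Both fragments default to None, which matches the note that they can be skipped.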

Inference example

With llama-cpp and the Q4 model, inference can run on a CPU:

$ ./main -m ~/models/nixie-querygen-v2/ggml-model-q4.gguf -p "git lfs track will \

begin tracking a new file or an existing file that is already checked in to your \

repository. When you run git lfs track and then commit that change, it will \

update the file, replacing it with the LFS pointer contents. short regular query:" -s 1

sampling:

repeat_last_n = 64, repeat_penalty = 1.100, frequency_penalty = 0.000, presence_penalty = 0.000

top_k = 40, tfs_z = 1.000, top_p = 0.950, min_p = 0.050, typical_p = 1.000, temp = 0.800

mirostat = 0, mirostat_lr = 0.100, mirostat_ent = 5.000

sampling order:

CFG -> Penalties -> top_k -> tfs_z -> typical_p -> top_p -> min_p -> temp

generate: n_ctx = 512, n_batch = 512, n_predict = -1, n_keep = 0

git lfs track will begin tracking a new file or an existing file that is

already checked in to your repository. When you run git lfs track and then

commit that change, it will update the file, replacing it with the LFS

pointer contents. short regular query: git-lfs track [end of text]
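In the output above, llama-cpp echoes the prompt, appends the generated query, and ends with an [end of text] marker. A rough Python sketch for recovering just the query from such captured output follows; the function name extract_query is hypothetical, and it assumes the echoed prompt differs from the original only in whitespace and line wrapping.

```python
END_MARKER = "[end of text]"


def extract_query(raw_output: str, prompt: str) -> str:
    """Return the text generated after the echoed prompt.

    Hypothetical helper: assumes the prompt is echoed verbatim
    (up to whitespace) and followed by the generated query.
    """
    # Collapse all whitespace so line wrapping in the output does not matter.
    text = " ".join(raw_output.split())
    norm_prompt = " ".join(prompt.split())

    # Use the last occurrence, in case logs before the echo repeat the prompt.
    idx = text.rfind(norm_prompt)
    if idx == -1:
        raise ValueError("prompt not found in llama-cpp output")

    completion = text[idx + len(norm_prompt):]
    # Drop the end-of-text marker and anything after it.
    completion = completion.split(END_MARKER, 1)[0]
    return completion.strip()


if __name__ == "__main__":
    raw = ("commit that change, it will update the file, replacing it with the LFS\n"
           "pointer contents. short regular query: git-lfs track [end of text]")
    prompt = ("commit that change, it will update the file, replacing it with the "
              "LFS pointer contents. short regular query:")
    print(extract_query(raw, prompt))
```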

Training config

The model was trained with the following nixietune config:

{

"train_dataset": "/home/shutty/data/nixiesearch-datasets/query-doc/data/train",

"eval_dataset": "/home/shutty/data/nixiesearch-datasets/query-doc/data/test",

"seq_len": 512,

"model_name_or_path": "mistralai/Mistral-7B-v0.1",

"output_dir": "mistral-qgen",

"num_train_epochs": 1,

"seed": 33,

"per_device_train_batch_size": 6,

"per_device_eval_batch_size": 2,

"bf16": true,

"logging_dir": "logs",

"gradient_checkpointing": true,

"gradient_accumulation_steps": 1,

"dataloader_num_workers": 14,

"eval_steps": 0.03,

"logging_steps": 0.03,

"evaluation_strategy": "steps",

"torch_compile": false,

"report_to": [],

"save_strategy": "epoch",

"streaming": false,

"do_eval": true,

"label_names": [

"labels"

]

}
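The eval_steps and logging_steps values of 0.03 are fractions rather than step counts. In the Hugging Face Trainer, a value below 1 is read as a ratio of the total number of training steps; that nixietune passes these fields through unchanged is an assumption here. The sketch below estimates the resulting interval. The train split size and the number of GPUs are not stated in this card, so the 200k figure and the single device are illustrative assumptions only.

```python
import math


def steps_from_ratio(num_examples: int,
                     per_device_batch_size: int,
                     num_devices: int = 1,
                     grad_accum: int = 1,
                     epochs: int = 1,
                     ratio: float = 0.03) -> tuple:
    """Estimate total optimizer steps and the eval/logging interval.

    Assumes the last partial batch is kept (drop_last=False) and that a
    ratio below 1 is rounded up to a whole number of steps.
    """
    global_batch = per_device_batch_size * num_devices
    batches_per_epoch = math.ceil(num_examples / global_batch)
    updates_per_epoch = max(batches_per_epoch // grad_accum, 1)
    max_steps = math.ceil(epochs * updates_per_epoch)
    interval = math.ceil(max_steps * ratio)
    return max_steps, interval


if __name__ == "__main__":
    # Assumed: all 200k pairs in the train split, one GPU.
    total, every = steps_from_ratio(200_000, per_device_batch_size=6)
    print(f"total steps: {total}, eval/log every {every} steps")
```

Under these assumptions the run has roughly 33k steps, with evaluation and logging about every thousand steps. More devices or a smaller train split would shrink both numbers proportionally.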

License

Apache 2.0