---
license: mit
language: en
tags:
- text-to-image
---

# Zack Fox Stable Diffusion

A Stable Diffusion v1.5 model fine-tuned with Dreambooth to generate pictures of Zack Fox.

To use it, start your prompt with the `instance_prompt` and modify it as you like: **a photo of sks zach fox**. Yeah... I misspelled his name when I made the prompt, so keep the "zach" spelling. My bad lol.
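As a minimal sketch of what "modifying the `instance_prompt`" can look like, the helper below keeps the trained phrase intact (misspelling included) and appends style modifiers after it. The names `INSTANCE_PROMPT` and `build_prompt` are hypothetical and not part of this model or of `diffusers`.

```python
# The phrase the model was fine-tuned on, including the "zach" misspelling.
INSTANCE_PROMPT = "a photo of sks zach fox"


def build_prompt(*modifiers):
    """Append optional style modifiers to the instance prompt, comma-separated."""
    # Drop empty modifiers and surrounding whitespace so the result stays tidy.
    parts = [INSTANCE_PROMPT] + [m.strip() for m in modifiers if m.strip()]
    return ", ".join(parts)


print(build_prompt("oil on canvas"))  # a photo of sks zach fox, oil on canvas
```

The resulting string can be passed to the pipeline as the prompt.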

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

You can then run your new concept via `diffusers` with the [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb) or the [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts).

## Usage

To use this model, run the following:

```python
import torch
from diffusers import StableDiffusionPipeline

pipe = StableDiffusionPipeline.from_pretrained(
    "nateraw/zack-fox",
    torch_dtype=torch.float16
).to("cuda")

prompt = "a photo of sks zach fox, oil on canvas"
image = pipe(prompt).images[0]
```

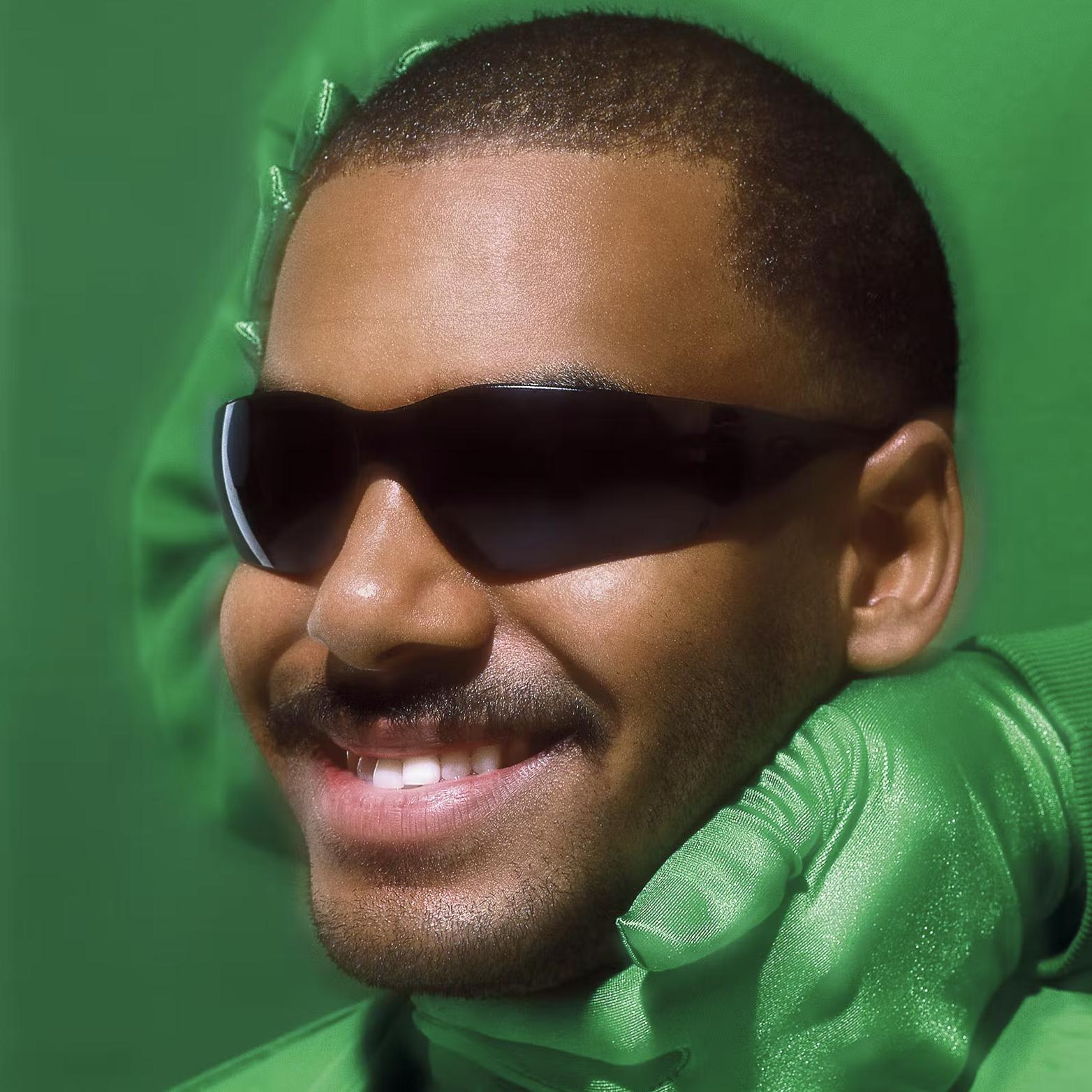
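If you want repeatable results and a file on disk, something like the following sketch may help. It wraps the snippet above in a hypothetical `generate` function (the function name, default seed, and output filename are assumptions, not part of this repo), seeds a `torch.Generator`, and saves the returned PIL image. It assumes a CUDA GPU, since the weights are loaded in `float16`.

```python
def generate(prompt, seed=0, out_path="zack-fox.png", device="cuda"):
    """Generate one image for `prompt` with a fixed seed and save it to `out_path`."""
    # Heavy imports live inside the function so that defining it stays cheap.
    import torch
    from diffusers import StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(
        "nateraw/zack-fox",
        torch_dtype=torch.float16,
    ).to(device)

    # A seeded generator makes the sampling noise repeatable on the same setup.
    generator = torch.Generator(device=device).manual_seed(seed)
    image = pipe(prompt, generator=generator).images[0]

    # The pipeline returns PIL images, so `save` writes a regular image file.
    image.save(out_path)
    return image
```

Calling `generate("a photo of sks zach fox, oil on canvas", seed=42)` should then write `zack-fox.png` to the working directory. Exact pixels can still differ across hardware and library versions.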

Here are the images used for training this concept:
