lmqg/mt5-base-zhquad-qg-ae-trimmed-50000

Text2Text Generation


Language Model finetuning for Question Generation (LMQG)

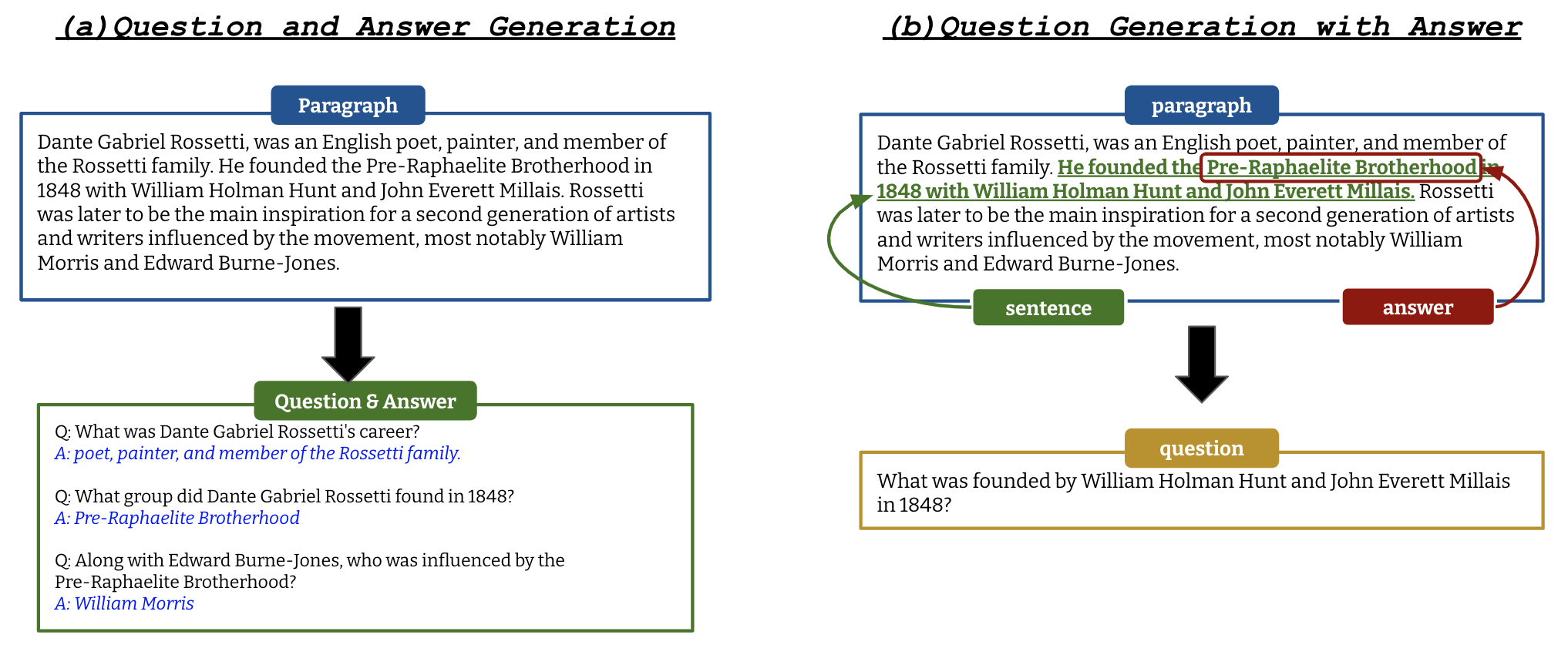

Language Models for Question Generation (LMQG) is the official registry for the paper "Generative Language Models for Paragraph-Level Question Generation" (EMNLP 2022). The paper introduced QG-Bench, a set of multilingual and multidomain question generation datasets, together with fine-tuned question generation models.

See the official GitHub repository for more information.

The QG models can be used with the `lmqg` library as follows.

```python
from lmqg import TransformersQG

# initialize the model
model = TransformersQG(language='en', model='lmqg/t5-large-squad-qg-ae')

# paragraph to generate question-answer pairs from; the parentheses make
# Python join the adjacent string literals into a single string
context = (
    "William Turner was an English painter who specialised "
    "in watercolour landscapes. He is often known as "
    "William Turner of Oxford or just Turner of Oxford to "
    "distinguish him from his contemporary, J. M. W. Turner. "
    "Many of Turner's paintings depicted the countryside "
    "around Oxford. One of his best known pictures is a "
    "view of the city of Oxford from Hinksey Hill."
)

# generate question-answer pairs
question_answer = model.generate_qa(context)
print(question_answer)
```

The printed result is a list of (question, answer) tuples:

```
[
    ('Who was an English painter who specialised in watercolour landscapes?', 'William Turner'),
    ("What was William Turner's nickname?", 'William Turner of Oxford'),
    ("What did many of Turner's paintings depict around Oxford?", 'countryside'),
    ("What is one of William Turner's best known paintings?", 'a view of the city of Oxford')
]
```
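Since the `qg-ae` models extract answers from the paragraph, each generated answer should usually appear verbatim in the input context, as it does for all four pairs in the example output. The sketch below shows one way to post-process the list of (question, answer) tuples by dropping pairs whose answer is not a literal span of the context. `keep_extractive_pairs` is a hypothetical helper, not part of `lmqg`, and the last pair in `generated` is made up purely to show a rejected case.

```python
from typing import List, Tuple

QAPair = Tuple[str, str]


def keep_extractive_pairs(context: str, pairs: List[QAPair]) -> List[QAPair]:
    """Keep only (question, answer) pairs whose answer occurs verbatim in context.

    Hypothetical post-processing helper (not part of lmqg). Matching is a
    case-sensitive plain substring check; empty answers are discarded.
    """
    return [(q, a) for q, a in pairs if a and a in context]


context = (
    "William Turner was an English painter who specialised "
    "in watercolour landscapes. He is often known as "
    "William Turner of Oxford or just Turner of Oxford to "
    "distinguish him from his contemporary, J. M. W. Turner. "
    "Many of Turner's paintings depicted the countryside "
    "around Oxford. One of his best known pictures is a "
    "view of the city of Oxford from Hinksey Hill."
)

# The first four pairs are the example output of generate_qa(context);
# the last pair is invented for illustration and its answer is not in the context.
generated = [
    ("Who was an English painter who specialised in watercolour landscapes?", "William Turner"),
    ("What was William Turner's nickname?", "William Turner of Oxford"),
    ("What did many of Turner's paintings depict around Oxford?", "countryside"),
    ("What is one of William Turner's best known paintings?", "a view of the city of Oxford"),
    ("Where was Turner born?", "London"),  # hypothetical, should be dropped
]

kept = keep_extractive_pairs(context, generated)
for question, answer in kept:
    print(f"{answer}: {question}")
```

A substring check is deliberately strict; if you expect minor differences in whitespace or casing, normalizing both strings before comparing is a reasonable variation.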

See more information below.