---
license: openrail
language:
- en
metrics:
- f1
library_name: fairseq
pipeline_tag: audio-classification
---

Model Card for Model ID

We explore the benefits of unsupervised pretraining of wav2vec 2.0 (W2V2) on large-scale unlabeled home recordings collected with LittleBeats (LB) and LENA (Language Environment Analysis) devices. LittleBeats is a new multimodal infant wearable device that we developed. It simultaneously records audio, infant movement, and heart-rate variability. We use W2V2 to advance the LB audio pipeline so that it automatically provides reliable speaker diarization and vocalization classification labels for family members at home, including infants, parents, and siblings. We show that W2V2 pretrained on thousands of hours of unlabeled home audio outperforms the oracle W2V2, pretrained on 52k hours of audio and released by Facebook/Meta, on automatic family audio analysis tasks.

For more details about LittleBeats, check out https://littlebeats.hdfs.illinois.edu/

Model Sources

For more information about this model, please check out our paper.

- Paper [optional]: [More Information Needed]

Model Description

Two W2V2 models pretrained with fairseq are available:

- LB_1100/checkpoint_best.pt: pretrained on 1,100 hours of LB home recordings collected from 110 families of children under 5 years old

- LL_4300/checkpoint_best.pt: pretrained on 1,100 hours of LB home recordings collected from 110 families plus 3,200 hours of LENA home recordings from 275 families of children under 5 years old

One W2V2 model fine-tuned with SpeechBrain on labeled LB and LENA data is available:

- LL_4300_fine_tuned: initialized from the LL_4300 checkpoint, then fine-tuned on labeled LB and LENA home recordings plus labeled lab recordings

Two pretrained ECAPA-TDNN speaker embedding models are available:

- ECAPA_TDNN_LB/embedding_model.ckpt: trained on 12 hours of labeled LB home recordings collected from 22 families of infants under 14 months old

- ECAPA_TDNN_LB_LENA/embedding_model.ckpt: trained on 12 hours of labeled LB home recordings collected from 22 families plus 18 hours of labeled LENA home recordings from 30 families of infants under 14 months old
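This card does not describe how the speaker embeddings are scored. One common way to use ECAPA-TDNN embeddings is cosine scoring: a segment is assigned to the enrolled speaker whose embedding it is most similar to. The sketch below shows only that scoring step in plain Python. It assumes the embeddings have already been extracted (for example with SpeechBrain) as lists of floats. The helper names, the speaker labels, and the toy 3-dimensional vectors are hypothetical and are not part of this release.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def assign_speaker(segment_embedding, enrolled):
    """Hypothetical helper: return the enrolled label with the most similar embedding.

    enrolled maps a speaker label to a reference embedding (list of floats).
    """
    return max(
        enrolled,
        key=lambda label: cosine_similarity(segment_embedding, enrolled[label]),
    )


# Toy example with made-up 3-dimensional embeddings
enrolled = {"infant": [1.0, 0.0, 0.0], "mother": [0.0, 1.0, 0.0]}
print(assign_speaker([0.9, 0.1, 0.0], enrolled))
```

With these toy vectors the segment is closest to the "infant" reference. In practice, real embeddings are much higher-dimensional, and a tuned similarity threshold is typically needed to reject speakers who were never enrolled.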

Uses

We developed our complete fine-tuning recipe using the SpeechBrain toolkit, available at

Quick Start

If you wish to use the fairseq framework, the following code snippet provides two functions: one that loads our pretrained W2V2 model and one that extracts features from it.

```python
import torch
import torch.nn.functional as F
import fairseq
import torchaudio


def load_model(model_path, freeze=True):
    '''
    This function loads a pretrained model using the fairseq framework.

    Arguments
    ---------
    model_path : str
        Path and filename of the pretrained model
    freeze : bool (default: True)
        If True, the model is frozen with no parameter updates through training.
    '''
    model, _, _ = fairseq.checkpoint_utils.load_model_ensemble_and_task([model_path])
    model = model[0]

    if freeze:
        model.eval()
        # Freeze parameters
        for param in model.parameters():
            param.requires_grad = False
    else:
        model.train()
        for param in model.parameters():
            param.requires_grad = True

    # Remove components that are only needed for the pretraining objective
    model.quantizer = None
    model.project_q = None
    model.target_glu = None
    model.final_proj = None

    return model


def extract_features(model, wav, input_norm=None, output_norm=True, tgt_layer=None, output_all_hiddens=False):
    '''
    This function extracts features from a w2v2 model. By default it returns the
    features of the last transformer layer. It can also return the features of a
    given layer, or the features of all layers.

    Arguments
    ---------
    model : fairseq wav2vec
    wav : tensor
        Audio waveform of shape (batch, time) for feature extraction
    input_norm : bool (default: None)
        If True, a layer_norm (without learnable affine parameters) is applied to the input waveform.
    output_norm : bool (default: True)
        If True, a layer_norm (without learnable affine parameters) is applied to the output
        obtained from the wav2vec model.
    tgt_layer : int (default: None)
        Target transformer layer features, 0-indexed.
    output_all_hiddens : bool (default: False)
        Whether to extract features from all layers. Requires tgt_layer to be None.
    '''
    if input_norm:
        wav = F.layer_norm(wav, wav.shape)

    if output_all_hiddens:
        # Disable layer drop so that every transformer layer produces an output
        model.encoder.layerdrop = 0.0

    # Single forward pass; if tgt_layer is given, the encoder stops after that layer
    res = model.extract_features(wav, padding_mask=None, mask=False, layer=tgt_layer)

    if output_all_hiddens and tgt_layer is None:
        # The first element of each layer result has shape (time, batch, dim)
        features = [layer_res[0].transpose(0, 1) for layer_res in res['layer_results']]
        out = torch.stack(features)  # (num_layers, batch, time, dim)
    else:
        out = res['x']  # (batch, time, dim)

    if output_norm:
        out = F.layer_norm(out, out.shape)

    return out


model = load_model("your/path/to/LL_4300/checkpoint_best.pt")

audio, fs = torchaudio.load("sample.wav")  # (channels, time)
# Down-mix to mono while keeping a batch dimension: (1, time)
audio = audio.mean(dim=0, keepdim=True)
# Assumes the checkpoints expect 16 kHz input, as the original W2V2-base does
if fs != 16000:
    audio = torchaudio.functional.resample(audio, fs, 16000)

with torch.no_grad():
    features = extract_features(model, audio)  # (1, frames, dim)
```
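To anticipate the time dimension of the features returned above, you can compute it from the convolutional front end. The sketch below assumes that these checkpoints use fairseq's default wav2vec 2.0 `conv_feature_layers` setting (one layer with kernel 10 and stride 5, four with kernel 3 and stride 2, and two with kernel 2 and stride 2), which is what W2V2-base uses. This card does not state the configuration explicitly. `num_output_frames` is a hypothetical helper that applies the unpadded 1-D convolution length formula layer by layer.

```python
# Assumed front end: fairseq's default wav2vec 2.0 conv_feature_layers,
# given as (channels, kernel, stride) per layer.
CONV_LAYERS = [(512, 10, 5)] + [(512, 3, 2)] * 4 + [(512, 2, 2)] * 2


def num_output_frames(num_samples, conv_layers=CONV_LAYERS):
    """Number of feature frames produced for a waveform of num_samples samples."""
    length = num_samples
    for _, kernel, stride in conv_layers:
        # Unpadded 1-D convolution: floor((L - kernel) / stride) + 1
        length = (length - kernel) // stride + 1
        if length <= 0:
            return 0  # input shorter than the receptive field
    return length


print(num_output_frames(16000))  # one second of 16 kHz audio
```

Under this configuration the total stride is 5 × 2⁶ = 320 samples, so the model emits roughly one frame every 20 ms at 16 kHz. The receptive field is 400 samples, so one second of audio yields 49 frames rather than 50.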

Evaluation

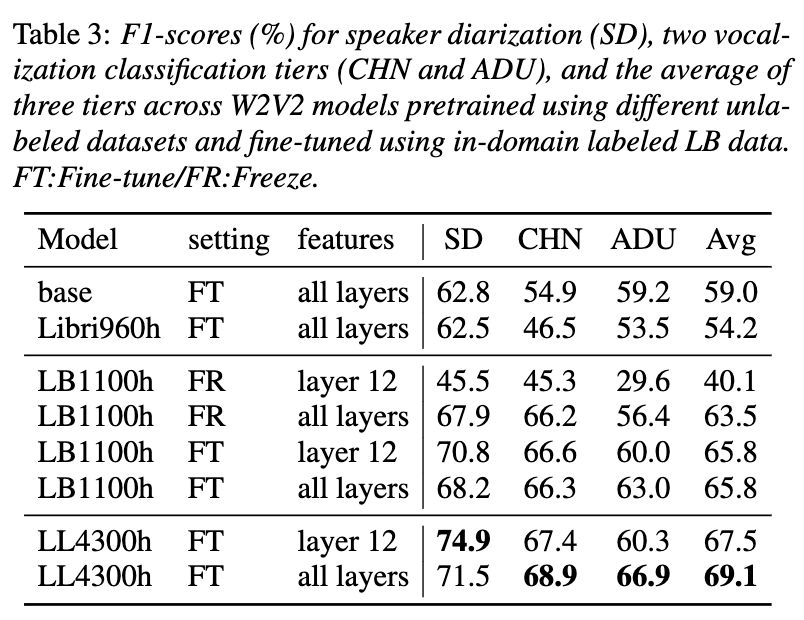

We compare four W2V2-base model variants:

- base (oracle version): the originally released version, pretrained on ~52k hours of unlabeled audio

- Libri960h: the oracle version fine-tuned on 960 hours of LibriSpeech

- LB1100h: W2V2 pretrained on 1,100 hours of LB home recordings

- LL4300h: W2V2 pretrained on 4,300 hours of LB+LENA home recordings

We then fine-tune each pretrained model on 11.7 hours of labeled LB home recordings. The F1 scores across the three tasks are shown in the figure above.
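This card reports F1 scores but does not say how they are averaged across classes. The following plain-Python sketch computes per-class F1 as 2·TP / (2·TP + FP + FN) from reference and predicted labels. It then takes the unweighted (macro) mean over classes. Macro averaging is an assumption made here for illustration, and the function name and the label strings are hypothetical.

```python
from collections import Counter


def macro_f1(references, predictions):
    """Return (per-class F1 dict, macro-averaged F1) for two equal-length label lists."""
    if len(references) != len(predictions):
        raise ValueError("references and predictions must have the same length")
    labels = sorted(set(references) | set(predictions))
    tp, fp, fn = Counter(), Counter(), Counter()
    for ref, pred in zip(references, predictions):
        if ref == pred:
            tp[ref] += 1
        else:
            fp[pred] += 1  # the predicted class gets a false positive
            fn[ref] += 1   # the reference class gets a false negative
    per_class = {}
    for label in labels:
        denom = 2 * tp[label] + fp[label] + fn[label]
        per_class[label] = 2 * tp[label] / denom if denom else 0.0
    return per_class, sum(per_class.values()) / len(labels)


# Hypothetical segment-level labels
refs = ["infant", "infant", "mother", "father", "mother"]
preds = ["infant", "mother", "mother", "father", "infant"]
print(macro_f1(refs, preds))
```

Macro averaging weights every class equally. This matters for home recordings, where some speakers or vocalization types are much rarer than others.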

Additionally, we further improve model performance by adding relevant labeled home recordings and by applying data augmentation (SpecAug and noise/reverberation corruption). For more details on the experiments and results, please refer to our paper.
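The exact corruption settings are not given here. The following minimal sketch shows one common form of noise augmentation: noise is mixed into clean audio at a target signal-to-noise ratio. The noise is scaled so that 10·log10(P_signal / P_noise) equals the requested SNR in dB, where P is the mean squared amplitude. This is plain Python on lists of samples, and the helper names and the 10 dB value in the example are hypothetical. SpecAug and reverberation are not shown.

```python
import math


def add_noise_at_snr(signal, noise, snr_db):
    """Mix noise into signal so that the resulting SNR equals snr_db (in dB).

    Both inputs are equal-length lists of float samples.
    """
    if len(signal) != len(noise):
        raise ValueError("signal and noise must have the same length")
    signal_power = sum(s * s for s in signal) / len(signal)
    noise_power = sum(n * n for n in noise) / len(noise)
    if noise_power == 0:
        raise ValueError("noise must not be silent")
    # Pick scale so that signal_power / (scale**2 * noise_power) == 10 ** (snr_db / 10)
    scale = math.sqrt(signal_power / (noise_power * 10 ** (snr_db / 10)))
    return [s + scale * n for s, n in zip(signal, noise)]


def measure_snr_db(clean, noisy):
    """SNR (dB) of noisy relative to clean, treating noisy - clean as the noise."""
    residual = [y - x for x, y in zip(clean, noisy)]
    signal_power = sum(x * x for x in clean) / len(clean)
    noise_power = sum(r * r for r in residual) / len(residual)
    return 10 * math.log10(signal_power / noise_power)


# Hypothetical example: a 440 Hz tone corrupted with a deterministic pseudo-noise sequence
clean = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(1600)]
noise = [((i * 7919) % 101) / 50.0 - 1.0 for i in range(1600)]
noisy = add_noise_at_snr(clean, noise, 10.0)
print(round(measure_snr_db(clean, noisy), 3))
```

In a training pipeline the SNR is usually drawn at random from a range for each utterance, so the model sees a variety of noise levels.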

Paper/BibTex Citation

If you find this model helpful, please cite us as:

Coming soon

Model Card Contact

Jialu Li (she, her, hers)

Ph.D. candidate @ Department of Electrical and Computer Engineering, University of Illinois at Urbana-Champaign

E-mail: jialuli3@illinois.edu

Homepage: https://sites.google.com/view/jialuli/