Commit 9961b2e
Parent(s): 785a6f2

Update README.md

README.md CHANGED

@@ -1,3 +1,147 @@

---
license: gpl-3.0
---

# Lung Adeno/Squam v1 Model Card

This model card describes the model associated with the manuscript "Uncertainty-informed deep learning models enable high-confidence predictions for digital histopathology" by Dolezal _et al._, available [here](https://www.nature.com/articles/s41467-022-34025-x).

## Model Details

- **Developed by:** James Dolezal
- **Model type:** Deep convolutional neural network image classifier
- **Language(s):** English
- **License:** GPL-3.0
- **Model Description:** This model classifies H&E-stained pathologic images of non-small cell lung cancer as adenocarcinoma or squamous cell carcinoma and provides an estimate of classification uncertainty. It is an [Xception](https://arxiv.org/abs/1610.02357) model with two hidden layers whose dropout remains enabled during both training and inference. During inference, a given image is passed through the network 30 times, resulting in a distribution of predictions. The mean of this distribution is the final prediction, and the standard deviation is the uncertainty.
- **Image processing:** This model expects images of H&E-stained pathology slides at 299 x 299 px, each covering 302 x 302 μm. Images should be stain-normalized using a modified Reinhard normalizer ("Reinhard-Fast") available [here](https://github.com/jamesdolezal/slideflow/blob/master/slideflow/norm/tensorflow/reinhard.py). The stain normalizer should be fit using the `target_means` and `target_stds` listed in the model `params.json` file. Images should then be standardized with `tf.image.per_image_standardization()`.
- **Resources for more information:** [GitHub Repository](https://github.com/jamesdolezal/biscuit), [Paper](https://www.nature.com/articles/s41467-022-34025-x)
- **Cite as:**

@ARTICLE{Dolezal2022-qa,
  title   = "Uncertainty-informed deep learning models enable high-confidence
             predictions for digital histopathology",
  author  = "Dolezal, James M and Srisuwananukorn, Andrew and Karpeyev, Dmitry
             and Ramesh, Siddhi and Kochanny, Sara and Cody, Brittany and
             Mansfield, Aaron S and Rakshit, Sagar and Bansal, Radhika and
             Bois, Melanie C and Bungum, Aaron O and Schulte, Jefree J and
             Vokes, Everett E and Garassino, Marina Chiara and Husain, Aliya N
             and Pearson, Alexander T",
  journal = "Nature Communications",
  volume  = 13,
  number  = 1,
  pages   = "6572",
  month   = nov,
  year    = 2022
}

# Uses

## Direct Use
This model is intended for research purposes only. Possible research areas and tasks include:

- Development and comparison of uncertainty quantification methods for pathologic images.
- Probing and understanding the limitations of out-of-distribution detection for pathology classification models.
- Applications in educational settings.
- Research on pathology classification models for non-small cell lung cancer.

Excluded uses are described below.

### Misuse and Out-of-Scope Use

This model should not be used in a clinical setting to generate predictions that will be used to inform patients, physicians, or any other members of a patient's health care team outside the context of an approved research protocol. Using the model in a clinical setting outside the context of an approved research protocol is a misuse of this model. This includes, but is not limited to:

- Generating predictions from images of a patient's tumor and sharing those predictions with the patient
- Generating predictions from images of a patient's tumor and sharing those predictions with the patient's physician or other members of the patient's health care team
- Influencing a patient's health care treatment in any way based on output from this model

### Limitations

- The model has not been validated to discriminate lung adenocarcinoma vs. squamous cell carcinoma in contexts where other tumor types are possible (such as lung small cell carcinoma, neuroendocrine tumors, metastatic deposits, etc.).

### Bias

This model was trained on The Cancer Genome Atlas (TCGA), which contains patient data from communities and cultures that may not reflect the general population. This dataset comprises images from multiple institutions, which may introduce bias from site-specific batch effects ([Howard, 2021](https://www.nature.com/articles/s41467-021-24698-1)). The model was validated on data from the Clinical Proteomic Tumor Analysis Consortium (CPTAC) and an institutional dataset from Mayo Clinic, the latter of which consists primarily of data from patients of white and western cultures.

## Examples

For direct use, the model can be loaded using [Slideflow](https://github.com/jamesdolezal/slideflow) version 1.1 with the following syntax:

```
import slideflow as sf
# Load the trained model ('/path/' is a placeholder for the model directory)
model = sf.model.load('/path/')
```

The stain normalizer can be loaded and fit using Slideflow:

```
# Build the normalizer from the fit stored alongside the model
normalizer = sf.util.get_model_normalizer('/path/')
```

The stain normalizer has a native TensorFlow transform and can be applied directly to a `tf.data.Dataset`:

```
# Map the stain normalizer transformation
# to a tf.data.Dataset
dataset = dataset.map(normalizer.tf_to_tf)
```

Alternatively, the model can be used to generate predictions for whole-slide images processed through Slideflow in an end-to-end [Project](https://slideflow.dev/project_setup.html). To generate predictions on data processed with Slideflow, pass the model to the [`Project.predict()`](https://slideflow.dev/project.html#slideflow.Project.predict) function:

```
import slideflow as sf
P = sf.Project('/path/to/slideflow/project')
P.predict('/model/path')
```

## Training

**Training Data**

The following dataset was used to train the model:

- The Cancer Genome Atlas (TCGA), LUAD (adenocarcinoma) and LUSC (squamous cell carcinoma) cohorts (see next section)

This model was trained on the full dataset, with a total of 941 slides.

**Training Procedure**

Each whole-slide image was sectioned into a grid of tiles, each covering 302 x 302 μm. Image tiles were extracted at the nearest downsample layer and resized to 299 x 299 px using [Libvips](https://www.libvips.org/API/current/libvips-resample.html#vips-resize). During training,

- Images are stain-normalized with a modified Reinhard normalizer ("Reinhard-Fast"), which excludes the brightness standardization step, available [here](https://github.com/jamesdolezal/slideflow/blob/master/slideflow/norm/tensorflow/reinhard.py)
- Images are randomly flipped and rotated (90, 180, or 270 degrees)
- Images have a 50% chance of being JPEG compressed with a quality level between 50% and 100%
- Images have a 10% chance of random Gaussian blur, with sigma between 0.5 and 2.0
- Images are standardized with `tf.image.per_image_standardization()`
- Images are classified through an Xception block, followed by two hidden layers with dropout (p=0.1) permanently enabled during both training and inference
- The loss is cross-entropy, with adenocarcinoma=0 and squamous=1
- Training was halted at a predetermined step=1451 (at a batch size of 128, this is after 185,728 images), determined through nested cross-validation
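As a rough illustration of the standardization step listed above, the following pure-Python sketch mirrors the documented behaviour of `tf.image.per_image_standardization()`, which scales an image to zero mean and unit variance while guarding against division by zero on uniform images. The helper name `per_image_standardization` and the toy `tile` values are hypothetical and exist only for this example; the model itself uses the TensorFlow function.

```python
import math


def per_image_standardization(pixels):
    """Scale a flat list of pixel values to zero mean and unit variance.

    Follows the formula documented for tf.image.per_image_standardization:
    (x - mean) / max(stddev, 1 / sqrt(N)), where N is the number of values.
    """
    n = len(pixels)
    mean = sum(pixels) / n
    # Population variance (divide by N, not N - 1)
    variance = sum((p - mean) ** 2 for p in pixels) / n
    # The floor on the divisor keeps uniform images from dividing by zero
    adjusted_std = max(math.sqrt(variance), 1.0 / math.sqrt(n))
    return [(p - mean) / adjusted_std for p in pixels]


# Hypothetical toy "image" flattened to four pixel values
tile = [0.0, 64.0, 128.0, 255.0]
standardized = per_image_standardization(tile)

# A uniform image maps to all zeros instead of raising an error
uniform = per_image_standardization([7.0, 7.0, 7.0, 7.0])
```

In practice the same arithmetic is applied across all pixels and channels of each 299 x 299 px tile at once.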

During inference,

- A given image undergoes 30 forward passes in the network, resulting in a distribution, where
  - The mean is the final prediction
  - The standard deviation is the uncertainty
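The inference procedure above can be sketched in a few lines of plain Python. This is a minimal sketch, not the Slideflow implementation: `mc_dropout_predict` and `toy_forward` are hypothetical names, the toy forward pass only imitates the randomness that dropout introduces, and the use of the population standard deviation is an assumption, since the card does not say which estimator is used.

```python
import random
import statistics


def mc_dropout_predict(stochastic_forward, image, n_passes=30):
    """Summarise repeated stochastic forward passes for one image.

    Returns (prediction, uncertainty): the mean and standard deviation
    of the n_passes outputs.
    """
    samples = [stochastic_forward(image) for _ in range(n_passes)]
    prediction = statistics.mean(samples)
    # Assumption: population standard deviation (divide by N)
    uncertainty = statistics.pstdev(samples)
    return prediction, uncertainty


# Hypothetical stand-in for a network with dropout left on at inference:
# a fixed score plus small random noise on each pass.
rng = random.Random(0)


def toy_forward(image):
    return 0.8 + rng.uniform(-0.02, 0.02)


prediction, uncertainty = mc_dropout_predict(toy_forward, image=None)
```

With a real model, `stochastic_forward` would be a call that keeps dropout active, so each of the 30 passes yields a slightly different output for the same tile.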

Tile-level and slide-level uncertainty thresholds are calculated and applied as discussed in the [Paper](https://www.nature.com/articles/s41467-022-34025-x). For this model, θ_tile=0.0228 and θ_slide=0.0139.
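One way these thresholds might be applied is sketched below. Only the two threshold values and the label encoding (adenocarcinoma=0, squamous=1) come from this card; the rest is an assumption made for illustration: tiles with uncertainty below θ_tile are kept, slide-level prediction and uncertainty are taken as the means over the kept tiles, and a 0.5 cutoff assigns the label. The paper defines the actual procedure, and the function name `summarise_slide` is hypothetical.

```python
from statistics import mean

THETA_TILE = 0.0228   # tile-level uncertainty threshold from this card
THETA_SLIDE = 0.0139  # slide-level uncertainty threshold from this card


def summarise_slide(tiles, theta_tile=THETA_TILE, theta_slide=THETA_SLIDE):
    """Aggregate (prediction, uncertainty) pairs for the tiles of one slide.

    Assumed aggregation: discard tiles at or above theta_tile, then average
    the predictions and uncertainties of the remaining tiles.
    """
    confident = [(p, u) for p, u in tiles if u < theta_tile]
    if not confident:
        return None  # no high-confidence tiles to aggregate
    slide_prediction = mean([p for p, _ in confident])
    slide_uncertainty = mean([u for _, u in confident])
    return {
        "prediction": slide_prediction,
        "uncertainty": slide_uncertainty,
        "high_confidence": slide_uncertainty < theta_slide,
        # Assumed 0.5 cutoff; adenocarcinoma=0 and squamous=1 as in training
        "label": "squamous" if slide_prediction >= 0.5 else "adenocarcinoma",
    }


# Hypothetical tile outputs: the third tile is too uncertain and is dropped
example = summarise_slide([(0.90, 0.010), (0.80, 0.012), (0.20, 0.050)])
```

Returning `None` when no tile passes the filter keeps low-confidence slides from silently receiving a label.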

- **Hardware:** 1 x A100 GPU
- **Optimizer:** Adam
- **Batch:** 128
- **Learning rate:** 0.0001, with a decay of 0.98 every 512 steps
- **Hidden layers:** 2 hidden layers of width 1024, with dropout p=0.1
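The arithmetic behind these hyperparameters can be checked with a short sketch. The numbers come from this card; whether the decay is applied in discrete jumps every 512 steps (staircase) or continuously is not stated, so the `staircase` flag and the function name `learning_rate` are assumptions made for illustration.

```python
BASE_LR = 1e-4      # initial learning rate
DECAY_RATE = 0.98   # multiplicative decay factor
DECAY_STEPS = 512   # steps between decays
BATCH_SIZE = 128
FINAL_STEP = 1451   # predetermined stopping step


def learning_rate(step, staircase=True):
    """Exponentially decayed learning rate at a given training step."""
    # Staircase: decay in whole jumps; otherwise decay smoothly per step
    exponent = step // DECAY_STEPS if staircase else step / DECAY_STEPS
    return BASE_LR * DECAY_RATE ** exponent


# Images seen by the stopping step: 1451 steps x 128 images per batch
images_seen = FINAL_STEP * BATCH_SIZE

# Under the staircase assumption, two decays have occurred by step 1451
final_lr = learning_rate(FINAL_STEP)
```

Here `images_seen` evaluates to 185,728, matching the figure given in the training procedure, and under the staircase assumption the learning rate at the stopping step is 0.0001 x 0.98^2.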

|

| 125 |

+

|

| 126 |

+

## Evaluation Results

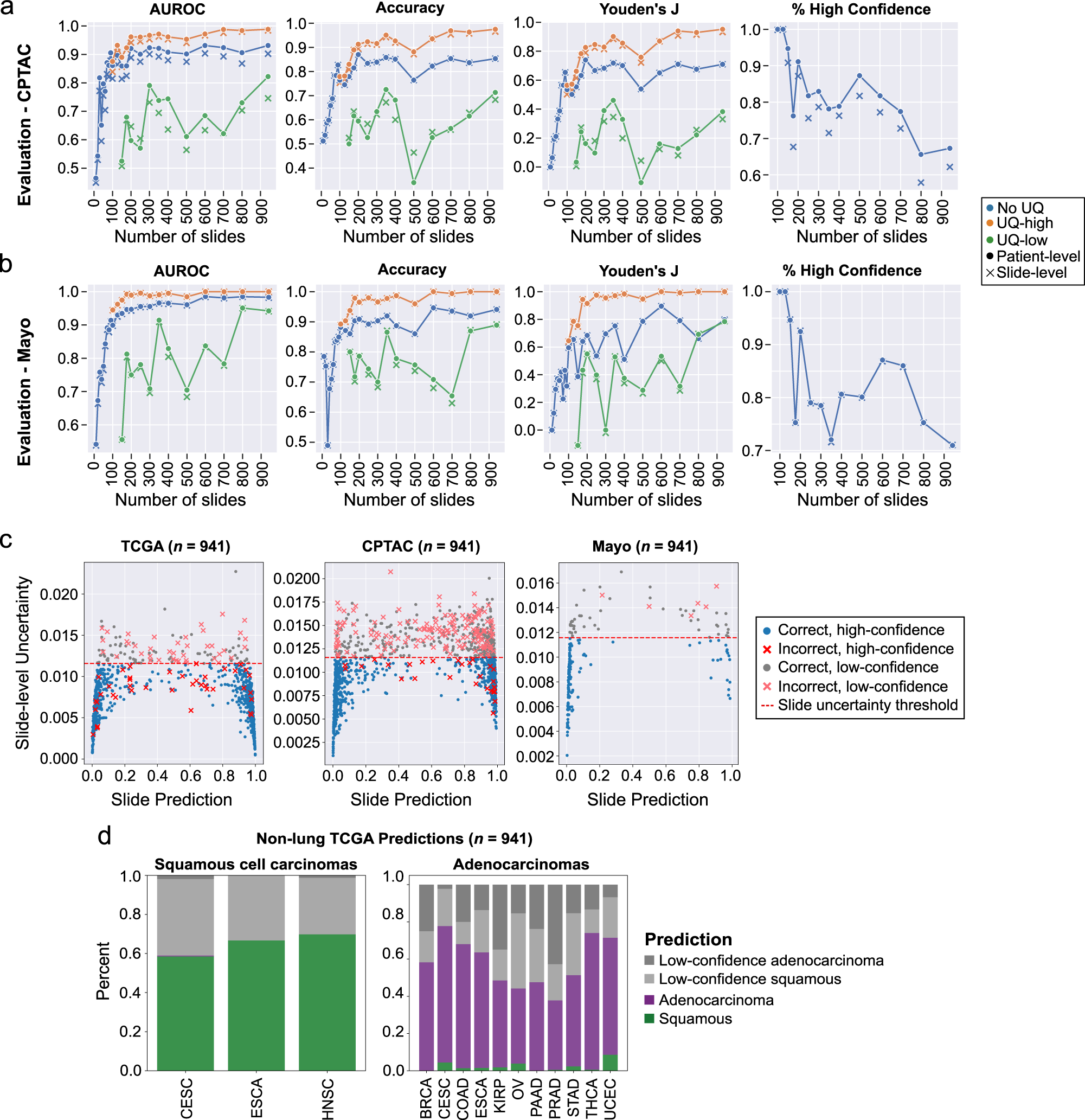

|

| 127 |

+

External evaluation results in the CPTAC and Mayo Clinic dataset are presented in the [Paper](https://www.nature.com/articles/s41467-022-34025-x) and shown here:

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

|

| 131 |

+

## Citation

@ARTICLE{Dolezal2022-qa,
  title   = "Uncertainty-informed deep learning models enable high-confidence
             predictions for digital histopathology",
  author  = "Dolezal, James M and Srisuwananukorn, Andrew and Karpeyev, Dmitry
             and Ramesh, Siddhi and Kochanny, Sara and Cody, Brittany and
             Mansfield, Aaron S and Rakshit, Sagar and Bansal, Radhika and
             Bois, Melanie C and Bungum, Aaron O and Schulte, Jefree J and
             Vokes, Everett E and Garassino, Marina Chiara and Husain, Aliya N
             and Pearson, Alexander T",
  journal = "Nature Communications",
  volume  = 13,
  number  = 1,
  pages   = "6572",
  month   = nov,
  year    = 2022
}