---

license: other
tags:
- stable-diffusion
- art
language:
- en
pipeline_tag: text-to-image

---

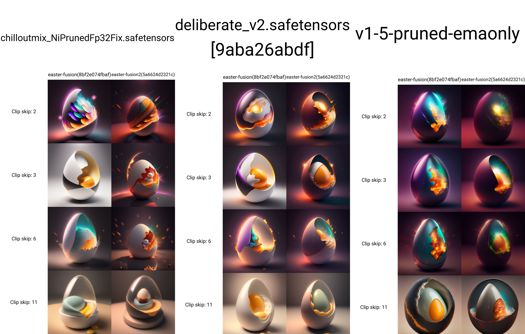

A fully revamped checkpoint based on the 512-dim LoRA and chilloutmix_NiPrunedFp32Fix + deliberate_v2.

Training data: 512 DIM LORA

https://civitai.com/models/41893/lora-eggeaster-fusion

Here is how it looks using the lora on top of models:

More info on the Fusion project is soon to be announced ;)

🤟 🥃

LoRA weights: TEnc weight 0.2, UNet weight 1

Merged the 512-dim LoRA into both Chillout and Deliberate with --ratios 0.99 toward my Fusion LoRA.
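For readers unfamiliar with what a merge ratio does, here is a minimal sketch of the usual LoRA merge arithmetic for a single layer: the low-rank update `up @ down` is scaled by `alpha / rank` and by the ratio, then added to the base weight. This is an assumption about the general technique, not a record of the exact tool used here. The function names, the toy matrices, and `alpha=1.0` are all hypothetical; only the 0.99 ratio comes from this card.

```python
# Sketch only: pure-Python stand-in for merging one LoRA layer into a base weight.
# Real checkpoints hold tensors; small nested lists keep the arithmetic visible.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def merge_lora_layer(weight, up, down, alpha, ratio):
    """Return weight + ratio * (alpha / rank) * (up @ down)."""
    rank = len(down)          # down has shape (rank, in_features)
    scale = alpha / rank      # common LoRA scaling convention (assumed)
    delta = matmul(up, down)  # full-size update, shape (out_features, in_features)
    return [[w + ratio * scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(weight, delta)]

# Toy 2x2 layer with a rank-1 LoRA; every number except the ratio is made up.
base = [[1.0, 0.0], [0.0, 1.0]]
up = [[1.0], [2.0]]   # shape (out_features, rank)
down = [[0.5, 0.5]]   # shape (rank, in_features)
merged = merge_lora_layer(base, up, down, alpha=1.0, ratio=0.99)
print(merged)
```

With a ratio of 0.99 the LoRA update is applied at almost full strength; a ratio of 0 would leave the base weight unchanged.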

C is Chillout, D is Deliberate.

Merged C and D with:

Base_alpha=0.53

Weight_values=0,0.157576195987654,0.28491512345679,0.384765625,0.459876543209877,0.512996720679012,0.546875,0.564260223765432,0.567901234567901,0.560546875,0.544945987654321,0.523847415123457,0.5,0.476152584876543,0.455054012345679,0.439453125,0.432098765432099,0.435739776234568,0.453125,0.487003279320987,0.540123456790124,0.615234375,0.71508487654321,0.842423804012347,1

and

1,0.842423804012346,0.71508487654321,0.615234375,0.540123456790123,0.487003279320988,0.453125,0.435739776234568,0.432098765432099,0.439453125,0.455054012345679,0.476152584876543,0.5,0.523847415123457,0.544945987654321,0.560546875,0.567901234567901,0.564260223765432,0.546875,0.512996720679013,0.459876543209876,0.384765625,0.28491512345679,0.157576195987653,0
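The two weight lists above are mirror images of each other. A quick check, using only the values from this card, shows that the second list is the first one reversed and also equals one minus the first (up to rounding in the last printed digit). The variable names are just for illustration.

```python
# Both lists are copied verbatim from the card.
first = [float(x) for x in (
    "0,0.157576195987654,0.28491512345679,0.384765625,0.459876543209877,"
    "0.512996720679012,0.546875,0.564260223765432,0.567901234567901,"
    "0.560546875,0.544945987654321,0.523847415123457,0.5,0.476152584876543,"
    "0.455054012345679,0.439453125,0.432098765432099,0.435739776234568,"
    "0.453125,0.487003279320987,0.540123456790124,0.615234375,"
    "0.71508487654321,0.842423804012347,1"
).split(",")]
second = [float(x) for x in (
    "1,0.842423804012346,0.71508487654321,0.615234375,0.540123456790123,"
    "0.487003279320988,0.453125,0.435739776234568,0.432098765432099,"
    "0.439453125,0.455054012345679,0.476152584876543,0.5,0.523847415123457,"
    "0.544945987654321,0.560546875,0.567901234567901,0.564260223765432,"
    "0.546875,0.512996720679013,0.459876543209876,0.384765625,"
    "0.28491512345679,0.157576195987653,0"
).split(",")]

TOL = 1e-12  # the lists differ only in the last printed decimal digit

# Second list equals the first list read backwards.
is_reversed = all(abs(a - b) < TOL for a, b in zip(first[::-1], second))
# Second list equals 1 - first, element by element.
is_complement = all(abs((1 - a) - b) < TOL for a, b in zip(first, second))

print(len(first), len(second), is_reversed, is_complement)
```

Each list has 25 entries, which matches the common 12 input + 1 middle + 12 output block layout of the SD 1.x UNet.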

Both results were then merged with:

Weight_values=

1,0.842423804012346,0.71508487654321,0.615234375,0.540123456790123,0.487003279320988,0.453125,0.435739776234568,0.432098765432099,0.439453125,0.455054012345679,0.476152584876543,0.5,0.523847415123457,0.544945987654321,0.560546875,0.567901234567901,0.564260223765432,0.546875,0.512996720679013,0.459876543209876,0.384765625,0.28491512345679,0.157576195987653,0
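As a rough illustration of how a 25-value weight list and a base alpha can drive a merge, here is a minimal sketch of a block-weighted merge. It assumes the common convention `merged = (1 - alpha) * A + alpha * B`, with one alpha per UNet block and `base_alpha` for everything else. The key names, the function names, and the placeholder ramp weights are assumptions for illustration; the merge tool actually used is not stated in this card, and real tools may treat some non-block keys (for example `time_embed` or `out`) differently.

```python
import re

NUM_IN = 12  # assumed SD 1.x UNet layout: 12 input blocks, 1 middle, 12 output

def block_index(key):
    """Map a parameter name to a block index 0..24, or None for other keys."""
    m = re.search(r"\.input_blocks\.(\d+)\.", key)
    if m:
        return int(m.group(1))
    if ".middle_block." in key:
        return NUM_IN
    m = re.search(r"\.output_blocks\.(\d+)\.", key)
    if m:
        return NUM_IN + 1 + int(m.group(1))
    return None

def merge_block_weighted(model_a, model_b, weights, base_alpha):
    """Interpolate A toward B with a per-block alpha (base_alpha elsewhere)."""
    if len(weights) != 25:
        raise ValueError("expected 25 block weights")
    merged = {}
    for key, a in model_a.items():
        if key not in model_b:
            merged[key] = a  # keep A's value when B has no matching key
            continue
        idx = block_index(key)
        alpha = base_alpha if idx is None else weights[idx]
        merged[key] = (1 - alpha) * a + alpha * model_b[key]
    return merged

# Toy "checkpoints": plain floats stand in for tensors, key names are assumed.
keys = [
    "model.diffusion_model.input_blocks.0.0.weight",
    "model.diffusion_model.middle_block.0.in_layers.0.weight",
    "model.diffusion_model.output_blocks.11.0.in_layers.0.weight",
    "cond_stage_model.transformer.text_model.final_layer_norm.weight",
]
model_a = {k: 0.0 for k in keys}
model_b = {k: 1.0 for k in keys}

ramp = [i / 24 for i in range(25)]  # placeholder weights, not the card's values
result = merge_block_weighted(model_a, model_b, ramp, base_alpha=0.53)
for k, v in result.items():
    print(k, v)
```

Because model A is all zeros and model B is all ones in this toy setup, each merged value is simply the alpha that was applied to that key, which makes the per-block behaviour easy to inspect.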

And voilà: here is the Egg_fusion checkpoint with the 512-dim trained LoRA.

Enjoy your eggs 🤟 🥃