---
license: apache-2.0
language:
- en
tags:
- Pytorch
- mmsegmentation
- segmentation
- Flood mapping
- Sentinel-2
- Geospatial
- Foundation model
metrics:
- accuracy
- IoU
---

Model and Inputs

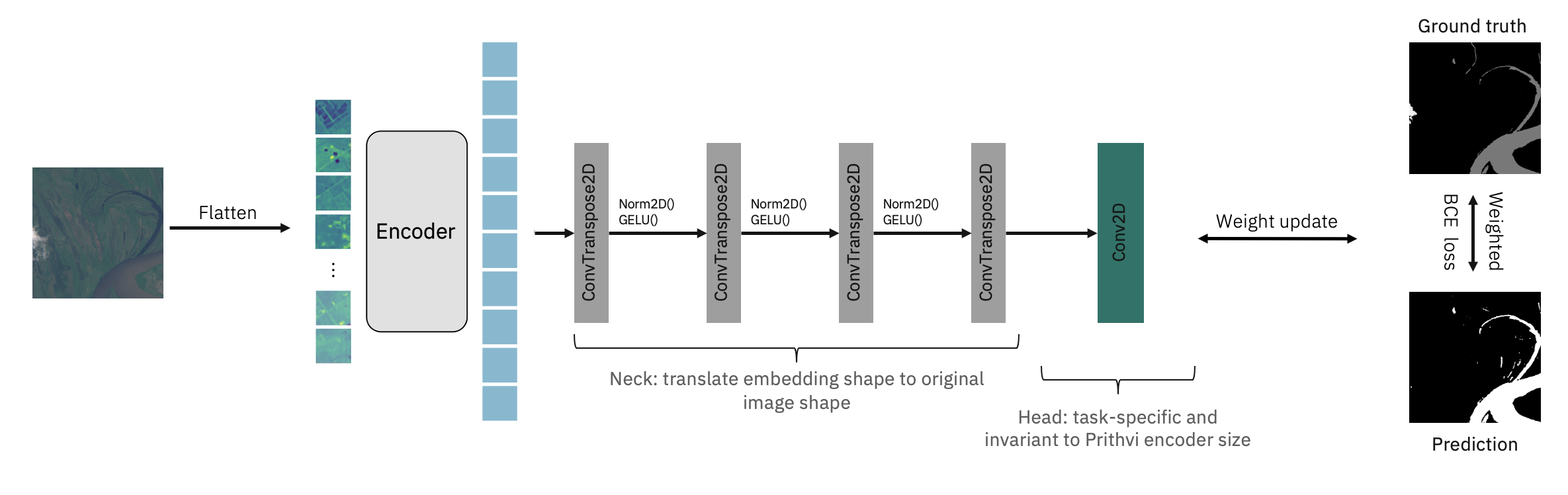

The pretrained Prithvi-100m model is finetuned to segment the extent of floods on Sentinel-2 images from the Sen1Floods11 dataset.

The dataset consists of 446 labeled 512x512 chips that span all 14 biomes, 357 ecoregions, and 6 continents of the world across 11 flood events. The benchmark associated with Sen1Floods11 provides results for fully convolutional neural networks trained in various input/labeled data setups, using Sentinel-1 and Sentinel-2 imagery.

We extract the following bands for flood mapping:

- Blue

- Green

- Red

- Narrow NIR

- SWIR 1

- SWIR 2
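The band selection above can be sketched as a lookup from band names to positions in a source chip. This is a minimal sketch, not the repository's preprocessing code: it assumes the source chip is a 13-band Sentinel-2 stack ordered B1 through B12 (with B8A after B8), which this card does not state, and the names `S2_BANDS`, `MODEL_BANDS`, `band_indices`, and `select_bands` are hypothetical. The band IDs themselves (B2, B3, B4, B8A, B11, B12) are the standard Sentinel-2 identifiers for the six bands listed.

```python
# Assumed order of bands in a 13-band Sentinel-2 chip (not stated in this card).
S2_BANDS = ["B1", "B2", "B3", "B4", "B5", "B6", "B7",
            "B8", "B8A", "B9", "B10", "B11", "B12"]

# Standard Sentinel-2 band IDs for the six bands the model uses, in model order.
MODEL_BANDS = {
    "Blue": "B2",
    "Green": "B3",
    "Red": "B4",
    "Narrow NIR": "B8A",
    "SWIR 1": "B11",
    "SWIR 2": "B12",
}


def band_indices(source_order=S2_BANDS):
    """Return the zero-based positions of the six model bands in the source chip."""
    return [source_order.index(band_id) for band_id in MODEL_BANDS.values()]


def select_bands(stack, source_order=S2_BANDS):
    """Pick the six model bands from a stack indexed by band on its first axis.

    `stack` can be a list of per-band arrays or any object that supports
    integer indexing along the band axis.
    """
    return [stack[i] for i in band_indices(source_order)]
```

If the source chips use a different band layout, only `S2_BANDS` needs to change; the model-side order stays fixed.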

Labels represent no water (class 0), water/flood (class 1), and no data/clouds (class 2).

The Prithvi-100m model was initially pretrained using a sequence length of 3 timesteps. Based on the characteristics of this benchmark dataset, we focus on single-timestep segmentation. This demonstrates that the model can be finetuned with an arbitrary number of timesteps, not only the sequence length used in pretraining.

Code

The code for this finetuning is available through GitHub.

The configuration used for finetuning is available through this config.

Results

Finetuning the geospatial foundation model for 100 epochs leads to the following performance on out-of-sample test data:

| Classes | IoU | Acc |
|---|---|---|
| No water | 96.90% | 98.11% |
| Water/Flood | 80.46% | 90.54% |

| aAcc | mIoU | mAcc |
|---|---|---|
| 97.25% | 88.68% | 94.37% |
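For readers unfamiliar with the metric names, the following sketch shows how per-class IoU and Acc and the aggregate aAcc, mIoU, and mAcc are commonly computed in semantic segmentation. It is an illustration, not the evaluation code behind the numbers above: the function name `segmentation_metrics` is hypothetical, and treating class 2 (no data/clouds) as an ignored label is an assumption about the evaluation setup.

```python
def segmentation_metrics(pred, target, num_classes=2, ignore_index=2):
    """Compute IoU/Acc per class plus aAcc, mIoU, mAcc from flat label sequences.

    `pred` and `target` are equal-length sequences of integer class IDs.
    Pixels whose target equals `ignore_index` are skipped (assumed here to be
    the no data/clouds class). Predictions must lie in range(num_classes).
    """
    intersect = [0] * num_classes   # correctly predicted pixels per class
    pred_area = [0] * num_classes   # pixels predicted as each class
    label_area = [0] * num_classes  # pixels labeled as each class

    for p, t in zip(pred, target):
        if t == ignore_index:
            continue
        pred_area[p] += 1
        label_area[t] += 1
        if p == t:
            intersect[p] += 1

    iou, acc = [], []
    for c in range(num_classes):
        union = pred_area[c] + label_area[c] - intersect[c]
        # Classes that never occur are reported as None and left out of the means.
        iou.append(intersect[c] / union if union else None)
        acc.append(intersect[c] / label_area[c] if label_area[c] else None)

    def mean(values):
        present = [v for v in values if v is not None]
        return sum(present) / len(present) if present else None

    total = sum(label_area)
    return {
        "IoU": iou,                                        # per class
        "Acc": acc,                                        # per class
        "aAcc": sum(intersect) / total if total else None,  # overall pixel accuracy
        "mIoU": mean(iou),
        "mAcc": mean(acc),
    }


# Toy example: six pixels, one of which is labeled no data and ignored.
metrics = segmentation_metrics(pred=[0, 0, 1, 1, 1, 0], target=[0, 1, 1, 1, 2, 0])
```

Under these definitions, Acc is the fraction of a class's labeled pixels that were predicted correctly, aAcc is the fraction of all valid pixels predicted correctly, and mIoU and mAcc are unweighted means over classes.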

The performance of the model has been further validated on an unseen, holdout flood event in Bolivia. The results are consistent with the performance on the test set:

| Classes | IoU | Acc |
|---|---|---|
| No water | 95.37% | 97.39% |
| Water/Flood | 77.95% | 88.74% |

| aAcc | mIoU | mAcc |
|---|---|---|
| 96.02% | 86.66% | 93.07% |

Finetuning took ~1 hour on an NVIDIA V100.

Inference

The GitHub repository includes an inference script for running the flood mapping model on Sentinel-2 images. Inputs must be in GeoTIFF format and contain the 6 bands described above for a single timestep, in this order: Blue, Green, Red, Narrow NIR, SWIR 1, SWIR 2. There is also a demo that leverages the same code here.