datasets:
- wikitext
language:
- en
metrics:
- perplexity

## Model Details

GPT-2 pretrained on WikiText-103 (180M sentences) on a single 32GB V100 GPU for around 1.10 lakh (110,000) iterations.

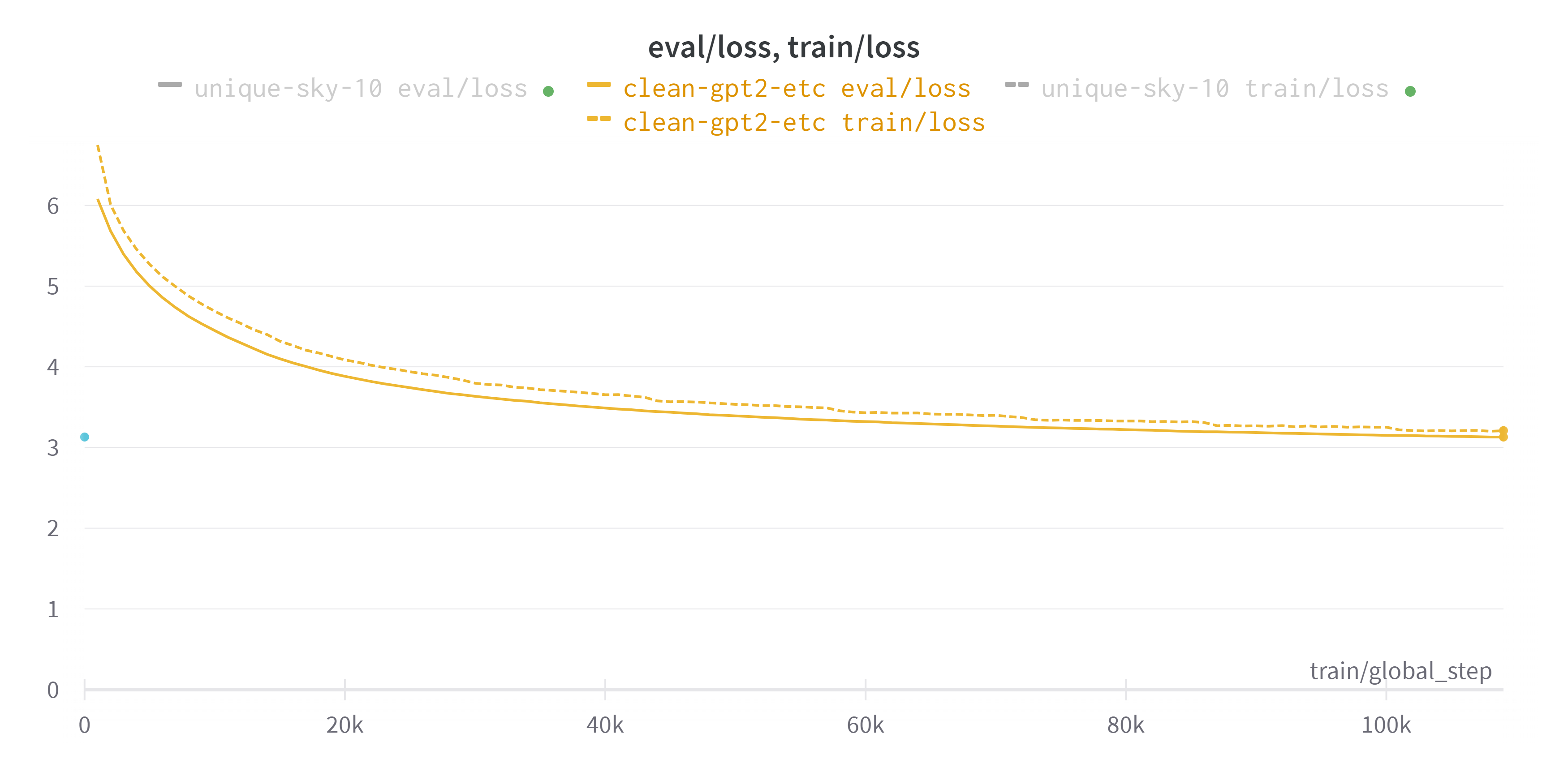

| Val_loss vs train_loss: | |

|  | |

### Model Description

Perplexity: 22.87
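The card does not say how this perplexity was computed. Assuming the usual definition (the exponential of the mean per-token negative log-likelihood, in nats), the relationship between the loss curves and the reported figure can be sketched as follows. The function name `perplexity_from_nll` is hypothetical and is not part of this model's code.

```python
import math


def perplexity_from_nll(token_nlls):
    """Return perplexity from per-token negative log-likelihoods (in nats).

    Assumption: perplexity = exp(mean NLL), the standard definition for
    causal language models; the card does not confirm this is what was used.
    """
    if not token_nlls:
        raise ValueError("need at least one token loss")
    mean_nll = sum(token_nlls) / len(token_nlls)
    return math.exp(mean_nll)


# Under that assumption, a perplexity of 22.87 corresponds to a mean
# cross-entropy loss of ln(22.87) nats per token.
mean_loss = math.log(22.87)
print(f"mean loss: {mean_loss:.4f} nats")
print(f"perplexity: {perplexity_from_nll([mean_loss] * 8):.2f}")
```

Under this definition, a validation loss read off the curve can be converted to perplexity directly with `math.exp`.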

### Out-of-Scope Use

This is only a test model. Please do not expect good results.