---

license: apache-2.0

task_categories:

- image-classification

language:

- en

tags:

- yolo

- opensource

- computervision

- imageprocessing

- yolov3

- yolov4

- labelimg

pretty_name: ToyCarAnnotation

size_categories:

- n<1K

---

Hey everyone,

For my final year project, I created a **Smart Traffic Management System**.

The goal was to adjust traffic light delays based on the number of vehicles on the road.
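To make the idea concrete, here is a minimal sketch of count-based timing. It is not the project's actual logic: the function name `green_time`, the linear rule, and every constant (`base_s`, `per_vehicle_s`, `max_s`) are assumptions chosen only for illustration.

```python
from typing import Dict


def green_time(vehicle_count: int,
               base_s: float = 5.0,
               per_vehicle_s: float = 2.0,
               max_s: float = 60.0) -> float:
    """Return a green-light duration in seconds for one lane.

    Hypothetical rule: a fixed base time plus a fixed amount per detected
    vehicle, capped so that one busy lane cannot starve the others.
    """
    count = max(0, vehicle_count)  # guard against negative counts
    return min(max_s, base_s + per_vehicle_s * count)


# Example vehicle counts per lane, as a detector might report them.
counts: Dict[str, int] = {"north": 3, "east": 0, "south": 12, "west": 40}
delays = {lane: green_time(n) for lane, n in counts.items()}

for lane, seconds in delays.items():
    print(f"{lane}: {seconds:.0f} s green")
```

With these assumed constants, an empty lane still gets the 5-second base time and a very busy lane is capped at 60 seconds.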

I got everything working on a Raspberry Pi with pre-recorded videos, but since this was a final year project, it had to be tested by changing videos frequently, which was a bit of a hassle. Collecting tons of videos and loading them onto the Pi was not too hard, but renaming the videos in the code every time would have cost time. Also, it was not possible to deploy it on real roads *(unless the govt. had permitted me, hehe)*. So I chose to showcase my work by building a beautiful prototype.

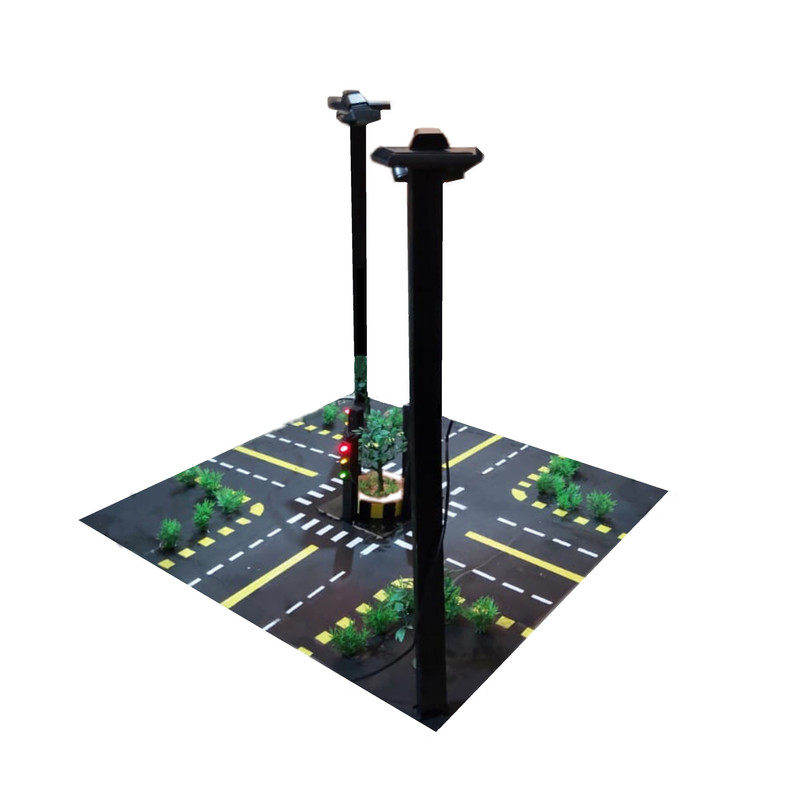

[](https://postimg.cc/jDBySvRP)

I know the image isn't so appealing, and I apologise for that, but you get the idea, right?

I placed my cars on the tracks and captured real-time video of the lanes from two cameras attached to two big sticks.

***Why only two cameras when there are four roads?***

The Raspberry Pi I used supported only two cameras. In my case, the camera indexes were 0 and 2.

To make things work as I had planned, I cropped each camera's image into one image per lane.

***What does it mean?***

Let us take one camera and the respective two roads as an example.

I captured real-time video and extracted frames from it. Since the roads beneath the cars were supposed to stay still *(obvio, cars move, not roads :>)*, I extracted one frame every 2 seconds of video. The frames were first cropped and then saved on the Pi. I resized each frame, found the coordinates where the two roads separate, cropped the frame at those coordinates, and got 2 images of 2 separate roads from 1 camera.
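The sampling and cropping steps can be sketched roughly as below. This is an illustration under assumptions, not the original code: the helper names `frame_indices` and `split_lanes` are hypothetical, the two roads are assumed to sit side by side so that a single column `split_x` separates them, and a blank NumPy array stands in for a real camera frame.

```python
from typing import List, Tuple

import numpy as np


def frame_indices(fps: float, total_frames: int,
                  interval_s: float = 2.0) -> List[int]:
    """Indices of the frames to keep when sampling one frame every interval_s."""
    step = max(1, round(fps * interval_s))  # frames between two samples
    return list(range(0, total_frames, step))


def split_lanes(frame: np.ndarray, split_x: int) -> Tuple[np.ndarray, np.ndarray]:
    """Cut one camera frame (height x width x channels) into two lane images.

    Assumes the roads are separated by a vertical line at column split_x.
    """
    left_lane = frame[:, :split_x]
    right_lane = frame[:, split_x:]
    return left_lane, right_lane


# A 10-second clip at 30 fps, sampled every 2 seconds.
print(frame_indices(fps=30, total_frames=300))  # [0, 60, 120, 180, 240]

# Stand-in for a resized camera frame: 240 rows, 320 columns, 3 colour channels.
frame = np.zeros((240, 320, 3), dtype=np.uint8)
left, right = split_lanes(frame, split_x=150)
print(left.shape, right.shape)  # (240, 150, 3) (240, 170, 3)
```

On the Pi, the frames would come from the camera instead of `np.zeros`, and `split_x` would be the measured coordinate where the two roads separate.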

Finally, I ran my code and found that it could detect only a few cars. I thought real and toy cars looked quite similar, but the model didn't agree. My YOLO weights file was trained on real cars, so I had to train it again.

I looked for datasets already available but couldn't find any. So I decided to make one.

I collected images from different web sources and performed the most important task on each of them: ***ANNOTATION***, using LabelImg.

I annotated around 1000 images one by one in YOLO format, did all the processing and created this dataset. For YOLO especially, you can usually find pictures on the internet but not the matching text files, so you have to annotate every image yourself. It takes time, and I couldn't find any tool to do it in bulk, because you have to properly mark how many cars are in each picture. Maybe in the future LabelImg will get updated with some machine learning algorithm for detecting and annotating images automatically (who knows).
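For anyone new to the YOLO text format that LabelImg writes: each line of a label file describes one box as `class_id x_center y_center width height`, with the four box values normalised to the image size (between 0 and 1). Here is a small sketch of reading such a file. The function name `parse_yolo_labels`, the sample label text, and the 640x480 image size are made up for illustration and are not taken from this dataset.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int, int]  # class_id, x_min, y_min, x_max, y_max


def parse_yolo_labels(text: str, img_w: int, img_h: int) -> List[Box]:
    """Convert YOLO label lines into pixel-coordinate boxes."""
    boxes: List[Box] = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 5:
            continue  # skip blank or malformed lines
        class_id = int(parts[0])
        xc, yc, w, h = (float(p) for p in parts[1:])
        # Normalised centre/size -> pixel corners.
        x_min = round((xc - w / 2) * img_w)
        y_min = round((yc - h / 2) * img_h)
        x_max = round((xc + w / 2) * img_w)
        y_max = round((yc + h / 2) * img_h)
        boxes.append((class_id, x_min, y_min, x_max, y_max))
    return boxes


# Made-up label file content: two boxes, both class 0.
sample = "0 0.5 0.5 0.25 0.5\n0 0.25 0.75 0.1 0.2\n"
boxes = parse_yolo_labels(sample, img_w=640, img_h=480)

print(len(boxes), "boxes annotated")  # 2 boxes annotated
print(boxes[0])                       # (0, 240, 120, 400, 360)
```

The number of lines in a label file is the number of annotated objects in the matching image, which is why every car has to be boxed by hand.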

So here it is, and I hope it helps.

I will add the notebook as well in some time.

Any questions? Drop them below. Do like if it's helpful.

***You can find me on:***

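[https://www.github.com/tubasid](https://www.github.com/tubasid)

[https://www.linkedin.com/in/tubasid](https://www.linkedin.com/in/tubasid)

[https://www.twitter.com/in/tubaasid](https://www.twitter.com/in/tubaasid)

[https://www.discord.com/channels/@tubasid](https://www.discord.com/channels/@tubasid)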

Until next post.

***TubaSid***
