---
dataset_info:
  features:
  - name: url
    dtype: string
  - name: text
    dtype: string
  - name: date
    dtype: string
  - name: metadata
    dtype: string
  splits:
  - name: train
    num_bytes: 56651995057
    num_examples: 6315233
  download_size: 16370689925
  dataset_size: 56651995057
---

Keiran Paster*, Marco Dos Santos*, Zhangir Azerbayev, Jimmy Ba

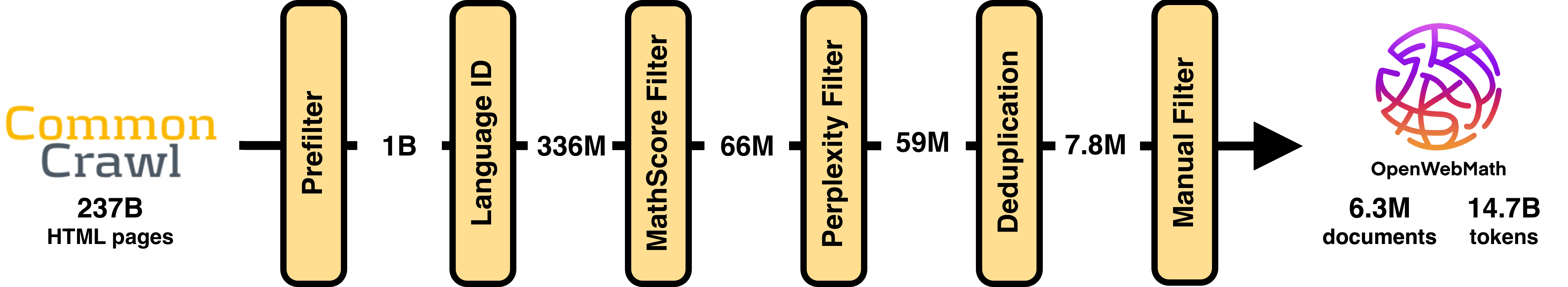

OpenWebMath is a dataset containing the majority of the high-quality mathematical text on the internet. It was extracted and filtered from over 200B HTML files on Common Crawl, yielding 6.3 million documents containing 14.7B tokens. OpenWebMath is intended for use in pretraining and finetuning large language models.

You can download the dataset using the Hugging Face `datasets` library:

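```python
from datasets import load_dataset

# Returns a DatasetDict with a single "train" split
# (roughly a 16 GB download, about 57 GB once prepared).
ds = load_dataset("open-web-math/open-web-math")
```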

Important Links:

Dataset Contents

The dataset is structured as follows:

```python
{
    "text": ...,      # text of the document
    "url": ...,       # URL of the document
    "date": ...,      # date the page was crawled
    "metadata": ...,  # a JSON string containing information from the extraction process
}
```
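Because `metadata` is stored as a JSON string rather than as a nested structure, it has to be decoded before its fields can be used. The following is a minimal sketch, assuming each record is a plain Python dict with the four fields above. The keys inside `metadata` are not documented here, so the example record and its `extraction_info` key are hypothetical and exist only for illustration.

```python
import json
from typing import Any


def parse_metadata(record: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of `record` with its JSON-encoded `metadata` field decoded."""
    parsed = dict(record)  # shallow copy so the original record is left untouched
    raw = record.get("metadata") or "{}"  # treat a missing or empty string as an empty object
    parsed["metadata"] = json.loads(raw)
    return parsed


# Hypothetical record; the key inside `metadata` is invented for illustration.
example = {
    "url": "https://example.com/some-math-page",
    "text": "Let $x^2 = 4$. Then $x = \\pm 2$.",
    "date": "2023-01-01",
    "metadata": '{"extraction_info": {"found_math": true}}',
}

decoded = parse_metadata(example)
print(decoded["metadata"]["extraction_info"]["found_math"])  # True
```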

OpenWebMath contains documents from over 130k different domains, including data from forums, educational pages, and blogs. The dataset contains documents covering mathematics, physics, statistics, computer science, and more. We summarize the content distribution of OpenWebMath in Figure 1 and Table 1.

Table 1: Most Common Domains in OpenWebMath by Character Count

| Domain | # Characters | % Characters |
|---|---:|---:|
| stackexchange.com | 4,655,132,784 | 9.55% |
| nature.com | 1,529,935,838 | 3.14% |
| wordpress.com | 1,294,166,938 | 2.66% |
| physicsforums.com | 1,160,137,919 | 2.38% |
| github.io | 725,689,722 | 1.49% |
| zbmath.org | 620,019,503 | 1.27% |
| wikipedia.org | 618,024,754 | 1.27% |
| groundai.com | 545,214,990 | 1.12% |
| blogspot.com | 520,392,333 | 1.07% |
| mathoverflow.net | 499,102,560 | 1.02% |
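A ranking like Table 1 can be approximated by grouping documents by domain and summing the length of their `text` field. This is a sketch of one way to compute such counts, not necessarily how the table was produced: it assumes the domain is the last two labels of the URL's hostname (so `math.stackexchange.com` counts toward `stackexchange.com`), a heuristic that mishandles multi-part suffixes such as `.co.uk`. The sample records are made up.

```python
from collections import Counter
from urllib.parse import urlsplit


def domain_of(url: str) -> str:
    """Naive registered-domain heuristic: keep the last two hostname labels."""
    host = urlsplit(url).hostname or ""
    return ".".join(host.split(".")[-2:])


def char_share_by_domain(records, top_k=10):
    """Return (domain, characters, percent) tuples for the top_k domains by character count."""
    counts = Counter()
    for rec in records:
        counts[domain_of(rec["url"])] += len(rec["text"])
    total = sum(counts.values())
    if total == 0:
        return []  # avoid dividing by zero when every text is empty
    return [
        (domain, chars, 100.0 * chars / total)
        for domain, chars in counts.most_common(top_k)
    ]


# Made-up sample records, not real OpenWebMath rows.
sample = [
    {"url": "https://math.stackexchange.com/q/1", "text": "a" * 60},
    {"url": "https://physics.stackexchange.com/q/2", "text": "b" * 20},
    {"url": "https://someone.github.io/post", "text": "c" * 20},
]

for domain, chars, pct in char_share_by_domain(sample):
    print(f"{domain:20} {chars:>5,} {pct:6.2f}%")
```

Note that `len` counts Unicode code points, which is one reasonable definition of "characters" but may not match the one used for the table.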

Figure 1: Content Distribution of OpenWebMath by Type and Subject

Dataset Pipeline

Figure 2: OpenWebMath Pipeline

OpenWebMath builds on the massive Common Crawl dataset, which contains over 200B HTML documents. We filtered the data to include only documents that (1) are in English, (2) contain mathematical content, and (3) are of high quality. We also put strong emphasis on extracting LaTeX content from the HTML documents and on reducing boilerplate compared with other web datasets.

The OpenWebMath pipeline consists of five steps:

Prefiltering HTML Documents:

- We apply a simple prefilter to all HTML documents in Common Crawl to skip documents without mathematical content, avoiding unnecessary processing time.
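A prefilter of this kind only needs to be cheap, not precise, because it runs on raw HTML before any parsing. A minimal sketch, assuming a hypothetical list of math-related markers (MathJax/KaTeX references, MathML tags, common LaTeX commands); OpenWebMath's actual prefilter rules may differ.

```python
import re

# Hypothetical markers suggesting mathematical content; not OpenWebMath's actual list.
MATH_MARKERS = re.compile(
    r"MathJax|katex|<math\b|\\frac|\\sum|\\int|\\begin\{equation\}",
    re.IGNORECASE,
)


def passes_prefilter(html: str) -> bool:
    """Return True if the raw HTML contains at least one math marker."""
    return MATH_MARKERS.search(html) is not None


pages = {
    "recipe": "<html><body><p>Preheat the oven to 200C.</p></body></html>",
    "mathjax": '<script src="https://cdn.example.org/MathJax.js"></script><p>$\\frac{1}{2}$</p>',
}
kept = [name for name, html in pages.items() if passes_prefilter(html)]
print(kept)  # ['mathjax']
```

A single compiled regex keeps the per-page cost to one linear scan, which matters when the input is hundreds of billions of pages; false positives are acceptable because later stages filter more carefully.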

Text Extraction:

- Extract text, including LaTeX content, from the HTML documents while removing boilerplate.
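One common way math appears in HTML is MathJax's `<script type="math/tex">` tags, whose contents a naive text extractor would discard along with other scripts. The sketch below, using only Python's standard `html.parser`, illustrates keeping that LaTeX (re-wrapped in `$...$` or `$$...$$`) while dropping a few typical boilerplate elements. The tag lists are assumptions for illustration; OpenWebMath's extractor handles many more cases.

```python
from html.parser import HTMLParser

# Illustrative tag lists (assumptions, not OpenWebMath's actual rules).
SKIP_TAGS = {"nav", "header", "footer", "aside", "style"}
BLOCK_TAGS = {"p", "div", "li", "br", "h1", "h2", "h3"}


class MathAwareExtractor(HTMLParser):
    """Collect visible text, rewriting MathJax <script type="math/tex"> as LaTeX."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self.skip_depth = 0           # > 0 while inside a boilerplate element
        self.math_delim = None        # "$" or "$$" while inside a MathJax script
        self.math_buf = []            # buffered script content, emitted on </script>
        self.in_other_script = False  # True inside non-math <script> tags

    def handle_starttag(self, tag, attrs):
        if tag in BLOCK_TAGS:
            self.parts.append(" ")  # keep words from separate blocks apart
        if tag in SKIP_TAGS:
            self.skip_depth += 1
        elif tag == "script":
            script_type = dict(attrs).get("type") or ""
            if script_type.startswith("math/tex"):
                self.math_delim = "$$" if "mode=display" in script_type else "$"
            else:
                self.in_other_script = True

    def handle_endtag(self, tag):
        if tag in BLOCK_TAGS:
            self.parts.append(" ")
        if tag in SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1
        elif tag == "script":
            if self.math_delim and not self.skip_depth:
                latex = "".join(self.math_buf).strip()
                # Pad with spaces so the delimiters never fuse with neighbouring words.
                self.parts.append(f" {self.math_delim}{latex}{self.math_delim} ")
            self.math_delim = None
            self.math_buf = []
            self.in_other_script = False

    def handle_data(self, data):
        if self.skip_depth or self.in_other_script:
            return
        if self.math_delim:
            self.math_buf.append(data)  # script content may arrive in several pieces
        else:
            self.parts.append(data)

    def text(self):
        # Collapse the whitespace runs left behind by removed markup.
        return " ".join("".join(self.parts).split())


html = (
    "<nav>Home | About</nav>"
    "<p>The roots of <script type='math/tex'>x^2 - 4</script> are</p>"
    "<script type='math/tex; mode=display'>x = \\pm 2</script>"
    "<footer>Copyright 2023</footer>"
)
parser = MathAwareExtractor()
parser.feed(html)
parser.close()
print(parser.text())  # The roots of $x^2 - 4$ are $$x = \pm 2$$
```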

Content Classification and Filtering:

- Apply a FastText language identification model to keep only English documents.

- Filter out high-perplexity documents using a KenLM model trained on Proof-Pile.

- Filter out non-mathematical documents using our own MathScore model.
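The three checks compose into a cascade that a document must clear in full. A minimal sketch, assuming pluggable scoring functions: the toy scorers below are hypothetical stand-ins for the FastText language-ID model, the KenLM model, and MathScore, and the thresholds are placeholders, not the values used for OpenWebMath. The perplexity helper uses the standard definition over per-token log10 probabilities, the base in which KenLM reports scores.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


def perplexity_from_log10_probs(log10_probs: Sequence[float]) -> float:
    """Perplexity = 10 ** (-(1/N) * sum(log10 p_i)) over N scored tokens."""
    if not log10_probs:
        raise ValueError("need at least one token probability")
    return 10 ** (-sum(log10_probs) / len(log10_probs))


@dataclass(frozen=True)
class FilterConfig:
    # Placeholder thresholds for illustration only, not OpenWebMath's values.
    min_english_prob: float = 0.5
    max_perplexity: float = 1_000.0
    min_math_score: float = 0.8


def keep_document(
    text: str,
    english_prob: Callable[[str], float],  # stand-in for a FastText language-ID model
    perplexity: Callable[[str], float],  # stand-in for a KenLM perplexity score
    math_score: Callable[[str], float],  # stand-in for the MathScore classifier
    cfg: FilterConfig = FilterConfig(),
) -> bool:
    """Apply the three checks in order, stopping at the first one that fails."""
    if english_prob(text) < cfg.min_english_prob:
        return False
    if perplexity(text) > cfg.max_perplexity:
        return False
    return math_score(text) >= cfg.min_math_score


# Toy scorers standing in for the real models (hypothetical).
def toy_english(text: str) -> float:
    return 0.9


def toy_perplexity(text: str) -> float:
    return perplexity_from_log10_probs([-2.0, -3.0])  # 10 ** 2.5, about 316


def toy_math(text: str) -> float:
    return 1.0 if "$" in text else 0.0


print(keep_document("Let $n$ be a prime.", toy_english, toy_perplexity, toy_math))  # True
print(keep_document("Great recipe!", toy_english, toy_perplexity, toy_math))  # False
```

Short-circuiting means a document rejected by an early check never pays for the later models.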

Deduplication:

- Deduplicate the dataset using SimHash in text-dedup.
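SimHash maps each document to a short fingerprint such that near-duplicate texts tend to differ in only a few bits, so duplicates can be detected by Hamming distance. This is a from-scratch sketch of the technique, not text-dedup's implementation or API. Word 3-gram shingles, a 64-bit BLAKE2b hash, and a 3-bit distance threshold are illustrative assumptions, and the pairwise comparison is quadratic (production systems bucket fingerprints to avoid that).

```python
import hashlib


def _shingles(text: str, n: int = 3) -> list[str]:
    """Overlapping word n-grams; short texts fall back to a single shingle."""
    words = text.lower().split()
    if len(words) <= n:
        return [" ".join(words)]
    return [" ".join(words[i : i + n]) for i in range(len(words) - n + 1)]


def simhash(text: str, bits: int = 64) -> int:
    """Compute a SimHash fingerprint from hashed shingles."""
    weights = [0] * bits
    for shingle in _shingles(text):
        digest = hashlib.blake2b(shingle.encode("utf-8"), digest_size=bits // 8).digest()
        h = int.from_bytes(digest, "big")
        for i in range(bits):
            # Each shingle votes +1 or -1 on every bit position.
            weights[i] += 1 if (h >> i) & 1 else -1
    # A fingerprint bit is set when the votes for that position are positive.
    return sum(1 << i for i in range(bits) if weights[i] > 0)


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")


def deduplicate(docs: list[str], max_distance: int = 3) -> list[str]:
    """Keep the first document from each group of near-duplicates (O(n^2) sketch)."""
    kept, fingerprints = [], []
    for doc in docs:
        fp = simhash(doc)
        if all(hamming(fp, other) > max_distance for other in fingerprints):
            kept.append(doc)
            fingerprints.append(fp)
    return kept


docs = [
    "Let p be a prime number greater than two.",
    "let p be a prime number greater than two.",  # differs only in case
    "The integral of x squared is x cubed over three.",
]
print(deduplicate(docs))  # the second line is dropped as a duplicate of the first
```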

Manual Inspection:

- Inspect the documents gathered in the previous steps and remove low-quality pages.

For a detailed discussion of the processing pipeline, please refer to the associated paper.

License

OpenWebMath is made available under an ODC-By 1.0 license; users should also abide by the Common Crawl Terms of Use: https://commoncrawl.org/terms-of-use/. We do not alter the license of any of the underlying data.

Citation Information

```bibtex
@article{openwebmath,
  title={OpenWebMath: An Open Dataset of High-Quality Mathematical Web Text},
  author={Keiran Paster and Marco Dos Santos and Zhangir Azerbayev and Jimmy Ba},
  journal={arXiv preprint arXiv:????.?????},
  eprint={????.?????},
  eprinttype={arXiv},
  url={https://arxiv.org/abs/????.?????},
  year={2023}
}
```