
# Conclusion

**Congrats on finishing this unit**! There was a lot of information.

And congrats on finishing the tutorial. You've just coded your first Deep Reinforcement Learning agent from scratch using PyTorch and shared it on the Hub 🥳.

Don't hesitate to iterate on this unit **by improving the implementation for more complex environments** (for instance, what about changing the network to a Convolutional Neural Network to handle frames as observations?).

In the next unit, **we're going to learn more about Unity ML-Agents** by training agents in Unity environments. This way, you will be ready to participate in the **AI vs AI challenges, where you'll train your agents to compete against other agents in a snowball fight and a soccer game.**

Sound fun? See you next time!

Finally, we would love **to hear what you think of the course and how we can improve it**. If you have any feedback, please 👉 [fill out this form](https://forms.gle/BzKXWzLAGZESGNaE9).

### Keep Learning, stay awesome 🤗


---
title: MASE
emoji: 🤗
colorFrom: blue
colorTo: red
sdk: gradio
sdk_version: 3.19.1
app_file: app.py
pinned: false
tags:
- evaluate
- metric
description: >-
  Mean Absolute Scaled Error (MASE) is the mean absolute error of the forecast values, divided by the mean absolute error of the in-sample one-step naive forecast on the training set.
---

# Metric Card for MASE

## Metric Description

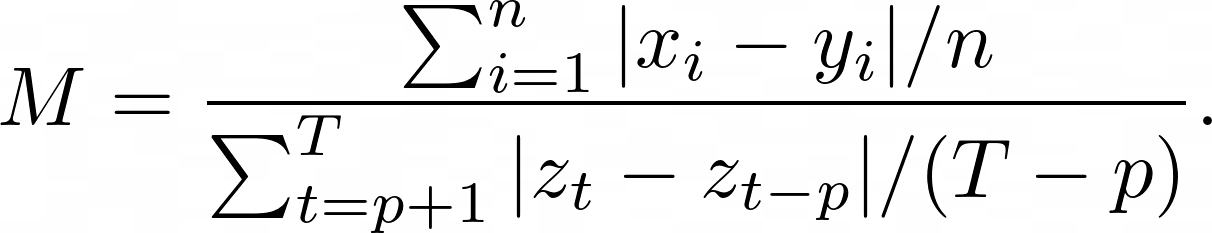

Mean Absolute Scaled Error (MASE) is the mean absolute error of the forecast values, divided by the mean absolute error of the in-sample one-step naive forecast. For prediction $x_i$ and corresponding ground truth $y_i$ as well as training data $z_t$ with seasonality $p$ the metric is given by:

This metric is:

* independent of the scale of the data;

* well-behaved when predicted or ground-truth values are near zero;

* symmetric;

* interpretable, as values greater than one indicate that in-sample one-step forecasts from the naïve method perform better than the forecast values under consideration.
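As a rough illustration of the definition above (the forecast MAE divided by the MAE of the in-sample naive forecast with lag `periodicity`), the following NumPy sketch computes MASE for a single output series. `mase` is a hypothetical helper, not the `evaluate` API, and the guard against a zero denominator is an added assumption.

```python
import numpy as np


def mase(predictions, references, training, periodicity=1):
    """Mean Absolute Scaled Error for a single 1-D series (illustrative sketch)."""
    x = np.asarray(predictions, dtype=float)  # forecasts x_i
    y = np.asarray(references, dtype=float)   # ground truth y_i
    z = np.asarray(training, dtype=float)     # in-sample training data z_t
    # Numerator: mean absolute error of the forecasts.
    mae_forecast = np.mean(np.abs(y - x))
    # Denominator: MAE of the in-sample naive forecast that predicts z_t with z_{t-p}.
    mae_naive = np.mean(np.abs(z[periodicity:] - z[:-periodicity]))
    # Assumption: guard against a constant training series (zero naive error).
    mae_naive = max(mae_naive, np.finfo(np.float64).eps)
    return mae_forecast / mae_naive


score = mase([2.5, 0.0, 2, 8], [3, -0.5, 2, 7], [5, 0.5, 4, 6, 3, 5, 2])
print(round(score, 4))  # 0.1667 (forecast MAE 0.5 / naive MAE 3.0)
```

A score below 1 means the forecasts beat the in-sample naive forecast on average; here the forecast error is about one sixth of the naive error.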

## How to Use

At minimum, this metric requires predictions, references and training data as inputs.

```python
>>> import evaluate
>>> mase_metric = evaluate.load("mase")
>>> predictions = [2.5, 0.0, 2, 8]
>>> references = [3, -0.5, 2, 7]
>>> training = [5, 0.5, 4, 6, 3, 5, 2]
>>> results = mase_metric.compute(predictions=predictions, references=references, training=training)
```

### Inputs

Mandatory inputs:

- `predictions`: numeric array-like of shape (`n_samples,`) or (`n_samples`, `n_outputs`), representing the estimated target values.

- `references`: numeric array-like of shape (`n_samples,`) or (`n_samples`, `n_outputs`), representing the ground truth (correct) target values.

- `training`: numeric array-like of shape (`n_train_samples,`) or (`n_train_samples`, `n_outputs`), representing the in-sample training data.

Optional arguments:

- `periodicity`: the seasonal periodicity of training data. The default is 1.

- `sample_weight`: numeric array-like of shape (`n_samples,`) representing sample weights. The default is `None`.

- `multioutput`: `raw_values`, `uniform_average` or numeric array-like of shape (`n_outputs,`), which defines the aggregation of multiple output values. The default value is `uniform_average`.
  - `raw_values` returns a full set of errors in case of multioutput input.
  - `uniform_average` means that the errors of all outputs are averaged with uniform weight.
  - the array-like value defines weights used to average errors.
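The following sketch shows one way the `multioutput` options could be applied to inputs of shape (`n_samples`, `n_outputs`). It assumes, as the card's examples suggest, that the forecast MAE and the naive MAE are each aggregated across outputs before dividing, rather than averaging the per-output MASE values; check the `evaluate` source before relying on this. `mase_multioutput` is a hypothetical helper, and `sample_weight` is omitted for brevity.

```python
import numpy as np


def mase_multioutput(predictions, references, training, periodicity=1,
                     multioutput="uniform_average"):
    """Sketch of MASE for inputs of shape (n_samples,) or (n_samples, n_outputs)."""
    x = np.asarray(predictions, dtype=float)
    y = np.asarray(references, dtype=float)
    z = np.asarray(training, dtype=float)
    if x.ndim == 1:
        # Treat 1-D inputs as a single output column.
        x, y, z = x[:, None], y[:, None], z[:, None]
    # Per-output MAE of the forecasts and of the in-sample naive forecast.
    mae_forecast = np.mean(np.abs(y - x), axis=0)
    mae_naive = np.mean(np.abs(z[periodicity:] - z[:-periodicity]), axis=0)
    if isinstance(multioutput, str):
        if multioutput == "raw_values":
            # One score per output column.
            return mae_forecast / mae_naive
        if multioutput != "uniform_average":
            raise ValueError(f"unknown multioutput option: {multioutput!r}")
        weights = None  # equal weight for every output
    else:
        weights = np.asarray(multioutput, dtype=float)  # user-supplied weights
    # Assumption: aggregate both MAEs across outputs, then take the ratio.
    return np.average(mae_forecast, weights=weights) / np.average(mae_naive, weights=weights)


predictions = [[0.5, 1], [-1, 1], [7, -6]]
references = [[0.1, 2], [-1, 2], [8, -5]]
training = [[0.5, 1], [-1, 1], [7, -6]]
print(mase_multioutput(predictions, references, training, multioutput="raw_values"))  # ≈ [0.0982, 0.2857]
print(mase_multioutput(predictions, references, training))  # ≈ 0.1778
```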

### Output Values

This metric outputs a dictionary containing the mean absolute scaled error score under the key `mase`, which is of type:

- `float`: if multioutput is `uniform_average` or an ndarray of weights, then the weighted average of all output errors is returned.

- numeric array-like of shape (`n_outputs,`): if multioutput is `raw_values`, then the score is returned for each output separately.

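Each MASE `float` value is non-negative, with the best value being 0.0. There is no upper bound: values greater than 1.0 indicate that the forecast performs worse, on average, than the in-sample one-step naive forecast.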

Output Example(s):

```python

{'mase': 0.5}

```

If `multioutput="raw_values"`:

```python

{'mase': array([0.5, 1. ])}

```

#### Values from Popular Papers

### Examples

Example with the `uniform_average` config:

```python
>>> import evaluate
>>> mase_metric = evaluate.load("mase")
>>> predictions = [2.5, 0.0, 2, 8]
>>> references = [3, -0.5, 2, 7]
>>> training = [5, 0.5, 4, 6, 3, 5, 2]
>>> results = mase_metric.compute(predictions=predictions, references=references, training=training)
>>> print(results)
{'mase': 0.1666...}
```

Example with multi-dimensional lists, and the `raw_values` config:

```python
>>> import evaluate
>>> mase_metric = evaluate.load("mase", "multilist")
>>> predictions = [[0.5, 1], [-1, 1], [7, -6]]
>>> references = [[0.1, 2], [-1, 2], [8, -5]]
>>> training = [[0.5, 1], [-1, 1], [7, -6]]
>>> results = mase_metric.compute(predictions=predictions, references=references, training=training)
>>> print(results)
{'mase': 0.1777...}
>>> results = mase_metric.compute(predictions=predictions, references=references, training=training, multioutput='raw_values')
>>> print(results)
{'mase': array([0.0982..., 0.2857...])}
```

## Limitations and Bias

## Citation(s)

```bibtex

@article{HYNDMAN2006679,

title = {Another look at measures of forecast accuracy},

journal = {International Journal of Forecasting},

volume = {22},

number = {4},

pages = {679--688},

year = {2006},

issn = {0169-2070},

doi = {https://doi.org/10.1016/j.ijforecast.2006.03.001},

url = {https://www.sciencedirect.com/science/article/pii/S0169207006000239},

author = {Rob J. Hyndman and Anne B. Koehler},

}

```

## Further References

- [Mean absolute scaled error - Wikipedia](https://en.wikipedia.org/wiki/Mean_absolute_scaled_error)


# Metric Card for XTREME-S

## Metric Description

The XTREME-S metric aims to evaluate model performance on the Cross-lingual TRansfer Evaluation of Multilingual Encoders for Speech (XTREME-S) benchmark.

This benchmark was designed to evaluate speech representations across languages, tasks, domains and data regimes. It covers 102 languages from 10+ language families, 3 different domains and 4 task families: speech recognition, translation, classification and retrieval.

## How to Use

There are two steps: (1) loading the XTREME-S metric relevant to the subset of the benchmark being used for evaluation; and (2) calculating the metric.

1. **Loading the relevant XTREME-S metric**: the subsets of XTREME-S are the following: `mls`, `voxpopuli`, `covost2`, `fleurs-asr`, `fleurs-lang_id`, `minds14` and `babel`. More information about the different subsets can be found on the [XTREME-S benchmark page](https://huggingface.co/datasets/google/xtreme_s).

```python
>>> from datasets import load_metric
>>> xtreme_s_metric = load_metric('xtreme_s', 'mls')
```

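2. **Calculating the metric**: the metric takes two inputs:

- `predictions`: a list of predictions to score, one per example: a `str` (transcription or translation) for the speech recognition and translation subsets, or a class label for the `fleurs-lang_id` and `minds14` subsets.

- `references`: a list of references, one per prediction, in the same format as `predictions`.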

```python

>>> references = ["it is sunny here", "paper and pen are essentials"]

>>> predictions = ["it's sunny", "paper pen are essential"]

>>> results = xtreme_s_metric.compute(predictions=predictions, references=references)

```

It also has two optional arguments:

- `bleu_kwargs`: a `dict` of keywords to be passed when computing the `bleu` metric for the `covost2` subset. Keywords can be one of `smooth_method`, `smooth_value`, `force`, `lowercase`, `tokenize`, `use_effective_order`.

- `wer_kwargs`: a `dict` of keywords to be passed when computing `wer` and `cer`, which are computed for the `mls`, `fleurs-asr`, `voxpopuli`, and `babel` subsets. The only supported keyword is `concatenate_texts`.

## Output values

The output of the metric depends on the XTREME-S subset chosen, consisting of a dictionary that contains one or several of the following metrics:

- `accuracy`: the proportion of correct predictions among the total number of cases processed, with a range between 0 and 1 (see [accuracy](https://huggingface.co/metrics/accuracy) for more information). This is returned for the `fleurs-lang_id` and `minds14` subsets.

- `f1`: the harmonic mean of the precision and recall (see [F1 score](https://huggingface.co/metrics/f1) for more information). Its range is 0-1 -- its lowest possible value is 0, if either the precision or the recall is 0, and its highest possible value is 1.0, which means perfect precision and recall. It is returned for the `minds14` subset.

- `wer`: Word error rate (WER) is a common metric of the performance of an automatic speech recognition system. The lower the value, the better the performance of the ASR system, with a WER of 0 being a perfect score (see [WER score](https://huggingface.co/metrics/wer) for more information). It is returned for the `mls`, `fleurs-asr`, `voxpopuli` and `babel` subsets of the benchmark.

- `cer`: Character error rate (CER) is similar to WER, but operates on characters instead of words. The lower the CER value, the better the performance of the ASR system, with a CER of 0 being a perfect score (see [CER score](https://huggingface.co/metrics/cer) for more information). It is returned for the `mls`, `fleurs-asr`, `voxpopuli` and `babel` subsets of the benchmark.

- `bleu`: the BLEU score, calculated according to the SacreBLEU metric approach. It can take any value between 0.0 and 100.0, inclusive, with higher values being better (see [SacreBLEU](https://huggingface.co/metrics/sacrebleu) for more details). This is returned for the `covost2` subset.

### Values from popular papers

The [original XTREME-S paper](https://arxiv.org/pdf/2203.10752.pdf) reported average WERs ranging from 9.2 to 14.6, a BLEU score of 20.6, an accuracy of 73.3 and F1 score of 86.9, depending on the subsets of the dataset tested on.

## Examples

For the `mls` subset (which outputs `wer` and `cer`):

```python

>>> from datasets import load_metric

>>> xtreme_s_metric = load_metric('xtreme_s', 'mls')

>>> references = ["it is sunny here", "paper and pen are essentials"]

>>> predictions = ["it's sunny", "paper pen are essential"]

>>> results = xtreme_s_metric.compute(predictions=predictions, references=references)

>>> print({k: round(v, 2) for k, v in results.items()})

{'wer': 0.56, 'cer': 0.27}

```

For the `covost2` subset (which outputs `bleu`):

```python

>>> from datasets import load_metric

>>> xtreme_s_metric = load_metric('xtreme_s', 'covost2')

>>> references = ["bonjour paris", "il est necessaire de faire du sport de temps en temp"]

>>> predictions = ["bonjour paris", "il est important de faire du sport souvent"]

>>> results = xtreme_s_metric.compute(predictions=predictions, references=references)

>>> print({k: round(v, 2) for k, v in results.items()})

{'bleu': 31.65}

```

For the `fleurs-lang_id` subset (which outputs `accuracy`):

```python

>>> from datasets import load_metric

>>> xtreme_s_metric = load_metric('xtreme_s', 'fleurs-lang_id')

>>> references = [0, 1, 0, 0, 1]

>>> predictions = [0, 1, 1, 0, 0]

>>> results = xtreme_s_metric.compute(predictions=predictions, references=references)

>>> print({k: round(v, 2) for k, v in results.items()})

{'accuracy': 0.6}

```

For the `minds14` subset (which outputs `f1` and `accuracy`):

```python

>>> from datasets import load_metric

>>> xtreme_s_metric = load_metric('xtreme_s', 'minds14')

>>> references = [0, 1, 0, 0, 1]

>>> predictions = [0, 1, 1, 0, 0]

>>> results = xtreme_s_metric.compute(predictions=predictions, references=references)

>>> print({k: round(v, 2) for k, v in results.items()})

{'f1': 0.58, 'accuracy': 0.6}

```
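To see where the `minds14` numbers come from, here is a plain-Python sketch of accuracy and F1. It assumes the F1 is macro-averaged (the unweighted mean of per-class F1 scores), which the card does not state explicitly but which reproduces the 0.58 above; `accuracy` and `macro_f1` are hypothetical helpers, not the metric's API.

```python
def accuracy(predictions, references):
    """Fraction of predictions that exactly match their reference."""
    correct = sum(p == r for p, r in zip(predictions, references))
    return correct / len(references)


def macro_f1(predictions, references):
    """Unweighted mean of per-class F1 scores (assumed averaging scheme)."""
    pairs = list(zip(predictions, references))
    labels = sorted(set(predictions) | set(references))
    scores = []
    for label in labels:
        tp = sum(p == label and r == label for p, r in pairs)
        fp = sum(p == label and r != label for p, r in pairs)
        fn = sum(p != label and r == label for p, r in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores.append(f1)
    return sum(scores) / len(scores)


references = [0, 1, 0, 0, 1]
predictions = [0, 1, 1, 0, 0]
print(round(macro_f1(predictions, references), 2), round(accuracy(predictions, references), 2))
# 0.58 0.6
```

Class 0 gets an F1 of 2/3 and class 1 an F1 of 1/2, so their unweighted mean is about 0.583.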

## Limitations and bias

This metric works only with datasets that have the same format as the [XTREME-S dataset](https://huggingface.co/datasets/google/xtreme_s).

While the XTREME-S dataset is meant to represent a variety of languages and tasks, it has inherent biases: it is missing many languages that are important and under-represented in NLP datasets.

It also focuses on read speech, because common evaluation benchmarks like CoVoST-2 or LibriSpeech evaluate on this type of speech. This can result in a mismatch between performance obtained in a read-speech setting and in a noisier setting (in production or live deployment, for instance).

## Citation

```bibtex

@article{conneau2022xtreme,

title={XTREME-S: Evaluating Cross-lingual Speech Representations},

author={Conneau, Alexis and Bapna, Ankur and Zhang, Yu and Ma, Min and von Platen, Patrick and Lozhkov, Anton and Cherry, Colin and Jia, Ye and Rivera, Clara and Kale, Mihir and others},

journal={arXiv preprint arXiv:2203.10752},

year={2022}

}

```

## Further References

- [XTREME-S dataset](https://huggingface.co/datasets/google/xtreme_s)

- [XTREME-S GitHub repository](https://github.com/google-research/xtreme)


# ResNet

**Residual Networks**, or **ResNets**, learn residual functions with reference to the layer inputs, instead of learning unreferenced functions. Instead of hoping each few stacked layers directly fit a desired underlying mapping, residual nets let these layers fit a residual mapping. They stack [residual blocks](https://paperswithcode.com/method/residual-block) on top of each other to form a network: e.g. a ResNet-50 has fifty layers using these blocks.
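A minimal NumPy sketch of the residual idea, under simplifying assumptions: dense layers stand in for the convolutions and batch normalization of a real ResNet block, and the input and output widths match so the identity shortcut needs no projection. The stacked layers compute only the residual `F(x)`, and the block outputs `relu(F(x) + x)`. `residual_block` is a hypothetical helper, not part of timm.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, w1, w2):
    """Simplified residual block: relu(F(x) + x) with F(x) = relu(x @ w1) @ w2."""
    residual = relu(x @ w1) @ w2  # F(x): the mapping the stacked layers learn
    return relu(residual + x)     # identity shortcut adds the input back


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8))           # batch of 4 feature vectors of width 8
w1 = rng.standard_normal((8, 8)) * 0.1
w2 = rng.standard_normal((8, 8)) * 0.1
y = residual_block(x, w1, w2)
print(y.shape)  # (4, 8)

# With F's weights at zero, the block reduces to relu(x): the shortcut passes x through.
zeros = np.zeros((8, 8))
print(np.allclose(residual_block(x, zeros, zeros), relu(x)))  # True
```

Because the shortcut already carries `x`, the layers only need to learn a correction to it, which is what "fitting a residual mapping" means here.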

## How do I use this model on an image?

To load a pretrained model:

```py

>>> import timm

>>> model = timm.create_model('resnet18', pretrained=True)

>>> model.eval()

```

To load and preprocess the image:

```py

>>> import urllib.request

>>> from PIL import Image

>>> from timm.data import resolve_data_config

>>> from timm.data.transforms_factory import create_transform

>>> config = resolve_data_config({}, model=model)

>>> transform = create_transform(**config)

>>> url, filename = ("https://github.com/pytorch/hub/raw/master/images/dog.jpg", "dog.jpg")

>>> urllib.request.urlretrieve(url, filename)

>>> img = Image.open(filename).convert('RGB')

>>> tensor = transform(img).unsqueeze(0) # transform and add batch dimension

```

To get the model predictions:

```py

>>> import torch

>>> with torch.no_grad():

...     out = model(tensor)

>>> probabilities = torch.nn.functional.softmax(out[0], dim=0)

>>> print(probabilities.shape)

>>> # prints: torch.Size([1000])

```

To get the top-5 predictions class names:

```py

>>> # Get imagenet class mappings

>>> url, filename = ("https://raw.githubusercontent.com/pytorch/hub/master/imagenet_classes.txt", "imagenet_classes.txt")

>>> urllib.request.urlretrieve(url, filename)

>>> with open("imagenet_classes.txt", "r") as f:

...     categories = [s.strip() for s in f.readlines()]

>>> # Print top categories per image

>>> top5_prob, top5_catid = torch.topk(probabilities, 5)

>>> for i in range(top5_prob.size(0)):

...     print(categories[top5_catid[i]], top5_prob[i].item())
>>> # prints class names and probabilities, one per line, like:
>>> # Samoyed 0.6425196528434753
>>> # Pomeranian 0.04062102362513542
>>> # keeshond 0.03186424449086189
>>> # white wolf 0.01739676296710968
>>> # Eskimo dog 0.011717947199940681

```

Replace the model name with the variant you want to use, e.g. `resnet18`. You can find the IDs in the model summaries at the top of this page.

To extract image features with this model, follow the [timm feature extraction examples](../feature_extraction); just change the name of the model you want to use.

## How do I finetune this model?

You can finetune any of the pre-trained models just by changing the classifier (the last layer).

```py

>>> model = timm.create_model('resnet18', pretrained=True, num_classes=NUM_FINETUNE_CLASSES)

```

To finetune on your own dataset, you have to write a training loop or adapt [timm's training script](https://github.com/rwightman/pytorch-image-models/blob/master/train.py) to use your dataset.

## How do I train this model?

You can follow the [timm recipe scripts](../scripts) for training a new model afresh.

## Citation

```BibTeX

@article{DBLP:journals/corr/HeZRS15,

author = {Kaiming He and

Xiangyu Zhang and

Shaoqing Ren and

Jian Sun},

title = {Deep Residual Learning for Image Recognition},

journal = {CoRR},

volume = {abs/1512.03385},

year = {2015},

url = {http://arxiv.org/abs/1512.03385},

archivePrefix = {arXiv},

eprint = {1512.03385},

timestamp = {Wed, 17 Apr 2019 17:23:45 +0200},

biburl = {https://dblp.org/rec/journals/corr/HeZRS15.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

<!--

Type: model-index

Collections:

- Name: ResNet

Paper:

Title: Deep Residual Learning for Image Recognition

URL: https://paperswithcode.com/paper/deep-residual-learning-for-image-recognition

Models:

- Name: resnet18

In Collection: ResNet

Metadata:

FLOPs: 2337073152

Parameters: 11690000

File Size: 46827520

Architecture:

- 1x1 Convolution

- Batch Normalization

- Bottleneck Residual Block

- Convolution

- Global Average Pooling

- Max Pooling

- ReLU

- Residual Block

- Residual Connection

- Softmax

Tasks:

- Image Classification

Training Data:

- ImageNet

ID: resnet18

Crop Pct: '0.875'

Image Size: '224'

Interpolation: bilinear

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/resnet.py#L641

Weights: https://download.pytorch.org/models/resnet18-5c106cde.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 69.74%

Top 5 Accuracy: 89.09%

- Name: resnet26

In Collection: ResNet

Metadata:

FLOPs: 3026804736

Parameters: 16000000

File Size: 64129972

Architecture:

- 1x1 Convolution

- Batch Normalization

- Bottleneck Residual Block

- Convolution

- Global Average Pooling

- Max Pooling

- ReLU

- Residual Block

- Residual Connection

- Softmax

Tasks:

- Image Classification

Training Data:

- ImageNet

ID: resnet26

Crop Pct: '0.875'

Image Size: '224'

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/resnet.py#L675

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/resnet26-9aa10e23.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 75.29%

Top 5 Accuracy: 92.57%

- Name: resnet34

In Collection: ResNet

Metadata:

FLOPs: 4718469120

Parameters: 21800000

File Size: 87290831

Architecture:

- 1x1 Convolution

- Batch Normalization

- Bottleneck Residual Block

- Convolution

- Global Average Pooling

- Max Pooling

- ReLU

- Residual Block

- Residual Connection

- Softmax

Tasks:

- Image Classification

Training Data:

- ImageNet

ID: resnet34

Crop Pct: '0.875'

Image Size: '224'

Interpolation: bilinear

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/resnet.py#L658

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/resnet34-43635321.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 75.11%

Top 5 Accuracy: 92.28%

- Name: resnet50

In Collection: ResNet

Metadata:

FLOPs: 5282531328

Parameters: 25560000

File Size: 102488165

Architecture:

- 1x1 Convolution

- Batch Normalization

- Bottleneck Residual Block

- Convolution

- Global Average Pooling

- Max Pooling

- ReLU

- Residual Block

- Residual Connection

- Softmax

Tasks:

- Image Classification

Training Data:

- ImageNet

ID: resnet50

Crop Pct: '0.875'

Image Size: '224'

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/resnet.py#L691

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/resnet50_ram-a26f946b.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 79.04%

Top 5 Accuracy: 94.39%

- Name: resnetblur50

In Collection: ResNet

Metadata:

FLOPs: 6621606912

Parameters: 25560000

File Size: 102488165

Architecture:

- 1x1 Convolution

- Batch Normalization

- Blur Pooling

- Bottleneck Residual Block

- Convolution

- Global Average Pooling

- Max Pooling

- ReLU

- Residual Block

- Residual Connection

- Softmax

Tasks:

- Image Classification

Training Data:

- ImageNet

ID: resnetblur50

Crop Pct: '0.875'

Image Size: '224'

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/resnet.py#L1160

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/resnetblur50-84f4748f.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 79.29%

Top 5 Accuracy: 94.64%

- Name: tv_resnet101

In Collection: ResNet

Metadata:

FLOPs: 10068547584

Parameters: 44550000

File Size: 178728960

Architecture:

- 1x1 Convolution

- Batch Normalization

- Bottleneck Residual Block

- Convolution

- Global Average Pooling

- Max Pooling

- ReLU

- Residual Block

- Residual Connection

- Softmax

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

ID: tv_resnet101

LR: 0.1

Epochs: 90

Crop Pct: '0.875'

LR Gamma: 0.1

Momentum: 0.9

Batch Size: 32

Image Size: '224'

LR Step Size: 30

Weight Decay: 0.0001

Interpolation: bilinear

Code: https://github.com/rwightman/pytorch-image-models/blob/9a25fdf3ad0414b4d66da443fe60ae0aa14edc84/timm/models/resnet.py#L761

Weights: https://download.pytorch.org/models/resnet101-5d3b4d8f.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 77.37%

Top 5 Accuracy: 93.56%

- Name: tv_resnet152

In Collection: ResNet

Metadata:

FLOPs: 14857660416

Parameters: 60190000

File Size: 241530880

Architecture:

- 1x1 Convolution

- Batch Normalization

- Bottleneck Residual Block

- Convolution

- Global Average Pooling

- Max Pooling

- ReLU

- Residual Block

- Residual Connection

- Softmax

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

ID: tv_resnet152

LR: 0.1

Epochs: 90

Crop Pct: '0.875'

LR Gamma: 0.1

Momentum: 0.9

Batch Size: 32

Image Size: '224'

LR Step Size: 30

Weight Decay: 0.0001

Interpolation: bilinear

Code: https://github.com/rwightman/pytorch-image-models/blob/9a25fdf3ad0414b4d66da443fe60ae0aa14edc84/timm/models/resnet.py#L769

Weights: https://download.pytorch.org/models/resnet152-b121ed2d.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 78.32%

Top 5 Accuracy: 94.05%

- Name: tv_resnet34

In Collection: ResNet

Metadata:

FLOPs: 4718469120

Parameters: 21800000

File Size: 87306240

Architecture:

- 1x1 Convolution

- Batch Normalization

- Bottleneck Residual Block

- Convolution

- Global Average Pooling

- Max Pooling

- ReLU

- Residual Block

- Residual Connection

- Softmax

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

ID: tv_resnet34

LR: 0.1

Epochs: 90

Crop Pct: '0.875'

LR Gamma: 0.1

Momentum: 0.9

Batch Size: 32

Image Size: '224'

LR Step Size: 30

Weight Decay: 0.0001

Interpolation: bilinear

Code: https://github.com/rwightman/pytorch-image-models/blob/9a25fdf3ad0414b4d66da443fe60ae0aa14edc84/timm/models/resnet.py#L745

Weights: https://download.pytorch.org/models/resnet34-333f7ec4.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 73.3%

Top 5 Accuracy: 91.42%

- Name: tv_resnet50

In Collection: ResNet

Metadata:

FLOPs: 5282531328

Parameters: 25560000

File Size: 102502400

Architecture:

- 1x1 Convolution

- Batch Normalization

- Bottleneck Residual Block

- Convolution

- Global Average Pooling

- Max Pooling

- ReLU

- Residual Block

- Residual Connection

- Softmax

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

ID: tv_resnet50

LR: 0.1

Epochs: 90

Crop Pct: '0.875'

LR Gamma: 0.1

Momentum: 0.9

Batch Size: 32

Image Size: '224'

LR Step Size: 30

Weight Decay: 0.0001

Interpolation: bilinear

Code: https://github.com/rwightman/pytorch-image-models/blob/9a25fdf3ad0414b4d66da443fe60ae0aa14edc84/timm/models/resnet.py#L753

Weights: https://download.pytorch.org/models/resnet50-19c8e357.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 76.16%

Top 5 Accuracy: 92.88%

-->


# Visualize the Clipped Surrogate Objective Function

Don't worry. **It's normal if this seems complex to handle right now**. But we're going to see what this Clipped Surrogate Objective Function looks like, and this will help you better visualize what's going on.

<figure class="image table text-center m-0 w-full">

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit9/recap.jpg" alt="PPO"/>

<figcaption><a href="https://fse.studenttheses.ub.rug.nl/25709/1/mAI_2021_BickD.pdf">Table from "Towards Delivering a Coherent Self-Contained

Explanation of Proximal Policy Optimization" by Daniel Bick</a></figcaption>

</figure>

We have six different situations. Remember first that we take the minimum between the clipped and unclipped objectives.

## Cases 1 and 2: the ratio is within the range

In situations 1 and 2, **the clipping does not apply since the ratio is within the range** \\( [1 - \epsilon, 1 + \epsilon] \\).

In situation 1, we have a positive advantage: the **action is better than the average** of all the actions in that state. Therefore, we should encourage our current policy to increase the probability of taking that action in that state.

Since the ratio is within the interval, **we can increase our policy's probability of taking that action at that state.**

In situation 2, we have a negative advantage: the action is worse than the average of all actions at that state. Therefore, we should discourage our current policy from taking that action in that state.

Since the ratio is within the interval, **we can decrease the probability that our policy takes that action at that state.**

## Cases 3 and 4: the ratio is below the range

<figure class="image table text-center m-0 w-full">

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit9/recap.jpg" alt="PPO"/>

<figcaption><a href="https://fse.studenttheses.ub.rug.nl/25709/1/mAI_2021_BickD.pdf">Table from "Towards Delivering a Coherent Self-Contained

Explanation of Proximal Policy Optimization" by Daniel Bick</a></figcaption>

</figure>

If the probability ratio is lower than \\( 1 - \epsilon \\), the probability of taking that action at that state is much lower than with the old policy.

If, like in situation 3, the advantage estimate is positive (A>0), then **you want to increase the probability of taking that action at that state.**

But if, like in situation 4, the advantage estimate is negative, **we don't want to decrease further** the probability of taking that action at that state. Therefore, the gradient is 0 (since we're on a flat part of the objective), so we don't update our weights.

## Cases 5 and 6: the ratio is above the range

<figure class="image table text-center m-0 w-full">

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit9/recap.jpg" alt="PPO"/>

<figcaption><a href="https://fse.studenttheses.ub.rug.nl/25709/1/mAI_2021_BickD.pdf">Table from "Towards Delivering a Coherent Self-Contained

Explanation of Proximal Policy Optimization" by Daniel Bick</a></figcaption>

</figure>

If the probability ratio is higher than \\( 1 + \epsilon \\), the probability of taking that action at that state in the current policy is **much higher than in the former policy.**

If, like in situation 5, the advantage is positive, **we don't want to get too greedy**. We already have a higher probability of taking that action at that state than the former policy. Therefore, the gradient is 0 (since we're on a flat part of the objective), so we don't update our weights.

If, like in situation 6, the advantage is negative, we want to decrease the probability of taking that action at that state.

So if we recap, **we only update the policy with the unclipped objective part**. When the minimum is the clipped objective part, we don't update our policy weights since the gradient will equal 0.

So we update our policy only if:

- Our ratio is in the range \\( [1 - \epsilon, 1 + \epsilon] \\)
- Our ratio is outside the range, but **the advantage leads to getting closer to the range**:
  - the ratio is below the range and the advantage is > 0
  - the ratio is above the range and the advantage is < 0

**You might wonder why, when the minimum is the clipped ratio, the gradient is 0.** When the ratio is clipped, the derivative is not the derivative of \\( r_t(\theta) * A_t \\) but the derivative of either \\( (1 - \epsilon) * A_t \\) or \\( (1 + \epsilon) * A_t \\), and both are 0 because neither term depends on \\( \theta \\).

To summarize, thanks to this clipped surrogate objective, **we restrict the range that the current policy can vary from the old one.** The clip removes the incentive for the probability ratio to move outside the interval by forcing the gradient to 0 whenever the ratio is already outside it and the advantage would push it further away: a ratio \\( > 1 + \epsilon \\) with a positive advantage, or a ratio \\( < 1 - \epsilon \\) with a negative advantage.
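The six situations can be checked numerically. The sketch below evaluates the clipped objective \\( \min(r_t(\theta) A_t, \mathrm{clip}(r_t(\theta), 1 - \epsilon, 1 + \epsilon) A_t) \\) for a single sample and estimates its derivative with respect to the ratio by central finite differences (the gradient with respect to \\( \theta \\) is this value times \\( \partial r_t / \partial \theta \\)). The ratio and advantage values are made up to land in each case, and \\( \epsilon = 0.2 \\) is an assumed example value.

```python
def clipped_objective(ratio, advantage, epsilon=0.2):
    """PPO clipped surrogate for one sample: min(r * A, clip(r, 1 - eps, 1 + eps) * A)."""
    clipped_ratio = min(max(ratio, 1 - epsilon), 1 + epsilon)
    return min(ratio * advantage, clipped_ratio * advantage)


def gradient_wrt_ratio(ratio, advantage, epsilon=0.2, h=1e-6):
    """Central finite-difference estimate of d(objective) / d(ratio)."""
    return (clipped_objective(ratio + h, advantage, epsilon)
            - clipped_objective(ratio - h, advantage, epsilon)) / (2 * h)


# (ratio, advantage) pairs chosen to fall into situations 1 to 6.
cases = {
    1: (1.0, +1.0), 2: (1.0, -1.0),  # ratio inside [0.8, 1.2]
    3: (0.5, +1.0), 4: (0.5, -1.0),  # ratio below the range
    5: (1.5, +1.0), 6: (1.5, -1.0),  # ratio above the range
}
for case, (ratio, advantage) in cases.items():
    print(case, round(gradient_wrt_ratio(ratio, advantage), 3))
# Gradients for cases 1..6: 1.0, -1.0, 1.0, 0.0, 0.0, -1.0
```

Only situations 4 and 5 produce a zero gradient: those are the cases where the clipped term is the minimum.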

The final Clipped Surrogate Objective Loss for PPO Actor-Critic style looks like this. It's a combination of the Clipped Surrogate Objective function, the Value Loss Function, and an Entropy bonus:

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit9/ppo-objective.jpg" alt="PPO objective"/>
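As a hedged sketch of how the three terms could be combined over a batch, the NumPy function below returns the negated objective, since optimizers minimize. It assumes a squared-error value loss, an entropy value already computed per sample, and example coefficients `c1=0.5` and `c2=0.01`; `ppo_loss` and these values are illustrative assumptions, not taken from the course code.

```python
import numpy as np


def ppo_loss(ratios, advantages, values, returns, entropies,
             epsilon=0.2, c1=0.5, c2=0.01):
    """Negated PPO objective: -(clipped surrogate - c1 * value loss + c2 * entropy)."""
    ratios = np.asarray(ratios, dtype=float)
    advantages = np.asarray(advantages, dtype=float)
    # Clipped surrogate term, averaged over the batch.
    surrogate = np.minimum(ratios * advantages,
                           np.clip(ratios, 1 - epsilon, 1 + epsilon) * advantages).mean()
    # Value loss: mean squared error between the critic's values and the returns.
    value_loss = np.mean((np.asarray(values, dtype=float) - np.asarray(returns, dtype=float)) ** 2)
    # Entropy bonus: rewards policies that keep exploring.
    entropy_bonus = np.mean(np.asarray(entropies, dtype=float))
    objective = surrogate - c1 * value_loss + c2 * entropy_bonus
    return -objective  # minimize the negative to maximize the objective


loss = ppo_loss(ratios=[1.0, 1.5], advantages=[1.0, 1.0],
                values=[0.5, 1.0], returns=[1.0, 1.0], entropies=[0.7, 0.7])
print(round(loss, 4))  # -1.0445
```

In this toy batch, the second sample's ratio (1.5) is clipped to 1.2 because its advantage is positive, exactly as in situation 5.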

That was quite complex. Take time to understand these situations by looking at the table and the graph. **You must understand why this makes sense.** If you want to go deeper, the best resource is the article ["Towards Delivering a Coherent Self-Contained Explanation of Proximal Policy Optimization" by Daniel Bick, especially part 3.4](https://fse.studenttheses.ub.rug.nl/25709/1/mAI_2021_BickD.pdf).


# Metric Card for WER

## Metric description

Word error rate (WER) is a common metric of the performance of an automatic speech recognition (ASR) system.

The general difficulty of measuring the performance of ASR systems lies in the fact that the recognized word sequence can have a different length from the reference word sequence (supposedly the correct one). The WER is derived from the [Levenshtein distance](https://en.wikipedia.org/wiki/Levenshtein_distance), working at the word level.

This problem is solved by first aligning the recognized word sequence with the reference (spoken) word sequence using dynamic string alignment. The issue has also been examined through the power law, which describes the correlation between [perplexity](https://huggingface.co/metrics/perplexity) and word error rate (see [this article](https://www.cs.cmu.edu/~roni/papers/eval-metrics-bntuw-9802.pdf) for further information).

Word error rate can then be computed as:

`WER = (S + D + I) / N = (S + D + I) / (S + D + C)`

where:

- `S` is the number of substitutions,
- `D` is the number of deletions,
- `I` is the number of insertions,
- `C` is the number of correct words,
- `N` is the number of words in the reference (`N=S+D+C`).
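The formula can be sketched with a word-level Levenshtein distance, whose minimum edit count equals `S + D + I` for the best alignment. This plain-Python version (hypothetical helpers `word_edit_distance` and `corpus_wer`) splits on whitespace and sums errors and reference words over all pairs before dividing; it skips any text normalization a real implementation may apply.

```python
def word_edit_distance(reference: str, hypothesis: str) -> int:
    """Minimum number of word substitutions, deletions and insertions (S + D + I)."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] is the distance between the first i-1 reference words and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        cur = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            cur[j] = min(prev[j] + 1,         # deletion of a reference word
                         cur[j - 1] + 1,      # insertion of a hypothesis word
                         prev[j - 1] + cost)  # substitution (or correct word)
        prev = cur
    return prev[-1]


def corpus_wer(predictions, references):
    """Total word errors divided by total reference words N."""
    errors = sum(word_edit_distance(r, p) for p, r in zip(predictions, references))
    total_words = sum(len(r.split()) for r in references)
    return errors / total_words


print(corpus_wer(["this is the prediction", "there is an other sample"],
                 ["this is the reference", "there is another one"]))  # 0.5
```

Here the first pair has one substitution and the second has two substitutions plus one insertion, giving 4 errors over 8 reference words.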

## How to use

The metric takes two inputs: references (a list of references for each speech input) and predictions (a list of transcriptions to score).

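```python
from datasets import load_metric

wer = load_metric("wer")

predictions = ["this is the prediction", "there is an other sample"]
references = ["this is the reference", "there is another one"]
wer_score = wer.compute(predictions=predictions, references=references)
```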

## Output values

This metric outputs a float representing the word error rate.

```

print(wer_score)

0.5

```

This value indicates the average number of errors per reference word.

The **lower** the value, the **better** the performance of the ASR system, with a WER of 0 being a perfect score.

### Values from popular papers

This metric is highly dependent on the content and quality of the dataset, and therefore users can expect very different values for the same model but on different datasets.

For example, datasets such as [LibriSpeech](https://huggingface.co/datasets/librispeech_asr) report a WER in the 1.8-3.3 range, whereas ASR models evaluated on [Timit](https://huggingface.co/datasets/timit_asr) report a WER in the 8.3-20.4 range.

See the leaderboards for [LibriSpeech](https://paperswithcode.com/sota/speech-recognition-on-librispeech-test-clean) and [Timit](https://paperswithcode.com/sota/speech-recognition-on-timit) for the most recent values.

## Examples

Perfect match between prediction and reference:

```python
from datasets import load_metric

wer = load_metric("wer")
predictions = ["hello world", "good night moon"]
references = ["hello world", "good night moon"]
wer_score = wer.compute(predictions=predictions, references=references)
print(wer_score)
# 0.0
```

Partial match between prediction and reference:

```python
from datasets import load_metric

wer = load_metric("wer")
predictions = ["this is the prediction", "there is an other sample"]
references = ["this is the reference", "there is another one"]
wer_score = wer.compute(predictions=predictions, references=references)
print(wer_score)
# 0.5
```

No match between prediction and reference:

```python
from datasets import load_metric

wer = load_metric("wer")
predictions = ["hello world", "good night moon"]
references = ["hi everyone", "have a great day"]
wer_score = wer.compute(predictions=predictions, references=references)
print(wer_score)
# 1.0
```

## Limitations and bias

WER is a valuable tool for comparing different systems as well as for evaluating improvements within one system. This kind of measurement, however, provides no details on the nature of transcription errors, so further work is required to identify the main source(s) of error and to focus research efforts.

## Citation

```bibtex

@inproceedings{woodard1982,
  author = {Woodard, J.P. and Nelson, J.T.},
  year = {1982},
  journal = {Workshop on standardisation for speech I/O technology, Naval Air Development Center, Warminster, PA},
  title = {An information theoretic measure of speech recognition performance}
}

```

```bibtex

@inproceedings{morris2004,

author = {Morris, Andrew and Maier, Viktoria and Green, Phil},

year = {2004},

month = {01},

pages = {},

title = {From WER and RIL to MER and WIL: improved evaluation measures for connected speech recognition.}

}

```

## Further References

- [Word Error Rate -- Wikipedia](https://en.wikipedia.org/wiki/Word_error_rate)

- [Hugging Face Tasks -- Automatic Speech Recognition](https://huggingface.co/tasks/automatic-speech-recognition)

|

huggingface/datasets/blob/main/metrics/wer/README.md

|

# Share a dataset to the Hub

The [Hub](https://huggingface.co/datasets) is home to an extensive collection of community-curated and popular research datasets. We encourage you to share your dataset to the Hub to help grow the ML community and accelerate progress for everyone. All contributions are welcome; adding a dataset is just a drag and drop away!

Start by [creating a Hugging Face Hub account](https://huggingface.co/join) if you don't have one yet.

## Upload with the Hub UI

The Hub's web-based interface allows users without any developer experience to upload a dataset.

### Create a repository

A repository hosts all your dataset files, including the revision history, making storing more than one dataset version possible.

1. Click on your profile and select **New Dataset** to create a new dataset repository.

2. Pick a name for your dataset, and choose whether it is a public or private dataset. A public dataset is visible to anyone, whereas a private dataset can only be viewed by you or members of your organization.

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/datasets/create_repo.png"/>

</div>

### Upload dataset

1. Once you've created a repository, navigate to the **Files and versions** tab to add a file. Select **Add file** to upload your dataset files. We support many text, audio, and image data extensions such as `.csv`, `.mp3`, and `.jpg` among many others. For text data extensions like `.csv`, `.json`, `.jsonl`, and `.txt`, we recommend compressing them before uploading to the Hub (to `.zip` or `.gz` file extension for example).

Text file extensions are not tracked by Git LFS by default, and if they're greater than 10MB, they will not be committed and uploaded. Take a look at the `.gitattributes` file in your repository for a complete list of tracked file extensions. For this tutorial, you can use the following sample `.csv` files since they're small: <a href="https://huggingface.co/datasets/stevhliu/demo/raw/main/train.csv" download>train.csv</a>, <a href="https://huggingface.co/datasets/stevhliu/demo/raw/main/test.csv" download>test.csv</a>.

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/datasets/upload_files.png"/>

</div>

2. Drag and drop your dataset files and add a brief descriptive commit message.

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/datasets/commit_files.png"/>

</div>

3. After uploading your dataset files, they are stored in your dataset repository.

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/datasets/files_stored.png"/>

</div>

### Create a Dataset card

Adding a Dataset card is super valuable for helping users find your dataset and understand how to use it responsibly.

1. Click on **Create Dataset Card** to create a Dataset card. This button creates a `README.md` file in your repository.

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/datasets/dataset_card.png"/>

</div>

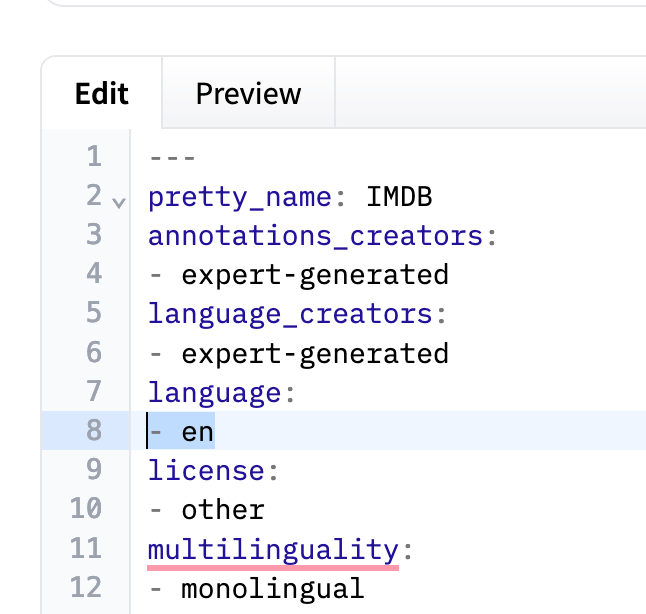

2. At the top, you'll see the **Metadata UI** with several fields to select from, such as license, language, and task categories. These are the most important tags for helping users discover your dataset on the Hub. When you select an option in a field, it is automatically added to the top of the dataset card.

You can also look at the [Dataset Card specifications](https://github.com/huggingface/hub-docs/blob/main/datasetcard.md?plain=1), which list the complete set of tag options (none of them required), such as `annotations_creators`, to help you choose the appropriate tags.

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/datasets/metadata_ui.png"/>

</div>

3. Click on the **Import dataset card template** link at the top of the editor to automatically create a dataset card template. Filling out the template is a great way to introduce your dataset to the community and help users understand how to use it. For a detailed example of what a good Dataset card should look like, take a look at the [CNN DailyMail Dataset card](https://huggingface.co/datasets/cnn_dailymail).

### Load dataset

Once your dataset is stored on the Hub, anyone can load it with the [`load_dataset`] function:

```py

>>> from datasets import load_dataset

>>> dataset = load_dataset("stevhliu/demo")

```

## Upload with Python

Users who prefer to upload a dataset programmatically can use the [huggingface_hub](https://huggingface.co/docs/huggingface_hub/index) library. This library allows users to interact with the Hub from Python.

1. Begin by installing the library:

```bash

pip install huggingface_hub

```

2. To upload a dataset on the Hub in Python, you need to log in to your Hugging Face account:

```bash

huggingface-cli login

```

3. Use the [`push_to_hub()`](https://huggingface.co/docs/datasets/main/en/package_reference/main_classes#datasets.DatasetDict.push_to_hub) function to help you add, commit, and push a file to your repository:

```py

>>> from datasets import load_dataset

>>> dataset = load_dataset("stevhliu/demo")

# dataset = dataset.map(...) # do all your processing here

>>> dataset.push_to_hub("stevhliu/processed_demo")

```

To set your dataset as private, set the `private` parameter to `True`. This parameter will only work if you are creating a repository for the first time.

```py

>>> dataset.push_to_hub("stevhliu/private_processed_demo", private=True)

```

To add a new configuration (or subset) to a dataset or to add a new split (train/validation/test), please refer to the [`Dataset.push_to_hub`] documentation.

### Privacy

A private dataset is only accessible by you. Similarly, if you share a dataset within your organization, then members of the organization can also access the dataset.

Load a private dataset by passing your authentication token to the `token` parameter. Setting `token=True` uses the token saved when you logged in with `huggingface-cli login`:

```py

>>> from datasets import load_dataset

# Load a private individual dataset

>>> dataset = load_dataset("stevhliu/demo", token=True)

# Load a private organization dataset

>>> dataset = load_dataset("organization/dataset_name", token=True)

```

## What's next?

Congratulations, you've completed the tutorials! 🥳

From here, you can go on to:

- Learn more about how to use other 🤗 Datasets functions to [process your dataset](process).

- [Stream large datasets](stream) without downloading them locally.

- [Define your dataset splits and configurations](repository_structure) or [loading script](dataset_script) and share your dataset with the community.

If you have any questions about 🤗 Datasets, feel free to join and ask the community on our [forum](https://discuss.huggingface.co/c/datasets/10).

|

huggingface/datasets/blob/main/docs/source/upload_dataset.mdx

|

---

title: sMAPE

emoji: 🤗

colorFrom: blue

colorTo: red

sdk: gradio

sdk_version: 3.19.1

app_file: app.py

pinned: false

tags:

- evaluate

- metric

description: >-

Symmetric Mean Absolute Percentage Error (sMAPE) is the symmetric mean percentage error difference between the predicted and actual values defined by Chen and Yang (2004).

---

# Metric Card for sMAPE

## Metric Description

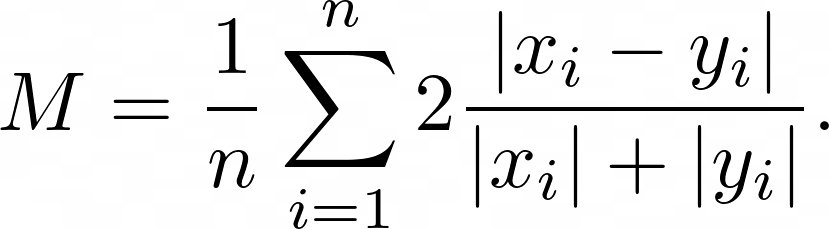

Symmetric Mean Absolute Percentage Error (sMAPE) is the symmetric mean of the percentage error of the difference between the predicted $x_i$ and actual $y_i$ numeric values:

$$\text{sMAPE} = \frac{1}{n}\sum_{i=1}^{n} \frac{2\,|x_i - y_i|}{|x_i| + |y_i|}$$

## How to Use

At minimum, this metric requires predictions and references as inputs.

```python
>>> import evaluate
>>> smape_metric = evaluate.load("smape")
>>> predictions = [2.5, 0.0, 2, 8]
>>> references = [3, -0.5, 2, 7]
>>> results = smape_metric.compute(predictions=predictions, references=references)
```

### Inputs

Mandatory inputs:

- `predictions`: numeric array-like of shape (`n_samples,`) or (`n_samples`, `n_outputs`), representing the estimated target values.

- `references`: numeric array-like of shape (`n_samples,`) or (`n_samples`, `n_outputs`), representing the ground truth (correct) target values.

Optional arguments:

- `sample_weight`: numeric array-like of shape (`n_samples,`) representing sample weights. The default is `None`.

- `multioutput`: `raw_values`, `uniform_average` or numeric array-like of shape (`n_outputs,`), which defines the aggregation of multiple output values. The default value is `uniform_average`.
    - `raw_values` returns a full set of errors in case of multioutput input.
    - `uniform_average` means that the errors of all outputs are averaged with uniform weight.
    - the array-like value defines weights used to average errors.

### Output Values

This metric outputs a dictionary containing the sMAPE score, which is of type:

- `float`: if multioutput is `uniform_average` or an ndarray of weights, then the weighted average of all output errors is returned.

- numeric array-like of shape (`n_outputs,`): if multioutput is `raw_values`, then the score is returned for each output separately.

Each sMAPE `float` value ranges from `0.0` to `2.0`, with the best value being 0.0.

Output Example(s):

```python

{'smape': 0.5}

```

If `multioutput="raw_values"`:

```python

{'smape': array([0.5, 1.5 ])}

```

#### Values from Popular Papers

### Examples

Example with the `uniform_average` config:

```python
>>> import evaluate
>>> smape_metric = evaluate.load("smape")
>>> predictions = [2.5, 0.0, 2, 8]
>>> references = [3, -0.5, 2, 7]
>>> results = smape_metric.compute(predictions=predictions, references=references)
>>> print(results)
{'smape': 0.5787...}
```

Example with multi-dimensional lists, and the `raw_values` config:

```python
>>> import evaluate
>>> smape_metric = evaluate.load("smape", "multilist")
>>> predictions = [[0.5, 1], [-1, 1], [7, -6]]
>>> references = [[0.1, 2], [-1, 2], [8, -5]]
>>> results = smape_metric.compute(predictions=predictions, references=references)
>>> print(results)
{'smape': 0.4969...}
>>> results = smape_metric.compute(predictions=predictions, references=references, multioutput='raw_values')
>>> print(results)
{'smape': array([0.4888..., 0.5050...])}
```
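The first example above can be reproduced with a short NumPy sketch: apply the per-sample term $2|x_i - y_i| / (|x_i| + |y_i|)$, then average over samples for each output. The function `smape` is hypothetical and is not the `evaluate` implementation. It supports only `uniform_average` and `raw_values` and omits `sample_weight` and weighted `multioutput`. Flooring each magnitude at machine epsilon is an assumption; it makes the 0/0 case evaluate to 0 instead of failing.

```python
import numpy as np


def smape(predictions, references, multioutput="uniform_average"):
    """Symmetric MAPE: mean of 2|x - y| / (|x| + |y|), bounded in [0, 2]."""
    x = np.asarray(predictions, dtype=float)
    y = np.asarray(references, dtype=float)
    # Assumption: floor each magnitude at machine epsilon so that 0/0 evaluates to 0.
    eps = np.finfo(np.float64).eps
    per_sample = 2 * np.abs(x - y) / (np.maximum(np.abs(x), eps) + np.maximum(np.abs(y), eps))
    per_output = per_sample.mean(axis=0)  # one score per output column
    if multioutput == "raw_values":
        return per_output
    return float(np.mean(per_output))  # uniform average over outputs


print(smape([2.5, 0.0, 2, 8], [3, -0.5, 2, 7]))  # ≈ 0.5788
print(smape([[0.5, 1], [-1, 1], [7, -6]], [[0.1, 2], [-1, 2], [8, -5]], multioutput="raw_values"))
```

In the first example, the pair (0.0, -0.5) alone contributes the maximum term of 2, because exactly one of the two values is zero.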

## Limitations and Bias

This metric is called a measure of "percentage error" even though there is no multiplier of 100. Its range is [0, 2]. It reaches 2 when the prediction and the target have opposite signs, or when exactly one of them is zero.

## Citation(s)

```bibtex

@article{article,

author = {Chen, Zhuo and Yang, Yuhong},

year = {2004},

month = {04},

pages = {},

title = {Assessing forecast accuracy measures}

}

```

## Further References

- [Symmetric Mean absolute percentage error - Wikipedia](https://en.wikipedia.org/wiki/Symmetric_mean_absolute_percentage_error)

|

huggingface/evaluate/blob/main/metrics/smape/README.md

|

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# Prefix tuning

[Prefix tuning](https://hf.co/papers/2101.00190) prefixes a series of task-specific vectors to the input sequence that can be learned while keeping the pretrained model frozen. The prefix parameters are inserted in all of the model layers.
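The following is a minimal NumPy sketch of what happens inside one attention layer. Trainable prefix key and value vectors are prepended to the keys and values computed by the frozen model, so every real token can also attend to the "virtual tokens". All names and sizes are illustrative assumptions; this is not PEFT's `PrefixEncoder`. The sketch uses a single head and no causal mask. It also skips the MLP reparameterization that the paper applies to the prefix during training. In a full model, each layer would get its own prefix.

```python
import numpy as np

rng = np.random.default_rng(0)


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def attention_with_prefix(h, W_q, W_k, W_v, prefix_k, prefix_v):
    """Single-head attention with trainable prefix keys/values prepended.

    h:                 (seq_len, d_model) hidden states from the frozen model
    W_q, W_k, W_v:     frozen projection matrices, (d_model, d_head)
    prefix_k/prefix_v: trainable prefix vectors, (num_virtual_tokens, d_head)
    """
    q = h @ W_q
    k = np.concatenate([prefix_k, h @ W_k], axis=0)  # prefix keys come first
    v = np.concatenate([prefix_v, h @ W_v], axis=0)  # prefix values come first
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores) @ v


d_model, d_head, seq_len, num_virtual_tokens = 16, 8, 5, 4
h = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))  # frozen
prefix_k = rng.normal(size=(num_virtual_tokens, d_head))  # trainable
prefix_v = rng.normal(size=(num_virtual_tokens, d_head))  # trainable

out = attention_with_prefix(h, W_q, W_k, W_v, prefix_k, prefix_v)
print(out.shape)  # (5, 8)
```

During training, only `prefix_k` and `prefix_v` would receive gradient updates. Here that is 2 × 4 × 8 = 64 values for this layer, while the projection matrices stay fixed.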

The abstract from the paper is:

*Fine-tuning is the de facto way to leverage large pretrained language models to perform downstream tasks. However, it modifies all the language model parameters and therefore necessitates storing a full copy for each task. In this paper, we propose prefix-tuning, a lightweight alternative to fine-tuning for natural language generation tasks, which keeps language model parameters frozen, but optimizes a small continuous task-specific vector (called the prefix). Prefix-tuning draws inspiration from prompting, allowing subsequent tokens to attend to this prefix as if it were "virtual tokens". We apply prefix-tuning to GPT-2 for table-to-text generation and to BART for summarization. We find that by learning only 0.1\% of the parameters, prefix-tuning obtains comparable performance in the full data setting, outperforms fine-tuning in low-data settings, and extrapolates better to examples with topics unseen during training*.

## PrefixTuningConfig

[[autodoc]] tuners.prefix_tuning.config.PrefixTuningConfig

## PrefixEncoder

[[autodoc]] tuners.prefix_tuning.model.PrefixEncoder

|

huggingface/peft/blob/main/docs/source/package_reference/prefix_tuning.md

|

---

title: "Announcing the 🤗 AI Research Residency Program"

thumbnail: /blog/assets/57_ai_residency/residency-thumbnail.jpg

authors:

- user: douwekiela

---

# Announcing the 🤗 AI Research Residency Program 🎉 🎉 🎉

The 🤗 Research Residency Program is a 9-month opportunity to launch or advance your career in machine learning research 🚀. The goal of the residency is to help you grow into an impactful AI researcher. Residents will work alongside Researchers from our Science Team. Together, you will pick a research problem and then develop new machine learning techniques to solve it in an open & collaborative way, with the hope of ultimately publishing your work and making it visible to a wide audience.

Applicants from all backgrounds are welcome! Ideally, you have some research experience and are excited about our mission to democratize responsible machine learning. The progress of our field has the potential to exacerbate existing disparities in ways that disproportionately hurt the most marginalized people in society, including people of color, people from working-class backgrounds, women, and LGBTQ+ people. These communities must be centered in the work we do as a research community. So we strongly encourage proposals from people whose personal experience reflects these identities. We encourage applications relating to AI that demonstrate a clear and positive societal impact.

## How to Apply

Since the focus of your work will be on developing Machine Learning techniques, your application should show evidence of programming skills and of prerequisite courses, like calculus or linear algebra, or links to an open-source project that demonstrates programming and mathematical ability.

More importantly, your application needs to show an interest in effecting positive change through AI in any number of creative ways. This can stem from a topic of particular interest to you, and your proposal should capture concrete ways in which machine learning can contribute. Thinking through the entire pipeline, from understanding where ML tools are needed to gathering data and deploying the resulting approach, can help make your project more impactful.

We are actively working to build a culture that values diversity, equity, and inclusivity. We are intentionally building a workplace where people feel respected and supported—regardless of who you are or where you come from. We believe this is foundational to building a great company and community. Hugging Face is an equal opportunity employer and we do not discriminate on the basis of race, religion, color, national origin, gender, sexual orientation, age, marital status, veteran status, or disability status.

[Submit your application here](https://apply.workable.com/huggingface/j/1B77519961).

## FAQs

* **Can I complete the program part-time?**<br>No. The Residency is only offered as a full-time position.

* **I have been out of school for several years. Can I apply?**<br>Yes. We will consider applications from various backgrounds.

* **Can I be enrolled as a student at a university or work for another employer during the residency?**<br>No, the residency can’t be completed simultaneously with any other obligations.

* **Will I receive benefits during the Residency?**<br>Yes, residents are eligible for most benefits, including medical (depending on location).

* **Will I be required to relocate for this residency?**<br>Absolutely not! We are a distributed team and you are welcome to work from wherever you are currently located.

* **Is there a deadline?**<br>Applications close on April 3rd, 2022!

|

huggingface/blog/blob/main/ai-residency.md

|

# @gradio/plot

## 0.2.6

### Patch Changes

- Updated dependencies [[`828fb9e`](https://github.com/gradio-app/gradio/commit/828fb9e6ce15b6ea08318675a2361117596a1b5d), [`73268ee`](https://github.com/gradio-app/gradio/commit/73268ee2e39f23ebdd1e927cb49b8d79c4b9a144)]:

- @gradio/statustracker@0.4.3

- @gradio/atoms@0.4.1

## 0.2.5

### Patch Changes

- Updated dependencies [[`053bec9`](https://github.com/gradio-app/gradio/commit/053bec98be1127e083414024e02cf0bebb0b5142), [`4d1cbbc`](https://github.com/gradio-app/gradio/commit/4d1cbbcf30833ef1de2d2d2710c7492a379a9a00)]:

- @gradio/icons@0.3.2

- @gradio/atoms@0.4.0

- @gradio/statustracker@0.4.2

## 0.2.4

### Patch Changes

- Updated dependencies [[`206af31`](https://github.com/gradio-app/gradio/commit/206af31d7c1a31013364a44e9b40cf8df304ba50)]:

- @gradio/icons@0.3.1

- @gradio/atoms@0.3.1

- @gradio/statustracker@0.4.1

## 0.2.3

### Patch Changes

- Updated dependencies [[`9caddc17b`](https://github.com/gradio-app/gradio/commit/9caddc17b1dea8da1af8ba724c6a5eab04ce0ed8)]:

- @gradio/atoms@0.3.0

- @gradio/icons@0.3.0

- @gradio/statustracker@0.4.0

## 0.2.2

### Patch Changes

- Updated dependencies [[`f816136a0`](https://github.com/gradio-app/gradio/commit/f816136a039fa6011be9c4fb14f573e4050a681a)]:

- @gradio/atoms@0.2.2

- @gradio/icons@0.2.1

- @gradio/statustracker@0.3.2

## 0.2.1

### Patch Changes

- Updated dependencies [[`3cdeabc68`](https://github.com/gradio-app/gradio/commit/3cdeabc6843000310e1a9e1d17190ecbf3bbc780), [`fad92c29d`](https://github.com/gradio-app/gradio/commit/fad92c29dc1f5cd84341aae417c495b33e01245f)]:

- @gradio/atoms@0.2.1

- @gradio/statustracker@0.3.1

## 0.2.0

### Features

- [#5498](https://github.com/gradio-app/gradio/pull/5498) [`287fe6782`](https://github.com/gradio-app/gradio/commit/287fe6782825479513e79a5cf0ba0fbfe51443d7) - Publish all components to npm. Thanks [@pngwn](https://github.com/pngwn)!

- [#5498](https://github.com/gradio-app/gradio/pull/5498) [`287fe6782`](https://github.com/gradio-app/gradio/commit/287fe6782825479513e79a5cf0ba0fbfe51443d7) - Custom components. Thanks [@pngwn](https://github.com/pngwn)!

## 0.2.0-beta.8

### Patch Changes

- Updated dependencies [[`667802a6c`](https://github.com/gradio-app/gradio/commit/667802a6cdbfb2ce454a3be5a78e0990b194548a), [`c476bd5a5`](https://github.com/gradio-app/gradio/commit/c476bd5a5b70836163b9c69bf4bfe068b17fbe13)]:

- @gradio/atoms@0.2.0-beta.6

- @gradio/statustracker@0.3.0-beta.8

- @gradio/utils@0.2.0-beta.6

- @gradio/icons@0.2.0-beta.3

## 0.2.0-beta.7

### Features

- [#6016](https://github.com/gradio-app/gradio/pull/6016) [`83e947676`](https://github.com/gradio-app/gradio/commit/83e947676d327ca2ab6ae2a2d710c78961c771a0) - Format js in v4 branch. Thanks [@freddyaboulton](https://github.com/freddyaboulton)!

## 0.2.0-beta.6

### Features

- [#5960](https://github.com/gradio-app/gradio/pull/5960) [`319c30f3f`](https://github.com/gradio-app/gradio/commit/319c30f3fccf23bfe1da6c9b132a6a99d59652f7) - rererefactor frontend files. Thanks [@pngwn](https://github.com/pngwn)!

- [#5938](https://github.com/gradio-app/gradio/pull/5938) [`13ed8a485`](https://github.com/gradio-app/gradio/commit/13ed8a485d5e31d7d75af87fe8654b661edcca93) - V4: Use beta release versions for '@gradio' packages. Thanks [@freddyaboulton](https://github.com/freddyaboulton)!

## 0.2.3

### Patch Changes

- Updated dependencies [[`e70805d54`](https://github.com/gradio-app/gradio/commit/e70805d54cc792452545f5d8eccc1aa0212a4695)]:

- @gradio/atoms@0.2.0

- @gradio/statustracker@0.2.3

## 0.2.2

### Fixes

- [#5795](https://github.com/gradio-app/gradio/pull/5795) [`957ba5cfd`](https://github.com/gradio-app/gradio/commit/957ba5cfde18e09caedf31236a2064923cd7b282) - Prevent bokeh from injecting bokeh js multiple times. Thanks [@abidlabs](https://github.com/abidlabs)!

## 0.2.1

### Patch Changes

- Updated dependencies [[`8f0fed857`](https://github.com/gradio-app/gradio/commit/8f0fed857d156830626eb48b469d54d211a582d2)]:

- @gradio/icons@0.2.0

- @gradio/atoms@0.1.3

- @gradio/statustracker@0.2.1

## 0.2.0

### Features

- [#5642](https://github.com/gradio-app/gradio/pull/5642) [`21c7225bd`](https://github.com/gradio-app/gradio/commit/21c7225bda057117a9d3311854323520218720b5) - Improve plot rendering. Thanks [@aliabid94](https://github.com/aliabid94)!

- [#5554](https://github.com/gradio-app/gradio/pull/5554) [`75ddeb390`](https://github.com/gradio-app/gradio/commit/75ddeb390d665d4484667390a97442081b49a423) - Accessibility Improvements. Thanks [@hannahblair](https://github.com/hannahblair)!

## 0.1.2

### Patch Changes

- Updated dependencies [[`afac0006`](https://github.com/gradio-app/gradio/commit/afac0006337ce2840cf497cd65691f2f60ee5912)]:

- @gradio/statustracker@0.2.0

- @gradio/theme@0.1.0

- @gradio/utils@0.1.1

- @gradio/atoms@0.1.2

## 0.1.1

### Patch Changes

- Updated dependencies [[`abf1c57d`](https://github.com/gradio-app/gradio/commit/abf1c57d7d85de0df233ee3b38aeb38b638477db)]:

- @gradio/icons@0.1.0

- @gradio/utils@0.1.0

- @gradio/atoms@0.1.1

- @gradio/statustracker@0.1.1

## 0.1.0

### Highlights

#### Improve startup performance and markdown support ([#5279](https://github.com/gradio-app/gradio/pull/5279) [`fe057300`](https://github.com/gradio-app/gradio/commit/fe057300f0672c62dab9d9b4501054ac5d45a4ec))

##### Improved markdown support

We now have better support for markdown in `gr.Markdown` and `gr.Dataframe`, including syntax highlighting and GitHub Flavoured Markdown. We also have more consistent markdown behaviour and styling.

##### Various performance improvements

These improvements will be particularly beneficial to large applications.

- Rather than attaching events manually, they are now delegated, leading to a significant performance improvement and addressing a performance regression introduced in a recent version of Gradio. App startup for large applications is now around twice as fast.

- Optimised the mounting of individual components, leading to a modest performance improvement during startup (~30%).

- Corrected an issue that was causing markdown to re-render infinitely.

- Ensured that the `gr.3DModel` does not re-render prematurely.

Thanks [@pngwn](https://github.com/pngwn)!

### Features

- [#5215](https://github.com/gradio-app/gradio/pull/5215) [`fbdad78a`](https://github.com/gradio-app/gradio/commit/fbdad78af4c47454cbb570f88cc14bf4479bbceb) - Lazy load interactive or static variants of a component individually, rather than loading both variants regardless. This change will improve performance for many applications. Thanks [@pngwn](https://github.com/pngwn)!

- [#5216](https://github.com/gradio-app/gradio/pull/5216) [`4b58ea6d`](https://github.com/gradio-app/gradio/commit/4b58ea6d98e7a43b3f30d8a4cb6f379bc2eca6a8) - Update i18n tokens and locale files. Thanks [@hannahblair](https://github.com/hannahblair)!

## 0.0.2

### Patch Changes

- Updated dependencies [[`41c83070`](https://github.com/gradio-app/gradio/commit/41c83070b01632084e7d29123048a96c1e261407)]:

- @gradio/theme@0.0.2

- @gradio/utils@0.0.2

- @gradio/atoms@0.0.2

|

gradio-app/gradio/blob/main/js/plot/CHANGELOG.md

|

# Organizations, Security, and the Hub API

## Contents

- [Organizations](./organizations)

- [Managing Organizations](./organizations-managing)

- [Organization Cards](./organizations-cards)

- [Access control in organizations](./organizations-security)

- [Moderation](./moderation)

- [Billing](./billing)

- [Digital Object Identifier (DOI)](./doi)

- [Security](./security)

- [User Access Tokens](./security-tokens)

- [Signing commits with GPG](./security-gpg)

- [Malware Scanning](./security-malware)

- [Pickle Scanning](./security-pickle)

- [Hub API Endpoints](./api)

- [Webhooks](./webhooks)

|

huggingface/hub-docs/blob/main/docs/hub/other.md

|

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

# Consistency Models

Consistency Models were proposed in [Consistency Models](https://huggingface.co/papers/2303.01469) by Yang Song, Prafulla Dhariwal, Mark Chen, and Ilya Sutskever.

The abstract from the paper is:

*Diffusion models have significantly advanced the fields of image, audio, and video generation, but they depend on an iterative sampling process that causes slow generation. To overcome this limitation, we propose consistency models, a new family of models that generate high quality samples by directly mapping noise to data. They support fast one-step generation by design, while still allowing multistep sampling to trade compute for sample quality. They also support zero-shot data editing, such as image inpainting, colorization, and super-resolution, without requiring explicit training on these tasks. Consistency models can be trained either by distilling pre-trained diffusion models, or as standalone generative models altogether. Through extensive experiments, we demonstrate that they outperform existing distillation techniques for diffusion models in one- and few-step sampling, achieving the new state-of-the-art FID of 3.55 on CIFAR-10 and 6.20 on ImageNet 64x64 for one-step generation. When trained in isolation, consistency models become a new family of generative models that can outperform existing one-step, non-adversarial generative models on standard benchmarks such as CIFAR-10, ImageNet 64x64 and LSUN 256x256.*

The original codebase can be found at [openai/consistency_models](https://github.com/openai/consistency_models), and additional checkpoints are available at [openai](https://huggingface.co/openai).

The pipeline was contributed by [dg845](https://github.com/dg845) and [ayushtues](https://huggingface.co/ayushtues). ❤️

## Tips

For an additional speed-up, use `torch.compile` to generate multiple images in <1 second:

```diff
import torch
from diffusers import ConsistencyModelPipeline

device = "cuda"
# Load the cd_bedroom256_lpips checkpoint.
model_id_or_path = "openai/diffusers-cd_bedroom256_lpips"
pipe = ConsistencyModelPipeline.from_pretrained(model_id_or_path, torch_dtype=torch.float16)
pipe.to(device)

+ pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)

# Multistep sampling
# Timesteps can be explicitly specified; the particular timesteps below are from the original GitHub repo:
# https://github.com/openai/consistency_models/blob/main/scripts/launch.sh#L83
for _ in range(10):
    image = pipe(timesteps=[17, 0]).images[0]
    image.show()
```

## ConsistencyModelPipeline

[[autodoc]] ConsistencyModelPipeline

- all

- __call__

## ImagePipelineOutput

[[autodoc]] pipelines.ImagePipelineOutput

|

huggingface/diffusers/blob/main/docs/source/en/api/pipelines/consistency_models.md

|

# Welcome to the 🤗 Deep Reinforcement Learning Course [[introduction]]

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit0/thumbnail.jpg" alt="Deep RL Course thumbnail" width="100%"/>

Welcome to the most fascinating topic in Artificial Intelligence: **Deep Reinforcement Learning**.

This course will **teach you about Deep Reinforcement Learning from beginner to expert**. It’s completely free and open-source!

In this introduction unit you’ll:

- Learn more about the **course content**.

- **Define the path** you’re going to take (either self-audit or certification process).

- Learn more about the **AI vs. AI challenges** you're going to participate in.

- Learn more **about us**.

- **Create your Hugging Face account** (it’s free).

- **Sign up for our Discord server**, where you can chat with your classmates and us (the Hugging Face team).

Let’s get started!

## What to expect? [[expect]]

In this course, you will:

- 📖 Study Deep Reinforcement Learning in **theory and practice.**

- 🧑‍💻 Learn to **use famous Deep RL libraries** such as [Stable Baselines3](https://stable-baselines3.readthedocs.io/en/master/), [RL Baselines3 Zoo](https://github.com/DLR-RM/rl-baselines3-zoo), [Sample Factory](https://samplefactory.dev/) and [CleanRL](https://github.com/vwxyzjn/cleanrl).

- 🤖 **Train agents in unique environments** such as [SnowballFight](https://huggingface.co/spaces/ThomasSimonini/SnowballFight), [Huggy the Doggo 🐶](https://huggingface.co/spaces/ThomasSimonini/Huggy), [VizDoom (Doom)](https://vizdoom.cs.put.edu.pl/) and classical ones such as [Space Invaders](https://gymnasium.farama.org/environments/atari/space_invaders/), [PyBullet](https://pybullet.org/wordpress/) and more.

- 💾 Share your **trained agents with one line of code to the Hub** and also download powerful agents from the community.

- 🏆 Participate in challenges where you will **evaluate your agents against other teams. You'll also get to play against the agents you'll train.**

- 🎓 **Earn a certificate of completion** by completing 80% of the assignments.

And more!

At the end of this course, **you’ll have a solid foundation, from the basics to state-of-the-art (SOTA) methods**.

Don’t forget to **<a href="http://eepurl.com/ic5ZUD">sign up for the course</a>** (we collect your email **to send you the links when each unit is published, along with information about the challenges and updates**).

Sign up 👉 <a href="http://eepurl.com/ic5ZUD">here</a>

## What does the course look like? [[course-look-like]]

The course is composed of:

- *A theory part*: where you learn a **concept in theory**.

- *A hands-on*: where you’ll learn **to use famous Deep RL libraries** to train your agents in unique environments. These hands-on exercises are **Google Colab notebooks with companion tutorial videos**, in case you prefer learning in video format.

- *Challenges*: where you'll pit your agent against other agents in different challenges. There will also be [a leaderboard](https://huggingface.co/spaces/huggingface-projects/Deep-Reinforcement-Learning-Leaderboard) for you to compare the agents' performance.

## What's the syllabus? [[syllabus]]

This is the course's syllabus:

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit0/syllabus1.jpg" alt="Syllabus Part 1" width="100%"/>

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit0/syllabus2.jpg" alt="Syllabus Part 2" width="100%"/>

## Two paths: choose your own adventure [[two-paths]]

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit0/two-paths.jpg" alt="Two paths" width="100%"/>

You can choose to follow this course either:

- *To get a certificate of completion*: you need to complete 80% of the assignments.

- *To get a certificate of honors*: you need to complete 100% of the assignments.

- *As a simple audit*: you can participate in all challenges and do assignments if you want.

There are **no deadlines; the course is self-paced**.

Both paths **are completely free**.

Whatever path you choose, we advise you **to follow the recommended pace to enjoy the course and challenges with your fellow classmates.**

You don't need to tell us which path you choose. **If you complete 80% or more of the assignments, you'll get a certificate.**

## The Certification Process [[certification-process]]

The certification process is **completely free**:

- *To get a certificate of completion*: you need to complete 80% of the assignments.

- *To get a certificate of honors*: you need to complete 100% of the assignments.

Again, there's **no deadline** since the course is self-paced, but we advise you **to follow the recommended pace**.

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit0/certification.jpg" alt="Course certification" width="100%"/>
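The two thresholds above boil down to a simple rule. As a minimal sketch, assuming the 80% threshold is inclusive (the bullets say "complete 80%") and that every assignment counts equally, a hypothetical helper `certificate_level` (not an official course tool) could look like this:

```python
from typing import Optional


def certificate_level(completed: int, total: int) -> Optional[str]:
    """Return the certificate earned for `completed` out of `total` assignments.

    Assumes inclusive thresholds: 100% -> honors, at least 80% -> completion.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= completed <= total:
        raise ValueError("completed must be between 0 and total")
    if completed == total:
        return "honors"
    # Compare integers (completed * 100 vs 80 * total) rather than float percentages.
    if completed * 100 >= 80 * total:
        return "completion"
    return None  # audit only: no certificate
```

For instance, with a hypothetical 12 assignments, 9 completed is only 75%, so you would need 10 (about 83%) for the certificate of completion. Comparing integers avoids floating-point surprises right at the 80% boundary.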

## How to get the most out of the course? [[advice]]

To get the most out of the course, here is some advice:

1. <a href="https://discord.gg/ydHrjt3WP5">Join study groups on Discord</a>: studying in groups is always easier. To do that, you need to join our Discord server. If you're new to Discord, no worries! We have some tools that will help you learn about it.

2. **Do the quizzes and assignments**: the best way to learn is to do and test yourself.

3. **Define a schedule to stay in sync**: you can use our recommended pace schedule below or create your own.

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit0/advice.jpg" alt="Course advice" width="100%"/>

## What tools do I need? [[tools]]

You need only 3 things:

- *A computer* with an internet connection.

- *Google Colab (free version)*: most of our hands-on exercises use Google Colab, and the **free version is enough.**

- A *Hugging Face Account*: to push and load models. If you don’t have an account yet, you can create one **[here](https://hf.co/join)** (it’s free).

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit0/tools.jpg" alt="Course tools needed" width="100%"/>

## What is the recommended pace? [[recommended-pace]]

Each chapter in this course is designed **to be completed in 1 week, with approximately 3-4 hours of work per week**. However, you can take as much time as necessary to complete the course. If you want to dive into a topic more in-depth, we'll provide additional resources to help you achieve that.

## Who are we? [[who-are-we]]

About the author:

- <a href="https://twitter.com/ThomasSimonini">Thomas Simonini</a> is a Developer Advocate at Hugging Face 🤗 specializing in Deep Reinforcement Learning. He founded the Deep Reinforcement Learning Course in 2018, which became one of the most used courses in Deep RL.

About the team:

- <a href="https://twitter.com/osanseviero">Omar Sanseviero</a> is a Machine Learning Engineer at Hugging Face, where he works at the intersection of ML, Community and Open Source. Previously, Omar worked as a Software Engineer at Google on the Assistant and TensorFlow Graphics teams. He is from Peru and likes llamas 🦙.

- <a href="https://twitter.com/RisingSayak"> Sayak Paul</a> is a Developer Advocate Engineer at Hugging Face. He's interested in the area of representation learning (self-supervision, semi-supervision, model robustness). And he loves watching crime and action thrillers 🔪.

## What are the challenges in this course? [[challenges]]

In this new version of the course, you have two types of challenges:

- [A leaderboard](https://huggingface.co/spaces/huggingface-projects/Deep-Reinforcement-Learning-Leaderboard) to compare your agent's performance to other classmates'.

- [AI vs. AI challenges](https://huggingface.co/learn/deep-rl-course/unit7/introduction?fw=pt) where you can train your agent and compete against other classmates' agents.

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit0/challenges.jpg" alt="Challenges" width="100%"/>

## I found a bug, or I want to improve the course [[contribute]]

Contributions are welcome 🤗

- If you *found a bug 🐛 in a notebook*, please <a href="https://github.com/huggingface/deep-rl-class/issues">open an issue</a> and **describe the problem**.

- If you *want to improve the course*, you can <a href="https://github.com/huggingface/deep-rl-class/pulls">open a Pull Request.</a>

## I still have questions [[questions]]

Please ask your question in the #rl-discussions channel of our <a href="https://discord.gg/ydHrjt3WP5">Discord server</a>.

|

huggingface/deep-rl-class/blob/main/units/en/unit0/introduction.mdx

|

# Using timm at Hugging Face

`timm`, also known as [pytorch-image-models](https://github.com/rwightman/pytorch-image-models), is an open-source collection of state-of-the-art PyTorch image models, pretrained weights, and utility scripts for training, inference, and validation.

This documentation focuses on `timm` functionality in the Hugging Face Hub instead of the `timm` library itself. For detailed information about the `timm` library, visit [its documentation](https://huggingface.co/docs/timm).

You can find a number of `timm` models on the Hub using the filters on the left of the [models page](https://huggingface.co/models?library=timm&sort=downloads).

All models on the Hub come with several useful features:

1. An automatically generated model card, which model authors can complete with [information about their model](./model-cards).

2. Metadata tags help users discover the relevant `timm` models.

3. An [interactive widget](./models-widgets) you can use to play with the model directly in the browser.

4. An [Inference API](./models-inference) that allows users to make inference requests.

## Using existing models from the Hub

Any `timm` model from the Hugging Face Hub can be loaded with a single line of code as long as you have `timm` installed! Once you've selected a model from the Hub, pass the model's ID prefixed with `hf-hub:` to `timm`'s `create_model` method to download and instantiate the model.

```py

import timm