text

stringlengths 23

371k

| source

stringlengths 32

152

|

|---|---|

<!--⚠️ Note that this file is in Markdown but contains specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# Quickstart

The [Hugging Face Hub](https://huggingface.co/) is the go-to place for sharing machine learning

models, demos, datasets, and metrics. The `huggingface_hub` library helps you interact with

the Hub without leaving your development environment. You can create and manage

repositories easily, download and upload files, and get useful model and dataset

metadata from the Hub.

## Installation

To get started, install the `huggingface_hub` library:

```bash

pip install --upgrade huggingface_hub

```

For more details, check out the [installation](installation) guide.

## Download files

Repositories on the Hub are git version controlled, and users can download a single file

or the whole repository. You can use the [`hf_hub_download`] function to download files.

This function will download and cache a file on your local disk. The next time you need

that file, it will load from your cache, so you don't need to re-download it.

You will need the repository id and the filename of the file you want to download. For

example, to download the [Pegasus](https://huggingface.co/google/pegasus-xsum) model

configuration file:

```py

>>> from huggingface_hub import hf_hub_download

>>> hf_hub_download(repo_id="google/pegasus-xsum", filename="config.json")

```

To download a specific version of the file, use the `revision` parameter to specify the

branch name, tag, or commit hash. If you choose to use the commit hash, it must be the

full-length hash instead of the shorter 7-character commit hash:

```py

>>> from huggingface_hub import hf_hub_download

>>> hf_hub_download(

...     repo_id="google/pegasus-xsum",

...     filename="config.json",

...     revision="4d33b01d79672f27f001f6abade33f22d993b151"

... )

```
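A full-length Git commit hash is 40 hexadecimal characters, so a quick local check can catch an abbreviated hash before it is passed as `revision`. The sketch below is only an illustration: `is_full_commit_hash` is a hypothetical helper, not part of `huggingface_hub`, and a branch name or tag remains a valid `revision` even though this check returns `False` for it.

```python
import re

# A full-length Git SHA-1 commit hash is exactly 40 hexadecimal characters.
FULL_SHA1 = re.compile(r"[0-9a-f]{40}")


def is_full_commit_hash(revision: str) -> bool:
    """Hypothetical helper: True only for a 40-character hex commit hash."""
    return FULL_SHA1.fullmatch(revision) is not None


full = "4d33b01d79672f27f001f6abade33f22d993b151"
print(is_full_commit_hash(full))      # True
print(is_full_commit_hash(full[:7]))  # False: the 7-character short form
```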

For more details and options, see the API reference for [`hf_hub_download`].

<a id="login"></a> <!-- backward compatible anchor -->

## Authentication

In many cases, you must be authenticated with a Hugging Face account to interact with

the Hub: to download private repos, upload files, create PRs, and so on.

[Create an account](https://huggingface.co/join) if you don't already have one, and then sign in

to get your [User Access Token](https://huggingface.co/docs/hub/security-tokens) from

your [Settings page](https://huggingface.co/settings/tokens). The User Access Token is

used to authenticate your identity to the Hub.

<Tip>

Tokens can have `read` or `write` permissions. Make sure to have a `write` access token if you want to create or edit a repository. Otherwise, it's best to generate a `read` token to reduce risk in case your token is inadvertently leaked.

</Tip>

### Login command

The easiest way to authenticate is to save the token on your machine. You can do that from the terminal using the [`login`] command:

```bash

huggingface-cli login

```

The command will tell you if you are already logged in and prompt you for your token. The token is then validated and saved in your `HF_HOME` directory (defaults to `~/.cache/huggingface/token`). Any script or library interacting with the Hub will use this token when sending requests.

Alternatively, you can log in programmatically using [`login`] in a notebook or a script:

```py

>>> from huggingface_hub import login

>>> login()

```

You can only be logged in to one account at a time. Logging in to a new account will automatically log you out of the previous one. To determine your currently active account, simply run the `huggingface-cli whoami` command.

<Tip warning={true}>

Once logged in, all requests to the Hub - even methods that don't necessarily require authentication - will use your access token by default. If you want to disable the implicit use of your token, you should set `HF_HUB_DISABLE_IMPLICIT_TOKEN=1` as an environment variable (see [reference](../package_reference/environment_variables#hfhubdisableimplicittoken)).

</Tip>

### Environment variable

The environment variable `HF_TOKEN` can also be used to authenticate yourself. This is especially useful in a Space where you can set `HF_TOKEN` as a [Space secret](https://huggingface.co/docs/hub/spaces-overview#managing-secrets).

<Tip>

**NEW:** Google Colaboratory lets you define [private keys](https://twitter.com/GoogleColab/status/1719798406195867814) for your notebooks. Define a `HF_TOKEN` secret to be automatically authenticated!

</Tip>

Authentication via an environment variable or a secret has priority over the token stored on your machine.
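The priority order described above can be pictured with a small sketch. `resolve_token` is a hypothetical helper written for illustration only; it is not how the library resolves tokens internally, and the token file path is passed in by the caller rather than assumed.

```python
import os
from pathlib import Path
from typing import Optional


def resolve_token(token_file: Path) -> Optional[str]:
    """Hypothetical helper mirroring the priority described above."""
    env_token = os.environ.get("HF_TOKEN")
    if env_token:
        # The environment variable (or secret) takes priority.
        return env_token
    if token_file.is_file():
        # Otherwise fall back to the token stored on the machine.
        return token_file.read_text().strip() or None
    return None
```

With `HF_TOKEN` set, the function returns its value without reading the file; with it unset, the stored token (if any) is used.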

### Method parameters

Finally, it is also possible to authenticate by passing your token to any method that accepts `token` as a parameter.

```

from huggingface_hub import whoami

user = whoami(token=...)

```

This is usually discouraged except in an environment where you don't want to store your token permanently or if you need to handle several tokens at once.

<Tip warning={true}>

Please be careful when passing tokens as a parameter. It is always best practice to load the token from a secure vault instead of hardcoding it in your codebase or notebook. Hardcoded tokens present a major leak risk if you share your code inadvertently.

</Tip>

## Create a repository

Once you've registered and logged in, create a repository with the [`create_repo`]

function:

```py

>>> from huggingface_hub import HfApi

>>> api = HfApi()

>>> api.create_repo(repo_id="super-cool-model")

```

If you want your repository to be private, then:

```py

>>> from huggingface_hub import HfApi

>>> api = HfApi()

>>> api.create_repo(repo_id="super-cool-model", private=True)

```

Private repositories will not be visible to anyone except yourself.

<Tip>

To create a repository or to push content to the Hub, you must provide a User Access

Token that has the `write` permission. You can choose the permission when creating the

token in your [Settings page](https://huggingface.co/settings/tokens).

</Tip>

## Upload files

Use the [`upload_file`] function to add a file to your newly created repository. You

need to specify:

1. The path of the file to upload.

2. The path of the file in the repository.

3. The repository id of where you want to add the file.

```py

>>> from huggingface_hub import HfApi

>>> api = HfApi()

>>> api.upload_file(

...     path_or_fileobj="/home/lysandre/dummy-test/README.md",

...     path_in_repo="README.md",

...     repo_id="lysandre/test-model",

... )

```

To upload more than one file at a time, take a look at the [Upload](./guides/upload) guide

which will introduce you to several methods for uploading files (with or without git).

## Next steps

The `huggingface_hub` library provides an easy way for users to interact with the Hub

with Python. To learn more about how you can manage your files and repositories on the

Hub, we recommend reading our [how-to guides](./guides/overview) to:

- [Manage your repository](./guides/repository).

- [Download](./guides/download) files from the Hub.

- [Upload](./guides/upload) files to the Hub.

- [Search the Hub](./guides/search) for your desired model or dataset.

- [Access the Inference API](./guides/inference) for fast inference. | huggingface/huggingface_hub/blob/main/docs/source/en/quick-start.md |

# Metric Card for Code Eval

## Metric description

The CodeEval metric estimates the pass@k metric for code synthesis.

It implements the evaluation harness for the HumanEval problem solving dataset described in the paper ["Evaluating Large Language Models Trained on Code"](https://arxiv.org/abs/2107.03374).

## How to use

The Code Eval metric calculates how good predictions are given a set of references. Its arguments are:

`predictions`: a list of candidates to evaluate. Each candidate should be a list of strings with several code candidates to solve the problem.

`references`: a list with a test for each prediction. Each test should evaluate the correctness of a code candidate.

`k`: number of code candidates to consider in the evaluation. The default value is `[1, 10, 100]`.

`num_workers`: the number of workers used to evaluate the candidate programs (The default value is `4`).

`timeout`: The maximum time taken to produce a prediction before it is considered a "timeout". The default value is `3.0` (i.e. 3 seconds).

```python

from datasets import load_metric

code_eval = load_metric("code_eval")

test_cases = ["assert add(2,3)==5"]

candidates = [["def add(a,b): return a*b", "def add(a, b): return a+b"]]

pass_at_k, results = code_eval.compute(references=test_cases, predictions=candidates, k=[1, 2])

```

N.B.

This metric exists to run untrusted model-generated code. Users are strongly encouraged not to do so outside of a robust security sandbox. Before running this metric and once you've taken the necessary precautions, you will need to set the `HF_ALLOW_CODE_EVAL` environment variable. Use it at your own risk:

```python

import os

os.environ["HF_ALLOW_CODE_EVAL"] = "1"

```

## Output values

The Code Eval metric outputs two things:

`pass_at_k`: a dictionary with the pass rates for each k value defined in the arguments.

`results`: a dictionary with granular results of each unit test.

### Values from popular papers

The [original CODEX paper](https://arxiv.org/pdf/2107.03374.pdf) reported that the CODEX-12B model had a pass@k score of 28.8% at `k=1`, 46.8% at `k=10` and 72.3% at `k=100`. However, since the CODEX model is not open source, it is hard to verify these numbers.

## Examples

Full match at `k=1`:

```python

from datasets import load_metric

code_eval = load_metric("code_eval")

test_cases = ["assert add(2,3)==5"]

candidates = [["def add(a, b): return a+b"]]

pass_at_k, results = code_eval.compute(references=test_cases, predictions=candidates, k=[1])

print(pass_at_k)

{'pass@1': 1.0}

```

No match for k = 1:

```python

from datasets import load_metric

code_eval = load_metric("code_eval")

test_cases = ["assert add(2,3)==5"]

candidates = [["def add(a,b): return a*b"]]

pass_at_k, results = code_eval.compute(references=test_cases, predictions=candidates, k=[1])

print(pass_at_k)

{'pass@1': 0.0}

```

Partial match at k=1, full match at k=2:

```python

from datasets import load_metric

code_eval = load_metric("code_eval")

test_cases = ["assert add(2,3)==5"]

candidates = [["def add(a, b): return a+b", "def add(a,b): return a*b"]]

pass_at_k, results = code_eval.compute(references=test_cases, predictions=candidates, k=[1, 2])

print(pass_at_k)

{'pass@1': 0.5, 'pass@2': 1.0}

```
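The 0.5 and 1.0 values in the last example are consistent with the unbiased pass@k estimator from the HumanEval paper, `1 - C(n - c, k) / C(n, k)`, where `n` is the number of candidates for a problem and `c` the number that pass. The following is a minimal standalone sketch of that formula for a single problem; the function name and signature are illustrative and are not the metric's own API.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: 1 - C(n - c, k) / C(n, k)."""
    if n - c < k:
        # Every size-k sample must contain at least one passing candidate.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


# Two candidates, one of which passes (as in the example above).
print(pass_at_k(n=2, c=1, k=1))  # 0.5
print(pass_at_k(n=2, c=1, k=2))  # 1.0
```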

## Limitations and bias

As per the warning included in the metric code itself:

> This program exists to execute untrusted model-generated code. Although it is highly unlikely that model-generated code will do something overtly malicious in response to this test suite, model-generated code may act destructively due to a lack of model capability or alignment. Users are strongly encouraged to sandbox this evaluation suite so that it does not perform destructive actions on their host or network. For more information on how OpenAI sandboxes its code, see the accompanying paper. Once you have read this disclaimer and taken appropriate precautions, uncomment the following line and proceed at your own risk:

More information about the limitations of the code can be found on the [Human Eval Github repository](https://github.com/openai/human-eval).

## Citation

```bibtex

@misc{chen2021evaluating,

title={Evaluating Large Language Models Trained on Code},

author={Mark Chen and Jerry Tworek and Heewoo Jun and Qiming Yuan \

and Henrique Ponde de Oliveira Pinto and Jared Kaplan and Harri Edwards \

and Yuri Burda and Nicholas Joseph and Greg Brockman and Alex Ray \

and Raul Puri and Gretchen Krueger and Michael Petrov and Heidy Khlaaf \

and Girish Sastry and Pamela Mishkin and Brooke Chan and Scott Gray \

and Nick Ryder and Mikhail Pavlov and Alethea Power and Lukasz Kaiser \

and Mohammad Bavarian and Clemens Winter and Philippe Tillet \

and Felipe Petroski Such and Dave Cummings and Matthias Plappert \

and Fotios Chantzis and Elizabeth Barnes and Ariel Herbert-Voss \

and William Hebgen Guss and Alex Nichol and Alex Paino and Nikolas Tezak \

and Jie Tang and Igor Babuschkin and Suchir Balaji and Shantanu Jain \

and William Saunders and Christopher Hesse and Andrew N. Carr \

and Jan Leike and Josh Achiam and Vedant Misra and Evan Morikawa \

and Alec Radford and Matthew Knight and Miles Brundage and Mira Murati \

and Katie Mayer and Peter Welinder and Bob McGrew and Dario Amodei \

and Sam McCandlish and Ilya Sutskever and Wojciech Zaremba},

year={2021},

eprint={2107.03374},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

## Further References

- [Human Eval Github repository](https://github.com/openai/human-eval)

- [OpenAI Codex website](https://openai.com/blog/openai-codex/)

| huggingface/datasets/blob/main/metrics/code_eval/README.md |

# Interface State

So far, we've assumed that your demos are *stateless*: that they do not persist information beyond a single function call. What if you want to modify the behavior of your demo based on previous interactions with the demo? There are two approaches in Gradio: *global state* and *session state*.

## Global State

If the state is something that should be accessible to all function calls and all users, you can create a variable outside the function call and access it inside the function. For example, you may load a large model outside the function and use it inside the function so that every function call does not need to reload the model.

$code_score_tracker

In the code above, the `scores` array is shared between all users. If multiple users are accessing this demo, their scores will all be added to the same list, and the returned top 3 scores will be collected from this shared reference.
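As a rough stand-in for the demo source, here is a minimal sketch of the global-state pattern without any Gradio wiring. The function name `track_score` is an assumption made for illustration; only the shared `scores` list and the top 3 behavior come from the description above.

```python
scores = []  # module-level list: shared by every call, and so by every user


def track_score(score: int) -> list:
    """Record a score and return the three highest seen so far."""
    scores.append(score)
    return sorted(scores, reverse=True)[:3]


track_score(5)
track_score(9)
track_score(2)
print(track_score(7))  # [9, 7, 5]
```

Because `scores` lives outside the function, every caller appends to the same list, which is exactly why this approach is unsuitable for per-user data.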

## Session State

Another type of data persistence Gradio supports is session state, where data persists across multiple submits within a page session. However, data is _not_ shared between different users of your model. To store data in a session state, you need to do three things:

1. Pass in an extra parameter into your function, which represents the state of the interface.

2. At the end of the function, return the updated value of the state as an extra return value.

3. Add the `'state'` input and `'state'` output components when creating your `Interface`
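The three steps above can be sketched with a plain function, shown here without launching a UI. `count_submits` is a hypothetical handler, and the `Interface` wiring in the final comment is an assumed form of step 3 that is not executed in this sketch.

```python
def count_submits(text, state=None):
    """Hypothetical handler: `state` is the extra parameter from step 1."""
    count = (state or 0) + 1  # no state yet on the first call of a session
    reply = f"{text} (submit #{count})"
    return reply, count  # step 2: return the updated state as an extra value


# Simulating two submits from one session by passing the state back in.
reply, state = count_submits("hello")
reply, state = count_submits("again", state)
print(reply)  # again (submit #2)

# Step 3 (assumed wiring, not run here):
# gr.Interface(count_submits, ["text", "state"], ["text", "state"])
```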

A chatbot is an example where you would need session state: you want access to a user's previous submissions, but you cannot store chat history in a global variable, because then chat history would get jumbled between different users.

$code_chatbot_dialogpt

$demo_chatbot_dialogpt

Notice how the state persists across submits within each page, but if you load this demo in another tab (or refresh the page), the demos will not share chat history.

The default value of `state` is None. If you pass a default value to the state parameter of the function, it is used as the default value of the state instead. The `Interface` class only supports a single input and a single output state variable, though it can be a list with multiple elements. For more complex use cases, you can use Blocks, [which supports multiple `State` variables](/guides/state-in-blocks/).

| gradio-app/gradio/blob/main/guides/02_building-interfaces/03_interface-state.md |

# Hands-on [[hands-on]]

Now that you've learned to use Optuna, here are some ideas to apply what you've learned:

1️⃣ **Beat your LunarLander-v2 agent results**, by using Optuna to find a better set of hyperparameters. You can also try with another environment, such as MountainCar-v0 and CartPole-v1.

2️⃣ **Beat your SpaceInvaders agent results**.

By doing this, you'll see how valuable and powerful Optuna can be in training better agents.

Have fun!

Finally, we would love **to hear what you think of the course and how we can improve it**. If you have some feedback, then please 👉 [fill out this form](https://forms.gle/BzKXWzLAGZESGNaE9).

### Keep Learning, stay awesome 🤗

| huggingface/deep-rl-class/blob/main/units/en/unitbonus2/hands-on.mdx |

---

title: "Creating open machine learning datasets? Share them on the Hugging Face Hub!"

thumbnail: /blog/assets/researcher-dataset-sharing/thumbnail.png

authors:

- user: davanstrien

---

# Creating open machine learning datasets? Share them on the Hugging Face Hub!

## Who is this blog post for?

Are you a researcher doing data-intensive research or using machine learning as a research tool? As part of this research, you have likely created datasets for training and evaluating machine learning models, and like many researchers, you may be sharing these datasets via Google Drive, OneDrive, or your own personal server. In this post, we’ll outline why you might want to consider sharing these datasets on the Hugging Face Hub instead.

This post outlines:

- Why researchers should openly share their data (feel free to skip this section if you are already convinced about this!)

- What the Hugging Face Hub offers for researchers who want to share their datasets.

- Resources for getting started with sharing your datasets on the Hugging Face Hub.

## Why share your data?

Machine learning is increasingly utilized across various disciplines, enhancing research efficiency in tackling diverse problems. Data remains crucial for training and evaluating models, especially when developing new machine-learning methods for specific tasks or domains. Large Language Models may not perform well on specialized tasks like bio-medical entity extraction, and computer vision models might struggle with classifying domain-specific images.

Domain-specific datasets are vital for evaluating and training machine learning models, helping to overcome the limitations of existing models. Creating these datasets, however, is challenging, requiring significant time, resources, and domain expertise, particularly for annotating data. Maximizing the impact of this data is crucial for the benefit of both the researchers involved and their respective fields.

The Hugging Face Hub can help achieve this maximum impact.

## What is the Hugging Face Hub?

The [Hugging Face Hub](https://huggingface.co/) has become the central hub for sharing open machine learning models, datasets and demos, hosting over 360,000 models and 70,000 datasets. The Hub enables people – including researchers – to access state-of-the-art machine learning models and datasets in a few lines of code.

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/researcher-dataset-sharing/hub-datasets.png" alt="Screenshot of datasets in the Hugging Face Hub"><br>

<em>Datasets on the Hugging Face Hub.</em>

</p>

## What does the Hugging Face Hub offer for data sharing?

This blog post won’t cover all of the features and benefits of hosting datasets on the Hugging Face Hub but will instead highlight some that are particularly relevant for researchers.

### Visibility for your work

The Hugging Face Hub has become the central hub for people to collaborate on open machine learning. Making your datasets available via the Hugging Face Hub ensures they are visible to a wide audience of machine learning researchers. The Hub makes it possible to expose links between datasets, models and demos, which makes it easier to see how people are using your datasets for training models and creating demos.

### Tools for exploring and working with datasets

There are a growing number of tools being created which make it easier to understand datasets hosted on the Hugging Face Hub.

### Tools for loading datasets hosted on the Hugging Face Hub

Datasets shared on the Hugging Face Hub can be loaded via a variety of tools. The [`datasets`](https://huggingface.co/docs/datasets/) library is a Python library which can directly load datasets from the Hugging Face Hub via a `load_dataset` command. The `datasets` library is optimized for working with large datasets (including datasets which won't fit into memory) and supporting machine learning workflows.

Alongside this, many of the datasets on the Hub can also be loaded directly into [`Pandas`](https://pandas.pydata.org/), [`Polars`](https://www.pola.rs/), and [`DuckDB`](https://duckdb.org/). This [page](https://huggingface.co/docs/datasets-server/parquet_process) provides a more detailed overview of the different ways you can load datasets from the Hub.

#### Datasets Viewer

The datasets viewer allows people to explore and interact with datasets hosted on the Hub directly in the browser by visiting the dataset repository on the Hugging Face Hub. This makes it much easier for others to view and explore your data without first having to download it. The datasets viewer also allows you to search and filter datasets, which helps potential dataset users understand the nature of a dataset more quickly.

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/researcher-dataset-sharing/datasets-viewer.png" alt="Screenshot of a dataset viewer on the Hub showing a named entity recognition dataset"><br>

<em>The dataset viewer for the multiconer_v2 Named Entity Recognition dataset.</em>

</p>

### Community tools

Alongside the datasets viewer, there are a growing number of community-created tools for exploring datasets on the Hub.

#### Spotlight

[`Spotlight`](https://github.com/Renumics/spotlight) is a tool that allows you to interactively explore datasets on the Hub with one line of code.

<p align="center"><a href="https://github.com/Renumics/spotlight"><img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/scalable-data-inspection/speech_commands_vis_s.gif" width="100%"/></a></p>

You can learn more about how you can use this tool in this [blog post](https://huggingface.co/blog/scalable-data-inspection).

#### Lilac

[`Lilac`](https://lilacml.com/) is a tool that aims to help you "curate better data for LLMs" and allows you to explore natural language datasets more easily. The tool allows you to semantically search your dataset (search by meaning), cluster data and gain high-level insights into your dataset.

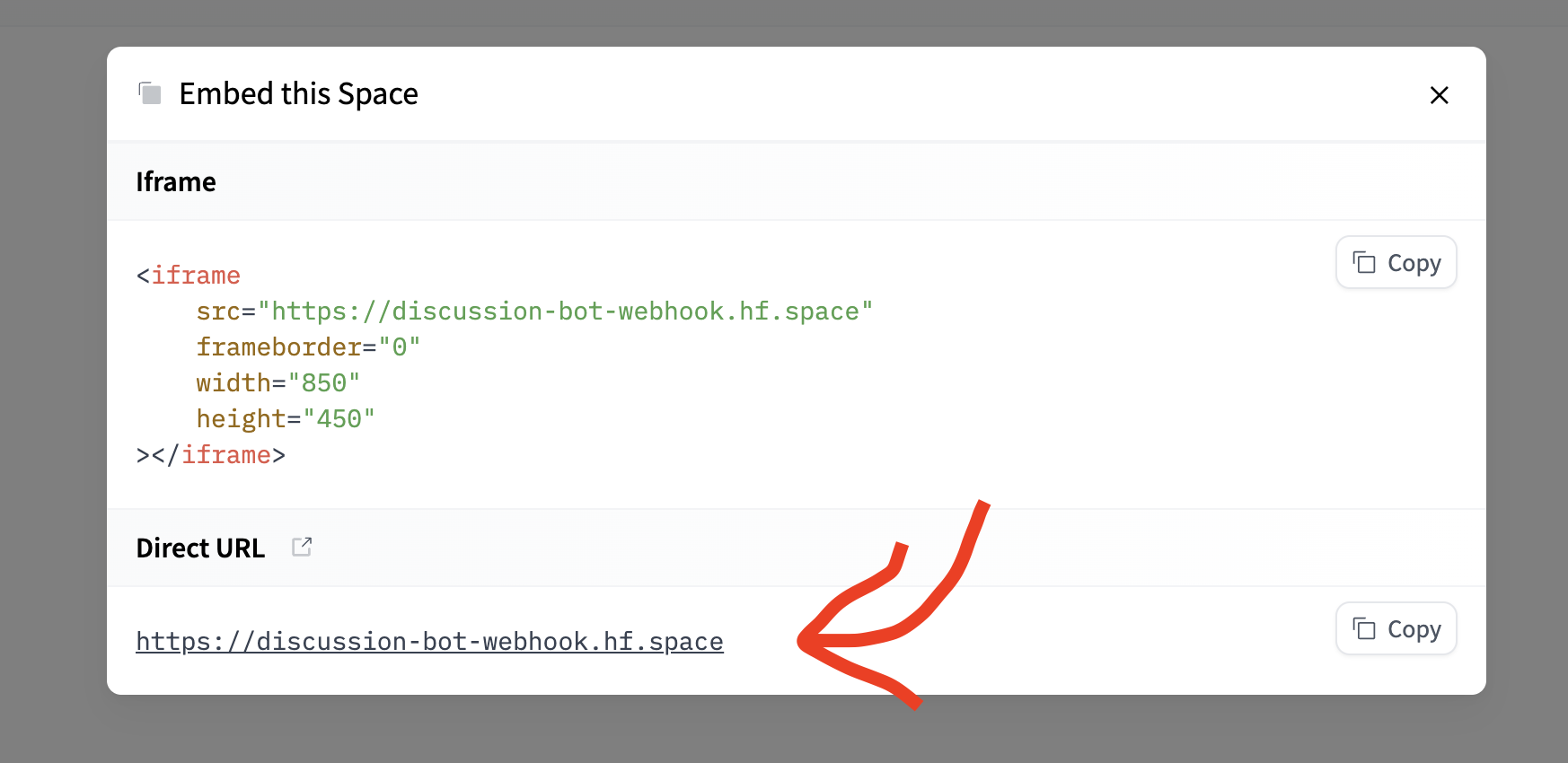

<div style="text-align: center;">

<iframe

src="https://lilacai-lilac.hf.space"

frameborder="0"

width="850"

height="450"

></iframe>

<em>A Spaces demo of the lilac tool.</em>

</div>

You can explore the `Lilac` tool further in a [demo](https://lilacai-lilac.hf.space/).

This growing number of tools for exploring datasets on the Hub makes it easier for people to explore and understand your datasets and can help promote your datasets to a wider audience.

### Support for large datasets

The Hub can host large datasets; it currently hosts datasets with multiple TBs of data. The `datasets` library, which users can use to download and process datasets from the Hub, supports streaming, making it possible to work with large datasets without downloading the entire dataset upfront. This can be invaluable for allowing researchers with fewer computational resources to work with your datasets, or to select small portions of a huge dataset for testing, development or prototyping.

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/researcher-dataset-sharing/filesize.png" alt="Screenshot of the file size information for a dataset"><br>

<em>The Hugging Face Hub can host the large datasets often created for machine learning research.</em>

</p>

## API and client library interaction with the Hub

You can interact with the Hugging Face Hub via an [API](https://huggingface.co/docs/hub/api) or the [`huggingface_hub`](https://huggingface.co/docs/huggingface_hub/index) Python library. This includes creating new repositories, uploading data programmatically, and creating and modifying metadata for datasets. This can be powerful for research workflows where new data or annotations continue to be created. The client library also makes uploading large datasets much more accessible.

## Community

The Hugging Face Hub is already home to a large community of researchers, developers, artists, and others interested in using and contributing to an ecosystem of open-source machine learning. Making your datasets accessible to this community increases their visibility, opens them up to new types of users and places your datasets within the context of a larger ecosystem of models, datasets and libraries.

The Hub also has features which allow communities to collaborate more easily. This includes a discussion page for each dataset, model and Space hosted on the Hub. This means users of your datasets can quickly ask questions and discuss ideas for working with a dataset.

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/researcher-dataset-sharing/discussion.png" alt="Screenshot of a discussion for a dataset on the Hub."><br>

<em>The Hub makes it easy to ask questions and discuss datasets.</em>

</p>

### Other important features for researchers

Some other features of the Hub may be of particular interest to researchers wanting to share their machine learning datasets on the Hub:

- [Organizations](https://huggingface.co/organizations) allow you to collaborate with other people and share models, datasets and demos under a single organization. This can be an excellent way of highlighting the work of a particular research project or institute.

- [Gated repositories](https://huggingface.co/docs/hub/datasets-gated) allow you to add some access restrictions to accessing your dataset.

- Download metrics are available for datasets on the Hub; this can be useful for communicating the impact of your research to funders and hiring committees.

- [Digital Object Identifiers (DOI)](https://huggingface.co/docs/hub/doi): it’s possible to register a persistent identifier for your dataset.

### How can I share my dataset on the Hugging Face Hub?

Here are some resources to help you get started with sharing your datasets on the Hugging Face Hub:

- General guidance on [creating](https://huggingface.co/docs/datasets/create_dataset) and [sharing datasets on the Hub](https://huggingface.co/docs/datasets/upload_dataset)

- Guides for particular modalities:

  - Creating an [audio dataset](https://huggingface.co/docs/datasets/audio_dataset)

  - Creating an [image dataset](https://huggingface.co/docs/datasets/image_dataset)

- Guidance on [structuring your repository](https://huggingface.co/docs/datasets/repository_structure) so a dataset can be automatically loaded from the Hub.

The following pages will be useful if you want to share large datasets:

- [Repository limitations and recommendations](https://huggingface.co/docs/hub/repositories-recommendations) provides general guidance on some of the considerations you'll want to make when sharing large datasets.

- The [Tips and tricks for large uploads](https://huggingface.co/docs/huggingface_hub/guides/upload#tips-and-tricks-for-large-uploads) page provides some guidance on how to upload large datasets to the Hub.

If you want any further help uploading a dataset to the Hub or want to upload a particularly large dataset, please contact datasets@huggingface.co.

| huggingface/blog/blob/main/researcher-dataset-sharing.md |

# Gradio Demo: tabbed_interface_lite

```

!pip install -q gradio

```

```

import gradio as gr

hello_world = gr.Interface(lambda name: "Hello " + name, "text", "text")

bye_world = gr.Interface(lambda name: "Bye " + name, "text", "text")

demo = gr.TabbedInterface([hello_world, bye_world], ["Hello World", "Bye World"])

if __name__ == "__main__":

    demo.launch()

```

| gradio-app/gradio/blob/main/demo/tabbed_interface_lite/run.ipynb |

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

# Installation

🤗 Diffusers is tested on Python 3.8+, PyTorch 1.7.0+, and Flax. Follow the installation instructions below for the deep learning library you are using:

- [PyTorch](https://pytorch.org/get-started/locally/) installation instructions

- [Flax](https://flax.readthedocs.io/en/latest/) installation instructions

## Install with pip

You should install 🤗 Diffusers in a [virtual environment](https://docs.python.org/3/library/venv.html).

If you're unfamiliar with Python virtual environments, take a look at this [guide](https://packaging.python.org/guides/installing-using-pip-and-virtual-environments/).

A virtual environment makes it easier to manage different projects and avoid compatibility issues between dependencies.

Start by creating a virtual environment in your project directory:

```bash

python -m venv .env

```

Activate the virtual environment:

```bash

source .env/bin/activate

```

You should also install 🤗 Transformers because 🤗 Diffusers relies on its models:

<frameworkcontent>

<pt>

```bash

pip install diffusers["torch"] transformers

```

</pt>

<jax>

```bash

pip install diffusers["flax"] transformers

```

</jax>

</frameworkcontent>

## Install with conda

After activating your virtual environment, with `conda` (maintained by the community):

```bash

conda install -c conda-forge diffusers

```

## Install from source

Before installing 🤗 Diffusers from source, make sure you have PyTorch and 🤗 Accelerate installed.

To install 🤗 Accelerate:

```bash

pip install accelerate

```

Then install 🤗 Diffusers from source:

```bash

pip install git+https://github.com/huggingface/diffusers

```

This command installs the bleeding edge `main` version rather than the latest `stable` version.

The `main` version is useful for staying up-to-date with the latest developments.

This helps, for instance, if a bug has been fixed since the last official release but a new release hasn't been rolled out yet.

However, this means the `main` version may not always be stable.

We strive to keep the `main` version operational, and most issues are usually resolved within a few hours or a day.

If you run into a problem, please open an [Issue](https://github.com/huggingface/diffusers/issues/new/choose) so we can fix it even sooner!

## Editable install

You will need an editable install if you'd like to:

* Use the `main` version of the source code.

* Contribute to 🤗 Diffusers and need to test changes in the code.

Clone the repository and install 🤗 Diffusers with the following commands:

```bash

git clone https://github.com/huggingface/diffusers.git

cd diffusers

```

<frameworkcontent>

<pt>

```bash

pip install -e ".[torch]"

```

</pt>

<jax>

```bash

pip install -e ".[flax]"

```

</jax>

</frameworkcontent>

These commands will link the folder you cloned the repository to and your Python library paths.

Python will now look inside the folder you cloned to in addition to the normal library paths.

For example, if your Python packages are typically installed in `~/anaconda3/envs/main/lib/python3.8/site-packages/`, Python will also search the `~/diffusers/` folder you cloned to.

<Tip warning={true}>

You must keep the `diffusers` folder if you want to keep using the library.

</Tip>

Now you can easily update your clone to the latest version of 🤗 Diffusers with the following command:

```bash

cd ~/diffusers/

git pull

```

Your Python environment will find the `main` version of 🤗 Diffusers on the next run.

## Cache

Model weights and files are downloaded from the Hub to a cache, which is usually located in your home directory. You can change the cache location by setting the `HF_HOME` or `HUGGINGFACE_HUB_CACHE` environment variable, or by passing the `cache_dir` parameter to methods like [`~DiffusionPipeline.from_pretrained`].

Cached files allow you to run 🤗 Diffusers offline. To prevent 🤗 Diffusers from connecting to the internet, set the `HF_HUB_OFFLINE` environment variable to `True` and 🤗 Diffusers will only load previously downloaded files in the cache.

```shell

export HF_HUB_OFFLINE=True

```
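The same settings can also be applied from Python, which can be convenient in a notebook. This is a minimal sketch under two assumptions: the cache path is a made-up example, and the variables are set before `diffusers` or `huggingface_hub` is imported, since these libraries typically read them at import time.

```python
import os

# Hypothetical cache location; replace it with a directory of your choice.
os.environ["HF_HOME"] = os.path.expanduser("~/my_hf_cache")

# Only load files that are already in the cache (no network requests).
os.environ["HF_HUB_OFFLINE"] = "True"

# Import diffusers only after this point so that the settings are picked up.
print(os.environ["HF_HOME"], os.environ["HF_HUB_OFFLINE"])
```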

For more details about managing and cleaning the cache, take a look at the [caching](https://huggingface.co/docs/huggingface_hub/guides/manage-cache) guide.

## Telemetry logging

Our library gathers telemetry information during [`~DiffusionPipeline.from_pretrained`] requests.

The data gathered includes the version of 🤗 Diffusers and PyTorch/Flax, the requested model or pipeline class,

and the path to a pretrained checkpoint if it is hosted on the Hugging Face Hub.

This usage data helps us debug issues and prioritize new features.

Telemetry is only sent when loading models and pipelines from the Hub,

and it is not collected if you're loading local files.

We understand that not everyone wants to share additional information, and we respect your privacy.

You can disable telemetry collection by setting the `DISABLE_TELEMETRY` environment variable from your terminal:

On Linux/macOS:

```bash

export DISABLE_TELEMETRY=YES

```

On Windows:

```bash

set DISABLE_TELEMETRY=YES

```

| huggingface/diffusers/blob/main/docs/source/en/installation.md |

# Gradio Demo: theme_extended_step_1

```

!pip install -q gradio

```

```

import gradio as gr
import time

with gr.Blocks(theme=gr.themes.Default(primary_hue="red", secondary_hue="pink")) as demo:
    textbox = gr.Textbox(label="Name")
    slider = gr.Slider(label="Count", minimum=0, maximum=100, step=1)
    with gr.Row():
        button = gr.Button("Submit", variant="primary")
        clear = gr.Button("Clear")
    output = gr.Textbox(label="Output")

    def repeat(name, count):
        time.sleep(3)
        return name * count

    button.click(repeat, [textbox, slider], output)

if __name__ == "__main__":
    demo.launch()

```

| gradio-app/gradio/blob/main/demo/theme_extended_step_1/run.ipynb |

---

title: "Assisted Generation: a new direction toward low-latency text generation"

thumbnail: /blog/assets/assisted-generation/thumbnail.png

authors:

- user: joaogante

---

# Assisted Generation: a new direction toward low-latency text generation

Large language models are all the rage these days, with many companies investing significant resources to scale them up and unlock new capabilities. However, as humans with ever-decreasing attention spans, we also dislike their slow response times. Latency is critical for a good user experience, and smaller models are often used despite their lower quality (e.g. in [code completion](https://ai.googleblog.com/2022/07/ml-enhanced-code-completion-improves.html)).

Why is text generation so slow? What’s preventing you from deploying low-latency large language models without going bankrupt? In this blog post, we will revisit the bottlenecks for autoregressive text generation and introduce a new decoding method to tackle the latency problem. You’ll see that by using our new method, assisted generation, you can reduce latency by up to 10x on commodity hardware!

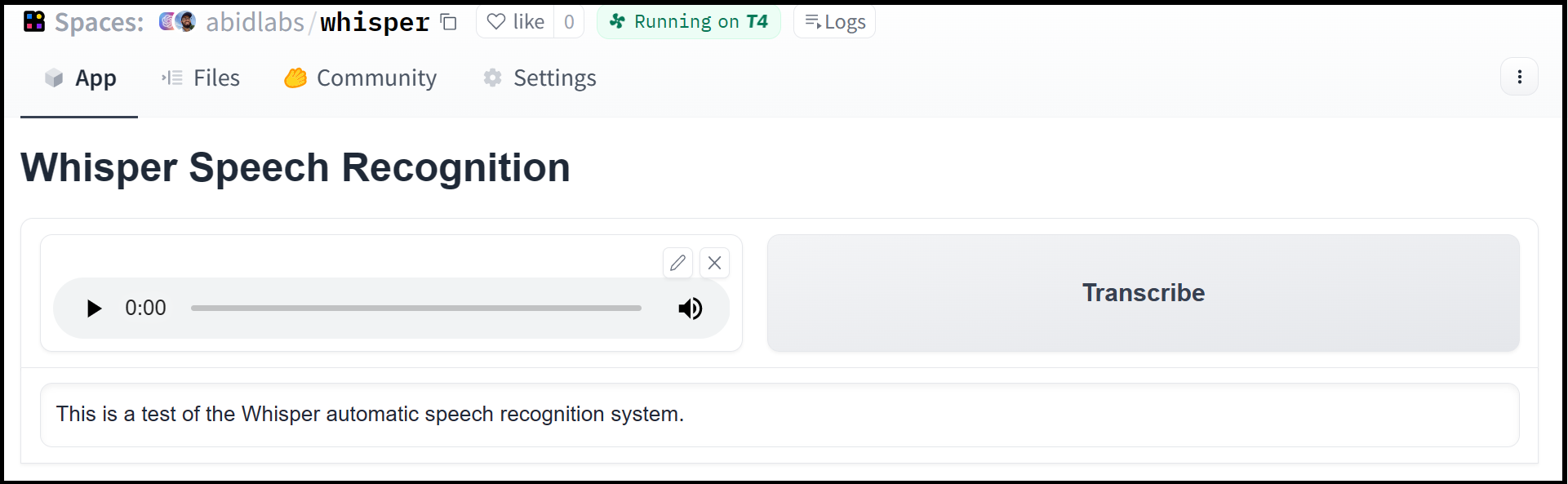

## Understanding text generation latency

The core of modern text generation is straightforward to understand. Let’s look at the central piece, the ML model. Its input contains a text sequence, which includes the text generated so far, and potentially other model-specific components (for instance, Whisper also has an audio input). The model takes the input and runs a forward pass: the input is fed to the model and passed sequentially along its layers until the unnormalized log probabilities for the next token are predicted (also known as logits). A token may consist of entire words, sub-words, or even individual characters, depending on the model. The [illustrated GPT-2](https://jalammar.github.io/illustrated-gpt2/) is a great reference if you’d like to dive deeper into this part of text generation.

<!-- [GIF 1 -- FWD PASS] -->

<figure class="image table text-center m-0 w-full">

<video

style="max-width: 90%; margin: auto;"

autoplay loop muted playsinline

src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/assisted-generation/gif_1_1080p.mov"

></video>

</figure>

A model forward pass gets you the logits for the next token, which you can freely manipulate (e.g. set the probability of undesirable words or sequences to 0). The following step in text generation is to select the next token from these logits. Common strategies include picking the most likely token, known as greedy decoding, or sampling from their distribution, also called multinomial sampling. Chaining model forward passes with next token selection iteratively gets you text generation. This explanation is the tip of the iceberg when it comes to decoding methods; please refer to [our blog post on text generation](https://huggingface.co/blog/how-to-generate) for an in-depth exploration.
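To make the token selection step concrete, here is a small sketch in plain Python. The vocabulary and logits are made up for illustration, and `softmax` is a helper defined here rather than a library call. It shows greedy decoding, multinomial sampling, and how setting a logit to negative infinity gives a token zero probability.

```python
import math
import random


def softmax(logits):
    """Turn unnormalized log probabilities (logits) into a probability distribution."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy vocabulary and made-up logits for the next token.
vocab = ["cat", "dog", "bird", "fish"]
logits = [2.0, 1.0, 0.5, -1.0]
probs = softmax(logits)

# Greedy decoding: always pick the most likely token.
greedy_token = vocab[max(range(len(probs)), key=probs.__getitem__)]
print(greedy_token)  # cat

# Multinomial sampling: draw a token according to the distribution.
rng = random.Random(0)
sampled_token = rng.choices(vocab, weights=probs, k=1)[0]
print(sampled_token)

# Logit manipulation: ban "cat" by setting its logit to -inf (probability 0).
banned_logits = [float("-inf") if tok == "cat" else x for tok, x in zip(vocab, logits)]
banned_probs = softmax(banned_logits)
banned_greedy = vocab[max(range(len(banned_probs)), key=banned_probs.__getitem__)]
print(banned_greedy)  # dog
```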

<!-- [GIF 2 -- TEXT GENERATION] -->

<figure class="image table text-center m-0 w-full">

<video

style="max-width: 90%; margin: auto;"

autoplay loop muted playsinline

src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/assisted-generation/gif_2_1080p.mov"

></video>

</figure>

From the description above, the latency bottleneck in text generation is clear: running a model forward pass for large models is slow, and you may need to do hundreds of them in a sequence. But let’s dive deeper: why are forward passes slow? Forward passes are typically dominated by matrix multiplications and, after a quick visit to the [corresponding wikipedia section](https://en.wikipedia.org/wiki/Matrix_multiplication_algorithm#Communication-avoiding_and_distributed_algorithms), you can tell that memory bandwidth is the limitation in this operation (e.g. from the GPU RAM to the GPU compute cores). In other words, *the bottleneck in the forward pass comes from loading the model layer weights into the computation cores of your device, not from performing the computations themselves*.
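A back-of-the-envelope calculation illustrates the point. The numbers below are illustrative assumptions, not measurements: a hypothetical 7B-parameter model stored in 16-bit precision and a device with roughly 900 GB/s of memory bandwidth. If every weight has to be read once per forward pass, the time to read them sets a floor on the latency of each generated token.

```python
# Rough lower bound on per-token latency when the forward pass is limited by
# reading the weights from device memory. All values are assumed for illustration.
num_parameters = 7e9        # hypothetical 7B-parameter model
bytes_per_parameter = 2     # 16-bit weights
memory_bandwidth = 900e9    # bytes per second (assumed device bandwidth)

weight_bytes = num_parameters * bytes_per_parameter
seconds_per_forward_pass = weight_bytes / memory_bandwidth

print(f"Weights: {weight_bytes / 1e9:.0f} GB")
print(f"Time to read the weights once: {seconds_per_forward_pass * 1e3:.1f} ms")
print(f"Upper bound on tokens per second at batch size 1: {1 / seconds_per_forward_pass:.0f}")
```

Under these assumptions, reading the weights alone takes about 15.6 ms per forward pass, which caps generation at roughly 64 tokens per second regardless of how fast the compute cores are.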

At the moment, you have three main avenues you can explore to get the most out of text generation, all tackling the performance of the model forward pass. First, you have the hardware-specific model optimizations. For instance, your device may be compatible with [Flash Attention](https://github.com/HazyResearch/flash-attention), which speeds up the attention layer through a reorder of the operations, or [INT8 quantization](https://huggingface.co/blog/hf-bitsandbytes-integration), which reduces the size of the model weights.

Second, when you know you’ll get concurrent text generation requests, you can batch the inputs and massively increase the throughput with a small latency penalty. The model layer weights loaded into the device are now used on several input rows in parallel, which means that you’ll get more tokens out for approximately the same memory bandwidth burden. The catch with batching is that you need additional device memory (or to offload the memory somewhere) – at the end of this spectrum, you can see projects like [FlexGen](https://github.com/FMInference/FlexGen) which optimize throughput at the expense of latency.

```python

# Example showcasing the impact of batched generation. Measurement device: RTX3090
from transformers import AutoModelForCausalLM, AutoTokenizer
import time

tokenizer = AutoTokenizer.from_pretrained("distilgpt2")
model = AutoModelForCausalLM.from_pretrained("distilgpt2").to("cuda")
inputs = tokenizer(["Hello world"], return_tensors="pt").to("cuda")


def print_tokens_per_second(batch_size):
    new_tokens = 100
    cumulative_time = 0

    # warmup
    model.generate(
        **inputs, do_sample=True, max_new_tokens=new_tokens, num_return_sequences=batch_size
    )

    for _ in range(10):
        start = time.time()
        model.generate(
            **inputs, do_sample=True, max_new_tokens=new_tokens, num_return_sequences=batch_size
        )
        cumulative_time += time.time() - start
    print(f"Tokens per second: {new_tokens * batch_size * 10 / cumulative_time:.1f}")


print_tokens_per_second(1)   # Tokens per second: 418.3
print_tokens_per_second(64)  # Tokens per second: 16266.2 (~39x more tokens per second)

```

Finally, if you have multiple devices available to you, you can distribute the workload using [Tensor Parallelism](https://huggingface.co/docs/transformers/main/en/perf_train_gpu_many#tensor-parallelism) and obtain lower latency. With Tensor Parallelism, you split the memory bandwidth burden across multiple devices, but you now have to consider inter-device communication bottlenecks in addition to the monetary cost of running multiple devices. The benefits depend largely on the model size: models that easily fit on a single consumer device see very limited benefits. Taking the results from this [DeepSpeed blog post](https://www.microsoft.com/en-us/research/blog/deepspeed-accelerating-large-scale-model-inference-and-training-via-system-optimizations-and-compression/), you see that you can spread a 17B parameter model across 4 GPUs to reduce the latency by 1.5x (Figure 7).

These three types of improvements can be used in tandem, resulting in [high throughput solutions](https://github.com/huggingface/text-generation-inference). However, after applying hardware-specific optimizations, there are limited options to reduce latency – and the existing options are expensive. Let’s fix that!

## Language decoder forward pass, revisited

You’ve read above that each model forward pass yields the logits for the next token, but that’s actually an incomplete description. During text generation, the typical iteration consists of the model receiving the latest generated token as input, plus cached internal computations for all previous inputs, and returning the next token logits. Caching is used to avoid redundant computations, resulting in faster forward passes, but it’s not mandatory (and can be used partially). When caching is disabled, the input contains the entire sequence of tokens generated so far and the output contains the logits corresponding to the next token for *all positions* in the sequence! The logits at position N correspond to the distribution for the next token if the input consisted of the first N tokens, ignoring all subsequent tokens in the sequence. In the particular case of greedy decoding, if you pass the generated sequence as input and apply the argmax operator to the resulting logits, you will obtain the generated sequence back.

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

tok = AutoTokenizer.from_pretrained("distilgpt2")

model = AutoModelForCausalLM.from_pretrained("distilgpt2")

inputs = tok(["The"], return_tensors="pt")

generated = model.generate(**inputs, do_sample=False, max_new_tokens=10)

forward_confirmation = model(generated).logits.argmax(-1)

# We exclude the opposing tips from each sequence: the forward pass returns

# the logits for the next token, so it is shifted by one position.

print(generated[0, 1:].tolist() == forward_confirmation[0, :-1].tolist()) # True

```

This means that you can use a model forward pass for a different purpose: in addition to feeding some tokens to predict the next one, you can also pass a sequence to the model and double-check whether the model would generate that same sequence (or part of it).

<!-- [GIF 3 -- FWD CONFIRMATION] -->

<figure class="image table text-center m-0 w-full">

<video

style="max-width: 90%; margin: auto;"

autoplay loop muted playsinline

src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/assisted-generation/gif_3_1080p.mov"

></video>

</figure>

Let’s consider for a second that you have access to a magical latency-free oracle model that generates the same sequence as your model, for any given input. For argument’s sake, it can’t be used directly, it’s limited to being an assistant to your generation procedure. Using the property described above, you could use this assistant model to get candidate output tokens followed by a forward pass with your model to confirm that they are indeed correct. In this utopian scenario, the latency of text generation would be reduced from `O(n)` to `O(1)`, with `n` being the number of generated tokens. For long generations, we're talking about several orders of magnitude.

Walking a step towards reality, let's assume the assistant model has lost its oracle properties. Now it’s a latency-free model that gets some of the candidate tokens wrong, according to your model. Due to the autoregressive nature of the task, as soon as the assistant gets a token wrong, all subsequent candidates must be invalidated. However, that does not prevent you from querying the assistant again, after correcting the wrong token with your model, and repeating this process iteratively. Even if the assistant fails a few tokens, text generation would have an order of magnitude less latency than in its original form.

Obviously, there are no latency-free assistant models. Nevertheless, it is relatively easy to find a model that approximates some other model’s text generation outputs – smaller versions of the same architecture trained similarly often fit this property. Moreover, when the difference in model sizes becomes significant, the cost of using the smaller model as an assistant becomes an afterthought after factoring in the benefits of skipping a few forward passes! You now understand the core of _assisted generation_.

## Greedy decoding with assisted generation

Assisted generation is a balancing act. You want the assistant to quickly generate a candidate sequence while being as accurate as possible. If the assistant has poor quality, you get the cost of using the assistant model with little to no benefits. On the other hand, optimizing the quality of the candidate sequences may imply the use of slow assistants, resulting in a net slowdown. While we can't automate the selection of the assistant model for you, we’ve included an additional requirement and a heuristic to ensure the time spent with the assistant stays in check.

First, the requirement – the assistant must have the exact same tokenizer as your model. If this requirement were not in place, expensive token decoding and re-encoding steps would have to be added. Furthermore, these additional steps would have to happen on the CPU, which in turn may need slow inter-device data transfers. Fast usage of the assistant is critical for the benefits of assisted generation to show up.

Finally, the heuristic. By this point, you have probably noticed the similarities between the movie Inception and assisted generation – you are, after all, running text generation inside text generation. There will be one assistant model forward pass per candidate token, and we know that forward passes are expensive. While you can’t know in advance the number of tokens that the assistant model will get right, you can keep track of this information and use it to limit the number of candidate tokens requested to the assistant – some sections of the output are easier to anticipate than others.

Wrapping it all up, here’s our original implementation of the assisted generation loop ([code](https://github.com/huggingface/transformers/blob/849367ccf741d8c58aa88ccfe1d52d8636eaf2b7/src/transformers/generation/utils.py#L4064)):

1. Use greedy decoding to generate a certain number of candidate tokens with the assistant model, producing `candidates`. The number of produced candidate tokens is initialized to `5` the first time assisted generation is called.

2. Using our model, do a forward pass with `candidates`, obtaining `logits`.

3. Use the token selection method (`.argmax()` for greedy search or `.multinomial()` for sampling) to get the `next_tokens` from `logits`.

4. Compare `next_tokens` to `candidates` and get the number of matching tokens. Remember that this comparison has to be done with left-to-right causality: after the first mismatch, all candidates are invalidated.

5. Use the number of matches to slice things up and discard variables related to unconfirmed candidate tokens. In essence, in `next_tokens`, keep the matching tokens plus the first divergent token (which our model generates from a valid candidate subsequence).

6. Adjust the number of candidate tokens to be produced in the next iteration — our original heuristic increases it by `2` if ALL tokens match and decreases it by `1` otherwise.
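The six steps above can be sketched in plain Python with toy stand-ins for the two models. Everything here is hypothetical and for illustration only: `model_next` and `assistant_next` are made-up deterministic functions over integer tokens, and `model_forward` simulates what a single forward pass returns (the model's greedy pick at every position). It is not the 🤗 Transformers implementation.

```python
def model_next(seq):
    """Toy 'large model': greedy next token for a sequence of integer tokens."""
    return (7 * sum(seq) + len(seq)) % 10


def assistant_next(seq):
    """Toy 'assistant': agrees with the model except when len(seq) is a multiple of 4."""
    token = model_next(seq)
    return (token + 1) % 10 if len(seq) % 4 == 0 else token


def model_forward(seq):
    """Simulates one forward pass: the model's greedy next token at every position."""
    return [model_next(seq[: i + 1]) for i in range(len(seq))]


def greedy_generate(prompt, max_new_tokens):
    """Plain greedy decoding: one model forward pass per new token."""
    seq = list(prompt)
    for _ in range(max_new_tokens):
        seq.append(model_next(seq))
    return seq


def assisted_generate(prompt, max_new_tokens, num_candidates=5):
    seq = list(prompt)  # the prompt must contain at least one token
    target_len = len(prompt) + max_new_tokens
    forward_passes = 0
    while len(seq) < target_len:
        # 1. The assistant greedily proposes candidate tokens.
        candidates = []
        for _ in range(num_candidates):
            candidates.append(assistant_next(seq + candidates))
        # 2-3. One forward pass of the model over prompt + candidates; the toy
        # forward pass already returns the selected (argmax) tokens.
        next_tokens = model_forward(seq + candidates)[len(seq) - 1 :]
        forward_passes += 1
        # 4. Count matches left to right; stop at the first mismatch.
        n_matches = 0
        while n_matches < len(candidates) and candidates[n_matches] == next_tokens[n_matches]:
            n_matches += 1
        # 5. Keep the matching tokens plus the first token chosen by the model.
        seq.extend(next_tokens[: n_matches + 1])
        # 6. Adjust the number of candidates for the next iteration.
        if n_matches == len(candidates):
            num_candidates += 2
        else:
            num_candidates = max(1, num_candidates - 1)
    return seq[:target_len], forward_passes


prompt = [1, 2, 3]
assisted_output, passes = assisted_generate(prompt, max_new_tokens=20)
print(assisted_output == greedy_generate(prompt, 20))  # True
print(passes)  # number of model forward passes used, fewer than 20
```

The output matches plain greedy decoding token for token, because every kept token is one the model itself selected from a confirmed prefix; the saving comes from needing fewer forward passes of the large model.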

<!-- [GIF 4 -- ASSISTED GENERATION] -->

<figure class="image table text-center m-0 w-full">

<video

style="max-width: 90%; margin: auto;"

autoplay loop muted playsinline

src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/assisted-generation/gif_4_1080p.mov"

></video>

</figure>

We’ve designed the API in 🤗 Transformers such that this process is hassle-free for you. All you need to do is to pass the assistant model under the new `assistant_model` keyword argument and reap the latency gains! At the time of the release of this blog post, assisted generation is limited to a batch size of `1`.

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

import torch

prompt = "Alice and Bob"

checkpoint = "EleutherAI/pythia-1.4b-deduped"

assistant_checkpoint = "EleutherAI/pythia-160m-deduped"

device = "cuda" if torch.cuda.is_available() else "cpu"

tokenizer = AutoTokenizer.from_pretrained(checkpoint)

inputs = tokenizer(prompt, return_tensors="pt").to(device)

model = AutoModelForCausalLM.from_pretrained(checkpoint).to(device)

assistant_model = AutoModelForCausalLM.from_pretrained(assistant_checkpoint).to(device)

outputs = model.generate(**inputs, assistant_model=assistant_model)

print(tokenizer.batch_decode(outputs, skip_special_tokens=True))

# ['Alice and Bob are sitting in a bar. Alice is drinking a beer and Bob is drinking a']

```

Is the additional internal complexity worth it? Let’s have a look at the latency numbers for the greedy decoding case (results for sampling are in the next section), considering a batch size of `1`. These results were pulled directly out of 🤗 Transformers without any additional optimizations, so you should be able to reproduce them in your setup.

<!-- [SPACE WITH GREEDY DECODING PERFORMANCE NUMBERS] -->

<script

type="module"

src="https://gradio.s3-us-west-2.amazonaws.com/3.28.2/gradio.js"

></script>

<gradio-app theme_mode="light" space="joaogante/assisted_generation_benchmarks"></gradio-app>

Glancing at the collected numbers, we see that assisted generation can deliver significant latency reductions in diverse settings, but it is not a silver bullet – you should benchmark it before applying it to your use case. We can conclude that assisted generation:

1. 🤏 Requires access to an assistant model that is at least an order of magnitude smaller than your model (the bigger the difference, the better);

2. 🚀 Gets up to 3x speedups in the presence of INT8 and up to 2x otherwise, when the model fits in the GPU memory;

3. 🤯 If you’re playing with models that do not fit in your GPU and are relying on memory offloading, you can see up to 10x speedups;

4. 📄 Shines in input-grounded tasks, like automatic speech recognition or summarization.

## Sample with assisted generation

Greedy decoding is suited for input-grounded tasks (automatic speech recognition, translation, summarization, ...) or factual knowledge-seeking. Open-ended tasks requiring large levels of creativity, such as most uses of a language model as a chatbot, should use sampling instead. Assisted generation is naturally designed for greedy decoding, but that doesn’t mean that you can’t use assisted generation with multinomial sampling!

Drawing samples from a probability distribution for the next token will cause our greedy assistant to fail more often, reducing its latency benefits. However, we can control how sharp the probability distribution for the next tokens is, using the temperature coefficient that’s present in most sampling-based applications. At one extreme, with temperatures close to 0, sampling will approximate greedy decoding, favoring the most likely token. At the other extreme, with the temperature set to values much larger than 1, sampling will be chaotic, drawing from a uniform distribution. Low temperatures are, therefore, more favorable to your assistant model, retaining most of the latency benefits from assisted generation, as we can see below.
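The effect of temperature on the sharpness of the distribution can be seen with a few lines of plain Python. The logits are made up for illustration, and `softmax_with_temperature` is a helper defined here, not a library function.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Softmax of logits / temperature; lower temperatures sharpen the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5, -1.0]  # made-up next-token logits
for temperature in (0.1, 1.0, 10.0):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

At a temperature of 0.1 nearly all of the probability mass sits on the most likely token, so sampling behaves almost like greedy decoding; at 10.0 the four probabilities are close to each other, so a greedy assistant will guess wrong far more often.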

<!-- [TEMPERATURE RESULTS, SHOW THAT LATENCY INCREASES STEADILY WITH TEMP] -->

<div align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/assisted-generation/temperature.png"/>

</div>

Why not try it yourself to get a feel for assisted generation?

<!-- [DEMO] -->

<gradio-app theme_mode="light" space="joaogante/assisted_generation_demo"></gradio-app>

## Future directions

Assisted generation shows that modern text generation strategies are ripe for optimization. Understanding that it is currently a memory-bound problem, not a compute-bound problem, allows us to apply simple heuristics to get the most out of the available memory bandwidth, alleviating the bottleneck. We believe that further refinement of the use of assistant models will get us even bigger latency reductions - for instance, we may be able to skip a few more forward passes if we request the assistant to generate several candidate continuations. Naturally, releasing high-quality small models to be used as assistants will be critical to realizing and amplifying the benefits.

Assisted generation was initially released in our 🤗 Transformers library, to be used with the `.generate()` function, and we expect to offer it throughout the Hugging Face universe. Its implementation is also completely open-source so, if you’re working on text generation and not using our tools, feel free to use it as a reference.

Finally, assisted generation resurfaces a crucial question in text generation. The field has been evolving with the constraint where all new tokens are the result of a fixed amount of compute, for a given model. One token per homogeneous forward pass, in pure autoregressive fashion. This blog post reinforces the idea that it shouldn’t be the case: large subsections of the generated output can also be equally generated by models that are a fraction of the size. For that, we’ll need new model architectures and decoding methods – we’re excited to see what the future holds!

## Related Work

After the original release of this blog post, it came to my attention that other works have explored the same core principle (use a forward pass to validate longer continuations). In particular, have a look at the following works:

- [Blockwise Parallel Decoding](https://proceedings.neurips.cc/paper/2018/file/c4127b9194fe8562c64dc0f5bf2c93bc-Paper.pdf), by Google Brain

- [Speculative Sampling](https://arxiv.org/abs/2302.01318), by DeepMind

## Citation

```bibtex

@misc {gante2023assisted,

author = { {Joao Gante} },

title = { Assisted Generation: a new direction toward low-latency text generation },

year = 2023,

url = { https://huggingface.co/blog/assisted-generation },

doi = { 10.57967/hf/0638 },

publisher = { Hugging Face Blog }

}

```

## Acknowledgements

I'd like to thank Sylvain Gugger, Nicolas Patry, and Lewis Tunstall for sharing many valuable suggestions to improve this blog post. Finally, kudos to Chunte Lee for designing the gorgeous cover you can see on our web page.

| huggingface/blog/blob/main/assisted-generation.md |

# Create Your Own Friends with a GAN

spaces/NimaBoscarino/cryptopunks, https://huggingface.co/spaces/nateraw/cryptopunks-generator

Tags: GAN, IMAGE, HUB

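Contributed by <a href="https://huggingface.co/NimaBoscarino">Nima Boscarino</a> and <a href="https://huggingface.co/nateraw">Nate Raw</a>

## Introduction

Cryptocurrencies, NFTs, and the Web3 movement all seem to be very popular these days! Digital assets are being listed on marketplaces for astounding amounts of money, and just about every celebrity is launching their own NFT collection. While your crypto assets [may be taxable, such as in Canada](https://www.canada.ca/en/revenue-agency/programs/about-canada-revenue-agency-cra/compliance/digital-currency/cryptocurrency-guide.html), today we'll explore some fun and tax-free ways to generate your own assortment of procedurally generated [CryptoPunks](https://www.larvalabs.com/cryptopunks).

Generative Adversarial Networks, often known simply as GANs, are a specific class of deep-learning models designed to learn from an input dataset in order to create (generate!) new material that is convincingly similar to elements of the original training set. Famously, the website [thispersondoesnotexist.com](https://thispersondoesnotexist.com/) went viral with lifelike, yet synthetic, images of people generated with a model called StyleGAN2. GANs have gained traction in the machine learning world, and are now being used to generate all sorts of images, text, and even music!

Today we'll briefly look at the high-level intuition behind GANs, and then we'll build a small demo around a pre-trained GAN to see what all the fuss is about. Here's a peek at what we're going to be putting together: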

<iframe src="https://nimaboscarino-cryptopunks.hf.space" frameBorder="0" height="855" title="Gradio app" class="container p-0 flex-grow space-iframe" allow="accelerometer; ambient-light-sensor; autoplay; battery; camera; document-domain; encrypted-media; fullscreen; geolocation; gyroscope; layout-animations; legacy-image-formats; magnetometer; microphone; midi; oversized-images; payment; picture-in-picture; publickey-credentials-get; sync-xhr; usb; vr ; wake-lock; xr-spatial-tracking" sandbox="allow-forms allow-modals allow-popups allow-popups-to-escape-sandbox allow-same-origin allow-scripts allow-downloads"></iframe>

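### Prerequisites

Make sure you have the `gradio` Python package already [installed](/getting_started). To use the pretrained model, also install `torch` and `torchvision`.

## GANs: A Brief Introduction

Originally proposed in [Goodfellow et al. 2014](https://arxiv.org/abs/1406.2661), GANs are made up of neural networks that compete with each other, each trying to outsmart the other. One network, known as the "generator", is responsible for generating images. The other network, the "discriminator", receives one image at a time from the generator along with a **real** image from the training dataset. The discriminator then has to guess: which image is the fake?

The generator is constantly training to create images that are harder for the discriminator to identify, while the discriminator raises the bar for the generator every time it correctly detects a fake. As the networks engage in this competitive (**adversarial!**) relationship, the generated images improve to the point where they become indistinguishable to the human eye!

For a more in-depth look at GANs, you can take a look at [this excellent post on Analytics Vidhya](https://www.analyticsvidhya.com/blog/2021/06/a-detailed-explanation-of-gan-with-implementation-using-tensorflow-and-keras/) or this [PyTorch tutorial](https://pytorch.org/tutorials/beginner/dcgan_faces_tutorial.html). For now, though, we'll dive into a demo!

## Step 1 - Create the Generator model

To generate new images with a GAN, you only need the generator model. There are many different architectures that the generator could use, but for this demo we'll use a pretrained GAN generator model with the following architecture:

```python
from torch import nn

class Generator(nn.Module):
    # Refer to the link below for explanations about nc, nz, and ngf
    # https://pytorch.org/tutorials/beginner/dcgan_faces_tutorial.html#inputs
    def __init__(self, nc=4, nz=100, ngf=64):
        super(Generator, self).__init__()
        self.network = nn.Sequential(
            nn.ConvTranspose2d(nz, ngf * 4, 3, 1, 0, bias=False),
            nn.BatchNorm2d(ngf * 4),
            nn.ReLU(True),
            nn.ConvTranspose2d(ngf * 4, ngf * 2, 3, 2, 1, bias=False),
            nn.BatchNorm2d(ngf * 2),
            nn.ReLU(True),
            nn.ConvTranspose2d(ngf * 2, ngf, 4, 2, 0, bias=False),
            nn.BatchNorm2d(ngf),
            nn.ReLU(True),
            nn.ConvTranspose2d(ngf, nc, 4, 2, 1, bias=False),
            nn.Tanh(),
        )

    def forward(self, input):
        output = self.network(input)
        return output
```

We're using the generator model from [this repo by @teddykoker](https://github.com/teddykoker/cryptopunks-gan/blob/main/train.py#L90), where you can also see the original discriminator model structure.

After instantiating the model, we'll load in the weights from the Hugging Face Hub, stored at [nateraw/cryptopunks-gan](https://huggingface.co/nateraw/cryptopunks-gan):

```python
from huggingface_hub import hf_hub_download
import torch

model = Generator()
weights_path = hf_hub_download('nateraw/cryptopunks-gan', 'generator.pth')
model.load_state_dict(torch.load(weights_path, map_location=torch.device('cpu'))) # Use 'cuda' if you have a GPU available
```

## Step 2 - Defining a `predict` function

The `predict` function is the key to making Gradio work! Whatever inputs we choose through the Gradio interface will get passed through our `predict` function, which should operate on the inputs and generate outputs that we can display with Gradio output components. For GANs it's common to pass random noise into our model as the input, so we'll generate a tensor of random numbers and pass that through the model. We can then use `torchvision`'s `save_image` function to save the output of the model as a `png` file, and return the file name:

```python
from torchvision.utils import save_image

def predict(seed):
    num_punks = 4
    torch.manual_seed(seed)
    z = torch.randn(num_punks, 100, 1, 1)
    punks = model(z)
    save_image(punks, "punks.png", normalize=True)
    return 'punks.png'
```

We're giving our `predict` function a `seed` parameter, so that we can fix the random tensor generation with a seed. We'll then be able to reproduce punks if we want to see them again by passing in the same seed.

_Note!_ Our model needs an input tensor of dimensions 100x1x1 to do a single inference, or (BatchSize)x100x1x1 for generating a batch of images. In this demo we generate 4 punks at a time.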
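To see how a noise tensor that is 1x1 spatially turns into an image, the spatial size can be traced through the four transposed convolutions of the `Generator` above. This sketch uses the standard output-size formula for a transposed convolution with dilation 1 and no output padding; `conv_transpose_out` is a helper name introduced here for illustration.

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Output size of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


# (kernel_size, stride, padding) of the four ConvTranspose2d layers in Generator.
layers = [(3, 1, 0), (3, 2, 1), (4, 2, 0), (4, 2, 1)]

size = 1  # the noise tensor is 1x1 spatially
sizes = []
for kernel, stride, padding in layers:
    size = conv_transpose_out(size, kernel, stride, padding)
    sizes.append(size)

print(sizes)  # [3, 5, 12, 24]
```

With the default `nc=4`, each generated image therefore has 4 channels and a height and width of 24 pixels.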

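## Step 3 - Creating a Gradio interface

At this point you can even run the code you have with `predict(<SOME_NUMBER>)`, and you'll find your freshly generated punks in your file system at `./punks.png`. To make a truly interactive demo, though, we'll build out a simple interface with Gradio. Our goals here are to:

- Set a slider input so users can choose the "seed" value

- Use an image component for our output to showcase the generated punks

- Use our `predict()` function to take the seed and generate the images

With `gr.Interface()`, we can define all of that with a single function call:

```python
import gradio as gr

gr.Interface(
    predict,
    inputs=[
        gr.Slider(0, 1000, label='Seed', default=42),
    ],
    outputs="image",
).launch()
```

Launching the interface should present you with something like this: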

<iframe src="https://nimaboscarino-cryptopunks-1.hf.space" frameBorder="0" height="365" title="Gradio app" class="container p-0 flex-grow space-iframe" allow="accelerometer; ambient-light-sensor; autoplay; battery; camera; document-domain; encrypted-media; fullscreen; geolocation; gyroscope; layout-animations; legacy-image-formats; magnetometer; microphone; midi; oversized-images; payment; picture-in-picture; publickey-credentials-get; sync-xhr; usb; vr ; wake-lock; xr-spatial-tracking" sandbox="allow-forms allow-modals allow-popups allow-popups-to-escape-sandbox allow-same-origin allow-scripts allow-downloads"></iframe>

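## Step 4 - Even more punks!

Generating 4 punks at a time is a good start, but maybe we'd like to control how many we want to make each time. Adding more inputs to our Gradio interface is as simple as adding another item to the `inputs` list that we pass to `gr.Interface`:

```python
gr.Interface(
    predict,
    inputs=[
        gr.Slider(0, 1000, label='Seed', default=42),
        gr.Slider(4, 64, label='Number of Punks', step=1, default=10), # Adding another slider!
    ],
    outputs="image",
).launch()
```

The new input will be passed to our `predict()` function, so we have to make some changes to that function to accept a new parameter:

```python
def predict(seed, num_punks):
    torch.manual_seed(seed)
    z = torch.randn(num_punks, 100, 1, 1)
    punks = model(z)
    save_image(punks, "punks.png", normalize=True)
    return 'punks.png'
```

When you relaunch your interface, you should see a second slider that lets you control the number of punks!

## Step 5 - Polishing it up

Your Gradio app is pretty much good to go, but you can add a few extra things to really make it ready for the spotlight ✨

We can add some examples that users can easily try out by adding this to the `gr.Interface`:

```python
gr.Interface(
    # ...
    # keep everything as it is, and then add
    examples=[[123, 15], [42, 29], [456, 8], [1337, 35]],
).launch(cache_examples=True) # cache_examples is optional
```

The `examples` parameter takes a list of lists, where each item in the sublists is in the same order as the `inputs` we listed. So in our case, `[seed, num_punks]`. Give it a try!

You can also try adding a `title`, `description`, and `article` to the `gr.Interface`. Each of those parameters accepts a string, so try it out and see what happens 👀 `article` also accepts HTML, as explored in [a previous guide](./key_features/#descriptive-content)!

When you're all done, you may end up with something like this: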

<iframe src="https://nimaboscarino-cryptopunks.hf.space" frameBorder="0" height="855" title="Gradio app" class="container p-0 flex-grow space-iframe" allow="accelerometer; ambient-light-sensor; autoplay; battery; camera; document-domain; encrypted-media; fullscreen; geolocation; gyroscope; layout-animations; legacy-image-formats; magnetometer; microphone; midi; oversized-images; payment; picture-in-picture; publickey-credentials-get; sync-xhr; usb; vr ; wake-lock; xr-spatial-tracking" sandbox="allow-forms allow-modals allow-popups allow-popups-to-escape-sandbox allow-same-origin allow-scripts allow-downloads"></iframe>

供参考,这是我们的完整代码 :

```python
import torch
from torch import nn
from huggingface_hub import hf_hub_download
from torchvision.utils import save_image
import gradio as gr

class Generator(nn.Module):
    # See the link below for an explanation of nc, nz, and ngf
    # https://pytorch.org/tutorials/beginner/dcgan_faces_tutorial.html#inputs
    def __init__(self, nc=4, nz=100, ngf=64):
        super(Generator, self).__init__()
        self.network = nn.Sequential(
            nn.ConvTranspose2d(nz, ngf * 4, 3, 1, 0, bias=False),
            nn.BatchNorm2d(ngf * 4),
            nn.ReLU(True),
            nn.ConvTranspose2d(ngf * 4, ngf * 2, 3, 2, 1, bias=False),
            nn.BatchNorm2d(ngf * 2),
            nn.ReLU(True),
            nn.ConvTranspose2d(ngf * 2, ngf, 4, 2, 0, bias=False),
            nn.BatchNorm2d(ngf),
            nn.ReLU(True),
            nn.ConvTranspose2d(ngf, nc, 4, 2, 1, bias=False),
            nn.Tanh(),
        )

    def forward(self, input):
        output = self.network(input)
        return output

model = Generator()
weights_path = hf_hub_download('nateraw/cryptopunks-gan', 'generator.pth')
model.load_state_dict(torch.load(weights_path, map_location=torch.device('cpu')))  # Use 'cuda' if you have a GPU available

def predict(seed, num_punks):
    torch.manual_seed(seed)
    z = torch.randn(num_punks, 100, 1, 1)
    punks = model(z)
    save_image(punks, "punks.png", normalize=True)
    return 'punks.png'

gr.Interface(
    predict,
    inputs=[
        gr.Slider(0, 1000, label='Seed', default=42),
        gr.Slider(4, 64, label='Number of Punks', step=1, default=10),
    ],
    outputs="image",
    examples=[[123, 15], [42, 29], [456, 8], [1337, 35]],
).launch(cache_examples=True)
```

---

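Congratulations! You've built your very own GAN-powered CryptoPunks generator, complete with a sleek Gradio interface that makes it easy for anyone to use. Now you can [scour the Hub for more GANs](https://huggingface.co/models?other=gan) (or train your own) and keep making even more awesome demos. 🤗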

| gradio-app/gradio/blob/main/guides/cn/07_other-tutorials/create-your-own-friends-with-a-gan.md |

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

# ViTDet

## Overview

The ViTDet model was proposed in [Exploring Plain Vision Transformer Backbones for Object Detection](https://arxiv.org/abs/2203.16527) by Yanghao Li, Hanzi Mao, Ross Girshick, Kaiming He.

ViTDet leverages the plain [Vision Transformer](vit) for the task of object detection.

The abstract from the paper is the following:

*We explore the plain, non-hierarchical Vision Transformer (ViT) as a backbone network for object detection. This design enables the original ViT architecture to be fine-tuned for object detection without needing to redesign a hierarchical backbone for pre-training. With minimal adaptations for fine-tuning, our plain-backbone detector can achieve competitive results. Surprisingly, we observe: (i) it is sufficient to build a simple feature pyramid from a single-scale feature map (without the common FPN design) and (ii) it is sufficient to use window attention (without shifting) aided with very few cross-window propagation blocks. With plain ViT backbones pre-trained as Masked Autoencoders (MAE), our detector, named ViTDet, can compete with the previous leading methods that were all based on hierarchical backbones, reaching up to 61.3 AP_box on the COCO dataset using only ImageNet-1K pre-training. We hope our study will draw attention to research on plain-backbone detectors.*

This model was contributed by [nielsr](https://huggingface.co/nielsr).

The original code can be found [here](https://github.com/facebookresearch/detectron2/tree/main/projects/ViTDet).

Tips:

- At the moment, only the backbone is available.

## VitDetConfig

[[autodoc]] VitDetConfig

## VitDetModel

[[autodoc]] VitDetModel

- forward | huggingface/transformers/blob/main/docs/source/en/model_doc/vitdet.md |

# AdvProp (EfficientNet)

**AdvProp** is an adversarial training scheme that treats adversarial examples as additional training examples in order to prevent overfitting. Key to the method is the use of a separate auxiliary batch norm for adversarial examples, because their underlying distribution differs from that of normal examples.
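The idea of an auxiliary batch norm can be sketched in a few lines of PyTorch. This is an illustration of the concept only, not the authors' implementation or the code used in `timm`; the class name `DualBatchNorm2d` and the `adversarial` flag are hypothetical, and the shifted random tensors merely stand in for clean and adversarial activations.

```python
import torch
from torch import nn


class DualBatchNorm2d(nn.Module):
    """Keeps separate batch-norm statistics for clean and adversarial batches."""

    def __init__(self, num_features):
        super().__init__()
        self.bn_clean = nn.BatchNorm2d(num_features)  # main BN, used for clean inputs
        self.bn_adv = nn.BatchNorm2d(num_features)    # auxiliary BN, used for adversarial inputs

    def forward(self, x, adversarial=False):
        # Route the batch to the BN layer that matches its distribution.
        return self.bn_adv(x) if adversarial else self.bn_clean(x)


layer = DualBatchNorm2d(num_features=8)
layer.train()

clean_batch = torch.randn(4, 8, 16, 16) + 5.0  # stand-in for clean activations
adv_batch = torch.randn(4, 8, 16, 16) - 5.0    # stand-in for adversarial activations

layer(clean_batch)                   # updates only the clean running statistics
layer(adv_batch, adversarial=True)   # updates only the auxiliary running statistics

print(layer.bn_clean.running_mean.mean().item())
print(layer.bn_adv.running_mean.mean().item())
```

The two running means move in opposite directions, which shows that each set of statistics tracks only its own kind of input.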

The weights from this model were ported from [Tensorflow/TPU](https://github.com/tensorflow/tpu).

## How do I use this model on an image?

To load a pretrained model:

```py
>>> import timm
>>> model = timm.create_model('tf_efficientnet_b0_ap', pretrained=True)
>>> model.eval()
```

To load and preprocess the image:

```py
>>> import urllib.request
>>> from PIL import Image
>>> from timm.data import resolve_data_config
>>> from timm.data.transforms_factory import create_transform

>>> config = resolve_data_config({}, model=model)
>>> transform = create_transform(**config)

>>> url, filename = ("https://github.com/pytorch/hub/raw/master/images/dog.jpg", "dog.jpg")
>>> urllib.request.urlretrieve(url, filename)
>>> img = Image.open(filename).convert('RGB')
>>> tensor = transform(img).unsqueeze(0) # transform and add batch dimension
```

To get the model predictions:

```py
>>> import torch
>>> with torch.no_grad():
...     out = model(tensor)
>>> probabilities = torch.nn.functional.softmax(out[0], dim=0)
>>> print(probabilities.shape)
>>> # prints: torch.Size([1000])
```

To get the class names of the top-5 predictions:

```py
>>> # Get imagenet class mappings
>>> url, filename = ("https://raw.githubusercontent.com/pytorch/hub/master/imagenet_classes.txt", "imagenet_classes.txt")
>>> urllib.request.urlretrieve(url, filename)
>>> with open("imagenet_classes.txt", "r") as f:
...     categories = [s.strip() for s in f.readlines()]

>>> # Print top categories per image
>>> top5_prob, top5_catid = torch.topk(probabilities, 5)
>>> for i in range(top5_prob.size(0)):
...     print(categories[top5_catid[i]], top5_prob[i].item())
>>> # prints class names and probabilities like:
>>> # [('Samoyed', 0.6425196528434753), ('Pomeranian', 0.04062102362513542), ('keeshond', 0.03186424449086189), ('white wolf', 0.01739676296710968), ('Eskimo dog', 0.011717947199940681)]
```

Replace the model name with the variant you want to use, e.g. `tf_efficientnet_b0_ap`. You can find the IDs in the model summaries at the top of this page.

To extract image features with this model, follow the [timm feature extraction examples](../feature_extraction), changing only the name of the model you want to use.

## How do I finetune this model?

You can finetune any of the pre-trained models just by changing the classifier (the last layer).

```py
>>> model = timm.create_model('tf_efficientnet_b0_ap', pretrained=True, num_classes=NUM_FINETUNE_CLASSES)
```

To finetune on your own dataset, you have to write a training loop or adapt [timm's training script](https://github.com/rwightman/pytorch-image-models/blob/master/train.py) to use your dataset.
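A minimal sketch of such a training loop is shown below. To stay self-contained it assumes a small stand-in `nn.Sequential` classifier and random tensors in place of a real dataset; in practice you would substitute the `timm` model created above and your own `DataLoader`. The optimizer, learning rate, batch size, and the value of `NUM_FINETUNE_CLASSES` are illustrative choices, not recommendations from `timm`.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

NUM_FINETUNE_CLASSES = 3  # hypothetical number of target classes

# Stand-in model (assumption); replace with the timm model in practice.
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, NUM_FINETUNE_CLASSES))

# Random images and labels standing in for a real dataset.
torch.manual_seed(0)
images = torch.randn(32, 3, 8, 8)
labels = torch.randint(0, NUM_FINETUNE_CLASSES, (32,))
loader = DataLoader(TensorDataset(images, labels), batch_size=8, shuffle=True)

criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

epoch_losses = []
model.train()
for epoch in range(5):
    running_loss = 0.0
    for batch_images, batch_labels in loader:
        optimizer.zero_grad()                      # clear gradients from the previous step
        loss = criterion(model(batch_images), batch_labels)
        loss.backward()                            # backpropagate
        optimizer.step()                           # update the weights
        running_loss += loss.item() * batch_images.size(0)
    epoch_losses.append(running_loss / len(loader.dataset))
    print(f"epoch {epoch}: loss {epoch_losses[-1]:.4f}")
```

A real finetuning run would add a validation pass, a learning-rate schedule, and checkpointing, all of which the timm training script already handles.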

## How do I train this model?

You can follow the [timm recipe scripts](../scripts) for training a new model afresh.

## Citation

```BibTeX
@misc{xie2020adversarial,
      title={Adversarial Examples Improve Image Recognition},
      author={Cihang Xie and Mingxing Tan and Boqing Gong and Jiang Wang and Alan Yuille and Quoc V. Le},
      year={2020},
      eprint={1911.09665},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}
```

<!--

Type: model-index

Collections:

- Name: AdvProp

Paper:

Title: Adversarial Examples Improve Image Recognition

URL: https://paperswithcode.com/paper/adversarial-examples-improve-image

Models:

- Name: tf_efficientnet_b0_ap

In Collection: AdvProp

Metadata:

FLOPs: 488688572

Parameters: 5290000

File Size: 21385973

Architecture:

- 1x1 Convolution

- Average Pooling

- Batch Normalization

- Convolution

- Dense Connections

- Dropout

- Inverted Residual Block

- Squeeze-and-Excitation Block

- Swish

Tasks:

- Image Classification

Training Techniques:

- AdvProp

- AutoAugment

- Label Smoothing

- RMSProp

- Stochastic Depth

- Weight Decay

Training Data:

- ImageNet

ID: tf_efficientnet_b0_ap

LR: 0.256

Epochs: 350

Crop Pct: '0.875'

Momentum: 0.9

Batch Size: 2048

Image Size: '224'

Weight Decay: 1.0e-05

Interpolation: bicubic

RMSProp Decay: 0.9

Label Smoothing: 0.1

BatchNorm Momentum: 0.99

Code: https://github.com/rwightman/pytorch-image-models/blob/9a25fdf3ad0414b4d66da443fe60ae0aa14edc84/timm/models/efficientnet.py#L1334

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/tf_efficientnet_b0_ap-f262efe1.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 77.1%

Top 5 Accuracy: 93.26%

- Name: tf_efficientnet_b1_ap

In Collection: AdvProp

Metadata:

FLOPs: 883633200

Parameters: 7790000

File Size: 31515350

Architecture:

- 1x1 Convolution

- Average Pooling

- Batch Normalization

- Convolution

- Dense Connections

- Dropout

- Inverted Residual Block

- Squeeze-and-Excitation Block

- Swish

Tasks:

- Image Classification

Training Techniques:

- AdvProp

- AutoAugment

- Label Smoothing

- RMSProp

- Stochastic Depth

- Weight Decay

Training Data:

- ImageNet

ID: tf_efficientnet_b1_ap

LR: 0.256

Epochs: 350

Crop Pct: '0.882'

Momentum: 0.9

Batch Size: 2048

Image Size: '240'

Weight Decay: 1.0e-05

Interpolation: bicubic

RMSProp Decay: 0.9

Label Smoothing: 0.1

BatchNorm Momentum: 0.99

Code: https://github.com/rwightman/pytorch-image-models/blob/9a25fdf3ad0414b4d66da443fe60ae0aa14edc84/timm/models/efficientnet.py#L1344

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/tf_efficientnet_b1_ap-44ef0a3d.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 79.28%

Top 5 Accuracy: 94.3%

- Name: tf_efficientnet_b2_ap

In Collection: AdvProp

Metadata:

FLOPs: 1234321170

Parameters: 9110000

File Size: 36800745

Architecture:

- 1x1 Convolution

- Average Pooling

- Batch Normalization

- Convolution

- Dense Connections

- Dropout

- Inverted Residual Block

- Squeeze-and-Excitation Block

- Swish

Tasks:

- Image Classification

Training Techniques:

- AdvProp

- AutoAugment

- Label Smoothing

- RMSProp

- Stochastic Depth

- Weight Decay

Training Data:

- ImageNet

ID: tf_efficientnet_b2_ap

LR: 0.256

Epochs: 350

Crop Pct: '0.89'

Momentum: 0.9

Batch Size: 2048

Image Size: '260'

Weight Decay: 1.0e-05

Interpolation: bicubic

RMSProp Decay: 0.9

Label Smoothing: 0.1

BatchNorm Momentum: 0.99

Code: https://github.com/rwightman/pytorch-image-models/blob/9a25fdf3ad0414b4d66da443fe60ae0aa14edc84/timm/models/efficientnet.py#L1354

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/tf_efficientnet_b2_ap-2f8e7636.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 80.3%

Top 5 Accuracy: 95.03%

- Name: tf_efficientnet_b3_ap

In Collection: AdvProp

Metadata:

FLOPs: 2275247568

Parameters: 12230000

File Size: 49384538

Architecture:

- 1x1 Convolution

- Average Pooling

- Batch Normalization

- Convolution

- Dense Connections

- Dropout

- Inverted Residual Block

- Squeeze-and-Excitation Block

- Swish

Tasks:

- Image Classification

Training Techniques:

- AdvProp

- AutoAugment

- Label Smoothing

- RMSProp

- Stochastic Depth

- Weight Decay

Training Data:

- ImageNet

ID: tf_efficientnet_b3_ap

LR: 0.256

Epochs: 350