| modelId (string, 4 to 122 chars) | author (string, 2 to 42 chars) | last_modified | downloads (int64, 0 to 392M) | likes (int64, 0 to 6.56k) | library_name (string, 368 classes) | tags (list, 1 to 4.05k items) | pipeline_tag (string, 51 classes) | createdAt | card (string, 1 to 1M chars) |

|---|---|---|---|---|---|---|---|---|---|

Rostlab/prot_t5_xl_half_uniref50-enc | Rostlab | "2023-01-31T21:04:38Z" | 287,961 | 15 | transformers | [

"transformers",

"pytorch",

"t5",

"protein language model",

"dataset:UniRef50",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | null | "2022-05-20T09:58:28Z" | ---

tags:

- protein language model

datasets:

- UniRef50

---

# Encoder only ProtT5-XL-UniRef50, half-precision model

An encoder-only, half-precision version of the [ProtT5-XL-UniRef50](https://huggingface.co/Rostlab/prot_t5_xl_uniref50) model. The original model and its pretraining were introduced in [this paper](https://doi.org/10.1101/2020.07.12.199554) and first released in [this repository](https://github.com/agemagician/ProtTrans). This model was trained on upper-case amino acids only, so input sequences must use capital one-letter amino-acid codes.
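Because the model only understands upper-case one-letter codes, it can help to normalize sequences before tokenization. The sketch below is a hypothetical helper, `prepare_protein_sequence`, not part of any Rostlab package. It removes whitespace (an assumption, useful for sequences copied from multi-line FASTA records), upper-cases the residues, maps the rare/ambiguous amino acids U, Z, O and B to X as the usage example in this card does, and inserts the single spaces the tokenizer expects between residues.

```python
import re


def prepare_protein_sequence(sequence: str) -> str:
    """Hypothetical helper: normalize a raw protein sequence for ProtT5 tokenization."""
    # Assumption: drop whitespace and line breaks (e.g. from multi-line FASTA records).
    sequence = "".join(sequence.split())
    # The model was trained on upper-case amino acids only.
    sequence = sequence.upper()
    # Replace rare/ambiguous amino acids (U, Z, O, B) with X.
    sequence = re.sub(r"[UZOB]", "X", sequence)
    # Separate residues by single spaces so that each one becomes its own token.
    return " ".join(sequence)


print(prepare_protein_sequence("prteino"))  # P R T E I N X
```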

## Model description

ProtT5-XL-UniRef50 is based on the `t5-3b` model and was pretrained on a large corpus of protein sequences in a self-supervised fashion.

This means it was pretrained on raw protein sequences only, with no human labelling of any kind (which is why it can use large amounts of publicly available data), using an automatic process to generate inputs and labels from those sequences.

One important difference between this T5 model and the original T5 version is the denoising objective.

The original T5-3B model was pretrained using a span denoising objective, while this model was pretrained with a BART-like MLM denoising objective.

As in the original T5 training, 15% of the amino acids in the input are masked at random.

This model contains only the encoder portion of the original ProtT5-XL-UniRef50 model, stored in half precision (float16).

As such, it can be used efficiently to create protein and amino-acid representations. When used to train downstream networks (feature extraction), these embeddings performed the same as embeddings from the original full-precision model (established empirically by comparing on several downstream tasks).

## Intended uses & limitations

This version of the original ProtT5-XL-UniRef50 is mostly meant for conveniently creating amino-acid or protein embeddings with a low GPU-memory footprint, without any measurable performance decrease in our experiments. This model is fully usable with 8 GB of video RAM.

### How to use

An extensive, interactive example of how to use this model for common tasks can be found [on Google Colab](https://colab.research.google.com/drive/1TUj-ayG3WO52n5N50S7KH9vtt6zRkdmj?usp=sharing#scrollTo=ET2v51slC5ui).

Here is how to use this model to extract the features of a given protein sequence in PyTorch:

```python
from transformers import T5Tokenizer, T5EncoderModel
import torch
import re

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# load the tokenizer (no lower-casing: the model expects upper-case amino acids)
tokenizer = T5Tokenizer.from_pretrained("Rostlab/prot_t5_xl_half_uniref50-enc", do_lower_case=False)

# load the encoder in half precision; half precision cannot be used on CPU, so cast to full precision there
model = T5EncoderModel.from_pretrained("Rostlab/prot_t5_xl_half_uniref50-enc", torch_dtype=torch.float16).to(device)
if device.type == "cpu":
    model = model.float()
model.eval()

sequence_examples = ["PRTEINO", "SEQWENCE"]

# this will replace all rare/ambiguous amino acids by X and introduce white-space between all amino acids
sequence_examples = [" ".join(list(re.sub(r"[UZOB]", "X", sequence))) for sequence in sequence_examples]

# tokenize sequences and pad up to the longest sequence in the batch
ids = tokenizer.batch_encode_plus(sequence_examples, add_special_tokens=True, padding="longest")
input_ids = torch.tensor(ids["input_ids"]).to(device)
attention_mask = torch.tensor(ids["attention_mask"]).to(device)

# generate embeddings
with torch.no_grad():
    embedding_repr = model(input_ids=input_ids, attention_mask=attention_mask)

# extract embeddings for the first ([0,:]) sequence in the batch while removing padded & special tokens ([0,:7])
emb_0 = embedding_repr.last_hidden_state[0, :7]  # shape (7 x 1024)
print(f"Shape of per-residue embedding of first sequence: {emb_0.shape}")

# do the same for the second ([1,:]) sequence in the batch while taking into account different sequence lengths ([1,:8])
emb_1 = embedding_repr.last_hidden_state[1, :8]  # shape (8 x 1024)

# if you want to derive a single representation (per-protein embedding) for the whole protein
emb_0_per_protein = emb_0.mean(dim=0)  # shape (1024)
print(f"Shape of per-protein embedding of first sequence: {emb_0_per_protein.shape}")
```
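The slicing above hard-codes each sequence's length. For a whole batch, one option is to mean-pool every row over its real residues only. The helper below, `per_protein_embeddings`, is a hypothetical sketch written with NumPy; it assumes, as the slices `[0,:7]` and `[1,:8]` imply, that the residues occupy the first `len(sequence)` positions and are followed by the `</s>` special token and any padding.

```python
import numpy as np


def per_protein_embeddings(last_hidden_state: np.ndarray, lengths: list[int]) -> np.ndarray:
    """Hypothetical helper: mean-pool per-residue embeddings into one vector per protein.

    last_hidden_state: array of shape (batch, max_tokens, hidden_size)
    lengths: number of residues in each original, unpadded sequence
    """
    pooled = [
        # keep only the first n positions, dropping the </s> token and padding
        last_hidden_state[i, :n].mean(axis=0)
        for i, n in enumerate(lengths)
    ]
    return np.stack(pooled)  # shape (batch, hidden_size)
```

To use it with the example above, keep the raw sequences (e.g. `raw = ["PRTEINO", "SEQWENCE"]`) before they are space-separated, then call `per_protein_embeddings(embedding_repr.last_hidden_state.float().cpu().numpy(), [len(s) for s in raw])`. Converting to float32 first avoids computing the mean in half precision.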

**NOTE**: Please make sure to explicitly set the model to `float16` (`T5EncoderModel.from_pretrained('Rostlab/prot_t5_xl_half_uniref50-enc', torch_dtype=torch.float16)`); otherwise, the generated embeddings will be full precision.

**NOTE**: Currently (06/2022) half-precision models cannot be used on CPU. If you want to use the encoder only version on CPU, you need to cast it to its full-precision version (`model=model.float()`).

### BibTeX entry and citation info

```bibtex

@article {Elnaggar2020.07.12.199554,

author = {Elnaggar, Ahmed and Heinzinger, Michael and Dallago, Christian and Rehawi, Ghalia and Wang, Yu and Jones, Llion and Gibbs, Tom and Feher, Tamas and Angerer, Christoph and Steinegger, Martin and BHOWMIK, DEBSINDHU and Rost, Burkhard},

title = {ProtTrans: Towards Cracking the Language of Life{\textquoteright}s Code Through Self-Supervised Deep Learning and High Performance Computing},

elocation-id = {2020.07.12.199554},

year = {2020},

doi = {10.1101/2020.07.12.199554},

publisher = {Cold Spring Harbor Laboratory},

abstract = {Computational biology and bioinformatics provide vast data gold-mines from protein sequences, ideal for Language Models (LMs) taken from Natural Language Processing (NLP). These LMs reach for new prediction frontiers at low inference costs. Here, we trained two auto-regressive language models (Transformer-XL, XLNet) and two auto-encoder models (Bert, Albert) on data from UniRef and BFD containing up to 393 billion amino acids (words) from 2.1 billion protein sequences (22- and 112 times the entire English Wikipedia). The LMs were trained on the Summit supercomputer at Oak Ridge National Laboratory (ORNL), using 936 nodes (total 5616 GPUs) and one TPU Pod (V3-512 or V3-1024). We validated the advantage of up-scaling LMs to larger models supported by bigger data by predicting secondary structure (3-states: Q3=76-84, 8 states: Q8=65-73), sub-cellular localization for 10 cellular compartments (Q10=74) and whether a protein is membrane-bound or water-soluble (Q2=89). Dimensionality reduction revealed that the LM-embeddings from unlabeled data (only protein sequences) captured important biophysical properties governing protein shape. This implied learning some of the grammar of the language of life realized in protein sequences. The successful up-scaling of protein LMs through HPC to larger data sets slightly reduced the gap between models trained on evolutionary information and LMs. Availability: ProtTrans: https://github.com/agemagician/ProtTrans. Competing Interest Statement: The authors have declared no competing interest.},

URL = {https://www.biorxiv.org/content/early/2020/07/21/2020.07.12.199554},

eprint = {https://www.biorxiv.org/content/early/2020/07/21/2020.07.12.199554.full.pdf},

journal = {bioRxiv}

}

```

|

prithivida/parrot_adequacy_model | prithivida | "2022-05-27T02:47:22Z" | 287,508 | 7 | transformers | [

"transformers",

"pytorch",

"roberta",

"text-classification",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | "2022-05-27T02:04:37Z" | ---

license: apache-2.0

---

Parrot

THIS IS AN ANCILLARY MODEL FOR PARROT PARAPHRASER

1. What is Parrot?

Parrot is a paraphrase-based utterance augmentation framework purpose-built to accelerate the training of NLU models. A paraphrase framework is more than just a paraphrasing model. For details, please refer to the GitHub page or the model card [prithivida/parrot_paraphraser_on_T5](https://huggingface.co/prithivida/parrot_paraphraser_on_T5). |

artificialguybr/ColoringBookRedmond-V2 | artificialguybr | "2023-10-07T20:57:38Z" | 287,168 | 32 | diffusers | [

"diffusers",

"text-to-image",

"stable-diffusion",

"lora",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"base_model:adapter:stabilityai/stable-diffusion-xl-base-1.0",

"license:creativeml-openrail-m",

"region:us"

] | text-to-image | "2023-10-07T20:54:11Z" | ---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

- lora

- diffusers

base_model: stabilityai/stable-diffusion-xl-base-1.0

instance_prompt: ColoringBookAF, Coloring Book

widget:

- text: ColoringBookAF, Coloring Book

---

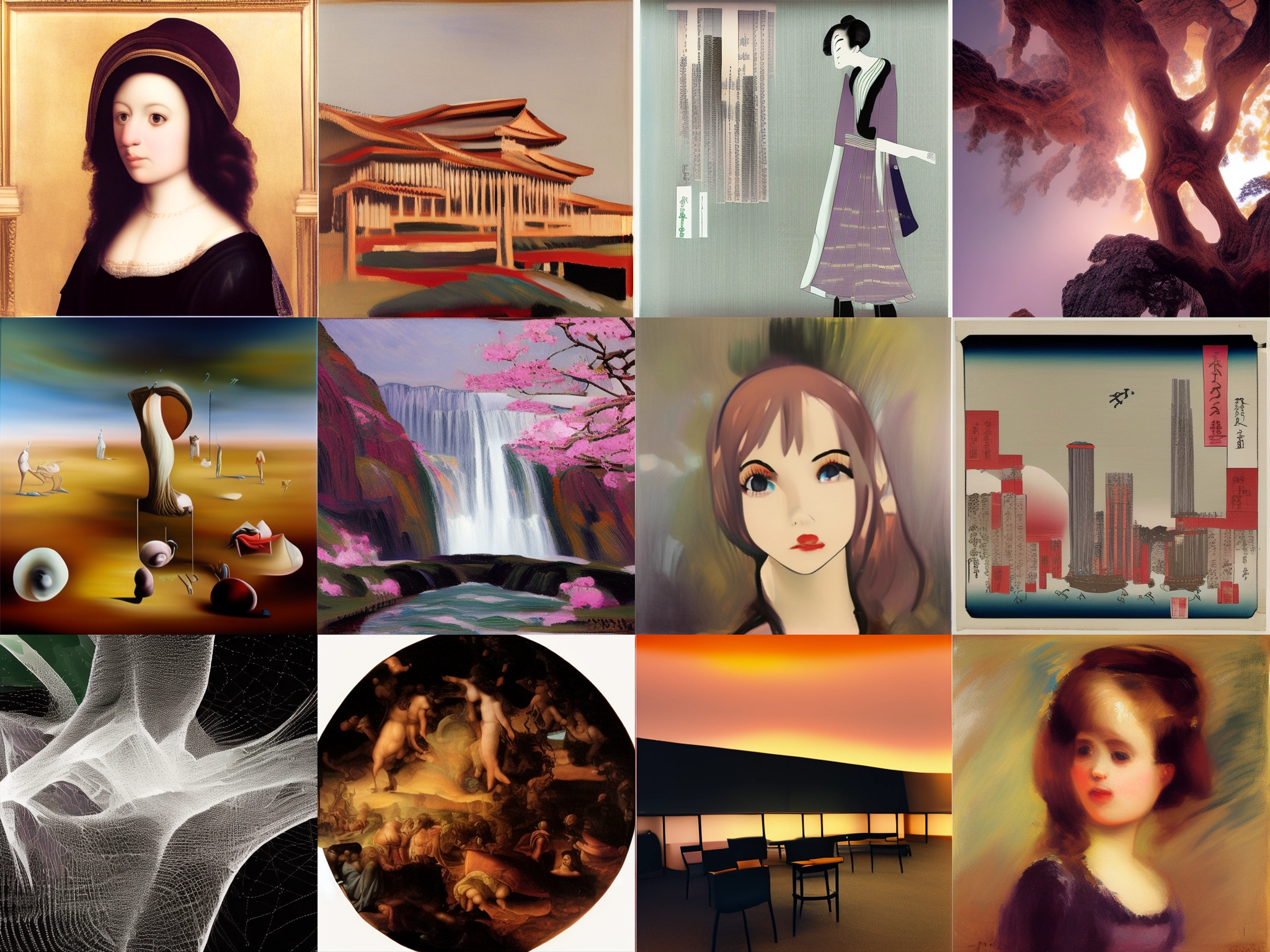

# ColoringBook.Redmond V2

ColoringBook.Redmond is here!

Test all my LoRAs here: https://huggingface.co/spaces/artificialguybr/artificialguybr-demo-lora/

Introducing ColoringBook.Redmond, the ultimate LoRA for creating coloring book images!

I'm grateful for the GPU time from Redmond.AI that allowed me to make this LoRA! If you need GPUs, then you need the great services from Redmond.AI.

It is based on SD XL 1.0 and fine-tuned on a large dataset.

The LoRA has a high capacity to generate coloring book images!

The trigger tags for the model: ColoringBookAF, Coloring Book
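A minimal sketch of using this LoRA with `diffusers`, under stated assumptions: the LoRA is loaded with `load_lora_weights` straight from the `artificialguybr/ColoringBookRedmond-V2` repository (if the repository holds more than one weight file, a `weight_name=` argument is needed), a CUDA GPU is available, and `build_prompt` is a hypothetical helper that simply prepends the trigger tags above.

```python
TRIGGER_WORDS = "ColoringBookAF, Coloring Book"  # trigger tags from this card


def build_prompt(subject: str) -> str:
    """Hypothetical helper: prepend the LoRA's trigger tags to a subject description."""
    return f"{TRIGGER_WORDS}, {subject.strip()}"


def load_pipeline():
    """Load SDXL base 1.0 and apply this LoRA (requires diffusers, torch and a CUDA GPU)."""
    # Imported here so that build_prompt can be used without the heavy dependencies.
    import torch
    from diffusers import StableDiffusionXLPipeline

    pipe = StableDiffusionXLPipeline.from_pretrained(
        "stabilityai/stable-diffusion-xl-base-1.0", torch_dtype=torch.float16
    ).to("cuda")
    # Assumption: the repository contains a single LoRA file; otherwise pass weight_name="...".
    pipe.load_lora_weights("artificialguybr/ColoringBookRedmond-V2")
    return pipe
```

For example: `image = load_pipeline()(build_prompt("a cat sitting on a windowsill")).images[0]`.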

I really hope you like the LoRA and use it.

If you like the model and think it's worth it, you can make a donation to my Patreon or Ko-fi.

Patreon:

https://www.patreon.com/user?u=81570187

Ko-fi: https://ko-fi.com/artificialguybr

Buy Me a Coffee: https://www.buymeacoffee.com/jvkape

Follow me on Twitter to be the first to know about new models:

https://twitter.com/artificialguybr/ |

lllyasviel/sd-controlnet-canny | lllyasviel | "2023-05-01T19:33:49Z" | 285,968 | 182 | diffusers | [

"diffusers",

"safetensors",

"art",

"controlnet",

"stable-diffusion",

"image-to-image",

"arxiv:2302.05543",

"base_model:runwayml/stable-diffusion-v1-5",

"base_model:adapter:runwayml/stable-diffusion-v1-5",

"license:openrail",

"region:us"

] | image-to-image | "2023-02-24T06:55:23Z" | ---

license: openrail

base_model: runwayml/stable-diffusion-v1-5

tags:

- art

- controlnet

- stable-diffusion

- image-to-image

widget:

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/canny-edge.jpg

prompt: Girl with Pearl Earring

---

# Controlnet - *Canny Version*

ControlNet is a neural network structure to control diffusion models by adding extra conditions.

This checkpoint corresponds to the ControlNet conditioned on **Canny edges**.

It can be used in combination with [Stable Diffusion](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion/text2img).

## Model Details

- **Developed by:** Lvmin Zhang, Maneesh Agrawala

- **Model type:** Diffusion-based text-to-image generation model

- **Language(s):** English

- **License:** [The CreativeML OpenRAIL M license](https://huggingface.co/spaces/CompVis/stable-diffusion-license) is an [Open RAIL M license](https://www.licenses.ai/blog/2022/8/18/naming-convention-of-responsible-ai-licenses), adapted from the work that [BigScience](https://bigscience.huggingface.co/) and [the RAIL Initiative](https://www.licenses.ai/) are jointly carrying in the area of responsible AI licensing. See also [the article about the BLOOM Open RAIL license](https://bigscience.huggingface.co/blog/the-bigscience-rail-license) on which our license is based.

- **Resources for more information:** [GitHub Repository](https://github.com/lllyasviel/ControlNet), [Paper](https://arxiv.org/abs/2302.05543).

- **Cite as:**

@misc{zhang2023adding,

title={Adding Conditional Control to Text-to-Image Diffusion Models},

author={Lvmin Zhang and Maneesh Agrawala},

year={2023},

eprint={2302.05543},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

## Introduction

ControlNet was proposed in [*Adding Conditional Control to Text-to-Image Diffusion Models*](https://arxiv.org/abs/2302.05543) by Lvmin Zhang and Maneesh Agrawala.

The abstract reads as follows:

*We present a neural network structure, ControlNet, to control pretrained large diffusion models to support additional input conditions.

The ControlNet learns task-specific conditions in an end-to-end way, and the learning is robust even when the training dataset is small (< 50k).

Moreover, training a ControlNet is as fast as fine-tuning a diffusion model, and the model can be trained on personal devices.

Alternatively, if powerful computation clusters are available, the model can scale to large amounts (millions to billions) of data.

We report that large diffusion models like Stable Diffusion can be augmented with ControlNets to enable conditional inputs like edge maps, segmentation maps, keypoints, etc.

This may enrich the methods to control large diffusion models and further facilitate related applications.*

## Released Checkpoints

The authors released 8 different checkpoints, each trained with [Stable Diffusion v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5) on a different type of conditioning:

| Model Name | Control Image Overview| Control Image Example | Generated Image Example |

|---|---|---|---|

|[lllyasviel/sd-controlnet-canny](https://huggingface.co/lllyasviel/sd-controlnet-canny)<br/> *Trained with canny edge detection* | A monochrome image with white edges on a black background.|<a href="https://huggingface.co/takuma104/controlnet_dev/blob/main/gen_compare/control_images/converted/control_bird_canny.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/control_images/converted/control_bird_canny.png"/></a>|<a href="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_bird_canny_1.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_bird_canny_1.png"/></a>|

|[lllyasviel/sd-controlnet-depth](https://huggingface.co/lllyasviel/sd-controlnet-depth)<br/> *Trained with Midas depth estimation* |A grayscale image with black representing deep areas and white representing shallow areas.|<a href="https://huggingface.co/takuma104/controlnet_dev/blob/main/gen_compare/control_images/converted/control_vermeer_depth.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/control_images/converted/control_vermeer_depth.png"/></a>|<a href="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_vermeer_depth_2.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_vermeer_depth_2.png"/></a>|

|[lllyasviel/sd-controlnet-hed](https://huggingface.co/lllyasviel/sd-controlnet-hed)<br/> *Trained with HED edge detection (soft edge)* |A monochrome image with white soft edges on a black background.|<a href="https://huggingface.co/takuma104/controlnet_dev/blob/main/gen_compare/control_images/converted/control_bird_hed.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/control_images/converted/control_bird_hed.png"/></a>|<a href="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_bird_hed_1.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_bird_hed_1.png"/></a> |

|[lllyasviel/sd-controlnet-mlsd](https://huggingface.co/lllyasviel/sd-controlnet-mlsd)<br/> *Trained with M-LSD line detection* |A monochrome image composed only of white straight lines on a black background.|<a href="https://huggingface.co/takuma104/controlnet_dev/blob/main/gen_compare/control_images/converted/control_room_mlsd.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/control_images/converted/control_room_mlsd.png"/></a>|<a href="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_room_mlsd_0.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_room_mlsd_0.png"/></a>|

|[lllyasviel/sd-controlnet-normal](https://huggingface.co/lllyasviel/sd-controlnet-normal)<br/> *Trained with normal map* |A [normal mapped](https://en.wikipedia.org/wiki/Normal_mapping) image.|<a href="https://huggingface.co/takuma104/controlnet_dev/blob/main/gen_compare/control_images/converted/control_human_normal.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/control_images/converted/control_human_normal.png"/></a>|<a href="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_human_normal_1.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_human_normal_1.png"/></a>|

|[lllyasviel/sd-controlnet-openpose](https://huggingface.co/lllyasviel/sd-controlnet-openpose)<br/> *Trained with OpenPose bone images* |An [OpenPose bone](https://github.com/CMU-Perceptual-Computing-Lab/openpose) image.|<a href="https://huggingface.co/takuma104/controlnet_dev/blob/main/gen_compare/control_images/converted/control_human_openpose.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/control_images/converted/control_human_openpose.png"/></a>|<a href="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_human_openpose_0.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_human_openpose_0.png"/></a>|

|[lllyasviel/sd-controlnet-scribble](https://huggingface.co/lllyasviel/sd-controlnet-scribble)<br/> *Trained with human scribbles* |A hand-drawn monochrome image with white outlines on a black background.|<a href="https://huggingface.co/takuma104/controlnet_dev/blob/main/gen_compare/control_images/converted/control_vermeer_scribble.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/control_images/converted/control_vermeer_scribble.png"/></a>|<a href="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_vermeer_scribble_0.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_vermeer_scribble_0.png"/></a> |

|[lllyasviel/sd-controlnet-seg](https://huggingface.co/lllyasviel/sd-controlnet-seg)<br/> *Trained with semantic segmentation* |An image following the [ADE20K](https://groups.csail.mit.edu/vision/datasets/ADE20K/) segmentation protocol.|<a href="https://huggingface.co/takuma104/controlnet_dev/blob/main/gen_compare/control_images/converted/control_room_seg.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/control_images/converted/control_room_seg.png"/></a>|<a href="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_room_seg_1.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_room_seg_1.png"/></a> |
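The canny checkpoint expects the control image described in the first row: white edges on a black background. The sketch below uses two hypothetical NumPy-only helpers: one stacks a single-channel edge map (such as the output of `cv2.Canny`) into a 3-channel array, and one performs a heuristic check of that format. The 0/255 check assumes a binary edge map like the one `cv2.Canny` produces.

```python
import numpy as np


def edges_to_rgb(edges: np.ndarray) -> np.ndarray:
    """Stack a single-channel (H, W) uint8 edge map into an (H, W, 3) RGB array."""
    return np.repeat(edges[:, :, None], 3, axis=2)


def looks_like_canny_control(image: np.ndarray) -> bool:
    """Heuristic check for the 'white edges on a black background' control format."""
    if image.ndim != 3 or image.shape[2] != 3 or image.dtype != np.uint8:
        return False
    # A grayscale edge map stored as RGB has three identical channels.
    if not (np.array_equal(image[..., 0], image[..., 1])
            and np.array_equal(image[..., 1], image[..., 2])):
        return False
    # A binary edge map contains only black (0) and white (255) pixels.
    return set(np.unique(image).tolist()) <= {0, 255}
```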

## Example

It is recommended to use the checkpoint with [Stable Diffusion v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5), because the checkpoint was trained on it.

Experimentally, the checkpoint can also be used with other diffusion models, such as DreamBooth fine-tuned Stable Diffusion models.

**Note**: If you want to process an image to create the auxiliary conditioning, external dependencies are required as shown below:

1. Install OpenCV:

```sh
$ pip install opencv-contrib-python
```

2. Install `diffusers` and related packages:

```sh
$ pip install diffusers transformers accelerate
```

3. Run the code:

```python
import os

import cv2
import numpy as np
import torch
from PIL import Image
from diffusers import StableDiffusionControlNetPipeline, ControlNetModel, UniPCMultistepScheduler
from diffusers.utils import load_image

# load an example input image
image = load_image("https://huggingface.co/lllyasviel/sd-controlnet-hed/resolve/main/images/bird.png")
image = np.array(image)

# detect edges with the Canny algorithm (white edges on a black background)
low_threshold = 100
high_threshold = 200
image = cv2.Canny(image, low_threshold, high_threshold)

# Canny returns a single channel; stack it into a 3-channel control image
image = image[:, :, None]
image = np.concatenate([image, image, image], axis=2)
image = Image.fromarray(image)

controlnet = ControlNetModel.from_pretrained(
    "lllyasviel/sd-controlnet-canny", torch_dtype=torch.float16
)
pipe = StableDiffusionControlNetPipeline.from_pretrained(
    "runwayml/stable-diffusion-v1-5", controlnet=controlnet, safety_checker=None, torch_dtype=torch.float16
)
pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)

# Remove if you do not have xformers installed
# see https://huggingface.co/docs/diffusers/v0.13.0/en/optimization/xformers#installing-xformers
# for installation instructions
pipe.enable_xformers_memory_efficient_attention()

pipe.enable_model_cpu_offload()

image = pipe("bird", image, num_inference_steps=20).images[0]

# make sure the output directory exists before saving
os.makedirs("images", exist_ok=True)
image.save("images/bird_canny_out.png")
```

### Training

The Canny edge model was trained on 3M (edge image, caption) pairs for 600 GPU-hours on Nvidia A100 80G GPUs, using Stable Diffusion 1.5 as the base model.

### Blog post

For more information, please also have a look at the [official ControlNet Blog Post](https://huggingface.co/blog/controlnet). |

google/t5-v1_1-xl | google | "2023-01-24T16:52:38Z" | 285,143 | 15 | transformers | [

"transformers",

"pytorch",

"tf",

"t5",

"text2text-generation",

"en",

"dataset:c4",

"arxiv:2002.05202",

"arxiv:1910.10683",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text2text-generation | "2022-03-02T23:29:05Z" | ---

language: en

datasets:

- c4

license: apache-2.0

---

[Google's T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) Version 1.1

## Version 1.1

[T5 Version 1.1](https://github.com/google-research/text-to-text-transfer-transformer/blob/master/released_checkpoints.md#t511) includes the following improvements compared to the original T5 model:

- GEGLU activation in the feed-forward hidden layer, rather than ReLU. See [here](https://arxiv.org/abs/2002.05202).

- Dropout was turned off in pre-training (quality win). Dropout should be re-enabled during fine-tuning.

- Pre-trained on C4 only, without mixing in the downstream tasks.

- No parameter sharing between the embedding and classifier layers.

- "xl" and "xxl" replace "3B" and "11B". The model shapes are a bit different: larger `d_model` and smaller `num_heads` and `d_ff`.
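To illustrate the first change: in the GEGLU variant from the linked paper, the feed-forward block multiplies a GELU-activated projection element-wise with a second linear projection before the output projection, FFN_GEGLU(x) = (GELU(xW) ⊙ xV) W_2, instead of ReLU(xW_1) W_2. The NumPy sketch below is illustrative only: the weights are random, biases are omitted (T5 feed-forward layers do not use them), and GELU uses the common tanh approximation.

```python
import numpy as np


def gelu(x: np.ndarray) -> np.ndarray:
    """GELU, tanh approximation."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))


def relu_ffn(x, w_in, w_out):
    """Original T5 feed-forward block: ReLU(x W_in) W_out."""
    return np.maximum(x @ w_in, 0.0) @ w_out


def geglu_ffn(x, w_gate, w_up, w_out):
    """Gated feed-forward block: (GELU(x W_gate) * (x W_up)) W_out."""
    return (gelu(x @ w_gate) * (x @ w_up)) @ w_out


rng = np.random.default_rng(0)
d_model, d_ff, seq_len = 8, 32, 5
x = rng.standard_normal((seq_len, d_model))
w_gate, w_up = rng.standard_normal((2, d_model, d_ff))
w_out = rng.standard_normal((d_ff, d_model))
print(geglu_ffn(x, w_gate, w_up, w_out).shape)  # (5, 8)
```

Note that the gated block needs an extra `d_model x d_ff` weight matrix compared with the ReLU block.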

**Note**: T5 Version 1.1 was only pre-trained on C4, excluding any supervised training. Therefore, this model has to be fine-tuned before it is usable on a downstream task.

Pretraining Dataset: [C4](https://huggingface.co/datasets/c4)

Other Community Checkpoints: [here](https://huggingface.co/models?search=t5-v1_1)

Paper: [Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/pdf/1910.10683.pdf)

Authors: *Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, Peter J. Liu*

## Abstract

Transfer learning, where a model is first pre-trained on a data-rich task before being fine-tuned on a downstream task, has emerged as a powerful technique in natural language processing (NLP). The effectiveness of transfer learning has given rise to a diversity of approaches, methodology, and practice. In this paper, we explore the landscape of transfer learning techniques for NLP by introducing a unified framework that converts every language problem into a text-to-text format. Our systematic study compares pre-training objectives, architectures, unlabeled datasets, transfer approaches, and other factors on dozens of language understanding tasks. By combining the insights from our exploration with scale and our new “Colossal Clean Crawled Corpus”, we achieve state-of-the-art results on many benchmarks covering summarization, question answering, text classification, and more. To facilitate future work on transfer learning for NLP, we release our dataset, pre-trained models, and code.

|

sshleifer/tiny-marian-en-de | sshleifer | "2020-06-25T02:27:15Z" | 283,572 | 0 | transformers | [

"transformers",

"pytorch",

"marian",

"text2text-generation",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | "2022-03-02T23:29:05Z" | Entry not found |

kingabzpro/wav2vec2-large-xls-r-300m-Urdu | kingabzpro | "2023-10-11T16:39:15Z" | 283,199 | 13 | transformers | [

"transformers",

"pytorch",

"safetensors",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"hf-asr-leaderboard",

"robust-speech-event",

"ur",

"dataset:mozilla-foundation/common_voice_8_0",

"base_model:facebook/wav2vec2-xls-r-300m",

"base_model:finetune:facebook/wav2vec2-xls-r-300m",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | "2022-03-02T23:29:05Z" | ---

language:

- ur

license: apache-2.0

tags:

- generated_from_trainer

- hf-asr-leaderboard

- robust-speech-event

datasets:

- mozilla-foundation/common_voice_8_0

metrics:

- wer

base_model: facebook/wav2vec2-xls-r-300m

model-index:

- name: wav2vec2-large-xls-r-300m-Urdu

results:

- task:

type: automatic-speech-recognition

name: Speech Recognition

dataset:

name: Common Voice 8

type: mozilla-foundation/common_voice_8_0

args: ur

metrics:

- type: wer

value: 39.89

name: Test WER

- type: cer

value: 16.7

name: Test CER

---


# wav2vec2-large-xls-r-300m-Urdu

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the Urdu (`ur`) subset of the Common Voice 8.0 dataset (`mozilla-foundation/common_voice_8_0`).

It achieves the following results on the evaluation set:

- Loss: 0.9889

- WER: 0.5607

- CER: 0.2370
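WER and CER are word- and character-level edit distances between the reference transcript and the model output (substitutions + deletions + insertions), divided by the number of words or characters in the reference. The pure-Python sketch below computes corpus-level rates by summing errors over all utterances; it does not reproduce any text normalization that the card's `eval.py` may apply.

```python
def edit_distance(ref: list, hyp: list) -> int:
    """Levenshtein distance: minimum number of substitutions, deletions and insertions."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion of a reference token
                curr[j - 1] + 1,         # insertion of a hypothesis token
                prev[j - 1] + (r != h),  # substitution (free if the tokens match)
            )
        prev = curr
    return prev[-1]


def corpus_wer(references: list[str], hypotheses: list[str]) -> float:
    """Word error rate: total word-level edits / total reference words."""
    errors = sum(edit_distance(r.split(), h.split()) for r, h in zip(references, hypotheses))
    return errors / sum(len(r.split()) for r in references)


def corpus_cer(references: list[str], hypotheses: list[str]) -> float:
    """Character error rate: total character-level edits / total reference characters."""
    errors = sum(edit_distance(list(r), list(h)) for r, h in zip(references, hypotheses))
    return errors / sum(len(r) for r in references)


print(corpus_wer(["a b c d"], ["a x c"]))  # 0.5
```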

### Evaluation Commands

1. To evaluate on `mozilla-foundation/common_voice_8_0` with split `test`:

```bash

python eval.py --model_id kingabzpro/wav2vec2-large-xls-r-300m-Urdu --dataset mozilla-foundation/common_voice_8_0 --config ur --split test

```

### Inference With LM

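```python
from datasets import load_dataset, Audio
from transformers import pipeline

model = "kingabzpro/wav2vec2-large-xls-r-300m-Urdu"

# Common Voice 8.0 is gated: accept its terms on the Hub and log in first.
# (Newer releases of `datasets` use `token=True` instead of `use_auth_token=True`.)
data = load_dataset("mozilla-foundation/common_voice_8_0",
                    "ur",
                    split="test",
                    streaming=True,
                    use_auth_token=True)

# decode the audio column and resample it to the 16 kHz rate expected by wav2vec2
sample_iter = iter(data.cast_column("audio",
                                    Audio(sampling_rate=16_000)))
sample = next(sample_iter)

asr = pipeline("automatic-speech-recognition", model=model)

# transcribe in 5-second chunks with a 1-second stride
prediction = asr(sample["audio"]["array"],
                 chunk_length_s=5,
                 stride_length_s=1)

print(prediction)
# => {'text': 'اب یہ ونگین لمحاتانکھار دلمیں میںفوث کریلیا اجائ'}
```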

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 200

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer | Cer |

|:-------------:|:------:|:----:|:---------------:|:------:|:------:|

| 3.6398 | 30.77 | 400 | 3.3517 | 1.0 | 1.0 |

| 2.9225 | 61.54 | 800 | 2.5123 | 1.0 | 0.8310 |

| 1.2568 | 92.31 | 1200 | 0.9699 | 0.6273 | 0.2575 |

| 0.8974 | 123.08 | 1600 | 0.9715 | 0.5888 | 0.2457 |

| 0.7151 | 153.85 | 2000 | 0.9984 | 0.5588 | 0.2353 |

| 0.6416 | 184.62 | 2400 | 0.9889 | 0.5607 | 0.2370 |
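As a rough consistency check between the hyperparameters and the table above (the listed total batch size equals the per-device batch size times the accumulation steps, consistent with a single device; the step/epoch ratio is read off the table):

$$
\begin{aligned}
\text{total\_train\_batch\_size} &= 32 \times 2 = 64 \\
\text{optimizer steps per epoch} &\approx 400 / 30.77 \approx 13 \\
\text{total steps for 200 epochs} &\approx 200 \times 13 = 2600 \\
\text{warm-up share of training} &\approx 1000 / 2600 \approx 38\%
\end{aligned}
$$

So the linear learning-rate warm-up spans roughly the first 77 epochs (1000 / 13).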

### Framework versions

- Transformers 4.17.0.dev0

- Pytorch 1.10.2+cu102

- Datasets 1.18.2.dev0

- Tokenizers 0.11.0

### Eval results on Common Voice 8 "test" (WER):

| Without LM | With LM (run `./eval.py`) |

|---|---|

| 52.03 | 39.89 |

|

oliverguhr/german-sentiment-bert | oliverguhr | "2023-03-16T18:09:30Z" | 282,141 | 53 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"safetensors",

"bert",

"text-classification",

"sentiment",

"de",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | "2022-03-02T23:29:05Z" | ---

language:

- de

tags:

- sentiment

- bert

license: mit

widget:

- text: "Das ist gar nicht mal so schlecht"

metrics:

- f1

---

# German Sentiment Classification with Bert

This model was trained for sentiment classification of German-language texts. To achieve the best results, all model inputs need to be preprocessed with the same procedure that was applied during training. To simplify the usage of the model,

we provide a Python package that bundles the code needed for preprocessing and inference.

The model uses Google's BERT architecture and was trained on 1.834 million German-language samples. The training data contains texts from various domains, such as Twitter, Facebook, and movie, app and hotel reviews.

You can find more information about the dataset and the training process in the [paper](http://www.lrec-conf.org/proceedings/lrec2020/pdf/2020.lrec-1.202.pdf).

## Using the Python package

To get started, install the package from [PyPI](https://pypi.org/project/germansentiment/):

```bash

pip install germansentiment

```

```python

from germansentiment import SentimentModel

model = SentimentModel()

texts = [

"Mit keinem guten Ergebniss","Das ist gar nicht mal so gut",

"Total awesome!","nicht so schlecht wie erwartet",

"Der Test verlief positiv.","Sie fährt ein grünes Auto."]

result = model.predict_sentiment(texts)

print(result)

```

The code above will output the following list:

```python

["negative","negative","positive","positive","neutral", "neutral"]

```

### Output class probabilities

```python

from germansentiment import SentimentModel

model = SentimentModel()

classes, probabilities = model.predict_sentiment(["das ist super"], output_probabilities = True)

print(classes, probabilities)

```

```python

['positive'] [[['positive', 0.9761366844177246], ['negative', 0.023540444672107697], ['neutral', 0.00032294404809363186]]]

```

## Model and Data

If you are interested in the code and data that were used to train this model, please have a look at [this repository](https://github.com/oliverguhr/german-sentiment) and our [paper](http://www.lrec-conf.org/proceedings/lrec2020/pdf/2020.lrec-1.202.pdf). Here is a table of the F1 scores that this model achieves on different datasets. Since we trained this model with a newer version of the Transformers library, the results are slightly better than those reported in the paper.

| Dataset | F1 micro Score |

| :----------------------------------------------------------- | -------------: |

| [holidaycheck](https://github.com/oliverguhr/german-sentiment) | 0.9568 |

| [scare](https://www.romanklinger.de/scare/) | 0.9418 |

| [filmstarts](https://github.com/oliverguhr/german-sentiment) | 0.9021 |

| [germeval](https://sites.google.com/view/germeval2017-absa/home) | 0.7536 |

| [PotTS](https://www.aclweb.org/anthology/L16-1181/) | 0.6780 |

| [emotions](https://github.com/oliverguhr/german-sentiment) | 0.9649 |

| [sb10k](https://www.spinningbytes.com/resources/germansentiment/) | 0.7376 |

| [Leipzig Wikipedia Corpus 2016](https://wortschatz.uni-leipzig.de/de/download/german) | 0.9967 |

| all | 0.9639 |
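The table reports micro-averaged F1. For single-label classification such as this three-class task, every misclassified example counts once as a false positive (for the predicted class) and once as a false negative (for the true class), so micro-F1 equals accuracy. A minimal sketch for scoring `predict_sentiment` output against your own labels (the example labels are made up for illustration):

```python
def micro_f1(y_true: list[str], y_pred: list[str]) -> float:
    """Micro-averaged F1 for single-label, multi-class predictions."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("need two non-empty label lists of equal length")
    tp = sum(t == p for t, p in zip(y_true, y_pred))
    # each wrong prediction is one false positive and one false negative
    fp = fn = len(y_true) - tp
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


gold = ["positive", "negative", "neutral", "neutral"]
pred = ["positive", "neutral", "neutral", "neutral"]
print(micro_f1(gold, pred))  # 0.75
```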

## Cite

For feedback and questions, contact me via email or Twitter [@oliverguhr](https://twitter.com/oliverguhr). Please cite us if you found this useful:

```bibtex

@InProceedings{guhr-EtAl:2020:LREC,

author = {Guhr, Oliver and Schumann, Anne-Kathrin and Bahrmann, Frank and Böhme, Hans Joachim},

title = {Training a Broad-Coverage German Sentiment Classification Model for Dialog Systems},

booktitle = {Proceedings of The 12th Language Resources and Evaluation Conference},

month = {May},

year = {2020},

address = {Marseille, France},

publisher = {European Language Resources Association},

pages = {1620--1625},

url = {https://www.aclweb.org/anthology/2020.lrec-1.202}

}

```

|

lucas-leme/FinBERT-PT-BR | lucas-leme | "2024-02-13T15:20:33Z" | 281,667 | 21 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"pt",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | "2022-12-04T22:15:16Z" | ---

language: pt

license: apache-2.0

widget:

- text: "O futuro de DI caiu 20 bps nesta manhã"

example_title: "Example 1"

- text: "O Nubank decidiu cortar a faixa de preço da oferta pública inicial (IPO) após revés no humor dos mercados internacionais com as fintechs."

example_title: "Example 2"

- text: "O Ibovespa acompanha correção do mercado e fecha com alta moderada"

example_title: "Example 3"

---

# FinBERT-PT-BR : Financial BERT PT BR

FinBERT-PT-BR is a pre-trained NLP model to analyze sentiment of Brazilian Portuguese financial texts.

The model was trained in two main stages: language modeling and sentiment modeling. In the first stage, a language model was trained on more than 1.4 million Portuguese financial news texts.

Building on this language model, a sentiment classifier was then trained with only 500 labeled texts and converged satisfactorily.

The work concludes with a comparative analysis against other models and a discussion of possible applications of the developed model.

In the comparative analysis, the developed model outperformed the state-of-the-art models it was compared against.

Among the applications, the authors show that the model can be used to build sentiment indices and investment strategies, and to analyze macroeconomic data such as inflation.

## Applications

### Sentiment Index
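The card does not define how its sentiment index is computed. As a purely hypothetical illustration, the sketch below aggregates per-headline predictions into a daily score `(positive - negative) / total`, which ranges from -1 to 1. It assumes the labels `"POSITIVE"`, `"NEGATIVE"` and `"NEUTRAL"` used in the usage example below; the function name and the formula are assumptions, not the authors' method.

```python
from collections import defaultdict


def daily_sentiment_index(records):
    """Hypothetical index: (positive - negative) / total headlines per day.

    `records` is an iterable of (date, label) pairs. Returns {date: index}
    with values in [-1, 1].
    """
    counts = defaultdict(lambda: {"POSITIVE": 0, "NEGATIVE": 0, "NEUTRAL": 0})
    for date, label in records:
        counts[date][label] += 1
    index = {}
    for date, c in counts.items():
        total = c["POSITIVE"] + c["NEGATIVE"] + c["NEUTRAL"]
        index[date] = (c["POSITIVE"] - c["NEGATIVE"]) / total
    return index


# Made-up predictions for two days of headlines
records = [
    ("2022-12-01", "POSITIVE"),
    ("2022-12-01", "NEGATIVE"),
    ("2022-12-01", "POSITIVE"),
    ("2022-12-02", "NEUTRAL"),
    ("2022-12-02", "NEGATIVE"),
]
print(daily_sentiment_index(records))
```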

## Usage

#### BertForSequenceClassification

```python
from transformers import AutoTokenizer, BertForSequenceClassification

import numpy as np
import torch

# Maps the model's output indices to sentiment labels
pred_mapper = {
    0: "POSITIVE",
    1: "NEGATIVE",
    2: "NEUTRAL",
}

tokenizer = AutoTokenizer.from_pretrained("lucas-leme/FinBERT-PT-BR")
finbertptbr = BertForSequenceClassification.from_pretrained("lucas-leme/FinBERT-PT-BR")

# Tokenize a batch of sentences, padding and truncating to BERT's 512-token limit
tokens = tokenizer(["Hoje a bolsa caiu", "Hoje a bolsa subiu"], return_tensors="pt",
                   padding=True, truncation=True, max_length=512)

# Inference only: disable gradient tracking
with torch.no_grad():
    finbertptbr_outputs = finbertptbr(**tokens)

# Pick the highest-scoring class for each sentence
preds = [pred_mapper[int(np.argmax(pred))] for pred in finbertptbr_outputs.logits.cpu().numpy()]
```
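The example above keeps only the arg-max label. To also get a confidence per prediction, you can apply a softmax to the logits. The NumPy sketch below assumes the same `pred_mapper` label order and uses made-up logits in place of `finbertptbr_outputs.logits.cpu().numpy()`; the helper name is hypothetical.

```python
import numpy as np

# Same label order as pred_mapper in the example above
pred_mapper = {0: "POSITIVE", 1: "NEGATIVE", 2: "NEUTRAL"}


def logits_to_predictions(logits):
    """Turn a (batch, 3) array of logits into (label, probability) pairs."""
    logits = np.asarray(logits, dtype=np.float64)
    # Subtract the row max before exponentiating for numerical stability
    shifted = logits - logits.max(axis=1, keepdims=True)
    probs = np.exp(shifted)
    probs /= probs.sum(axis=1, keepdims=True)
    best = probs.argmax(axis=1)
    return [(pred_mapper[int(i)], float(probs[row, i])) for row, i in enumerate(best)]


# Made-up logits standing in for the model output
example_logits = [[-1.0, 2.0, 0.5], [3.0, -2.0, 0.0]]
print(logits_to_predictions(example_logits))
```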

#### Pipeline

```python
from transformers import (
    AutoTokenizer,
    BertForSequenceClassification,
    pipeline,
)

finbert_pt_br_tokenizer = AutoTokenizer.from_pretrained("lucas-leme/FinBERT-PT-BR")
finbert_pt_br_model = BertForSequenceClassification.from_pretrained("lucas-leme/FinBERT-PT-BR")

finbert_pt_br_pipeline = pipeline(task='text-classification', model=finbert_pt_br_model, tokenizer=finbert_pt_br_tokenizer)

finbert_pt_br_pipeline(['Hoje a bolsa caiu', 'Hoje a bolsa subiu'])
```

## Author

- [Lucas Leme](https://www.linkedin.com/in/lucas-leme-santos/) - lucaslssantos99@gmail.com

## Citation

```bibtex

@inproceedings{santos2023finbert,

title={FinBERT-PT-BR: An{\'a}lise de Sentimentos de Textos em Portugu{\^e}s do Mercado Financeiro},

author={Santos, Lucas L and Bianchi, Reinaldo AC and Costa, Anna HR},

booktitle={Anais do II Brazilian Workshop on Artificial Intelligence in Finance},

pages={144--155},

year={2023},

organization={SBC}

}

```

## Paper

- Paper: [FinBERT-PT-BR: Sentiment Analysis of Texts in Portuguese from the Financial Market](https://sol.sbc.org.br/index.php/bwaif/article/view/24960)

- Undergraduate thesis: [FinBERT-PT-BR: Análise de sentimentos de textos em português referentes ao mercado financeiro](https://pcs.usp.br/pcspf/wp-content/uploads/sites/8/2022/12/Monografia_PCS3860_COOP_2022_Grupo_C12.pdf)

|

apple/DFN5B-CLIP-ViT-H-14-378 | apple | "2024-08-29T10:27:02Z" | 281,635 | 62 | open_clip | [

"open_clip",

"pytorch",

"clip",

"arxiv:2309.17425",

"license:other",

"region:us"

] | null | "2023-10-30T23:08:21Z" | ---

license: other

license_name: apple-sample-code-license

license_link: LICENSE

---

A CLIP (Contrastive Language-Image Pre-training) model trained on DFN-5B.

Data Filtering Networks (DFNs) are small networks used to automatically filter large pools of uncurated data.

This model was trained on 5B image-text pairs that were filtered from a pool of 43B uncurated image-text pairs

(12.8B image-text pairs from CommonPool-12.8B + 30B additional public image-text pairs).
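Keeping 5B of a 43B pool means roughly 11.6% of the candidate pairs survive filtering. The sketch below is a simplified illustration of score-based filtering, assuming each pair has already received a scalar quality score (for example an image-text similarity) from a filtering network. The top-fraction rule, function name and sample data are illustrative assumptions, not the exact procedure from the paper.

```python
import heapq


def keep_top_fraction(pairs, scores, fraction):
    """Keep the highest-scoring `fraction` of image-text pairs.

    pairs:    list of (image_id, caption) tuples (hypothetical placeholders)
    scores:   list of floats from a filtering model, one per pair
    fraction: share of the pool to keep, between 0 and 1
    """
    k = max(1, round(len(pairs) * fraction))
    top = heapq.nlargest(k, range(len(pairs)), key=lambda i: scores[i])
    # Preserve the original pool order among the kept pairs
    return [pairs[i] for i in sorted(top)]


# Ratio quoted in the card: 5B kept out of a 43B pool
print(f"kept fraction: {5 / 43:.3f}")  # kept fraction: 0.116

pairs = [("img0", "a dog"), ("img1", "lorem"), ("img2", "a cat"), ("img3", "asdf")]
scores = [0.31, 0.05, 0.28, 0.02]
print(keep_top_fraction(pairs, scores, 0.5))
```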

This model has been converted to PyTorch from the original JAX checkpoints from Axlearn (https://github.com/apple/axlearn).

These weights are directly usable in OpenCLIP (image + text).

## Model Details

- **Model Type:** Contrastive Image-Text, Zero-Shot Image Classification.
- **Dataset:** DFN-5B
- **Papers:**
  - Data Filtering Networks: https://arxiv.org/abs/2309.17425
- **Samples Seen:** 39B (224 x 224) + 5B (384 x 384)

## Model Metrics

| Dataset | Metric |
|:-----------------------|---------:|
| ImageNet 1k | 0.84218 |
| Caltech-101 | 0.954479 |
| CIFAR-10 | 0.9879 |
| CIFAR-100 | 0.9041 |
| CLEVR Counts | 0.362467 |
| CLEVR Distance | 0.206067 |
| Country211 | 0.37673 |
| Describable Textures | 0.71383 |
| EuroSAT | 0.608333 |
| FGVC Aircraft | 0.719938 |
| Food-101 | 0.963129 |
| GTSRB | 0.679018 |
| ImageNet Sketch | 0.73338 |
| ImageNet v2 | 0.7837 |
| ImageNet-A | 0.7992 |
| ImageNet-O | 0.3785 |
| ImageNet-R | 0.937633 |
| KITTI Vehicle Distance | 0.38256 |
| MNIST | 0.8372 |
| ObjectNet <sup>1</sup> | 0.796867 |
| Oxford Flowers-102 | 0.896834 |
| Oxford-IIIT Pet | 0.966841 |
| Pascal VOC 2007 | 0.826255 |
| PatchCamelyon | 0.695953 |
| Rendered SST2 | 0.566722 |
| RESISC45 | 0.755079 |
| Stanford Cars | 0.959955 |
| STL-10 | 0.991125 |
| SUN397 | 0.772799 |
| SVHN | 0.671251 |
| Flickr | 0.8808 |
| MSCOCO | 0.636889 |
| WinoGAViL | 0.571813 |
| iWildCam | 0.224911 |
| Camelyon17 | 0.711536 |
| FMoW | 0.209024 |
| Dollar Street | 0.71729 |
| GeoDE | 0.935699 |
| **Average** | **0.709421** |

<sup>1</sup> Center-crop preprocessing was used for ObjectNet; squashing the image instead results in a lower accuracy of 0.737.

## Model Usage

### With OpenCLIP

```python
import torch
import torch.nn.functional as F
from urllib.request import urlopen
from PIL import Image
from open_clip import create_model_from_pretrained, get_tokenizer

# Load the model and its matching image preprocessing from the Hugging Face Hub
model, preprocess = create_model_from_pretrained('hf-hub:apple/DFN5B-CLIP-ViT-H-14-378')
tokenizer = get_tokenizer('ViT-H-14')

image = Image.open(urlopen(
    'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'
))
image = preprocess(image).unsqueeze(0)

labels_list = ["a dog", "a cat", "a donut", "a beignet"]
text = tokenizer(labels_list, context_length=model.context_length)

with torch.no_grad(), torch.cuda.amp.autocast():
    image_features = model.encode_image(image)
    text_features = model.encode_text(text)
    image_features = F.normalize(image_features, dim=-1)
    text_features = F.normalize(text_features, dim=-1)

    text_probs = torch.sigmoid(image_features @ text_features.T * model.logit_scale.exp() + model.logit_bias)

zipped_list = list(zip(labels_list, [round(p.item(), 3) for p in text_probs[0]]))
print("Label probabilities: ", zipped_list)
```
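The snippet above scores labels with a sigmoid plus `model.logit_bias`, so each label gets an independent score and the scores need not sum to 1. For comparison, the conventional CLIP zero-shot recipe applies a softmax over the scaled cosine similarities, so the label probabilities sum to 1. The NumPy sketch below implements that softmax variant on feature arrays such as those returned by `encode_image` and `encode_text` (converted to NumPy); the function name is hypothetical, and whether this matches how the reported metrics were computed is an assumption.

```python
import numpy as np


def zero_shot_softmax(image_features, text_features, logit_scale):
    """CLIP-style zero-shot scores: softmax over scaled cosine similarities.

    image_features: (n_images, d) array; text_features: (n_labels, d) array;
    logit_scale: the already-exponentiated scale, e.g. model.logit_scale.exp().
    """
    img = image_features / np.linalg.norm(image_features, axis=-1, keepdims=True)
    txt = text_features / np.linalg.norm(text_features, axis=-1, keepdims=True)
    logits = logit_scale * img @ txt.T
    logits -= logits.max(axis=-1, keepdims=True)  # numerical stability
    probs = np.exp(logits)
    return probs / probs.sum(axis=-1, keepdims=True)


# Toy 2-d features: the image points in the same direction as the first label
probs = zero_shot_softmax(np.array([[1.0, 0.0]]),
                          np.array([[1.0, 0.0], [0.0, 1.0]]),
                          logit_scale=10.0)
print(probs)
```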

## Citation

```bibtex

@article{fang2023data,

title={Data Filtering Networks},

author={Fang, Alex and Jose, Albin Madappally and Jain, Amit and Schmidt, Ludwig and Toshev, Alexander and Shankar, Vaishaal},

journal={arXiv preprint arXiv:2309.17425},

year={2023}

}

``` |

hkunlp/instructor-large | hkunlp | "2023-04-21T06:04:33Z" | 281,431 | 491 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"t5",

"text-embedding",

"embeddings",

"information-retrieval",

"beir",

"text-classification",

"language-model",

"text-clustering",

"text-semantic-similarity",

"text-evaluation",

"prompt-retrieval",

"text-reranking",

"feature-extraction",

"sentence-similarity",

"transformers",

"English",

"Sentence Similarity",

"natural_questions",

"ms_marco",

"fever",

"hotpot_qa",

"mteb",

"en",

"arxiv:2212.09741",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"region:us"

] | sentence-similarity | "2022-12-20T05:31:06Z" | ---

pipeline_tag: sentence-similarity

tags:

- text-embedding

- embeddings

- information-retrieval

- beir

- text-classification

- language-model

- text-clustering

- text-semantic-similarity

- text-evaluation

- prompt-retrieval

- text-reranking

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

- t5

- English

- Sentence Similarity

- natural_questions

- ms_marco

- fever

- hotpot_qa

- mteb

language: en

inference: false

license: apache-2.0

model-index:

- name: INSTRUCTOR

results:

- task:

type: Classification

dataset:

type: mteb/amazon_counterfactual

name: MTEB AmazonCounterfactualClassification (en)

config: en

split: test

revision: e8379541af4e31359cca9fbcf4b00f2671dba205

metrics:

- type: accuracy

value: 88.13432835820896

- type: ap

value: 59.298209334395665

- type: f1

value: 83.31769058643586

- task:

type: Classification

dataset:

type: mteb/amazon_polarity

name: MTEB AmazonPolarityClassification

config: default

split: test

revision: e2d317d38cd51312af73b3d32a06d1a08b442046

metrics:

- type: accuracy

value: 91.526375

- type: ap

value: 88.16327709705504

- type: f1

value: 91.51095801287843

- task:

type: Classification

dataset:

type: mteb/amazon_reviews_multi

name: MTEB AmazonReviewsClassification (en)

config: en

split: test

revision: 1399c76144fd37290681b995c656ef9b2e06e26d

metrics:

- type: accuracy

value: 47.856

- type: f1

value: 45.41490917650942

- task:

type: Retrieval

dataset:

type: arguana

name: MTEB ArguAna

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 31.223

- type: map_at_10

value: 47.947

- type: map_at_100

value: 48.742000000000004

- type: map_at_1000

value: 48.745

- type: map_at_3

value: 43.137

- type: map_at_5

value: 45.992

- type: mrr_at_1

value: 32.432

- type: mrr_at_10

value: 48.4

- type: mrr_at_100

value: 49.202

- type: mrr_at_1000

value: 49.205

- type: mrr_at_3

value: 43.551

- type: mrr_at_5

value: 46.467999999999996

- type: ndcg_at_1

value: 31.223

- type: ndcg_at_10

value: 57.045

- type: ndcg_at_100

value: 60.175

- type: ndcg_at_1000

value: 60.233000000000004

- type: ndcg_at_3

value: 47.171

- type: ndcg_at_5

value: 52.322

- type: precision_at_1

value: 31.223

- type: precision_at_10

value: 8.599

- type: precision_at_100

value: 0.991

- type: precision_at_1000

value: 0.1

- type: precision_at_3

value: 19.63

- type: precision_at_5

value: 14.282

- type: recall_at_1

value: 31.223

- type: recall_at_10

value: 85.989

- type: recall_at_100

value: 99.075

- type: recall_at_1000

value: 99.502

- type: recall_at_3

value: 58.89

- type: recall_at_5

value: 71.408

- task:

type: Clustering

dataset:

type: mteb/arxiv-clustering-p2p

name: MTEB ArxivClusteringP2P

config: default

split: test

revision: a122ad7f3f0291bf49cc6f4d32aa80929df69d5d

metrics:

- type: v_measure

value: 43.1621946393635

- task:

type: Clustering

dataset:

type: mteb/arxiv-clustering-s2s

name: MTEB ArxivClusteringS2S

config: default

split: test

revision: f910caf1a6075f7329cdf8c1a6135696f37dbd53

metrics:

- type: v_measure

value: 32.56417132407894

- task:

type: Reranking

dataset:

type: mteb/askubuntudupquestions-reranking

name: MTEB AskUbuntuDupQuestions

config: default

split: test

revision: 2000358ca161889fa9c082cb41daa8dcfb161a54

metrics:

- type: map

value: 64.29539304390207

- type: mrr

value: 76.44484017060196

- task:

type: STS

dataset:

type: mteb/biosses-sts

name: MTEB BIOSSES

config: default

split: test

revision: d3fb88f8f02e40887cd149695127462bbcf29b4a

metrics:

- type: cos_sim_spearman

value: 84.38746499431112

- task:

type: Classification

dataset:

type: mteb/banking77

name: MTEB Banking77Classification

config: default

split: test

revision: 0fd18e25b25c072e09e0d92ab615fda904d66300

metrics:

- type: accuracy

value: 78.51298701298701

- type: f1

value: 77.49041754069235

- task:

type: Clustering

dataset:

type: mteb/biorxiv-clustering-p2p

name: MTEB BiorxivClusteringP2P

config: default

split: test

revision: 65b79d1d13f80053f67aca9498d9402c2d9f1f40

metrics:

- type: v_measure

value: 37.61848554098577

- task:

type: Clustering

dataset:

type: mteb/biorxiv-clustering-s2s

name: MTEB BiorxivClusteringS2S

config: default

split: test

revision: 258694dd0231531bc1fd9de6ceb52a0853c6d908

metrics:

- type: v_measure

value: 31.32623280148178

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackAndroidRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 35.803000000000004

- type: map_at_10

value: 48.848

- type: map_at_100

value: 50.5

- type: map_at_1000

value: 50.602999999999994

- type: map_at_3

value: 45.111000000000004

- type: map_at_5

value: 47.202

- type: mrr_at_1

value: 44.635000000000005

- type: mrr_at_10

value: 55.593

- type: mrr_at_100

value: 56.169999999999995

- type: mrr_at_1000

value: 56.19499999999999

- type: mrr_at_3

value: 53.361999999999995

- type: mrr_at_5

value: 54.806999999999995

- type: ndcg_at_1

value: 44.635000000000005

- type: ndcg_at_10

value: 55.899

- type: ndcg_at_100

value: 60.958

- type: ndcg_at_1000

value: 62.302

- type: ndcg_at_3

value: 51.051

- type: ndcg_at_5

value: 53.351000000000006

- type: precision_at_1

value: 44.635000000000005

- type: precision_at_10

value: 10.786999999999999

- type: precision_at_100

value: 1.6580000000000001

- type: precision_at_1000

value: 0.213

- type: precision_at_3

value: 24.893

- type: precision_at_5

value: 17.740000000000002

- type: recall_at_1

value: 35.803000000000004

- type: recall_at_10

value: 68.657

- type: recall_at_100

value: 89.77199999999999

- type: recall_at_1000

value: 97.67

- type: recall_at_3

value: 54.066

- type: recall_at_5

value: 60.788

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackEnglishRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 33.706

- type: map_at_10

value: 44.896

- type: map_at_100

value: 46.299

- type: map_at_1000

value: 46.44

- type: map_at_3

value: 41.721000000000004

- type: map_at_5

value: 43.486000000000004

- type: mrr_at_1

value: 41.592

- type: mrr_at_10

value: 50.529

- type: mrr_at_100

value: 51.22

- type: mrr_at_1000

value: 51.258

- type: mrr_at_3

value: 48.205999999999996

- type: mrr_at_5

value: 49.528

- type: ndcg_at_1

value: 41.592

- type: ndcg_at_10

value: 50.77199999999999

- type: ndcg_at_100

value: 55.383

- type: ndcg_at_1000

value: 57.288

- type: ndcg_at_3

value: 46.324

- type: ndcg_at_5

value: 48.346000000000004

- type: precision_at_1

value: 41.592

- type: precision_at_10

value: 9.516

- type: precision_at_100

value: 1.541

- type: precision_at_1000

value: 0.2

- type: precision_at_3

value: 22.399

- type: precision_at_5

value: 15.770999999999999

- type: recall_at_1

value: 33.706

- type: recall_at_10

value: 61.353

- type: recall_at_100

value: 80.182

- type: recall_at_1000

value: 91.896

- type: recall_at_3

value: 48.204

- type: recall_at_5

value: 53.89699999999999

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackGamingRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 44.424

- type: map_at_10

value: 57.169000000000004

- type: map_at_100

value: 58.202

- type: map_at_1000

value: 58.242000000000004

- type: map_at_3

value: 53.825

- type: map_at_5

value: 55.714

- type: mrr_at_1

value: 50.470000000000006

- type: mrr_at_10

value: 60.489000000000004

- type: mrr_at_100

value: 61.096

- type: mrr_at_1000

value: 61.112

- type: mrr_at_3

value: 58.192

- type: mrr_at_5

value: 59.611999999999995

- type: ndcg_at_1

value: 50.470000000000006

- type: ndcg_at_10

value: 63.071999999999996

- type: ndcg_at_100

value: 66.964

- type: ndcg_at_1000

value: 67.659

- type: ndcg_at_3

value: 57.74399999999999

- type: ndcg_at_5

value: 60.367000000000004

- type: precision_at_1

value: 50.470000000000006

- type: precision_at_10

value: 10.019

- type: precision_at_100

value: 1.29

- type: precision_at_1000

value: 0.13899999999999998

- type: precision_at_3

value: 25.558999999999997

- type: precision_at_5

value: 17.467

- type: recall_at_1

value: 44.424

- type: recall_at_10

value: 77.02

- type: recall_at_100

value: 93.738

- type: recall_at_1000

value: 98.451

- type: recall_at_3

value: 62.888

- type: recall_at_5

value: 69.138

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackGisRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 26.294

- type: map_at_10

value: 34.503

- type: map_at_100

value: 35.641

- type: map_at_1000

value: 35.724000000000004

- type: map_at_3

value: 31.753999999999998

- type: map_at_5

value: 33.190999999999995

- type: mrr_at_1

value: 28.362

- type: mrr_at_10

value: 36.53

- type: mrr_at_100

value: 37.541000000000004

- type: mrr_at_1000

value: 37.602000000000004

- type: mrr_at_3

value: 33.917

- type: mrr_at_5

value: 35.358000000000004

- type: ndcg_at_1

value: 28.362

- type: ndcg_at_10

value: 39.513999999999996

- type: ndcg_at_100

value: 44.815

- type: ndcg_at_1000

value: 46.839

- type: ndcg_at_3

value: 34.02

- type: ndcg_at_5

value: 36.522

- type: precision_at_1

value: 28.362

- type: precision_at_10

value: 6.101999999999999

- type: precision_at_100

value: 0.9129999999999999

- type: precision_at_1000

value: 0.11399999999999999

- type: precision_at_3

value: 14.161999999999999

- type: precision_at_5

value: 9.966

- type: recall_at_1

value: 26.294

- type: recall_at_10

value: 53.098

- type: recall_at_100

value: 76.877

- type: recall_at_1000

value: 91.834

- type: recall_at_3

value: 38.266

- type: recall_at_5

value: 44.287

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackMathematicaRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 16.407

- type: map_at_10

value: 25.185999999999996

- type: map_at_100

value: 26.533

- type: map_at_1000

value: 26.657999999999998

- type: map_at_3

value: 22.201999999999998

- type: map_at_5

value: 23.923

- type: mrr_at_1

value: 20.522000000000002

- type: mrr_at_10

value: 29.522

- type: mrr_at_100

value: 30.644

- type: mrr_at_1000

value: 30.713

- type: mrr_at_3

value: 26.679000000000002

- type: mrr_at_5

value: 28.483000000000004

- type: ndcg_at_1

value: 20.522000000000002

- type: ndcg_at_10

value: 30.656

- type: ndcg_at_100

value: 36.864999999999995

- type: ndcg_at_1000

value: 39.675

- type: ndcg_at_3

value: 25.319000000000003

- type: ndcg_at_5

value: 27.992

- type: precision_at_1

value: 20.522000000000002

- type: precision_at_10

value: 5.795999999999999

- type: precision_at_100

value: 1.027

- type: precision_at_1000

value: 0.13999999999999999

- type: precision_at_3

value: 12.396

- type: precision_at_5

value: 9.328

- type: recall_at_1

value: 16.407

- type: recall_at_10

value: 43.164

- type: recall_at_100

value: 69.695

- type: recall_at_1000

value: 89.41900000000001

- type: recall_at_3

value: 28.634999999999998

- type: recall_at_5

value: 35.308

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackPhysicsRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 30.473

- type: map_at_10

value: 41.676

- type: map_at_100

value: 43.120999999999995

- type: map_at_1000

value: 43.230000000000004

- type: map_at_3

value: 38.306000000000004

- type: map_at_5

value: 40.355999999999995

- type: mrr_at_1

value: 37.536

- type: mrr_at_10

value: 47.643

- type: mrr_at_100

value: 48.508

- type: mrr_at_1000

value: 48.551

- type: mrr_at_3

value: 45.348

- type: mrr_at_5

value: 46.744

- type: ndcg_at_1

value: 37.536

- type: ndcg_at_10

value: 47.823

- type: ndcg_at_100

value: 53.395

- type: ndcg_at_1000

value: 55.271

- type: ndcg_at_3

value: 42.768

- type: ndcg_at_5

value: 45.373000000000005

- type: precision_at_1

value: 37.536

- type: precision_at_10

value: 8.681

- type: precision_at_100

value: 1.34

- type: precision_at_1000

value: 0.165

- type: precision_at_3

value: 20.468

- type: precision_at_5

value: 14.495

- type: recall_at_1

value: 30.473

- type: recall_at_10

value: 60.092999999999996

- type: recall_at_100

value: 82.733

- type: recall_at_1000

value: 94.875

- type: recall_at_3

value: 45.734

- type: recall_at_5

value: 52.691

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackProgrammersRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 29.976000000000003

- type: map_at_10

value: 41.097

- type: map_at_100

value: 42.547000000000004

- type: map_at_1000

value: 42.659000000000006

- type: map_at_3

value: 37.251

- type: map_at_5

value: 39.493

- type: mrr_at_1

value: 37.557

- type: mrr_at_10

value: 46.605000000000004

- type: mrr_at_100

value: 47.487

- type: mrr_at_1000

value: 47.54

- type: mrr_at_3

value: 43.721

- type: mrr_at_5

value: 45.411

- type: ndcg_at_1

value: 37.557

- type: ndcg_at_10

value: 47.449000000000005

- type: ndcg_at_100

value: 53.052

- type: ndcg_at_1000

value: 55.010999999999996

- type: ndcg_at_3

value: 41.439

- type: ndcg_at_5

value: 44.292

- type: precision_at_1

value: 37.557

- type: precision_at_10

value: 8.847

- type: precision_at_100

value: 1.357

- type: precision_at_1000

value: 0.16999999999999998

- type: precision_at_3

value: 20.091

- type: precision_at_5

value: 14.384

- type: recall_at_1

value: 29.976000000000003

- type: recall_at_10

value: 60.99099999999999

- type: recall_at_100

value: 84.245

- type: recall_at_1000

value: 96.97200000000001

- type: recall_at_3

value: 43.794

- type: recall_at_5

value: 51.778999999999996

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 28.099166666666665

- type: map_at_10

value: 38.1365

- type: map_at_100

value: 39.44491666666667

- type: map_at_1000

value: 39.55858333333334

- type: map_at_3

value: 35.03641666666666

- type: map_at_5

value: 36.79833333333334

- type: mrr_at_1

value: 33.39966666666667

- type: mrr_at_10

value: 42.42583333333333

- type: mrr_at_100

value: 43.28575

- type: mrr_at_1000

value: 43.33741666666667

- type: mrr_at_3

value: 39.94975

- type: mrr_at_5

value: 41.41633333333334

- type: ndcg_at_1

value: 33.39966666666667

- type: ndcg_at_10

value: 43.81741666666667

- type: ndcg_at_100

value: 49.08166666666667

- type: ndcg_at_1000

value: 51.121166666666674

- type: ndcg_at_3

value: 38.73575

- type: ndcg_at_5

value: 41.18158333333333

- type: precision_at_1

value: 33.39966666666667

- type: precision_at_10

value: 7.738916666666667

- type: precision_at_100

value: 1.2265833333333331

- type: precision_at_1000

value: 0.15983333333333336

- type: precision_at_3

value: 17.967416666666665

- type: precision_at_5

value: 12.78675

- type: recall_at_1

value: 28.099166666666665

- type: recall_at_10

value: 56.27049999999999

- type: recall_at_100

value: 78.93291666666667

- type: recall_at_1000

value: 92.81608333333334

- type: recall_at_3

value: 42.09775

- type: recall_at_5

value: 48.42533333333334

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackStatsRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 23.663

- type: map_at_10

value: 30.377

- type: map_at_100

value: 31.426

- type: map_at_1000

value: 31.519000000000002

- type: map_at_3

value: 28.069

- type: map_at_5

value: 29.256999999999998

- type: mrr_at_1

value: 26.687

- type: mrr_at_10

value: 33.107

- type: mrr_at_100

value: 34.055

- type: mrr_at_1000

value: 34.117999999999995

- type: mrr_at_3

value: 31.058000000000003

- type: mrr_at_5

value: 32.14

- type: ndcg_at_1

value: 26.687

- type: ndcg_at_10

value: 34.615

- type: ndcg_at_100

value: 39.776

- type: ndcg_at_1000

value: 42.05

- type: ndcg_at_3

value: 30.322

- type: ndcg_at_5

value: 32.157000000000004

- type: precision_at_1

value: 26.687

- type: precision_at_10

value: 5.491

- type: precision_at_100

value: 0.877

- type: precision_at_1000

value: 0.11499999999999999

- type: precision_at_3

value: 13.139000000000001

- type: precision_at_5

value: 9.049

- type: recall_at_1

value: 23.663

- type: recall_at_10

value: 45.035

- type: recall_at_100

value: 68.554

- type: recall_at_1000

value: 85.077

- type: recall_at_3

value: 32.982

- type: recall_at_5

value: 37.688

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackTexRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 17.403

- type: map_at_10

value: 25.197000000000003

- type: map_at_100

value: 26.355

- type: map_at_1000

value: 26.487

- type: map_at_3

value: 22.733

- type: map_at_5

value: 24.114

- type: mrr_at_1

value: 21.37

- type: mrr_at_10

value: 29.091

- type: mrr_at_100

value: 30.018

- type: mrr_at_1000

value: 30.096

- type: mrr_at_3

value: 26.887

- type: mrr_at_5

value: 28.157

- type: ndcg_at_1

value: 21.37

- type: ndcg_at_10

value: 30.026000000000003

- type: ndcg_at_100

value: 35.416

- type: ndcg_at_1000

value: 38.45

- type: ndcg_at_3

value: 25.764

- type: ndcg_at_5

value: 27.742

- type: precision_at_1

value: 21.37

- type: precision_at_10

value: 5.609

- type: precision_at_100

value: 0.9860000000000001

- type: precision_at_1000

value: 0.14300000000000002

- type: precision_at_3

value: 12.423

- type: precision_at_5

value: 9.009

- type: recall_at_1

value: 17.403

- type: recall_at_10

value: 40.573

- type: recall_at_100

value: 64.818

- type: recall_at_1000

value: 86.53699999999999

- type: recall_at_3

value: 28.493000000000002

- type: recall_at_5

value: 33.660000000000004

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackUnixRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 28.639

- type: map_at_10

value: 38.951

- type: map_at_100

value: 40.238

- type: map_at_1000

value: 40.327

- type: map_at_3

value: 35.842

- type: map_at_5

value: 37.617

- type: mrr_at_1

value: 33.769

- type: mrr_at_10

value: 43.088

- type: mrr_at_100

value: 44.03

- type: mrr_at_1000

value: 44.072

- type: mrr_at_3

value: 40.656

- type: mrr_at_5

value: 42.138999999999996

- type: ndcg_at_1

value: 33.769

- type: ndcg_at_10

value: 44.676

- type: ndcg_at_100

value: 50.416000000000004

- type: ndcg_at_1000

value: 52.227999999999994

- type: ndcg_at_3

value: 39.494

- type: ndcg_at_5

value: 42.013

- type: precision_at_1

value: 33.769

- type: precision_at_10

value: 7.668

- type: precision_at_100

value: 1.18

- type: precision_at_1000

value: 0.145

- type: precision_at_3

value: 18.221

- type: precision_at_5

value: 12.966

- type: recall_at_1

value: 28.639

- type: recall_at_10

value: 57.687999999999995

- type: recall_at_100

value: 82.541

- type: recall_at_1000

value: 94.896

- type: recall_at_3

value: 43.651

- type: recall_at_5

value: 49.925999999999995

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackWebmastersRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 29.57

- type: map_at_10

value: 40.004

- type: map_at_100

value: 41.75

- type: map_at_1000

value: 41.97

- type: map_at_3

value: 36.788

- type: map_at_5

value: 38.671

- type: mrr_at_1

value: 35.375

- type: mrr_at_10

value: 45.121

- type: mrr_at_100

value: 45.994

- type: mrr_at_1000

value: 46.04

- type: mrr_at_3

value: 42.227

- type: mrr_at_5

value: 43.995

- type: ndcg_at_1

value: 35.375

- type: ndcg_at_10

value: 46.392

- type: ndcg_at_100

value: 52.196

- type: ndcg_at_1000

value: 54.274

- type: ndcg_at_3

value: 41.163

- type: ndcg_at_5

value: 43.813

- type: precision_at_1

value: 35.375

- type: precision_at_10

value: 8.676

- type: precision_at_100

value: 1.678

- type: precision_at_1000

value: 0.253

- type: precision_at_3

value: 19.104

- type: precision_at_5

value: 13.913

- type: recall_at_1

value: 29.57

- type: recall_at_10

value: 58.779

- type: recall_at_100

value: 83.337

- type: recall_at_1000

value: 95.979

- type: recall_at_3

value: 44.005

- type: recall_at_5

value: 50.975

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackWordpressRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 20.832

- type: map_at_10

value: 29.733999999999998

- type: map_at_100

value: 30.727

- type: map_at_1000

value: 30.843999999999998

- type: map_at_3

value: 26.834999999999997

- type: map_at_5

value: 28.555999999999997

- type: mrr_at_1

value: 22.921

- type: mrr_at_10

value: 31.791999999999998

- type: mrr_at_100

value: 32.666000000000004

- type: mrr_at_1000

value: 32.751999999999995

- type: mrr_at_3

value: 29.144

- type: mrr_at_5

value: 30.622

- type: ndcg_at_1

value: 22.921

- type: ndcg_at_10

value: 34.915

- type: ndcg_at_100

value: 39.744

- type: ndcg_at_1000

value: 42.407000000000004

- type: ndcg_at_3

value: 29.421000000000003

- type: ndcg_at_5

value: 32.211

- type: precision_at_1

value: 22.921

- type: precision_at_10

value: 5.675

- type: precision_at_100

value: 0.872

- type: precision_at_1000

value: 0.121

- type: precision_at_3

value: 12.753999999999998

- type: precision_at_5

value: 9.353

- type: recall_at_1

value: 20.832

- type: recall_at_10

value: 48.795

- type: recall_at_100

value: 70.703

- type: recall_at_1000

value: 90.187

- type: recall_at_3

value: 34.455000000000005

- type: recall_at_5

value: 40.967

- task:

type: Retrieval

dataset:

type: climate-fever

name: MTEB ClimateFEVER

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 10.334

- type: map_at_10

value: 19.009999999999998

- type: map_at_100

value: 21.129

- type: map_at_1000

value: 21.328

- type: map_at_3

value: 15.152

- type: map_at_5

value: 17.084

- type: mrr_at_1

value: 23.453

- type: mrr_at_10

value: 36.099

- type: mrr_at_100

value: 37.069

- type: mrr_at_1000

value: 37.104

- type: mrr_at_3

value: 32.096000000000004

- type: mrr_at_5

value: 34.451

- type: ndcg_at_1

value: 23.453

- type: ndcg_at_10

value: 27.739000000000004

- type: ndcg_at_100

value: 35.836

- type: ndcg_at_1000

value: 39.242

- type: ndcg_at_3

value: 21.263

- type: ndcg_at_5

value: 23.677

- type: precision_at_1

value: 23.453

- type: precision_at_10

value: 9.199

- type: precision_at_100

value: 1.791

- type: precision_at_1000

value: 0.242

- type: precision_at_3

value: 16.2

- type: precision_at_5

value: 13.147

- type: recall_at_1

value: 10.334

- type: recall_at_10

value: 35.177

- type: recall_at_100

value: 63.009

- type: recall_at_1000

value: 81.938

- type: recall_at_3

value: 19.914

- type: recall_at_5

value: 26.077

- task:

type: Retrieval

dataset:

type: dbpedia-entity

name: MTEB DBPedia

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 8.212

- type: map_at_10

value: 17.386

- type: map_at_100

value: 24.234

- type: map_at_1000

value: 25.724999999999998

- type: map_at_3

value: 12.727

- type: map_at_5

value: 14.785

- type: mrr_at_1

value: 59.25

- type: mrr_at_10

value: 68.687

- type: mrr_at_100

value: 69.133

- type: mrr_at_1000

value: 69.14099999999999

- type: mrr_at_3

value: 66.917

- type: mrr_at_5

value: 67.742

- type: ndcg_at_1

value: 48.625

- type: ndcg_at_10

value: 36.675999999999995

- type: ndcg_at_100

value: 41.543

- type: ndcg_at_1000

value: 49.241

- type: ndcg_at_3

value: 41.373

- type: ndcg_at_5

value: 38.707

- type: precision_at_1

value: 59.25

- type: precision_at_10

value: 28.525

- type: precision_at_100

value: 9.027000000000001

- type: precision_at_1000

value: 1.8339999999999999

- type: precision_at_3

value: 44.833

- type: precision_at_5

value: 37.35

- type: recall_at_1

value: 8.212

- type: recall_at_10

value: 23.188

- type: recall_at_100

value: 48.613

- type: recall_at_1000

value: 73.093

- type: recall_at_3

value: 14.419

- type: recall_at_5

value: 17.798

- task:

type: Classification

dataset:

type: mteb/emotion

name: MTEB EmotionClassification

config: default

split: test

revision: 4f58c6b202a23cf9a4da393831edf4f9183cad37

metrics:

- type: accuracy

value: 52.725

- type: f1

value: 46.50743309855908

- task:

type: Retrieval

dataset:

type: fever

name: MTEB FEVER

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 55.086

- type: map_at_10

value: 66.914

- type: map_at_100

value: 67.321

- type: map_at_1000

value: 67.341

- type: map_at_3

value: 64.75800000000001

- type: map_at_5

value: 66.189

- type: mrr_at_1

value: 59.28600000000001

- type: mrr_at_10

value: 71.005

- type: mrr_at_100

value: 71.304

- type: mrr_at_1000

value: 71.313

- type: mrr_at_3

value: 69.037

- type: mrr_at_5

value: 70.35

- type: ndcg_at_1

value: 59.28600000000001

- type: ndcg_at_10

value: 72.695

- type: ndcg_at_100

value: 74.432

- type: ndcg_at_1000

value: 74.868

- type: ndcg_at_3

value: 68.72200000000001

- type: ndcg_at_5

value: 71.081

- type: precision_at_1

value: 59.28600000000001

- type: precision_at_10

value: 9.499

- type: precision_at_100

value: 1.052

- type: precision_at_1000

value: 0.11100000000000002

- type: precision_at_3

value: 27.503

- type: precision_at_5

value: 17.854999999999997

- type: recall_at_1

value: 55.086

- type: recall_at_10

value: 86.453

- type: recall_at_100

value: 94.028

- type: recall_at_1000

value: 97.052

- type: recall_at_3

value: 75.821

- type: recall_at_5

value: 81.6

- task:

type: Retrieval

dataset:

type: fiqa

name: MTEB FiQA2018

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 22.262999999999998

- type: map_at_10

value: 37.488

- type: map_at_100

value: 39.498

- type: map_at_1000

value: 39.687

- type: map_at_3

value: 32.529

- type: map_at_5

value: 35.455

- type: mrr_at_1

value: 44.907000000000004

- type: mrr_at_10

value: 53.239000000000004

- type: mrr_at_100

value: 54.086

- type: mrr_at_1000

value: 54.122

- type: mrr_at_3

value: 51.235

- type: mrr_at_5

value: 52.415

- type: ndcg_at_1

value: 44.907000000000004

- type: ndcg_at_10

value: 45.446

- type: ndcg_at_100

value: 52.429

- type: ndcg_at_1000

value: 55.169000000000004

- type: ndcg_at_3

value: 41.882000000000005

- type: ndcg_at_5

value: 43.178

- type: precision_at_1

value: 44.907000000000004

- type: precision_at_10

value: 12.931999999999999

- type: precision_at_100

value: 2.025

- type: precision_at_1000

value: 0.248

- type: precision_at_3

value: 28.652

- type: precision_at_5

value: 21.204

- type: recall_at_1

value: 22.262999999999998

- type: recall_at_10

value: 52.447

- type: recall_at_100

value: 78.045

- type: recall_at_1000

value: 94.419

- type: recall_at_3

value: 38.064

- type: recall_at_5

value: 44.769

- task:

type: Retrieval

dataset:

type: hotpotqa

name: MTEB HotpotQA

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 32.519

- type: map_at_10

value: 45.831

- type: map_at_100

value: 46.815

- type: map_at_1000

value: 46.899

- type: map_at_3

value: 42.836