modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |

|---|---|---|---|---|---|---|---|---|---|

Qwen/Qwen1.5-0.5B | Qwen | "2024-04-05T10:38:41Z" | 391,680 | 143 | transformers | [

"transformers",

"safetensors",

"qwen2",

"text-generation",

"pretrained",

"conversational",

"en",

"arxiv:2309.16609",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | "2024-01-22T16:30:10Z" | ---

license: other

license_name: tongyi-qianwen-research

license_link: >-

https://huggingface.co/Qwen/Qwen1.5-0.5B/blob/main/LICENSE

language:

- en

pipeline_tag: text-generation

tags:

- pretrained

---

# Qwen1.5-0.5B

## Introduction

Qwen1.5 is the beta version of Qwen2, a transformer-based decoder-only language model pretrained on a large amount of data. In comparison with the previously released Qwen, the improvements include:

* 8 model sizes, including 0.5B, 1.8B, 4B, 7B, 14B, 32B and 72B dense models, and an MoE model of 14B with 2.7B activated;

* Significant performance improvement in Chat models;

* Multilingual support of both base and chat models;

* Stable support of 32K context length for models of all sizes;

* No need for `trust_remote_code`.

For more details, please refer to our [blog post](https://qwenlm.github.io/blog/qwen1.5/) and [GitHub repo](https://github.com/QwenLM/Qwen1.5).

## Model Details

Qwen1.5 is a language model series including decoder language models of different model sizes. For each size, we release the base language model and the aligned chat model. It is based on the Transformer architecture with SwiGLU activation, attention QKV bias, grouped-query attention, a mixture of sliding window attention and full attention, etc. Additionally, we have an improved tokenizer adaptive to multiple natural languages and code. For the beta version, we have temporarily not included GQA or the mixture of SWA and full attention.

## Requirements

The code of Qwen1.5 is included in recent versions of Hugging Face Transformers. We advise you to install `transformers>=4.37.0`; otherwise you might encounter the following error:

```

KeyError: 'qwen2'

```
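You can check ahead of time whether an installed Transformers release meets this requirement. The minimal sketch below compares version strings using only the standard library. The helper names (`parse_release`, `supports_qwen2`) are hypothetical. The parser deliberately drops pre-release suffixes such as `.dev0`, so treat it as a rough check rather than full PEP 440 version handling.

```python
import re
from importlib import metadata

# Minimum Transformers release stated above for the "qwen2" architecture.
MIN_TRANSFORMERS = (4, 37, 0)


def parse_release(version: str) -> tuple[int, ...]:
    """Return the leading numeric release segment, e.g. "4.37.2" -> (4, 37, 2).

    Simplification: suffixes such as ".dev0" or "rc1" are dropped, so
    "4.37.0.dev0" is treated like "4.37.0".
    """
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def supports_qwen2(version: str) -> bool:
    """True if `version` is at least MIN_TRANSFORMERS (missing parts count as 0)."""
    release = parse_release(version)
    padded = release + (0,) * max(0, len(MIN_TRANSFORMERS) - len(release))
    return padded >= MIN_TRANSFORMERS


if __name__ == "__main__":
    try:
        installed = metadata.version("transformers")
    except metadata.PackageNotFoundError:
        print("transformers is not installed")
    else:
        status = "OK" if supports_qwen2(installed) else "upgrade to transformers>=4.37.0"
        print(f"transformers {installed}: {status}")
```

Padding the parsed tuple matters: in Python, `(4, 37) < (4, 37, 0)`. Without padding, a short version string like "4.37" would wrongly fail the check.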

## Usage

We do not advise using base language models for text generation. Instead, you can apply post-training (e.g., SFT, RLHF, or continued pretraining) to this model.

## Citation

If you find our work helpful, feel free to cite us.

```

@article{qwen,

title={Qwen Technical Report},

author={Jinze Bai and Shuai Bai and Yunfei Chu and Zeyu Cui and Kai Dang and Xiaodong Deng and Yang Fan and Wenbin Ge and Yu Han and Fei Huang and Binyuan Hui and Luo Ji and Mei Li and Junyang Lin and Runji Lin and Dayiheng Liu and Gao Liu and Chengqiang Lu and Keming Lu and Jianxin Ma and Rui Men and Xingzhang Ren and Xuancheng Ren and Chuanqi Tan and Sinan Tan and Jianhong Tu and Peng Wang and Shijie Wang and Wei Wang and Shengguang Wu and Benfeng Xu and Jin Xu and An Yang and Hao Yang and Jian Yang and Shusheng Yang and Yang Yao and Bowen Yu and Hongyi Yuan and Zheng Yuan and Jianwei Zhang and Xingxuan Zhang and Yichang Zhang and Zhenru Zhang and Chang Zhou and Jingren Zhou and Xiaohuan Zhou and Tianhang Zhu},

journal={arXiv preprint arXiv:2309.16609},

year={2023}

}

``` |

google/paligemma-3b-mix-224 | google | "2024-07-19T12:09:50Z" | 390,935 | 60 | transformers | [

"transformers",

"safetensors",

"paligemma",

"image-text-to-text",

"arxiv:2310.09199",

"arxiv:2303.15343",

"arxiv:2403.08295",

"arxiv:1706.03762",

"arxiv:2010.11929",

"arxiv:2209.06794",

"arxiv:2209.04372",

"arxiv:2103.01913",

"arxiv:2205.12522",

"arxiv:2110.11624",

"arxiv:2108.03353",

"arxiv:2010.04295",

"arxiv:2401.06209",

"arxiv:2305.10355",

"arxiv:2203.10244",

"arxiv:1810.12440",

"arxiv:1905.13648",

"arxiv:1608.00272",

"arxiv:1908.04913",

"arxiv:2407.07726",

"license:gemma",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | image-text-to-text | "2024-05-12T23:03:44Z" | ---

library_name: transformers

license: gemma

pipeline_tag: image-text-to-text

extra_gated_heading: Access PaliGemma on Hugging Face

extra_gated_prompt: To access PaliGemma on Hugging Face, you’re required to review

and agree to Google’s usage license. To do this, please ensure you’re logged-in

to Hugging Face and click below. Requests are processed immediately.

extra_gated_button_content: Acknowledge license

---

# PaliGemma model card

**Model page:** [PaliGemma](https://ai.google.dev/gemma/docs/paligemma)

Transformers PaliGemma 3B weights, fine-tuned with 224×224 input images and 256-token input/output text sequences on a mixture of downstream academic datasets. The models are available in float32, bfloat16 and float16 formats for research purposes only.

**Resources and technical documentation:**

* [Responsible Generative AI Toolkit](https://ai.google.dev/responsible)

* [PaliGemma on Kaggle](https://www.kaggle.com/models/google/paligemma)

* [PaliGemma on Vertex Model Garden](https://console.cloud.google.com/vertex-ai/publishers/google/model-garden/363)

**Terms of Use:** [Terms](https://www.kaggle.com/models/google/paligemma/license/consent/verify/huggingface?returnModelRepoId=google/paligemma-3b-mix-224)

**Authors:** Google

## Model information

### Model summary

#### Description

PaliGemma is a versatile and lightweight vision-language model (VLM) inspired by [PaLI-3](https://arxiv.org/abs/2310.09199) and based on open components such as the [SigLIP vision model](https://arxiv.org/abs/2303.15343) and the [Gemma language model](https://arxiv.org/abs/2403.08295). It takes both image and text as input and generates text as output, supporting multiple languages. It is designed for class-leading fine-tune performance on a wide range of vision-language tasks such as image and short video captioning, visual question answering, text reading, object detection and object segmentation.

#### Model architecture

PaliGemma is the composition of a [Transformer decoder](https://arxiv.org/abs/1706.03762) and a [Vision Transformer image encoder](https://arxiv.org/abs/2010.11929), with a total of 3 billion params. The text decoder is initialized from [Gemma-2B](https://www.kaggle.com/models/google/gemma). The image encoder is initialized from [SigLIP-So400m/14](https://colab.research.google.com/github/google-research/big_vision/blob/main/big_vision/configs/proj/image_text/SigLIP_demo.ipynb).

PaliGemma is trained following the PaLI-3 recipes.
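The `/14` in SigLIP-So400m/14 is the ViT patch size. The number of image tokens the encoder passes to the decoder therefore follows from the input resolution: a square image with a side of R pixels yields (R/14)^2 patches. The short sketch below does that arithmetic for the 224, 448 and 896 resolutions that appear on this card. It assumes one token per non-overlapping patch and does not model any other preprocessing. The helper name is illustrative.

```python
PATCH_SIZE = 14  # from the "So400m/14" encoder name: 14x14-pixel patches


def num_image_tokens(resolution: int, patch_size: int = PATCH_SIZE) -> int:
    """Patches (one image token each) for a square resolution x resolution input."""
    if resolution % patch_size:
        raise ValueError("resolution must be a multiple of the patch size")
    side = resolution // patch_size
    return side * side


for res in (224, 448, 896):
    # prints 256, 1024 and 4096 image tokens respectively
    print(f"{res}x{res}: {num_image_tokens(res)} image tokens")
```

The image sequence grows quadratically with resolution, so the 448 and 896 checkpoints are noticeably more expensive to run than the 224 one.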

#### Inputs and outputs

* **Input:** Image and text string, such as a prompt to caption the image, or a question.
* **Output:** Generated text in response to the input, such as a caption of the image, an answer to a question, a list of object bounding box coordinates, or segmentation codewords.
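Bounding boxes come back as text, so they must be parsed before use. The minimal sketch below assumes the location-token format used by PaliGemma's detection outputs; this format is not specified on this card. Each detected object is assumed to be four `<locNNNN>` tokens in the order y_min, x_min, y_max, x_max, each quantized into 1024 bins, followed by the label. Multiple objects are assumed to be separated by ` ; `. The `Box` type and `parse_detections` helper are hypothetical names for illustration.

```python
import re
from dataclasses import dataclass

LOC_BINS = 1024  # assumption: each coordinate is quantized into 1024 bins

# Four <locNNNN> tokens followed by a label that runs until ";" or the next token.
_DETECTION = re.compile(r"<loc(\d{4})><loc(\d{4})><loc(\d{4})><loc(\d{4})>\s*([^;<]+)")


@dataclass
class Box:
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def parse_detections(text: str, width: int, height: int) -> list[Box]:
    """Convert decoded detection text into pixel-space boxes for a width x height image."""
    boxes = []
    for match in _DETECTION.finditer(text):
        # Assumed token order: y_min, x_min, y_max, x_max (normalized by LOC_BINS).
        y0, x0, y1, x1 = (int(v) / LOC_BINS for v in match.groups()[:4])
        boxes.append(
            Box(
                label=match.group(5).strip(),
                x_min=x0 * width,
                y_min=y0 * height,
                x_max=x1 * width,
                y_max=y1 * height,
            )
        )
    return boxes


example = "<loc0256><loc0128><loc0768><loc0896> car ; <loc0512><loc0000><loc1023><loc0256> wheel"
for box in parse_detections(example, width=1024, height=512):
    print(box)
```

Check the token order and bin count against the model's documentation before relying on the coordinates. A mismatch would silently swap or rescale axes.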

### Model data

#### Pre-train datasets

PaliGemma is pre-trained on the following mixture of datasets:

* **WebLI:** [WebLI (Web Language Image)](https://arxiv.org/abs/2209.06794) is a web-scale multilingual image-text dataset built from the public web. A wide range of WebLI splits are used to acquire versatile model capabilities, such as visual semantic understanding, object localization, visually-situated text understanding, multilinguality, etc.
* **CC3M-35L:** Curated English image-alt_text pairs from webpages ([Sharma et al., 2018](https://aclanthology.org/P18-1238/)). We used the [Google Cloud Translation API](https://cloud.google.com/translate) to translate into 34 additional languages.
* **VQ²A-CC3M-35L/VQG-CC3M-35L:** A subset of VQ2A-CC3M ([Changpinyo et al., 2022a](https://aclanthology.org/2022.naacl-main.142/)), translated into the same additional 34 languages as CC3M-35L, using the [Google Cloud Translation API](https://cloud.google.com/translate).
* **OpenImages:** Detection and object-aware questions and answers ([Piergiovanni et al. 2022](https://arxiv.org/abs/2209.04372)) generated by handcrafted rules on the [OpenImages dataset].
* **WIT:** Images and texts collected from Wikipedia ([Srinivasan et al., 2021](https://arxiv.org/abs/2103.01913)).

[OpenImages dataset]: https://storage.googleapis.com/openimages/web/factsfigures_v7.html

#### Data responsibility filtering

The following filters are applied to WebLI, with the goal of training PaliGemma on clean data:

* **Pornographic image filtering:** This filter removes images deemed to be of pornographic nature.
* **Text safety filtering:** We identify and filter out images that are paired with unsafe text. Unsafe text is any text deemed to contain or be about CSAI, pornography, vulgarities, or that is otherwise offensive.
* **Text toxicity filtering:** We further use the [Perspective API](https://perspectiveapi.com/) to identify and filter out images that are paired with text deemed insulting, obscene, hateful or otherwise toxic.
* **Text personal information filtering:** We filtered certain personal information and other sensitive data using the [Cloud Data Loss Prevention (DLP) API](https://cloud.google.com/security/products/dlp) to protect the privacy of individuals. Identifiers such as social security numbers and [other sensitive information types] were removed.
* **Additional methods:** Filtering based on content quality and safety in line with our policies and practices.

[other sensitive information types]: https://cloud.google.com/sensitive-data-protection/docs/high-sensitivity-infotypes-reference?_gl=1*jg604m*_ga*ODk5MzA3ODQyLjE3MTAzMzQ3NTk.*_ga_WH2QY8WWF5*MTcxMDUxNTkxMS4yLjEuMTcxMDUxNjA2NC4wLjAuMA..&_ga=2.172110058.-899307842.1710334759

## How to Use

PaliGemma is a single-turn vision language model not meant for conversational use, and it works best when fine-tuned for a specific use case.

You can configure which task the model will solve by conditioning it with task prefixes, such as “detect” or “segment”. The pretrained models were trained in this fashion to imbue them with a rich set of capabilities (question answering, captioning, segmentation, etc.). However, they are not designed to be used directly, but to be transferred (by fine-tuning) to specific tasks using a similar prompt structure. For interactive testing, you can use the "mix" family of models, which have been fine-tuned on a mixture of tasks. To see model [google/paligemma-3b-mix-448](https://huggingface.co/google/paligemma-3b-mix-448) in action, check [this Space that uses the Transformers codebase](https://huggingface.co/spaces/big-vision/paligemma-hf).

Please refer to the [usage and limitations section](#usage-and-limitations) for intended use cases, or visit the [blog post](https://huggingface.co/blog/paligemma-google-vlm) for additional details and examples.
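Because the task is selected purely through the text prefix, it can help to build prompts in one place. The sketch below is a hypothetical helper, not part of Transformers:

* `caption <lang>` matches the Spanish-captioning example on this card.
* `detect` and `segment` are the prefixes named above.
* The `answer <lang> <question>` form and the ` ; ` separator between multiple objects are assumptions about PaliGemma's prompt conventions. Confirm them against the model documentation.

```python
def build_prompt(task: str, *, lang: str = "en", question: str = "", objects: tuple[str, ...] = ()) -> str:
    """Assemble a task-prefixed PaliGemma prompt (hypothetical helper)."""
    if task == "caption":
        return f"caption {lang}"
    if task == "answer":
        # Assumed form: "answer <lang> <question>"
        if not question:
            raise ValueError("the 'answer' task needs a question")
        return f"answer {lang} {question}"
    if task in ("detect", "segment"):
        if not objects:
            raise ValueError(f"the '{task}' task needs at least one object name")
        # Assumed convention: several objects are separated by " ; ".
        return f"{task} " + " ; ".join(objects)
    raise ValueError(f"unsupported task prefix: {task!r}")


print(build_prompt("caption", lang="es"))  # caption es
print(build_prompt("detect", objects=("car", "wheel")))  # detect car ; wheel
```

Keeping prompt construction in a single function also makes it easy to reuse exactly the same prefixes during fine-tuning and inference, which is what the transfer recipe above relies on.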

## Use in Transformers

The following snippets use model `google/paligemma-3b-mix-224` for reference purposes. The model in the repo you are browsing may have been trained for other tasks, so please make sure you use appropriate inputs for the task at hand.

### Running the default precision (`float32`) on CPU

```python
from transformers import AutoProcessor, PaliGemmaForConditionalGeneration
from PIL import Image
import requests
import torch

model_id = "google/paligemma-3b-mix-224"

url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/tasks/car.jpg?download=true"
image = Image.open(requests.get(url, stream=True).raw)

model = PaliGemmaForConditionalGeneration.from_pretrained(model_id).eval()
processor = AutoProcessor.from_pretrained(model_id)

# Instruct the model to create a caption in Spanish
prompt = "caption es"
model_inputs = processor(text=prompt, images=image, return_tensors="pt")
input_len = model_inputs["input_ids"].shape[-1]

with torch.inference_mode():
    generation = model.generate(**model_inputs, max_new_tokens=100, do_sample=False)
    # Keep only the newly generated tokens (drop the prompt)
    generation = generation[0][input_len:]
    decoded = processor.decode(generation, skip_special_tokens=True)
    print(decoded)
```

Output: `Un auto azul estacionado frente a un edificio.`

### Running other precisions on CUDA

For convenience, the repos contain revisions of the weights already converted to `bfloat16` and `float16`, so you can use them to reduce the download size and avoid casting on your local computer.

This is how you'd run `bfloat16` on an NVIDIA CUDA card:

```python
from transformers import AutoProcessor, PaliGemmaForConditionalGeneration
from PIL import Image
import requests
import torch

model_id = "google/paligemma-3b-mix-224"
device = "cuda:0"
dtype = torch.bfloat16

url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/tasks/car.jpg?download=true"
image = Image.open(requests.get(url, stream=True).raw)

# Load the pre-converted bfloat16 revision directly onto the GPU
model = PaliGemmaForConditionalGeneration.from_pretrained(
    model_id,
    torch_dtype=dtype,
    device_map=device,
    revision="bfloat16",
).eval()
processor = AutoProcessor.from_pretrained(model_id)

# Instruct the model to create a caption in Spanish
prompt = "caption es"
model_inputs = processor(text=prompt, images=image, return_tensors="pt").to(model.device)
input_len = model_inputs["input_ids"].shape[-1]

with torch.inference_mode():
    generation = model.generate(**model_inputs, max_new_tokens=100, do_sample=False)
    # Keep only the newly generated tokens (drop the prompt)
    generation = generation[0][input_len:]
    decoded = processor.decode(generation, skip_special_tokens=True)
    print(decoded)
```

### Loading in 4-bit / 8-bit

To run inference in 8-bit or 4-bit precision, you need to install `bitsandbytes` and `accelerate`:

```

pip install bitsandbytes accelerate

```

```python
from transformers import AutoProcessor, BitsAndBytesConfig, PaliGemmaForConditionalGeneration
from PIL import Image
import requests
import torch

model_id = "google/paligemma-3b-mix-224"
device = "cuda:0"

url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/tasks/car.jpg?download=true"
image = Image.open(requests.get(url, stream=True).raw)

# 8-bit weights; use BitsAndBytesConfig(load_in_4bit=True) for 4-bit instead
quantization_config = BitsAndBytesConfig(load_in_8bit=True)

model = PaliGemmaForConditionalGeneration.from_pretrained(
    model_id,
    quantization_config=quantization_config,
    device_map=device,
).eval()
processor = AutoProcessor.from_pretrained(model_id)

# Instruct the model to create a caption in Spanish
prompt = "caption es"
model_inputs = processor(text=prompt, images=image, return_tensors="pt").to(model.device)
input_len = model_inputs["input_ids"].shape[-1]

with torch.inference_mode():
    generation = model.generate(**model_inputs, max_new_tokens=100, do_sample=False)
    # Keep only the newly generated tokens (drop the prompt)
    generation = generation[0][input_len:]
    decoded = processor.decode(generation, skip_special_tokens=True)
    print(decoded)
```
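The weight footprint is roughly the parameter count times the bytes per parameter, which explains why the lower-precision options help. The sketch below applies that formula to the "3 billion params" figure from the architecture section. It is back-of-the-envelope arithmetic with several simplifications:

* It uses decimal gigabytes.
* It ignores activations, the KV cache and quantization metadata.
* It uses a round parameter count rather than the exact one.

```python
# Approximate bytes used to store one parameter in each format.
BYTES_PER_PARAM = {
    "float32": 4.0,
    "bfloat16": 2.0,
    "float16": 2.0,
    "int8": 1.0,
    "4bit": 0.5,
}


def approx_weight_gb(num_params: float, fmt: str) -> float:
    """Weights-only size in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


for fmt in BYTES_PER_PARAM:
    print(f"{fmt:>8}: ~{approx_weight_gb(3e9, fmt):.1f} GB")
```

With 3e9 parameters the estimates are about 12 GB in `float32`, 6 GB in `bfloat16`/`float16`, 3 GB in 8-bit and 1.5 GB in 4-bit. Real memory use at inference time will be higher.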

## Implementation information

### Hardware

PaliGemma was trained using the latest generation of Tensor Processing Unit (TPU) hardware (TPUv5e).

### Software

Training was done using [JAX](https://github.com/google/jax), [Flax](https://github.com/google/flax), [TFDS](https://github.com/tensorflow/datasets) and [`big_vision`](https://github.com/google-research/big_vision).

JAX allows researchers to take advantage of the latest generation of hardware, including TPUs, for faster and more efficient training of large models.

TFDS is used to access datasets and Flax is used for model architecture. The PaliGemma fine-tune code and inference code are released in the `big_vision` GitHub repository.

## Evaluation information

### Benchmark results

In order to verify the transferability of PaliGemma to a wide variety of academic tasks, we fine-tune the pretrained models on each task. Additionally, we train the mix model with a mixture of the transfer tasks. We report results at different resolutions to give an impression of which tasks benefit from increased resolution. Importantly, none of these tasks or datasets are part of the pretraining data mixture, and their images are explicitly removed from the web-scale pre-training data.

#### Single task (fine-tune on single task)

<table>

<tbody><tr>

<th>Benchmark<br>(train split)</th>

<th>Metric<br>(split)</th>

<th>pt-224</th>

<th>pt-448</th>

<th>pt-896</th>

</tr>

<tr>

<th>Captioning</th>

</tr>

<tr>

<td>

<a href="https://cocodataset.org/#home">COCO captions</a><br>(train+restval)

</td>

<td>CIDEr (val)</td>

<td>141.92</td>

<td>144.60</td>

</tr>

<tr>

<td>

<a href="https://nocaps.org/">NoCaps</a><br>(Eval of COCO<br>captions transfer)

</td>

<td>CIDEr (val)</td>

<td>121.72</td>

<td>123.58</td>

</tr>

<tr>

<td>

<a href="https://arxiv.org/pdf/2205.12522">COCO-35L</a><br>(train)

</td>

<td>CIDEr dev<br>(en/avg-34/avg)</td>

<td>

139.2<br>

115.8<br>

116.4

</td>

<td>

141.2<br>

118.0<br>

118.6

</td>

</tr>

<tr>

<td>

<a href="https://arxiv.org/pdf/2205.12522">XM3600</a><br>(Eval of COCO-35L transfer)

</td>

<td>CIDEr dev<br>(en/avg-34/avg)</td>

<td>

78.1<br>

41.3<br>

42.4

</td>

<td>

80.0<br>

41.9<br>

42.9

</td>

</tr>

<tr>

<td>

<a href="https://textvqa.org/textcaps/">TextCaps</a><br>(train)

</td>

<td>CIDEr (val)</td>

<td>127.48</td>

<td>153.94</td>

</tr>

<tr>

<td>

<a href="https://arxiv.org/abs/2110.11624">SciCap</a><br>(first sentence, no subfigure)<br>(train+val)

</td>

<td>CIDEr/BLEU-4<br>(test)</td>

<td>

162.25<br>

0.192<br>

</td>

<td>

181.49<br>

0.211<br>

</td>

</tr>

<tr>

<td>

<a href="https://arxiv.org/abs/2108.03353">Screen2words</a><br>(train+dev)

</td>

<td>CIDEr (test)</td>

<td>117.57</td>

<td>119.59</td>

</tr>

<tr>

<td>

<a href="https://arxiv.org/abs/2010.04295">Widget Captioning</a><br>(train+dev)

</td>

<td>CIDEr (test)</td>

<td>136.07</td>

<td>148.36</td>

</tr>

<tr>

<th>Question answering</th>

</tr>

<tr>

<td>

<a href="https://visualqa.org/index.html">VQAv2</a><br>(train+validation)

</td>

<td>Accuracy<br>(Test server - std)</td>

<td>83.19</td>

<td>85.64</td>

</tr>

<tr>

<td>

<a href="https://arxiv.org/abs/2401.06209">MMVP</a><br>(Eval of VQAv2 transfer)

</td>

<td>Paired Accuracy</td>

<td>47.33</td>

<td>45.33</td>

</tr>

<tr>

<td>

<a href="https://arxiv.org/abs/2305.10355">POPE</a><br>(Eval of VQAv2 transfer)

</td>

<td>Accuracy<br>(random/popular/<br>adversarial)</td>

<td>

87.80<br>

85.87<br>

84.27

</td>

<td>

88.23<br>

86.77<br>

85.90

</td>

</tr>

<tr>

<td>

<a href="https://okvqa.allenai.org/">OKVQA</a><br>(train)

</td>

<td>Accuracy (val)</td>

<td>63.54</td>

<td>63.15</td>

</tr>

<tr>

<td>

<a href="https://allenai.org/project/a-okvqa/home">A-OKVQA</a> (MC)<br>(train+val)

</td>

<td>Accuracy<br>(Test server)</td>

<td>76.37</td>

<td>76.90</td>

</tr>

<tr>

<td>

<a href="https://allenai.org/project/a-okvqa/home">A-OKVQA</a> (DA)<br>(train+val)

</td>

<td>Accuracy<br>(Test server)</td>

<td>61.85</td>

<td>63.22</td>

</tr>

<tr>

<td>

<a href="https://cs.stanford.edu/people/dorarad/gqa/about.html">GQA</a><br>(train_balanced+<br>val_balanced)

</td>

<td>Accuracy<br>(testdev balanced)</td>

<td>65.61</td>

<td>67.03</td>

</tr>

<tr>

<td>

<a href="https://aclanthology.org/2022.findings-acl.196/">xGQA</a><br>(Eval of GQA transfer)

</td>

<td>Mean Accuracy<br>(bn, de, en, id,<br>ko, pt, ru, zh)</td>

<td>58.37</td>

<td>59.07</td>

</tr>

<tr>

<td>

<a href="https://lil.nlp.cornell.edu/nlvr/">NLVR2</a><br>(train+dev)

</td>

<td>Accuracy (test)</td>

<td>90.02</td>

<td>88.93</td>

</tr>

<tr>

<td>

<a href="https://marvl-challenge.github.io/">MaRVL</a><br>(Eval of NLVR2 transfer)

</td>

<td>Mean Accuracy<br>(test)<br>(id, sw, ta, tr, zh)</td>

<td>80.57</td>

<td>76.78</td>

</tr>

<tr>

<td>

<a href="https://allenai.org/data/diagrams">AI2D</a><br>(train)

</td>

<td>Accuracy (test)</td>

<td>72.12</td>

<td>73.28</td>

</tr>

<tr>

<td>

<a href="https://scienceqa.github.io/">ScienceQA</a><br>(Img subset, no CoT)<br>(train+val)

</td>

<td>Accuracy (test)</td>

<td>95.39</td>

<td>95.93</td>

</tr>

<tr>

<td>

<a href="https://zenodo.org/records/6344334">RSVQA-LR</a> (Non numeric)<br>(train+val)

</td>

<td>Mean Accuracy<br>(test)</td>

<td>92.65</td>

<td>93.11</td>

</tr>

<tr>

<td>

<a href="https://zenodo.org/records/6344367">RSVQA-HR</a> (Non numeric)<br>(train+val)

</td>

<td>Mean Accuracy<br>(test/test2)</td>

<td>

92.61<br>

90.58

</td>

<td>

92.79<br>

90.54

</td>

</tr>

<tr>

<td>

<a href="https://arxiv.org/abs/2203.10244">ChartQA</a><br>(human+aug)x(train+val)

</td>

<td>Mean Relaxed<br>Accuracy<br>(test_human,<br>test_aug)</td>

<td>57.08</td>

<td>71.36</td>

</tr>

<tr>

<td>

<a href="https://vizwiz.org/tasks-and-datasets/vqa/">VizWiz VQA</a><br>(train+val)

</td>

<td>Accuracy<br>(Test server - std)</td>

<td>

73.7

</td>

<td>

75.52

</td>

</tr>

<tr>

<td>

<a href="https://arxiv.org/abs/1810.12440">TallyQA</a><br>(train)

</td>

<td>Accuracy<br>(test_simple/<br>test_complex)</td>

<td>

81.72<br>

69.56

</td>

<td>

84.86<br>

72.27

</td>

</tr>

<tr>

<td>

<a href="https://ocr-vqa.github.io/">OCR-VQA</a><br>(train+val)

</td>

<td>Accuracy (test)</td>

<td>72.32</td>

<td>74.61</td>

<td>74.93</td>

</tr>

<tr>

<td>

<a href="https://textvqa.org/">TextVQA</a><br>(train+val)

</td>

<td>Accuracy<br>(Test server - std)</td>

<td>55.47</td>

<td>73.15</td>

<td>76.48</td>

</tr>

<tr>

<td>

<a href="https://www.docvqa.org/">DocVQA</a><br>(train+val)

</td>

<td>ANLS (Test server)</td>

<td>43.74</td>

<td>78.02</td>

<td>84.77</td>

</tr>

<tr>

<td>

<a href="https://openaccess.thecvf.com/content/WACV2022/papers/Mathew_InfographicVQA_WACV_2022_paper.pdf">Infographic VQA</a><br>(train+val)

</td>

<td>ANLS (Test server)</td>

<td>28.46</td>

<td>40.47</td>

<td>47.75</td>

</tr>

<tr>

<td>

<a href="https://arxiv.org/abs/1905.13648">SceneText VQA</a><br>(train+val)

</td>

<td>ANLS (Test server)</td>

<td>63.29</td>

<td>81.82</td>

<td>84.40</td>

</tr>

<tr>

<th>Segmentation</th>

</tr>

<tr>

<td>

<a href="https://arxiv.org/abs/1608.00272">RefCOCO</a><br>(combined refcoco, refcoco+,<br>refcocog excluding val<br>and test images)

</td>

<td>MIoU<br>(validation)<br>refcoco/refcoco+/<br>refcocog</td>

<td>

73.40<br>

68.32<br>

67.65

</td>

<td>

75.57<br>

69.76<br>

70.17

</td>

<td>

76.94<br>

72.18<br>

72.22

</td>

</tr>

<tr>

<th>Video tasks (Caption/QA)</th>

</tr>

<tr>

<td>MSR-VTT (Captioning)</td>

<td>CIDEr (test)</td>

<td>70.54</td>

</tr>

<tr>

<td>MSR-VTT (QA)</td>

<td>Accuracy (test)</td>

<td>50.09</td>

</tr>

<tr>

<td>ActivityNet (Captioning)</td>

<td>CIDEr (test)</td>

<td>34.62</td>

</tr>

<tr>

<td>ActivityNet (QA)</td>

<td>Accuracy (test)</td>

<td>50.78</td>

</tr>

<tr>

<td>VATEX (Captioning)</td>

<td>CIDEr (test)</td>

<td>79.73</td>

</tr>

<tr>

<td>MSVD (QA)</td>

<td>Accuracy (test)</td>

<td>60.22</td>

</tr>

</tbody></table>

#### Mix model (fine-tune on mixture of transfer tasks)

<table>

<tbody><tr>

<th>Benchmark</th>

<th>Metric (split)</th>

<th>mix-224</th>

<th>mix-448</th>

</tr>

<tr>

<td><a href="https://arxiv.org/abs/2401.06209">MMVP</a></td>

<td>Paired Accuracy</td>

<td>46.00</td>

<td>45.33</td>

</tr>

<tr>

<td><a href="https://arxiv.org/abs/2305.10355">POPE</a></td>

<td>Accuracy<br>(random/popular/adversarial)</td>

<td>

88.00<br>

86.63<br>

85.67

</td>

<td>

89.37<br>

88.40<br>

87.47

</td>

</tr>

</tbody></table>

## Ethics and safety

### Evaluation approach

Our evaluation methods include structured evaluations and internal red-teaming testing of relevant content policies. Red-teaming was conducted by a number of different teams, each with different goals and human evaluation metrics. These models were evaluated against a number of different categories relevant to ethics and safety, including:

* Human evaluation on prompts covering child safety, content safety and representational harms. See the [Gemma model card](https://ai.google.dev/gemma/docs/model_card#evaluation_approach) for more details on the evaluation approach, which was applied here with image captioning and visual question answering setups.
* Image-to-Text benchmark evaluation: Benchmark against relevant academic datasets such as FairFace Dataset ([Karkkainen et al., 2021](https://arxiv.org/abs/1908.04913)).

### Evaluation results

* The human evaluation results of ethics and safety evaluations are within acceptable thresholds for meeting [internal policies](https://storage.googleapis.com/gweb-uniblog-publish-prod/documents/2023_Google_AI_Principles_Progress_Update.pdf#page=11) for categories such as child safety, content safety and representational harms.
* On top of robust internal evaluations, we also use the Perspective API (threshold of 0.8) to measure toxicity, profanity, and other potential issues in the generated captions for images sourced from the FairFace dataset. We report the maximum and median values observed across subgroups for each of the perceived gender, ethnicity, and age attributes.
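The aggregation described above can be sketched in two steps:

1. For each subgroup of an attribute, compute the percentage of captions whose Perspective API score for a given category meets the 0.8 threshold.
2. Take the maximum and median of those percentages across subgroups.

This is an illustrative reading of the description, not Google's evaluation code. Whether the threshold is inclusive is an assumption. The subgroup names and scores below are toy values, not real results.

```python
from statistics import median

PERSPECTIVE_THRESHOLD = 0.8  # assumption: a score at or above this counts as flagged


def flagged_percent(scores: list[float], threshold: float = PERSPECTIVE_THRESHOLD) -> float:
    """Percentage of captions in one subgroup whose category score meets the threshold."""
    if not scores:
        raise ValueError("subgroup has no scored captions")
    return 100.0 * sum(score >= threshold for score in scores) / len(scores)


def max_and_median(scores_by_subgroup: dict[str, list[float]]) -> tuple[float, float]:
    """Maximum and median flagged percentage across the subgroups of one attribute."""
    rates = [flagged_percent(scores) for scores in scores_by_subgroup.values()]
    return max(rates), median(rates)


# Toy scores for three hypothetical subgroups of one attribute (not real FairFace data).
toy = {
    "group_a": [0.10, 0.90, 0.20, 0.30],
    "group_b": [0.10, 0.10, 0.10, 0.10],
    "group_c": [0.85, 0.90, 0.10, 0.20],
}
print(max_and_median(toy))  # (50.0, 25.0)
```

Reporting both statistics is informative: the maximum exposes the worst-affected subgroup, while the median shows the typical rate.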

<table>

<tbody><tr>
<th>Metric</th>
<th colspan="2">Perceived<br>gender</th>
<th colspan="2">Ethnicity</th>
<th colspan="2">Age group</th>
</tr>

<tr>

<th></th>

<th>Maximum</th>

<th>Median</th>

<th>Maximum</th>

<th>Median</th>

<th>Maximum</th>

<th>Median</th>

</tr>

<tr>

<td>Toxicity</td>

<td>0.04%</td>

<td>0.03%</td>

<td>0.08%</td>

<td>0.00%</td>

<td>0.09%</td>

<td>0.00%</td>

</tr>

<tr>

<td>Identity Attack</td>

<td>0.00%</td>

<td>0.00%</td>

<td>0.00%</td>

<td>0.00%</td>

<td>0.00%</td>

<td>0.00%</td>

</tr>

<tr>

<td>Insult</td>

<td>0.06%</td>

<td>0.04%</td>

<td>0.09%</td>

<td>0.07%</td>

<td>0.16%</td>

<td>0.00%</td>

</tr>

<tr>

<td>Threat</td>

<td>0.06%</td>

<td>0.05%</td>

<td>0.14%</td>

<td>0.05%</td>

<td>0.17%</td>

<td>0.00%</td>

</tr>

<tr>

<td>Profanity</td>

<td>0.00%</td>

<td>0.00%</td>

<td>0.00%</td>

<td>0.00%</td>

<td>0.00%</td>

<td>0.00%</td>

</tr>

</tbody></table>

## Usage and limitations

### Intended usage

Open Vision Language Models (VLMs) have a wide range of applications across various industries and domains. The following list of potential uses is not comprehensive. The purpose of this list is to provide contextual information about the possible use cases that the model creators considered as part of model training and development.

Fine-tune on specific vision-language task:

* The pre-trained models can be fine-tuned on a wide range of vision-language tasks such as image captioning, short video captioning, visual question answering, text reading, object detection and object segmentation.
* The pre-trained models can be fine-tuned for specific domains such as remote sensing question answering, visual questions from people who are blind, science question answering, and describing UI element functionality.
* The pre-trained models can be fine-tuned for tasks with non-textual outputs such as bounding boxes or segmentation masks.

Vision-language research:

* The pre-trained models and fine-tuned models can serve as a foundation for researchers to experiment with VLM techniques, develop algorithms, and contribute to the advancement of the field.

### Ethical considerations and risks

The development of vision-language models (VLMs) raises several ethical concerns. In creating an open model, we have carefully considered the following:

* Bias and Fairness
    * VLMs trained on large-scale, real-world image-text data can reflect socio-cultural biases embedded in the training material. These models underwent careful scrutiny, with the input data pre-processing described and the posterior evaluations reported in this card.
* Misinformation and Misuse
    * VLMs can be misused to generate text that is false, misleading, or harmful.
    * Guidelines are provided for responsible use with the model; see the [Responsible Generative AI Toolkit](https://ai.google.dev/responsible).
* Transparency and Accountability
    * This model card summarizes details on the models' architecture, capabilities, limitations, and evaluation processes.
    * A responsibly developed open model offers the opportunity to share innovation by making VLM technology accessible to developers and researchers across the AI ecosystem.

Risks identified and mitigations:

* **Perpetuation of biases:** Continuous monitoring (using evaluation metrics and human review) and the exploration of de-biasing techniques are encouraged during model training, fine-tuning, and other use cases.
* **Generation of harmful content:** Mechanisms and guidelines for content safety are essential. Developers are encouraged to exercise caution and implement appropriate content safety safeguards based on their specific product policies and application use cases.
* **Misuse for malicious purposes:** Technical limitations and developer and end-user education can help mitigate against malicious applications of LLMs. Educational resources and reporting mechanisms for users to flag misuse are provided. Prohibited uses of Gemma models are outlined in the [Gemma Prohibited Use Policy](https://ai.google.dev/gemma/prohibited_use_policy).
* **Privacy violations:** Models were trained on data filtered to remove certain personal information and sensitive data. Developers are encouraged to adhere to privacy regulations with privacy-preserving techniques.

### Limitations

* Most limitations inherited from the underlying Gemma model still apply:
    * VLMs are better at tasks that can be framed with clear prompts and instructions. Open-ended or highly complex tasks might be challenging.
    * Natural language is inherently complex. VLMs might struggle to grasp subtle nuances, sarcasm, or figurative language.
    * VLMs generate responses based on information they learned from their training datasets, but they are not knowledge bases. They may generate incorrect or outdated factual statements.
    * VLMs rely on statistical patterns in language and images. They might lack the ability to apply common sense reasoning in certain situations.
* PaliGemma was designed first and foremost to serve as a general pre-trained model for transfer to specialized tasks. Hence, its "out of the box" or "zero-shot" performance might lag behind models designed specifically for that.
* PaliGemma is not a multi-turn chatbot. It is designed for a single round of image and text input.

## Citation

```bibtex

@article{beyer2024paligemma,

title={{PaliGemma: A versatile 3B VLM for transfer}},

author={Lucas Beyer* and Andreas Steiner* and André Susano Pinto* and Alexander Kolesnikov* and Xiao Wang* and Daniel Salz and Maxim Neumann and Ibrahim Alabdulmohsin and Michael Tschannen and Emanuele Bugliarello and Thomas Unterthiner and Daniel Keysers and Skanda Koppula and Fangyu Liu and Adam Grycner and Alexey Gritsenko and Neil Houlsby and Manoj Kumar and Keran Rong and Julian Eisenschlos and Rishabh Kabra and Matthias Bauer and Matko Bošnjak and Xi Chen and Matthias Minderer and Paul Voigtlaender and Ioana Bica and Ivana Balazevic and Joan Puigcerver and Pinelopi Papalampidi and Olivier Henaff and Xi Xiong and Radu Soricut and Jeremiah Harmsen and Xiaohua Zhai*},

year={2024},

journal={arXiv preprint arXiv:2407.07726}

}

```

Find the paper [here](https://arxiv.org/abs/2407.07726).

|

stabilityai/stable-diffusion-xl-refiner-1.0 | stabilityai | "2023-09-25T13:42:56Z" | 390,857 | 1,712 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"image-to-image",

"arxiv:2307.01952",

"arxiv:2211.01324",

"arxiv:2108.01073",

"arxiv:2112.10752",

"license:openrail++",

"diffusers:StableDiffusionXLImg2ImgPipeline",

"region:us"

] | image-to-image | "2023-07-26T07:38:01Z" | ---

license: openrail++

tags:

- stable-diffusion

- image-to-image

---

# SD-XL 1.0-refiner Model Card

## Model

[SDXL](https://arxiv.org/abs/2307.01952) consists of an [ensemble of experts](https://arxiv.org/abs/2211.01324) pipeline for latent diffusion:
In a first step, the base model (available here: https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0) is used to generate (noisy) latents, which are then further processed with a refinement model specialized for the final denoising steps.
Note that the base model can be used as a standalone module.

Alternatively, we can use a two-stage pipeline as follows:
First, the base model is used to generate latents of the desired output size.
In the second step, we use a specialized high-resolution model and apply a technique called SDEdit (https://arxiv.org/abs/2108.01073, also known as "img2img") to the latents generated in the first step, using the same prompt. This technique is slightly slower than the first one, as it requires more function evaluations.

Source code is available at https://github.com/Stability-AI/generative-models .
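To make the cost difference between the two setups concrete, here is a rough count of denoising steps (UNet evaluations). This is a simplified sketch, not diffusers code: it assumes the ensemble-of-experts setup splits a single schedule of `num_steps` steps between base and refiner at a hypothetical fraction `high_noise_frac`, and that the SDEdit setup runs the full base schedule followed by `int(num_steps * strength)` refiner steps, which, as far as we know, is how diffusers img2img pipelines derive the step count from `strength`. It ignores classifier-free guidance and VAE decoding.

```python
def ensemble_of_experts_steps(num_steps: int, high_noise_frac: float) -> int:
    """Denoising steps when the base model stops early and the refiner finishes the schedule."""
    base_steps = int(num_steps * high_noise_frac)  # high-noise steps handled by the base model
    refiner_steps = num_steps - base_steps         # remaining low-noise steps handled by the refiner
    return base_steps + refiner_steps


def sdedit_steps(num_steps: int, strength: float) -> int:
    """Denoising steps when the base model runs its full schedule and the refiner applies img2img."""
    base_steps = num_steps
    # img2img only re-noises the input partway, so it runs a fraction of its schedule
    refiner_steps = min(int(num_steps * strength), num_steps)
    return base_steps + refiner_steps


# Hypothetical settings chosen for illustration
print(ensemble_of_experts_steps(40, high_noise_frac=0.75))  # 40
print(sdedit_steps(40, strength=0.25))                        # 50
```

In the ensemble setup the total never exceeds one schedule, while SDEdit always adds refiner steps on top of a complete base run.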

### Model Description

- **Developed by:** Stability AI

- **Model type:** Diffusion-based text-to-image generative model

- **License:** [CreativeML Open RAIL++-M License](https://huggingface.co/stabilityai/stable-diffusion-xl-refiner-1.0/blob/main/LICENSE.md)

- **Model Description:** This is a model that can be used to generate and modify images based on text prompts. It is a [Latent Diffusion Model](https://arxiv.org/abs/2112.10752) that uses two fixed, pretrained text encoders ([OpenCLIP-ViT/G](https://github.com/mlfoundations/open_clip) and [CLIP-ViT/L](https://github.com/openai/CLIP/tree/main)).

- **Resources for more information:** Check out our [GitHub Repository](https://github.com/Stability-AI/generative-models) and the [SDXL report on arXiv](https://arxiv.org/abs/2307.01952).

### Model Sources

For research purposes, we recommend our `generative-models` GitHub repository (https://github.com/Stability-AI/generative-models), which implements the most popular diffusion frameworks (both training and inference) and to which new functionality, such as distillation, will be added over time.

[Clipdrop](https://clipdrop.co/stable-diffusion) provides free SDXL inference.

- **Repository:** https://github.com/Stability-AI/generative-models

- **Demo:** https://clipdrop.co/stable-diffusion

## Evaluation

The chart above evaluates user preference for SDXL (with and without refinement) over SDXL 0.9 and Stable Diffusion 1.5 and 2.1.

The SDXL base model performs significantly better than the previous variants, and the model combined with the refinement module achieves the best overall performance.

### 🧨 Diffusers

Make sure to upgrade diffusers to >= 0.18.0:

```

pip install diffusers --upgrade

```

In addition, make sure to install `transformers`, `safetensors`, and `accelerate`, as well as the invisible watermark:

```

pip install invisible_watermark transformers accelerate safetensors

```

You can then use the refiner to improve images.

```py

import torch

from diffusers import StableDiffusionXLImg2ImgPipeline

from diffusers.utils import load_image

pipe = StableDiffusionXLImg2ImgPipeline.from_pretrained(

"stabilityai/stable-diffusion-xl-refiner-1.0", torch_dtype=torch.float16, variant="fp16", use_safetensors=True

)

pipe = pipe.to("cuda")

url = "https://huggingface.co/datasets/patrickvonplaten/images/resolve/main/aa_xl/000000009.png"

init_image = load_image(url).convert("RGB")

prompt = "a photo of an astronaut riding a horse on mars"

image = pipe(prompt, image=init_image).images[0]  # the pipeline returns a list of PIL images

```

When using `torch >= 2.0`, you can improve the inference speed by 20-30% with `torch.compile`. Simply wrap the UNet with `torch.compile` before running the pipeline:

```py

pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)

```

If you are limited by GPU VRAM, you can enable *CPU offloading* by calling `pipe.enable_model_cpu_offload()` instead of `.to("cuda")`:

```diff

- pipe.to("cuda")

+ pipe.enable_model_cpu_offload()

```

For more advanced use cases, please have a look at [the docs](https://huggingface.co/docs/diffusers/main/en/api/pipelines/stable_diffusion/stable_diffusion_xl).

## Uses

### Direct Use

The model is intended for research purposes only. Possible research areas and tasks include:

- Generation of artworks and use in design and other artistic processes.

- Applications in educational or creative tools.

- Research on generative models.

- Safe deployment of models which have the potential to generate harmful content.

- Probing and understanding the limitations and biases of generative models.

Excluded uses are described below.

### Out-of-Scope Use

The model was not trained to produce factual or true representations of people or events, so using it to generate such content is out of scope for its abilities.

## Limitations and Bias

### Limitations

- The model does not achieve perfect photorealism

- The model cannot render legible text

- The model struggles with more difficult tasks which involve compositionality, such as rendering an image corresponding to “A red cube on top of a blue sphere”

- Faces and people in general may not be generated properly.

- The autoencoding part of the model is lossy.

### Bias

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases. |

RunDiffusion/Juggernaut-XL-v6 | RunDiffusion | "2024-03-11T20:08:41Z" | 389,938 | 3 | diffusers | [

"diffusers",

"art",

"people",

"diffusion",

"Cinematic",

"Photography",

"Landscape",

"Interior",

"Food",

"Car",

"Wildlife",

"Architecture",

"en",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"base_model:finetune:stabilityai/stable-diffusion-xl-base-1.0",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionXLPipeline",

"region:us"

] | text-to-image | "2024-02-22T00:14:34Z" | ---

language:

- en

license: creativeml-openrail-m

library_name: diffusers

tags:

- art

- people

- diffusion

- Cinematic

- Photography

- Landscape

- Interior

- Food

- Car

- Wildlife

- Architecture

thumbnail: https://imagedelivery.net/siANnpeNAc_S2q1M3-eDrA/a38aa9e8-e3cf-4d43-afbd-fd1de0896500/padthumb

base_model: stabilityai/stable-diffusion-xl-base-1.0

---

# Juggernaut XL v6 + RunDiffusion Photo v1 Official

## Juggernaut v9 is here! [Juggernaut v9 + RunDiffusion Photo v2](https://huggingface.co/RunDiffusion/Juggernaut-XL-v9)

This model is not permitted to be used behind API services. Please contact [juggernaut@rundiffusion.com](mailto:juggernaut@rundiffusion.com) for business inquiries, commercial licensing, custom models, and consultation.

Juggernaut is available on the new Auto1111 Forge on [RunDiffusion](http://rundiffusion.com/?utm_source=huggingface&utm_medium=referral&utm_campaign=Kandoo)

A big thanks for Version 6 goes to [RunDiffusion](http://rundiffusion.com/?utm_source=huggingface&utm_medium=referral&utm_campaign=Kandoo) ([Photo Model](https://rundiffusion.com/rundiffusion-photo/?utm_source=huggingface&utm_medium=referral&utm_campaign=Kandoo)) and [Adam](https://twitter.com/Colorblind_Adam), who diligently helped me test :) (Leave some love for them ;) )

For business inquiries, commercial licensing, custom models, and consultation, contact me at juggernaut@rundiffusion.com

|

lmsys/vicuna-7b-v1.5 | lmsys | "2024-03-13T02:01:41Z" | 389,100 | 305 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"arxiv:2307.09288",

"arxiv:2306.05685",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"region:us"

] | text-generation | "2023-07-29T04:42:33Z" | ---

inference: false

license: llama2

---

# Vicuna Model Card

## Model Details

Vicuna is a chat assistant trained by fine-tuning Llama 2 on user-shared conversations collected from ShareGPT.

- **Developed by:** [LMSYS](https://lmsys.org/)

- **Model type:** An auto-regressive language model based on the transformer architecture

- **License:** Llama 2 Community License Agreement

- **Finetuned from model:** [Llama 2](https://arxiv.org/abs/2307.09288)

### Model Sources

- **Repository:** https://github.com/lm-sys/FastChat

- **Blog:** https://lmsys.org/blog/2023-03-30-vicuna/

- **Paper:** https://arxiv.org/abs/2306.05685

- **Demo:** https://chat.lmsys.org/

## Uses

The primary use of Vicuna is research on large language models and chatbots.

The primary intended users of the model are researchers and hobbyists in natural language processing, machine learning, and artificial intelligence.

## How to Get Started with the Model

- Command line interface: https://github.com/lm-sys/FastChat#vicuna-weights

- APIs (OpenAI API, Huggingface API): https://github.com/lm-sys/FastChat/tree/main#api
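If you load the weights directly with 🤗 Transformers instead of FastChat, the prompt should follow the conversation template Vicuna was trained with. The sketch below is an assumption based on FastChat's `vicuna_v1.1` template (a system prompt, then `USER:`/`ASSISTANT:` turns, with `</s>` closing each finished assistant reply); `build_vicuna_prompt` is a hypothetical helper, so check FastChat's `conversation.py` before relying on the exact string.

```python
# Assumed system prompt from FastChat's "vicuna_v1.1" template (verify against FastChat).
SYSTEM_PROMPT = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's questions."
)


def build_vicuna_prompt(history, user_message, system=SYSTEM_PROMPT):
    """Build a single-string prompt.

    history: list of (user_turn, assistant_turn) pairs from earlier rounds.
    user_message: the new user turn; the prompt ends with "ASSISTANT:" so the
    model continues with its reply.
    """
    prompt = system + " "
    for user_turn, assistant_turn in history:
        # Completed assistant turns end with the EOS marker and no trailing space.
        prompt += f"USER: {user_turn} ASSISTANT: {assistant_turn}</s>"
    prompt += f"USER: {user_message} ASSISTANT:"
    return prompt


print(build_vicuna_prompt([("Hi!", "Hello! How can I help?")], "What is Vicuna?"))
```

The resulting string can then be tokenized and passed to `generate()` on a model loaded with `AutoModelForCausalLM`.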

## Training Details

Vicuna v1.5 is fine-tuned from Llama 2 with supervised instruction fine-tuning.

The training data is around 125K conversations collected from ShareGPT.com.

See more details in the "Training Details of Vicuna Models" section in the appendix of this [paper](https://arxiv.org/pdf/2306.05685.pdf).

## Evaluation

Vicuna is evaluated with standard benchmarks, human preference, and LLM-as-a-judge. See more details in this [paper](https://arxiv.org/pdf/2306.05685.pdf) and [leaderboard](https://huggingface.co/spaces/lmsys/chatbot-arena-leaderboard).

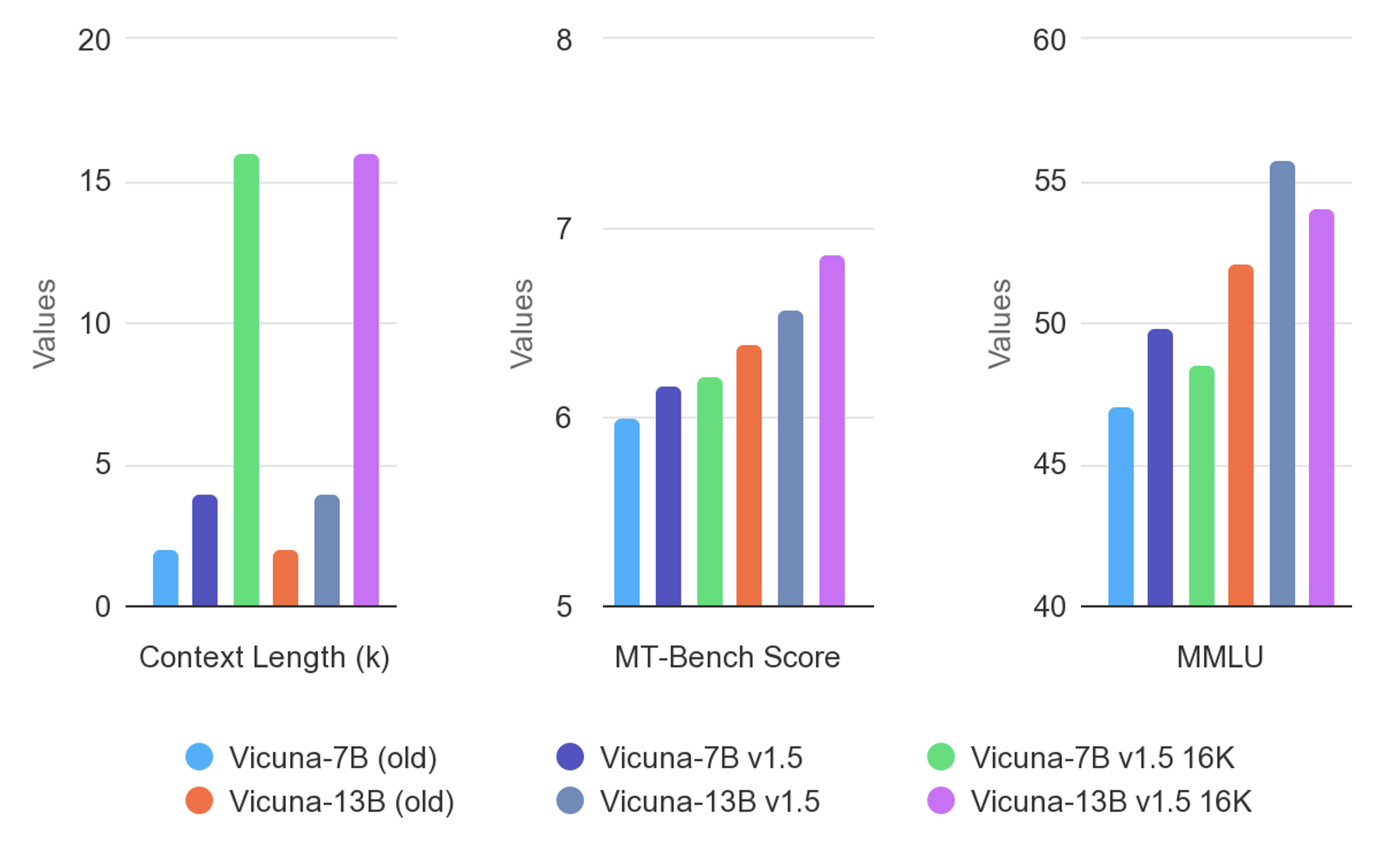

## Differences between Vicuna versions

See [vicuna_weights_version.md](https://github.com/lm-sys/FastChat/blob/main/docs/vicuna_weights_version.md) |

philschmid/bart-large-cnn-samsum | philschmid | "2022-12-23T19:48:57Z" | 388,954 | 250 | transformers | [

"transformers",

"pytorch",

"bart",

"text2text-generation",

"sagemaker",

"summarization",

"en",

"dataset:samsum",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | summarization | "2022-03-02T23:29:05Z" | ---

language: en

license: mit

tags:

- sagemaker

- bart

- summarization

datasets:

- samsum

widget:

- text: "Jeff: Can I train a \U0001F917 Transformers model on Amazon SageMaker? \n\

Philipp: Sure you can use the new Hugging Face Deep Learning Container. \nJeff:\

\ ok.\nJeff: and how can I get started? \nJeff: where can I find documentation?\

\ \nPhilipp: ok, ok you can find everything here. https://huggingface.co/blog/the-partnership-amazon-sagemaker-and-hugging-face\n"

model-index:

- name: bart-large-cnn-samsum

results:

- task:

type: summarization

name: Summarization

dataset:

name: 'SAMSum Corpus: A Human-annotated Dialogue Dataset for Abstractive Summarization'

type: samsum

metrics:

- type: rogue-1

value: 42.621

name: Validation ROGUE-1

- type: rogue-2

value: 21.9825

name: Validation ROGUE-2

- type: rogue-l

value: 33.034

name: Validation ROGUE-L

- type: rogue-1

value: 41.3174

name: Test ROGUE-1

- type: rogue-2

value: 20.8716

name: Test ROGUE-2

- type: rogue-l

value: 32.1337

name: Test ROGUE-L

- task:

type: summarization

name: Summarization

dataset:

name: samsum

type: samsum

config: samsum

split: test

metrics:

- type: rouge

value: 41.3282

name: ROUGE-1

verified: true

verifyToken: eyJhbGciOiJFZERTQSIsInR5cCI6IkpXVCJ9.eyJoYXNoIjoiZTYzNzZkZDUzOWQzNGYxYTJhNGE4YWYyZjA0NzMyOWUzMDNhMmVhYzY1YTM0ZTJhYjliNGE4MDZhMjhhYjRkYSIsInZlcnNpb24iOjF9.OOM6l3v5rJCndmUIJV-2SDh2NjbPo5IgQOSL-Ju1Gwbi1voL5amsDEDOelaqlUBE3n55KkUsMLZhyn66yWxZBQ

- type: rouge

value: 20.8755

name: ROUGE-2

verified: true

verifyToken: eyJhbGciOiJFZERTQSIsInR5cCI6IkpXVCJ9.eyJoYXNoIjoiMWZiODFiYWQzY2NmOTc5YjA3NTI0YzQ1MzQ0ODk2NjgyMmVlMjA5MjZiNTJkMGRmZGEzN2M3MDNkMjkxMDVhYSIsInZlcnNpb24iOjF9.b8cPk2-IL24La3Vd0hhtii4tRXujh5urAwy6IVeTWHwYfXaURyC2CcQOWtlOx5bdO5KACeaJFrFBCGgjk-VGCQ

- type: rouge

value: 32.1353

name: ROUGE-L

verified: true

verifyToken: eyJhbGciOiJFZERTQSIsInR5cCI6IkpXVCJ9.eyJoYXNoIjoiYWNmYzdiYWQ2ZWRkYzRiMGMxNWUwODgwZTdkY2NjZTc1NWE5NTFiMzU0OTU1N2JjN2ExYWQ2NGZkNjk5OTc4YSIsInZlcnNpb24iOjF9.Fzv4p-TEVicljiCqsBJHK1GsnE_AwGqamVmxTPI0WBNSIhZEhliRGmIL_z1pDq6WOzv3GN2YUGvhowU7GxnyAQ

- type: rouge

value: 38.401

name: ROUGE-LSUM

verified: true

verifyToken: eyJhbGciOiJFZERTQSIsInR5cCI6IkpXVCJ9.eyJoYXNoIjoiNGI4MWY0NWMxMmQ0ODQ5MDhiNDczMDAzYzJkODBiMzgzYWNkMWM2YTZkZDJmNWJiOGQ3MmNjMGViN2UzYWI2ZSIsInZlcnNpb24iOjF9.7lw3h5k5lJ7tYFLZGUtLyDabFYd00l6ByhmvkW4fykocBy9Blyin4tdw4Xps4DW-pmrdMLgidHxBWz5MrSx1Bw

- type: loss

value: 1.4297215938568115

name: loss

verified: true

verifyToken: eyJhbGciOiJFZERTQSIsInR5cCI6IkpXVCJ9.eyJoYXNoIjoiMzI0ZWNhNDM5YTViZDMyZGJjMDA1ZWFjYzNhOTdlOTFiNzhhMDBjNmM2MjA3ZmRkZjJjMjEyMGY3MzcwOTI2NyIsInZlcnNpb24iOjF9.oNaZsAtUDqGAqoZWJavlcW7PKx1AWsnkbhaQxadpOKk_u7ywJJabvTtzyx_DwEgZslgDETCf4MM-JKitZKjiDA

- type: gen_len

value: 60.0757

name: gen_len

verified: true

verifyToken: eyJhbGciOiJFZERTQSIsInR5cCI6IkpXVCJ9.eyJoYXNoIjoiYTgwYWYwMDRkNTJkMDM5N2I2MWNmYzQ3OWM1NDJmODUyZGViMGE4ZTdkNmIwYWM2N2VjZDNmN2RiMDE4YTYyYiIsInZlcnNpb24iOjF9.PbXTcNYX_SW-BuRQEcqyc21M7uKrOMbffQSAK6k2GLzTVRrzZxsDC57ktKL68zRY8fSiRGsnknOwv-nAR6YBCQ

---

## `bart-large-cnn-samsum`

> If you want to use this model, try the newer fine-tuned FLAN-T5 version [philschmid/flan-t5-base-samsum](https://huggingface.co/philschmid/flan-t5-base-samsum) instead: it outscores the BART version by about `+6` `ROUGE-1`, achieving `47.24`.

# TRY [philschmid/flan-t5-base-samsum](https://huggingface.co/philschmid/flan-t5-base-samsum)

This model was trained using Amazon SageMaker and the new Hugging Face Deep Learning container.

For more information, see:

- [🤗 Transformers Documentation: Amazon SageMaker](https://huggingface.co/transformers/sagemaker.html)

- [Example Notebooks](https://github.com/huggingface/notebooks/tree/master/sagemaker)

- [Amazon SageMaker documentation for Hugging Face](https://docs.aws.amazon.com/sagemaker/latest/dg/hugging-face.html)

- [Python SDK SageMaker documentation for Hugging Face](https://sagemaker.readthedocs.io/en/stable/frameworks/huggingface/index.html)

- [Deep Learning Container](https://github.com/aws/deep-learning-containers/blob/master/available_images.md#huggingface-training-containers)

## Hyperparameters

```json

{

"dataset_name": "samsum",

"do_eval": true,

"do_predict": true,

"do_train": true,

"fp16": true,

"learning_rate": 5e-05,

"model_name_or_path": "facebook/bart-large-cnn",

"num_train_epochs": 3,

"output_dir": "/opt/ml/model",

"per_device_eval_batch_size": 4,

"per_device_train_batch_size": 4,

"predict_with_generate": true,

"seed": 7

}

```

## Usage

```python

from transformers import pipeline

summarizer = pipeline("summarization", model="philschmid/bart-large-cnn-samsum")

conversation = '''Jeff: Can I train a 🤗 Transformers model on Amazon SageMaker?

Philipp: Sure you can use the new Hugging Face Deep Learning Container.

Jeff: ok.

Jeff: and how can I get started?

Jeff: where can I find documentation?

Philipp: ok, ok you can find everything here. https://huggingface.co/blog/the-partnership-amazon-sagemaker-and-hugging-face

'''

summarizer(conversation)

```
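The model expects a dialogue in the SAMSum style shown above: one turn per line, formatted as `Speaker: utterance`. If your conversation is stored as structured data, a small helper can flatten it first. `format_dialogue` is a hypothetical name; this is a sketch, not part of the model or of 🤗 Transformers.

```python
def format_dialogue(turns):
    """Flatten (speaker, utterance) pairs into SAMSum-style text, one turn per line."""
    lines = []
    for speaker, utterance in turns:
        # Collapse newlines and repeated whitespace so each turn stays on one line.
        text = " ".join(utterance.split())
        lines.append(f"{speaker}: {text}")
    return "\n".join(lines)


turns = [
    ("Jeff", "Can I train a 🤗 Transformers model on Amazon SageMaker?"),
    ("Philipp", "Sure you can use the new Hugging Face Deep Learning Container."),
    ("Jeff", "ok."),
]
conversation = format_dialogue(turns)
print(conversation)
# The string can then be passed to the summarizer, e.g. summarizer(conversation)
```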

## Results

| key | value |
| --- | ----- |
| eval_rouge1 | 42.621 |
| eval_rouge2 | 21.9825 |
| eval_rougeL | 33.034 |
| eval_rougeLsum | 39.6783 |
| test_rouge1 | 41.3174 |
| test_rouge2 | 20.8716 |
| test_rougeL | 32.1337 |
| test_rougeLsum | 38.4149 |

|

mistralai/Mistral-7B-v0.1 | mistralai | "2024-07-24T14:04:08Z" | 388,796 | 3,439 | transformers | [

"transformers",

"pytorch",

"safetensors",

"mistral",

"text-generation",

"pretrained",

"en",

"arxiv:2310.06825",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | "2023-09-20T13:03:50Z" | ---

language:

- en

license: apache-2.0

tags:

- pretrained

pipeline_tag: text-generation

inference:

parameters:

temperature: 0.7

extra_gated_description: If you want to learn more about how we process your personal data, please read our <a href="https://mistral.ai/terms/">Privacy Policy</a>.

---

# Model Card for Mistral-7B-v0.1

The Mistral-7B-v0.1 Large Language Model (LLM) is a pretrained generative text model with 7 billion parameters.

Mistral-7B-v0.1 outperforms Llama 2 13B on all benchmarks we tested.

For full details of this model please read our [paper](https://arxiv.org/abs/2310.06825) and [release blog post](https://mistral.ai/news/announcing-mistral-7b/).

## Model Architecture

Mistral-7B-v0.1 is a transformer model, with the following architecture choices:

- Grouped-Query Attention

- Sliding-Window Attention

- Byte-fallback BPE tokenizer
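The two attention choices can be illustrated in a few lines. This is a toy sketch with hypothetical small sizes, not Mistral's implementation: with sliding-window attention, token `i` attends only to tokens `j` with `i - window < j <= i`; with grouped-query attention, several query heads share one key/value head. The released config uses 32 query heads and 8 key/value heads, and the paper reports a window of 4096 tokens, but verify these against the model's `config.json`.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j."""
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]  # causal and within the window
        for i in range(seq_len)
    ]


def kv_head_for_query_head(query_head: int, num_query_heads: int, num_kv_heads: int) -> int:
    """Grouped-query attention: consecutive query heads share one key/value head."""
    group_size = num_query_heads // num_kv_heads
    return query_head // group_size


# Toy example: 5 tokens, window of 3
for row in sliding_window_mask(5, 3):
    print("".join("x" if allowed else "." for allowed in row))
# x....
# xx...
# xxx..
# .xxx.
# ..xxx

# With 32 query heads and 8 KV heads, each KV head serves 4 query heads
print([kv_head_for_query_head(q, 32, 8) for q in range(8)])  # [0, 0, 0, 0, 1, 1, 1, 1]
```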

## Troubleshooting

- If you see the following error:

```

KeyError: 'mistral'

```

- Or:

```

NotImplementedError: Cannot copy out of meta tensor; no data!

```

Ensure you are utilizing a stable version of Transformers, 4.34.0 or newer.
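A quick way to catch this before loading the model is to compare the installed version against 4.34.0. The sketch below uses only the standard library and compares the leading numeric components; `meets_minimum` is a hypothetical helper, and the `packaging` library's version parsing is a more robust choice if it is available.

```python
import re


def meets_minimum(version: str, minimum: str = "4.34.0") -> bool:
    """Return True if `version` is at least `minimum`, comparing major.minor.patch numerically."""

    def numeric_parts(v: str) -> tuple[int, ...]:
        # Keep only the leading "X.Y.Z" digits, e.g. "4.40.0.dev0" -> (4, 40, 0);
        # pre-release suffixes are ignored in this simplified check.
        match = re.match(r"(\d+(?:\.\d+)*)", v)
        parts = [int(p) for p in match.group(1).split(".")] if match else [0]
        return tuple((parts + [0, 0, 0])[:3])

    return numeric_parts(version) >= numeric_parts(minimum)


# Usage (assuming transformers is installed):
# import transformers
# assert meets_minimum(transformers.__version__), "Upgrade: pip install -U 'transformers>=4.34.0'"
print(meets_minimum("4.33.2"))       # False
print(meets_minimum("4.34.0"))       # True
print(meets_minimum("4.40.0.dev0"))  # True
```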

## Notice

Mistral 7B is a pretrained base model and therefore does not have any moderation mechanisms.

## The Mistral AI Team

Albert Jiang, Alexandre Sablayrolles, Arthur Mensch, Chris Bamford, Devendra Singh Chaplot, Diego de las Casas, Florian Bressand, Gianna Lengyel, Guillaume Lample, Lélio Renard Lavaud, Lucile Saulnier, Marie-Anne Lachaux, Pierre Stock, Teven Le Scao, Thibaut Lavril, Thomas Wang, Timothée Lacroix, William El Sayed. |

vikp/surya_layout3 | vikp | "2024-07-12T15:32:03Z" | 384,125 | 1 | transformers | [

"transformers",

"safetensors",

"efficientvit",

"license:cc-by-nc-sa-4.0",

"endpoints_compatible",

"region:us"

] | null | "2024-07-09T18:30:07Z" | ---

library_name: transformers

license: cc-by-nc-sa-4.0

---

Layout model for [surya](https://www.github.com/VikParuchuri/surya). |

timm/efficientnet_b0.ra_in1k | timm | "2023-04-27T21:09:50Z" | 383,198 | 3 | timm | [

"timm",

"pytorch",

"safetensors",

"image-classification",

"dataset:imagenet-1k",

"arxiv:2110.00476",

"arxiv:1905.11946",

"license:apache-2.0",

"region:us"

] | image-classification | "2022-12-12T23:52:52Z" | ---

tags:

- image-classification

- timm

library_name: timm

license: apache-2.0

datasets:

- imagenet-1k

---

# Model card for efficientnet_b0.ra_in1k

An EfficientNet image classification model. Trained on ImageNet-1k in `timm` using the recipe template described below.

Recipe details:

* RandAugment `RA` recipe. Inspired by and evolved from EfficientNet RandAugment recipes. Published as `B` recipe in [ResNet Strikes Back](https://arxiv.org/abs/2110.00476).

* RMSProp (TF 1.0 behaviour) optimizer, EMA weight averaging

* Step (exponential decay w/ staircase) LR schedule with warmup
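A step schedule with warmup can be sketched as follows. The actual hyperparameters of the `ra_in1k` recipe (base learning rate, warmup length, decay interval and rate) are not given here, so every number below is a hypothetical placeholder; timm implements this idea in its own scheduler classes, which this sketch does not reproduce.

```python
def step_lr_with_warmup(
    epoch: int,
    base_lr: float,
    warmup_epochs: int,
    warmup_lr_init: float,
    decay_epochs: int,
    decay_rate: float,
) -> float:
    """Learning rate at a given epoch: linear warmup, then staircase exponential decay."""
    if epoch < warmup_epochs:
        # Linear ramp from warmup_lr_init towards base_lr
        return warmup_lr_init + (base_lr - warmup_lr_init) * epoch / warmup_epochs
    # Staircase: multiply by decay_rate once every decay_epochs epochs
    # (counted from epoch 0, so warmup epochs also count towards the first decay)
    return base_lr * decay_rate ** (epoch // decay_epochs)


# Hypothetical settings for illustration only
schedule = [
    step_lr_with_warmup(e, base_lr=0.1, warmup_epochs=3, warmup_lr_init=1e-4,
                        decay_epochs=2, decay_rate=0.97)
    for e in range(10)
]
print([round(lr, 5) for lr in schedule])
```

The staircase shape comes from the integer division: the rate stays flat within each `decay_epochs` interval and drops by `decay_rate` at each boundary.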

## Model Details

- **Model Type:** Image classification / feature backbone
- **Model Stats:**
  - Params (M): 5.3
  - GMACs: 0.4
  - Activations (M): 6.7
  - Image size: 224 x 224
- **Papers:**
  - EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks: https://arxiv.org/abs/1905.11946
  - ResNet strikes back: An improved training procedure in timm: https://arxiv.org/abs/2110.00476
- **Dataset:** ImageNet-1k
- **Original:** https://github.com/huggingface/pytorch-image-models

## Model Usage

### Image Classification

```python

from urllib.request import urlopen

from PIL import Image

import timm
import torch  # needed for torch.topk below

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model('efficientnet_b0.ra_in1k', pretrained=True)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

top5_probabilities, top5_class_indices = torch.topk(output.softmax(dim=1) * 100, k=5)

```

### Feature Map Extraction

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'efficientnet_b0.ra_in1k',

pretrained=True,

features_only=True,

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

for o in output:
    # print shape of each feature map in output
    # e.g.:
    # torch.Size([1, 16, 112, 112])
    # torch.Size([1, 24, 56, 56])
    # torch.Size([1, 40, 28, 28])
    # torch.Size([1, 112, 14, 14])
    # torch.Size([1, 320, 7, 7])
    print(o.shape)

```
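The spatial sizes in that comment follow from feature strides of 2, 4, 8, 16 and 32, as implied by the printed shapes for a 224 x 224 input. The sketch below predicts the shapes for other input sizes, assuming each stride-2 stage rounds up (a stride-2 convolution with `kernel_size // 2` padding yields `ceil(H / 2)`). The channel counts are copied from the comment above, and `feature_shapes` is a hypothetical helper, not a timm function; in timm itself, `model.feature_info.channels()` and `model.feature_info.reduction()` report the real values.

```python
# Channel counts per feature level, copied from the printed shapes for efficientnet_b0.ra_in1k
CHANNELS = [16, 24, 40, 112, 320]


def feature_shapes(height: int, width: int, batch: int = 1, channels=CHANNELS):
    """Predict (B, C, H, W) for each feature map; each level halves the resolution once more."""
    shapes = []
    h, w = height, width
    for c in channels:
        # One extra stride-2 downsampling per level, rounding up like a padded stride-2 conv
        h, w = (h + 1) // 2, (w + 1) // 2
        shapes.append((batch, c, h, w))
    return shapes


for shape in feature_shapes(224, 224):
    print(shape)
```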

### Image Embeddings

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'efficientnet_b0.ra_in1k',

pretrained=True,

num_classes=0, # remove classifier nn.Linear

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # output is (batch_size, num_features) shaped tensor

# or equivalently (without needing to set num_classes=0)

output = model.forward_features(transforms(img).unsqueeze(0))

# output is unpooled, a (1, 1280, 7, 7) shaped tensor

output = model.forward_head(output, pre_logits=True)

# output is a (1, num_features) shaped tensor

```
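Conceptually, going from the unpooled `(1, 1280, 7, 7)` features to a `(1, 1280)` embedding is a global average over the spatial positions of each channel, which is what the default average pooling in this model's head computes (assuming the default `global_pool='avg'`). A dependency-free sketch on a tiny made-up feature map:

```python
def global_average_pool(feature_map):
    """Average each channel of a (C, H, W) nested list over its H x W positions -> list of C floats."""
    pooled = []
    for channel in feature_map:
        values = [v for row in channel for v in row]  # flatten H x W
        pooled.append(sum(values) / len(values))
    return pooled


# Toy feature map with C=2 channels and a 2 x 2 spatial grid
toy = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[0.0, 0.0], [0.0, 8.0]],
]
print(global_average_pool(toy))  # [2.5, 2.0]
```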

## Model Comparison

Explore the dataset and runtime metrics of this model in timm [model results](https://github.com/huggingface/pytorch-image-models/tree/main/results).

## Citation

```bibtex

@inproceedings{tan2019efficientnet,

title={Efficientnet: Rethinking model scaling for convolutional neural networks},

author={Tan, Mingxing and Le, Quoc},

booktitle={International conference on machine learning},

pages={6105--6114},

year={2019},

organization={PMLR}

}

```

```bibtex

@misc{rw2019timm,

author = {Ross Wightman},

title = {PyTorch Image Models},

year = {2019},

publisher = {GitHub},

journal = {GitHub repository},

doi = {10.5281/zenodo.4414861},

howpublished = {\url{https://github.com/huggingface/pytorch-image-models}}

}

```

```bibtex

@inproceedings{wightman2021resnet,

title={ResNet strikes back: An improved training procedure in timm},

author={Wightman, Ross and Touvron, Hugo and Jegou, Herve},

booktitle={NeurIPS 2021 Workshop on ImageNet: Past, Present, and Future}

}

```

|

sentence-transformers/distilbert-base-nli-mean-tokens | sentence-transformers | "2024-11-05T16:42:12Z" | 383,066 | 5 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"tf",

"onnx",

"safetensors",

"openvino",

"distilbert",

"feature-extraction",

"sentence-similarity",

"transformers",

"arxiv:1908.10084",

"license:apache-2.0",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] | feature-extraction | "2022-03-02T23:29:05Z" | ---

license: apache-2.0

library_name: sentence-transformers

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

pipeline_tag: feature-extraction

---

**⚠️ This model is deprecated. Please don't use it as it produces sentence embeddings of low quality. You can find recommended sentence embedding models here: [SBERT.net - Pretrained Models](https://www.sbert.net/docs/pretrained_models.html)**

# sentence-transformers/distilbert-base-nli-mean-tokens

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('sentence-transformers/distilbert-base-nli-mean-tokens')

embeddings = model.encode(sentences)

print(embeddings)

```
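For semantic search, embeddings like these are usually compared with cosine similarity. A minimal sketch with made-up 3-dimensional vectors standing in for the 768-dimensional embeddings (the library also provides `sentence_transformers.util.cos_sim` for tensors):

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query, corpus):
    """Return (index, score) pairs for corpus vectors, most similar first."""
    scores = [(i, cosine_similarity(query, vec)) for i, vec in enumerate(corpus)]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Made-up vectors for illustration only
query = [1.0, 0.0, 1.0]
corpus = [[0.0, 1.0, 0.0], [1.0, 0.0, 0.9], [0.5, 0.5, 0.5]]
print(rank_by_similarity(query, corpus))
```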

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: first, you pass your input through the transformer model, then you apply the right pooling operation on top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for
sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('sentence-transformers/distilbert-base-nli-mean-tokens')
model = AutoModel.from_pretrained('sentence-transformers/distilbert-base-nli-mean-tokens')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)

```
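Why the mask matters: padded positions must not contribute to the average. A worked example in plain Python with made-up 2-dimensional token embeddings, where the sentence ends with one padding token, mirroring what the `mean_pooling` function above computes:

```python
def masked_mean(token_embeddings, attention_mask):
    """Average token vectors where attention_mask == 1, ignoring padding (mask == 0)."""
    dim = len(token_embeddings[0])
    totals = [0.0] * dim
    count = 0
    for vector, keep in zip(token_embeddings, attention_mask):
        if keep:
            totals = [t + v for t, v in zip(totals, vector)]
            count += 1
    # Guard against an all-padding input (the torch version clamps the count instead)
    count = max(count, 1)
    return [t / count for t in totals]


# One sentence: two real tokens followed by one padding token (values made up)
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]
print(masked_mean(tokens, mask))  # [2.0, 3.0]
```

Without the mask, the padding vector would pull the average far away from the two real tokens.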

## Evaluation Results

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=sentence-transformers/distilbert-base-nli-mean-tokens)

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 128, 'do_lower_case': False}) with Transformer model: DistilBertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

This model was trained by [sentence-transformers](https://www.sbert.net/).

If you find this model helpful, feel free to cite our publication [Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks](https://arxiv.org/abs/1908.10084):

```bibtex

@inproceedings{reimers-2019-sentence-bert,

title = "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks",

author = "Reimers, Nils and Gurevych, Iryna",

booktitle = "Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing",

month = "11",

year = "2019",

publisher = "Association for Computational Linguistics",

url = "http://arxiv.org/abs/1908.10084",

}

``` |

TahaDouaji/detr-doc-table-detection | TahaDouaji | "2024-08-27T12:39:30Z" | 382,609 | 50 | transformers | [

"transformers",

"pytorch",

"safetensors",

"detr",

"object-detection",

"arxiv:2005.12872",

"arxiv:1910.09700",

"base_model:facebook/detr-resnet-50",

"base_model:finetune:facebook/detr-resnet-50",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | object-detection | "2022-03-11T15:55:14Z" | ---

tags:

- object-detection

license: apache-2.0

base_model: facebook/detr-resnet-50

---

# Model Card for detr-doc-table-detection

# Model Details

detr-doc-table-detection is a model trained to detect both **Bordered** and **Borderless** tables in documents, based on [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50).

- **Developed by:** Taha Douaji

- **Shared by [Optional]:** Taha Douaji

- **Model type:** Object Detection

- **Language(s) (NLP):** More information needed

- **License:** More information needed

- **Parent Model:** [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50)

- **Resources for more information:**

- [Model Demo Space](https://huggingface.co/spaces/trevbeers/pdf-table-extraction)

- [Associated Paper](https://arxiv.org/abs/2005.12872)

# Uses

## Direct Use

This model can be used for the task of object detection.
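Under the hood, DETR predicts each box as normalized `(center_x, center_y, width, height)` values in `[0, 1]`; turning them into pixel corner coordinates is part of what the processor's post-processing does. A simplified sketch of that conversion and of the score threshold, with hypothetical helper names and made-up values (it is not the `transformers` implementation):

```python
def center_to_corners(box):
    """(cx, cy, w, h) -> (x_min, y_min, x_max, y_max), all in the same units."""
    cx, cy, w, h = box
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def to_pixel_box(normalized_box, image_width, image_height):
    """Scale a normalized (cx, cy, w, h) box to absolute pixel corners."""
    x_min, y_min, x_max, y_max = center_to_corners(normalized_box)
    return (x_min * image_width, y_min * image_height,
            x_max * image_width, y_max * image_height)


def keep_confident(detections, threshold=0.9):
    """Keep (score, box) pairs whose score is strictly above the threshold."""
    return [(score, box) for score, box in detections if score > threshold]


# Made-up predictions for an 800 x 600 page image
predictions = [(0.98, (0.5, 0.5, 0.5, 0.25)), (0.4, (0.25, 0.75, 0.25, 0.25))]
for score, box in keep_confident(predictions):
    print(score, to_pixel_box(box, 800, 600))  # 0.98 (200.0, 225.0, 600.0, 375.0)
```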

## Out-of-Scope Use

The model should not be used to intentionally create hostile or alienating environments for people.

# Bias, Risks, and Limitations

Significant research has explored bias and fairness issues with language models (see, e.g., [Sheng et al. (2021)](https://aclanthology.org/2021.acl-long.330.pdf) and [Bender et al. (2021)](https://dl.acm.org/doi/pdf/10.1145/3442188.3445922)). Predictions generated by the model may include disturbing and harmful stereotypes across protected classes; identity characteristics; and sensitive, social, and occupational groups.

## Recommendations

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

# Training Details

## Training Data

The model was trained on the ICDAR2019 Table Dataset.

# Environmental Impact

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

# Citation

**BibTeX:**

```bibtex

@article{DBLP:journals/corr/abs-2005-12872,

author = {Nicolas Carion and

Francisco Massa and

Gabriel Synnaeve and

Nicolas Usunier and

Alexander Kirillov and

Sergey Zagoruyko},

title = {End-to-End Object Detection with Transformers},

journal = {CoRR},

volume = {abs/2005.12872},

year = {2020},

url = {https://arxiv.org/abs/2005.12872},

archivePrefix = {arXiv},

eprint = {2005.12872},

timestamp = {Thu, 28 May 2020 17:38:09 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2005-12872.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

# Model Card Authors [optional]

Taha Douaji in collaboration with Ezi Ozoani and the Hugging Face team

# Model Card Contact

More information needed

# How to Get Started with the Model

Use the code below to get started with the model.

```python

from transformers import DetrImageProcessor, DetrForObjectDetection
import torch
from PIL import Image

# Replace IMAGE_PATH with the path to a document page image
image = Image.open("IMAGE_PATH").convert("RGB")

processor = DetrImageProcessor.from_pretrained("TahaDouaji/detr-doc-table-detection")
model = DetrForObjectDetection.from_pretrained("TahaDouaji/detr-doc-table-detection")

inputs = processor(images=image, return_tensors="pt")
with torch.no_grad():
    outputs = model(**inputs)

# convert outputs (bounding boxes and class logits) to absolute
# (x_min, y_min, x_max, y_max) boxes; only keep detections with score > 0.9
target_sizes = torch.tensor([image.size[::-1]])
results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.9)[0]

for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
    box = [round(i, 2) for i in box.tolist()]
    print(
        f"Detected {model.config.id2label[label.item()]} with confidence "
        f"{round(score.item(), 3)} at location {box}"
    )

``` |

timm/tf_mobilenetv3_small_minimal_100.in1k | timm | "2023-04-27T22:49:57Z" | 381,087 | 0 | timm | [

"timm",

"pytorch",

"safetensors",

"image-classification",

"dataset:imagenet-1k",

"arxiv:1905.02244",

"license:apache-2.0",

"region:us"

] | image-classification | "2022-12-16T05:39:34Z" | ---

tags:

- image-classification

- timm

library_name: timm

license: apache-2.0

datasets:

- imagenet-1k

---

# Model card for tf_mobilenetv3_small_minimal_100.in1k

A MobileNet-v3 image classification model. Trained on ImageNet-1k in Tensorflow by paper authors, ported to PyTorch by Ross Wightman.

## Model Details

- **Model Type:** Image classification / feature backbone
- **Model Stats:**
  - Params (M): 2.0
  - GMACs: 0.1
  - Activations (M): 1.4
  - Image size: 224 x 224
- **Papers:**
  - Searching for MobileNetV3: https://arxiv.org/abs/1905.02244
- **Dataset:** ImageNet-1k
- **Original:** https://github.com/tensorflow/tpu/tree/master/models/official/efficientnet

## Model Usage

### Image Classification

```python
from urllib.request import urlopen
from PIL import Image
import timm
import torch

img = Image.open(urlopen(
    'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'
))

model = timm.create_model('tf_mobilenetv3_small_minimal_100.in1k', pretrained=True)
model = model.eval()

# get model specific transforms (normalization, resize)
data_config = timm.data.resolve_model_data_config(model)
transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0))  # unsqueeze single image into batch of 1

top5_probabilities, top5_class_indices = torch.topk(output.softmax(dim=1) * 100, k=5)
```
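The last line of the classification snippet turns the logits into percentages with a softmax and keeps the five largest. A minimal pure-Python sketch of that step for a single image, using made-up logits rather than real model output (the helper `top_k_percent` is hypothetical, not part of timm):

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_percent(logits, k=5):
    """Return (percentages, class_indices) for the k most probable classes,
    mirroring torch.topk(output.softmax(dim=1) * 100, k=k) for one image."""
    probs = [p * 100 for p in softmax(logits)]
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    return [probs[i] for i in order], order


# Toy logits for 6 hypothetical classes (ImageNet-1k has 1000).
percentages, indices = top_k_percent([2.0, 0.5, 3.1, -1.0, 1.2, 0.0], k=5)
print(indices)  # → [2, 0, 4, 1, 5]
```

Because softmax is monotonic, the top-5 indices are the same as those of the raw logits; the softmax only matters for reporting the confidences.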

### Feature Map Extraction

```python
from urllib.request import urlopen
from PIL import Image
import timm

img = Image.open(urlopen(
    'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'
))

model = timm.create_model(
    'tf_mobilenetv3_small_minimal_100.in1k',
    pretrained=True,
    features_only=True,
)
model = model.eval()

# get model specific transforms (normalization, resize)
data_config = timm.data.resolve_model_data_config(model)
transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0))  # unsqueeze single image into batch of 1

for o in output:
    # print shape of each feature map in output
    # e.g.:
    #  torch.Size([1, 16, 112, 112])
    #  torch.Size([1, 16, 56, 56])
    #  torch.Size([1, 24, 28, 28])
    #  torch.Size([1, 48, 14, 14])
    #  torch.Size([1, 576, 7, 7])
    print(o.shape)
```
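The spatial sizes in the example shapes follow from the total downsampling (stride) of each returned stage. A quick arithmetic check, assuming the five feature levels have cumulative strides 2, 4, 8, 16 and 32 and "same"-style padding, so each side is `ceil(input / stride)`; the channel counts are copied from the example shapes. On a real model, timm reports the actual values through `model.feature_info.channels()` and `model.feature_info.reduction()`.

```python
import math

# Assumed cumulative strides of the five feature levels; channel counts are
# taken from the example shapes printed by the feature-extraction snippet.
STRIDES = [2, 4, 8, 16, 32]
CHANNELS = [16, 16, 24, 48, 576]


def feature_map_shapes(image_size=224, batch=1):
    """Expected (N, C, H, W) for each level, using ceil(size / stride)."""
    shapes = []
    for stride, channels in zip(STRIDES, CHANNELS):
        side = math.ceil(image_size / stride)
        shapes.append((batch, channels, side, side))
    return shapes


for shape in feature_map_shapes():
    print(shape)
```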

### Image Embeddings

```python
from urllib.request import urlopen
from PIL import Image
import timm

img = Image.open(urlopen(
    'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'
))

model = timm.create_model(
    'tf_mobilenetv3_small_minimal_100.in1k',
    pretrained=True,
    num_classes=0,  # remove classifier nn.Linear
)
model = model.eval()

# get model specific transforms (normalization, resize)
data_config = timm.data.resolve_model_data_config(model)
transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0))  # output is (batch_size, num_features) shaped tensor

# or equivalently (without needing to set num_classes=0)
output = model.forward_features(transforms(img).unsqueeze(0))
# output is unpooled, a (1, 576, 7, 7) shaped tensor

output = model.forward_head(output, pre_logits=True)
# output is a (1, num_features) shaped tensor
```
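`forward_head` starts from the unpooled `(1, 576, 7, 7)` map returned by `forward_features`. In timm's MobileNet-v3 its first step is a global average pool over the spatial dimensions; further head layers follow before the embedding is returned, so this sketch covers only the pooling step. It uses a tiny made-up tensor stored as nested lists instead of a real feature map:

```python
def global_avg_pool(feature_map):
    """Average a (C, H, W) nested-list tensor over H and W, giving C values."""
    pooled = []
    for channel in feature_map:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


# Toy (C=2, H=2, W=2) map; the real one from forward_features is (576, 7, 7)
# per image.
toy = [
    [[1.0, 3.0], [5.0, 7.0]],
    [[0.0, 0.0], [2.0, 2.0]],
]
print(global_avg_pool(toy))  # → [4.0, 1.0]
```

Pooling first collapses each channel to a single number, which is why the result no longer depends on the input resolution.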

## Model Comparison

Explore the dataset and runtime metrics of this model in timm [model results](https://github.com/huggingface/pytorch-image-models/tree/main/results).

## Citation

```bibtex
@inproceedings{howard2019searching,
  title={Searching for mobilenetv3},
  author={Howard, Andrew and Sandler, Mark and Chu, Grace and Chen, Liang-Chieh and Chen, Bo and Tan, Mingxing and Wang, Weijun and Zhu, Yukun and Pang, Ruoming and Vasudevan, Vijay and others},
  booktitle={Proceedings of the IEEE/CVF international conference on computer vision},
  pages={1314--1324},
  year={2019}
}
```

```bibtex
@misc{rw2019timm,
  author = {Ross Wightman},
  title = {PyTorch Image Models},
  year = {2019},
  publisher = {GitHub},
  journal = {GitHub repository},
  doi = {10.5281/zenodo.4414861},
  howpublished = {\url{https://github.com/huggingface/pytorch-image-models}}
}
```

|

neulab/codebert-python | neulab | "2023-02-27T20:56:57Z" | 379,461 | 24 | transformers | [

"transformers",

"pytorch",

"roberta",

"fill-mask",

"arxiv:2302.05527",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | "2022-09-23T15:01:36Z" | This is a `microsoft/codebert-base-mlm` model, trained for 1,000,000 steps (with `batch_size=32`) on the masked-language-modeling task using **Python** code from the `codeparrot/github-code-clean` dataset.

It is intended for use in CodeBERTScore ([https://github.com/neulab/code-bert-score](https://github.com/neulab/code-bert-score)), but it can also be used in other models or for other tasks.

For more information, see: [https://github.com/neulab/code-bert-score](https://github.com/neulab/code-bert-score)
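CodeBERTScore follows the BERTScore recipe: the candidate and reference code are embedded token by token with a model such as this one, each token is matched to its most similar token on the other side by cosine similarity, and those maxima are averaged into precision and recall. A minimal pure-Python sketch of that greedy matching on toy 2-d vectors, without idf weighting or other refinements; the function names are hypothetical and this is not the `code-bert-score` package API. Real token embeddings would come from this model's hidden states.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def greedy_match_scores(candidate, reference, beta=1.0):
    """BERTScore-style precision, recall and F-beta from token embeddings.

    candidate, reference: lists of token embedding vectors (lists of floats).
    """
    sims = [[cosine(c, r) for r in reference] for c in candidate]
    # Precision: each candidate token is matched to its most similar reference token.
    precision = sum(max(row) for row in sims) / len(candidate)
    # Recall: each reference token is matched to its most similar candidate token.
    recall = sum(
        max(sims[i][j] for i in range(len(candidate))) for j in range(len(reference))
    ) / len(reference)
    denom = beta ** 2 * precision + recall
    f_beta = (1 + beta ** 2) * precision * recall / denom if denom else 0.0
    return precision, recall, f_beta


# Toy "embeddings": the first candidate token equals the first reference token.
cand = [[1.0, 0.0], [0.0, 1.0]]
ref = [[1.0, 0.0], [1.0, 1.0], [0.0, -1.0]]
p, r, f1 = greedy_match_scores(cand, ref)
print(round(p, 4), round(r, 4), round(f1, 4))
```

The `beta` parameter sets the F-score weighting; for example, `beta=3` weighs recall more heavily than precision.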

## Citation

If you use this model for research, please cite:

```bibtex
@article{zhou2023codebertscore,
  url = {https://arxiv.org/abs/2302.05527},
  author = {Zhou, Shuyan and Alon, Uri and Agarwal, Sumit and Neubig, Graham},
  title = {CodeBERTScore: Evaluating Code Generation with Pretrained Models of Code},
  publisher = {arXiv},
  year = {2023},
}
``` |

smallcloudai/Refact-1_6B-fim | smallcloudai | "2023-11-09T07:09:31Z" | 378,868 | 129 | transformers | [

"transformers",

"pytorch",

"safetensors",

"gpt_refact",

"text-generation",

"code",

"custom_code",

"en",

"dataset:bigcode/the-stack-dedup",

"dataset:rombodawg/2XUNCENSORED_MegaCodeTraining188k",

"dataset:bigcode/commitpackft",

"arxiv:2108.12409",

"arxiv:1607.06450",

"arxiv:1910.07467",

"arxiv:1911.02150",

"license:bigscience-openrail-m",

"model-index",

"autotrain_compatible",

"region:us"

] | text-generation | "2023-08-29T15:48:36Z" | ---

pipeline_tag: text-generation

inference: true

widget:
- text: 'def print_hello_world():'
  example_title: Hello world
  group: Python

license: bigscience-openrail-m

pretrain-datasets:

- books

- arxiv

- c4

- falcon-refinedweb

- wiki

- github-issues

- stack_markdown

- self-made dataset of permissive github code

datasets:

- bigcode/the-stack-dedup

- rombodawg/2XUNCENSORED_MegaCodeTraining188k

- bigcode/commitpackft

metrics:

- code_eval

library_name: transformers

tags:

- code

model-index:
- name: Refact-1.6B
  results:
  - task:
      type: text-generation
    dataset:
      type: openai_humaneval
      name: HumanEval
    metrics:
    - name: pass@1 (T=0.01)
      type: pass@1
      value: 32.0
      verified: false
    - name: pass@1 (T=0.2)
      type: pass@1
      value: 31.5
      verified: false
    - name: pass@10 (T=0.8)
      type: pass@10
      value: 53.0
      verified: false
    - name: pass@100 (T=0.8)
      type: pass@100
      value: 76.9
      verified: false
  - task: