modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |

|---|---|---|---|---|---|---|---|---|---|

google/owlvit-base-patch32 | google | "2023-12-12T13:47:41Z" | 552,847 | 123 | transformers | [

"transformers",

"pytorch",

"safetensors",

"owlvit",

"zero-shot-object-detection",

"vision",

"arxiv:2205.06230",

"license:apache-2.0",

"region:us"

] | zero-shot-object-detection | "2022-07-05T06:30:01Z" | ---

license: apache-2.0

tags:

- vision

- zero-shot-object-detection

inference: false

---

# Model Card: OWL-ViT

## Model Details

The OWL-ViT (short for Vision Transformer for Open-World Localization) was proposed in [Simple Open-Vocabulary Object Detection with Vision Transformers](https://arxiv.org/abs/2205.06230) by Matthias Minderer, Alexey Gritsenko, Austin Stone, Maxim Neumann, Dirk Weissenborn, Alexey Dosovitskiy, Aravindh Mahendran, Anurag Arnab, Mostafa Dehghani, Zhuoran Shen, Xiao Wang, Xiaohua Zhai, Thomas Kipf, and Neil Houlsby. OWL-ViT is a zero-shot text-conditioned object detection model that can be used to query an image with one or multiple text queries.

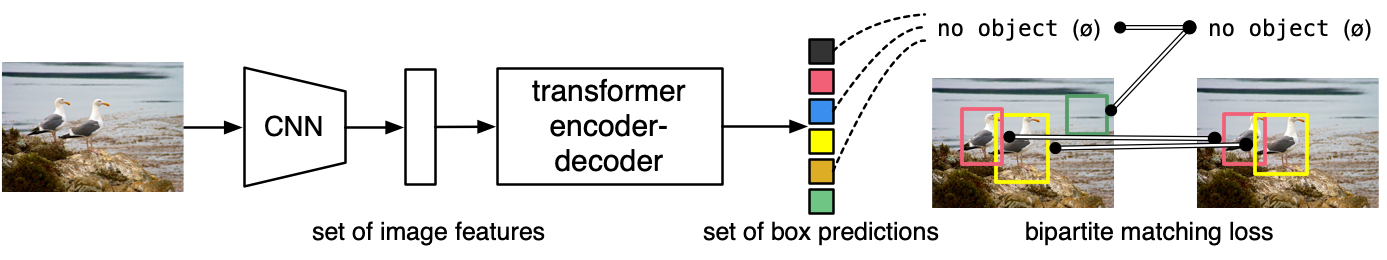

OWL-ViT uses CLIP as its multi-modal backbone, with a ViT-like Transformer to get visual features and a causal language model to get the text features. To use CLIP for detection, OWL-ViT removes the final token pooling layer of the vision model and attaches a lightweight classification and box head to each transformer output token. Open-vocabulary classification is enabled by replacing the fixed classification layer weights with the class-name embeddings obtained from the text model. The authors first train CLIP from scratch and fine-tune it end-to-end with the classification and box heads on standard detection datasets using a bipartite matching loss. One or multiple text queries per image can be used to perform zero-shot text-conditioned object detection.
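The open-vocabulary classification step described above, where class-name embeddings from the text model take the place of fixed classifier weights, can be sketched in NumPy. This is a simplified illustration under our own assumptions. The per-token image embeddings and text-query embeddings are toy stand-ins, similarity is plain cosine similarity, and any learned logit scaling or sigmoid used by the real OWL-ViT class head is omitted. The function names are hypothetical.

```python
import numpy as np

def open_vocab_scores(token_embeds: np.ndarray, query_embeds: np.ndarray) -> np.ndarray:
    """Cosine similarity between every image token and every text query.

    token_embeds: (num_tokens, dim), one embedding per vision-transformer output token
    query_embeds: (num_queries, dim), one embedding per text query (e.g. "a photo of a cat")
    Returns a (num_tokens, num_queries) score matrix.
    """
    t = token_embeds / np.linalg.norm(token_embeds, axis=-1, keepdims=True)
    q = query_embeds / np.linalg.norm(query_embeds, axis=-1, keepdims=True)
    return t @ q.T

def detect(token_embeds, token_boxes, query_embeds, threshold=0.5):
    """Each token predicts one box; keep tokens whose best query score exceeds the threshold."""
    scores = open_vocab_scores(token_embeds, query_embeds)
    labels = scores.argmax(axis=1)  # index of the best-matching text query per token
    best = scores.max(axis=1)
    keep = best > threshold
    return token_boxes[keep], best[keep], labels[keep]

# Toy example: 3 tokens, 2 text queries, 2-D embeddings
tokens = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
boxes = np.array([[0, 0, 10, 10], [5, 5, 20, 20], [2, 2, 8, 8]], dtype=float)
queries = np.array([[1.0, 0.0], [0.0, 1.0]])
kept_boxes, kept_scores, kept_labels = detect(tokens, boxes, queries, threshold=0.8)
print(kept_labels)
```

Because the "classifier weights" are just rows of `query_embeds`, a new class can be queried by embedding a new text string, with no retraining of the heads.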

### Model Date

May 2022

### Model Type

The model uses a CLIP backbone with a ViT-B/32 Transformer architecture as an image encoder and uses a masked self-attention Transformer as a text encoder. These encoders are trained to maximize the similarity of (image, text) pairs via a contrastive loss. The CLIP backbone is trained from scratch and fine-tuned together with the box and class prediction heads with an object detection objective.

### Documents

- [OWL-ViT Paper](https://arxiv.org/abs/2205.06230)

### Use with Transformers

```python
import requests
from PIL import Image
import torch
from transformers import OwlViTProcessor, OwlViTForObjectDetection

processor = OwlViTProcessor.from_pretrained("google/owlvit-base-patch32")
model = OwlViTForObjectDetection.from_pretrained("google/owlvit-base-patch32")

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)
texts = [["a photo of a cat", "a photo of a dog"]]
inputs = processor(text=texts, images=image, return_tensors="pt")

# Inference only: no gradients needed
with torch.no_grad():
    outputs = model(**inputs)

# Target image sizes (height, width) to rescale box predictions [batch_size, 2]
target_sizes = torch.Tensor([image.size[::-1]])
# Convert outputs (bounding boxes and class logits) to COCO API
results = processor.post_process_object_detection(outputs=outputs, threshold=0.1, target_sizes=target_sizes)

i = 0  # Retrieve predictions for the first image for the corresponding text queries
text = texts[i]
boxes, scores, labels = results[i]["boxes"], results[i]["scores"], results[i]["labels"]

# Print detected objects and rescaled box coordinates
for box, score, label in zip(boxes, scores, labels):
    box = [round(coord, 2) for coord in box.tolist()]
    print(f"Detected {text[label.item()]} with confidence {round(score.item(), 3)} at location {box}")
```

## Model Use

### Intended Use

The model is intended as a research output for research communities. We hope that this model will enable researchers to better understand and explore zero-shot, text-conditioned object detection. We also hope it can be used for interdisciplinary studies of the potential impact of such models, especially in areas that commonly require identifying objects whose label is unavailable during training.

#### Primary intended uses

The primary intended users of these models are AI researchers.

We primarily imagine the model will be used by researchers to better understand robustness, generalization, and other capabilities, biases, and constraints of computer vision models.

## Data

The CLIP backbone of the model was trained on publicly available image-caption data. This was done through a combination of crawling a handful of websites and using commonly-used pre-existing image datasets such as [YFCC100M](http://projects.dfki.uni-kl.de/yfcc100m/). A large portion of the data comes from our crawling of the internet. This means that the data is more representative of people and societies most connected to the internet. The prediction heads of OWL-ViT, along with the CLIP backbone, are fine-tuned on publicly available object detection datasets such as [COCO](https://cocodataset.org/#home) and [OpenImages](https://storage.googleapis.com/openimages/web/index.html).

### BibTeX entry and citation info

```bibtex

@article{minderer2022simple,

title={Simple Open-Vocabulary Object Detection with Vision Transformers},

author={Matthias Minderer and Alexey Gritsenko and Austin Stone and Maxim Neumann and Dirk Weissenborn and Alexey Dosovitskiy and Aravindh Mahendran and Anurag Arnab and Mostafa Dehghani and Zhuoran Shen and Xiao Wang and Xiaohua Zhai and Thomas Kipf and Neil Houlsby},

journal={arXiv preprint arXiv:2205.06230},

year={2022},

}

``` |

facebook/detr-resnet-50 | facebook | "2024-04-10T13:56:31Z" | 546,019 | 727 | transformers | [

"transformers",

"pytorch",

"safetensors",

"detr",

"object-detection",

"vision",

"dataset:coco",

"arxiv:2005.12872",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | object-detection | "2022-03-02T23:29:05Z" | ---

license: apache-2.0

tags:

- object-detection

- vision

datasets:

- coco

widget:

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/savanna.jpg

example_title: Savanna

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/football-match.jpg

example_title: Football Match

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/airport.jpg

example_title: Airport

---

# DETR (End-to-End Object Detection) model with ResNet-50 backbone

DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper [End-to-End Object Detection with Transformers](https://arxiv.org/abs/2005.12872) by Carion et al. and first released in [this repository](https://github.com/facebookresearch/detr).

Disclaimer: The team releasing DETR did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.

The model is trained using a "bipartite matching loss": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.
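The matching step can be sketched with SciPy's `linear_sum_assignment`, which solves the same optimal one-to-one assignment problem (recent SciPy versions use a Jonker-Volgenant variant rather than the textbook Hungarian algorithm, but the optimal assignment is the same). This is a simplified sketch under stated assumptions: the cost combines only the negative class probability and an L1 box distance (the generalized IoU term is omitted), the weight 5.0 on the L1 term is an illustrative choice, and boxes are assumed to be normalized (cx, cy, w, h). Queries are matched against the M real objects only; the N - M unmatched queries are the ones assigned "no object", which plays the role of the padded annotations.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

def match_queries(pred_logits, pred_boxes, gt_labels, gt_boxes, l1_weight=5.0):
    """Optimal one-to-one assignment between object queries and ground-truth objects.

    pred_logits: (N, num_classes + 1) class logits per query (last column = "no object")
    pred_boxes:  (N, 4) predicted boxes, normalized (cx, cy, w, h)
    gt_labels:   (M,) ground-truth class ids, M <= N
    gt_boxes:    (M, 4) ground-truth boxes in the same format
    """
    # Softmax over classes (numerically stabilized)
    exp = np.exp(pred_logits - pred_logits.max(axis=1, keepdims=True))
    probs = exp / exp.sum(axis=1, keepdims=True)
    # Classification cost: higher probability of the target class -> lower cost, shape (N, M)
    cost_class = -probs[:, gt_labels]
    # Box cost: L1 distance between every predicted and every ground-truth box, shape (N, M)
    cost_box = np.abs(pred_boxes[:, None, :] - gt_boxes[None, :, :]).sum(axis=-1)
    cost = cost_class + l1_weight * cost_box
    # Optimal assignment: minimal total cost, each query and each object used at most once
    query_idx, gt_idx = linear_sum_assignment(cost)
    return query_idx, gt_idx

# Toy example: N = 3 queries, M = 2 objects, 2 classes + "no object"
pred_boxes = np.array([[0.1, 0.1, 0.2, 0.2], [0.5, 0.5, 0.2, 0.2], [0.9, 0.9, 0.1, 0.1]])
gt_boxes = np.array([[0.9, 0.9, 0.1, 0.1], [0.1, 0.1, 0.2, 0.2]])
query_idx, gt_idx = match_queries(np.zeros((3, 3)), pred_boxes, np.array([0, 1]), gt_boxes)
matches = dict(zip(query_idx.tolist(), gt_idx.tolist()))
print(matches)  # {0: 1, 2: 0}; query 1 is left to predict "no object"
```

The losses (cross-entropy, L1 and generalized IoU) are then computed only along these matched pairs, plus a "no object" classification target for every unmatched query.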

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=facebook/detr) to look for all available DETR models.

### How to use

Here is how to use this model:

```python
from transformers import DetrImageProcessor, DetrForObjectDetection
import torch
from PIL import Image
import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

# you can specify the revision tag if you don't want the timm dependency
processor = DetrImageProcessor.from_pretrained("facebook/detr-resnet-50", revision="no_timm")
model = DetrForObjectDetection.from_pretrained("facebook/detr-resnet-50", revision="no_timm")

inputs = processor(images=image, return_tensors="pt")
with torch.no_grad():
    outputs = model(**inputs)

# convert outputs (bounding boxes and class logits) to COCO API
# let's only keep detections with score > 0.9
target_sizes = torch.tensor([image.size[::-1]])
results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.9)[0]

for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
    box = [round(i, 2) for i in box.tolist()]
    print(
        f"Detected {model.config.id2label[label.item()]} with confidence "
        f"{round(score.item(), 3)} at location {box}"
    )
```

This should output:

```

Detected remote with confidence 0.998 at location [40.16, 70.81, 175.55, 117.98]

Detected remote with confidence 0.996 at location [333.24, 72.55, 368.33, 187.66]

Detected couch with confidence 0.995 at location [-0.02, 1.15, 639.73, 473.76]

Detected cat with confidence 0.999 at location [13.24, 52.05, 314.02, 470.93]

Detected cat with confidence 0.999 at location [345.4, 23.85, 640.37, 368.72]

```

Currently, both the image processor and the model support PyTorch.

## Training data

The DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

## Training procedure

### Preprocessing

The exact details of preprocessing of images during training/validation can be found in the [original DETR repository](https://github.com/facebookresearch/detr).

Images are resized/rescaled such that the shortest side is at least 800 pixels and the largest side at most 1333 pixels, and normalized across the RGB channels with the ImageNet mean (0.485, 0.456, 0.406) and standard deviation (0.229, 0.224, 0.225).
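The resizing rule and normalization above can be sketched in plain Python. This reflects one common reading of the rule (scale the shortest side to 800 pixels unless that would push the longest side past 1333, in which case cap the longest side instead); it is an illustration, not the `DetrImageProcessor` code, and the function names are hypothetical.

```python
def resize_shape(width: int, height: int, shortest: int = 800, longest: int = 1333):
    """Target (width, height): short side 800 px unless that makes the long side exceed 1333 px."""
    scale = shortest / min(width, height)
    if max(width, height) * scale > longest:
        scale = longest / max(width, height)  # cap the longest side instead
    return round(width * scale), round(height * scale)

IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)

def normalize_pixel(rgb):
    """Scale an 8-bit RGB pixel to [0, 1], then standardize each channel with ImageNet statistics."""
    return tuple((v / 255.0 - m) / s for v, m, s in zip(rgb, IMAGENET_MEAN, IMAGENET_STD))

print(resize_shape(640, 480))    # (1067, 800): COCO-sized image, short side scaled up to 800
print(resize_shape(3000, 1000))  # (1333, 444): very wide image, long side capped at 1333
```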

### Training

The model was trained for 300 epochs on 16 V100 GPUs. This takes 3 days, with 4 images per GPU (hence a total batch size of 64).

## Evaluation results

This model achieves an AP (average precision) of **42.0** on COCO 2017 validation. For more details regarding evaluation results, we refer to table 1 of the original paper.

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-2005-12872,

author = {Nicolas Carion and

Francisco Massa and

Gabriel Synnaeve and

Nicolas Usunier and

Alexander Kirillov and

Sergey Zagoruyko},

title = {End-to-End Object Detection with Transformers},

journal = {CoRR},

volume = {abs/2005.12872},

year = {2020},

url = {https://arxiv.org/abs/2005.12872},

archivePrefix = {arXiv},

eprint = {2005.12872},

timestamp = {Thu, 28 May 2020 17:38:09 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2005-12872.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

``` |

ibm-granite/granite-timeseries-ttm-r1 | ibm-granite | "2024-11-07T10:18:15Z" | 542,791 | 218 | transformers | [

"transformers",

"safetensors",

"tinytimemixer",

"time series",

"forecasting",

"pretrained models",

"foundation models",

"time series foundation models",

"time-series",

"time-series-forecasting",

"arxiv:2401.03955",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | time-series-forecasting | "2024-04-05T03:20:10Z" | ---

license: apache-2.0

pipeline_tag: time-series-forecasting

tags:

- time series

- forecasting

- pretrained models

- foundation models

- time series foundation models

- time-series

---

# Granite-TimeSeries-TTM-R1 Model Card

<p align="center" width="100%">

<img src="ttm_image.webp" width="600">

</p>

TinyTimeMixers (TTMs) are compact pre-trained models for Multivariate Time-Series Forecasting, open-sourced by IBM Research.

**With fewer than 1 million parameters, TTM (accepted at NeurIPS 2024) introduces the first-ever “tiny” pre-trained models for time-series forecasting.**

TTM outperforms several popular benchmark models that require billions of parameters in zero-shot and few-shot forecasting. TTMs are lightweight forecasters, pre-trained on publicly available time-series data with various augmentations. TTM provides state-of-the-art zero-shot forecasts and can easily be fine-tuned for multivariate forecasting, becoming competitive with just 5% of the training data. Refer to our [paper](https://arxiv.org/pdf/2401.03955.pdf) for more details.

**The current open-source version supports point-forecasting use cases at resolutions ranging from minutely to hourly (e.g., 10 min, 15 min, 1 hour).**

**Note that zero-shot, fine-tuning, and inference tasks with TTM can easily be run on a single-GPU machine or even on a laptop.**

**New updates:** TTM-R1 comprises TTM variants pre-trained on 250M public training samples. Another set of TTM models, TTM-R2, was released recently; it is trained on a much larger pretraining dataset (~700M samples) and can be accessed [here](https://huggingface.co/ibm-granite/granite-timeseries-ttm-r2). In general, TTM-R2 models perform better than TTM-R1 models because they are trained on a larger pretraining dataset. However, the choice between R1 and R2 depends on your target data distribution, so we recommend trying both variants and picking the one that works best for your data.

## Model Description

TTM falls under the category of “focused pre-trained models”, wherein each pre-trained TTM is tailored to a particular forecasting setting (governed by the context length and forecast length). Instead of building one massive model that supports all forecasting settings, we construct smaller pre-trained models, each focused on a specific forecasting setting, which yields more accurate results. This approach also keeps our models extremely small and fast, so they can be deployed easily without substantial compute resources.

Hence, this model card hosts several pre-trained TTMs that cater to many common forecasting settings in practice. We have also released our source code along with our pretraining scripts, which users can use to pretrain models themselves. Pretraining TTMs is fast, taking only 3-6 hours on 6 A100 GPUs, as opposed to several days or weeks for traditional approaches.

Each pre-trained model is released in a separate branch of this model repository. Access the required model by passing its branch name, as shown in our getting started [notebook](https://github.com/IBM/tsfm/blob/main/notebooks/hfdemo/ttm_getting_started.ipynb).

## Model Releases (along with the branch name where the models are stored):

- **512-96:** Given the last 512 time-points (i.e., context length), this model can forecast up to the next 96 time-points (i.e., forecast length). It targets a forecasting setting of context length 512 and forecast length 96 and is recommended for hourly and minutely resolutions (e.g., 10 min, 15 min, 1 hour). This model corresponds to the TTM-Q variant used in the paper. (branch name: main) [[Benchmark Scripts]](https://github.com/ibm-granite/granite-tsfm/blob/main/notebooks/hfdemo/tinytimemixer/ttm-r1_benchmarking_512_96.ipynb)

- **1024-96:** Given the last 1024 time-points (i.e., context length), this model can forecast up to the next 96 time-points (i.e., forecast length). It targets a long forecasting setting of context length 1024 and forecast length 96 and is recommended for hourly and minutely resolutions (e.g., 10 min, 15 min, 1 hour). (branch name: 1024-96-v1) [[Benchmark Scripts]](https://github.com/ibm-granite/granite-tsfm/blob/main/notebooks/hfdemo/tinytimemixer/ttm-r1_benchmarking_1024_96.ipynb)

You can also use the [[get_model]](https://github.com/ibm-granite/granite-tsfm/blob/main/tsfm_public/toolkit/get_model.py) utility to automatically select the required model based on your input context length and forecast length requirements.

For more variants (up to forecast length 720), refer to our new model card [here](https://huggingface.co/ibm-granite/granite-timeseries-ttm-r2).
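Choosing between the two R1 branches listed above can be sketched as a small lookup. This is a hypothetical helper, not the `get_model` utility and not its logic. It assumes that the longest context length your history can fill is preferable, and that horizons shorter than 96 are handled by keeping only the first steps of the 96-step forecast. The returned branch name is what goes into the `revision` argument of `from_pretrained`.

```python
# Branches listed in this model card: context length -> branch name (forecast length 96)
TTM_R1_BRANCHES = {512: "main", 1024: "1024-96-v1"}
R1_FORECAST_LENGTH = 96

def pick_ttm_r1_branch(history_length: int, forecast_length: int) -> tuple:
    """Hypothetical helper: return (branch_name, context_length) for an R1 model.

    Assumption: prefer the longest context length that the available history can fill.
    """
    if forecast_length > R1_FORECAST_LENGTH:
        raise ValueError("R1 models forecast at most 96 steps; see TTM-R2 or rolling forecasts")
    for context_length in sorted(TTM_R1_BRANCHES, reverse=True):
        if history_length >= context_length:
            return TTM_R1_BRANCHES[context_length], context_length
    # Padding or upsampling short series is discouraged, so refuse instead
    raise ValueError("R1 models need at least 512 past time-points")

print(pick_ttm_r1_branch(history_length=2000, forecast_length=96))  # ('1024-96-v1', 1024)
print(pick_ttm_r1_branch(history_length=600, forecast_length=24))   # ('main', 512)
```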

## Model Capabilities with example scripts

The example scripts below can be used with any of the TTM models above. Update the HF model URL and branch name in the `from_pretrained` call to pick the model of your choice.

- Getting Started [[colab]](https://colab.research.google.com/github/ibm-granite/granite-tsfm/blob/main/notebooks/hfdemo/ttm_getting_started.ipynb)

- Zeroshot Multivariate Forecasting [[Example]](https://github.com/ibm-granite/granite-tsfm/blob/main/notebooks/hfdemo/ttm_getting_started.ipynb)

- Finetuned Multivariate Forecasting:

- Channel-Independent Finetuning [[Example 1]](https://github.com/ibm-granite/granite-tsfm/blob/main/notebooks/hfdemo/ttm_getting_started.ipynb) [[Example 2]](https://github.com/ibm-granite/granite-tsfm/blob/main/notebooks/hfdemo/tinytimemixer/ttm_m4_hourly.ipynb)

- Channel-Mix Finetuning [[Example]](https://github.com/ibm-granite/granite-tsfm/blob/main/notebooks/tutorial/ttm_channel_mix_finetuning.ipynb)

- **New Releases (extended features released in October 2024)**

- Finetuning and Forecasting with Exogenous/Control Variables [[Example]](https://github.com/ibm-granite/granite-tsfm/blob/main/notebooks/tutorial/ttm_with_exog_tutorial.ipynb)

- Finetuning and Forecasting with static categorical features [Example: To be added soon]

- Rolling Forecasts - Extend forecast lengths beyond 96 via rolling capability [[Example]](https://github.com/ibm-granite/granite-tsfm/blob/main/notebooks/hfdemo/ttm_rolling_prediction_getting_started.ipynb)

- Helper scripts for optimal Learning Rate suggestions for Finetuning [[Example]](https://github.com/ibm-granite/granite-tsfm/blob/main/notebooks/tutorial/ttm_with_exog_tutorial.ipynb)

## Benchmarks

TTM outperforms popular models such as TimesFM, Moirai, Chronos, Lag-Llama, Moment, GPT4TS, TimeLLM, and LLMTime in zero-/few-shot forecasting while significantly reducing computational requirements.

Moreover, TTMs are lightweight and can run even on CPU-only machines, enhancing usability and fostering wider adoption in resource-constrained environments. For more details, refer to our [paper](https://arxiv.org/pdf/2401.03955.pdf). TTM-Q in the paper maps to the `512-96` model uploaded in the main branch. For the other variants (TTM-B, TTM-E and TTM-A), please refer [here](https://huggingface.co/ibm-granite/granite-timeseries-ttm-r2).

<p align="center" width="100%">

<img src="benchmarks.webp" width="600">

</p>

## Recommended Use

1. Users must standard-scale their data externally, independently for every channel, before feeding it to the model (refer to [TSP](https://github.com/IBM/tsfm/blob/main/tsfm_public/toolkit/time_series_preprocessor.py), our data processing utility, for data scaling).

2. The current open-source version supports only minutely and hourly resolutions (e.g., 10 min, 15 min, 1 hour). Lower resolutions (e.g., weekly or monthly) are not supported in this version, as the model needs a minimum context length of 512 or 1024.

3. Upsampling or prepending zeros to artificially increase the context length of shorter datasets is not recommended and will degrade model performance.
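Per-channel standard scaling, as required in step 1, can be sketched in NumPy. This is an illustrative stand-in, not the TSP implementation: it assumes data arranged as (time, channels), fits statistics on the training split only, and uses hypothetical function names. Forecasts produced on scaled data can be mapped back with the inverse transform.

```python
import numpy as np

def fit_channel_scaler(train: np.ndarray):
    """Per-channel mean and standard deviation of training data shaped (time, channels)."""
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    std = np.where(std == 0, 1.0, std)  # keep constant channels finite after scaling
    return mean, std

def scale(x: np.ndarray, mean: np.ndarray, std: np.ndarray) -> np.ndarray:
    return (x - mean) / std

def inverse_scale(x: np.ndarray, mean: np.ndarray, std: np.ndarray) -> np.ndarray:
    return x * std + mean

# Two channels with very different magnitudes (e.g. load in kW and temperature in degrees C)
series = np.array([[100.0, 20.0], [200.0, 22.0], [300.0, 24.0], [400.0, 26.0]])
train, test = series[:3], series[3:]
mean, std = fit_channel_scaler(train)  # statistics from the training split only
scaled_test = scale(test, mean, std)
print(scaled_test)
```

Fitting on the training split only avoids leaking information from the evaluation period into the scaling statistics.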

## Model Details

For more details on TTM architecture and benchmarks, refer to our [paper](https://arxiv.org/pdf/2401.03955.pdf).

TTM-R1 currently supports two modes:

- **Zero-shot forecasting**: Directly apply the pre-trained model to your target data to get an initial forecast (with no training).

- **Fine-tuned forecasting**: Fine-tune the pre-trained model with a subset of your target data to further improve the forecast.

**Since TTM models are extremely small and fast, they can easily be fine-tuned on your available target data in a few minutes to get more accurate forecasts.**

The current release supports multivariate forecasting via both channel independence and channel-mixing approaches.

Decoder channel-mixing can be enabled during fine-tuning to capture strong channel-correlation patterns across time-series variates, a critical capability lacking in existing counterparts.

In addition, TTM also supports exogenous infusion and categorical data infusion.
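The difference between channel independence and channel mixing can be illustrated generically in NumPy. This is not TTM's decoder: the hypothetical `univariate_model` is any function mapping a batch of single series (rows) to forecasts, and channel mixing is reduced to one matrix applied across channels at each step.

```python
import numpy as np

def channel_independent_forecast(x: np.ndarray, univariate_model) -> np.ndarray:
    """x: (batch, time, channels). The same univariate model is applied to every channel separately."""
    b, t, c = x.shape
    series = x.transpose(0, 2, 1).reshape(b * c, t)       # fold channels into the batch dimension
    forecast = univariate_model(series)                   # (batch * channels, horizon)
    return forecast.reshape(b, c, -1).transpose(0, 2, 1)  # back to (batch, horizon, channels)

def channel_mix(h: np.ndarray, mixing: np.ndarray) -> np.ndarray:
    """Combine information across channels at every step: (batch, steps, channels) @ (channels, channels)."""
    return h @ mixing

def naive(series: np.ndarray) -> np.ndarray:
    """Toy univariate forecaster: repeat the last observed value for 3 future steps."""
    return np.repeat(series[:, -1:], 3, axis=1)

x = np.arange(2 * 5 * 3, dtype=float).reshape(2, 5, 3)  # 2 samples, 5 time steps, 3 channels
ci = channel_independent_forecast(x, naive)
mixed = channel_mix(ci, np.full((3, 3), 1 / 3))  # e.g. every output channel averages all channels
print(ci.shape, mixed.shape)
```

In the channel-independent path no channel ever sees another, which is why a mixing step is needed to exploit cross-channel correlations.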

### Model Sources

- **Repository:** https://github.com/ibm-granite/granite-tsfm/tree/main/tsfm_public/models/tinytimemixer

- **Paper:** https://arxiv.org/pdf/2401.03955.pdf

### Blogs and articles on TTM:

- Refer to our [wiki](https://github.com/ibm-granite/granite-tsfm/wiki)

## Uses

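```python
from transformers import Trainer
from tsfm_public.models.tinytimemixer import TinyTimeMixerForPrediction

# Assumed to be defined beforehand (see the getting started notebook):
# zeroshot_forecast_args / finetune_forecast_args (TrainingArguments),
# dset_train / dset_val / dset_test (datasets), early_stopping_callback,
# tracking_callback, optimizer and scheduler.

# Load the model from the HF Model Hub, passing the branch name in the revision field
model = TinyTimeMixerForPrediction.from_pretrained(
    "ibm-granite/granite-timeseries-ttm-r1", revision="main"
)

# Zero-shot evaluation
zeroshot_trainer = Trainer(
    model=model,
    args=zeroshot_forecast_args,
)
zeroshot_output = zeroshot_trainer.evaluate(dset_test)

# Freeze the backbone to enable few-shot fine-tuning of the remaining layers
for param in model.backbone.parameters():
    param.requires_grad = False

finetune_forecast_trainer = Trainer(
    model=model,
    args=finetune_forecast_args,
    train_dataset=dset_train,
    eval_dataset=dset_val,
    callbacks=[early_stopping_callback, tracking_callback],
    optimizers=(optimizer, scheduler),
)
finetune_forecast_trainer.train()
fewshot_output = finetune_forecast_trainer.evaluate(dset_test)
```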

## Training Data

The original r1 TTM models were trained on a collection of datasets from the Monash Time Series Forecasting repository. The datasets used include:

- Australian Electricity Demand: https://zenodo.org/records/4659727

- Australian Weather: https://zenodo.org/records/4654822

- Bitcoin dataset: https://zenodo.org/records/5122101

- KDD Cup 2018 dataset: https://zenodo.org/records/4656756

- London Smart Meters: https://zenodo.org/records/4656091

- Saugeen River Flow: https://zenodo.org/records/4656058

- Solar Power: https://zenodo.org/records/4656027

- Sunspots: https://zenodo.org/records/4654722

- Solar: https://zenodo.org/records/4656144

- US Births: https://zenodo.org/records/4656049

- Wind Farms Production data: https://zenodo.org/records/4654858

- Wind Power: https://zenodo.org/records/4656032

## Citation

Please cite the following paper if you use our model or its associated architectures/approaches in your work:

**BibTeX:**

```

@inproceedings{ekambaram2024tinytimemixersttms,

title={Tiny Time Mixers (TTMs): Fast Pre-trained Models for Enhanced Zero/Few-Shot Forecasting of Multivariate Time Series},

author={Vijay Ekambaram and Arindam Jati and Pankaj Dayama and Sumanta Mukherjee and Nam H. Nguyen and Wesley M. Gifford and Chandra Reddy and Jayant Kalagnanam},

booktitle={Advances in Neural Information Processing Systems (NeurIPS 2024)},

year={2024},

}

```

## Model Card Authors

Vijay Ekambaram, Arindam Jati, Pankaj Dayama, Wesley M. Gifford, Sumanta Mukherjee, Chandra Reddy and Jayant Kalagnanam

## IBM Public Repository Disclosure:

All content in this repository including code has been provided by IBM under the associated open source software license and IBM is under no obligation to provide enhancements, updates, or support. IBM developers produced this code as an open source project (not as an IBM product), and IBM makes no assertions as to the level of quality nor security, and will not be maintaining this code going forward. |

sentence-transformers/stsb-xlm-r-multilingual | sentence-transformers | "2024-11-05T19:56:54Z" | 540,827 | 37 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"tf",

"onnx",

"safetensors",

"openvino",

"xlm-roberta",

"feature-extraction",

"sentence-similarity",

"transformers",

"arxiv:1908.10084",

"license:apache-2.0",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] | sentence-similarity | "2022-03-02T23:29:05Z" | ---

license: apache-2.0

library_name: sentence-transformers

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

pipeline_tag: sentence-similarity

---

# sentence-transformers/stsb-xlm-r-multilingual

This is a [sentence-transformers](https://www.SBERT.net) model: it maps sentences & paragraphs to a 768-dimensional dense vector space and can be used for tasks like clustering or semantic search.

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('sentence-transformers/stsb-xlm-r-multilingual')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: first, pass your input through the transformer model, then apply the right pooling operation on top of the contextualized word embeddings.

```python
from transformers import AutoTokenizer, AutoModel
import torch

# Mean Pooling - Take attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for
sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('sentence-transformers/stsb-xlm-r-multilingual')
model = AutoModel.from_pretrained('sentence-transformers/stsb-xlm-r-multilingual')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)
```
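For semantic search, embeddings from either snippet are typically compared with cosine similarity. The sketch below shows the idea in NumPy with toy 3-dimensional vectors standing in for the model's 768-dimensional embeddings; the function names are hypothetical, and `sentence_transformers.util.cos_sim` offers a library equivalent for the similarity step.

```python
import numpy as np

def cosine_similarity_matrix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between rows of a (n, d) and rows of b (m, d)."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T

def semantic_search(query_embedding: np.ndarray, corpus_embeddings: np.ndarray, top_k: int = 2):
    """Return (corpus_index, score) pairs for the top_k most similar corpus entries."""
    scores = cosine_similarity_matrix(query_embedding[None, :], corpus_embeddings)[0]
    best = np.argsort(-scores)[:top_k]
    return [(int(i), float(scores[i])) for i in best]

# Toy vectors standing in for sentence embeddings
corpus = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.9, 0.1, 0.0]])
query = np.array([1.0, 0.0, 0.0])
hits = semantic_search(query, corpus)
print(hits)
```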

## Evaluation Results

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=sentence-transformers/stsb-xlm-r-multilingual)

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 128, 'do_lower_case': False}) with Transformer model: XLMRobertaModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

This model was trained by [sentence-transformers](https://www.sbert.net/).

If you find this model helpful, feel free to cite our publication [Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks](https://arxiv.org/abs/1908.10084):

```bibtex

@inproceedings{reimers-2019-sentence-bert,

title = "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks",

author = "Reimers, Nils and Gurevych, Iryna",

booktitle = "Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing",

month = "11",

year = "2019",

publisher = "Association for Computational Linguistics",

url = "http://arxiv.org/abs/1908.10084",

}

``` |

diffusers/stable-diffusion-xl-1.0-inpainting-0.1 | diffusers | "2023-09-03T16:36:39Z" | 539,552 | 293 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion-xl",

"stable-diffusion-xl-diffusers",

"text-to-image",

"inpainting",

"arxiv:2112.10752",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"base_model:finetune:stabilityai/stable-diffusion-xl-base-1.0",

"license:openrail++",

"diffusers:StableDiffusionXLInpaintPipeline",

"region:us"

] | text-to-image | "2023-09-01T14:07:10Z" |

---

license: openrail++

base_model: stabilityai/stable-diffusion-xl-base-1.0

tags:

- stable-diffusion-xl

- stable-diffusion-xl-diffusers

- text-to-image

- diffusers

- inpainting

inference: false

---

# SD-XL Inpainting 0.1 Model Card

SD-XL Inpainting 0.1 is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input, with the extra capability of inpainting the pictures by using a mask.

The SD-XL Inpainting 0.1 was initialized with the `stable-diffusion-xl-base-1.0` weights. The model is trained for 40k steps at resolution 1024x1024 with 5% dropping of the text-conditioning to improve classifier-free guidance sampling. For inpainting, the UNet has 5 additional input channels (4 for the encoded masked image and 1 for the mask itself) whose weights were zero-initialized after restoring the non-inpainting checkpoint. During training, we generate synthetic masks and, in 25% of cases, mask everything.
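The zero-initialization of the 5 extra input channels can be sketched in NumPy. Assumptions: a toy 1x1 input convolution with 8 output channels stands in for the UNet's real input layer (whose kernel is larger), latents are 1024 / 8 = 128 pixels wide assuming a VAE downsampling factor of 8, and the channel order [noisy latents, mask, masked-image latents] reflects our understanding of the diffusers inpainting pipelines rather than anything stated in this card. Because the new weights start at zero, the expanded layer initially produces exactly the same output as the restored non-inpainting checkpoint; this holds for any kernel size, since a convolution is linear in its input channels.

```python
import numpy as np

def expand_conv_in(weight: np.ndarray, extra_in_channels: int = 5) -> np.ndarray:
    """Append zero-initialized input channels to a conv weight of shape (out, in, kh, kw)."""
    out_c, _, kh, kw = weight.shape
    zeros = np.zeros((out_c, extra_in_channels, kh, kw), dtype=weight.dtype)
    return np.concatenate([weight, zeros], axis=1)

def conv1x1(x: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """1x1 convolution: x (batch, in, h, w), weight (out, in, 1, 1) -> (batch, out, h, w)."""
    return np.einsum("oi,bihw->bohw", weight[:, :, 0, 0], x)

rng = np.random.default_rng(0)
latent_hw = 1024 // 8  # assumed VAE downsampling factor of 8 -> 128x128 latents
noisy_latents = rng.normal(size=(1, 4, latent_hw, latent_hw))
mask = (rng.random((1, 1, latent_hw, latent_hw)) > 0.5).astype(float)
masked_image_latents = rng.normal(size=(1, 4, latent_hw, latent_hw))

# 4 + 1 + 4 = 9 input channels (channel order is an assumption)
unet_input = np.concatenate([noisy_latents, mask, masked_image_latents], axis=1)

old_weight = rng.normal(size=(8, 4, 1, 1))  # toy stand-in for the original 4-channel input conv
new_weight = expand_conv_in(old_weight)

# Zero-initialized extra channels leave the base model's output unchanged at the start of training
same = np.allclose(conv1x1(unet_input, new_weight), conv1x1(noisy_latents, old_weight))
print(unet_input.shape, new_weight.shape, same)
```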

## How to use

```py

from diffusers import AutoPipelineForInpainting

from diffusers.utils import load_image

import torch

pipe = AutoPipelineForInpainting.from_pretrained("diffusers/stable-diffusion-xl-1.0-inpainting-0.1", torch_dtype=torch.float16, variant="fp16").to("cuda")

img_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo.png"

mask_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo_mask.png"

image = load_image(img_url).resize((1024, 1024))

mask_image = load_image(mask_url).resize((1024, 1024))

prompt = "a tiger sitting on a park bench"

generator = torch.Generator(device="cuda").manual_seed(0)

image = pipe(

prompt=prompt,

image=image,

mask_image=mask_image,

guidance_scale=8.0,

num_inference_steps=20, # steps between 15 and 30 work well for us

strength=0.99, # make sure to use `strength` below 1.0

generator=generator,

).images[0]

```
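The `strength` argument controls how much of the denoising schedule is actually run. To our understanding, the diffusers image-to-image and inpainting pipelines skip the first `(1 - strength)` fraction of the `num_inference_steps` schedule and start from a partially noised version of the input; the sketch below reproduces that arithmetic under this assumption (it is not copied from diffusers, and the function name is hypothetical). With `strength=1.0` the run starts from pure noise, the setting the Limitations section associates with degraded sharpness.

```python
def denoising_steps_run(num_inference_steps: int, strength: float) -> int:
    """Sketch: number of scheduler steps executed for a given strength (img2img-style truncation)."""
    init_timestep = min(int(num_inference_steps * strength), num_inference_steps)
    t_start = max(num_inference_steps - init_timestep, 0)  # steps skipped at the noisy end
    return num_inference_steps - t_start

print(denoising_steps_run(20, 0.99))  # 19
print(denoising_steps_run(20, 1.0))   # 20
```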

**How it works:**

`image` | `mask_image`

:-------------------------:|:-------------------------:|

<img src="https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo.png" alt="drawing" width="300"/> | <img src="https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo_mask.png" alt="drawing" width="300"/>

`prompt` | `Output`

:-------------------------:|:-------------------------:|

<span style="position: relative;bottom: 150px;">a tiger sitting on a park bench</span> | <img src="https://huggingface.co/datasets/valhalla/images/resolve/main/tiger.png" alt="drawing" width="300"/>

## Model Description

- **Developed by:** The Diffusers team

- **Model type:** Diffusion-based text-to-image generative model

- **License:** [CreativeML Open RAIL++-M License](https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0/blob/main/LICENSE.md)

- **Model Description:** This is a model that can be used to generate and modify images based on text prompts. It is a [Latent Diffusion Model](https://arxiv.org/abs/2112.10752) that uses two fixed, pretrained text encoders ([OpenCLIP-ViT/G](https://github.com/mlfoundations/open_clip) and [CLIP-ViT/L](https://github.com/openai/CLIP/tree/main)).
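SDXL conditions the UNet on both text encoders at once: the per-token hidden states of CLIP-ViT/L (768 features) and OpenCLIP-ViT/G (1280 features) are concatenated along the feature axis into a 2048-wide sequence. The NumPy sketch below only illustrates that shape arithmetic. Random arrays stand in for real encoder outputs, and the pooled text embedding that SDXL also uses for conditioning is left out.

```python
import numpy as np

# Random stand-ins for the per-token hidden states of one prompt padded to 77 tokens.
# Widths: 768 for CLIP-ViT/L and 1280 for OpenCLIP-ViT/G.
SEQ_LEN = 77
clip_l_hidden = np.random.rand(1, SEQ_LEN, 768).astype(np.float32)
openclip_g_hidden = np.random.rand(1, SEQ_LEN, 1280).astype(np.float32)


def combine_text_embeddings(h_l: np.ndarray, h_g: np.ndarray) -> np.ndarray:
    """Concatenate the two encoders' token features along the last (feature) axis."""
    if h_l.shape[:2] != h_g.shape[:2]:
        raise ValueError("both encoders must produce the same batch size and token length")
    return np.concatenate([h_l, h_g], axis=-1)


prompt_embeds = combine_text_embeddings(clip_l_hidden, openclip_g_hidden)
print(prompt_embeds.shape)  # (1, 77, 2048)
```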

## Uses

### Direct Use

The model is intended for research purposes only. Possible research areas and tasks include:

- Generation of artworks and use in design and other artistic processes.

- Applications in educational or creative tools.

- Research on generative models.

- Safe deployment of models which have the potential to generate harmful content.

- Probing and understanding the limitations and biases of generative models.

Excluded uses are described below.

### Out-of-Scope Use

The model was not trained to produce factual or true representations of people or events, and therefore using the model to generate such content is out of scope for its abilities.

## Limitations and Bias

### Limitations

- The model does not achieve perfect photorealism

- The model cannot render legible text

- The model struggles with more difficult tasks which involve compositionality, such as rendering an image corresponding to “A red cube on top of a blue sphere”

- Faces and people in general may not be generated properly.

- The autoencoding part of the model is lossy.

- When the strength parameter is set to 1 (i.e. starting in-painting from a fully masked image), the quality of the image is degraded. The model retains the non-masked contents of the image, but images look less sharp. We're investigating this and working on the next version.
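To see why `strength` interacts with `num_inference_steps`, here is a small sketch of how diffusers' img2img-style pipelines typically decide how many scheduler steps actually run: only the last `int(num_inference_steps * strength)` steps are executed. This follows our reading of the `get_timesteps` logic and assumes a first-order scheduler; the SDXL inpainting pipeline also accepts `denoising_start`, which this sketch ignores. With `strength=1.0` every step runs and the masked region starts from pure noise, which is the setting described in the last limitation above.

```python
def effective_denoising_steps(num_inference_steps: int, strength: float) -> int:
    """Approximate number of denoising steps actually executed for a given strength.

    Sketch of the img2img-style timestep truncation (assumes a first-order scheduler
    and no `denoising_start`): the schedule is cut so only its final part runs.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    init_timestep = min(int(num_inference_steps * strength), num_inference_steps)
    t_start = max(num_inference_steps - init_timestep, 0)
    return num_inference_steps - t_start


print(effective_denoising_steps(20, 0.99))  # 19
```

So the example above (`num_inference_steps=20`, `strength=0.99`) runs 19 denoising steps.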

### Bias

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases.

|

facebook/mms-1b-all | facebook | "2023-06-15T10:45:44Z" | 536,378 | 112 | transformers | [

"transformers",

"pytorch",

"safetensors",

"wav2vec2",

"automatic-speech-recognition",

"mms",

"ab",

"af",

"ak",

"am",

"ar",

"as",

"av",

"ay",

"az",

"ba",

"bm",

"be",

"bn",

"bi",

"bo",

"sh",

"br",

"bg",

"ca",

"cs",

"ce",

"cv",

"ku",

"cy",

"da",

"de",

"dv",

"dz",

"el",

"en",

"eo",

"et",

"eu",

"ee",

"fo",

"fa",

"fj",

"fi",

"fr",

"fy",

"ff",

"ga",

"gl",

"gn",

"gu",

"zh",

"ht",

"ha",

"he",

"hi",

"hu",

"hy",

"ig",

"ia",

"ms",

"is",

"it",

"jv",

"ja",

"kn",

"ka",

"kk",

"kr",

"km",

"ki",

"rw",

"ky",

"ko",

"kv",

"lo",

"la",

"lv",

"ln",

"lt",

"lb",

"lg",

"mh",

"ml",

"mr",

"mk",

"mg",

"mt",

"mn",

"mi",

"my",

"nl",

"no",

"ne",

"ny",

"oc",

"om",

"or",

"os",

"pa",

"pl",

"pt",

"ps",

"qu",

"ro",

"rn",

"ru",

"sg",

"sk",

"sl",

"sm",

"sn",

"sd",

"so",

"es",

"sq",

"su",

"sv",

"sw",

"ta",

"tt",

"te",

"tg",

"tl",

"th",

"ti",

"ts",

"tr",

"uk",

"vi",

"wo",

"xh",

"yo",

"zu",

"za",

"dataset:google/fleurs",

"arxiv:2305.13516",

"license:cc-by-nc-4.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | "2023-05-27T11:43:21Z" | ---

tags:

- mms

language:

- ab

- af

- ak

- am

- ar

- as

- av

- ay

- az

- ba

- bm

- be

- bn

- bi

- bo

- sh

- br

- bg

- ca

- cs

- ce

- cv

- ku

- cy

- da

- de

- dv

- dz

- el

- en

- eo

- et

- eu

- ee

- fo

- fa

- fj

- fi

- fr

- fy

- ff

- ga

- gl

- gn

- gu

- zh

- ht

- ha

- he

- hi

- sh

- hu

- hy

- ig

- ia

- ms

- is

- it

- jv

- ja

- kn

- ka

- kk

- kr

- km

- ki

- rw

- ky

- ko

- kv

- lo

- la

- lv

- ln

- lt

- lb

- lg

- mh

- ml

- mr

- ms

- mk

- mg

- mt

- mn

- mi

- my

- zh

- nl

- 'no'

- 'no'

- ne

- ny

- oc

- om

- or

- os

- pa

- pl

- pt

- ms

- ps

- qu

- qu

- qu

- qu

- qu

- qu

- qu

- qu

- qu

- qu

- qu

- qu

- qu

- qu

- qu

- qu

- qu

- qu

- qu

- qu

- qu

- qu

- ro

- rn

- ru

- sg

- sk

- sl

- sm

- sn

- sd

- so

- es

- sq

- su

- sv

- sw

- ta

- tt

- te

- tg

- tl

- th

- ti

- ts

- tr

- uk

- ms

- vi

- wo

- xh

- ms

- yo

- ms

- zu

- za

license: cc-by-nc-4.0

datasets:

- google/fleurs

metrics:

- wer

---

# Massively Multilingual Speech (MMS) - Finetuned ASR - ALL

This checkpoint is a model fine-tuned for multi-lingual ASR and part of Facebook's [Massive Multilingual Speech project](https://research.facebook.com/publications/scaling-speech-technology-to-1000-languages/).

This checkpoint is based on the [Wav2Vec2 architecture](https://huggingface.co/docs/transformers/model_doc/wav2vec2) and makes use of adapter models to transcribe 1000+ languages.

The checkpoint consists of **1 billion parameters** and has been fine-tuned from [facebook/mms-1b](https://huggingface.co/facebook/mms-1b) on 1162 languages.

## Table Of Content

- [Example](#example)

- [Supported Languages](#supported-languages)

- [Model details](#model-details)

- [Additional links](#additional-links)

## Example

This MMS checkpoint can be used with [Transformers](https://github.com/huggingface/transformers) to transcribe audio of 1107 different languages. Let's look at a simple example.

First, we install transformers and some other libraries:

```
pip install torch accelerate torchaudio datasets
pip install --upgrade transformers
```

**Note**: In order to use MMS you need `transformers >= 4.30` installed. If the `4.30` version is not yet available [on PyPI](https://pypi.org/project/transformers/), make sure to install `transformers` from source:

```

pip install git+https://github.com/huggingface/transformers.git

```

Next, we load a couple of audio samples via `datasets`. Make sure that the audio data is sampled at 16 kHz (16,000 Hz).

```py

from datasets import load_dataset, Audio

# English

stream_data = load_dataset("mozilla-foundation/common_voice_13_0", "en", split="test", streaming=True)

stream_data = stream_data.cast_column("audio", Audio(sampling_rate=16000))

en_sample = next(iter(stream_data))["audio"]["array"]

# French

stream_data = load_dataset("mozilla-foundation/common_voice_13_0", "fr", split="test", streaming=True)

stream_data = stream_data.cast_column("audio", Audio(sampling_rate=16000))

fr_sample = next(iter(stream_data))["audio"]["array"]

```

Next, we load the model and processor

```py

from transformers import Wav2Vec2ForCTC, AutoProcessor

import torch

model_id = "facebook/mms-1b-all"

processor = AutoProcessor.from_pretrained(model_id)

model = Wav2Vec2ForCTC.from_pretrained(model_id)

```

Now we process the audio, pass it to the model, and decode the model output into a transcription, just as we usually do for Wav2Vec2 models such as [facebook/wav2vec2-base-960h](https://huggingface.co/facebook/wav2vec2-base-960h):

```py
inputs = processor(en_sample, sampling_rate=16_000, return_tensors="pt")

with torch.no_grad():
    outputs = model(**inputs).logits

# Greedy decoding: pick the most likely token for each audio frame
ids = torch.argmax(outputs, dim=-1)[0]
transcription = processor.decode(ids)
# 'joe keton disapproved of films and buster also had reservations about the media'
```
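`torch.argmax` yields one token ID per audio frame, and `processor.decode` turns that frame sequence into text with CTC greedy decoding: merge consecutive repeats, drop the blank token, and map the word delimiter `|` to a space. The pure-Python sketch below illustrates those steps on a made-up five-token vocabulary. In the Wav2Vec2 CTC tokenizer the padding token plays the role of the blank; `blank_id=0` here is an assumption of the toy vocabulary, not the real MMS vocabulary layout.

```python
from itertools import groupby


def ctc_greedy_decode(frame_ids, id_to_token, blank_id=0, word_delimiter="|"):
    """Collapse repeated frame predictions, drop CTC blanks, and map '|' to spaces."""
    # 1) Merge consecutive duplicates, e.g. [5, 5, 0, 5] -> [5, 0, 5].
    collapsed = [token_id for token_id, _ in groupby(frame_ids)]
    # 2) Remove the blank, which is what lets a genuine double letter survive step 1.
    tokens = [id_to_token[i] for i in collapsed if i != blank_id]
    # 3) Turn word delimiters into spaces and normalize surrounding whitespace.
    text = "".join(" " if t == word_delimiter else t for t in tokens)
    return " ".join(text.split())


# Hypothetical toy vocabulary (the real MMS vocabularies are per-language).
toy_vocab = {0: "<pad>", 1: "|", 2: "h", 3: "i", 4: "o"}
frames = [2, 2, 0, 3, 1, 1, 0, 2, 4, 4, 0, 4]
print(ctc_greedy_decode(frames, toy_vocab))  # hi hoo
```

Note how the blank between the two `4` runs keeps the double "o", while the repeated `2, 2` at the start collapses to a single "h".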

We can now keep the same model in memory and simply switch out the language adapters by calling the convenient `load_adapter()` function for the model and `set_target_lang()` for the tokenizer. We pass the target language as an input, e.g. `"fra"` for French.

```py
processor.tokenizer.set_target_lang("fra")
model.load_adapter("fra")

inputs = processor(fr_sample, sampling_rate=16_000, return_tensors="pt")

with torch.no_grad():
    outputs = model(**inputs).logits

ids = torch.argmax(outputs, dim=-1)[0]
transcription = processor.decode(ids)
# "ce dernier est volé tout au long de l'histoire romaine"
```

In the same way, the language can be switched to any other supported language. To list all available language IDs, run:

```py

processor.tokenizer.vocab.keys()

```
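The returned IDs are ISO 639-3 codes, some carrying a `-script_…` or `-dialect_…` suffix (for example `azj-script_latin`, as in the Supported Languages list). The helper below is a hypothetical convenience function, not part of Transformers, that groups such IDs by their base code so you can see which script or dialect variants exist before calling `set_target_lang()` and `load_adapter()`.

```python
from collections import defaultdict


def group_language_ids(lang_ids):
    """Group MMS adapter IDs such as 'azj-script_latin' by their ISO 639-3 base code.

    Hypothetical helper: IDs without a suffix map to [None].
    """
    groups = defaultdict(list)
    for lang_id in sorted(lang_ids):
        base, _, variant = lang_id.partition("-")
        groups[base].append(variant or None)
    return dict(groups)


sample_ids = ["eng", "azj-script_latin", "azj-script_cyrillic", "fra"]
print(group_language_ids(sample_ids))
```

With a loaded processor, you would pass `list(processor.tokenizer.vocab.keys())` instead of `sample_ids`.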

For more details, please have a look at [the official docs](https://huggingface.co/docs/transformers/main/en/model_doc/mms).

## Supported Languages

This model supports 1162 languages. Click the following to toggle all supported languages of this checkpoint in [ISO 639-3 code](https://en.wikipedia.org/wiki/ISO_639-3).

You can find more details about the languages and their ISO 639-3 codes in the [MMS Language Coverage Overview](https://dl.fbaipublicfiles.com/mms/misc/language_coverage_mms.html).

<details>

<summary>Click to toggle</summary>

- abi

- abk

- abp

- aca

- acd

- ace

- acf

- ach

- acn

- acr

- acu

- ade

- adh

- adj

- adx

- aeu

- afr

- agd

- agg

- agn

- agr

- agu

- agx

- aha

- ahk

- aia

- aka

- akb

- ake

- akp

- alj

- alp

- alt

- alz

- ame

- amf

- amh

- ami

- amk

- ann

- any

- aoz

- apb

- apr

- ara

- arl

- asa

- asg

- asm

- ast

- ata

- atb

- atg

- ati

- atq

- ava

- avn

- avu

- awa

- awb

- ayo

- ayr

- ayz

- azb

- azg

- azj-script_cyrillic

- azj-script_latin

- azz

- bak

- bam

- ban

- bao

- bas

- bav

- bba

- bbb

- bbc

- bbo

- bcc-script_arabic

- bcc-script_latin

- bcl

- bcw

- bdg

- bdh

- bdq

- bdu

- bdv

- beh

- bel

- bem

- ben

- bep

- bex

- bfa

- bfo

- bfy

- bfz

- bgc

- bgq

- bgr

- bgt

- bgw

- bha

- bht

- bhz

- bib

- bim

- bis

- biv

- bjr

- bjv

- bjw

- bjz

- bkd

- bkv

- blh

- blt

- blx

- blz

- bmq

- bmr

- bmu

- bmv

- bng

- bno

- bnp

- boa

- bod

- boj

- bom

- bor

- bos

- bov

- box

- bpr

- bps

- bqc

- bqi

- bqj

- bqp

- bre

- bru

- bsc

- bsq

- bss

- btd

- bts

- btt

- btx

- bud

- bul

- bus

- bvc

- bvz

- bwq

- bwu

- byr

- bzh

- bzi

- bzj

- caa

- cab

- cac-dialect_sanmateoixtatan

- cac-dialect_sansebastiancoatan

- cak-dialect_central

- cak-dialect_santamariadejesus

- cak-dialect_santodomingoxenacoj

- cak-dialect_southcentral

- cak-dialect_western

- cak-dialect_yepocapa

- cap

- car

- cas

- cat

- cax

- cbc

- cbi

- cbr

- cbs

- cbt

- cbu

- cbv

- cce

- cco

- cdj

- ceb

- ceg

- cek

- ces

- cfm

- cgc

- che

- chf

- chv

- chz

- cjo

- cjp

- cjs

- ckb

- cko

- ckt

- cla

- cle

- cly

- cme

- cmn-script_simplified

- cmo-script_khmer

- cmo-script_latin

- cmr

- cnh

- cni

- cnl

- cnt

- coe

- cof

- cok

- con

- cot

- cou

- cpa

- cpb

- cpu

- crh

- crk-script_latin

- crk-script_syllabics

- crn

- crq

- crs

- crt

- csk

- cso

- ctd

- ctg

- cto

- ctu

- cuc

- cui

- cuk

- cul

- cwa

- cwe

- cwt

- cya

- cym

- daa

- dah

- dan

- dar

- dbj

- dbq

- ddn

- ded

- des

- deu

- dga

- dgi

- dgk

- dgo

- dgr

- dhi

- did

- dig

- dik

- dip

- div

- djk

- dnj-dialect_blowowest

- dnj-dialect_gweetaawueast

- dnt

- dnw

- dop

- dos

- dsh

- dso

- dtp

- dts

- dug

- dwr

- dyi

- dyo

- dyu

- dzo

- eip

- eka

- ell

- emp

- enb

- eng

- enx

- epo

- ese

- ess

- est

- eus

- evn

- ewe

- eza

- fal

- fao

- far

- fas

- fij

- fin

- flr

- fmu

- fon

- fra

- frd

- fry

- ful

- gag-script_cyrillic

- gag-script_latin

- gai

- gam

- gau

- gbi

- gbk

- gbm

- gbo

- gde

- geb

- gej

- gil

- gjn

- gkn

- gld

- gle

- glg

- glk

- gmv

- gna

- gnd

- gng

- gof-script_latin

- gog

- gor

- gqr

- grc

- gri

- grn

- grt

- gso

- gub

- guc

- gud

- guh

- guj

- guk

- gum

- guo

- guq

- guu

- gux

- gvc

- gvl

- gwi

- gwr

- gym

- gyr

- had

- hag

- hak

- hap

- hat

- hau

- hay

- heb

- heh

- hif

- hig

- hil

- hin

- hlb

- hlt

- hne

- hnn

- hns

- hoc

- hoy

- hrv

- hsb

- hto

- hub

- hui

- hun

- hus-dialect_centralveracruz

- hus-dialect_westernpotosino

- huu

- huv

- hvn

- hwc

- hye

- hyw

- iba

- ibo

- icr

- idd

- ifa

- ifb

- ife

- ifk

- ifu

- ify

- ign

- ikk

- ilb

- ilo

- imo

- ina

- inb

- ind

- iou

- ipi

- iqw

- iri

- irk

- isl

- ita

- itl

- itv

- ixl-dialect_sangasparchajul

- ixl-dialect_sanjuancotzal

- ixl-dialect_santamarianebaj

- izr

- izz

- jac

- jam

- jav

- jbu

- jen

- jic

- jiv

- jmc

- jmd

- jpn

- jun

- juy

- jvn

- kaa

- kab

- kac

- kak

- kam

- kan

- kao

- kaq

- kat

- kay

- kaz

- kbo

- kbp

- kbq

- kbr

- kby

- kca

- kcg

- kdc

- kde

- kdh

- kdi

- kdj

- kdl

- kdn

- kdt

- kea

- kek

- ken

- keo

- ker

- key

- kez

- kfb

- kff-script_telugu

- kfw

- kfx

- khg

- khm

- khq

- kia

- kij

- kik

- kin

- kir

- kjb

- kje

- kjg

- kjh

- kki

- kkj

- kle

- klu

- klv

- klw

- kma

- kmd

- kml

- kmr-script_arabic

- kmr-script_cyrillic

- kmr-script_latin

- kmu

- knb

- kne

- knf

- knj

- knk

- kno

- kog

- kor

- kpq

- kps

- kpv

- kpy

- kpz

- kqe

- kqp

- kqr

- kqy

- krc

- kri

- krj

- krl

- krr

- krs

- kru

- ksb

- ksr

- kss

- ktb

- ktj

- kub

- kue

- kum

- kus

- kvn

- kvw

- kwd

- kwf

- kwi

- kxc

- kxf

- kxm

- kxv

- kyb

- kyc

- kyf

- kyg

- kyo

- kyq

- kyu

- kyz

- kzf

- lac

- laj

- lam

- lao

- las

- lat

- lav

- law

- lbj

- lbw

- lcp

- lee

- lef

- lem

- lew

- lex

- lgg

- lgl

- lhu

- lia

- lid

- lif

- lin

- lip

- lis

- lit

- lje

- ljp

- llg

- lln

- lme

- lnd

- lns

- lob

- lok

- lom

- lon

- loq

- lsi

- lsm

- ltz

- luc

- lug

- luo

- lwo

- lww

- lzz

- maa-dialect_sanantonio

- maa-dialect_sanjeronimo

- mad

- mag

- mah

- mai

- maj

- mak

- mal

- mam-dialect_central

- mam-dialect_northern

- mam-dialect_southern

- mam-dialect_western

- maq

- mar

- maw

- maz

- mbb

- mbc

- mbh

- mbj

- mbt

- mbu

- mbz

- mca

- mcb

- mcd

- mco

- mcp

- mcq

- mcu

- mda

- mdf

- mdv

- mdy

- med

- mee

- mej

- men

- meq

- met

- mev

- mfe

- mfh

- mfi

- mfk

- mfq

- mfy

- mfz

- mgd

- mge

- mgh

- mgo

- mhi

- mhr

- mhu

- mhx

- mhy

- mib

- mie

- mif

- mih

- mil

- mim

- min

- mio

- mip

- miq

- mit

- miy

- miz

- mjl

- mjv

- mkd

- mkl

- mkn

- mlg

- mlt

- mmg

- mnb

- mnf

- mnk

- mnw

- mnx

- moa

- mog

- mon

- mop

- mor

- mos

- mox

- moz

- mpg

- mpm

- mpp

- mpx

- mqb

- mqf

- mqj

- mqn

- mri

- mrw

- msy

- mtd

- mtj

- mto

- muh

- mup

- mur

- muv

- muy

- mvp

- mwq

- mwv

- mxb

- mxq

- mxt

- mxv

- mya

- myb

- myk

- myl

- myv

- myx

- myy

- mza

- mzi

- mzj

- mzk

- mzm

- mzw

- nab

- nag

- nan

- nas

- naw

- nca

- nch

- ncj

- ncl

- ncu

- ndj

- ndp

- ndv

- ndy

- ndz

- neb

- new

- nfa

- nfr

- nga

- ngl

- ngp

- ngu

- nhe

- nhi

- nhu

- nhw

- nhx

- nhy

- nia

- nij

- nim

- nin

- nko

- nlc

- nld

- nlg

- nlk

- nmz

- nnb

- nno

- nnq

- nnw

- noa

- nob

- nod

- nog

- not

- npi

- npl

- npy

- nso

- nst

- nsu

- ntm

- ntr

- nuj

- nus

- nuz

- nwb

- nxq

- nya

- nyf

- nyn

- nyo

- nyy

- nzi

- obo

- oci

- ojb-script_latin

- ojb-script_syllabics

- oku

- old

- omw

- onb

- ood

- orm

- ory

- oss

- ote

- otq

- ozm

- pab

- pad

- pag

- pam

- pan

- pao

- pap

- pau

- pbb

- pbc

- pbi

- pce

- pcm

- peg

- pez

- pib

- pil

- pir

- pis

- pjt

- pkb

- pls

- plw

- pmf

- pny

- poh-dialect_eastern

- poh-dialect_western

- poi

- pol

- por

- poy

- ppk

- pps

- prf

- prk

- prt

- pse

- pss

- ptu

- pui

- pus

- pwg

- pww

- pxm

- qub

- quc-dialect_central

- quc-dialect_east

- quc-dialect_north

- quf

- quh

- qul

- quw

- quy

- quz

- qvc

- qve

- qvh

- qvm

- qvn

- qvo

- qvs

- qvw

- qvz

- qwh

- qxh

- qxl

- qxn

- qxo

- qxr

- rah

- rai

- rap

- rav

- raw

- rej

- rel

- rgu

- rhg

- rif-script_arabic

- rif-script_latin

- ril

- rim

- rjs

- rkt

- rmc-script_cyrillic

- rmc-script_latin

- rmo

- rmy-script_cyrillic

- rmy-script_latin

- rng

- rnl

- roh-dialect_sursilv

- roh-dialect_vallader

- rol

- ron

- rop

- rro

- rub

- ruf

- rug

- run

- rus

- sab

- sag

- sah

- saj

- saq

- sas

- sat

- sba

- sbd

- sbl

- sbp

- sch

- sck

- sda

- sea

- seh

- ses

- sey

- sgb

- sgj

- sgw

- shi

- shk

- shn

- sho

- shp

- sid

- sig

- sil

- sja

- sjm

- sld

- slk

- slu

- slv

- sml

- smo

- sna

- snd

- sne

- snn

- snp

- snw

- som

- soy

- spa

- spp

- spy

- sqi

- sri

- srm

- srn

- srp-script_cyrillic

- srp-script_latin

- srx

- stn

- stp

- suc

- suk

- sun

- sur

- sus

- suv

- suz

- swe

- swh

- sxb

- sxn

- sya

- syl

- sza

- tac

- taj

- tam

- tao

- tap

- taq

- tat

- tav

- tbc

- tbg

- tbk

- tbl

- tby

- tbz

- tca

- tcc

- tcs

- tcz

- tdj

- ted

- tee

- tel

- tem

- teo

- ter

- tes

- tew

- tex

- tfr

- tgj

- tgk

- tgl

- tgo

- tgp

- tha

- thk

- thl

- tih

- tik

- tir

- tkr

- tlb

- tlj

- tly

- tmc

- tmf

- tna

- tng

- tnk

- tnn

- tnp

- tnr

- tnt

- tob

- toc

- toh

- tom

- tos

- tpi

- tpm

- tpp

- tpt

- trc

- tri

- trn

- trs

- tso

- tsz

- ttc

- tte

- ttq-script_tifinagh

- tue

- tuf

- tuk-script_arabic

- tuk-script_latin

- tuo

- tur

- tvw

- twb

- twe

- twu

- txa

- txq

- txu

- tye

- tzh-dialect_bachajon

- tzh-dialect_tenejapa

- tzj-dialect_eastern

- tzj-dialect_western

- tzo-dialect_chamula

- tzo-dialect_chenalho

- ubl

- ubu

- udm

- udu

- uig-script_arabic

- uig-script_cyrillic

- ukr

- umb

- unr

- upv

- ura

- urb

- urd-script_arabic

- urd-script_devanagari

- urd-script_latin

- urk

- urt

- ury

- usp

- uzb-script_cyrillic

- uzb-script_latin

- vag

- vid

- vie

- vif

- vmw

- vmy

- vot

- vun

- vut

- wal-script_ethiopic

- wal-script_latin

- wap

- war

- waw

- way

- wba

- wlo

- wlx

- wmw

- wob

- wol

- wsg

- wwa

- xal

- xdy

- xed

- xer

- xho

- xmm

- xnj

- xnr

- xog

- xon

- xrb

- xsb

- xsm

- xsr

- xsu

- xta

- xtd

- xte

- xtm

- xtn

- xua

- xuo

- yaa

- yad

- yal

- yam

- yao

- yas

- yat

- yaz

- yba

- ybb

- ycl

- ycn

- yea

- yka

- yli

- yor

- yre

- yua

- yue-script_traditional

- yuz

- yva

- zaa

- zab

- zac

- zad

- zae

- zai

- zam

- zao

- zaq

- zar

- zas

- zav

- zaw

- zca

- zga

- zim

- ziw

- zlm

- zmz

- zne

- zos

- zpc

- zpg

- zpi

- zpl

- zpm

- zpo

- zpt

- zpu

- zpz

- ztq

- zty

- zul

- zyb

- zyp

- zza

</details>

## Model details

- **Developed by:** Vineel Pratap et al.

- **Model type:** Multi-Lingual Automatic Speech Recognition model

- **Language(s):** 1000+ languages, see [supported languages](#supported-languages)

- **License:** CC-BY-NC 4.0 license

- **Num parameters**: 1 billion

- **Audio sampling rate**: 16,000 Hz (16 kHz)

- **Cite as:**

@article{pratap2023mms,

title={Scaling Speech Technology to 1,000+ Languages},

author={Vineel Pratap and Andros Tjandra and Bowen Shi and Paden Tomasello and Arun Babu and Sayani Kundu and Ali Elkahky and Zhaoheng Ni and Apoorv Vyas and Maryam Fazel-Zarandi and Alexei Baevski and Yossi Adi and Xiaohui Zhang and Wei-Ning Hsu and Alexis Conneau and Michael Auli},

journal={arXiv},

year={2023}

}

## Additional Links

- [Blog post](https://ai.facebook.com/blog/multilingual-model-speech-recognition/)

- [Transformers documentation](https://huggingface.co/docs/transformers/main/en/model_doc/mms)

- [Paper](https://arxiv.org/abs/2305.13516)

- [GitHub Repository](https://github.com/facebookresearch/fairseq/tree/main/examples/mms#asr)

- [Other **MMS** checkpoints](https://huggingface.co/models?other=mms)

- MMS base checkpoints:
  - [facebook/mms-1b](https://huggingface.co/facebook/mms-1b)
  - [facebook/mms-300m](https://huggingface.co/facebook/mms-300m)

- [Official Space](https://huggingface.co/spaces/facebook/MMS)

|

xiaxy/elastic-bert-chinese-ner | xiaxy | "2022-11-24T01:07:03Z" | 535,350 | 4 | transformers | [

"transformers",

"pytorch",

"bert",

"token-classification",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | token-classification | "2022-11-23T10:18:47Z" | ---

license: apache-2.0

---

A Chinese NER model adapted for elastic8, supporting recognition of person names, place names, and organization names.

|

jbetker/wav2vec2-large-robust-ft-libritts-voxpopuli | jbetker | "2022-02-25T19:07:57Z" | 534,537 | 8 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | "2022-03-02T23:29:05Z" | This checkpoint is a wav2vec2-large model that is useful for generating transcriptions with punctuation. It is intended for use in building transcriptions for TTS models, where punctuation is very important for prosody.

This model was created by fine-tuning the `facebook/wav2vec2-large-robust-ft-libri-960h` checkpoint on the [libritts](https://research.google/tools/datasets/libri-tts/) and [voxpopuli](https://github.com/facebookresearch/voxpopuli) datasets with a new vocabulary that includes punctuation.

The model gets a respectable WER of 4.45% on the librispeech validation set. The baseline, `facebook/wav2vec2-large-robust-ft-libri-960h`, got 4.3%.
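WER (word error rate) is the word-level edit distance between reference and hypothesis, (substitutions + deletions + insertions) divided by the number of reference words. The sketch below is a plain dynamic-programming implementation for illustration. The card does not state how text was normalized (casing, punctuation) before computing the 4.45% figure, which matters a lot for a model that outputs punctuation.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] holds the edit distance between the previous reference prefix and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of a hypothesis word
                prev[j - 1] + cost,  # substitution (or match when cost == 0)
            )
        prev = curr
    # Guard against an empty reference to avoid dividing by zero.
    return prev[len(hyp)] / max(len(ref), 1)


print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))  # 2 errors / 6 words
```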

Since the model was fine-tuned on clean audio, it is not well-suited for noisy audio like CommonVoice (though I may upload a checkpoint for that soon too). It still performs reasonably well on it, though.

The vocabulary is also uploaded to the model hub as `jbetker/tacotron_symbols`.

Check out my speech transcription script repo, [ocotillo](https://github.com/neonbjb/ocotillo), for usage examples. |

davebulaval/MeaningBERT | davebulaval | "2024-03-24T01:17:22Z" | 534,118 | 2 | transformers | [

"transformers",

"safetensors",

"bert",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | "2023-11-14T01:15:53Z" | ---

title: MeaningBERT

emoji: 🦀

colorFrom: purple

colorTo: indigo

sdk: gradio

sdk_version: 4.2.0

app_file: app.py

pinned: false

---

# Here is MeaningBERT

MeaningBERT is an automatic and trainable metric for assessing meaning preservation between sentences. MeaningBERT was proposed in our article [MeaningBERT: assessing meaning preservation between sentences](https://www.frontiersin.org/articles/10.3389/frai.2023.1223924/full).

Its goal is to assess meaning preservation between two sentences in a way that correlates highly with human judgments and passes sanity checks. For more details, refer to our publicly available article.

> This public version of our model uses the best trained model (whereas our article reports the average performance of 10 models), trained for a longer period (500 epochs instead of 250). We later observed that the model can further reduce the dev loss and increase performance with this longer training. We also replaced the data augmentation technique used in the article with a more robust one that also includes the commutative property of the meaning function, namely Meaning(Sent_a, Sent_b) = Meaning(Sent_b, Sent_a).
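One simple way to build the commutative property into training data is to add every labeled pair in both orders with the same target score. The sketch below illustrates only that idea; it is not the augmentation code used for MeaningBERT, and the `(sentence_a, sentence_b, score)` tuple format is a hypothetical choice.

```python
def augment_with_swaps(examples):
    """Add (b, a, score) for every (a, b, score) so that Meaning(a, b) == Meaning(b, a).

    `examples` is a list of hypothetical (sentence_a, sentence_b, score) tuples.
    Identical pairs are not duplicated, since swapping them changes nothing.
    """
    augmented = list(examples)
    for sent_a, sent_b, score in examples:
        if sent_a != sent_b:
            augmented.append((sent_b, sent_a, score))
    return augmented


pairs = [("He wanted to make them pay.", "He wanted them to pay.", 80.0),
         ("This sandwich looks delicious.", "This sandwich looks delicious.", 100.0)]
print(augment_with_swaps(pairs))
```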

- [HuggingFace Model Card](https://huggingface.co/davebulaval/MeaningBERT)

- [HuggingFace Metric Card](https://huggingface.co/spaces/davebulaval/meaningbert)

## Sanity Check

Correlation to human judgment is one way to evaluate the quality of a meaning preservation metric. However, it is inherently subjective, since it uses human judgment as a gold standard, and expensive, since it requires a large dataset annotated by several humans. As an alternative, we designed two automated tests: evaluating meaning preservation between identical sentences (which should be 100% preserving) and between unrelated sentences (which should be 0% preserving).

In these tests, the meaning preservation target value is not subjective and does not require human annotation to be measured. They represent a trivial and minimal threshold that a good automatic meaning preservation metric should be able to achieve. Namely, a metric should at minimum return a perfect score (i.e., 100%) if two identical sentences are compared and return a null score (i.e., 0%) if two sentences are completely unrelated.

### Identical Sentences

The first test evaluates meaning preservation between identical sentences. To analyze the metrics' capability to pass this test, we count the number of times a metric rating was greater than or equal to a threshold value X∈[95, 99] and divide it by the number of sentences, giving the ratio of times the metric returns the expected rating. To account for computer floating-point inaccuracy, we round the ratings to the nearest integer and do not use a threshold value of 100%.
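The identical-sentence test reduces to a pass ratio per threshold. The sketch below follows the description above (round, compare with `>=`, divide by the number of sentences); the ratings are made-up numbers. Python's built-in `round` uses round-half-to-even, which only matters for ratings ending exactly in `.5`.

```python
def identical_sentence_pass_ratio(ratings, threshold):
    """Share of identical-sentence ratings that reach `threshold` after rounding."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    passed = sum(1 for r in ratings if round(r) >= threshold)
    return passed / len(ratings)


# Hypothetical ratings on a 0-100 scale for four identical sentence pairs.
ratings = [99.6, 97.2, 94.4, 100.0]
for x in range(95, 100):  # X in [95, 99]
    print(x, identical_sentence_pass_ratio(ratings, x))
```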

### Unrelated Sentences

Our second test evaluates meaning preservation between a source sentence and an unrelated sentence generated by a large language model. The idea is to verify that the metric finds a meaning preservation rating of 0 when given a completely irrelevant sentence mainly composed of irrelevant words (also known as word soup). Since this test's expected rating is 0, we check that the metric rating is less than or equal to a threshold value X∈[1, 5].

Again, to account for computer floating-point inaccuracy, we round the ratings to the nearest integer and do not use a threshold value of 0%.
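The unrelated-sentence test mirrors the previous one with the comparison flipped: a rating passes when its rounded value is at most the threshold. Again, the ratings below are made up for illustration.

```python
def unrelated_sentence_pass_ratio(ratings, threshold):
    """Share of unrelated-sentence ratings at or below `threshold` after rounding."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    passed = sum(1 for r in ratings if round(r) <= threshold)
    return passed / len(ratings)


# Hypothetical ratings for four source/word-soup pairs.
ratings = [0.3, 2.4, 6.8, 4.9]
for x in range(1, 6):  # X in [1, 5]
    print(x, unrelated_sentence_pass_ratio(ratings, x))
```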

## Use MeaningBERT

You can use MeaningBERT as a [model](https://huggingface.co/davebulaval/MeaningBERT) that you can retrain or use for inference with HuggingFace:

```python

# Load model directly

from transformers import AutoTokenizer, AutoModelForSequenceClassification

tokenizer = AutoTokenizer.from_pretrained("davebulaval/MeaningBERT")

model = AutoModelForSequenceClassification.from_pretrained("davebulaval/MeaningBERT")

```

or you can use MeaningBERT as a metric for evaluation (no retraining) with HuggingFace:

```python
import torch
from transformers import AutoTokenizer, AutoModelForSequenceClassification

tokenizer = AutoTokenizer.from_pretrained("davebulaval/MeaningBERT")
scorer = AutoModelForSequenceClassification.from_pretrained("davebulaval/MeaningBERT")
scorer.eval()

documents = ["He wanted to make them pay.", "This sandwich looks delicious.", "He wants to eat."]
simplifications = ["He wanted to make them pay.", "This sandwich looks delicious.",
                   "Whatever, whenever, this is a sentence."]

# We tokenize the text as a pair and return PyTorch tensors
tokenize_text = tokenizer(documents, simplifications, truncation=True, padding=True, return_tensors="pt")

with torch.no_grad():
    # We process the text
    scores = scorer(**tokenize_text)

print(scores.logits.tolist())
```

or use our HuggingFace Metric module:

```python
import evaluate

documents = ["He wanted to make them pay.", "This sandwich looks delicious.", "He wants to eat."]
simplifications = ["He wanted to make them pay.", "This sandwich looks delicious.",
                   "Whatever, whenever, this is a sentence."]

meaning_bert = evaluate.load("davebulaval/meaningbert")
print(meaning_bert.compute(documents=documents, simplifications=simplifications))
```

------------------

## Cite

Use the following citation to cite MeaningBERT

```

@ARTICLE{10.3389/frai.2023.1223924,

AUTHOR={Beauchemin, David and Saggion, Horacio and Khoury, Richard},

TITLE={MeaningBERT: assessing meaning preservation between sentences},

JOURNAL={Frontiers in Artificial Intelligence},

VOLUME={6},

YEAR={2023},

URL={https://www.frontiersin.org/articles/10.3389/frai.2023.1223924},

DOI={10.3389/frai.2023.1223924},

ISSN={2624-8212},

}

```

------------------

## Contributing to MeaningBERT

We welcome user input, whether it regards bugs found in the library or feature propositions! Make sure to have a look at our [contributing guidelines](https://github.com/GRAAL-Research/MeaningBERT/blob/main/.github/CONTRIBUTING.md) for more details on this matter.

## License

MeaningBERT is MIT licensed, as found in the [LICENSE file](https://github.com/GRAAL-Research/risc/blob/main/LICENSE).

------------------

|

Salesforce/blip2-opt-2.7b | Salesforce | "2024-03-22T11:58:17Z" | 533,780 | 314 | transformers | [

"transformers",

"pytorch",

"safetensors",

"blip-2",

"visual-question-answering",

"vision",

"image-to-text",

"image-captioning",

"en",

"arxiv:2301.12597",

"license:mit",

"endpoints_compatible",

"region:us"

] | image-to-text | "2023-02-06T16:21:49Z" | ---

language: en

license: mit

tags:

- vision

- image-to-text

- image-captioning

- visual-question-answering

pipeline_tag: image-to-text

---

# BLIP-2, OPT-2.7b, pre-trained only

BLIP-2 model, leveraging [OPT-2.7b](https://huggingface.co/facebook/opt-2.7b) (a large language model with 2.7 billion parameters).

It was introduced in the paper [BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models](https://arxiv.org/abs/2301.12597) by Li et al. and first released in [this repository](https://github.com/salesforce/LAVIS/tree/main/projects/blip2).

Disclaimer: The team releasing BLIP-2 did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

BLIP-2 consists of 3 models: a CLIP-like image encoder, a Querying Transformer (Q-Former) and a large language model. The authors initialize the weights of the image encoder and large language model from pre-trained checkpoints and keep them frozen while training the Querying Transformer, which is a BERT-like Transformer encoder that maps a set of "query tokens" to query embeddings, which bridge the gap between the embedding space of the image encoder and the large language model. The goal for the model is simply to predict the next text token, given the query embeddings and the previous text.

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/model_doc/blip2_architecture.jpg" alt="drawing" width="600"/>
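The key property of the Q-Former is that a fixed number of learned query tokens (32 in the BLIP-2 paper) attend to the frozen image features and come out as a fixed-length sequence that is projected into the language model's embedding space and prepended to the text embeddings as a soft prompt. The NumPy sketch below is a toy, single-layer, single-head illustration of that data flow with random weights and made-up sizes; the real Q-Former is a multi-layer BERT-style encoder with self-attention and cross-attention blocks, and none of these weights come from the checkpoint.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def toy_query_cross_attention(queries, image_feats, w_q, w_k, w_v, w_proj):
    """Toy cross-attention from learned queries to image features, then a linear
    projection into the language model's embedding width (illustration only)."""
    q = queries @ w_q                               # (num_queries, d)
    k = image_feats @ w_k                           # (num_patches, d)
    v = image_feats @ w_v                           # (num_patches, d)
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))  # (num_queries, num_patches)
    return (attn @ v) @ w_proj                      # (num_queries, llm_dim)


rng = np.random.default_rng(0)
# Assumed toy sizes: 32 queries and 257 image tokens; other widths are arbitrary.
num_queries, num_patches, img_dim, d, llm_dim = 32, 257, 96, 64, 128
queries = rng.normal(size=(num_queries, d))             # learned query tokens (random here)
image_feats = rng.normal(size=(num_patches, img_dim))   # stands in for frozen encoder output
w_q = rng.normal(size=(d, d))
w_k = rng.normal(size=(img_dim, d))
w_v = rng.normal(size=(img_dim, d))
w_proj = rng.normal(size=(d, llm_dim))

soft_prompt = toy_query_cross_attention(queries, image_feats, w_q, w_k, w_v, w_proj)
text_embeds = rng.normal(size=(5, llm_dim))             # embeddings of 5 prompt tokens
llm_input = np.concatenate([soft_prompt, text_embeds], axis=0)
print(soft_prompt.shape, llm_input.shape)  # (32, 128) (37, 128)
```

Whatever the number of image tokens, the language model always receives `num_queries` visual vectors, which keeps the prefix short and fixed in size.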

This design allows the model to be used for tasks such as:

- image captioning

- visual question answering (VQA)

- chat-like conversations by feeding the image and the previous conversation as prompt to the model
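For the OPT-based BLIP-2 checkpoints, the Transformers examples phrase visual questions as `"Question: ... Answer:"`. The helper below builds such a prompt and, as an assumption rather than a documented format, carries earlier turns by simply concatenating them in the same template. The resulting string would be passed as the text argument, e.g. `processor(raw_image, prompt, return_tensors="pt")`.

```python
def build_vqa_prompt(question, history=()):
    """Build a BLIP-2 (OPT) prompt from earlier (question, answer) turns plus a new question.

    The "Question: ... Answer:" template follows the Transformers BLIP-2 examples;
    joining previous turns with spaces is an assumption, not a documented format.
    """
    turns = [f"Question: {q} Answer: {a}" for q, a in history]
    turns.append(f"Question: {question} Answer:")
    return " ".join(turns)


print(build_vqa_prompt("what color is it?", history=[("what is in the picture?", "a dog")]))
```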

## Direct Use and Downstream Use

You can use the raw model for conditional text generation given an image and optional text. See the [model hub](https://huggingface.co/models?search=Salesforce/blip) to look for fine-tuned versions on a task that interests you.

## Bias, Risks, Limitations, and Ethical Considerations

BLIP2-OPT uses off-the-shelf OPT as the language model. It inherits the same risks and limitations as mentioned in Meta's model card.

> Like other large language models for which the diversity (or lack thereof) of training data induces downstream impact on the quality of our model, OPT-175B has limitations in terms of bias and safety. OPT-175B can also have quality issues in terms of generation diversity and hallucination. In general, OPT-175B is not immune from the plethora of issues that plague modern large language models.

BLIP2 is fine-tuned on image-text datasets (e.g. [LAION](https://laion.ai/blog/laion-400-open-dataset/)) collected from the internet. As a result, the model itself is potentially vulnerable to generating equivalently inappropriate content or replicating inherent biases in the underlying data.

BLIP2 has not been tested in real world applications. It should not be directly deployed in any applications. Researchers should first carefully assess the safety and fairness of the model in relation to the specific context they’re being deployed within.

### How to use

For code examples, we refer to the [documentation](https://huggingface.co/docs/transformers/main/en/model_doc/blip-2#transformers.Blip2ForConditionalGeneration.forward.example).

### Memory requirements

The memory requirements differ based on the precision one uses. One can use 4-bit inference using [Bitsandbytes](https://huggingface.co/blog/4bit-transformers-bitsandbytes), which greatly reduces the memory requirements.

| dtype             | Largest Layer or Residual Group | Total Size | Training using Adam |
|-------------------|---------------------------------|------------|---------------------|
| float32           | 490.94 MB                       | 14.43 GB   | 57.72 GB            |
| float16/bfloat16  | 245.47 MB                       | 7.21 GB    | 28.86 GB            |
| int8              | 122.73 MB                       | 3.61 GB    | 14.43 GB            |
| int4              | 61.37 MB                        | 1.8 GB     | 7.21 GB             |
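The numbers in this table are consistent with two simple rules: size scales with bytes per parameter (4, 2, 1, and 0.5), and "Training using Adam" is about 4× the weight size (weights, gradients, and two Adam moment buffers in the same dtype). The sketch below reproduces the table from the float32 total under those assumptions; it is a rough rule of thumb that ignores activations, the CUDA context, and mixed-precision master weights.

```python
# Bytes per parameter for each dtype in the table above.
BYTES_PER_PARAM = {"float32": 4.0, "float16/bfloat16": 2.0, "int8": 1.0, "int4": 0.5}
# Rule of thumb (assumption): weights + gradients + two Adam moment buffers.
ADAM_MULTIPLIER = 4


def estimate_memory(total_fp32_gb: float) -> dict:
    """Scale a float32 model size to other dtypes and add a rough Adam training estimate."""
    params = total_fp32_gb / BYTES_PER_PARAM["float32"]  # proportional to the parameter count
    table = {}
    for dtype, nbytes in BYTES_PER_PARAM.items():
        total = params * nbytes
        table[dtype] = {"total_gb": total, "adam_training_gb": total * ADAM_MULTIPLIER}
    return table


for dtype, row in estimate_memory(14.43).items():
    print(f"{dtype:>17}: {row['total_gb']:6.2f} GB total, {row['adam_training_gb']:6.2f} GB with Adam")
```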

#### Running the model on CPU

<details>

<summary> Click to expand </summary>

```python
import requests
from PIL import Image
from transformers import Blip2Processor, Blip2ForConditionalGeneration

# Load the processor (image preprocessing + tokenizer) and the model on CPU
processor = Blip2Processor.from_pretrained("Salesforce/blip2-opt-2.7b")
model = Blip2ForConditionalGeneration.from_pretrained("Salesforce/blip2-opt-2.7b")

# Download the demo image and convert it to RGB
img_url = 'https://storage.googleapis.com/sfr-vision-language-research/BLIP/demo.jpg'
raw_image = Image.open(requests.get(img_url, stream=True).raw).convert('RGB')

# Encode the image together with the text prompt
question = "how many dogs are in the picture?"
inputs = processor(raw_image, question, return_tensors="pt")

# Generate an answer and decode it back to text
out = model.generate(**inputs)
print(processor.decode(out[0], skip_special_tokens=True).strip())
```

</details>
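
The examples in this card pass the bare question as the text input. The examples in the Transformers BLIP-2 documentation instead wrap the question in a `Question: ... Answer:` template; whether this improves answers for this checkpoint is worth checking on your own data. A minimal sketch, with `format_vqa_prompt` as a hypothetical helper name:

```python
def format_vqa_prompt(question: str) -> str:
    """Wrap a question in a "Question: ... Answer:" template (assumed format)."""
    # Strip stray whitespace so the template stays consistent.
    return f"Question: {question.strip()} Answer:"


prompt = format_vqa_prompt("how many dogs are in the picture?")
print(prompt)
```

Pass `prompt` in place of `question`, e.g. `inputs = processor(raw_image, prompt, return_tensors="pt")`.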

#### Running the model on GPU

##### In full precision

<details>

<summary> Click to expand </summary>

```python
# pip install accelerate
import requests
from PIL import Image
from transformers import Blip2Processor, Blip2ForConditionalGeneration

processor = Blip2Processor.from_pretrained("Salesforce/blip2-opt-2.7b")
# device_map="auto" (requires accelerate) places the weights on the available GPU(s)
model = Blip2ForConditionalGeneration.from_pretrained("Salesforce/blip2-opt-2.7b", device_map="auto")

img_url = 'https://storage.googleapis.com/sfr-vision-language-research/BLIP/demo.jpg'
raw_image = Image.open(requests.get(img_url, stream=True).raw).convert('RGB')

question = "how many dogs are in the picture?"
# Move the encoded inputs to the GPU to match the model
inputs = processor(raw_image, question, return_tensors="pt").to("cuda")

out = model.generate(**inputs)
print(processor.decode(out[0], skip_special_tokens=True).strip())
```

</details>

##### In half precision (`float16`)

<details>

<summary> Click to expand </summary>

```python
# pip install accelerate
import torch
import requests
from PIL import Image
from transformers import Blip2Processor, Blip2ForConditionalGeneration

processor = Blip2Processor.from_pretrained("Salesforce/blip2-opt-2.7b")
# Load the weights in float16 and place them on the available GPU(s)
model = Blip2ForConditionalGeneration.from_pretrained("Salesforce/blip2-opt-2.7b", torch_dtype=torch.float16, device_map="auto")

img_url = 'https://storage.googleapis.com/sfr-vision-language-research/BLIP/demo.jpg'
raw_image = Image.open(requests.get(img_url, stream=True).raw).convert('RGB')

question = "how many dogs are in the picture?"
# Move inputs to the GPU and cast the floating-point tensors (pixel values) to float16
inputs = processor(raw_image, question, return_tensors="pt").to("cuda", torch.float16)

out = model.generate(**inputs)
print(processor.decode(out[0], skip_special_tokens=True).strip())
```

</details>

##### In 8-bit precision (`int8`)

<details>

<summary> Click to expand </summary>

```python
# pip install accelerate bitsandbytes
import torch
import requests
from PIL import Image