url | repository_url | labels_url | comments_url | events_url | html_url | id | node_id | number | title | user | labels | state | locked | assignee | assignees | milestone | comments | created_at | updated_at | closed_at | author_association | active_lock_reason | body | reactions | timeline_url | performed_via_github_app | state_reason | draft | pull_request | is_pull_request |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/kubeflow/pipelines/issues/8313 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8313/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8313/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8313/events | https://github.com/kubeflow/pipelines/issues/8313 | 1,387,958,970 | I_kwDOB-71UM5SupK6 | 8,313 | [sdk] 403 error when API key is auto-refreshed with Out of Cluster kube-context | {

"login": "alexlatchford",

"id": 628146,

"node_id": "MDQ6VXNlcjYyODE0Ng==",

"avatar_url": "https://avatars.githubusercontent.com/u/628146?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/alexlatchford",

"html_url": "https://github.com/alexlatchford",

"followers_url": "https://api.github.com/users/alexlatchford/followers",

"following_url": "https://api.github.com/users/alexlatchford/following{/other_user}",

"gists_url": "https://api.github.com/users/alexlatchford/gists{/gist_id}",

"starred_url": "https://api.github.com/users/alexlatchford/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/alexlatchford/subscriptions",

"organizations_url": "https://api.github.com/users/alexlatchford/orgs",

"repos_url": "https://api.github.com/users/alexlatchford/repos",

"events_url": "https://api.github.com/users/alexlatchford/events{/privacy}",

"received_events_url": "https://api.github.com/users/alexlatchford/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

}

] | open | false | {

"login": "surajkota",

"id": 22246703,

"node_id": "MDQ6VXNlcjIyMjQ2NzAz",

"avatar_url": "https://avatars.githubusercontent.com/u/22246703?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/surajkota",

"html_url": "https://github.com/surajkota",

"followers_url": "https://api.github.com/users/surajkota/followers",

"following_url": "https://api.github.com/users/surajkota/following{/other_user}",

"gists_url": "https://api.github.com/users/surajkota/gists{/gist_id}",

"starred_url": "https://api.github.com/users/surajkota/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/surajkota/subscriptions",

"organizations_url": "https://api.github.com/users/surajkota/orgs",

"repos_url": "https://api.github.com/users/surajkota/repos",

"events_url": "https://api.github.com/users/surajkota/events{/privacy}",

"received_events_url": "https://api.github.com/users/surajkota/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "surajkota",

"id": 22246703,

"node_id": "MDQ6VXNlcjIyMjQ2NzAz",

"avatar_url": "https://avatars.githubusercontent.com/u/22246703?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/surajkota",

"html_url": "https://github.com/surajkota",

"followers_url": "https://api.github.com/users/surajkota/followers",

"following_url": "https://api.github.com/users/surajkota/following{/other_user}",

"gists_url": "https://api.github.com/users/surajkota/gists{/gist_id}",

"starred_url": "https://api.github.com/users/surajkota/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/surajkota/subscriptions",

"organizations_url": "https://api.github.com/users/surajkota/orgs",

"repos_url": "https://api.github.com/users/surajkota/repos",

"events_url": "https://api.github.com/users/surajkota/events{/privacy}",

"received_events_url": "https://api.github.com/users/surajkota/received_events",

"type": "User",

"site_admin": false

},

{

"login": "gkcalat",

"id": 35157096,

"node_id": "MDQ6VXNlcjM1MTU3MDk2",

"avatar_url": "https://avatars.githubusercontent.com/u/35157096?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/gkcalat",

"html_url": "https://github.com/gkcalat",

"followers_url": "https://api.github.com/users/gkcalat/followers",

"following_url": "https://api.github.com/users/gkcalat/following{/other_user}",

"gists_url": "https://api.github.com/users/gkcalat/gists{/gist_id}",

"starred_url": "https://api.github.com/users/gkcalat/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/gkcalat/subscriptions",

"organizations_url": "https://api.github.com/users/gkcalat/orgs",

"repos_url": "https://api.github.com/users/gkcalat/repos",

"events_url": "https://api.github.com/users/gkcalat/events{/privacy}",

"received_events_url": "https://api.github.com/users/gkcalat/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"Hi @alexlatchford!\r\n\r\nIt seems that the `refresh_token` has expired or been removed. Could you log out of your Google account and try repeating the steps you outlined?",

"Hey @gkcalat we're on EKS 😅",

"@alexlatchford, I am not an AWS expert, but it might have an option to set the expiration of the tokens. @surajkota may be able to help here.\r\n\r\nGenerally, the error is by design. Feel free to propose your solution here. If there will be a change in the interface, you will need to write a short design doc and present it on a KFP Community Meeting.",

"> @alexlatchford, I am not an AWS expert, but it might have an option to set the expiration of the tokens. @surajkota may be able to help here.\r\n> \r\n> Generally, the error is by design. Feel free to propose your solution here. If there will be a change in the interface, you will need to write a short design doc and present it on a KFP Community Meeting.\r\n\r\nYep, in general we can avoid it by not having longer-running workflows or by upping the expiry time, but as you mention it's an inherent flaw with the current design at the moment 😞 \r\n\r\nI'm not sure what the best solution is here, given it seems like a thorny design caused by KFP not exposing a `VirtualService` by default and using `kube-proxy`, and then needing to interface with `kfp-server-api`, which I believe is auto-generated and so likely not super flexible either 😅 I'll have a think though.",

"Afaik, this is a known issue; we have encountered it before. It needs more investigation, and I don't have a solution at this time."

] | "2022-09-27T15:29:08" | "2022-10-04T21:33:33" | null | CONTRIBUTOR | null | ### Environment

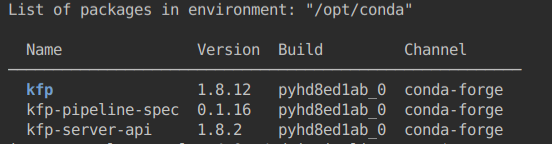

* KFP version:

<!-- For more information, see an overview of KFP installation options: https://www.kubeflow.org/docs/pipelines/installation/overview/. -->

We run KFP v1.8.1 via KF v1.5.0.

* KFP SDK version:

<!-- Specify the version of Kubeflow Pipelines that you are using. The version number appears in the left side navigation of user interface.

To find the version number, See version number shows on bottom of KFP UI left sidenav. -->

We run a forked version of the KFP SDK at ~v1.9.0; it mostly carries a couple of patches for problems we've contributed back but haven't fully reincorporated.

* All dependencies version:

<!-- Specify the output of the following shell command: $pip list | grep kfp -->

From my investigation of the current code on `master` in the open source repo, this bug still exists and should be pretty easy to reproduce with a KFP run long enough that the API key needs to be refreshed.

### Steps to reproduce

1. Instantiate a `Client` using a local Kube config, outside of a Kubernetes cluster.

2. Use a long running method like `wait_for_run_completion` (code [here](https://github.com/kubeflow/pipelines/blob/master/sdk/python/kfp/client/client.py#L1302)).

3. Wait for long enough for the API key to expire.

4. You get a `403` error 😢

It gets a 403 because the URL it is hitting on the Kubernetes API service doesn't exist as we lose the "proxy path" that gets injected, more details below.

**PS. This has the potential to impact ANY KFP SDK call to the KFP API service from what I can tell when operating outside of a cluster.**

### Expected result

<!-- What should the correct behavior be? -->

It simply shouldn't error; it should refresh the `api_key` without needing to reload the `host`.

### Materials and Reference

The root cause of the 403 is that in these scenarios instead of making calls to:

`<Kube API Server Host>/api/v1/namespaces/<namespace>/services/ml-pipeline:http/proxy//apis/v1beta1/runs/<run id>`

it calls the following URL (which doesn't exist, hence the 403):

`<Kube API Server Host>/apis/v1beta1/runs/<run id>`

Effectively it loses the `Client._KUBE_PROXY_PATH` that is injected [here](https://github.com/kubeflow/pipelines/blob/master/sdk/python/kfp/client/client.py#L302).

This happens because:

1. During `Client.__init__`, the `load_kube_config` method from the upstream `kubernetes` library is called (see [here](https://github.com/kubeflow/pipelines/blob/master/sdk/python/kfp/client/client.py#L295)).

- Under the hood eventually it sets the `refresh_api_key_hook` (see [here](https://github.com/kubernetes-client/python/blob/ada96faca164f5d5c018fb21b8ef2ecafbdf5e43/kubernetes/base/config/kube_config.py#L576)).

- In that `_refresh_api_key` method it calls `_set_config`, which will re-pull the `host` from the `~/.kube/config`.

- **Normally this would be fine but [here](https://github.com/kubeflow/pipelines/blob/master/sdk/python/kfp/client/client.py#L302) KFP mutates that value to inject `Client._KUBE_PROXY_PATH` 😢**

2. Under the hood, `wait_for_run_completion` calls `kfp_server_api.api.run_service_api.RunService.get_run_with_http_info`.

3. The `get_run_with_http_info` method calls the `auth_settings` method, which calls the `refresh_api_key_hook` when the credentials have expired.
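The sequence above can be sketched without a cluster. This is only a simulation under stated assumptions: `FakeConfiguration` and `load_fake_kube_config` are hypothetical stand-ins for the generated client configuration and for `load_kube_config`, the proxy path string is inferred from the URL shown earlier, and the stand-in `auth_settings` refreshes on every call rather than only on expiry.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Assumed shape of the proxy path, inferred from the URL quoted in this issue.
KUBE_PROXY_PATH = "api/v1/namespaces/{}/services/ml-pipeline:http/proxy/"


@dataclass
class FakeConfiguration:
    """Hypothetical stand-in for the generated client configuration."""
    host: str
    api_key: str
    refresh_api_key_hook: Optional[Callable[["FakeConfiguration"], None]] = None

    def auth_settings(self) -> dict:
        # Stand-in behaviour: always refresh. The real client is described
        # as doing this only once the credentials have expired.
        if self.refresh_api_key_hook is not None:
            self.refresh_api_key_hook(self)
        return {"authorization": self.api_key}


def load_fake_kube_config(config: FakeConfiguration) -> None:
    """Hypothetical stand-in for load_kube_config: installs a refresh hook."""
    kubeconfig = {"host": "https://kube.example.com", "token": "token-1"}

    def _refresh(cfg: FakeConfiguration) -> None:
        # Mirrors the behaviour described above: the hook re-pulls BOTH
        # the token and the host from the kube config.
        kubeconfig["token"] = "token-2"
        cfg.api_key = kubeconfig["token"]
        cfg.host = kubeconfig["host"]

    config.host = kubeconfig["host"]
    config.api_key = kubeconfig["token"]
    config.refresh_api_key_hook = _refresh


config = FakeConfiguration(host="", api_key="")
load_fake_kube_config(config)

# The client appends the proxy path to the host after loading the config.
config.host = config.host + "/" + KUBE_PROXY_PATH.format("kubeflow")
host_before = config.host

# A later API call triggers the refresh, which silently resets the host.
config.auth_settings()
host_after = config.host

print(host_before)
print(host_after)
```

After the refresh the token is new, but the host no longer contains the proxy path, so subsequent requests go to a URL that does not exist on the Kubernetes API server.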

**How to potentially fix this?**

I guess my preference would be to work out some way to not mutate the `config.host` to append the `Client._KUBE_PROXY_PATH` as that "hacky" approach clearly has its shortcomings. Unclear though what the alternative is as this is quite a convenient solution most of the time!
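One possible stopgap, which keeps the host mutation rather than removing it, is to wrap the refresh hook so the host is restored after each refresh. The sketch below is not the SDK's code: `pin_host_across_refresh` is a hypothetical helper, and it assumes only that the configuration object has writable `host` and `refresh_api_key_hook` attributes, as described in this issue. A `SimpleNamespace` stands in for the real configuration.

```python
from types import SimpleNamespace

PROXY_HOST = (
    "https://kube.example.com/"
    "api/v1/namespaces/kubeflow/services/ml-pipeline:http/proxy/"
)


def pin_host_across_refresh(config):
    """Wrap the existing refresh hook so that `config.host` survives it.

    Hypothetical helper: assumes `config` exposes writable `host` and
    `refresh_api_key_hook` attributes.
    """
    original_hook = getattr(config, "refresh_api_key_hook", None)
    if original_hook is None:
        return config  # nothing to wrap

    pinned_host = config.host  # host including the proxy path

    def _refresh_and_restore(cfg):
        original_hook(cfg)      # refresh the token as before
        cfg.host = pinned_host  # undo the host reset done by the hook

    config.refresh_api_key_hook = _refresh_and_restore
    return config


def _fake_upstream_hook(cfg):
    # Stand-in for the upstream hook: new token, host reset to the bare server.
    cfg.api_key = "token-2"
    cfg.host = "https://kube.example.com"


config = SimpleNamespace(
    host=PROXY_HOST,
    api_key="token-1",
    refresh_api_key_hook=_fake_upstream_hook,
)
pin_host_across_refresh(config)
config.refresh_api_key_hook(config)

print(config.host)
print(config.api_key)
```

This pins the host to whatever it was when the wrapper was installed, so it would mask a legitimate change of server address in the kube config; that trade-off is why it is only a stopgap.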

---

<!-- Don't delete message below to encourage users to support your issue! -->

Impacted by this bug? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8313/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8313/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8312 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8312/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8312/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8312/events | https://github.com/kubeflow/pipelines/issues/8312 | 1,387,804,595 | I_kwDOB-71UM5SuDez | 8,312 | [sdk] <Bug Name> | {

"login": "riyaj8888",

"id": 29457825,

"node_id": "MDQ6VXNlcjI5NDU3ODI1",

"avatar_url": "https://avatars.githubusercontent.com/u/29457825?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/riyaj8888",

"html_url": "https://github.com/riyaj8888",

"followers_url": "https://api.github.com/users/riyaj8888/followers",

"following_url": "https://api.github.com/users/riyaj8888/following{/other_user}",

"gists_url": "https://api.github.com/users/riyaj8888/gists{/gist_id}",

"starred_url": "https://api.github.com/users/riyaj8888/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/riyaj8888/subscriptions",

"organizations_url": "https://api.github.com/users/riyaj8888/orgs",

"repos_url": "https://api.github.com/users/riyaj8888/repos",

"events_url": "https://api.github.com/users/riyaj8888/events{/privacy}",

"received_events_url": "https://api.github.com/users/riyaj8888/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

}

] | closed | false | null | [] | null | [] | "2022-09-27T13:49:34" | "2022-09-29T22:41:28" | "2022-09-29T22:41:28" | NONE | null | null | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8312/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8312/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8308 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8308/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8308/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8308/events | https://github.com/kubeflow/pipelines/issues/8308 | 1,386,500,014 | I_kwDOB-71UM5SpE-u | 8,308 | [feature] Container start and end times associated with a Pod | {

"login": "ameya-parab",

"id": 75458630,

"node_id": "MDQ6VXNlcjc1NDU4NjMw",

"avatar_url": "https://avatars.githubusercontent.com/u/75458630?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ameya-parab",

"html_url": "https://github.com/ameya-parab",

"followers_url": "https://api.github.com/users/ameya-parab/followers",

"following_url": "https://api.github.com/users/ameya-parab/following{/other_user}",

"gists_url": "https://api.github.com/users/ameya-parab/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ameya-parab/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ameya-parab/subscriptions",

"organizations_url": "https://api.github.com/users/ameya-parab/orgs",

"repos_url": "https://api.github.com/users/ameya-parab/repos",

"events_url": "https://api.github.com/users/ameya-parab/events{/privacy}",

"received_events_url": "https://api.github.com/users/ameya-parab/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1118896905,

"node_id": "MDU6TGFiZWwxMTE4ODk2OTA1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/backend",

"name": "area/backend",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

}

] | closed | false | null | [] | null | [

"Hi @ameya-parab, thank you for bringing up this feature request. We recommend using the API from k8s to collect pod info now, and we don't have plans to implement this functionality. Feel free to open this thread if you have more thoughts!"

] | "2022-09-26T18:05:01" | "2022-09-29T22:52:10" | "2022-09-29T22:52:09" | NONE | null | ### Feature Area

<!-- Uncomment the labels below which are relevant to this feature: -->

<!-- /area frontend -->

/area backend

<!-- /area sdk -->

<!-- /area samples -->

<!-- /area components -->

### What feature would you like to see?

We want to track the start and end times of containers within a pod (Kubeflow Pipeline/Run task). In other words, for better observability, we would like to log the start and end times of the `init`, `wait` and `main` containers that are started as part of a KFP pod. The `mlpipeline` metabase currently tracks total run and task duration but does not have information on container runtimes.

### What is the use case or pain point?

To improve the observability experience, we would like to track the initialization, wait, and execution time for a Kubeflow pipeline task (preferably in the metabase).

### Is there a workaround currently?

We are currently polling the Kubernetes API server periodically to retrieve container runtimes for a specific Pod (KFP task), but there is a major issue with short-lived tasks (<10 seconds): the Pod's container details are lost if the node on which the Pod was running is scaled down.
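For illustration, the per-container timings can be derived from a Pod's status once it has been fetched. The sketch below works on a plain dictionary shaped like the Kubernetes Pod `status` field (`initContainerStatuses`, `containerStatuses`, `state.terminated.startedAt` and `finishedAt`); the helper name and the sample values are made up, and it does nothing about the scale-down problem described above.

```python
from datetime import datetime

TIMESTAMP_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def container_durations(pod_status: dict) -> dict:
    """Return {container name: seconds} for containers that have terminated."""
    statuses = (
        pod_status.get("initContainerStatuses", [])
        + pod_status.get("containerStatuses", [])
    )
    durations = {}
    for status in statuses:
        terminated = status.get("state", {}).get("terminated")
        if not terminated:
            continue  # still running or waiting: no end time yet
        started = datetime.strptime(terminated["startedAt"], TIMESTAMP_FORMAT)
        finished = datetime.strptime(terminated["finishedAt"], TIMESTAMP_FORMAT)
        durations[status["name"]] = (finished - started).total_seconds()
    return durations


# Made-up sample shaped like a Pod status payload.
sample_status = {
    "initContainerStatuses": [
        {"name": "init", "state": {"terminated": {
            "startedAt": "2022-09-26T18:05:01Z",
            "finishedAt": "2022-09-26T18:05:03Z"}}},
    ],
    "containerStatuses": [
        {"name": "main", "state": {"terminated": {
            "startedAt": "2022-09-26T18:05:03Z",
            "finishedAt": "2022-09-26T18:05:11Z"}}},
        {"name": "wait", "state": {"running": {
            "startedAt": "2022-09-26T18:05:03Z"}}},
    ],
}

durations = container_durations(sample_status)
print(durations)
```

Containers that have not terminated are skipped, so a poll that arrives too early simply returns a partial result.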

---

<!-- Don't delete message below to encourage users to support your feature request! -->

Love this idea? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8308/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8308/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8307 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8307/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8307/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8307/events | https://github.com/kubeflow/pipelines/issues/8307 | 1,386,037,128 | I_kwDOB-71UM5SnT-I | 8,307 | [bug] Unable to produce pipeline with basic Component I/O | {

"login": "MartinRogmann",

"id": 93972266,

"node_id": "U_kgDOBZnnKg",

"avatar_url": "https://avatars.githubusercontent.com/u/93972266?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/MartinRogmann",

"html_url": "https://github.com/MartinRogmann",

"followers_url": "https://api.github.com/users/MartinRogmann/followers",

"following_url": "https://api.github.com/users/MartinRogmann/following{/other_user}",

"gists_url": "https://api.github.com/users/MartinRogmann/gists{/gist_id}",

"starred_url": "https://api.github.com/users/MartinRogmann/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/MartinRogmann/subscriptions",

"organizations_url": "https://api.github.com/users/MartinRogmann/orgs",

"repos_url": "https://api.github.com/users/MartinRogmann/repos",

"events_url": "https://api.github.com/users/MartinRogmann/events{/privacy}",

"received_events_url": "https://api.github.com/users/MartinRogmann/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 1126834402,

"node_id": "MDU6TGFiZWwxMTI2ODM0NDAy",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/components",

"name": "area/components",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1260031624,

"node_id": "MDU6TGFiZWwxMjYwMDMxNjI0",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/samples",

"name": "area/samples",

"color": "d2b48c",

"default": false,

"description": ""

}

] | closed | false | null | [] | null | [

"Hi @MartinRogmann, maybe consider upgrading the kfp SDK to 1.8.14 and see if the error persists? ",

"Hi @Linchin, that solved the problem. Thank you"

] | "2022-09-26T12:56:54" | "2022-09-30T08:19:53" | "2022-09-30T08:19:53" | NONE | null | ### Environment

<!-- Please fill in those that seem relevant. -->

* How do you deploy Kubeflow Pipelines (KFP)?

<!-- For more information, see an overview of KFP installation options: https://www.kubeflow.org/docs/pipelines/installation/overview/. -->

Installed kubeflow manifest **v1.6.0-rc.1** with **minikube v1.26.0** and **kubectl v1.21.14**

For detailed output:

minikube version: v1.26.0

commit: f4b412861bb746be73053c9f6d2895f12cf78565

kubectl version

Client Version: version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.14", GitCommit:"0f77da5bd4809927e15d1658fb4aa8f13ad890a5", GitTreeState:"clean", BuildDate:"2022-06-15T14:17:29Z", GoVersion:"go1.16.15", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.14", GitCommit:"0f77da5bd4809927e15d1658fb4aa8f13ad890a5", GitTreeState:"clean", BuildDate:"2022-06-15T14:11:36Z", GoVersion:"go1.16.15", Compiler:"gc", Platform:"linux/amd64"}

* KFP version:

<!-- Specify the version of Kubeflow Pipelines that you are using. The version number appears in the left side navigation of user interface.

To find the version number, See version number shows on bottom of KFP UI left sidenav. -->

* KFP SDK version:

<!-- Specify the output of the following shell command: $pip list | grep kfp -->

Inside notebook: pip list | grep kfp

kfp 1.6.3

kfp-pipeline-spec 0.1.16

kfp-server-api 1.6.0

### Steps to reproduce

<!--

Specify how to reproduce the problem.

This may include information such as: a description of the process, code snippets, log output, or screenshots.

-->

We tried to produce a basic pipeline that saves a pandas DataFrame in one component and uses it in the next component via artifacts. We experimented with both Output[Dataset] and OutputPath(str) and tried various samples from the v1/v2 SDK without success.

To reproduce, try any of the following options:

**Imports**

```

import kfp

import kfp.dsl as dsl

from kfp.v2.dsl import component, Input, Output, InputPath, OutputPath, Dataset, Metrics, Model, Artifact

```

**Option A: Using csv**

```

@component(
    packages_to_install = ["pandas", "sklearn"],
)
def load(data: Output[Dataset]):
    import pandas as pd
    from sklearn import datasets

    dataset = datasets.load_iris()
    df = pd.DataFrame(data=dataset.data, columns= ["Petal Length", "Petal Width", "Sepal Length", "Sepal Width"])
    df.to_csv(data.path)

@component(
    packages_to_install = ["pandas"],
)
def print_head(data: Input[Dataset]):
    import pandas as pd

    df = pd.read_csv(data.path)
    print(df.head())

@dsl.pipeline(
    name='Iris',
    description='iris'
)
def pipeline():
    load_task = load()
    print_task = print_head(data=load_task.outputs["data"])

kfp.compiler.Compiler(mode=kfp.dsl.PipelineExecutionMode.V2_COMPATIBLE).compile(
    pipeline_func=pipeline,
    package_path='iris_csv.yaml')

```

**Option B: Using pickle**

```

@component(
    packages_to_install = ["pandas", "sklearn"],
)
def load(data: Output[Dataset]):
    import pickle
    import pandas as pd
    from sklearn import datasets

    dataset = datasets.load_iris()
    df = pd.DataFrame(data=dataset.data, columns= ["Petal Length", "Petal Width", "Sepal Length", "Sepal Width"])
    with open(data.path, "wb") as f:
        pickle.dump(df, f)

@component(
    packages_to_install = ["pandas"],
)
def print_head(data: Input[Dataset]):
    import pickle
    import pandas as pd

    with open(data.path, 'rb') as f:
        df = pickle.load(f)
    print(df.head())

@dsl.pipeline(
    name='Iris',
    description='iris'
)
def pipeline():
    load_task = load()
    print_task = print_head(data=load_task.outputs["data"])

kfp.compiler.Compiler(mode=kfp.dsl.PipelineExecutionMode.V2_COMPATIBLE).compile(
    pipeline_func=pipeline,
    package_path='iris_pickle.yaml')

```

**Option C: Using json**

```

@component(
    packages_to_install = ["pandas", "sklearn"],
)
def load(data: Output[Dataset]):
    import json  # imported inside the function, like the other dependencies
    import pandas as pd
    from sklearn import datasets

    dataset = datasets.load_iris()
    df = pd.DataFrame(data=dataset.data, columns= ["Petal Length", "Petal Width", "Sepal Length", "Sepal Width"])
    json_df = {"data": df}
    # json.dumps returns a str, so the file is opened in text mode
    with open(data.path, "w") as f:
        f.write(json.dumps(json_df, default=lambda df: json.loads(df.to_json())))

@component(
    packages_to_install = ["pandas"],
)
def print_head(data: Input[Dataset]):
    import json
    import pandas as pd

    with open(data.path, 'r') as f:
        df = pd.DataFrame(json.loads(f.read())["data"])
    print(df.head())

@dsl.pipeline(
    name='Iris',
    description='iris'
)
def pipeline():
    load_task = load()
    print_task = print_head(data=load_task.outputs["data"])

kfp.compiler.Compiler(mode=kfp.dsl.PipelineExecutionMode.V2_COMPATIBLE).compile(
    pipeline_func=pipeline,
    package_path='iris_json.yaml')

```

All three options result in the following error:

```

time="2022-09-26T09:50:42.264Z" level=info msg="capturing logs" argo=true

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead:

https://pip.pypa.io/warnings/venv

Traceback (most recent call last):
  File "/tmp/tmp.CjkMbFoRTh", line 826, in <module>
    executor_main()
  File "/tmp/tmp.CjkMbFoRTh", line 820, in executor_main
    function_to_execute=function_to_execute)
  File "/tmp/tmp.CjkMbFoRTh", line 549, in __init__
    artifacts_list[0])
  File "/tmp/tmp.CjkMbFoRTh", line 562, in _make_output_artifact
    os.makedirs(os.path.dirname(artifact.path), exist_ok=True)
  File "/usr/local/lib/python3.7/posixpath.py", line 156, in dirname
    p = os.fspath(p)
TypeError: expected str, bytes or os.PathLike object, not NoneType

F0926 09:51:03.011047 26 main.go:56] Failed to execute component: exit status 1

time="2022-09-26T09:51:03.028Z" level=error msg="cannot save artifact /tmp/outputs/data/data" argo=true error="stat /tmp/outputs/data/data: no such file or directory"

Error: exit status 1

```
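The last frames of the traceback can be reproduced in isolation: `os.path.dirname` raises this `TypeError` whenever it is handed `None`. The sketch below only illustrates that final step. `FakeArtifact` and `make_output_dir` are hypothetical stand-ins, and the sketch does not explain why the artifact's path was unset in the failing run.

```python
import os


class FakeArtifact:
    """Hypothetical stand-in for an output artifact whose path was never set."""
    path = None


def make_output_dir(artifact) -> None:
    # Same call as the failing line in the traceback.
    os.makedirs(os.path.dirname(artifact.path), exist_ok=True)


try:
    make_output_dir(FakeArtifact())
    error_message = None
except TypeError as exc:
    error_message = str(exc)

print(error_message)
```

The failure therefore happens before any user code in `load` runs, which is consistent with all three options failing in the same way.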

### Expected result

<!-- What should the correct behavior be? -->

Clearer examples in https://github.com/kubeflow/pipelines/tree/master/samples, as it is hard to distinguish which samples actually use the v1 or v2 syntax and are compatible with the latest version.

Can you provide a minimal working example that ideally also includes the use of multiple inputs and outputs and the logging of metrics and models? The samples we found in the docs or on GitHub either use the deprecated container_ops instead of components or could not be run by us.

### Materials and reference

<!-- Help us debug this issue by providing resources such as: sample code, background context, or links to references. -->

### Labels

<!-- Please include labels below by uncommenting them to help us better triage issues -->

<!-- /area frontend -->

<!-- /area backend -->

<!-- /area testing -->

/area sdk

/area samples

/area components

---

<!-- Don't delete message below to encourage users to support your issue! -->

Impacted by this bug? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8307/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8307/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8302 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8302/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8302/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8302/events | https://github.com/kubeflow/pipelines/issues/8302 | 1,385,145,397 | I_kwDOB-71UM5Sj6Q1 | 8,302 | [sdk] KFPv2: Custom Container component set bool default value `False` to `0` which caused runtime failure | {

"login": "zijianjoy",

"id": 37026441,

"node_id": "MDQ6VXNlcjM3MDI2NDQx",

"avatar_url": "https://avatars.githubusercontent.com/u/37026441?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/zijianjoy",

"html_url": "https://github.com/zijianjoy",

"followers_url": "https://api.github.com/users/zijianjoy/followers",

"following_url": "https://api.github.com/users/zijianjoy/following{/other_user}",

"gists_url": "https://api.github.com/users/zijianjoy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/zijianjoy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/zijianjoy/subscriptions",

"organizations_url": "https://api.github.com/users/zijianjoy/orgs",

"repos_url": "https://api.github.com/users/zijianjoy/repos",

"events_url": "https://api.github.com/users/zijianjoy/events{/privacy}",

"received_events_url": "https://api.github.com/users/zijianjoy/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

}

] | closed | false | {

"login": "Linchin",

"id": 12806577,

"node_id": "MDQ6VXNlcjEyODA2NTc3",

"avatar_url": "https://avatars.githubusercontent.com/u/12806577?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Linchin",

"html_url": "https://github.com/Linchin",

"followers_url": "https://api.github.com/users/Linchin/followers",

"following_url": "https://api.github.com/users/Linchin/following{/other_user}",

"gists_url": "https://api.github.com/users/Linchin/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Linchin/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Linchin/subscriptions",

"organizations_url": "https://api.github.com/users/Linchin/orgs",

"repos_url": "https://api.github.com/users/Linchin/repos",

"events_url": "https://api.github.com/users/Linchin/events{/privacy}",

"received_events_url": "https://api.github.com/users/Linchin/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "Linchin",

"id": 12806577,

"node_id": "MDQ6VXNlcjEyODA2NTc3",

"avatar_url": "https://avatars.githubusercontent.com/u/12806577?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Linchin",

"html_url": "https://github.com/Linchin",

"followers_url": "https://api.github.com/users/Linchin/followers",

"following_url": "https://api.github.com/users/Linchin/following{/other_user}",

"gists_url": "https://api.github.com/users/Linchin/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Linchin/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Linchin/subscriptions",

"organizations_url": "https://api.github.com/users/Linchin/orgs",

"repos_url": "https://api.github.com/users/Linchin/repos",

"events_url": "https://api.github.com/users/Linchin/events{/privacy}",

"received_events_url": "https://api.github.com/users/Linchin/received_events",

"type": "User",

"site_admin": false

},

{

"login": "connor-mccarthy",

"id": 55268212,

"node_id": "MDQ6VXNlcjU1MjY4MjEy",

"avatar_url": "https://avatars.githubusercontent.com/u/55268212?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/connor-mccarthy",

"html_url": "https://github.com/connor-mccarthy",

"followers_url": "https://api.github.com/users/connor-mccarthy/followers",

"following_url": "https://api.github.com/users/connor-mccarthy/following{/other_user}",

"gists_url": "https://api.github.com/users/connor-mccarthy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/connor-mccarthy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/connor-mccarthy/subscriptions",

"organizations_url": "https://api.github.com/users/connor-mccarthy/orgs",

"repos_url": "https://api.github.com/users/connor-mccarthy/repos",

"events_url": "https://api.github.com/users/connor-mccarthy/events{/privacy}",

"received_events_url": "https://api.github.com/users/connor-mccarthy/received_events",

"type": "User",

"site_admin": false

}

] | null | [] | "2022-09-25T19:56:04" | "2023-02-23T19:36:36" | "2023-02-23T19:36:36" | COLLABORATOR | null | ### Environment

* KFP version:

KFP 2.0.0-alpha.4

* KFP SDK version:

SDK 2.0.0-beta4

### Steps to reproduce

Create the following custom container component:

```

from kfp import dsl
from kfp.dsl import Artifact, ContainerSpec, Input, Output


@dsl.container_component
def create_dataset(experiment_name: str, experiment_namespace: str, experiment_timeout_minutes: int,
                   experiment_spec_json: Input[Artifact], parameter_set: Output[Artifact],
                   delete_finished_experiment: bool = False):
    # Launch the Katib experiment; the boolean default above is what gets compiled to IR.
    return ContainerSpec(
        image='docker.io/kubeflowkatib/kubeflow-pipelines-launcher',
        command=['python', 'src/launch_experiment.py'],
        args=[
            '--experiment-name', experiment_name,
            '--experiment-namespace', experiment_namespace,
            '--experiment-spec', experiment_spec_json.path,
            '--experiment-timeout-minutes', experiment_timeout_minutes,
            '--delete-after-done', delete_finished_experiment,
            '--output-file', parameter_set.path,
        ])

```

Note that `delete_finished_experiment` has a default value of `False`.

When compiling the pipeline, this component is converted to IR with the following format:

```

tasks:

create-dataset:

cachingOptions:

enableCache: true

componentRef:

name: comp-create-dataset

dependentTasks:

- create-katib-experiment-task

inputs:

artifacts:

experiment_spec_json:

taskOutputArtifact:

outputArtifactKey: experiment_spec_json

producerTask: create-katib-experiment-task

parameters:

delete_finished_experiment:

runtimeValue:

constant: 0

experiment_name:

componentInputParameter: name

experiment_namespace:

componentInputParameter: namespace

experiment_timeout_minutes:

runtimeValue:

constant: 60

taskInfo:

name: create-dataset

```

Note that `delete_finished_experiment` has a runtime value of `0`.

### Expected result

What should the type of a boolean default value be? I expected the value to be one of the following: bool `false`, bool `False`, or string `False`. I did not expect it to be the number 0.
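For context, here is a minimal sketch of how a `False` default can end up as `0`. It relies only on the fact that in Python `bool` is a subclass of `int`; whether the KFP compiler actually takes this path is an assumption that this issue does not confirm. Both function names are hypothetical.

```python
import json


def naive_constant(value):
    """Hypothetical serializer that tests for numbers before booleans."""
    # isinstance(False, int) is True, so a bool is caught here and coerced.
    if isinstance(value, (int, float)):
        return float(value) if isinstance(value, float) else int(value)
    return value


def bool_aware_constant(value):
    """Hypothetical serializer that checks bool first, preserving True/False."""
    if isinstance(value, bool):
        return value
    return naive_constant(value)


print(json.dumps(naive_constant(False)))       # numeric form
print(json.dumps(bool_aware_constant(False)))  # boolean form
```

Checking `bool` before `int` is the usual way to keep boolean defaults distinct from numeric ones.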

### Materials and Reference

<!-- Help us debug this issue by providing resources such as: sample code, background context, or links to references. -->

---

<!-- Don't delete message below to encourage users to support your issue! -->

Impacted by this bug? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8302/reactions",

"total_count": 3,

"+1": 3,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8302/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8300 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8300/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8300/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8300/events | https://github.com/kubeflow/pipelines/issues/8300 | 1,384,498,692 | I_kwDOB-71UM5ShcYE | 8,300 | [sdk] Unable to import ServiceAccountTokenVolumeCredentials in KFPv2 SDK | {

"login": "zijianjoy",

"id": 37026441,

"node_id": "MDQ6VXNlcjM3MDI2NDQx",

"avatar_url": "https://avatars.githubusercontent.com/u/37026441?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/zijianjoy",

"html_url": "https://github.com/zijianjoy",

"followers_url": "https://api.github.com/users/zijianjoy/followers",

"following_url": "https://api.github.com/users/zijianjoy/following{/other_user}",

"gists_url": "https://api.github.com/users/zijianjoy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/zijianjoy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/zijianjoy/subscriptions",

"organizations_url": "https://api.github.com/users/zijianjoy/orgs",

"repos_url": "https://api.github.com/users/zijianjoy/repos",

"events_url": "https://api.github.com/users/zijianjoy/events{/privacy}",

"received_events_url": "https://api.github.com/users/zijianjoy/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

}

] | open | false | null | [] | null | [

"Thank you, @zijianjoy!"

] | "2022-09-24T03:09:25" | "2022-09-26T15:08:13" | null | COLLABORATOR | null | ### Environment

* KFP version:

KFP 2.0.0-alpha4

* KFP SDK version:

2.0.0-beta.4

### Steps to reproduce

Follow https://www.kubeflow.org/docs/components/pipelines/v1/sdk/connect-api/#full-kubeflow-subfrom-inside-clustersub to set the default SA token path as empty. But `ServiceAccountTokenVolumeCredentials` cannot be imported.

From the `__init__.py` file, ServiceAccountTokenVolumeCredentials is not exported:

https://github.com/kubeflow/pipelines/blob/e14a784327c83e2d7f3e66dc09f4b3af3323cbc7/sdk/python/kfp/client/__init__.py#L17

If we don't import `ServiceAccountTokenVolumeCredentials`, the client should fall back to the default SA token path when it is created with `kfp.Client()`. However, this call fails with:

```

File /opt/conda/lib/python3.8/site-packages/kfp/client/client.py:372, in Client._get_config_with_default_credentials(self, config)

360 """Apply default credentials to the configuration object.

361

362 This method accepts a Configuration object and extends it with

363 some default credentials interface.

364 """

365 # XXX: The default credentials are audience-based service account tokens

366 # projected by the kubelet (ServiceAccountTokenVolumeCredentials). As we

367 # implement more and more credentials, we can have some heuristic and

(...)

370

371 # TODO: auth.ServiceAccountCredentials does not exist... dead code path?

--> 372 credentials = auth.ServiceAccountTokenVolumeCredentials()

373 config_copy = copy.deepcopy(config)

375 try:

AttributeError: module 'kfp.client.auth' has no attribute 'ServiceAccountTokenVolumeCredentials'

```

### Expected result

Users can create a KFP client using the default SA token path. Users can also set the SA token path to a custom value so that the KFP SDK reads the token from a different location. If the SA token path is set to None, the KFP SDK should fall back to the environment variable `KF_PIPELINES_SA_TOKEN_PATH`.
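The expected precedence can be sketched as follows. This is only an illustration of the behavior described above, not the SDK's implementation: `resolve_token_path` is a hypothetical helper, and the default path shown is an assumption that should be checked against the installed SDK.

```python
import os

# Environment variable named in this issue.
TOKEN_PATH_ENV = "KF_PIPELINES_SA_TOKEN_PATH"
# Assumed default location of the projected token; verify against your SDK version.
DEFAULT_TOKEN_PATH = "/var/run/secrets/kubeflow/pipelines/token"


def resolve_token_path(path=None, environ=None):
    """Pick the token path: explicit argument, then env var, then default."""
    environ = os.environ if environ is None else environ
    if path:
        return path
    return environ.get(TOKEN_PATH_ENV) or DEFAULT_TOKEN_PATH


# Example: no explicit path, so the environment variable wins.
print(resolve_token_path(None, {TOKEN_PATH_ENV: "/custom/token"}))
```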

cc @chensun @connor-mccarthy

---

<!-- Don't delete message below to encourage users to support your issue! -->

Impacted by this bug? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8300/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8300/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8294 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8294/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8294/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8294/events | https://github.com/kubeflow/pipelines/issues/8294 | 1,382,977,963 | I_kwDOB-71UM5SbpGr | 8,294 | [feature] Stop recurring run when failed | {

"login": "casassg",

"id": 6912589,

"node_id": "MDQ6VXNlcjY5MTI1ODk=",

"avatar_url": "https://avatars.githubusercontent.com/u/6912589?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/casassg",

"html_url": "https://github.com/casassg",

"followers_url": "https://api.github.com/users/casassg/followers",

"following_url": "https://api.github.com/users/casassg/following{/other_user}",

"gists_url": "https://api.github.com/users/casassg/gists{/gist_id}",

"starred_url": "https://api.github.com/users/casassg/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/casassg/subscriptions",

"organizations_url": "https://api.github.com/users/casassg/orgs",

"repos_url": "https://api.github.com/users/casassg/repos",

"events_url": "https://api.github.com/users/casassg/events{/privacy}",

"received_events_url": "https://api.github.com/users/casassg/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1118896905,

"node_id": "MDU6TGFiZWwxMTE4ODk2OTA1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/backend",

"name": "area/backend",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

}

] | closed | false | null | [] | null | [

"This feels like a custom logic that probably shouldn't be part of a scheduling system. Alternatives could be implementing the custom logic yourself either from client side or in component level like using exit handler.",

"@chensun I wonder wdym to implement this from client side? Scheduled runs after they are created as a resource are only checked by the controller itself so not sure how could client perform retries. Unless you mean reimplementing recurring runs from client manually\r\n\r\nAlso exithandler is an option as I mentioned above, however it requires the pipeline component to access the server and modify its state. In addition to the fact that this means you cant do any other exit handler (aka you end up having to add all code within 1 component if you need any other clean up).\r\n\r\nalso if we use Airflow as reference this are all implement in the scheduler there btw, so it doesnt seem logic that shouldnt be part of a scheduling system \r\n"

] | "2022-09-22T20:28:32" | "2022-09-23T00:51:46" | "2022-09-22T22:54:31" | CONTRIBUTOR | null | ### Feature Area

/area backend

### What feature would you like to see?

Support for stopping recurring runs (or retry N times) if a run fails.

### What is the use case or pain point?

At the moment, when one run of a recurring run fails, the scheduler keeps scheduling new runs regardless of the previous run's state. Ideally, we would like to define a custom behaviour where a scheduled run is retried N times, or where scheduling stops after N failed attempts.

This is mostly for cases where running for date X+1 is counterproductive when X is missing, for example in data processing where you want to make sure there are no gaps between segments.
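To make the requested behaviour concrete, here is a minimal sketch of the decision a scheduler could make before creating the next run. `FailurePolicy`, `should_schedule_next`, and the state strings are hypothetical names for illustration; none of them are part of the KFP API.

```python
from dataclasses import dataclass


@dataclass
class FailurePolicy:
    # Stop scheduling once this many runs in a row have failed (hypothetical setting).
    max_consecutive_failures: int


def should_schedule_next(run_states, policy):
    """Return False once the most recent runs have failed N times in a row."""
    consecutive = 0
    # Walk backwards from the latest run until a non-failed run is found.
    for state in reversed(run_states):
        if state != "FAILED":
            break
        consecutive += 1
    return consecutive < policy.max_consecutive_failures


history = ["SUCCEEDED", "FAILED", "FAILED"]
print(should_schedule_next(history, FailurePolicy(max_consecutive_failures=2)))
```

Counting only consecutive failures means a single successful run resets the counter, which matches the "no gaps between segments" use case.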

### Is there a workaround currently?

There is a partial workaround: use an ExitHandler that stops the recurring run by introspecting the current run and finding the recurring_run id associated with it. However, that is complicated to support. Ideally, the handling would be more transparent: done in the controller and configurable from the UI.

---

<!-- Don't delete message below to encourage users to support your feature request! -->

Love this idea? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8294/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8294/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8292 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8292/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8292/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8292/events | https://github.com/kubeflow/pipelines/issues/8292 | 1,382,464,256 | I_kwDOB-71UM5SZrsA | 8,292 | [feature] Add a progress bar in the DAG UI for long-running tasks | {

"login": "MainRo",

"id": 814804,

"node_id": "MDQ6VXNlcjgxNDgwNA==",

"avatar_url": "https://avatars.githubusercontent.com/u/814804?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/MainRo",

"html_url": "https://github.com/MainRo",

"followers_url": "https://api.github.com/users/MainRo/followers",

"following_url": "https://api.github.com/users/MainRo/following{/other_user}",

"gists_url": "https://api.github.com/users/MainRo/gists{/gist_id}",

"starred_url": "https://api.github.com/users/MainRo/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/MainRo/subscriptions",

"organizations_url": "https://api.github.com/users/MainRo/orgs",

"repos_url": "https://api.github.com/users/MainRo/repos",

"events_url": "https://api.github.com/users/MainRo/events{/privacy}",

"received_events_url": "https://api.github.com/users/MainRo/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 930619516,

"node_id": "MDU6TGFiZWw5MzA2MTk1MTY=",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/frontend",

"name": "area/frontend",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1118896905,

"node_id": "MDU6TGFiZWwxMTE4ODk2OTA1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/backend",

"name": "area/backend",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

}

] | closed | false | null | [] | null | [

"This is infeasible, as each task is a containerized app. KFP system doesn't have visibility into the progress of a task. \r\nOur suggestion would be use the log workaround as you described.",

"ok, thanks for answering."

] | "2022-09-22T13:33:29" | "2022-09-23T08:06:10" | "2022-09-22T22:47:37" | NONE | null | ### Feature Area

/area frontend

/area backend

/area sdk

### What feature would you like to see?

In the UI that displays the pipeline's DAG, it would be great to see a progress bar on each task. This would show at a glance how close each task is to completion. In the component code, we could report progress through a dedicated API in the SDK.

### What is the use case or pain point?

It is difficult to estimate how long a task will run. The only option I know, as of today, is to log the progress information.

### Is there a workaround currently?

I add some traces/logs to my task, but this means regularly checking the logs of each running task.
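The logging workaround looks roughly like this. It is a minimal sketch using only the standard library; `log_progress` is a hypothetical helper, not an SDK function, and it assumes the total number of items is known up front.

```python
import logging


def log_progress(items, total, logger, every_percent=10):
    """Yield items, logging each time another `every_percent` of work is done."""
    next_mark = every_percent
    for done, item in enumerate(items, start=1):
        yield item
        # Execution resumes here after the caller has finished with the item.
        percent = done * 100 // total
        if percent >= next_mark:
            logger.info("progress: %d%% (%d/%d)", percent, done, total)
            while next_mark <= percent:
                next_mark += every_percent


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    work = range(20)
    for _ in log_progress(work, len(work), logging.getLogger("task"), every_percent=25):
        pass  # the real per-item work would go here
```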

---

<!-- Don't delete message below to encourage users to support your feature request! -->

Love this idea? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8292/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8292/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8291 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8291/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8291/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8291/events | https://github.com/kubeflow/pipelines/issues/8291 | 1,382,180,542 | I_kwDOB-71UM5SYma- | 8,291 | [sdk] Package version conflict in kfp and google-cloud-pipeline-components | {

"login": "wardVD",

"id": 2136274,

"node_id": "MDQ6VXNlcjIxMzYyNzQ=",

"avatar_url": "https://avatars.githubusercontent.com/u/2136274?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/wardVD",

"html_url": "https://github.com/wardVD",

"followers_url": "https://api.github.com/users/wardVD/followers",

"following_url": "https://api.github.com/users/wardVD/following{/other_user}",

"gists_url": "https://api.github.com/users/wardVD/gists{/gist_id}",

"starred_url": "https://api.github.com/users/wardVD/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/wardVD/subscriptions",

"organizations_url": "https://api.github.com/users/wardVD/orgs",

"repos_url": "https://api.github.com/users/wardVD/repos",

"events_url": "https://api.github.com/users/wardVD/events{/privacy}",

"received_events_url": "https://api.github.com/users/wardVD/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

}

] | open | false | {

"login": "chongyouquan",

"id": 48691403,

"node_id": "MDQ6VXNlcjQ4NjkxNDAz",

"avatar_url": "https://avatars.githubusercontent.com/u/48691403?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chongyouquan",

"html_url": "https://github.com/chongyouquan",

"followers_url": "https://api.github.com/users/chongyouquan/followers",

"following_url": "https://api.github.com/users/chongyouquan/following{/other_user}",

"gists_url": "https://api.github.com/users/chongyouquan/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chongyouquan/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chongyouquan/subscriptions",

"organizations_url": "https://api.github.com/users/chongyouquan/orgs",

"repos_url": "https://api.github.com/users/chongyouquan/repos",

"events_url": "https://api.github.com/users/chongyouquan/events{/privacy}",

"received_events_url": "https://api.github.com/users/chongyouquan/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "chongyouquan",

"id": 48691403,

"node_id": "MDQ6VXNlcjQ4NjkxNDAz",

"avatar_url": "https://avatars.githubusercontent.com/u/48691403?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chongyouquan",

"html_url": "https://github.com/chongyouquan",

"followers_url": "https://api.github.com/users/chongyouquan/followers",

"following_url": "https://api.github.com/users/chongyouquan/following{/other_user}",

"gists_url": "https://api.github.com/users/chongyouquan/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chongyouquan/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chongyouquan/subscriptions",

"organizations_url": "https://api.github.com/users/chongyouquan/orgs",

"repos_url": "https://api.github.com/users/chongyouquan/repos",

"events_url": "https://api.github.com/users/chongyouquan/events{/privacy}",

"received_events_url": "https://api.github.com/users/chongyouquan/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"`google-cloud-pipeline-components` is currently not fully compatible with kfp 2.0*. We're working on the migration, and @chongyouquan may help provide a rough timeline.\r\n",

"Any update here?",

"Hi, the following `requirements.in` works:\r\n```\r\nkfp==2.0.0b10\r\nproto-plus==1.19.6\r\ngoogleapis-common-protos==1.56.4\r\ngoogle-api-core==2.8.1\r\ngoogle-cloud-notebooks==1.3.2\r\ngoogle-cloud-aiplatform==1.16.1\r\ngoogle-cloud-resource-manager==1.6.0\r\ngoogle-cloud-pipeline-components\r\ngrpcio-status==1.47.0\r\n```"

] | "2022-09-22T10:03:22" | "2023-01-11T08:51:00" | null | NONE | null | ### Environment

* KFP version:

2.0.0b1

### Steps to reproduce

Create a `requirements.in` file:

```

kfp==2.0.0b1

google-cloud-pipeline-components

```

Then run `pip-compile -v requirements.in`

### Result

```

Using indexes:

https://pypi.org/simple

ROUND 1

Current constraints:

google-cloud-pipeline-components (from -r requirements.in (line 7))

kfp==2.0.0b1 (from -r requirements.in (line 6))

Finding the best candidates:

found candidate google-cloud-pipeline-components==1.0.22 (constraint was <any>)

found candidate kfp==2.0.0b1 (constraint was ==2.0.0b1)

Finding secondary dependencies:

kfp==2.0.0b1 requires absl-py<2,>=0.9, click<9,>=7.1.2, cloudpickle<3,>=2.0.0, Deprecated<2,>=1.2.7, docstring-parser<1,>=0.7.3, fire<1,>=0.3.1, google-api-core!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.0,<3.0.0dev,>=1.31.5, google-auth<3,>=1.6.1, google-cloud-storage<3,>=2.2.1, jsonschema<4,>=3.0.1, kfp-pipeline-spec<0.2.0,>=0.1.14, kfp-server-api<3.0.0,>=2.0.0a0, kubernetes<19,>=8.0.0, protobuf<4,>=3.13.0, PyYAML<6,>=5.3, requests-toolbelt<1,>=0.8.0, strip-hints<1,>=0.1.8, tabulate<1,>=0.8.6, typer<1.0,>=0.3.2, uritemplate<4,>=3.0.1

google-cloud-pipeline-components==1.0.22 requires google-api-core!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.0,<3.0.0dev,>=1.31.5, google-cloud-aiplatform<2,>=1.11.0, google-cloud-notebooks>=0.4.0, google-cloud-storage<2,>=1.20.0, kfp<2.0.0,>=1.8.9

New dependencies found in this round:

adding ('absl-py', '<2,>=0.9', [])

adding ('click', '<9,>=7.1.2', [])

adding ('cloudpickle', '<3,>=2.0.0', [])

adding ('deprecated', '<2,>=1.2.7', [])

adding ('docstring-parser', '<1,>=0.7.3', [])

adding ('fire', '<1,>=0.3.1', [])

adding ('google-api-core', '!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.0,<3.0.0dev,>=1.31.5', [])

adding ('google-auth', '<3,>=1.6.1', [])

adding ('google-cloud-aiplatform', '<2,>=1.11.0', [])

adding ('google-cloud-notebooks', '>=0.4.0', [])

adding ('google-cloud-storage', '<2,<3,>=1.20.0,>=2.2.1', [])

adding ('jsonschema', '<4,>=3.0.1', [])

adding ('kfp', '<2.0.0,>=1.8.9', [])

adding ('kfp-pipeline-spec', '<0.2.0,>=0.1.14', [])

adding ('kfp-server-api', '<3.0.0,>=2.0.0a0', [])

adding ('kubernetes', '<19,>=8.0.0', [])

adding ('protobuf', '<4,>=3.13.0', [])

adding ('pyyaml', '<6,>=5.3', [])

adding ('requests-toolbelt', '<1,>=0.8.0', [])

adding ('strip-hints', '<1,>=0.1.8', [])

adding ('tabulate', '<1,>=0.8.6', [])

adding ('typer', '<1.0,>=0.3.2', [])

adding ('uritemplate', '<4,>=3.0.1', [])

Removed dependencies in this round:

------------------------------------------------------------

Result of round 1: not stable

ROUND 2

Current constraints:

absl-py<2,>=0.9 (from kfp==2.0.0b1->-r requirements.in (line 6))

click<9,>=7.1.2 (from kfp==2.0.0b1->-r requirements.in (line 6))

cloudpickle<3,>=2.0.0 (from kfp==2.0.0b1->-r requirements.in (line 6))

Deprecated<2,>=1.2.7 (from kfp==2.0.0b1->-r requirements.in (line 6))

docstring-parser<1,>=0.7.3 (from kfp==2.0.0b1->-r requirements.in (line 6))

fire<1,>=0.3.1 (from kfp==2.0.0b1->-r requirements.in (line 6))

google-api-core!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.0,<3.0.0dev,>=1.31.5 (from kfp==2.0.0b1->-r requirements.in (line 6))

google-auth<3,>=1.6.1 (from kfp==2.0.0b1->-r requirements.in (line 6))

google-cloud-aiplatform<2,>=1.11.0 (from google-cloud-pipeline-components==1.0.22->-r requirements.in (line 7))

google-cloud-notebooks>=0.4.0 (from google-cloud-pipeline-components==1.0.22->-r requirements.in (line 7))

google-cloud-pipeline-components (from -r requirements.in (line 7))

google-cloud-storage<2,<3,>=1.20.0,>=2.2.1 (from kfp==2.0.0b1->-r requirements.in (line 6))

jsonschema<4,>=3.0.1 (from kfp==2.0.0b1->-r requirements.in (line 6))

kfp<2.0.0,==2.0.0b1,>=1.8.9 (from -r requirements.in (line 6))

kfp-pipeline-spec<0.2.0,>=0.1.14 (from kfp==2.0.0b1->-r requirements.in (line 6))

kfp-server-api<3.0.0,>=2.0.0a0 (from kfp==2.0.0b1->-r requirements.in (line 6))

kubernetes<19,>=8.0.0 (from kfp==2.0.0b1->-r requirements.in (line 6))

protobuf<4,>=3.13.0 (from kfp==2.0.0b1->-r requirements.in (line 6))

PyYAML<6,>=5.3 (from kfp==2.0.0b1->-r requirements.in (line 6))

requests-toolbelt<1,>=0.8.0 (from kfp==2.0.0b1->-r requirements.in (line 6))

strip-hints<1,>=0.1.8 (from kfp==2.0.0b1->-r requirements.in (line 6))

tabulate<1,>=0.8.6 (from kfp==2.0.0b1->-r requirements.in (line 6))

typer<1.0,>=0.3.2 (from kfp==2.0.0b1->-r requirements.in (line 6))

uritemplate<4,>=3.0.1 (from kfp==2.0.0b1->-r requirements.in (line 6))

Finding the best candidates:

found candidate absl-py==1.2.0 (constraint was >=0.9,<2)

found candidate click==8.1.3 (constraint was >=7.1.2,<9)

found candidate cloudpickle==2.2.0 (constraint was >=2.0.0,<3)

found candidate deprecated==1.2.13 (constraint was >=1.2.7,<2)

found candidate docstring-parser==0.15 (constraint was >=0.7.3,<1)

found candidate fire==0.4.0 (constraint was >=0.3.1,<1)

found candidate google-api-core==2.10.1 (constraint was >=1.31.5,!=2.0.*,!=2.1.*,!=2.2.*,!=2.3.0,<3.0.0dev)

found candidate google-auth==1.35.0 (constraint was >=1.6.1,<3)

found candidate google-cloud-aiplatform==1.17.1 (constraint was >=1.11.0,<2)

found candidate google-cloud-notebooks==1.4.2 (constraint was >=0.4.0)

found candidate google-cloud-pipeline-components==1.0.22 (constraint was <any>)

Could not find a version that matches google-cloud-storage<2,<3,>=1.20.0,>=2.2.1 (from kfp==2.0.0b1->-r requirements.in (line 6))

Tried: 0.20.0, 0.20.0, 0.21.0, 0.21.0, 0.22.0, 0.22.0, 0.23.0, 0.23.0, 0.23.1, 0.23.1, 1.0.0, 1.0.0, 1.1.0, 1.1.0, 1.1.1, 1.1.1, 1.2.0, 1.2.0, 1.3.0, 1.3.0, 1.3.1, 1.3.1, 1.3.2, 1.3.2, 1.4.0, 1.4.0, 1.5.0, 1.5.0, 1.6.0, 1.6.0, 1.7.0, 1.7.0, 1.8.0, 1.8.0, 1.9.0, 1.9.0, 1.10.0, 1.10.0, 1.11.0, 1.11.0, 1.11.1, 1.11.1, 1.12.0, 1.12.0, 1.12.1, 1.12.1, 1.13.0, 1.13.0, 1.13.1, 1.13.1, 1.13.2, 1.13.2, 1.13.3, 1.13.3, 1.14.0, 1.14.0, 1.14.1, 1.14.1, 1.15.0, 1.15.0, 1.15.1, 1.15.1, 1.15.2, 1.15.2, 1.16.0, 1.16.0, 1.16.1, 1.16.1, 1.16.2, 1.16.2, 1.17.0, 1.17.0, 1.17.1, 1.17.1, 1.18.0, 1.18.0, 1.18.1, 1.18.1, 1.19.0, 1.19.0, 1.19.1, 1.19.1, 1.20.0, 1.20.0, 1.21.0, 1.21.0, 1.22.0, 1.22.0, 1.23.0, 1.23.0, 1.24.0, 1.24.0, 1.24.1, 1.24.1, 1.25.0, 1.25.0, 1.26.0, 1.26.0, 1.27.0, 1.27.0, 1.28.0, 1.28.0, 1.28.1, 1.28.1, 1.29.0, 1.29.0, 1.30.0, 1.30.0, 1.31.0, 1.31.0, 1.31.1, 1.31.1, 1.31.2, 1.31.2, 1.32.0, 1.32.0, 1.33.0, 1.33.0, 1.34.0, 1.34.0, 1.35.0, 1.35.0, 1.35.1, 1.35.1, 1.36.0, 1.36.0, 1.36.1, 1.36.1, 1.36.2, 1.36.2, 1.37.0, 1.37.0, 1.37.1, 1.37.1, 1.38.0, 1.38.0, 1.39.0, 1.39.0, 1.40.0, 1.40.0, 1.41.0, 1.41.0, 1.41.1, 1.41.1, 1.42.0, 1.42.0, 1.42.1, 1.42.1, 1.42.2, 1.42.2, 1.42.3, 1.42.3, 1.43.0, 1.43.0, 1.44.0, 1.44.0, 2.0.0, 2.0.0, 2.1.0, 2.1.0, 2.2.0, 2.2.0, 2.2.1, 2.2.1, 2.3.0, 2.3.0, 2.4.0, 2.4.0, 2.5.0, 2.5.0

There are incompatible versions in the resolved dependencies:

google-cloud-storage<3,>=2.2.1 (from kfp==2.0.0b1->-r requirements.in (line 6))

google-cloud-storage<2,>=1.20.0 (from google-cloud-pipeline-components==1.0.22->-r requirements.in (line 7))

```

### Expected result

An output with compatible package versions.

### Possible solution

Bump the google-cloud-storage constraint so that it allows `<3,>=2.2.1` in https://github.com/kubeflow/pipelines/blob/2.0.0b4/components/google-cloud/dependencies.py
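The conflict itself is easy to check by hand: the two `google-cloud-storage` ranges from the log above do not overlap. The sketch below uses a simplified numeric comparison (an assumption; it ignores pre-releases and other PEP 440 details) and hypothetical helper names.

```python
def parse(version):
    """Split a plain dotted version such as '2.2.1' into a tuple of ints."""
    return tuple(int(part) for part in version.split("."))


def satisfies(version, lower, upper):
    """True if lower <= version < upper."""
    return parse(lower) <= parse(version) < parse(upper)


KFP_RANGE = ("2.2.1", "3")    # google-cloud-storage<3,>=2.2.1 (from kfp==2.0.0b1)
GCPC_RANGE = ("1.20.0", "2")  # google-cloud-storage<2,>=1.20.0 (from google-cloud-pipeline-components==1.0.22)


def compatible(version):
    """True only if the version fits both packages' constraints."""
    return satisfies(version, *KFP_RANGE) and satisfies(version, *GCPC_RANGE)


# A few of the versions pip-compile tried; none can satisfy both ranges.
candidates = ["1.20.0", "1.44.0", "2.0.0", "2.2.1", "2.5.0"]
print([v for v in candidates if compatible(v)])
```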

<!-- Don't delete message below to encourage users to support your issue! -->

Impacted by this bug? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8291/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8291/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8283 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8283/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8283/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8283/events | https://github.com/kubeflow/pipelines/issues/8283 | 1,378,737,179 | I_kwDOB-71UM5SLdwb | 8,283 | [backend] metadata-writer cannot save metadata of S3 artifacts with argo v3.1+ | {

"login": "tktest1234",

"id": 113957092,

"node_id": "U_kgDOBsrY5A",

"avatar_url": "https://avatars.githubusercontent.com/u/113957092?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/tktest1234",

"html_url": "https://github.com/tktest1234",

"followers_url": "https://api.github.com/users/tktest1234/followers",

"following_url": "https://api.github.com/users/tktest1234/following{/other_user}",

"gists_url": "https://api.github.com/users/tktest1234/gists{/gist_id}",

"starred_url": "https://api.github.com/users/tktest1234/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/tktest1234/subscriptions",

"organizations_url": "https://api.github.com/users/tktest1234/orgs",

"repos_url": "https://api.github.com/users/tktest1234/repos",

"events_url": "https://api.github.com/users/tktest1234/events{/privacy}",

"received_events_url": "https://api.github.com/users/tktest1234/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 1118896905,

"node_id": "MDU6TGFiZWwxMTE4ODk2OTA1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/backend",

"name": "area/backend",

"color": "d2b48c",

"default": false,

"description": ""

}

] | open | false | {

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"@tktest1234 Are you running on AWS and want to use KFP with S3 as storage backend?\r\n\r\n",

"Yes, I installed Kubeflow 1.4 on AWS (EKS) and want to use KFP with S3 as the storage backend.\r\nIsn't that expected to work?",

"Hey @tktest1234 please follow the instructions on AWS distribution of Kubeflow to install KFP with S3 as artifact storage https://awslabs.github.io/kubeflow-manifests/docs/deployment/rds-s3/\r\n\r\nPlease create an issue on the awslabs repository if you face any issues",

"Thank you, but the awslabs installation doesn't solve the problem.\r\n\r\n#5829 is exactly the PR for this issue. I hope it makes progress."

] | "2022-09-20T02:39:36" | "2022-12-28T03:18:38" | null | NONE | null | ### Environment

* How did you deploy Kubeflow Pipelines (KFP)?

Kubeflow 1.4 manifests (git::https://github.com/kubeflow/manifests.git?ref=v1.4.0), with the configuration manually changed for S3

* KFP version:

1.7.0 (kubeflow 1.4)

* KFP SDK version:

1.7.1

### Steps to reproduce

1. Configure artifacts to be saved to S3 (change the configmap for workflow-controller)

1. Run pipeline: [Tutorial] Data passing in python components

metadata-writer output log

```

Kubernetes Pod event: ADDED file-passing-pipelines-txmqj-2254123803 14598940

Traceback (most recent call last):

File "/kfp/metadata_writer/metadata_writer.py", line 238, in <module>

artifact_uri = argo_artifact_to_uri(argo_artifact)

File "/kfp/metadata_writer/metadata_writer.py", line 106, in argo_artifact_to_uri

provider=get_object_store_provider(s3_artifact['endpoint']),

KeyError: 'endpoint'

```

result:

- Artifact in metadb is empty

### Expected result

metadata for the artifacts is logged into metadb

### Materials and Reference

I think this is caused by the Argo change to Key-Only Artifacts.

In https://github.com/kubeflow/pipelines/blob/1.7.0/backend/metadata_writer/src/metadata_writer.py#L318, metadata-writer reads the "workflows.argoproj.io/outputs" annotation, but Argo 3.1+ no longer passes `endpoint` and `bucket` there. As a result, https://github.com/kubeflow/pipelines/blob/1.7.0/backend/metadata_writer/src/metadata_writer.py#L105 cannot build the correct URL and raises the `KeyError` shown above.
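To illustrate the failure mode, here is a minimal sketch of a URI builder that tolerates key-only artifacts. It is not the actual metadata-writer code and not the fix proposed in #5829. The function name `s3_artifact_to_uri`, the two default constants, and the rule for choosing the URI scheme are all assumptions made for this example; in a real deployment the defaults would have to come from the workflow-controller configuration.

```python
from typing import Optional

# Hypothetical fallbacks for illustration only. A real deployment would read
# these from the artifact repository settings, not from constants.
DEFAULT_S3_ENDPOINT = "s3.amazonaws.com"
DEFAULT_S3_BUCKET = "my-kfp-artifacts"


def s3_artifact_to_uri(
    s3_artifact: dict,
    default_endpoint: str = DEFAULT_S3_ENDPOINT,
    default_bucket: str = DEFAULT_S3_BUCKET,
) -> Optional[str]:
    """Build an artifact URI even when only `key` is present.

    Returns None when there is no key, since no URI can be formed.
    """
    key = s3_artifact.get("key")
    if not key:
        return None

    # dict.get avoids the KeyError raised by s3_artifact['endpoint'].
    endpoint = s3_artifact.get("endpoint") or default_endpoint
    bucket = s3_artifact.get("bucket") or default_bucket

    # Assumed rule: AWS endpoints map to "s3", anything else to "minio".
    scheme = "s3" if "amazonaws.com" in endpoint else "minio"
    return f"{scheme}://{bucket}/{key}"


# A key-only artifact, as described above for Argo 3.1+.
print(s3_artifact_to_uri({"key": "artifacts/run-1/output.tgz"}))
```

The point of the sketch is only that a lookup with a fallback keeps the writer running when `endpoint` and `bucket` are absent; whether a fallback value is correct depends on the cluster's artifact repository settings.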

Related frontend issue: https://github.com/kubeflow/pipelines/issues/5930

---

<!-- Don't delete message below to encourage users to support your issue! -->

Impacted by this bug? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8283/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8283/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8269 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8269/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8269/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8269/events | https://github.com/kubeflow/pipelines/issues/8269 | 1,374,042,311 | I_kwDOB-71UM5R5jjH | 8,269 | Cannot get key for artifact location | {

"login": "SeibertronSS",

"id": 69496864,

"node_id": "MDQ6VXNlcjY5NDk2ODY0",

"avatar_url": "https://avatars.githubusercontent.com/u/69496864?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/SeibertronSS",

"html_url": "https://github.com/SeibertronSS",

"followers_url": "https://api.github.com/users/SeibertronSS/followers",

"following_url": "https://api.github.com/users/SeibertronSS/following{/other_user}",

"gists_url": "https://api.github.com/users/SeibertronSS/gists{/gist_id}",

"starred_url": "https://api.github.com/users/SeibertronSS/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/SeibertronSS/subscriptions",

"organizations_url": "https://api.github.com/users/SeibertronSS/orgs",

"repos_url": "https://api.github.com/users/SeibertronSS/repos",

"events_url": "https://api.github.com/users/SeibertronSS/events{/privacy}",

"received_events_url": "https://api.github.com/users/SeibertronSS/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

}

] | open | false | null | [] | null | [

"@SeibertronSS, do other pipelines have this error? Have you successfully run a pipeline on this deployment?\r\n\r\nIt seems like there may be an issue with the deployment related to the [minio artifact secret](https://github.com/kubeflow/pipelines/blob/74c7773ca40decfd0d4ed40dc93a6af591bbc190/manifests/kustomize/third-party/minio/base/mlpipeline-minio-artifact-secret.yaml)."

] | "2022-09-15T07:11:35" | "2022-09-15T22:52:43" | null | NONE | null | ### Environment

<!-- Please fill in those that seem relevant. -->

* How do you deploy Kubeflow Pipelines (KFP)?

I deployed Kubeflow Pipelines (standalone) using Kubeflow Manifests (https://github.com/kubeflow/manifests)

* KFP version:

1.7.0

* KFP SDK version:

1.8.2

When I submit a pipeline, I get the error `This step is in Error state with this message: Error (exit code 1): key unsupported: cannot get key for artifact location, because it is invalid`

The code is as follows

```

import kfp
import kfp.dsl as dsl
from kfp.components import create_component_from_func

client = kfp.Client()


def add(a: float, b: float) -> float:
    '''Calculates sum of two arguments'''
    return a + b


# Build a component factory from the Python function and save its spec.
add_op = create_component_from_func(
    add, output_component_file='add_component.yaml')


@dsl.pipeline(
    name='Addition pipeline',
    description='An example pipeline that performs addition calculations.'
)
def add_pipeline(
    a='1',
    b='7',
):
    # Passes a pipeline parameter and a constant value to the `add_op` factory
    # function.
    first_add_task = add_op(a, 4)
    # Passes an output reference from `first_add_task` and a pipeline parameter
    # to the `add_op` factory function. For operations with a single return
    # value, the output reference can be accessed as `task.output` or
    # `task.outputs['output_name']`.
    second_add_task = add_op(first_add_task.output, b)


# Specify argument values for your pipeline run.
arguments = {'a': '7', 'b': '8'}

# Create a pipeline run, using the client you initialized in a prior step.
client.create_run_from_pipeline_func(add_pipeline, arguments=arguments, run_name="add-8")

print("submit pipeline")

```

This problem seems to be related to Minio.

---

<!-- Don't delete message below to encourage users to support your issue! -->

Impacted by this bug? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8269/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8269/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8267 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8267/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8267/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8267/events | https://github.com/kubeflow/pipelines/issues/8267 | 1,373,732,443 | I_kwDOB-71UM5R4X5b | 8,267 | [sdk] ssl.SSLError: [SSL: WRONG_VERSION_NUMBER] wrong version number | {

"login": "pablofiumara",

"id": 4154361,

"node_id": "MDQ6VXNlcjQxNTQzNjE=",

"avatar_url": "https://avatars.githubusercontent.com/u/4154361?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/pablofiumara",

"html_url": "https://github.com/pablofiumara",

"followers_url": "https://api.github.com/users/pablofiumara/followers",

"following_url": "https://api.github.com/users/pablofiumara/following{/other_user}",

"gists_url": "https://api.github.com/users/pablofiumara/gists{/gist_id}",

"starred_url": "https://api.github.com/users/pablofiumara/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/pablofiumara/subscriptions",

"organizations_url": "https://api.github.com/users/pablofiumara/orgs",

"repos_url": "https://api.github.com/users/pablofiumara/repos",

"events_url": "https://api.github.com/users/pablofiumara/events{/privacy}",

"received_events_url": "https://api.github.com/users/pablofiumara/received_events",

"type": "User",