url | repository_url | labels_url | comments_url | events_url | html_url | id | node_id | number | title | user | labels | state | locked | assignee | assignees | milestone | comments | created_at | updated_at | closed_at | author_association | active_lock_reason | body | reactions | timeline_url | performed_via_github_app | state_reason | draft | pull_request | is_pull_request |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/kubeflow/pipelines/issues/7455 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7455/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7455/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7455/events | https://github.com/kubeflow/pipelines/issues/7455 | 1,178,514,300 | I_kwDOB-71UM5GPrN8 | 7,455 | [feature] Support IR YAML format in API | {

"login": "Linchin",

"id": 12806577,

"node_id": "MDQ6VXNlcjEyODA2NTc3",

"avatar_url": "https://avatars.githubusercontent.com/u/12806577?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Linchin",

"html_url": "https://github.com/Linchin",

"followers_url": "https://api.github.com/users/Linchin/followers",

"following_url": "https://api.github.com/users/Linchin/following{/other_user}",

"gists_url": "https://api.github.com/users/Linchin/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Linchin/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Linchin/subscriptions",

"organizations_url": "https://api.github.com/users/Linchin/orgs",

"repos_url": "https://api.github.com/users/Linchin/repos",

"events_url": "https://api.github.com/users/Linchin/events{/privacy}",

"received_events_url": "https://api.github.com/users/Linchin/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

}

] | closed | false | {

"login": "Linchin",

"id": 12806577,

"node_id": "MDQ6VXNlcjEyODA2NTc3",

"avatar_url": "https://avatars.githubusercontent.com/u/12806577?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Linchin",

"html_url": "https://github.com/Linchin",

"followers_url": "https://api.github.com/users/Linchin/followers",

"following_url": "https://api.github.com/users/Linchin/following{/other_user}",

"gists_url": "https://api.github.com/users/Linchin/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Linchin/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Linchin/subscriptions",

"organizations_url": "https://api.github.com/users/Linchin/orgs",

"repos_url": "https://api.github.com/users/Linchin/repos",

"events_url": "https://api.github.com/users/Linchin/events{/privacy}",

"received_events_url": "https://api.github.com/users/Linchin/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "Linchin",

"id": 12806577,

"node_id": "MDQ6VXNlcjEyODA2NTc3",

"avatar_url": "https://avatars.githubusercontent.com/u/12806577?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Linchin",

"html_url": "https://github.com/Linchin",

"followers_url": "https://api.github.com/users/Linchin/followers",

"following_url": "https://api.github.com/users/Linchin/following{/other_user}",

"gists_url": "https://api.github.com/users/Linchin/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Linchin/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Linchin/subscriptions",

"organizations_url": "https://api.github.com/users/Linchin/orgs",

"repos_url": "https://api.github.com/users/Linchin/repos",

"events_url": "https://api.github.com/users/Linchin/events{/privacy}",

"received_events_url": "https://api.github.com/users/Linchin/received_events",

"type": "User",

"site_admin": false

}

] | null | [] | "2022-03-23T18:37:11" | "2022-04-06T17:02:13" | "2022-04-06T17:02:13" | COLLABORATOR | null | ### Feature Area

<!-- Uncomment the labels below which are relevant to this feature: -->

<!-- /area frontend -->

/area backend

<!-- /area sdk -->

<!-- /area samples -->

<!-- /area components -->

### What feature would you like to see?

<!-- Provide a description of this feature and the user experience. -->

Use YAML instead of JSON for intermediate representation (IR).

### What is the use case or pain point?

<!-- It helps us understand the benefit of this feature for your use case. -->

YAML is a superset of JSON and is easier for a human to read. It also paves the way for further readability improvements in the future.
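To illustrate the readability difference, here is a small stdlib-only sketch that re-emits a JSON document as block-style YAML. It is not how the KFP SDK or backend performs the conversion; a real implementation would use a proper YAML library. The `to_yaml` helper and the IR-like fragment are hypothetical. The sketch leans on YAML 1.2 being a superset of JSON: scalars are rendered with `json.dumps`, because JSON's `null`, `true`, numbers, and double-quoted strings are all valid YAML scalars. It assumes JSON-compatible input with string keys.

```python
import json
import re

# Keys that can be written unquoted: identifier-like, and not a word that a
# YAML parser might read as a boolean or null.
_PLAIN_KEY = re.compile(r"[A-Za-z_][A-Za-z0-9_-]*\Z")
_RESERVED = {"true", "false", "null", "yes", "no", "on", "off", "y", "n"}


def _key(key: str) -> str:
    if _PLAIN_KEY.match(key) and key.lower() not in _RESERVED:
        return key
    return json.dumps(key)  # a JSON string is a valid double-quoted YAML scalar


def to_yaml(value, indent: int = 0) -> str:
    """Render JSON-compatible data as block-style YAML (sketch, not a full emitter)."""
    pad = "  " * indent
    if isinstance(value, dict) and value:
        lines = []
        for key, item in value.items():
            if isinstance(item, (dict, list)) and item:
                lines.append(f"{pad}{_key(key)}:")
                lines.append(to_yaml(item, indent + 1))
            else:
                lines.append(f"{pad}{_key(key)}: {json.dumps(item)}")
        return "\n".join(lines)
    if isinstance(value, list) and value:
        lines = []
        for item in value:
            if isinstance(item, (dict, list)) and item:
                # Render the nested block one level deeper, then replace its
                # first line's indentation with a "- " sequence marker.
                block = to_yaml(item, indent + 1)
                lines.append(pad + "- " + block[len(pad) + 2:])
            else:
                lines.append(f"{pad}- {json.dumps(item)}")
        return "\n".join(lines)
    # Scalars and empty containers: json.dumps gives null / true / 1.5 / "s" / {} / [].
    return pad + json.dumps(value)


# Hypothetical, abbreviated IR-like fragment (illustrative field values only).
ir_json = '{"pipelineInfo": {"name": "my-pipeline"}, "schemaVersion": "2.1.0", "root": {"dag": {"tasks": {}}}}'
print(to_yaml(json.loads(ir_json)))
```

For this input the sketch prints:

```
pipelineInfo:
  name: "my-pipeline"
schemaVersion: "2.1.0"
root:
  dag:
    tasks: {}
```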

### Is there a workaround currently?

<!-- Without this feature, how do you accomplish your task today? -->

NA

---

<!-- Don't delete message below to encourage users to support your feature request! -->

Love this idea? Give it a 👍. We prioritize fulfilling features with the most 👍.

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7455/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7455/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7454 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7454/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7454/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7454/events | https://github.com/kubeflow/pipelines/issues/7454 | 1,178,485,678 | I_kwDOB-71UM5GPkOu | 7,454 | [feature] Access to ParallelFor values and set_paralellism per Op | {

"login": "mikwieczorek",

"id": 40968185,

"node_id": "MDQ6VXNlcjQwOTY4MTg1",

"avatar_url": "https://avatars.githubusercontent.com/u/40968185?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mikwieczorek",

"html_url": "https://github.com/mikwieczorek",

"followers_url": "https://api.github.com/users/mikwieczorek/followers",

"following_url": "https://api.github.com/users/mikwieczorek/following{/other_user}",

"gists_url": "https://api.github.com/users/mikwieczorek/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mikwieczorek/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mikwieczorek/subscriptions",

"organizations_url": "https://api.github.com/users/mikwieczorek/orgs",

"repos_url": "https://api.github.com/users/mikwieczorek/repos",

"events_url": "https://api.github.com/users/mikwieczorek/events{/privacy}",

"received_events_url": "https://api.github.com/users/mikwieczorek/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1118896905,

"node_id": "MDU6TGFiZWwxMTE4ODk2OTA1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/backend",

"name": "area/backend",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

}

] | open | false | {

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

}

] | null | [] | "2022-03-23T18:07:28" | "2022-03-24T22:53:58" | null | NONE | null | ### Feature Area

<!-- Uncomment the labels below which are relevant to this feature: -->

<!-- /area frontend -->

/area backend

/area sdk

<!-- /area samples -->

<!-- /area components -->

### What feature would you like to see?

I stumbled upon a case where two features would be useful when dynamically building a pipeline that is controlled by an external config: access to the values of `ParallelFor` items, and setting parallelism per Op.

### What is the use case or pain point?

Let's say we have a number of datasets and a number of models we want to train. Not all models should be run on all datasets, so we use a config to specify the pipeline content (see the examples in the code below). Moreover, we would like the runs for each dataset to execute in parallel with each other, and since some tasks may be CPU/RAM heavy, we would like to limit the parallelism per Op type.

This is the case when we want to run a pipeline from GitHub CI/CD to test newly pushed code on a set of datasets and models to ensure its validity and performance.
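To make the requested semantics concrete, here is a local, stdlib-only simulation. It is not KFP code and does not describe how KFP or Argo would implement this. Each dataset's tasks run concurrently (similar to `ParallelFor`). A dataset's model ops start only after its print op (similar to `.after(root_op)`). A semaphore per op type caps how many instances of that op run at once. The `simulate` function and the `op_limits` mapping are hypothetical names used for illustration.

```python
import threading
import time
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def simulate(config, op_limits):
    """Run a print op, then the configured model ops, for every dataset in `config`.

    Datasets are processed concurrently; `op_limits` maps an op type to the
    maximum number of instances of that op allowed to run at the same time.
    Returns (peak concurrency per op type, number of tasks executed).
    """
    semaphores = {op: threading.BoundedSemaphore(n) for op, n in op_limits.items()}
    active, peak, done = Counter(), Counter(), Counter()
    lock = threading.Lock()

    def run_op(op_type):
        sem = semaphores.get(op_type)
        if sem is not None:
            sem.acquire()  # blocks while `op_type` is at its limit
        try:
            with lock:
                active[op_type] += 1
                peak[op_type] = max(peak[op_type], active[op_type])
            time.sleep(0.01)  # stand-in for the real CPU/RAM-heavy work
            with lock:
                active[op_type] -= 1
                done[op_type] += 1
        finally:
            if sem is not None:
                sem.release()

    def run_dataset(item):
        run_op("print")  # root op for this dataset
        models = item["models"]
        # Model ops start only after the root op, then run in parallel.
        with ThreadPoolExecutor(max_workers=max(len(models), 1)) as pool:
            list(pool.map(run_op, models))

    with ThreadPoolExecutor(max_workers=max(len(config), 1)) as pool:
        list(pool.map(run_dataset, config))
    return dict(peak), sum(done.values())


master_config = [
    {"name": "name1", "a": 1, "b": 1, "models": ["add", "subtract", "multiply", "divide"]},
    {"name": "name2", "a": 0, "b": 1, "models": ["multiply", "divide"]},
    {"name": "name3", "a": 100, "b": 2, "models": ["subtract", "multiply", "divide"]},
]
peak, total = simulate(master_config, op_limits={"print": 2, "divide": 1})
print(peak, total)
```

Thread timing makes the exact peaks vary between runs. However, `divide` never exceeds 1 concurrent instance and `print` never exceeds 2, while op types without a limit are unconstrained.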

### Is there a workaround currently?

A semi-workaround is shown in the example below: a function takes the config and returns the pipeline function, iterating over the config directly instead of using `ParallelFor`. However, this approach seems problematic when it comes to limiting parallelism per Op.

For the parallelism problem, I saw a related issue, https://github.com/kubeflow/pipelines/issues/4089. I also know that Argo allows setting parallelism per Task/Step, so having that in Kubeflow would be nice.

Using a separate pipeline per dataset is also a somewhat working option.

```python
import kfp
from kfp.components import func_to_container_op
import kfp.dsl as dsl
import json

### Functions

def print_fun(calculation: str) -> str:
    print("Calculation Type: ", calculation)
    return calculation

def add(a: float, b: float) -> float:
    return a + b

def multiply(a: float, b: float) -> float:
    return a * b

def divide(a: float, b: float) -> float:
    return a / b

def subtract(a: float, b: float) -> float:
    return a - b

### Container ops

print_op = func_to_container_op(print_fun)
add_op = func_to_container_op(add)
multiply_op = func_to_container_op(multiply)
divide_op = func_to_container_op(divide)
subtract_op = func_to_container_op(subtract)

### Dict to easily fetch op according to config

name2operator = {
    "add": add_op,
    "multiply": multiply_op,
    "divide": divide_op,
    "subtract": subtract_op
}

### Example config

master_config = [
    {
        "name": "name1",
        "a": 1,
        "b": 1,
        "models": ["add", "subtract", "multiply", "divide"]
    },
    {
        "name": "name2",
        "a": 0,
        "b": 1,
        "models": ["multiply", "divide"]
    },
    {
        "name": "name3",
        "a": 100,
        "b": 2,
        "models": ["subtract", "multiply", "divide"]
    },
]
```

Semi-working solution

```python
### Workaround to use for-loop and create model-task in line with config.
### Using ParallelFor won't allow to get the config as
def get_pipeline(config):
    @dsl.pipeline(
        name='Parallel pipeline mock test',
        description='Pipeline with for-loop and config-based operators.'
    )
    def multi_pipeline():
        for config_item in config:
            root_op = print_op(config_item['name'])
            # No way to limit parallelism for this op type here.
            for model_name in config_item['models']:
                new_op = name2operator[model_name](config_item['a'], config_item['b'])
                new_op.after(root_op)
    return multi_pipeline

client = kfp.Client()
client.create_run_from_pipeline_func(get_pipeline(master_config), arguments={})
```

Example of my thought process in solving the problem – changing `ParallelFor`

```python
@dsl.pipeline(
    name='Parallel pipeline mock test',
    description='Pipeline with for-loop and config-based operators.'
)
def multi_pipeline(config):
    root_ops = []
    # I only want a parallelism limit on print_op
    for idx, item in enumerate(dsl.ParallelFor(config, parallelism=2)):
        root_op = print_op(item['name'])
        root_ops.append(root_op)

    # This is currently impossible, as config is a PipelineParam and is not iterable, but it would be nice if it were
    for root_idx, item in enumerate(config):
        # item['models'] is also not iterable
        for model_name in item['models']:
            new_op = name2operator[model_name](item['a'], item['b'])
            new_op.after(root_ops[root_idx])

client = kfp.Client()
client.create_run_from_pipeline_func(multi_pipeline, arguments={
    "config": json.dumps(master_config)
})
```

---

<!-- Don't delete message below to encourage users to support your feature request! -->

Love this idea? Give it a 👍. We prioritize fulfilling features with the most 👍.

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7454/reactions",

"total_count": 10,

"+1": 10,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7454/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7450 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7450/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7450/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7450/events | https://github.com/kubeflow/pipelines/issues/7450 | 1,177,365,961 | I_kwDOB-71UM5GLS3J | 7,450 | [sdk] unarchive_run sets run.storage_state to None instead of STORAGESTATE_ACTIVE | {

"login": "dandawg",

"id": 12484302,

"node_id": "MDQ6VXNlcjEyNDg0MzAy",

"avatar_url": "https://avatars.githubusercontent.com/u/12484302?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/dandawg",

"html_url": "https://github.com/dandawg",

"followers_url": "https://api.github.com/users/dandawg/followers",

"following_url": "https://api.github.com/users/dandawg/following{/other_user}",

"gists_url": "https://api.github.com/users/dandawg/gists{/gist_id}",

"starred_url": "https://api.github.com/users/dandawg/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/dandawg/subscriptions",

"organizations_url": "https://api.github.com/users/dandawg/orgs",

"repos_url": "https://api.github.com/users/dandawg/repos",

"events_url": "https://api.github.com/users/dandawg/events{/privacy}",

"received_events_url": "https://api.github.com/users/dandawg/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

}

] | open | false | null | [] | null | [

"Could you please archive and then unarchive the run in the UI, then check the storage_state from the SDK to see if it behaves the same? Thank you."

] | "2022-03-22T22:20:48" | "2022-03-24T22:48:00" | null | NONE | null | ### Environment

* KFP version:

1.4

* KFP SDK version:

1.8.11

* All dependencies version:

NA

### Steps to reproduce

1. Do a pipeline run:

```
run = client.create_run_from_pipeline_package(
    'pipeline.yaml',
    run_name='my_run',
    experiment_name='my_exp',
    arguments=args
)
result = run.wait_for_run_to_complete()
```

2. Archive the run

```

client.runs.archive_run(result.run.id)

```

3. Check that the storage state has transitioned to STORAGESTATE_ARCHIVED

```

run_after_archive = client.runs.get_run(result.run.id)

print(run_after_archive.run.storage_state)

```

4. Now unarchive the run, and observe that the storage state has transitioned to None

```

client.runs.unarchive_run(result.run.id)

run_after_unarchive = client.runs.get_run(result.run.id)

print(run_after_unarchive.run.storage_state)

```

### Expected result

When a run is unarchived, its storage state should transition back to STORAGESTATE_ACTIVE. This would be consistent with what I get when I archive and then unarchive an experiment using similar commands (client.experiment.archive_experiment and client.experiment.unarchive_experiment). In the experiment case, the storage state transitions back to STORAGESTATE_ACTIVE after unarchiving.
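Until runs report their storage state consistently, client code can avoid depending on the exact non-archived value by checking only for the archived one. The sketch below treats a missing (`None`) `storage_state`, as seen after `unarchive_run` in step 4, as "not archived". That interpretation is an assumption, not documented KFP behavior. `is_archived` is a hypothetical helper, and `SimpleNamespace` objects stand in for what `client.runs.get_run(...)` returns.

```python
from types import SimpleNamespace

ARCHIVED = "STORAGESTATE_ARCHIVED"  # value observed in step 3 above


def is_archived(run_detail) -> bool:
    """True only if the run explicitly reports the archived storage state.

    A missing or None storage_state is treated as "not archived"; this is an
    assumption about the API's behavior, not a documented guarantee.
    """
    return getattr(run_detail.run, "storage_state", None) == ARCHIVED


# Stand-ins for the objects returned by client.runs.get_run(...) in steps 3 and 4.
after_archive = SimpleNamespace(run=SimpleNamespace(storage_state=ARCHIVED))
after_unarchive = SimpleNamespace(run=SimpleNamespace(storage_state=None))
print(is_archived(after_archive), is_archived(after_unarchive))
```

This prints `True False`.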

### Materials and Reference

Impacted by this bug? Give it a 👍. We prioritise the issues with the most 👍.

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7450/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7450/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7449 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7449/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7449/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7449/events | https://github.com/kubeflow/pipelines/issues/7449 | 1,177,338,195 | I_kwDOB-71UM5GLMFT | 7,449 | kubeflow pipeline run in "kubeflow" namespace instead of user namespace | {

"login": "pwzhong",

"id": 15694079,

"node_id": "MDQ6VXNlcjE1Njk0MDc5",

"avatar_url": "https://avatars.githubusercontent.com/u/15694079?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/pwzhong",

"html_url": "https://github.com/pwzhong",

"followers_url": "https://api.github.com/users/pwzhong/followers",

"following_url": "https://api.github.com/users/pwzhong/following{/other_user}",

"gists_url": "https://api.github.com/users/pwzhong/gists{/gist_id}",

"starred_url": "https://api.github.com/users/pwzhong/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/pwzhong/subscriptions",

"organizations_url": "https://api.github.com/users/pwzhong/orgs",

"repos_url": "https://api.github.com/users/pwzhong/repos",

"events_url": "https://api.github.com/users/pwzhong/events{/privacy}",

"received_events_url": "https://api.github.com/users/pwzhong/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1682717392,

"node_id": "MDU6TGFiZWwxNjgyNzE3Mzky",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/question",

"name": "kind/question",

"color": "2515fc",

"default": false,

"description": ""

}

] | open | false | {

"login": "zijianjoy",

"id": 37026441,

"node_id": "MDQ6VXNlcjM3MDI2NDQx",

"avatar_url": "https://avatars.githubusercontent.com/u/37026441?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/zijianjoy",

"html_url": "https://github.com/zijianjoy",

"followers_url": "https://api.github.com/users/zijianjoy/followers",

"following_url": "https://api.github.com/users/zijianjoy/following{/other_user}",

"gists_url": "https://api.github.com/users/zijianjoy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/zijianjoy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/zijianjoy/subscriptions",

"organizations_url": "https://api.github.com/users/zijianjoy/orgs",

"repos_url": "https://api.github.com/users/zijianjoy/repos",

"events_url": "https://api.github.com/users/zijianjoy/events{/privacy}",

"received_events_url": "https://api.github.com/users/zijianjoy/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "zijianjoy",

"id": 37026441,

"node_id": "MDQ6VXNlcjM3MDI2NDQx",

"avatar_url": "https://avatars.githubusercontent.com/u/37026441?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/zijianjoy",

"html_url": "https://github.com/zijianjoy",

"followers_url": "https://api.github.com/users/zijianjoy/followers",

"following_url": "https://api.github.com/users/zijianjoy/following{/other_user}",

"gists_url": "https://api.github.com/users/zijianjoy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/zijianjoy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/zijianjoy/subscriptions",

"organizations_url": "https://api.github.com/users/zijianjoy/orgs",

"repos_url": "https://api.github.com/users/zijianjoy/repos",

"events_url": "https://api.github.com/users/zijianjoy/events{/privacy}",

"received_events_url": "https://api.github.com/users/zijianjoy/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"Hello @pwzhong , how do you deploy Kubeflow? You are probably installing KFP single-user mode in a full Kubeflow deployment."

] | "2022-03-22T21:41:17" | "2022-03-24T22:54:26" | null | NONE | null | /kind question

**Question:**

My team has installed Kubeflow 1.4 on AKS 1.21 with multi-user mode enabled. When I created a pipeline run in a user namespace, it ran in the “kubeflow” namespace instead of the user namespace.

No namespace is specified in the pipeline YAML, but when a pipeline run is created, "namespace: kubeflow" is automatically added to the pod metadata and the pod runs there, as shown in the screenshot below.

I tried to specify a namespace in the pipeline YAML file, but that gives me an error, probably because Kubeflow does not allow overriding the namespace.

If I create a notebook, though, it is placed in the user namespace as expected.

Any idea how to fix this? | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7449/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7449/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7445 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7445/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7445/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7445/events | https://github.com/kubeflow/pipelines/issues/7445 | 1,175,154,847 | I_kwDOB-71UM5GC3Cf | 7,445 | [feature] Option to disable downloading of artifacts through UI | {

"login": "bodak",

"id": 6807878,

"node_id": "MDQ6VXNlcjY4MDc4Nzg=",

"avatar_url": "https://avatars.githubusercontent.com/u/6807878?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/bodak",

"html_url": "https://github.com/bodak",

"followers_url": "https://api.github.com/users/bodak/followers",

"following_url": "https://api.github.com/users/bodak/following{/other_user}",

"gists_url": "https://api.github.com/users/bodak/gists{/gist_id}",

"starred_url": "https://api.github.com/users/bodak/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/bodak/subscriptions",

"organizations_url": "https://api.github.com/users/bodak/orgs",

"repos_url": "https://api.github.com/users/bodak/repos",

"events_url": "https://api.github.com/users/bodak/events{/privacy}",

"received_events_url": "https://api.github.com/users/bodak/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 930619516,

"node_id": "MDU6TGFiZWw5MzA2MTk1MTY=",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/frontend",

"name": "area/frontend",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1118896905,

"node_id": "MDU6TGFiZWwxMTE4ODk2OTA1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/backend",

"name": "area/backend",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

}

] | closed | false | {

"login": "zijianjoy",

"id": 37026441,

"node_id": "MDQ6VXNlcjM3MDI2NDQx",

"avatar_url": "https://avatars.githubusercontent.com/u/37026441?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/zijianjoy",

"html_url": "https://github.com/zijianjoy",

"followers_url": "https://api.github.com/users/zijianjoy/followers",

"following_url": "https://api.github.com/users/zijianjoy/following{/other_user}",

"gists_url": "https://api.github.com/users/zijianjoy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/zijianjoy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/zijianjoy/subscriptions",

"organizations_url": "https://api.github.com/users/zijianjoy/orgs",

"repos_url": "https://api.github.com/users/zijianjoy/repos",

"events_url": "https://api.github.com/users/zijianjoy/events{/privacy}",

"received_events_url": "https://api.github.com/users/zijianjoy/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "zijianjoy",

"id": 37026441,

"node_id": "MDQ6VXNlcjM3MDI2NDQx",

"avatar_url": "https://avatars.githubusercontent.com/u/37026441?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/zijianjoy",

"html_url": "https://github.com/zijianjoy",

"followers_url": "https://api.github.com/users/zijianjoy/followers",

"following_url": "https://api.github.com/users/zijianjoy/following{/other_user}",

"gists_url": "https://api.github.com/users/zijianjoy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/zijianjoy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/zijianjoy/subscriptions",

"organizations_url": "https://api.github.com/users/zijianjoy/orgs",

"repos_url": "https://api.github.com/users/zijianjoy/repos",

"events_url": "https://api.github.com/users/zijianjoy/events{/privacy}",

"received_events_url": "https://api.github.com/users/zijianjoy/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"Hello @bodak, are you using a full-fledged Kubeflow deployment? That is the only way to use multi-user mode, where artifact access control is possible.\r\n\r\nAlso, data access restrictions should be enforced on the storage side, by allowing only selected personnel to download artifacts. If such access control is in place, the UI doesn't need to do anything to disable downloads, because the UI only opens a download URL that comes from the storage solution (depending on which storage you are using). So if you are managing sensitive data, the restriction should happen on the storage IAM side. \r\n\r\nIf you are using MinIO, I am not sure whether MinIO has such access control; if not, that is a limitation on the MinIO side.",

"Thanks for the explanation! This was for Kubeflow Pipelines only.\r\nYour suggestion makes sense, and restrictions on the storage side were already in place.\r\nI'll close this issue."

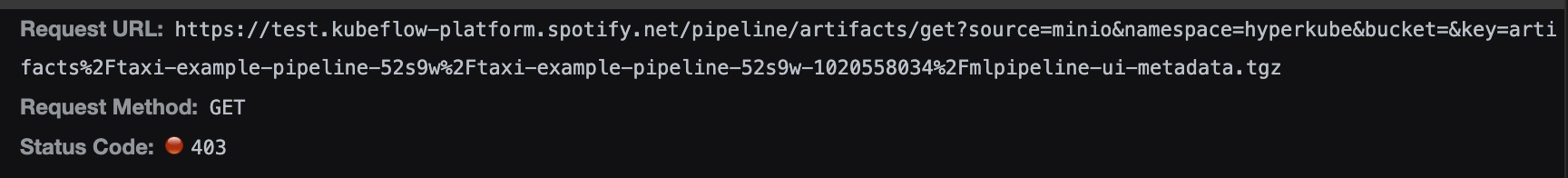

] | "2022-03-21T10:08:25" | "2022-03-31T09:05:31" | "2022-03-31T09:05:31" | NONE | null | ### Feature Area

/area frontend

/area backend

### What feature would you like to see?

- Option to disable downloading artifacts from the front-end UI. This would reduce the risk of accidentally clicking the download link.

- Option to restrict access to the back-end artifact storage. This would prevent "guessing" the download link (I am unsure whether that is even possible).

### What is the use case or pain point?

We work with sensitive data. It is very easy to download artifacts from the front-end UI and save them locally.

### Is there a workaround currently?

1. I have not found any option in https://github.com/kubeflow/pipelines/blob/master/frontend/server/app.ts#L119-L150, but I might have been looking in the wrong places.

2. We are looking into blocking access between the minio server and the UI server through kubernetes. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7445/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7445/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7444 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7444/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7444/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7444/events | https://github.com/kubeflow/pipelines/issues/7444 | 1,174,334,504 | I_kwDOB-71UM5F_uwo | 7,444 | Convert PipelineSpec format from json to yaml | {

"login": "zijianjoy",

"id": 37026441,

"node_id": "MDQ6VXNlcjM3MDI2NDQx",

"avatar_url": "https://avatars.githubusercontent.com/u/37026441?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/zijianjoy",

"html_url": "https://github.com/zijianjoy",

"followers_url": "https://api.github.com/users/zijianjoy/followers",

"following_url": "https://api.github.com/users/zijianjoy/following{/other_user}",

"gists_url": "https://api.github.com/users/zijianjoy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/zijianjoy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/zijianjoy/subscriptions",

"organizations_url": "https://api.github.com/users/zijianjoy/orgs",

"repos_url": "https://api.github.com/users/zijianjoy/repos",

"events_url": "https://api.github.com/users/zijianjoy/events{/privacy}",

"received_events_url": "https://api.github.com/users/zijianjoy/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | {

"login": "jlyaoyuli",

"id": 56132941,

"node_id": "MDQ6VXNlcjU2MTMyOTQx",

"avatar_url": "https://avatars.githubusercontent.com/u/56132941?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jlyaoyuli",

"html_url": "https://github.com/jlyaoyuli",

"followers_url": "https://api.github.com/users/jlyaoyuli/followers",

"following_url": "https://api.github.com/users/jlyaoyuli/following{/other_user}",

"gists_url": "https://api.github.com/users/jlyaoyuli/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jlyaoyuli/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jlyaoyuli/subscriptions",

"organizations_url": "https://api.github.com/users/jlyaoyuli/orgs",

"repos_url": "https://api.github.com/users/jlyaoyuli/repos",

"events_url": "https://api.github.com/users/jlyaoyuli/events{/privacy}",

"received_events_url": "https://api.github.com/users/jlyaoyuli/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "jlyaoyuli",

"id": 56132941,

"node_id": "MDQ6VXNlcjU2MTMyOTQx",

"avatar_url": "https://avatars.githubusercontent.com/u/56132941?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jlyaoyuli",

"html_url": "https://github.com/jlyaoyuli",

"followers_url": "https://api.github.com/users/jlyaoyuli/followers",

"following_url": "https://api.github.com/users/jlyaoyuli/following{/other_user}",

"gists_url": "https://api.github.com/users/jlyaoyuli/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jlyaoyuli/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jlyaoyuli/subscriptions",

"organizations_url": "https://api.github.com/users/jlyaoyuli/orgs",

"repos_url": "https://api.github.com/users/jlyaoyuli/repos",

"events_url": "https://api.github.com/users/jlyaoyuli/events{/privacy}",

"received_events_url": "https://api.github.com/users/jlyaoyuli/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"Related item: https://github.com/kubeflow/pipelines/pull/6524",

"cc @jlyaoyuli ",

"This PR can help validating the change: https://github.com/kubeflow/pipelines/pull/7570",

"Unit test: Update tests accordingly for new yaml format: https://github.com/kubeflow/pipelines/tree/master/frontend/src/data/test"

] | "2022-03-19T19:09:08" | "2022-05-11T19:50:21" | "2022-05-11T19:50:21" | COLLABORATOR | null | ## Problem

There are two types of Pipeline Template definition: ArgoWorkflow (for KFPv1) and PipelineSpec (for KFPv2). Currently we are using `isPipelineSpec()` to determine whether it is v1 or v2.

Reference:

https://github.com/kubeflow/pipelines/blob/939f81088b39ba703cc821d18e7a99523662bf5f/frontend/src/lib/v2/WorkflowUtils.ts#L48-L67

We are assuming that if the PipelineTemplate is not ArgoWorkflow, then it is PipelineSpec: see `isArgoWorkflowTemplate()`:

https://github.com/kubeflow/pipelines/blob/939f81088b39ba703cc821d18e7a99523662bf5f/frontend/src/lib/v2/WorkflowUtils.ts#L22-L31

We are assuming that PipelineSpec is in JSON format.

https://github.com/kubeflow/pipelines/blob/939f81088b39ba703cc821d18e7a99523662bf5f/frontend/src/lib/v2/WorkflowUtils.ts#L34-L45

The direction now is to use YAML instead of JSON for PipelineSpec, so our logic needs to switch to the YAML format and drop JSON. See the SDK reference: https://github.com/kubeflow/pipelines/pull/7431.

## Possible solution

Possible tool: https://github.com/nodeca/js-yaml
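The detection logic described above can be made independent of the serialization format by classifying the template only after it has been parsed. The sketch below is in Python for illustration. The frontend itself is TypeScript, where a parser such as js-yaml would produce the same kind of plain object. It assumes, rather than confirms, two things:

- An Argo Workflow is recognized by an `argoproj.io/...` `apiVersion` together with `kind: Workflow`.
- A v2 PipelineSpec carries top-level `pipelineInfo` and `root` fields.

The function names are hypothetical and do not mirror `WorkflowUtils.ts`.

```python
from typing import Any, Mapping


def is_argo_workflow_template(template: Mapping[str, Any]) -> bool:
    """Assumed heuristic: Argo Workflows use an argoproj.io apiVersion and kind Workflow."""
    api_version = str(template.get("apiVersion", ""))
    return api_version.startswith("argoproj.io/") and template.get("kind") == "Workflow"


def is_pipeline_spec(template: Mapping[str, Any]) -> bool:
    """Assumed heuristic: a v2 PipelineSpec has top-level pipelineInfo and root fields."""
    return "pipelineInfo" in template and "root" in template


def template_version(template: Mapping[str, Any]) -> str:
    """Classify an already-parsed template (from JSON or YAML) as v1 or v2."""
    if is_argo_workflow_template(template):
        return "v1"
    if is_pipeline_spec(template):
        return "v2"
    # Reject unknown shapes explicitly instead of assuming "not Argo" means PipelineSpec.
    raise ValueError("Unrecognized pipeline template")
```

Unlike the current fallback, where anything that is not an Argo Workflow is treated as a PipelineSpec, this sketch checks both shapes explicitly. Because YAML 1.2 is essentially a superset of JSON, a YAML parser should also accept existing JSON templates, which may ease the migration.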

## Note

We also need to update test data https://github.com/kubeflow/pipelines/tree/master/frontend/mock-backend/data/v2/pipeline | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7444/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7444/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7441 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7441/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7441/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7441/events | https://github.com/kubeflow/pipelines/issues/7441 | 1,173,988,703 | I_kwDOB-71UM5F-aVf | 7,441 | [feature] Clone recurring run capability in sdk | {

"login": "droctothorpe",

"id": 24783969,

"node_id": "MDQ6VXNlcjI0NzgzOTY5",

"avatar_url": "https://avatars.githubusercontent.com/u/24783969?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/droctothorpe",

"html_url": "https://github.com/droctothorpe",

"followers_url": "https://api.github.com/users/droctothorpe/followers",

"following_url": "https://api.github.com/users/droctothorpe/following{/other_user}",

"gists_url": "https://api.github.com/users/droctothorpe/gists{/gist_id}",

"starred_url": "https://api.github.com/users/droctothorpe/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/droctothorpe/subscriptions",

"organizations_url": "https://api.github.com/users/droctothorpe/orgs",

"repos_url": "https://api.github.com/users/droctothorpe/repos",

"events_url": "https://api.github.com/users/droctothorpe/events{/privacy}",

"received_events_url": "https://api.github.com/users/droctothorpe/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

}

] | open | false | {

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

}

] | null | [] | "2022-03-18T20:18:54" | "2022-03-24T23:04:04" | null | CONTRIBUTOR | null | ### Feature Area

<!-- Uncomment the labels below which are relevant to this feature: -->

<!-- /area frontend -->

<!-- /area backend -->

/area sdk

<!-- /area samples -->

<!-- /area components -->

### What feature would you like to see?

There's no way to patch an existing recurring run via the SDK (or GUI). The GUI gets around this with the clone recurring run button, but there's no equivalent in the SDK.

A very common scenario, for example, is updating the cron string of an existing recurring run. This is more or less impossible via the SDK.

One way to address this would be by adding a `clone_recurring_run` method to the SDK that emulates what the frontend does.
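A client-side clone could copy every field of an existing recurring run and apply only the requested overrides, which is roughly what the UI's clone button does. The sketch below uses a hypothetical, simplified model: `RecurringRunConfig` and `clone_recurring_run` are illustrative names, not part of the KFP SDK. A real implementation would read the source job from the backend and submit the clone through the SDK's existing create call.

```python
from dataclasses import dataclass, field, replace
from typing import Dict, Optional


@dataclass(frozen=True)
class RecurringRunConfig:
    """Hypothetical, simplified view of a recurring run (job); not the KFP API model."""
    name: str
    experiment_id: str
    pipeline_id: str
    cron_expression: str
    params: Dict[str, str] = field(default_factory=dict)
    enabled: bool = True


def clone_recurring_run(source: RecurringRunConfig,
                        new_name: Optional[str] = None,
                        **overrides) -> RecurringRunConfig:
    """Copy every field of `source`, then apply overrides (e.g. a new cron string)."""
    name = new_name if new_name is not None else f"Clone of {source.name}"
    # Copy the params dict so the clone does not share mutable state with the source.
    params = dict(overrides.pop("params", source.params))
    return replace(source, name=name, params=params, **overrides)


nightly = RecurringRunConfig("nightly-train", "exp-1", "pipe-1", "0 0 2 * * *", {"lr": "0.01"})
updated = clone_recurring_run(nightly, cron_expression="0 0 4 * * *")
```

Cloning only creates the new recurring run. The original would still need to be disabled or deleted separately, so updating a cron string this way remains a two-step operation.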

<!-- Provide a description of this feature and the user experience. -->

### What is the use case or pain point?

Making minor modifications to an existing recurring run via the SDK is not possible.

<!-- It helps us understand the benefit of this feature for your use case. -->

### Is there a workaround currently?

Only through the GUI via the clone button.

<!-- Without this feature, how do you accomplish your task today? -->

---

<!-- Don't delete message below to encourage users to support your feature request! -->

Love this idea? Give it a 👍. We prioritize fulfilling features with the most 👍.

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7441/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7441/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7437 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7437/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7437/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7437/events | https://github.com/kubeflow/pipelines/issues/7437 | 1,173,349,821 | I_kwDOB-71UM5F7-W9 | 7,437 | Why is certificates.k8s.io/v1 used in Cache Deployer instead of OpenSSL? | {

"login": "konsloiz",

"id": 22999070,

"node_id": "MDQ6VXNlcjIyOTk5MDcw",

"avatar_url": "https://avatars.githubusercontent.com/u/22999070?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/konsloiz",

"html_url": "https://github.com/konsloiz",

"followers_url": "https://api.github.com/users/konsloiz/followers",

"following_url": "https://api.github.com/users/konsloiz/following{/other_user}",

"gists_url": "https://api.github.com/users/konsloiz/gists{/gist_id}",

"starred_url": "https://api.github.com/users/konsloiz/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/konsloiz/subscriptions",

"organizations_url": "https://api.github.com/users/konsloiz/orgs",

"repos_url": "https://api.github.com/users/konsloiz/repos",

"events_url": "https://api.github.com/users/konsloiz/events{/privacy}",

"received_events_url": "https://api.github.com/users/konsloiz/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [

"Could you please discuss the problem in this issue?\r\nhttps://github.com/kubeflow/manifests/issues/2165"

] | "2022-03-18T08:56:01" | "2022-03-24T23:03:08" | null | NONE | null | Caching is one of the most crucial features of KFP. Each time a pipeline step is the same as an already executed, the results are loaded from the cache server. Caching is accomplished in KFP via two interdependent modules: the cache deployer and the cache server.

While trying to set up these modules in an enterprise cluster ([Mercedes-Benz AG](https://github.com/mercedes-benz/DnA)), we found that the installation couldn’t be completed. The reason is that the cache deployer is built to generate a signed certificate for the cache server through the Kubernetes CertificateSigningRequest API.

```shell
...
# create server cert/key CSR and send to k8s API
cat <<EOF | kubectl create -f -
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: ${csrName}
spec:
  groups:
  - system:authenticated
  request: $(cat ${tmpdir}/server.csr | base64 | tr -d '\n')
  signerName: kubernetes.io/kubelet-serving
  usages:
  - digital signature
  - key encipherment
  - server auth
EOF
...
```

The use of API server certificates is restricted in our enterprise environment because they allow privilege escalation. The security risk is critical: through this API, users can request certificates that let them impersonate both the Kubernetes control plane and the cluster team.

To avoid loosening these security restrictions, we changed the cache deployer’s certificate generation process, without affecting its functionality, and [used](https://github.com/mercedes-benz/DnA/blob/9c2487e111490285ee57dc241aa27778a1acc774/deployment/dockerfiles/kubeflow/kfp/backend/src/cache/deployer/webhook-create-signed-cert.sh#L98) the widely known OpenSSL instead.
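For reference, an OpenSSL-based approach typically follows three steps:

1. Create a throwaway CA.
2. Create a key and CSR for the cache server.
3. Sign the CSR with that CA.

The Python sketch below only builds the command lines; it does not run them. The service name `cache-server`, the namespace `kubeflow`, the file names, the key size and the validity period are all assumptions. This is not the linked Mercedes-Benz script.

```python
from typing import List


def san_extension(service: str = "cache-server", namespace: str = "kubeflow") -> str:
    """Content for an OpenSSL extfile adding the in-cluster DNS names as SANs."""
    names = [service, f"{service}.{namespace}", f"{service}.{namespace}.svc"]
    return "subjectAltName=" + ",".join(f"DNS:{n}" for n in names)


def openssl_commands(service: str = "cache-server", namespace: str = "kubeflow",
                     days: int = 365, workdir: str = ".") -> List[List[str]]:
    """Build (but do not run) OpenSSL commands for a self-signed CA and a server cert."""
    d = workdir.rstrip("/")
    dns_name = f"{service}.{namespace}.svc"
    return [
        # 1. Private key and self-signed certificate for a throwaway CA.
        ["openssl", "genrsa", "-out", f"{d}/ca.key", "2048"],
        ["openssl", "req", "-x509", "-new", "-nodes", "-key", f"{d}/ca.key",
         "-subj", f"/CN={service}-ca", "-days", str(days), "-out", f"{d}/ca.crt"],
        # 2. Server key and CSR whose CN is the service DNS name.
        ["openssl", "genrsa", "-out", f"{d}/server.key", "2048"],
        ["openssl", "req", "-new", "-key", f"{d}/server.key",
         "-subj", f"/CN={dns_name}", "-out", f"{d}/server.csr"],
        # 3. Sign the CSR with the CA; san.ext is expected to hold san_extension().
        ["openssl", "x509", "-req", "-in", f"{d}/server.csr", "-CA", f"{d}/ca.crt",
         "-CAkey", f"{d}/ca.key", "-CAcreateserial", "-out", f"{d}/server.crt",
         "-days", str(days), "-extfile", f"{d}/san.ext"],
    ]
```

To use it, write `san_extension()` to `san.ext`, then execute each command with `subprocess.run(cmd, check=True)`. The resulting `ca.crt` would then roughly take the place of the cluster-signed chain: it is supplied as the `caBundle` of the cache webhook configuration, and `server.crt`/`server.key` are mounted into the cache server.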

Is there any specific reason for using the K8s API?

If not, would the community be interested in an upstream contribution? | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7437/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7437/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7435 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7435/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7435/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7435/events | https://github.com/kubeflow/pipelines/issues/7435 | 1,172,209,396 | I_kwDOB-71UM5F3n70 | 7,435 | [feature] Add a param in create_recurring_run to support override the exists job with the same name in the same experiment | {

"login": "haoxins",

"id": 2569835,

"node_id": "MDQ6VXNlcjI1Njk4MzU=",

"avatar_url": "https://avatars.githubusercontent.com/u/2569835?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/haoxins",

"html_url": "https://github.com/haoxins",

"followers_url": "https://api.github.com/users/haoxins/followers",

"following_url": "https://api.github.com/users/haoxins/following{/other_user}",

"gists_url": "https://api.github.com/users/haoxins/gists{/gist_id}",

"starred_url": "https://api.github.com/users/haoxins/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/haoxins/subscriptions",

"organizations_url": "https://api.github.com/users/haoxins/orgs",

"repos_url": "https://api.github.com/users/haoxins/repos",

"events_url": "https://api.github.com/users/haoxins/events{/privacy}",

"received_events_url": "https://api.github.com/users/haoxins/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1118896905,

"node_id": "MDU6TGFiZWwxMTE4ODk2OTA1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/backend",

"name": "area/backend",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

}

] | closed | false | {

"login": "connor-mccarthy",

"id": 55268212,

"node_id": "MDQ6VXNlcjU1MjY4MjEy",

"avatar_url": "https://avatars.githubusercontent.com/u/55268212?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/connor-mccarthy",

"html_url": "https://github.com/connor-mccarthy",

"followers_url": "https://api.github.com/users/connor-mccarthy/followers",

"following_url": "https://api.github.com/users/connor-mccarthy/following{/other_user}",

"gists_url": "https://api.github.com/users/connor-mccarthy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/connor-mccarthy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/connor-mccarthy/subscriptions",

"organizations_url": "https://api.github.com/users/connor-mccarthy/orgs",

"repos_url": "https://api.github.com/users/connor-mccarthy/repos",

"events_url": "https://api.github.com/users/connor-mccarthy/events{/privacy}",

"received_events_url": "https://api.github.com/users/connor-mccarthy/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "connor-mccarthy",

"id": 55268212,

"node_id": "MDQ6VXNlcjU1MjY4MjEy",

"avatar_url": "https://avatars.githubusercontent.com/u/55268212?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/connor-mccarthy",

"html_url": "https://github.com/connor-mccarthy",

"followers_url": "https://api.github.com/users/connor-mccarthy/followers",

"following_url": "https://api.github.com/users/connor-mccarthy/following{/other_user}",

"gists_url": "https://api.github.com/users/connor-mccarthy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/connor-mccarthy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/connor-mccarthy/subscriptions",

"organizations_url": "https://api.github.com/users/connor-mccarthy/orgs",

"repos_url": "https://api.github.com/users/connor-mccarthy/repos",

"events_url": "https://api.github.com/users/connor-mccarthy/events{/privacy}",

"received_events_url": "https://api.github.com/users/connor-mccarthy/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"Currently we don't have a plan for implementing this feature in `kfp`. If you are interested in contributing this feature, could you please compose a design doc for discussion in the [Kubeflow Pipelines Community](https://www.kubeflow.org/docs/about/community/) meeting?",

"Yeah, I can contribute to this.\r\n\r\nThis should be a small change, though, so does it really need a design doc?\r\n\r\nI think only two things need to be agreed on:\r\n\r\n1. What should the param be named?\r\n2. Is the flow I provided acceptable?\r\n2.1 `list_recurring_runs()`: List the runs by `experiment_id` and `job name`\r\n2.2 `Delete the jobs`\r\n\r\nIf so, I can submit a PR to continue the feature implementation.",

"Thanks for your response, @haoxins, and for your interest in contributing! And thanks also for contributing a comment fix the other day -- that's very helpful.\r\n\r\n**Why a design doc**\r\nI do think a design doc is warranted to explore two aspects of this contribution:\r\n\r\n1) **Costs and benefits of including this feature.** User-facing features in particular require maintenance and possibly slow down future feature development in an effort to maintain complete functionality and backward-compatibility. Those are some of the costs, so it's helpful to explicitly lay out the benefits.\r\n\r\n2) **Different approaches for implementation and their associated costs and benefits.** I think there are a few complexities that may be introduced at implementation time. The current suggested implementation probably would get us most of the way there, but may have some unintended side-effects that are worth exploring in a design doc.\r\n\r\n**Some considerations**\r\nFor number 2 of \"Why a design doc\", I think it may make more sense to implement the CRUD logic in the backend instead of doing so in the SDK. Put differently: we probably would want an SDK PUT rather than an SDK DELETE + POST. This is both a) consistent with the current paradigm of the SDK as a client of the backend and b) allows the backend to do additional cleanup and reference updating associated with deleting a job. @zijianjoy can speak more to the backend responsibilities for an operation like this. This is just one example of some of the considerations that should be explored in a design doc.\r\n\r\n**Design doc structure**\r\nA basic design doc structure is:\r\n1) What is the user story? In other words: what is the objective of the feature contribution? What are users' **current** options for achieving this objective?\r\n2) What are some reasonable ways of implementing this objective as a feature? Include pros/cons. 
(This addresses number 1 of \"Why a design doc\".)\r\n3) Pick a preferred solution from \"Design doc structure\" part 2. What options are there for implementing this solution? Include an explanation, API/code snippets (if relevant), and pros/cons for each. (This addresses number 2 of \"Why a design doc\".)\r\n4) Are there any additional considerations not already discussed?\r\n\r\nPlease don't be discouraged by the enumeration of parts 1-4 here. My intention by being explicit is actually to make the design doc _easier_ to put together. Each section can be somewhat brief so long as it captures the key points.\r\n\r\nThanks again and please follow up with any questions.\r\n\r\ncc/ @ji-yaqi @chensun",

"I'm closing this because I don't want to spend too much time on this."

] | "2022-03-17T10:40:08" | "2022-03-18T16:58:25" | "2022-03-18T16:58:24" | CONTRIBUTOR | null | ### Feature Area

/area sdk

### What feature would you like to see?

Add a param to the `create_recurring_run` function to support overriding an existing recurring job with the same name.

### What is the use case or pain point?

In my use case, when I change the logic of my job, I first remove the existing jobs with the same name and then create a new one with the same name again.

I do this because I don't want to create duplicate jobs.

The code looks like this:

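```python
recurring_run_name = "..."
recurring_run_description = "..."

# Find existing recurring runs (jobs) in the experiment with the same name.
jobs = kfp_client.list_recurring_runs(
    experiment_id=experiment_id,
    filter=filter_by_job_name,
    # ...
).jobs

if jobs:  # `jobs` may be None or empty when nothing matches
    for job in jobs:
        # Delete the jobs
        ...

# Create the recurring run again under the same name.
kfp_client.create_recurring_run(
    experiment_id=experiment_id,
    job_name=recurring_run_name,
    description=recurring_run_description,
    # ...
)
```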

So, would you accept adding a param such as `replace_exists` to implement this logic?
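The proposed flag boils down to moving the delete-then-create flow from the code above into `create_recurring_run` itself. The sketch below models it against a hypothetical in-memory job store. `FakeJobStore`, `Job` and the `replace_existing` parameter are illustrative names; they are not the KFP SDK or its API.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Job:
    id: str
    name: str
    experiment_id: str


class FakeJobStore:
    """In-memory stand-in for the KFP job service; purely illustrative."""

    def __init__(self) -> None:
        self._jobs: Dict[str, Job] = {}
        self._next_id = 0

    def list_jobs(self, experiment_id: str, name: str) -> List[Job]:
        return [j for j in self._jobs.values()
                if j.experiment_id == experiment_id and j.name == name]

    def delete_job(self, job_id: str) -> None:
        del self._jobs[job_id]

    def create_job(self, experiment_id: str, name: str) -> Job:
        self._next_id += 1
        job = Job(id=f"job-{self._next_id}", name=name, experiment_id=experiment_id)
        self._jobs[job.id] = job
        return job


def create_recurring_run(store: FakeJobStore, experiment_id: str, job_name: str,
                         replace_existing: bool = False) -> Job:
    """Sketch of the proposed flag: optionally delete same-named jobs before creating."""
    if replace_existing:
        # list_jobs returns a new list, so deleting while iterating over it is safe.
        for job in store.list_jobs(experiment_id, job_name):
            store.delete_job(job.id)
    return store.create_job(experiment_id, job_name)
```

Delete-then-create is not atomic, and deleting a job can have side effects in the backend. This is why the maintainers suggest in the thread that a backend update (PUT) may be preferable to an SDK-side DELETE + POST.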

---

<!-- Don't delete message below to encourage users to support your feature request! -->

Love this idea? Give it a 👍. We prioritize fulfilling features with the most 👍.

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7435/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7435/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7432 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7432/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7432/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7432/events | https://github.com/kubeflow/pipelines/issues/7432 | 1,171,726,909 | I_kwDOB-71UM5F1yI9 | 7,432 | [feature] Support for features in v2 | {

"login": "casassg",

"id": 6912589,

"node_id": "MDQ6VXNlcjY5MTI1ODk=",

"avatar_url": "https://avatars.githubusercontent.com/u/6912589?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/casassg",

"html_url": "https://github.com/casassg",

"followers_url": "https://api.github.com/users/casassg/followers",

"following_url": "https://api.github.com/users/casassg/following{/other_user}",

"gists_url": "https://api.github.com/users/casassg/gists{/gist_id}",

"starred_url": "https://api.github.com/users/casassg/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/casassg/subscriptions",

"organizations_url": "https://api.github.com/users/casassg/orgs",

"repos_url": "https://api.github.com/users/casassg/repos",

"events_url": "https://api.github.com/users/casassg/events{/privacy}",

"received_events_url": "https://api.github.com/users/casassg/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

}

] | open | false | {

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"reference from community meeting: https://docs.google.com/document/d/1cHAdK1FoGEbuQ-Rl6adBDL5W2YpDiUbnMLIwmoXBoAU/edit#bookmark=id.pn3sq4nva5w0",

"Another tentative question: what are the current ways to follow the state of the v1-to-v2 transition for the backend and everything else?"

] | "2022-03-17T00:27:03" | "2022-03-17T22:45:01" | null | CONTRIBUTOR | null | ### Feature Area

/area sdk

### What feature would you like to see?

I would like to know whether KFP v2 will continue to support:

- Recursive pipelines (https://www.kubeflow.org/docs/components/pipelines/sdk/dsl-recursion/)

- ContainerOp components defined directly with the Python SDK (not Python function-based components)

- LocalClient for local execution

### What is the use case or pain point?

Recursive pipelines are useful for research HPT (hyperparameter tuning) and similar use cases where it's not clear when a pipeline will need to stop executing.

### Is there a workaround currently?

This is just a request for more information, since I could not find it in the docs themselves.

---

<!-- Don't delete message below to encourage users to support your feature request! -->

Love this idea? Give it a 👍. We prioritize fulfilling features with the most 👍.

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7432/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7432/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7421 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7421/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7421/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7421/events | https://github.com/kubeflow/pipelines/issues/7421 | 1,170,384,578 | I_kwDOB-71UM5FwqbC | 7,421 | [backend] "Updating" a recurring run, whilst a recurring run is in progress causes it to terminate | {

"login": "alexlatchford",

"id": 628146,

"node_id": "MDQ6VXNlcjYyODE0Ng==",

"avatar_url": "https://avatars.githubusercontent.com/u/628146?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/alexlatchford",

"html_url": "https://github.com/alexlatchford",

"followers_url": "https://api.github.com/users/alexlatchford/followers",

"following_url": "https://api.github.com/users/alexlatchford/following{/other_user}",

"gists_url": "https://api.github.com/users/alexlatchford/gists{/gist_id}",

"starred_url": "https://api.github.com/users/alexlatchford/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/alexlatchford/subscriptions",

"organizations_url": "https://api.github.com/users/alexlatchford/orgs",

"repos_url": "https://api.github.com/users/alexlatchford/repos",

"events_url": "https://api.github.com/users/alexlatchford/events{/privacy}",

"received_events_url": "https://api.github.com/users/alexlatchford/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1118896905,

"node_id": "MDU6TGFiZWwxMTE4ODk2OTA1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/backend",

"name": "area/backend",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

}

] | open | false | null | [] | null | [] | "2022-03-16T00:06:23" | "2022-03-17T22:40:21" | null | CONTRIBUTOR | null | ### Environment

* How did you deploy Kubeflow Pipelines (KFP)?

<!-- For more information, see an overview of KFP installation options: https://www.kubeflow.org/docs/pipelines/installation/overview/. -->

Atop AWS using the `kubeflow/manifests` official distribution with some overlays specific to Zillow.

* KFP version:

<!-- Specify the version of Kubeflow Pipelines that you are using. The version number appears in the left side navigation of user interface.

To find the version number, See version number shows on bottom of KFP UI left sidenav. -->

We use KF v1.3.1 currently (plan to update to v1.5 next month), looks like that includes KFP v1.5.1 ([link](https://github.com/kubeflow/manifests/blob/v1.3.1/apps/pipeline/upstream/base/pipeline/kustomization.yaml#L43)).

* KFP SDK version:

<!-- Specify the output of the following shell command: $pip list | grep kfp -->

We run a forked version ([see here](https://github.com/zillow/pipelines)), I think we last pulled in at ~v1.8.

### Steps to reproduce

<!--

Specify how to reproduce the problem.

This may include information such as: a description of the process, code snippets, log output, or screenshots.

-->

The problem is that, via CI/CD, we want our scientists to be able to define a `recurring-run` and have it updated on subsequent CI/CD executions if they change their settings. Given the limitations of the "Job" API in KFP, we delete and re-add a recurring run to "update" it (see the [API reference](https://www.kubeflow.org/docs/components/pipelines/reference/api/kubeflow-pipeline-api-spec/#tag-JobService) for more info).

In this case, if the first recurring run has already triggered a workflow, the scheduledworkflow controller deletes the `ScheduledWorkflow`, and Kubernetes garbage collection then cascades the deletion to any in-progress `Workflow` resources. When the "new", updated recurring run is created, it waits for its next trigger point to schedule a run. It won't try to resurrect the now-terminated run (and, thinking about it, it definitely shouldn't).

### Expected result

<!-- What should the correct behavior be? -->

Basically some way of keeping the run that was in progress before the "update" occurred.

Potentially, the API/SDK/CLI could offer a flag to [orphan on deletion](https://kubernetes.io/docs/tasks/administer-cluster/use-cascading-deletion/#set-orphan-deletion-policy) when deleting a recurring run. That would solve this issue without needing a complicated update API.
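In Kubernetes terms, the proposal amounts to deleting the `ScheduledWorkflow` with an orphan propagation policy, so that Workflows it already created are not garbage-collected. The sketch below only builds the REST path and `DeleteOptions` body for such a request. The `kubeflow.org/v1beta1` `scheduledworkflows` coordinates are assumed, and `orphan_delete_request` is a hypothetical helper, not part of KFP.

```python
from typing import Any, Dict, Tuple

# Assumed group/version/plural of the KFP ScheduledWorkflow custom resource.
GROUP, VERSION, PLURAL = "kubeflow.org", "v1beta1", "scheduledworkflows"


def orphan_delete_request(namespace: str, name: str) -> Tuple[str, Dict[str, Any]]:
    """Build the REST path and DeleteOptions body that delete a ScheduledWorkflow
    while leaving its dependents (already-created Workflows) in place."""
    path = f"/apis/{GROUP}/{VERSION}/namespaces/{namespace}/{PLURAL}/{name}"
    body = {"apiVersion": "v1", "kind": "DeleteOptions", "propagationPolicy": "Orphan"}
    return path, body
```

The equivalent manual command would be roughly `kubectl delete scheduledworkflow <name> -n <namespace> --cascade=orphan`. Deleting the resource directly bypasses the KFP API server, so KFP's own record of the recurring run would presumably not be cleaned up. This is why exposing the flag through the API/SDK/CLI, as suggested above, matters.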

### Materials and Reference

<!-- Help us debug this issue by providing resources such as: sample code, background context, or links to references. -->

Apologies, maybe this is more of a "feature" request thinking about it now. Apologies for the mischaracterization!

---


Impacted by this bug? Give it a 👍. We prioritise the issues with the most 👍.

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7421/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7421/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7420 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7420/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7420/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7420/events | https://github.com/kubeflow/pipelines/issues/7420 | 1,170,348,318 | I_kwDOB-71UM5Fwhke | 7,420 | [SDK] Pipeline runs web UI shows the wrong namespace | {

"login": "emenendez",

"id": 3814114,

"node_id": "MDQ6VXNlcjM4MTQxMTQ=",

"avatar_url": "https://avatars.githubusercontent.com/u/3814114?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/emenendez",

"html_url": "https://github.com/emenendez",

"followers_url": "https://api.github.com/users/emenendez/followers",

"following_url": "https://api.github.com/users/emenendez/following{/other_user}",

"gists_url": "https://api.github.com/users/emenendez/gists{/gist_id}",

"starred_url": "https://api.github.com/users/emenendez/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/emenendez/subscriptions",

"organizations_url": "https://api.github.com/users/emenendez/orgs",

"repos_url": "https://api.github.com/users/emenendez/repos",

"events_url": "https://api.github.com/users/emenendez/events{/privacy}",

"received_events_url": "https://api.github.com/users/emenendez/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

}

] | open | false | {

"login": "zijianjoy",

"id": 37026441,

"node_id": "MDQ6VXNlcjM3MDI2NDQx",

"avatar_url": "https://avatars.githubusercontent.com/u/37026441?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/zijianjoy",

"html_url": "https://github.com/zijianjoy",

"followers_url": "https://api.github.com/users/zijianjoy/followers",

"following_url": "https://api.github.com/users/zijianjoy/following{/other_user}",

"gists_url": "https://api.github.com/users/zijianjoy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/zijianjoy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/zijianjoy/subscriptions",

"organizations_url": "https://api.github.com/users/zijianjoy/orgs",

"repos_url": "https://api.github.com/users/zijianjoy/repos",

"events_url": "https://api.github.com/users/zijianjoy/events{/privacy}",

"received_events_url": "https://api.github.com/users/zijianjoy/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "zijianjoy",

"id": 37026441,

"node_id": "MDQ6VXNlcjM3MDI2NDQx",

"avatar_url": "https://avatars.githubusercontent.com/u/37026441?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/zijianjoy",

"html_url": "https://github.com/zijianjoy",

"followers_url": "https://api.github.com/users/zijianjoy/followers",

"following_url": "https://api.github.com/users/zijianjoy/following{/other_user}",

"gists_url": "https://api.github.com/users/zijianjoy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/zijianjoy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/zijianjoy/subscriptions",

"organizations_url": "https://api.github.com/users/zijianjoy/orgs",

"repos_url": "https://api.github.com/users/zijianjoy/repos",

"events_url": "https://api.github.com/users/zijianjoy/events{/privacy}",

"received_events_url": "https://api.github.com/users/zijianjoy/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"Hello @emenendez, this is a known limitation right now: the SDK doesn't know whether it is talking to a standalone KFP deployment or a full-fledged Kubeflow deployment. We don't have a clear idea yet of how to overcome this.",

"Thanks so much for following up @zijianjoy!"

] | "2022-03-15T23:01:38" | "2022-03-21T22:56:09" | null | NONE | null | ### Environment

* How did you deploy Kubeflow Pipelines (KFP)? As part of a full Kubeflow 1.4 install on GKE.

* KFP version: 1.7.0, packaged with Kubeflow 1.4

### Steps to reproduce

1. Use the KFP Python SDK (for example, `create_run_from_pipeline_func()`) to create a pipeline run from a Jupyter Notebook. Use the `namespace` argument to create this run in a namespace that is not your own but in which you are a collaborator.

2. The notebook displays a link to the "run details" page in the web UI of the form `https://<cluster-domain>/pipeline/#/runs/details/<uuid>`.

3. Open the link.

4. The pipeline run details page is shown, but the namespace selector in the top-left corner shows your own default namespace, not the namespace in which the pipeline was actually run.

### Expected result

The pipeline run details should be shown, and the namespace selector in the top-left corner should show the namespace in which the pipeline was run (not your own namespace).

Thank you for your help debugging this issue!

---

Impacted by this bug? Give it a 👍. We prioritise the issues with the most 👍.

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7420/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7420/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7416 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7416/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7416/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7416/events | https://github.com/kubeflow/pipelines/issues/7416 | 1,169,117,766 | I_kwDOB-71UM5Fr1JG | 7,416 | [bug] google-cloud-pipeline-components.readthedocs.io now points to 1.0.1 version instead of 1.0.0 | {

"login": "dianeo-mit",

"id": 46697321,

"node_id": "MDQ6VXNlcjQ2Njk3MzIx",

"avatar_url": "https://avatars.githubusercontent.com/u/46697321?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/dianeo-mit",

"html_url": "https://github.com/dianeo-mit",

"followers_url": "https://api.github.com/users/dianeo-mit/followers",

"following_url": "https://api.github.com/users/dianeo-mit/following{/other_user}",

"gists_url": "https://api.github.com/users/dianeo-mit/gists{/gist_id}",

"starred_url": "https://api.github.com/users/dianeo-mit/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/dianeo-mit/subscriptions",

"organizations_url": "https://api.github.com/users/dianeo-mit/orgs",

"repos_url": "https://api.github.com/users/dianeo-mit/repos",

"events_url": "https://api.github.com/users/dianeo-mit/events{/privacy}",

"received_events_url": "https://api.github.com/users/dianeo-mit/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

}

] | closed | false | null | [] | null | [] | "2022-03-15T02:47:00" | "2022-03-17T22:43:12" | "2022-03-17T22:43:12" | NONE | null | ### What steps did you take

I went to the main documentation URL: https://google-cloud-pipeline-components.readthedocs.io/

### What happened:

I was directed to this URL, which doesn't exist: https://google-cloud-pipeline-components.readthedocs.io/en/google-cloud-pipeline-components-1.0.1/

### What did you expect to happen:

I expected to be taken to this URL, which does exist and worked perfectly last week: https://google-cloud-pipeline-components.readthedocs.io/en/google-cloud-pipeline-components-1.0.0/

### Environment:

Google Chrome Version 99.0.4844.57 (Official Build) (64-bit)

### Anything else you would like to add: