url (string) | repository_url (string) | labels_url (string) | comments_url (string) | events_url (string) | html_url (string) | id (int64) | node_id (string) | number (int64) | title (string) | user (dict) | labels (list) | state (string) | locked (bool) | assignee (dict) | assignees (list) | milestone (dict) | comments (sequence) | created_at (unknown) | updated_at (unknown) | closed_at (unknown) | author_association (string) | active_lock_reason (null) | body (string) | reactions (dict) | timeline_url (string) | performed_via_github_app (null) | state_reason (string) | draft (bool) | pull_request (dict) | is_pull_request (bool) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/kubeflow/pipelines/issues/8688 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8688/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8688/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8688/events | https://github.com/kubeflow/pipelines/issues/8688 | 1,537,094,194 | I_kwDOB-71UM5bnjIy | 8,688 | [chore] Investigate/implement automation of KFP SDK release note generation | {

"login": "connor-mccarthy",

"id": 55268212,

"node_id": "MDQ6VXNlcjU1MjY4MjEy",

"avatar_url": "https://avatars.githubusercontent.com/u/55268212?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/connor-mccarthy",

"html_url": "https://github.com/connor-mccarthy",

"followers_url": "https://api.github.com/users/connor-mccarthy/followers",

"following_url": "https://api.github.com/users/connor-mccarthy/following{/other_user}",

"gists_url": "https://api.github.com/users/connor-mccarthy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/connor-mccarthy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/connor-mccarthy/subscriptions",

"organizations_url": "https://api.github.com/users/connor-mccarthy/orgs",

"repos_url": "https://api.github.com/users/connor-mccarthy/repos",

"events_url": "https://api.github.com/users/connor-mccarthy/events{/privacy}",

"received_events_url": "https://api.github.com/users/connor-mccarthy/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

},

{

"id": 2157634204,

"node_id": "MDU6TGFiZWwyMTU3NjM0MjA0",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/stale",

"name": "lifecycle/stale",

"color": "bbbbbb",

"default": false,

"description": "The issue / pull request is stale, any activities remove this label."

}

] | open | false | null | [] | null | [

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions."

] | "2023-01-17T22:05:13" | "2023-08-27T07:42:10" | null | MEMBER | null | ### Feature Area

<!-- Uncomment the labels below which are relevant to this feature: -->

<!-- /area frontend -->

<!-- /area backend -->

/area sdk

<!-- /area samples -->

<!-- /area components -->

### What feature would you like to see?

The KFP SDK uses [conventional commits](https://www.conventionalcommits.org/en/v1.0.0/). Can we automate the creation of release notes to (1) ensure that they comprehensively document all SDK changes and (2) eliminate the need to ask OSS contributors to update the release notes by hand in [RELEASE.md](https://github.com/kubeflow/pipelines/blob/master/sdk/RELEASE.md)?
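
As a rough illustration of what such automation could look like, here is a minimal sketch that groups conventional-commit subject lines into release-note sections. The function name `build_release_notes`, the heading names, and the choice to filter on an `sdk` scope are all assumptions made for this example; they are not an existing KFP tool or the project's actual release process.

```python
import re
from collections import defaultdict

# Conventional Commits subject line: type(optional scope)(optional !): description
_SUBJECT = re.compile(
    r"^(?P<type>\w+)(?:\((?P<scope>[^)]+)\))?(?P<breaking>!)?: (?P<desc>.+)$"
)

# Hypothetical mapping from commit type to a release-notes heading.
_HEADINGS = {"feat": "Features", "fix": "Bug fixes"}
_ORDER = ("Breaking changes", "Features", "Bug fixes", "Other changes")


def build_release_notes(subjects, scope="sdk"):
    """Group commit subjects for one scope into Markdown sections."""
    sections = defaultdict(list)
    for subject in subjects:
        match = _SUBJECT.match(subject.strip())
        # Skip non-conventional subjects and commits for other scopes.
        if match is None or match["scope"] != scope:
            continue
        if match["breaking"]:
            heading = "Breaking changes"
        else:
            heading = _HEADINGS.get(match["type"], "Other changes")
        sections[heading].append(match["desc"])

    lines = []
    for heading in _ORDER:
        if sections[heading]:
            lines.append(f"## {heading}")
            lines.extend(f"* {desc}" for desc in sections[heading])
    return "\n".join(lines)


notes = build_release_notes([
    "feat(sdk): add foo",
    "fix(backend): bar",
    "fix(sdk): baz",
    "chore: x",
])
print(notes)
```

In practice the subject lines could come from `git log --format=%s` between two release tags; that wiring is left out here to keep the sketch self-contained.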

---

<!-- Don't delete message below to encourage users to support your feature request! -->

Love this idea? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8688/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8688/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8684 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8684/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8684/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8684/events | https://github.com/kubeflow/pipelines/issues/8684 | 1,536,845,087 | I_kwDOB-71UM5bmmUf | 8,684 | [feature] Multi-layer lineage graph with MLMD | {

"login": "zijianjoy",

"id": 37026441,

"node_id": "MDQ6VXNlcjM3MDI2NDQx",

"avatar_url": "https://avatars.githubusercontent.com/u/37026441?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/zijianjoy",

"html_url": "https://github.com/zijianjoy",

"followers_url": "https://api.github.com/users/zijianjoy/followers",

"following_url": "https://api.github.com/users/zijianjoy/following{/other_user}",

"gists_url": "https://api.github.com/users/zijianjoy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/zijianjoy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/zijianjoy/subscriptions",

"organizations_url": "https://api.github.com/users/zijianjoy/orgs",

"repos_url": "https://api.github.com/users/zijianjoy/repos",

"events_url": "https://api.github.com/users/zijianjoy/events{/privacy}",

"received_events_url": "https://api.github.com/users/zijianjoy/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 930619516,

"node_id": "MDU6TGFiZWw5MzA2MTk1MTY=",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/frontend",

"name": "area/frontend",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

},

{

"id": 2152751095,

"node_id": "MDU6TGFiZWwyMTUyNzUxMDk1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/frozen",

"name": "lifecycle/frozen",

"color": "ededed",

"default": false,

"description": null

}

] | open | false | null | [] | null | [

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions."

] | "2023-01-17T18:29:00" | "2023-08-28T16:21:40" | null | COLLABORATOR | null | ### Feature Area

<!-- Uncomment the labels below which are relevant to this feature: -->

/area frontend

<!-- /area backend -->

<!-- /area sdk -->

<!-- /area samples -->

<!-- /area components -->

### What feature would you like to see?

With the MLMD upgrade to 1.4.0, we can list a multi-layer lineage graph using the new MLMD API call. As a result, we can introduce this feature in the KFP UI.

<!-- Provide a description of this feature and the user experience. -->

---

<!-- Don't delete message below to encourage users to support your feature request! -->

Love this idea? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8684/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8684/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8683 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8683/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8683/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8683/events | https://github.com/kubeflow/pipelines/issues/8683 | 1,536,381,224 | I_kwDOB-71UM5bk1Eo | 8,683 | [bug] 1.6 manifests leads to deployment issue with kubeflow pipelines | {

"login": "MatthewRalston",

"id": 4308024,

"node_id": "MDQ6VXNlcjQzMDgwMjQ=",

"avatar_url": "https://avatars.githubusercontent.com/u/4308024?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/MatthewRalston",

"html_url": "https://github.com/MatthewRalston",

"followers_url": "https://api.github.com/users/MatthewRalston/followers",

"following_url": "https://api.github.com/users/MatthewRalston/following{/other_user}",

"gists_url": "https://api.github.com/users/MatthewRalston/gists{/gist_id}",

"starred_url": "https://api.github.com/users/MatthewRalston/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/MatthewRalston/subscriptions",

"organizations_url": "https://api.github.com/users/MatthewRalston/orgs",

"repos_url": "https://api.github.com/users/MatthewRalston/repos",

"events_url": "https://api.github.com/users/MatthewRalston/events{/privacy}",

"received_events_url": "https://api.github.com/users/MatthewRalston/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

}

] | closed | false | {

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

}

] | null | [

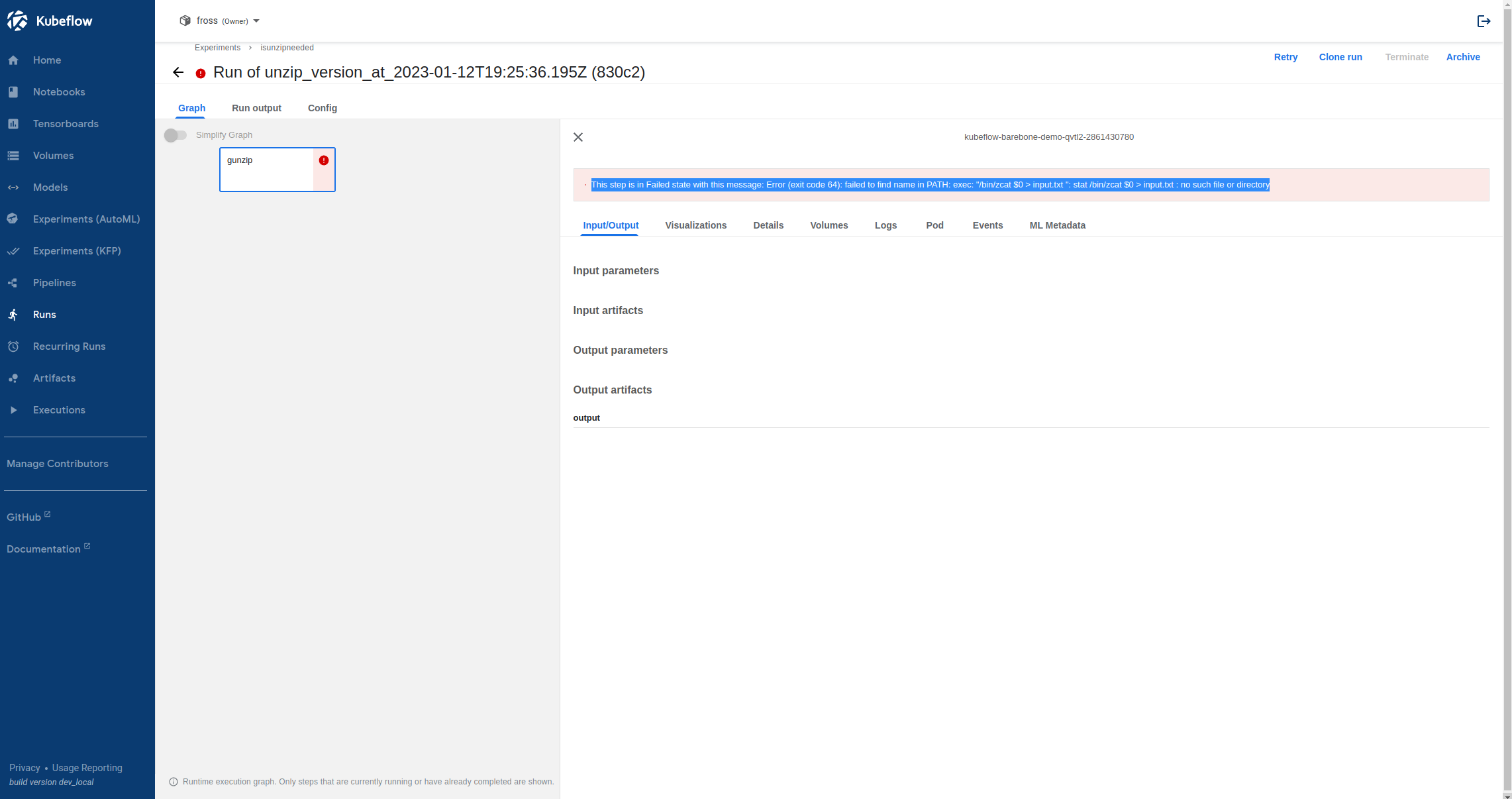

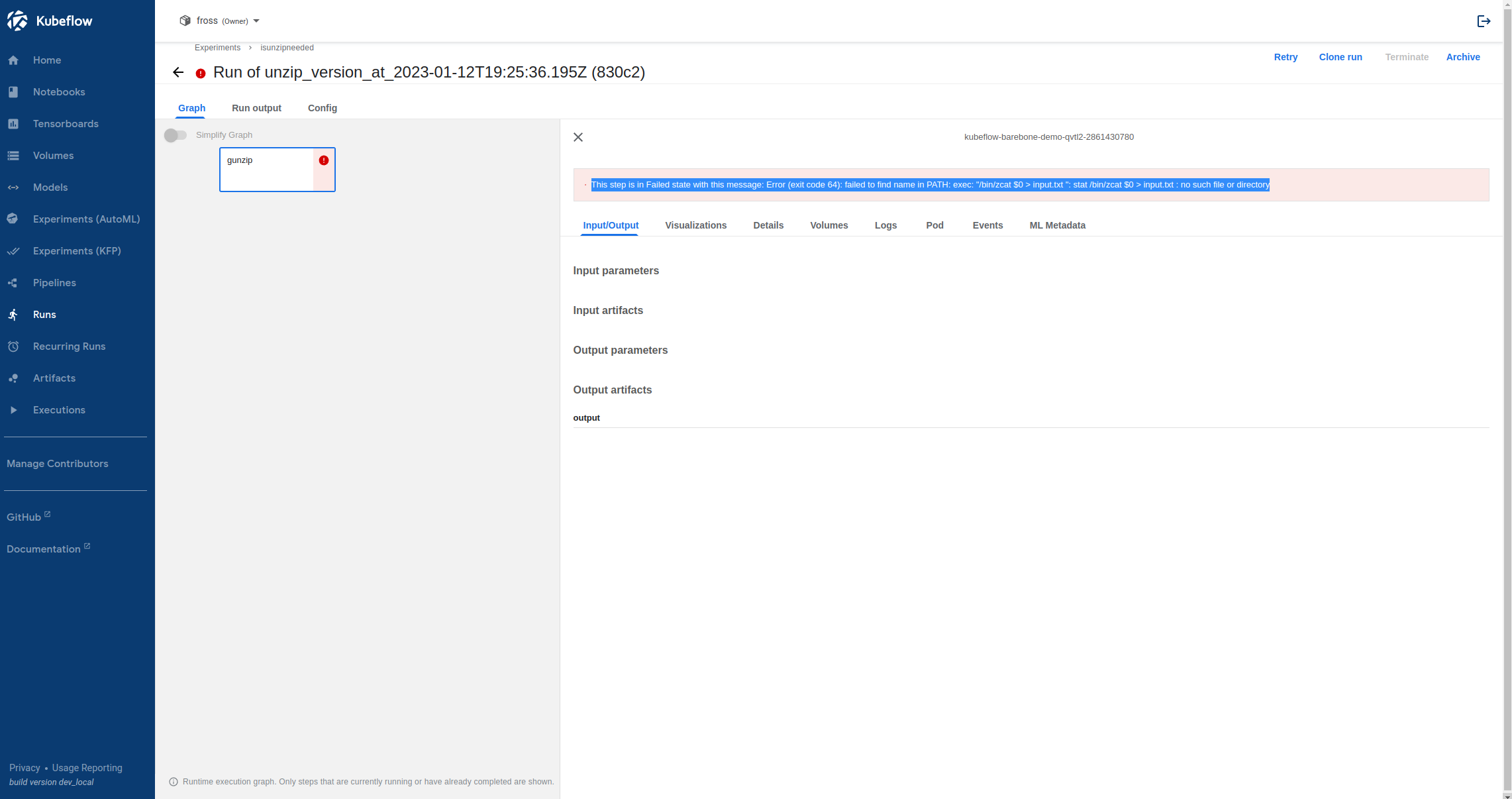

"Hi @MatthewRalston , \r\n\r\nFirst of all `dsl.ContainerOp` has been deprecated as a user interface, you should have seen a deprecation warning on it.\r\nHowever, I think the real culprit for the error is `str(fastq1).rstrip(\".gz\")`, this is code not containerized. You can either wrap the code in a lightweight component, or handle the logic within `gunzip` component.",

"Hi thanks for the quick response.\n\nI needed to use this interface to set the container requirements (CPU, memory request/limit) via kfp. Is there an alternative way to specify container limits via the v1.8 kfp on a 1.6 manifests Kubeflow instance?",

"Also I can confirm that removal of the str(fastq) etc component is not the culprit of the bash builtin \"exec\" not being found in the Alpine Linux container. \n\nNew syntax is\n\n```python\ngunzip1 = gunzip (infile=fastq1).set_cpu_request('1') etc.\n```",

"Hey @chensun, when you get a chance, would you mind revisiting this issue please? Again, I'm using a vanilla kubernetes instance (minikube; compliant) and deploying kubeflow pipelines via the v1.6 branch manifests from kubeflow/manifests. Again *I recognize that the ContainerOp invocation pattern has been deprecated and should migrate to new syntax*. But that has nothing to do with Kubeflow's exec builtin. Please, let me know if you have any questions.\r\n\r\n\r\n\r\n\r\n\r\n```python\r\n#!/bin/env python\r\n\r\nimport os\r\nimport sys\r\nimport argparse\r\n\r\nimport kfp.dsl as dsl\r\nimport kfp.components as comp\r\nimport kfp\r\n\r\n\r\n\r\nimport logging\r\nglobal logger\r\nlogger = None\r\n\r\ndef get_root_logger(level):\r\n levels=[logging.WARNING, logging.INFO, logging.DEBUG]\r\n if level < 0 or level > 2:\r\n raise TypeError(\"{0}.get_root_logger expects a verbosity between 0-2\".format(__file__))\r\n logging.basicConfig(level=levels[level], format=\"%(levelname)s: %(asctime)s %(funcName)s L%(lineno)s| %(message)s\", datefmt=\"%Y/%m/%d %I:%M:%S\")\r\n root_logger = logging.getLogger()\r\n return root_logger\r\n\r\n\r\n\r\ncomponents_dir = os.path.join(os.path.dirname(__file__), \"components\")\r\n\r\n#gunzip = comp.load_component_from_file(os.path.join(components_dir, \"gunzip.yaml\"))\r\n\r\n\r\nis_unzip_needed = comp.load_component_from_file(os.path.join(components_dir, \"is_unzip_needed.yaml\"))\r\n\r\ndef gunzip(infile:str):\r\n \"\"\" Infile like 'path/to/example.txt.gz', outfile like 'path/to/example.txt' \"\"\"\r\n\r\n return dsl.ContainerOp(\r\n name='gunzip',\r\n image='debian:latest',\r\n command=[\r\n '/bin/zcat $0 > input.txt ',\r\n '|| ',\r\n 'mv $0 input.txt'\r\n ],\r\n file_outputs={\r\n 'output': 'input.txt'\r\n }\r\n )\r\n\r\n\r\n@dsl.pipeline(\r\n name='kubeflow-barebone-demo',\r\n description='kubeflow demo with minimal setup'\r\n)\r\ndef rnaseq_pipeline(fastq1:str):\r\n # Step 1: training component\r\n\r\n gunzip1 = 
gunzip(infile=fastq1).set_cpu_request('1').set_cpu_limit('1').set_memory_request('512Mi').set_memory_limit('512Mi')\r\n\r\n\r\n\r\nif __name__ == \"__main__\":\r\n logger = get_root_logger(2)\r\n \r\n kfp.compiler.Compiler().compile(rnaseq_pipeline, 'pipeline.yaml')\r\n\r\n```",

"\r\n> Also I can confirm that removal of the str(fastq) etc component is not the culprit of the bash builtin \"exec\" not being found in the Alpine Linux container.\r\n> \r\n> New syntax is\r\n> \r\n> ```python\r\n> gunzip1 = gunzip (infile=fastq1).set_cpu_request('1') etc.\r\n> ```\r\n\r\nSorry, I spoke too earlier without looking carefully at the error message--though the `str(fastq)` usage was also an error that would not give you the expected value.\r\n\r\nYou're right that the culprit is the about the exec path, to solve that you should add `sh -c` in front of your command. But then another issue is you're not really passing the value as you thought--`$0` doesn't magically map to `infile`. \r\n\r\nI would still suggest dropping `dsl.ContainerOp` so that you know what a proper component interface and data-passing story should look like.\r\n\r\nTry the following \r\n```python\r\nfrom kfp import components\r\nfrom kfp import dsl\r\n\r\nfoo = components.load_component_from_text(\"\"\"\r\n name: foo\r\n inputs:\r\n - {name: msg, type: String}\r\n implementation:\r\n container:\r\n image: debian:latest\r\n command: \r\n - sh\r\n - -c\r\n - /bin/echo $0\r\n args:\r\n - {inputValue: msg}\r\n\"\"\")\r\n\r\n@dsl.pipeline\r\ndef bar():\r\n foo('hello')\r\n```\r\n\r\n",

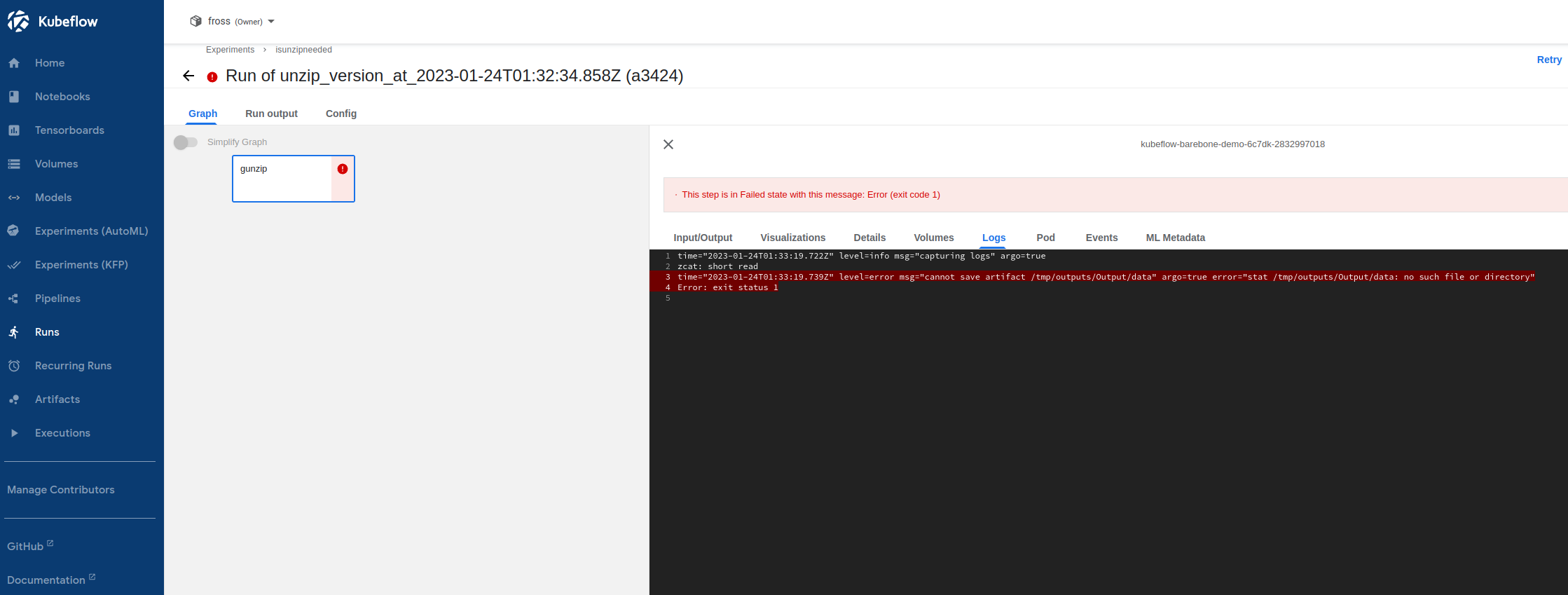

"@chensun Done. New version uploaded. Fails to write outputs, which is distinctly a different error. Should I follow up on gitter, Slack, or just start a new issue?\r\n\r\n\r\n",

"As a follow up, is this something that could be handle with a more graceful error checking process, to see if MinIO is reachable? or that the /tmp directory is available in the container before data is written? I'm pretty sure MinIO is part of my problem, because there's a 90% chance that it's a issue with deployment, and not bash syntax.",

"Thanks for checking this issue out last week @chensun . I'm fairly confident the issue isn't my bash syntax related to the $0? I've since reworked the script as follows:\r\n\r\n* pipeline.py\r\n\r\n```python\r\n...\r\ngunzip = comp.load_component_from_file(\"gunzip.yaml\")\r\n\r\n@dsl.pipeline(name=\"kubeflow-barebone-demo\", description=\"\")\r\ndef pipeline(fastq1: str):\r\n gunzip1 = gunzip(infile=fastq1).set_cpu_request(...) # as above\r\n```\r\n\r\n* gunzip.yaml\r\n```yaml\r\nname: gunzip\r\ndescription: Gunzips an Input file to an Ouput filepath\r\ninputs:\r\n- {name: infile, type: String, description: 'Data for gzip decompression'}\r\noutputs:\r\n- {name: Output, type: String, description: 'Output decompressed plaintext'}\r\nimplementation:\r\n container:\r\n image: alpine:latest\r\n # command is a list of strings (command-line arguments). \r\n # The YAML language has two syntaxes for lists and you can use either of them. \r\n # Here we use the \"flow syntax\" - comma-separated strings inside square brackets.\r\n command: [\r\n sh,\r\n -c,\r\n zcat,\r\n {inputPath: infile},\r\n '>',\r\n {outputPath: Output}\r\n ]\r\n\r\n```",

"Thank you to Chen Sun and Benjamin Tan for encouraging the `sh -c` prelude and the v1.8 syntax, respectively. Closing the original issue thanks to chensun , opened a new issue linked above."

] | "2023-01-17T13:27:32" | "2023-02-02T23:56:41" | "2023-02-02T23:56:41" | NONE | null | ### Environment

<!-- Please fill in those that seem relevant. -->

Minikube v1.28.0

kubernetes 1.22.2

Kustomize v3.2.0

kubectl v1.25.5

Manifests v1.6.1

* How do you deploy Kubeflow Pipelines (KFP)?

Locally, on minikube, via manifests v1.6.1.

I've also experimented with Argoflow on my OS, but it's not relevant here.

<!-- For more information, see an overview of KFP installation options: https://www.kubeflow.org/docs/pipelines/installation/overview/. -->

* KFP version:

<!-- Specify the version of Kubeflow Pipelines that you are using. The version number appears in the left side navigation of user interface.

To find the version number, See version number shows on bottom of KFP UI left sidenav. -->

* KFP SDK version:

<!-- Specify the output of the following shell command: $pip list | grep kfp -->

kfp 1.8.17

kfp-pipeline-spec 0.1.16

kfp-server-api 1.8.5

### Steps to reproduce

Create pipeline.py from the following.

```python
#!/bin/env python

import os
import sys
import argparse

import kfp.dsl as dsl
import kfp.components as comp
import kfp

import logging
global logger
logger = None

def get_root_logger(level):
    levels=[logging.WARNING, logging.INFO, logging.DEBUG]
    if level < 0 or level > 2:
        raise TypeError("{0}.get_root_logger expects a verbosity between 0-2".format(__file__))
    logging.basicConfig(level=levels[level], format="%(levelname)s: %(asctime)s %(funcName)s L%(lineno)s| %(message)s", datefmt="%Y/%m/%d %I:%M:%S")
    root_logger = logging.getLogger()
    return root_logger

components_dir = os.path.join(os.path.dirname(__file__), "components")

#gunzip = comp.load_component_from_file(os.path.join(components_dir, "gunzip.yaml"))
#is_unzip_needed = comp.load_component_from_file(os.path.join(components_dir, "is_unzip_needed.yaml"))

def gunzip(infile:str, outfile:str):
    """ Infile like 'path/to/example.txt.gz', outfile like 'path/to/example.txt' """

    return dsl.ContainerOp(
        name='gunzip',
        image='bitnami/minideb:latest',
        command=[
            '/bin/zcat $0 > input.txt ',
            '|| ',
            'mv $0 input.txt'
        ],
        file_outputs={
            'output': 'input.txt'
        }
    )

@dsl.pipeline(
    name='kubeflow-barebone-demo',
    description='kubeflow demo with minimal setup'
)
def pipeline(fastq1:str):
    # Step 1: training component
    gunzip1 = gunzip(infile=fastq1, outfile = str(fastq1).rstrip(".gz")).set_cpu_request('1').set_cpu_limit('1').set_memory_request('512Mi').set_memory_limit('512Mi')

if __name__ == "__main__":
    logger = get_root_logger(2)

    # Compile the pipeline function defined above.
    kfp.compiler.Compiler().compile(pipeline, 'pipeline.yaml')
```

<!--

Specify how to reproduce the problem.

This may include information such as: a description of the process, code snippets, log output, or screenshots.

-->

### Expected result

I expect my 'gunzip' container to run, since the listed executable can be verified to be installed in that container (alpine:latest or bitnami/minideb:latest).

However, I am observing the following in the dashboard logs.

```bash

failed to find name in PATH: exec: "echo $0": executable file not found in $PATH

```

<!-- What should the correct behavior be? -->

The correct behavior should be to locate or understand the shell builtin 'exec'.
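
The error message suggests that the first entry of `command` is looked up as an executable name rather than being parsed by a shell. The sketch below reproduces that behaviour locally with Python's `subprocess` module. It does not use KFP at all, and the helper name `run_argv` is made up for this illustration. It also shows the `sh -c` form suggested in the comments, where the argument following the script becomes `$0`.

```python
import subprocess


def run_argv(argv):
    """Run argv without a shell; return stdout, or None if the executable is missing."""
    try:
        done = subprocess.run(argv, capture_output=True, text=True, check=False)
    except FileNotFoundError:
        # The first element is treated as the executable name, spaces and all.
        return None
    return done.stdout.strip()


# The whole string "echo $0" is searched for on PATH as one executable name.
broken = run_argv(["echo $0"])

# With `sh -c`, a shell parses the script and the next argument is bound to $0.
fixed = run_argv(["sh", "-c", "echo $0", "hello"])

print(broken, fixed)
```

On a POSIX system the first call finds no executable and the second one echoes the extra argument, which matches the idea that shell syntax such as `$0`, `>` and `||` only works when the command is wrapped in `sh -c`.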

### Materials and reference

<!-- Help us debug this issue by providing resources such as: sample code, background context, or links to references. -->

### Labels

<!-- Please include labels below by uncommenting them to help us better triage issues -->

<!-- /area frontend -->

<!-- /area backend -->

<!-- /area sdk -->

<!-- /area testing -->

<!-- /area samples -->

<!-- /area components -->

---

<!-- Don't delete message below to encourage users to support your issue! -->

Impacted by this bug? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8683/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8683/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8682 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8682/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8682/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8682/events | https://github.com/kubeflow/pipelines/issues/8682 | 1,535,345,011 | I_kwDOB-71UM5bg4Fz | 8,682 | [sdk] KFP v2 pipeline with error | {

"login": "TrevorM15",

"id": 30201274,

"node_id": "MDQ6VXNlcjMwMjAxMjc0",

"avatar_url": "https://avatars.githubusercontent.com/u/30201274?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/TrevorM15",

"html_url": "https://github.com/TrevorM15",

"followers_url": "https://api.github.com/users/TrevorM15/followers",

"following_url": "https://api.github.com/users/TrevorM15/following{/other_user}",

"gists_url": "https://api.github.com/users/TrevorM15/gists{/gist_id}",

"starred_url": "https://api.github.com/users/TrevorM15/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/TrevorM15/subscriptions",

"organizations_url": "https://api.github.com/users/TrevorM15/orgs",

"repos_url": "https://api.github.com/users/TrevorM15/repos",

"events_url": "https://api.github.com/users/TrevorM15/events{/privacy}",

"received_events_url": "https://api.github.com/users/TrevorM15/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 2157634204,

"node_id": "MDU6TGFiZWwyMTU3NjM0MjA0",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/stale",

"name": "lifecycle/stale",

"color": "bbbbbb",

"default": false,

"description": "The issue / pull request is stale, any activities remove this label."

}

] | closed | false | {

"login": "connor-mccarthy",

"id": 55268212,

"node_id": "MDQ6VXNlcjU1MjY4MjEy",

"avatar_url": "https://avatars.githubusercontent.com/u/55268212?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/connor-mccarthy",

"html_url": "https://github.com/connor-mccarthy",

"followers_url": "https://api.github.com/users/connor-mccarthy/followers",

"following_url": "https://api.github.com/users/connor-mccarthy/following{/other_user}",

"gists_url": "https://api.github.com/users/connor-mccarthy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/connor-mccarthy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/connor-mccarthy/subscriptions",

"organizations_url": "https://api.github.com/users/connor-mccarthy/orgs",

"repos_url": "https://api.github.com/users/connor-mccarthy/repos",

"events_url": "https://api.github.com/users/connor-mccarthy/events{/privacy}",

"received_events_url": "https://api.github.com/users/connor-mccarthy/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "connor-mccarthy",

"id": 55268212,

"node_id": "MDQ6VXNlcjU1MjY4MjEy",

"avatar_url": "https://avatars.githubusercontent.com/u/55268212?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/connor-mccarthy",

"html_url": "https://github.com/connor-mccarthy",

"followers_url": "https://api.github.com/users/connor-mccarthy/followers",

"following_url": "https://api.github.com/users/connor-mccarthy/following{/other_user}",

"gists_url": "https://api.github.com/users/connor-mccarthy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/connor-mccarthy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/connor-mccarthy/subscriptions",

"organizations_url": "https://api.github.com/users/connor-mccarthy/orgs",

"repos_url": "https://api.github.com/users/connor-mccarthy/repos",

"events_url": "https://api.github.com/users/connor-mccarthy/events{/privacy}",

"received_events_url": "https://api.github.com/users/connor-mccarthy/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"The Error comes out because you failed to access to kfp client.\r\nTry like this\r\n```\r\nimport requests\r\n\r\nUSERNAME = \"user@example.com\"\r\nPASSWORD = \"12341234\" \r\nNAMESPACE = \"kubeflow-user-example-com\"\r\nHOST = \"http://127.0.0.1:8080\" # your istio-ingressgateway pod ip:8080\r\n\r\nsession = requests.Session()\r\nresponse = session.get(HOST)\r\n\r\nheaders = {\r\n \"Content-Type\": \"application/x-www-form-urlencoded\",\r\n}\r\n\r\ndata = {\"login\": \"user@example.com\", \"password\": \"12341234\"}\r\nsession.post(response.url, headers=headers, data=data)\r\nsession_cookie = session.cookies.get_dict()[\"authservice_session\"]\r\n\r\nclient = kfp.Client(\r\n host=f\"{HOST}/pipeline\",\r\n namespace=f\"{NAMESPACE}\",\r\n cookies=f\"authservice_session={session_cookie}\",\r\n)\r\n```",

"We have our namespace set up to not require any arguments in the Client method. My pipeline worked in kfp v1.8, but I needed a newer version of the SDK to get a newer Kubernetes client version. ",

"oic did you solve the error by upgrading SDK version?",

"No, upgrading to kfp v2.0.0 is what's causing the issue. It appears from the diffs between 1.8.18 and 2.0.0b10 that all the changes were in the SDK, not in the kfp backend, so not sure what's causing these issues.",

"well for me it works fine\r\n<img width=\"862\" alt=\"image\" src=\"https://user-images.githubusercontent.com/63439911/212796975-5914fb18-09c7-4a74-89aa-ed6e7c3c1efd.png\">\r\n\r\n",

"@kimkihoon0515 @TrevorM15, if you `pip install kfp==2.0.0b9 kfp-pipeline-spec==0.1.16` (and pin these versions in your SDK environment) is the error resolved?",

"@connor-mccarthy yes. There's no error on kfp 2.0 beta.",

"@connor-mccarthy please ignore @kimkihoon0515, he does not speak for me. I did `pip install kfp==2.0.0b9 kfp-pipeline-spec==0.1.16`, but still got the error `Reason: Bad Request\r\nHTTP response headers: HTTPHeaderDict({'content-type': 'application/json', 'date': 'Fri, 20 Jan 2023 15:46:05 GMT', 'content-length': '548', 'x-envoy-upstream-service-time': '1', 'server': 'envoy'})\r\nHTTP response body: {\"error\":\"Validate create run request failed.: InvalidInputError: Invalid IR spec format.: invalid character 'c' looking for beginning of value\",\"code\":3,\"message\":\"Validate create run request failed.: InvalidInputError: Invalid IR spec format.: invalid character 'c' looking for beginning of value\",\"details\":[{\"@type\":\"type.googleapis.com/api.Error\",\"error_message\":\"Invalid IR spec format.\",\"error_details\":\"Validate create run request failed.: InvalidInputError: Invalid IR spec format.: invalid character 'c' looking for beginning of value\"}]}` after compiling and attempting to create a run from the pipeline. ",

"@TrevorM15, I was able to reproduce this with `kfp>=2.0.0b10`, but it resolved when I downgraded to `kfp==2.0.0b9`. This is because `kfp==2.0.0b10` (this release was yanked today, replaced by b11) began writing the `isOptional` field with https://github.com/kubeflow/pipelines/pull/8612 and https://github.com/kubeflow/pipelines/pull/8623 ([release notes](https://github.com/kubeflow/pipelines/blob/master/sdk/RELEASE.md#bug-fixes-and-other-changes-2)).\r\n\r\nCan you double check that this error is not resolved when you use 2.0.0b9? This `isOptional` field should not be present in your compiled YAML. If you still have the error, can you please run `pip freeze` and share the output here?",

"Edit: This is the solution for #8734, not this bug.\r\n\r\nIn the short term, this is resolved by downgrading to `kfp==2.0.0b9` (and recompiling your pipeline with this version). In the long term, this will be resolved for `kfp>=2.0.0b10` by the upcoming KFP BE beta release.\r\n\r\ncc @chensun @gkcalat @Linchin ",

"@connor-mccarthy the issue still persists for me when downgrading to 2.0.0b9",

"@TrevorM15, can you confirm that the `isOptional` field is not present in your compiled YAML? Can you run `pip freeze and share the output`?",

"`pip freeze` output:\r\n```python\r\nabsl-py==0.11.0\r\nadal==1.2.7\r\nanyio==3.1.0\r\nargon2-cffi==20.1.0\r\nasync-generator==1.10\r\nattrs==21.2.0\r\navro==1.11.0\r\nazure-common==1.1.28\r\nazure-storage-blob==2.1.0\r\nazure-storage-common==2.1.0\r\nBabel==2.9.1\r\nbackcall==0.2.0\r\nbleach==3.3.0\r\nblis==0.7.6\r\nbokeh==2.3.2\r\nbrotlipy==0.7.0\r\ncachetools==4.2.4\r\ncatalogue==2.0.6\r\ncertifi==2021.5.30\r\ncffi @ file:///home/conda/feedstock_root/build_artifacts/cffi_1613413861439/work\r\nchardet @ file:///home/conda/feedstock_root/build_artifacts/chardet_1610093490430/work\r\nclick==7.1.2\r\ncloudevents==1.2.0\r\ncloudpickle==2.2.1\r\ncolorama==0.4.4\r\nconda==4.10.1\r\nconda-package-handling @ file:///home/conda/feedstock_root/build_artifacts/conda-package-handling_1618231394280/work\r\nconfigparser==5.2.0\r\ncryptography @ file:///home/conda/feedstock_root/build_artifacts/cryptography_1616851476134/work\r\ncycler==0.11.0\r\ncymem==2.0.6\r\ndecorator==5.0.9\r\ndefusedxml==0.7.1\r\nDeprecated==1.2.13\r\ndeprecation==2.1.0\r\ndill==0.3.4\r\ndocstring-parser==0.13\r\nentrypoints==0.3\r\nfastai==2.4\r\nfastcore==1.3.29\r\nfastprogress==1.0.2\r\nfire==0.4.0\r\ngitdb==4.0.9\r\nGitPython==3.1.27\r\ngoogle-api-core==2.7.1\r\ngoogle-api-python-client==1.12.10\r\ngoogle-auth==1.35.0\r\ngoogle-auth-httplib2==0.1.0\r\ngoogle-cloud-core==2.3.2\r\ngoogle-cloud-storage==2.7.0\r\ngoogle-crc32c==1.3.0\r\ngoogle-resumable-media==2.3.2\r\ngoogleapis-common-protos==1.55.0\r\nhttplib2==0.20.4\r\nidna @ 
file:///home/conda/feedstock_root/build_artifacts/idna_1593328102638/work\r\nimageio==2.16.1\r\nipykernel==5.5.5\r\nipympl==0.7.0\r\nipython==7.24.1\r\nipython-genutils==0.2.0\r\nipywidgets==7.6.3\r\njedi==0.18.0\r\nJinja2==3.0.1\r\njoblib==1.1.0\r\njson5==0.9.5\r\njsonschema==3.2.0\r\njupyter-client==6.1.12\r\njupyter-core==4.7.1\r\njupyter-server==1.8.0\r\njupyter-server-mathjax==0.2.5\r\njupyterlab==3.0.16\r\njupyterlab-git==0.30.1\r\njupyterlab-pygments==0.1.2\r\njupyterlab-server==2.6.0\r\njupyterlab-widgets==1.0.2\r\nkfp==2.0.0b9\r\nkfp-pipeline-spec==0.1.16\r\nkfp-server-api==2.0.0a6\r\nkfserving==0.5.1\r\nkiwisolver==1.3.2\r\nkubernetes==12.0.1\r\nlangcodes==3.3.0\r\nMarkupSafe==2.0.1\r\nmatplotlib==3.4.2\r\nmatplotlib-inline==0.1.2\r\nminio==6.0.2\r\nmistune==0.8.4\r\nmurmurhash==1.0.6\r\nnbclassic==0.3.1\r\nnbclient==0.5.3\r\nnbconvert==6.0.7\r\nnbdime==3.1.1\r\nnbformat==5.1.3\r\nnest-asyncio==1.5.1\r\nnetworkx==2.7.1\r\nnotebook==6.4.0\r\nnumpy==1.20.3\r\noauthlib==3.2.0\r\npackaging==20.9\r\npandas==1.2.4\r\npandocfilters==1.4.3\r\nparso==0.8.2\r\npathy==0.6.1\r\npexpect==4.8.0\r\npickleshare==0.7.5\r\nPillow==9.0.1\r\npreshed==3.0.6\r\nprometheus-client==0.11.0\r\nprompt-toolkit==3.0.18\r\nprotobuf==3.19.4\r\nptyprocess==0.7.0\r\npyasn1==0.4.8\r\npyasn1-modules==0.2.8\r\npycosat @ file:///home/conda/feedstock_root/build_artifacts/pycosat_1610094800877/work\r\npycparser @ file:///home/conda/feedstock_root/build_artifacts/pycparser_1593275161868/work\r\npydantic==1.8.2\r\nPygments==2.9.0\r\nPyJWT==2.3.0\r\npyOpenSSL @ file:///home/conda/feedstock_root/build_artifacts/pyopenssl_1608055815057/work\r\npyparsing==2.4.7\r\npyrsistent==0.17.3\r\nPySocks @ file:///home/conda/feedstock_root/build_artifacts/pysocks_1610291447907/work\r\npython-dateutil==2.8.1\r\npytz==2021.1\r\nPyWavelets==1.2.0\r\nPyYAML==5.4.1\r\npyzmq==22.1.0\r\nrequests @ 
file:///home/conda/feedstock_root/build_artifacts/requests_1608156231189/work\r\nrequests-oauthlib==1.3.1\r\nrequests-toolbelt==0.9.1\r\nrsa==4.8\r\nruamel-yaml-conda @ file:///home/conda/feedstock_root/build_artifacts/ruamel_yaml_1611943339799/work\r\nscikit-image==0.18.1\r\nscikit-learn==0.24.2\r\nscipy==1.7.0\r\nseaborn==0.11.1\r\nSend2Trash==1.5.0\r\nsix @ file:///home/conda/feedstock_root/build_artifacts/six_1620240208055/work\r\nsmart-open==5.2.1\r\nsmmap==5.0.0\r\nsniffio==1.2.0\r\nspacy==3.2.3\r\nspacy-legacy==3.0.9\r\nspacy-loggers==1.0.1\r\nsrsly==2.4.2\r\nstrip-hints==0.1.10\r\ntable-logger==0.3.6\r\ntabulate==0.8.9\r\ntermcolor==1.1.0\r\nterminado==0.10.0\r\ntestpath==0.5.0\r\nthinc==8.0.13\r\nthreadpoolctl==3.1.0\r\ntifffile==2022.2.9\r\ntorch==1.8.1+cpu\r\ntorchaudio==0.8.1\r\ntorchvision==0.9.1+cpu\r\ntornado==6.1\r\ntqdm @ file:///home/conda/feedstock_root/build_artifacts/tqdm_1621890532941/work\r\ntraitlets==5.0.5\r\ntyper==0.4.0\r\ntyping-extensions==3.10.0.0\r\nuritemplate==3.0.1\r\nurllib3 @ file:///home/conda/feedstock_root/build_artifacts/urllib3_1622056799390/work\r\nwasabi==0.9.0\r\nwcwidth==0.2.5\r\nwebencodings==0.5.1\r\nwebsocket-client==1.0.1\r\nwidgetsnbextension==3.5.2\r\nwrapt==1.13.3\r\nxgboost==1.4.2\r\n```\r\n\r\nThe yaml:\r\n```yaml\r\n# PIPELINE DEFINITION\r\n# Name: addition-pipeline\r\n# Inputs:\r\n# a: int [Default: 1.0]\r\n# b: int [Default: 2.0]\r\n# c: int [Default: 10.0]\r\ncomponents:\r\n comp-addition-component:\r\n executorLabel: exec-addition-component\r\n inputDefinitions:\r\n parameters:\r\n num1:\r\n parameterType: NUMBER_INTEGER\r\n num2:\r\n parameterType: NUMBER_INTEGER\r\n outputDefinitions:\r\n parameters:\r\n Output:\r\n parameterType: NUMBER_INTEGER\r\n comp-addition-component-2:\r\n executorLabel: exec-addition-component-2\r\n inputDefinitions:\r\n parameters:\r\n num1:\r\n parameterType: NUMBER_INTEGER\r\n num2:\r\n parameterType: NUMBER_INTEGER\r\n outputDefinitions:\r\n parameters:\r\n Output:\r\n 
parameterType: NUMBER_INTEGER\r\ndeploymentSpec:\r\n executors:\r\n exec-addition-component:\r\n container:\r\n args:\r\n - --executor_input\r\n - '{{$}}'\r\n - --function_to_execute\r\n - addition_component\r\n command:\r\n - sh\r\n - -c\r\n - \"\\nif ! [ -x \\\"$(command -v pip)\\\" ]; then\\n python3 -m ensurepip ||\\\r\n \\ python3 -m ensurepip --user || apt-get install python3-pip\\nfi\\n\\nPIP_DISABLE_PIP_VERSION_CHECK=1\\\r\n \\ python3 -m pip install --quiet --no-warn-script-location 'kfp==2.0.0-beta.9'\\\r\n \\ && \\\"$0\\\" \\\"$@\\\"\\n\"\r\n - sh\r\n - -ec\r\n - 'program_path=$(mktemp -d)\r\n\r\n printf \"%s\" \"$0\" > \"$program_path/ephemeral_component.py\"\r\n\r\n python3 -m kfp.components.executor_main --component_module_path \"$program_path/ephemeral_component.py\" \"$@\"\r\n\r\n '\r\n - \"\\nimport kfp\\nfrom kfp import dsl\\nfrom kfp.dsl import *\\nfrom typing import\\\r\n \\ *\\n\\ndef addition_component(num1: int, num2: int) -> int:\\n return num1\\\r\n \\ + num2\\n\\n\"\r\n image: python:3.7\r\n exec-addition-component-2:\r\n container:\r\n args:\r\n - --executor_input\r\n - '{{$}}'\r\n - --function_to_execute\r\n - addition_component\r\n command:\r\n - sh\r\n - -c\r\n - \"\\nif ! 
[ -x \\\"$(command -v pip)\\\" ]; then\\n python3 -m ensurepip ||\\\r\n \\ python3 -m ensurepip --user || apt-get install python3-pip\\nfi\\n\\nPIP_DISABLE_PIP_VERSION_CHECK=1\\\r\n \\ python3 -m pip install --quiet --no-warn-script-location 'kfp==2.0.0-beta.9'\\\r\n \\ && \\\"$0\\\" \\\"$@\\\"\\n\"\r\n - sh\r\n - -ec\r\n - 'program_path=$(mktemp -d)\r\n\r\n printf \"%s\" \"$0\" > \"$program_path/ephemeral_component.py\"\r\n\r\n python3 -m kfp.components.executor_main --component_module_path \"$program_path/ephemeral_component.py\" \"$@\"\r\n\r\n '\r\n - \"\\nimport kfp\\nfrom kfp import dsl\\nfrom kfp.dsl import *\\nfrom typing import\\\r\n \\ *\\n\\ndef addition_component(num1: int, num2: int) -> int:\\n return num1\\\r\n \\ + num2\\n\\n\"\r\n image: python:3.7\r\npipelineInfo:\r\n name: addition-pipeline\r\nroot:\r\n dag:\r\n tasks:\r\n addition-component:\r\n cachingOptions:\r\n enableCache: true\r\n componentRef:\r\n name: comp-addition-component\r\n inputs:\r\n parameters:\r\n num1:\r\n componentInputParameter: a\r\n num2:\r\n componentInputParameter: b\r\n taskInfo:\r\n name: addition-component\r\n addition-component-2:\r\n cachingOptions:\r\n enableCache: true\r\n componentRef:\r\n name: comp-addition-component-2\r\n dependentTasks:\r\n - addition-component\r\n inputs:\r\n parameters:\r\n num1:\r\n taskOutputParameter:\r\n outputParameterKey: Output\r\n producerTask: addition-component\r\n num2:\r\n componentInputParameter: c\r\n taskInfo:\r\n name: addition-component-2\r\n inputDefinitions:\r\n parameters:\r\n a:\r\n defaultValue: 1.0\r\n parameterType: NUMBER_INTEGER\r\n b:\r\n defaultValue: 2.0\r\n parameterType: NUMBER_INTEGER\r\n c:\r\n defaultValue: 10.0\r\n parameterType: NUMBER_INTEGER\r\nschemaVersion: 2.1.0\r\nsdkVersion: kfp-2.0.0-beta.9\r\n```",

"I am unable to reproduce this on later versions of the KFP BE. 1.5 is a fairly old version of the KFP BE. I suggest you upgrade the BE and see if the issue continues.\r\n\r\nYou can find some [upgrade instructions here](https://googlecloudplatform.github.io/kubeflow-gke-docs/docs/pipelines/upgrade/) (specific to Google Cloud).",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions."

] | "2023-01-16T18:32:28" | "2023-08-28T15:35:22" | "2023-08-28T15:35:21" | NONE | null | ### Environment

* KFP version:

Kubeflow 1.5

* KFP SDK version:

KFP v2.0.0b10

* All dependencies version:

kfp 2.0.0b10

kfp-pipeline-spec 0.1.17

kfp-server-api 2.0.0a6

### Steps to reproduce

I tried upgrading from KFP 1.8 to 2.0 to get support for a higher version of the Kubernetes SDK, and copied the example from [here](https://www.kubeflow.org/docs/components/pipelines/v2/compile-a-pipeline/)

```python3
import kfp
from kfp import compiler
from kfp import dsl

@dsl.component
def addition_component(num1: int, num2: int) -> int:
    return num1 + num2

@dsl.pipeline(name='addition-pipeline')
def my_pipeline(a: int=1, b: int=2, c: int = 10):
    add_task_1 = addition_component(num1=a, num2=b)
    add_task_2 = addition_component(num1=add_task_1.output, num2=c)

cmplr = compiler.Compiler()
cmplr.compile(my_pipeline, package_path='my_pipeline.yaml')

client=kfp.Client()
client.create_run_from_pipeline_package('my_pipeline.yaml',arguments={"a":1,"b":2})
```

When I give it arguments I get the error `ApiException: (400)

Reason: Bad Request

HTTP response headers: HTTPHeaderDict({'content-type': 'application/json', 'date': 'Mon, 16 Jan 2023 18:20:24 GMT', 'content-length': '190', 'x-envoy-upstream-service-time': '0', 'server': 'envoy'})

HTTP response body: {"error":"json: cannot unmarshal number into Go value of type map[string]json.RawMessage","code":3,"message":"json: cannot unmarshal number into Go value of type map[string]json.RawMessage"}`

And without arguments I get the error `ApiException: (400)

Reason: Bad Request

HTTP response headers: HTTPHeaderDict({'content-type': 'application/json', 'date': 'Mon, 16 Jan 2023 18:30:38 GMT', 'content-length': '548', 'x-envoy-upstream-service-time': '1', 'server': 'envoy'})

HTTP response body: {"error":"Validate create run request failed.: InvalidInputError: Invalid IR spec format.: invalid character 'c' looking for beginning of value","code":3,"message":"Validate create run request failed.: InvalidInputError: Invalid IR spec format.: invalid character 'c' looking for beginning of value","details":[{"@type":"type.googleapis.com/api.Error","error_message":"Invalid IR spec format.","error_details":"Validate create run request failed.: InvalidInputError: Invalid IR spec format.: invalid character 'c' looking for beginning of value"}]}`
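
Both response bodies above are plain JSON, so the relevant message can be pulled out programmatically rather than read from the raw dump. A minimal sketch, assuming only the `code` and `message` keys visible in the bodies above; the helper name `summarize_api_error` is hypothetical and not part of the KFP SDK:

```python
import json


def summarize_api_error(body: str) -> str:
    """Return a one-line summary of a JSON error body like the ones shown above."""
    payload = json.loads(body)
    # "code" and "message" are the keys visible in the response bodies above.
    return f"code={payload.get('code')}: {payload.get('message')}"


# Body copied from the first error above.
first_body = (
    '{"error":"json: cannot unmarshal number into Go value of type '
    'map[string]json.RawMessage","code":3,"message":"json: cannot unmarshal '
    'number into Go value of type map[string]json.RawMessage"}'
)

print(summarize_api_error(first_body))
```

When the error is caught as an exception, the body string would presumably come from the exception object (for example a `body` attribute on the generated client's `ApiException`); that attribute name is an assumption here and should be checked against the installed `kfp-server-api` version.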

### Expected result

Pipeline compiles and runs.
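
A reply in this thread asks whether the `isOptional` field is present in the compiled YAML. A minimal sketch of that check, assuming the YAML has already been loaded into nested dicts and lists (for example with a YAML parser); the helper `find_key_paths` and the second sample spec are hypothetical and only illustrate the search:

```python
from typing import Any, List, Tuple


def find_key_paths(node: Any, key: str, path: Tuple = ()) -> List[Tuple]:
    """Return the path to every occurrence of `key` in nested dicts and lists."""
    hits: List[Tuple] = []
    if isinstance(node, dict):
        for k, v in node.items():
            if k == key:
                hits.append(path + (k,))
            hits.extend(find_key_paths(v, key, path + (k,)))
    elif isinstance(node, list):
        for i, v in enumerate(node):
            hits.extend(find_key_paths(v, key, path + (i,)))
    return hits


# Fragment modeled on the compiled YAML shared in this thread (kfp 2.0.0b9).
spec_b9 = {
    "components": {
        "comp-addition-component": {
            "inputDefinitions": {
                "parameters": {"num1": {"parameterType": "NUMBER_INTEGER"}}
            }
        }
    }
}

# Hypothetical fragment with the field added, to show what a hit looks like.
spec_with_flag = {
    "components": {
        "comp-addition-component": {
            "inputDefinitions": {
                "parameters": {
                    "num1": {"parameterType": "NUMBER_INTEGER", "isOptional": True}
                }
            }
        }
    }
}

print(find_key_paths(spec_b9, "isOptional"))
print(find_key_paths(spec_with_flag, "isOptional"))
```

An empty list means the field does not occur anywhere in the loaded spec.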

Impacted by this bug? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8682/reactions",

"total_count": 5,

"+1": 5,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8682/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8680 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8680/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8680/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8680/events | https://github.com/kubeflow/pipelines/issues/8680 | 1,534,951,739 | I_kwDOB-71UM5bfYE7 | 8,680 | What is Difference of pipelines between V1 and V2??? | {

"login": "kimkihoon0515",

"id": 63439911,

"node_id": "MDQ6VXNlcjYzNDM5OTEx",

"avatar_url": "https://avatars.githubusercontent.com/u/63439911?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/kimkihoon0515",

"html_url": "https://github.com/kimkihoon0515",

"followers_url": "https://api.github.com/users/kimkihoon0515/followers",

"following_url": "https://api.github.com/users/kimkihoon0515/following{/other_user}",

"gists_url": "https://api.github.com/users/kimkihoon0515/gists{/gist_id}",

"starred_url": "https://api.github.com/users/kimkihoon0515/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/kimkihoon0515/subscriptions",

"organizations_url": "https://api.github.com/users/kimkihoon0515/orgs",

"repos_url": "https://api.github.com/users/kimkihoon0515/repos",

"events_url": "https://api.github.com/users/kimkihoon0515/events{/privacy}",

"received_events_url": "https://api.github.com/users/kimkihoon0515/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2157634204,

"node_id": "MDU6TGFiZWwyMTU3NjM0MjA0",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/stale",

"name": "lifecycle/stale",

"color": "bbbbbb",

"default": false,

"description": "The issue / pull request is stale, any activities remove this label."

}

] | open | false | {

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"Hi @kimkihoon0515 \r\n\r\n> Does V2 still supports argo workflow or not??\r\n\r\nKFP v2 still supports argo workflow.\r\n\r\n> And for now V1 compiler compiles pipeline into yaml file but in V2 the compiler only supports json file format.\r\n> Are you guys planning to support a function that supports to be compiled as a yaml file??\r\n\r\nIn the master branch, the KFP SDK (currently at 2.0-beta) supports compiling to YAML as well. However, it's worth noting that what matters isn't the file format itself but the content format. In KFP v1, the YAML is an Argo Workflow CRD, while in KFP v2, the JSON/YAML is a PipelineSpec, and this leads to your third question.\r\n\r\n> Plus what is pipeline Spec?\r\n\r\nPipeline spec is the spec definition described via [this proto message](https://github.com/kubeflow/pipelines/blob/d1f1ee9f2bbd09df7ea6ab51b21f07ba5f86c871/api/v2alpha1/pipeline_spec.proto#L50). It's meant to be an Intermediate Representation (IR) of a pipeline. KFP v2 creates this abstraction layer on top of Argo; the new IR spec is platform-agnostic. The goal is to support running the same pipeline across multiple platforms/engines: KFP, KFP on tekton, Vertex Pipelines, etc.\r\n\r\nThis [\"Understanding KFP v2\"](https://docs.google.com/presentation/d/1HzMwtI2QN67xQp2lSxmuXhitEsukLB7mvZx4KAPub3A/edit#slide=id.gb4a3fac3a8_7_1911) deck we presented at the Kubeflow Pipelines community meeting may help you get a better view of KFP v2. You need to join the [kubeflow-discuss](https://groups.google.com/g/kubeflow-discuss) Google group to gain access to the docs we've shared with the community. \r\n\r\n\r\n",

"@chensun I saw the roadmap on YT. So you guys gonna stop releasing major version of v1? ",

"> @chensun I saw the roadmap on YT. So you guys gonna stop releasing major version of v1?\r\n\r\nThat is correct. We will continue patching v1 (1.8) for security and vulnerability fixes, but there's no planned feature work/release for v1. ",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions."

] | "2023-01-16T13:34:09" | "2023-08-28T07:42:20" | null | NONE | null | Does V2 still supports argo workflow or not??

And for now V1 compiler compiles pipeline into yaml file but in V2 the compiler only supports json file format.

Are you guys planning to support a function that supports to be compiled as a yaml file??

Plus what is pipeline Spec? | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8680/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8680/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8676 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8676/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8676/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8676/events | https://github.com/kubeflow/pipelines/issues/8676 | 1,533,811,531 | I_kwDOB-71UM5bbBtL | 8,676 | [feature] conda-forge feedstock github repository for google-cloud-pipeline-components | {

"login": "tarrade",

"id": 12021701,

"node_id": "MDQ6VXNlcjEyMDIxNzAx",

"avatar_url": "https://avatars.githubusercontent.com/u/12021701?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/tarrade",

"html_url": "https://github.com/tarrade",

"followers_url": "https://api.github.com/users/tarrade/followers",

"following_url": "https://api.github.com/users/tarrade/following{/other_user}",

"gists_url": "https://api.github.com/users/tarrade/gists{/gist_id}",

"starred_url": "https://api.github.com/users/tarrade/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/tarrade/subscriptions",

"organizations_url": "https://api.github.com/users/tarrade/orgs",

"repos_url": "https://api.github.com/users/tarrade/repos",

"events_url": "https://api.github.com/users/tarrade/events{/privacy}",

"received_events_url": "https://api.github.com/users/tarrade/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

},

{

"id": 2157634204,

"node_id": "MDU6TGFiZWwyMTU3NjM0MjA0",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/stale",

"name": "lifecycle/stale",

"color": "bbbbbb",

"default": false,

"description": "The issue / pull request is stale, any activities remove this label."

}

] | open | false | {

"login": "IronPan",

"id": 2348602,

"node_id": "MDQ6VXNlcjIzNDg2MDI=",

"avatar_url": "https://avatars.githubusercontent.com/u/2348602?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/IronPan",

"html_url": "https://github.com/IronPan",

"followers_url": "https://api.github.com/users/IronPan/followers",

"following_url": "https://api.github.com/users/IronPan/following{/other_user}",

"gists_url": "https://api.github.com/users/IronPan/gists{/gist_id}",

"starred_url": "https://api.github.com/users/IronPan/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/IronPan/subscriptions",

"organizations_url": "https://api.github.com/users/IronPan/orgs",

"repos_url": "https://api.github.com/users/IronPan/repos",

"events_url": "https://api.github.com/users/IronPan/events{/privacy}",

"received_events_url": "https://api.github.com/users/IronPan/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "IronPan",

"id": 2348602,

"node_id": "MDQ6VXNlcjIzNDg2MDI=",

"avatar_url": "https://avatars.githubusercontent.com/u/2348602?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/IronPan",

"html_url": "https://github.com/IronPan",

"followers_url": "https://api.github.com/users/IronPan/followers",

"following_url": "https://api.github.com/users/IronPan/following{/other_user}",

"gists_url": "https://api.github.com/users/IronPan/gists{/gist_id}",

"starred_url": "https://api.github.com/users/IronPan/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/IronPan/subscriptions",

"organizations_url": "https://api.github.com/users/IronPan/orgs",

"repos_url": "https://api.github.com/users/IronPan/repos",

"events_url": "https://api.github.com/users/IronPan/events{/privacy}",

"received_events_url": "https://api.github.com/users/IronPan/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"Happy to help or to give more context on this FR",

"just some context, there are already 62 google-cloud python packages available in conda-forge channel\r\n\r\nhttps://github.com/orgs/conda-forge/repositories?q=google-cloud-&type=all&language=&sort=\r\n**62 results for all repositories matching google-cloud- sorted by last updated**\r\n\r\nSome example of feedstock related to kfp:\r\nhttps://github.com/conda-forge/kfp-pipeline-spec-feedstock\r\nhttps://github.com/conda-forge/kfp-feedstock\r\n\r\nSome example from gcp python sdk:\r\nhttps://github.com/conda-forge/google-cloud-logging-feedstock\r\nhttps://github.com/conda-forge/google-cloud-aiplatform-feedstock\r\n\r\nstep by step:\r\nhttps://conda-forge.org/docs/maintainer/adding_pkgs.html\r\n\r\n",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions."

] | "2023-01-15T13:17:57" | "2023-08-28T07:42:22" | null | NONE | null | ### Feature Area

<!-- Uncomment the labels below which are relevant to this feature: -->

<!-- /area frontend -->

<!-- /area backend -->

<!-- /area sdk -->

<!-- /area samples -->

/area components

### What feature would you like to see?

<!-- Provide a description of this feature and the user experience. -->

I would like to have the google-cloud-pipeline-components Python package in the conda-forge channel.

There is no conda-forge feedstock available yet [link](https://github.com/orgs/conda-forge/repositories?q=google-cloud-&type=all&language=&sort=)

### What is the use case or pain point?

<!-- It helps us understand the benefit of this feature for your use case. -->

Mamba is a much better Python package manager than pip/PyPI, and it works with, for example, the conda-forge channel. Most open source libraries and GCP Python libraries (Cloud Storage, Logging, hyperparameter, Vertex AI, ...) exist in the conda-forge channel, as does the kfp Python SDK [link](https://anaconda.org/conda-forge/kfp) with the following conda-forge feedstock [link](https://github.com/conda-forge/kfp-feedstock).

When a conda-forge feedstock is created for google-cloud-pipeline-components, I will be happy to open PRs for new versions as I did for the kfp SDK. But since this is a google/gcp package, I guess the conda-forge feedstock repository needs to be created by google/gcp, which should also approve the PRs, to prevent a malicious actor from using it, no? Happy to help, but I have never created a conda-forge feedstock from scratch.

### Is there a workaround currently?

<!-- Without this feature, how do you accomplish your task today? -->

The package exists on PyPI [link](https://pypi.org/project/google-cloud-pipeline-components/)

---

<!-- Don't delete message below to encourage users to support your feature request! -->

Love this idea? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8676/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8676/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8675 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8675/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8675/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8675/events | https://github.com/kubeflow/pipelines/issues/8675 | 1,533,198,027 | I_kwDOB-71UM5bYr7L | 8,675 | [bug] <KFP v2 Metrics Doesn't show up> | {

"login": "kimkihoon0515",

"id": 63439911,

"node_id": "MDQ6VXNlcjYzNDM5OTEx",

"avatar_url": "https://avatars.githubusercontent.com/u/63439911?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/kimkihoon0515",

"html_url": "https://github.com/kimkihoon0515",

"followers_url": "https://api.github.com/users/kimkihoon0515/followers",

"following_url": "https://api.github.com/users/kimkihoon0515/following{/other_user}",

"gists_url": "https://api.github.com/users/kimkihoon0515/gists{/gist_id}",

"starred_url": "https://api.github.com/users/kimkihoon0515/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/kimkihoon0515/subscriptions",

"organizations_url": "https://api.github.com/users/kimkihoon0515/orgs",

"repos_url": "https://api.github.com/users/kimkihoon0515/repos",

"events_url": "https://api.github.com/users/kimkihoon0515/events{/privacy}",

"received_events_url": "https://api.github.com/users/kimkihoon0515/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

}

] | closed | false | null | [] | null | [

"Hello @kimkihoon0515 , can you deploy Kubeflow Pipelines 2.0.0-alpha versions like this one? https://github.com/kubeflow/pipelines/releases/tag/2.0.0-alpha.6.\r\n\r\nAlso, please use KFP SDK version which is 2.0.0-beta like this one: https://github.com/kubeflow/pipelines/releases/tag/2.0.0b10",

"@zijianjoy Hey Thx for your help. I tried kfp version 2.0.0b2 and i got the screen that i wanted :) "

] | "2023-01-14T09:42:34" | "2023-01-19T23:40:33" | "2023-01-19T23:40:33" | NONE | null | ### Environment

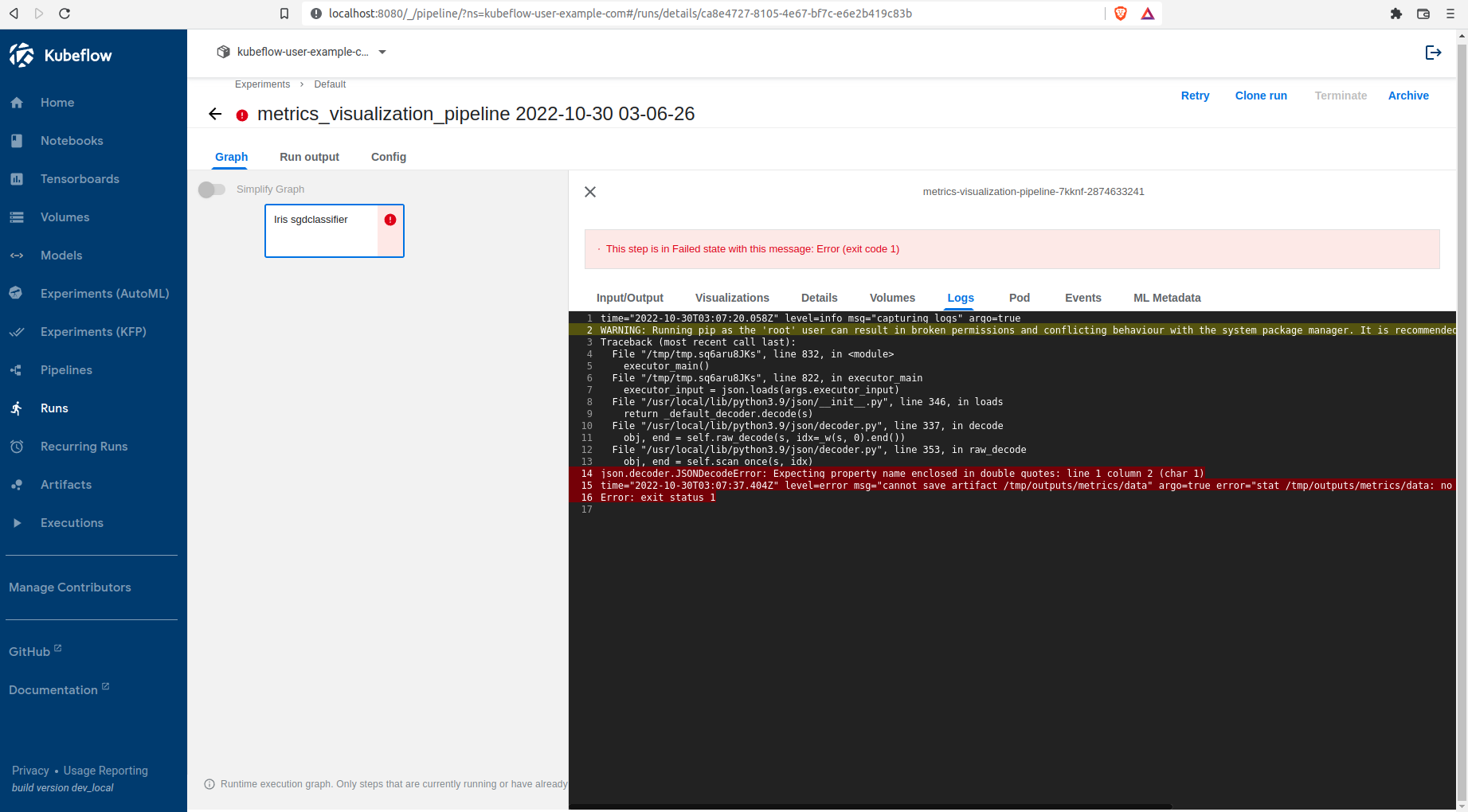

<!-- Please fill in those that seem relevant. -->

* How do you deploy Kubeflow Pipelines (KFP)?

I deployed Kubeflow using this command

```

minikube start --driver=docker --kubernetes-version=1.23.14 --memory=12g --cpus=4

```

* KFP version:

1.8.18

### Steps to reproduce

I'm trying to get confusion matrix metric output by using kfp v2 like this

```
@component(
    packages_to_install=['scikit-learn'],
    base_image='python:3.9'
)
def iris_sgdclassifier(test_samples_fraction: float, metrics: Output[ClassificationMetrics]):
    from sklearn import datasets, model_selection
    from sklearn.linear_model import SGDClassifier
    from sklearn.metrics import confusion_matrix

    iris_dataset = datasets.load_iris()
    train_x, test_x, train_y, test_y = model_selection.train_test_split(
        iris_dataset['data'], iris_dataset['target'], test_size=test_samples_fraction)

    classifier = SGDClassifier()
    classifier.fit(train_x, train_y)
    predictions = model_selection.cross_val_predict(classifier, train_x, train_y, cv=3)
    metrics.log_confusion_matrix(
        ['Setosa', 'Versicolour', 'Virginica'],
        confusion_matrix(train_y, predictions).tolist() # .tolist() to convert np array to list.
    )
```
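
To make the second argument of `log_confusion_matrix` concrete, here is a small standalone sketch of the data that `confusion_matrix(...).tolist()` produces: a square list of lists with one row and one column per category. It uses only the standard library; the helper names `confusion_matrix_rows` and `check_matrix_shape` and the sample labels are hypothetical and are not part of KFP or scikit-learn.

```python
from typing import List, Sequence


def confusion_matrix_rows(
    y_true: Sequence[int], y_pred: Sequence[int], n_classes: int
) -> List[List[int]]:
    """Count (true, predicted) pairs; rows are true labels, columns are predictions."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def check_matrix_shape(categories: Sequence[str], matrix: Sequence[Sequence[int]]) -> None:
    """Raise ValueError unless the matrix is square and matches the category list."""
    if len(matrix) != len(categories):
        raise ValueError("matrix needs one row per category")
    for row in matrix:
        if len(row) != len(categories):
            raise ValueError("each row needs one column per category")


categories = ['Setosa', 'Versicolour', 'Virginica']
# Made-up labels for illustration only (0=Setosa, 1=Versicolour, 2=Virginica).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]

matrix = confusion_matrix_rows(y_true, y_pred, len(categories))
check_matrix_shape(categories, matrix)
print(matrix)
```

Checking the shape before logging is just a convenience for catching a mismatch between the category list and the matrix early.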

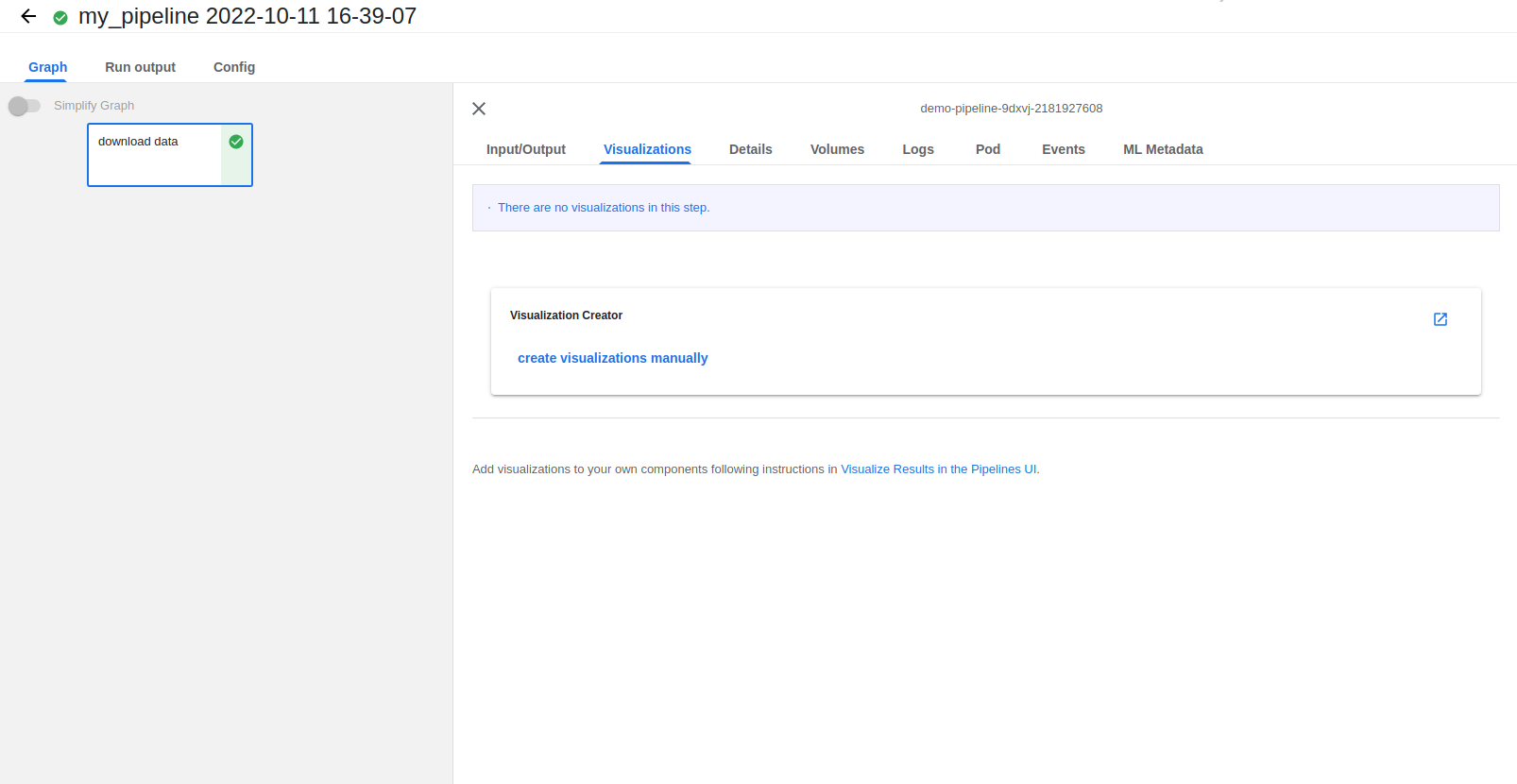

It shows the confusion matrix in the "Visualizations" tab,

but nothing shows up in the "Input/Output" tab.

### Expected result

Actually, I'm trying to compare the confusion matrices of several runs, which is possible in KFP v2.

<img width="749" alt="image" src="https://user-images.githubusercontent.com/63439911/212465776-cbeb5807-4294-4dac-91ba-6c0c52ec750e.png">

the log says

```

launcher.go:560] Local filepath "/minio/mlpipeline/v2/artifacts/pipeline/v2-metrics/58c160ea-4ef3-49c8-a08a-0e1c0347a031/iris-sgdclassifier/metrics" does not exist

```

I think that log appears because there's no output file in the component, but I don't know how to produce one.

### Materials and reference

https://www.kubeflow.org/docs/components/pipelines/v1/sdk-v2/run-comparison/

---

<!-- Don't delete message below to encourage users to support your issue! -->

Impacted by this bug? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8675/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8675/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8672 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8672/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8672/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8672/events | https://github.com/kubeflow/pipelines/issues/8672 | 1,532,822,877 | I_kwDOB-71UM5bXQVd | 8,672 | [sdk] Notebook Executor pipeline component does not cause the pipeline to fail when a notebook execution fails | {

"login": "nturner-maritz",

"id": 105460605,

"node_id": "U_kgDOBkkzfQ",

"avatar_url": "https://avatars.githubusercontent.com/u/105460605?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/nturner-maritz",

"html_url": "https://github.com/nturner-maritz",

"followers_url": "https://api.github.com/users/nturner-maritz/followers",

"following_url": "https://api.github.com/users/nturner-maritz/following{/other_user}",

"gists_url": "https://api.github.com/users/nturner-maritz/gists{/gist_id}",

"starred_url": "https://api.github.com/users/nturner-maritz/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/nturner-maritz/subscriptions",

"organizations_url": "https://api.github.com/users/nturner-maritz/orgs",

"repos_url": "https://api.github.com/users/nturner-maritz/repos",

"events_url": "https://api.github.com/users/nturner-maritz/events{/privacy}",

"received_events_url": "https://api.github.com/users/nturner-maritz/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 2157634204,

"node_id": "MDU6TGFiZWwyMTU3NjM0MjA0",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/stale",

"name": "lifecycle/stale",

"color": "bbbbbb",

"default": false,

"description": "The issue / pull request is stale, any activities remove this label."

}

] | open | false | {

"login": "chongyouquan",

"id": 48691403,

"node_id": "MDQ6VXNlcjQ4NjkxNDAz",

"avatar_url": "https://avatars.githubusercontent.com/u/48691403?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chongyouquan",

"html_url": "https://github.com/chongyouquan",

"followers_url": "https://api.github.com/users/chongyouquan/followers",

"following_url": "https://api.github.com/users/chongyouquan/following{/other_user}",

"gists_url": "https://api.github.com/users/chongyouquan/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chongyouquan/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chongyouquan/subscriptions",

"organizations_url": "https://api.github.com/users/chongyouquan/orgs",

"repos_url": "https://api.github.com/users/chongyouquan/repos",

"events_url": "https://api.github.com/users/chongyouquan/events{/privacy}",

"received_events_url": "https://api.github.com/users/chongyouquan/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "chongyouquan",

"id": 48691403,

"node_id": "MDQ6VXNlcjQ4NjkxNDAz",

"avatar_url": "https://avatars.githubusercontent.com/u/48691403?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chongyouquan",

"html_url": "https://github.com/chongyouquan",

"followers_url": "https://api.github.com/users/chongyouquan/followers",

"following_url": "https://api.github.com/users/chongyouquan/following{/other_user}",

"gists_url": "https://api.github.com/users/chongyouquan/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chongyouquan/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chongyouquan/subscriptions",

"organizations_url": "https://api.github.com/users/chongyouquan/orgs",

"repos_url": "https://api.github.com/users/chongyouquan/repos",

"events_url": "https://api.github.com/users/chongyouquan/events{/privacy}",

"received_events_url": "https://api.github.com/users/chongyouquan/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions."

] | "2023-01-13T19:48:16" | "2023-08-28T07:42:25" | null | NONE | null | ### Environment

* KFP version:

<!-- For more information, see an overview of KFP installation options: https://www.kubeflow.org/docs/pipelines/installation/overview/. -->

* KFP SDK version: 1.8.12

* `google-cloud-pipeline-components 1.0.4`

<!-- Specify the version of Kubeflow Pipelines that you are using. The version number appears in the left side navigation of the user interface.

To find the version number, look at the bottom of the left side navigation in the KFP UI. -->

* All dependencies version:

<!-- Specify the output of the following shell command: $pip list | grep kfp -->

`kfp 1.8.12`

`kfp-pipeline-spec 0.1.14`

`kfp-server-api 1.8.1`

### Steps to reproduce

<!--

Specify how to reproduce the problem.

This may include information such as: a description of the process, code snippets, log output, or screenshots.

--> I encountered this error when using the Kubeflow Pipelines SDK with Vertex AI Pipelines.

Run a pipeline that uses the notebook executor component from Google Cloud Pipeline Components. When the notebook execution raises an error, the pipeline keeps running until it is stopped manually. This is caused by a bug in `executor.py` that results in an infinite loop when the notebook job fails with an error.

### Expected result

<!-- What should the correct behavior be? -->

When the notebook throws an error, the pipeline should stop running and return an error message.

### Materials and Reference

<!-- Help us debug this issue by providing resources such as: sample code, background context, or links to references. -->

components/google-cloud/google_cloud_pipeline_components/container/experimental/notebooks/executor.py

https://github.com/kubeflow/pipelines/blob/master/components/google-cloud/google_cloud_pipeline_components/container/experimental/notebooks/executor.py#L220

By changing Line 220 to the following:

`if job_state.JobState(custom_job_state) in _STATES_COMPLETED:`

I was able to get the expected behavior.

---

<!-- Don't delete message below to encourage users to support your issue! -->

Impacted by this bug? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8672/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8672/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8666 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8666/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8666/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8666/events | https://github.com/kubeflow/pipelines/issues/8666 | 1,528,626,831 | I_kwDOB-71UM5bHP6P | 8,666 | [sdk] Accept higher PyYAML versions | {

"login": "mai-nakagawa",

"id": 2883424,

"node_id": "MDQ6VXNlcjI4ODM0MjQ=",

"avatar_url": "https://avatars.githubusercontent.com/u/2883424?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mai-nakagawa",

"html_url": "https://github.com/mai-nakagawa",

"followers_url": "https://api.github.com/users/mai-nakagawa/followers",

"following_url": "https://api.github.com/users/mai-nakagawa/following{/other_user}",

"gists_url": "https://api.github.com/users/mai-nakagawa/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mai-nakagawa/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mai-nakagawa/subscriptions",

"organizations_url": "https://api.github.com/users/mai-nakagawa/orgs",

"repos_url": "https://api.github.com/users/mai-nakagawa/repos",

"events_url": "https://api.github.com/users/mai-nakagawa/events{/privacy}",

"received_events_url": "https://api.github.com/users/mai-nakagawa/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

}

] | closed | false | {

"login": "connor-mccarthy",

"id": 55268212,

"node_id": "MDQ6VXNlcjU1MjY4MjEy",

"avatar_url": "https://avatars.githubusercontent.com/u/55268212?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/connor-mccarthy",

"html_url": "https://github.com/connor-mccarthy",

"followers_url": "https://api.github.com/users/connor-mccarthy/followers",

"following_url": "https://api.github.com/users/connor-mccarthy/following{/other_user}",

"gists_url": "https://api.github.com/users/connor-mccarthy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/connor-mccarthy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/connor-mccarthy/subscriptions",

"organizations_url": "https://api.github.com/users/connor-mccarthy/orgs",

"repos_url": "https://api.github.com/users/connor-mccarthy/repos",

"events_url": "https://api.github.com/users/connor-mccarthy/events{/privacy}",

"received_events_url": "https://api.github.com/users/connor-mccarthy/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "connor-mccarthy",

"id": 55268212,

"node_id": "MDQ6VXNlcjU1MjY4MjEy",

"avatar_url": "https://avatars.githubusercontent.com/u/55268212?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/connor-mccarthy",

"html_url": "https://github.com/connor-mccarthy",

"followers_url": "https://api.github.com/users/connor-mccarthy/followers",

"following_url": "https://api.github.com/users/connor-mccarthy/following{/other_user}",

"gists_url": "https://api.github.com/users/connor-mccarthy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/connor-mccarthy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/connor-mccarthy/subscriptions",

"organizations_url": "https://api.github.com/users/connor-mccarthy/orgs",

"repos_url": "https://api.github.com/users/connor-mccarthy/repos",

"events_url": "https://api.github.com/users/connor-mccarthy/events{/privacy}",

"received_events_url": "https://api.github.com/users/connor-mccarthy/received_events",

"type": "User",

"site_admin": false

}

] | null | [] | "2023-01-11T08:28:12" | "2023-01-17T18:46:44" | "2023-01-17T18:46:44" | CONTRIBUTOR | null | ### Feature Area

<!-- Uncomment the labels below which are relevant to this feature: -->

<!-- /area frontend -->

<!-- /area backend -->

/area sdk

<!-- /area samples -->

<!-- /area components -->

### What feature would you like to see?

<!-- Provide a description of this feature and the user experience. -->

The SDK currently requires `PyYAML>=5.3,<6`. I would like the SDK to accept higher versions - `>=6` specifically.

### What is the use case or pain point?

I would like to use the kfp SDK from Google Cloud Composer. However, the latest Cloud Composer version ships with `PyYAML==6.0`, as per the [Cloud Composer version list](https://cloud.google.com/composer/docs/concepts/versioning/composer-versions).

### Is there a workaround currently?

<!-- Without this feature, how do you accomplish your task today? -->

There are some workarounds, but I think it would be no problem for the SDK to accept newer PyYAML versions. I double-checked the [change list of PyYAML 6.0](https://github.com/yaml/pyyaml/blob/6.0/CHANGES#L7-L18), and I believe those changes have no impact on the kfp SDK.
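
To make the conflict concrete, here is a rough sketch of how the `>=5.3,<6` range excludes the `6.0` release that Cloud Composer ships. The helpers `parse_version` and `satisfies` are hypothetical and only handle plain dotted numeric versions; they are a simplification, not a full implementation of pip's version matching.

```python
def parse_version(text):
    """Turn a dotted numeric version such as '5.4.1' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def satisfies(version, lower, upper):
    """Return True if lower <= version < upper, comparing numeric tuples."""
    parsed = parse_version(version)
    return parse_version(lower) <= parsed < parse_version(upper)


# The range the SDK currently declares for PyYAML.
print(satisfies("5.4.1", "5.3", "6"))
print(satisfies("6.0", "5.3", "6"))
```

Under this simplified check, `5.4.1` falls inside the range while `6.0` falls outside it, which is why an environment pinned to `PyYAML==6.0` cannot satisfy the SDK's current requirement.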

---

<!-- Don't delete message below to encourage users to support your feature request! -->

Love this idea? Give it a 👍. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/8666/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/8666/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/8661 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/8661/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/8661/comments | https://api.github.com/repos/kubeflow/pipelines/issues/8661/events | https://github.com/kubeflow/pipelines/issues/8661 | 1,526,100,273 | I_kwDOB-71UM5a9nEx | 8,661 | [sdk] `wait_for_run_completion` occasionally hangs forever in `RunServiceApi.get_run` call | {

"login": "jli",

"id": 133466,

"node_id": "MDQ6VXNlcjEzMzQ2Ng==",

"avatar_url": "https://avatars.githubusercontent.com/u/133466?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jli",

"html_url": "https://github.com/jli",

"followers_url": "https://api.github.com/users/jli/followers",

"following_url": "https://api.github.com/users/jli/following{/other_user}",

"gists_url": "https://api.github.com/users/jli/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jli/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jli/subscriptions",

"organizations_url": "https://api.github.com/users/jli/orgs",

"repos_url": "https://api.github.com/users/jli/repos",

"events_url": "https://api.github.com/users/jli/events{/privacy}",

"received_events_url": "https://api.github.com/users/jli/received_events",

"type": "User",