| url | repository_url | labels_url | comments_url | events_url | html_url | id | node_id | number | title | user | labels | state | locked | assignee | assignees | milestone | comments | created_at | updated_at | closed_at | author_association | active_lock_reason | body | reactions | timeline_url | performed_via_github_app | state_reason | draft | pull_request | is_pull_request |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/kubeflow/pipelines/issues/5137 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/5137/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/5137/comments | https://api.github.com/repos/kubeflow/pipelines/issues/5137/events | https://github.com/kubeflow/pipelines/issues/5137 | 808,427,374 | MDU6SXNzdWU4MDg0MjczNzQ= | 5,137 | Problems upgrading to TFX 0.27.0 | {

"login": "rafaascensao",

"id": 17235468,

"node_id": "MDQ6VXNlcjE3MjM1NDY4",

"avatar_url": "https://avatars.githubusercontent.com/u/17235468?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/rafaascensao",

"html_url": "https://github.com/rafaascensao",

"followers_url": "https://api.github.com/users/rafaascensao/followers",

"following_url": "https://api.github.com/users/rafaascensao/following{/other_user}",

"gists_url": "https://api.github.com/users/rafaascensao/gists{/gist_id}",

"starred_url": "https://api.github.com/users/rafaascensao/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/rafaascensao/subscriptions",

"organizations_url": "https://api.github.com/users/rafaascensao/orgs",

"repos_url": "https://api.github.com/users/rafaascensao/repos",

"events_url": "https://api.github.com/users/rafaascensao/events{/privacy}",

"received_events_url": "https://api.github.com/users/rafaascensao/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 1118896905,

"node_id": "MDU6TGFiZWwxMTE4ODk2OTA1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/backend",

"name": "area/backend",

"color": "d2b48c",

"default": false,

"description": ""

}

] | closed | false | {

"login": "neuromage",

"id": 206520,

"node_id": "MDQ6VXNlcjIwNjUyMA==",

"avatar_url": "https://avatars.githubusercontent.com/u/206520?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/neuromage",

"html_url": "https://github.com/neuromage",

"followers_url": "https://api.github.com/users/neuromage/followers",

"following_url": "https://api.github.com/users/neuromage/following{/other_user}",

"gists_url": "https://api.github.com/users/neuromage/gists{/gist_id}",

"starred_url": "https://api.github.com/users/neuromage/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/neuromage/subscriptions",

"organizations_url": "https://api.github.com/users/neuromage/orgs",

"repos_url": "https://api.github.com/users/neuromage/repos",

"events_url": "https://api.github.com/users/neuromage/events{/privacy}",

"received_events_url": "https://api.github.com/users/neuromage/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "neuromage",

"id": 206520,

"node_id": "MDQ6VXNlcjIwNjUyMA==",

"avatar_url": "https://avatars.githubusercontent.com/u/206520?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/neuromage",

"html_url": "https://github.com/neuromage",

"followers_url": "https://api.github.com/users/neuromage/followers",

"following_url": "https://api.github.com/users/neuromage/following{/other_user}",

"gists_url": "https://api.github.com/users/neuromage/gists{/gist_id}",

"starred_url": "https://api.github.com/users/neuromage/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/neuromage/subscriptions",

"organizations_url": "https://api.github.com/users/neuromage/orgs",

"repos_url": "https://api.github.com/users/neuromage/repos",

"events_url": "https://api.github.com/users/neuromage/events{/privacy}",

"received_events_url": "https://api.github.com/users/neuromage/received_events",

"type": "User",

"site_admin": false

},

{

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

},

{

"login": "numerology",

"id": 9604122,

"node_id": "MDQ6VXNlcjk2MDQxMjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/9604122?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/numerology",

"html_url": "https://github.com/numerology",

"followers_url": "https://api.github.com/users/numerology/followers",

"following_url": "https://api.github.com/users/numerology/following{/other_user}",

"gists_url": "https://api.github.com/users/numerology/gists{/gist_id}",

"starred_url": "https://api.github.com/users/numerology/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/numerology/subscriptions",

"organizations_url": "https://api.github.com/users/numerology/orgs",

"repos_url": "https://api.github.com/users/numerology/repos",

"events_url": "https://api.github.com/users/numerology/events{/privacy}",

"received_events_url": "https://api.github.com/users/numerology/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"@rafaascensao Which version of TFX are you using? the tfx.dsl package was introduced in TFX 0.25.0 I think, and if you are using an older version this error would pop up. Try running updating TFX to latest by running `python3 -m pip install -U tfx`. Anyway, this is a package import issue and most likely has nothing to do with KFP.",

"@ConverJens I am talking about the sample pipeline of TFX Taxi that comes with the deployment (https://github.com/kubeflow/pipelines/blob/master/samples/core/parameterized_tfx_oss/parameterized_tfx_oss.py). If I see the code, it uses a TFX image with the version 0.22.0. ",

"@rafaascensao Sorry! In that case, the image needs to be updated to tfx>=0.25.0. Ping @Bobgy, do you know who maintains this?",

"/assign @numerology @chensun @neuromage \n\nThis seems a P0.",

"Dont know why the linking dont work, but PR for fix: https://github.com/kubeflow/pipelines/pull/5165",

"Thanks @NikeNano !\r\n\r\n@Bobgy IIRC our integration test should cover this sample, do we know why this is not captured?",

"I have the same concern, @Ark-kun do you know why?\r\n\r\n/reopen\r\nLet's make sure to also address why it slipped integration tests.\r\n\r\nEDIT: figured out root cause in https://github.com/kubeflow/pipelines/issues/5178",

"@Bobgy: Reopened this issue.\n\n<details>\n\nIn response to [this](https://github.com/kubeflow/pipelines/issues/5137#issuecomment-784242034):\n\n>I have the same concern, @Ark-kun do you know why?\n>\n>/reopen\n>Let's make sure to also address why it slipped integration tests.\n\n\nInstructions for interacting with me using PR comments are available [here](https://git.k8s.io/community/contributors/guide/pull-requests.md). If you have questions or suggestions related to my behavior, please file an issue against the [kubernetes/test-infra](https://github.com/kubernetes/test-infra/issues/new?title=Prow%20issue:) repository.\n</details>",

"@Bobgy: Reopened this issue.\n\n<details>\n\nIn response to [this](https://github.com/kubeflow/pipelines/issues/5137#issuecomment-784242034):\n\n>I have the same concern, @Ark-kun do you know why?\n>\n>/reopen\n>Let's make sure to also address why it slipped integration tests.\n\n\nInstructions for interacting with me using PR comments are available [here](https://git.k8s.io/community/contributors/guide/pull-requests.md). If you have questions or suggestions related to my behavior, please file an issue against the [kubernetes/test-infra](https://github.com/kubernetes/test-infra/issues/new?title=Prow%20issue:) repository.\n</details>",

"I tried to upgrade some other TFX related deps, however, I'm getting the following errors in various places in parameterized_tfx_sample:\r\n* when clicking visualizations on `examplevalidator`, I'm seeing error: `NotFoundError: The specified path gs://gongyuan-test/tfx_taxi_simple/6f9ad66d-2897-4e11-8c2c-e3c614920f35/ExampleValidator/anomalies/32/anomalies.pbtxt was not found.` EDIT: fix sent in https://github.com/kubeflow/pipelines/pull/5186\r\n* when clicking visualizations on `evaluator`, the visualization js simply crashes without any clear error message. I tried to take a look at browser console, but it only shows. EDIT: workarounded in https://github.com/kubeflow/pipelines/pull/5191\r\n```\r\nUncaught Error: Script error for: tensorflow_model_analysis\r\nhttp://requirejs.org/docs/errors.html#scripterror\r\n at C (require.min.js:8)\r\n at HTMLScriptElement.onScriptError (require.min.js:29)\r\n```",

"Another problem:\r\nKFP depends on absl-py: https://github.com/kubeflow/pipelines/blob/a394f8cdf34de71f305ca1a49220a859c81ea502/sdk/python/requirements.in#L26\r\n\r\nconflicts with\r\n\r\ntfx==0.27.0\r\n\r\n\r\n```\r\nERROR: Cannot install absl-py<1 and >=0.11.0 and tfx==0.27.0 because these package versions have conflicting dependencies.\r\n\r\nThe conflict is caused by:\r\n The user requested absl-py<1 and >=0.11.0\r\n tfx 0.27.0 depends on absl-py<0.11 and >=0.9\r\n```\r\n\r\nEDIT: I'm going to use absl that works with TFX, let's see whether it passes presubmit & postsubmit tests.\r\nhttps://github.com/kubeflow/pipelines/pull/5179",

"The third problem I found, https://github.com/kubeflow/pipelines/tree/master/samples/core/tfx-oss sample is still mentioning very old version of TFX. Can someone verify and fix? but this does not block current release.",

"> Another problem:\r\n> KFP depends on absl-py:\r\n> \r\n> https://github.com/kubeflow/pipelines/blob/a394f8cdf34de71f305ca1a49220a859c81ea502/sdk/python/requirements.in#L26\r\n> \r\n> conflicts with\r\n> \r\n> tfx==0.27.0\r\n> \r\n> ```\r\n> ERROR: Cannot install absl-py<1 and >=0.11.0 and tfx==0.27.0 because these package versions have conflicting dependencies.\r\n> \r\n> The conflict is caused by:\r\n> The user requested absl-py<1 and >=0.11.0\r\n> tfx 0.27.0 depends on absl-py<0.11 and >=0.9\r\n> ```\r\n> \r\n> EDIT: I'm going to use absl that works with TFX, let's see whether it passes presubmit & postsubmit tests.\r\n> #5179\r\n\r\nThis one actually conflicts with KFP setup.py\r\nhttps://github.com/kubeflow/pipelines/blob/cd55a55c6229e9e734a35c166c002728b8fa4a72/sdk/python/setup.py#L23\r\nIt's an easy fix that we should do anyway, but I want to understand where `requirements.txt` is used in this case? A KFP SDK user doesn't need to install from `requirements.txt`.",

">I want to understand where requirements.txt is used in this case? A KFP SDK user doesn't need to install from requirements.txt.\r\n\r\nIt's used in automated tests and documentation generation. Some users might be using it as well. `requirements.txt` provides a known good dependency snapshot while `requirements.in` or `setup.py` specify loose top layer dependencies.\r\nSee the official documentation: https://packaging.python.org/discussions/install-requires-vs-requirements/#requirements-files\r\n\r\nIn the future we should make `setup.py` use `requirements.in` instead of duplicating the list.",

">KFP depends on absl-py:\r\n\r\nIt looks like a new dependency. Do we need it much?",

"> * when clicking visualizations on `examplevalidator`, I'm seeing error: `NotFoundError: The specified path gs://gongyuan-test/tfx_taxi_simple/6f9ad66d-2897-4e11-8c2c-e3c614920f35/ExampleValidator/anomalies/32/anomalies.pbtxt was not found.`\r\n\r\nDoes this file exist? What does the validator component produce?",

"> > I want to understand where requirements.txt is used in this case? A KFP SDK user doesn't need to install from requirements.txt.\r\n> \r\n> It's used in automated tests and documentation generation. Some users might be using it as well. `requirements.txt` provides a known good dependency snapshot while `requirements.in` or `setup.py` specify loose top layer dependencies.\r\n> See the official documentation: https://packaging.python.org/discussions/install-requires-vs-requirements/#requirements-files\r\n> \r\n\r\nMy understanding is that `setup.py` is for end users, while `requirements.txt` is for developers. So my question was more like do we need to install `requirements.txt` during tests? I could imagine we need `requirements-test.txt` for test library dependencies. But other than that, shouldn't we just rely on `setup.py` for runtime dependencies? This will test against what end users get rather than a stable known-good environment -- I think test is meant to discover possible end user issues earlier.\r\n\r\n> In the future we should make `setup.py` use `requirements.in` instead of duplicating the list. \r\n\r\nI thought we're deprecating `requirements.in` with the recently introduced bot that updates `requirements.txt` automatically?",

"> > KFP depends on absl-py:\r\n> \r\n> It looks like a new dependency. Do we need it much?\r\n\r\nIt was recently introduced, but seems likely only for logging purpose. So I think it's avoidable at least for now.\r\n\r\nhttps://github.com/kubeflow/pipelines/blob/665f3ce8ba0ac0cada2b4a1bd6c5bf8414d99bcb/sdk/python/kfp/dsl/artifact.py#L17\r\n\r\nhttps://github.com/kubeflow/pipelines/blob/665f3ce8ba0ac0cada2b4a1bd6c5bf8414d99bcb/sdk/python/kfp/containers/entrypoint.py#L17",

">My understanding is that setup.py is for end users, while requirements.txt is for developers.\r\n\r\nI'm not sure there is a hard distinction like this. As a snapshot of tested dependencies, `requirements.txt` can still be useful for the users. For example, TFX setup if often broken due to self-conflicting dependencies. However a `requirements.txt` can be installed.\r\nSame with the KFP and tests: with `requirements.txt` there is a tested configuration. Unlike setup.py it's not affected by the environment and already installed packages. Before we started using it, we had issues when CI was flaky or installed different versions of packages at random. Especially when testing on different versions of python. Now we have the full package snapshot that it tested and a way to update that snapshot.\r\n\r\n>This will test against what end users get\r\n\r\nI'm not sure about this. Without requirements.txt our test environment can be very different from the user environments. The user can have very old packages, but our tests will have the latest ones. `requirements.txt` fixes that by letting the users install the same packages as us.\r\n\r\n>I thought we're deprecating requirements.in with the recently introduced bot that updates requirements.txt automatically?\r\n\r\nWhere does the bot get the initial requirements? Many guides advice putting the dependecies outside the setup.py file.",

"Thanks, then absl problem is solved, I verified 0.10.0 is compatible with both.\r\n\r\nLet me provide more information about the visualization one",

"Another new issue is that postsubmit for iris pipeline is failing after upgrade, we need to figure out if the pipeline file needs any update.\r\n\r\nEDIT:\r\n\r\nThe error message is: `No such file or directory: '/tfx-src/tfx/examples/iris/iris_utils_native_keras.py'`\r\n\r\n```\r\n/tfx/src/tfx/examples# ls\r\n__init__.py bert chicago_taxi_pipeline containers imdb penguin\r\nairflow_workshop bigquery_ml cifar10 custom_components mnist ranking\r\n```\r\n\r\nI found that iris example is either deleted or renamed.\r\n\r\nConfirmed, iris example is removed, and replaced by penguin: https://github.com/tensorflow/tfx/tree/master/tfx/examples/penguin",

">Let me provide more information about the visualization one\r\n\r\nThank you.\r\n\r\nVisualization can be a tough one. It bothers me that our visualizations are version-dependent, but are baked into the backend. We should try to move to the \"visualizations as components\" vision, so that the visualizations can be versioned independently.",

"> > My understanding is that setup.py is for end users, while requirements.txt is for developers.\r\n> \r\n> I'm not sure there is a hard distinction like this. \r\n\r\nRight, I didn't mean there's a hard distinction between the two, but just my personal experience/observation -- if I'm a KFP user, I only care about `pip install kfp`, and probably never bother to go to this GitHub repo and do an additional install step using the `requirements.txt`. \r\nMy point is that what's specified in `setup.py` is the single source of truth for an end user (as I don't think most of them would come and grab the requirements.txt from our GitHub repo). So the tests should try to simulate that as close as possible. Having a safe snapshot reduces or even eliminates test flakiness, but we also lose the opportunity to discover possible dependency issues an end user may hit.\r\n\r\n> >This will test against what end users get\r\n> \r\n> I'm not sure about this. Without requirements.txt our test environment can be very different from the user environments. The user can have very old packages, but our tests will have the latest ones. requirements.txt fixes that by letting the users install the same packages as us.\r\n\r\nI think in this case our tests at least has what a fresh installation of `kfp` has, which is good enough, and sufficient to find the latest incompatible issues.\r\n\r\n> > I thought we're deprecating requirements.in with the recently introduced bot that updates requirements.txt automatically?\r\n>\r\n> Where does the bot get the initial requirements? Many guides advice putting the dependecies outside the setup.py file.\r\n\r\nAccording to [this](https://github.com/kubeflow/pipelines/pull/5056#issuecomment-770346410), it looks at the existing `requirements.txt` and keep moving forward to the next available minor version.\r\n",

"@chensun @Ark-kun two PRs pending review: #5186 #5187 ",

"Found a new problem, KFP cache webhook starts to cache tfx pipelines.\r\n\r\nEDIT: Sent out a fix: https://github.com/kubeflow/pipelines/pull/5188",

"I'm renaming the issue, because we are using it as a collector for all tfx issues.",

"All immediate blockers for release have been resolved. I'm going to create separate issues that we can tackle later."

] | "2021-02-15T10:53:53" | "2021-02-25T11:09:29" | "2021-02-25T11:09:29" | NONE | null | ### What steps did you take:

Installed Kubeflow Pipelines on GCP via kustomize manifests.

Tried to run the Taxi TFX Demo.

### What happened:

On the first step, I got the error "No module named 'tfx.dsl.components'"

### What did you expect to happen:

To successfully run the TFX Taxi Demo.

### Environment:

How did you deploy Kubeflow Pipelines (KFP)?

Via the kustomize manifests in GCP.

KFP version: 1.4.0-rc.1

/kind bug

/area backend | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/5137/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/5137/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/5136 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/5136/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/5136/comments | https://api.github.com/repos/kubeflow/pipelines/issues/5136/events | https://github.com/kubeflow/pipelines/issues/5136 | 808,166,096 | MDU6SXNzdWU4MDgxNjYwOTY= | 5,136 | [Discuss - frontend best practice] one way data flow | {

"login": "Bobgy",

"id": 4957653,

"node_id": "MDQ6VXNlcjQ5NTc2NTM=",

"avatar_url": "https://avatars.githubusercontent.com/u/4957653?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Bobgy",

"html_url": "https://github.com/Bobgy",

"followers_url": "https://api.github.com/users/Bobgy/followers",

"following_url": "https://api.github.com/users/Bobgy/following{/other_user}",

"gists_url": "https://api.github.com/users/Bobgy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Bobgy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Bobgy/subscriptions",

"organizations_url": "https://api.github.com/users/Bobgy/orgs",

"repos_url": "https://api.github.com/users/Bobgy/repos",

"events_url": "https://api.github.com/users/Bobgy/events{/privacy}",

"received_events_url": "https://api.github.com/users/Bobgy/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2152751095,

"node_id": "MDU6TGFiZWwyMTUyNzUxMDk1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/frozen",

"name": "lifecycle/frozen",

"color": "ededed",

"default": false,

"description": null

}

] | open | false | null | [] | null | [

"/cc @zijianjoy @StefanoFioravanzo ",

"Another partially related problem is the Page abstraction: https://github.com/kubeflow/pipelines/blob/1f32e90ecd1fe8657c084d623b2fcd23ead13c48/frontend/src/pages/Page.tsx#L40-L99\r\n\r\n## Recap of what problem the Page abstraction tries to solve\r\n\r\nThis is a standard KFP page:\r\n\r\n\r\nSome UI elements are common:\r\n* title\r\n* back button\r\n* breadcrumbs\r\n* buttons in toolbar\r\n\r\nIn order to reuse this design and logic for all KFP pages, they are built as root of router in https://github.com/kubeflow/pipelines/blob/1f32e90ecd1fe8657c084d623b2fcd23ead13c48/frontend/src/components/Router.tsx#L230.\r\n\r\nThis is a reasonable choice, because in the DOM tree, these items are close to the root. So callbacks to control these common elements are passed using the Page interface to each page in a route.\r\n\r\n## Several problems with Page\r\n\r\n* Page uses inheritance (deprecated) to reuse some common logic and build a common interface.\r\n* Callbacks to control global state: `updateToolbar`, `updateBanner` are passed to Page. The interface for controlling toolbar state is imperative, rather than declarative. After each state update, we need to call `updateToolbar` in a child component. e.g. [_selectionChanged](https://github.com/kubeflow/pipelines/blob/1f32e90ecd1fe8657c084d623b2fcd23ead13c48/frontend/src/pages/ExperimentDetails.tsx#L376-L414) handler.\r\n* Tests used Page interface implementation details pervasively, e.g. 
https://github.com/kubeflow/pipelines/blob/1f32e90ecd1fe8657c084d623b2fcd23ead13c48/frontend/src/pages/ExperimentDetails.test.tsx#L412-L415 and https://github.com/kubeflow/pipelines/blob/1f32e90ecd1fe8657c084d623b2fcd23ead13c48/frontend/src/pages/ExperimentDetails.test.tsx#L385. Therefore, it's a lot of work to refactor these components: all tests that use these implementation details need to be refactored at the same time.\r\n\r\n## Why is it still like this?\r\n\r\nBecause\r\n* the effort needed to refactor (including the tests) is huge\r\n* the implementation sort of scales OK for KFP's current use cases\r\n* the problems they incur are more about aesthetics & tech debt than productivity (at least until today)\r\n\r\nTherefore, I have never thought a refactoring was a high enough priority, considering the many other, more meaningful ways we can improve KFP.\r\n",

"## How I would have implemented it?\r\n\r\nFrom my past experience, more idiomatic way of implementing these is:\r\n* Build standard layout & atomic components for the common page features. A layout component can accept either [render props](https://reactjs.org/docs/render-props.html) or react element, so that what's rendered inside the layout can still be determined by page component. These components should better be stateless and controlled by their parents.\r\n* Let each page component use these common elements to implement pages.\r\n* Build page component tests using react testing library, rather than accessing component instance and methods. (as documented in https://github.com/kubeflow/pipelines/issues/5118#issuecomment-776177682)\r\n\r\nBenefits:\r\n* All the elements are easily reusable and composable.\r\n* Page component rendering logic can be declarative (e.g. which buttons exist on the page can be derived from state of the page, instead of controlled imperatively via callback).\r\n\r\nNote, I think these only apply to Banners, Toolbars, Breadcrumbs and Title. Snackbar and dialogs might need to be rendered across pages, so they are probably not a good fit.\r\n\r\n## Plan\r\n\r\nIf we agree on the ideas, what we can do is building new pages using this more idiomatic paradigm. I still don't think it's worth it to rewrite the entire KFP UI just for this refactoring. If there are other reasons we need to build/rebuild pages, that will be good chances to start applying the new practices.",

"Thank you for the detailed explanation of the concept! I agree with using render props for reusable and portable components on the common UI elements on the top of pages. One question: The UI elements listed are unified across different pages, except buttons in toolbar. Each page will have different set of buttons, and based on user action on children element, these buttons might change on the same page. How does each layer look like if we apply render props for button elements? ",

"@zijianjoy I built a quick demo: https://codesandbox.io/s/magical-violet-hwfxb?file=/src/App.js\r\n\r\nNotice how the Layout component defines layout of the page, while App component can inject stuff into the Layout component dynamically using either render prop or react elements as props.\r\n\r\nSo that, back to KFP UI, we can define a common PageLayout component that gets reused across each Page, and the pages can control what buttons to render in layout slots.",

"@Bobgy Thank you so much Yuan for building the sample demo! \r\n\r\nTo confirm if I understand it correctly, here is a simplified example for KFP UI - RunList:\r\n\r\n```\r\n<Page>\r\n <ExperimentDetail>\r\n <PageLayout />\r\n <RunsList>\r\n <CustomTable />\r\n </RunList>\r\n </ExperimentDetail>\r\n</Page>\r\n```\r\n\r\nIn the above layout, `RunsList` determines what buttons to show (for example, Archive/Activate/Clone runs), and `CustomTable` determines which buttons are enabled/disabled because of user selection, and `RunsList` collects **run selection** callback from `CustomTable`. `ExperimentDetail` Collects button information from `RunsList` callbacks, and updates `PageLayout` accordingly. In such case, can I assume that `ExperimentDetail` determines what buttons should show in `PageLayout`? Because `ExperimentDetail` collects all button related information from children, and use render props to render `PageLayout`. \r\n\r\n\r\nAs reference, the `Page` component looks like below:\r\n\r\n```\r\n render() {\r\n return (\r\n <div>\r\n <ExperimentDetail render={buttonState => (\r\n <PageLayout buttonState={buttonState} />\r\n )}/>\r\n </div>\r\n );\r\n }\r\n```",

"```\n <ExperimentDetail>\n <PageLayout />\n <RunsList>\n <CustomTable />\n </RunList>\n </ExperimentDetail>\n```\n\nPage is unnecessary, files in the pages folder are already pages.\n\nThe following understanding has a key difference, because button management can be very dynamic, the most declarative way is to move state that can affect buttons up to the page level and render the buttons in layout from e.g. move state in RunList up to ExperimentDetails's state, and render buttons directly from ExperimentDetails.\nhttps://reactjs.org/docs/lifting-state-up.html\n\nMoving state up might sound daunting at first, but React has also built React hooks, they allow making abstractions on state logic, so that moving some state can be as simple as moving a hook call from child to parent and pass needed props to the child.",

"I agree with moving the state up to parent so the parent has all the information it needs to determine buttons arrangement. Thank you Yuan!",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n",

"/lifecycle frozen"

] | "2021-02-15T04:57:02" | "2021-08-24T13:52:30" | null | CONTRIBUTOR | null | https://reactjs.org/docs/refs-and-the-dom.html

It's recommended to avoid using refs when possible, because this data/event flow mechanism conflicts with React's one-way data flow philosophy (see https://reactjs.org/docs/thinking-in-react.html).

> For example, instead of exposing open() and close() methods on a Dialog component, pass an isOpen prop to it.

Similar to this example, to avoid exposing `refresh()` as a public method, I think we could have a prop called something like `refreshCounter: number`. The child components can refresh whenever they see an update to `refreshCounter`, using `componentDidUpdate` or the `useEffect` React hook. This way, we no longer need to pass refs all the way down and call a method to refresh components; a refresh becomes as simple as a state update to `refreshCounter`.

We'd also need an `onLoad` callback that child components trigger when a page finishes loading.
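A minimal, framework-free TypeScript sketch of the `refreshCounter` / `onLoad` idea. It assumes a hypothetical `RunListModel` class whose `update()` method stands in for React's `componentDidUpdate(prevProps)`; in a real component the same comparison would live in `componentDidUpdate`, or in a `useEffect` with `[refreshCounter]` as its dependency array. The class and prop names are illustrative, not KFP APIs.

```typescript
// Props flow one way: the parent owns refreshCounter and passes it down.
interface RefreshableProps {
  refreshCounter: number;
  onLoad?: () => void; // hypothetical callback fired when loading finishes
}

// Hypothetical child; update() plays the role of componentDidUpdate(prevProps).
class RunListModel {
  loadCount = 0;
  private props: RefreshableProps;

  constructor(props: RefreshableProps) {
    this.props = props;
    this.load(); // initial load, like componentDidMount
  }

  update(nextProps: RefreshableProps): void {
    const prevProps = this.props;
    this.props = nextProps;
    // Refresh only when the counter actually changed.
    if (nextProps.refreshCounter !== prevProps.refreshCounter) {
      this.load();
    }
  }

  private load(): void {
    this.loadCount += 1; // stand-in for fetching data
    this.props.onLoad?.(); // notify the parent instead of being called via a ref
  }
}

// Parent side: a refresh is just a state update, no ref needed.
let refreshCounter = 0;
let loads = 0;
const onLoad = () => {
  loads += 1;
};
const child = new RunListModel({ refreshCounter, onLoad });
child.update({ refreshCounter, onLoad }); // counter unchanged: no reload
refreshCounter += 1;
child.update({ refreshCounter, onLoad }); // counter changed: reload
console.log(child.loadCount, loads); // 2 2
```

The parent never calls a method on the child; it only changes a prop, and the child decides to reload by comparing old and new props, which keeps the data flow one-directional.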

Once we stop using refs to get component instances, we can write components using functions and React hooks: https://reactjs.org/docs/hooks-overview.html. Hooks are a nice way to build abstractions over state changes (and a lot more!).

_Originally posted by @Bobgy in https://github.com/kubeflow/pipelines/pull/5040#discussion_r569956232_ | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/5136/reactions",

"total_count": 2,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 1,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/5136/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/5134 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/5134/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/5134/comments | https://api.github.com/repos/kubeflow/pipelines/issues/5134/events | https://github.com/kubeflow/pipelines/issues/5134 | 807,736,947 | MDU6SXNzdWU4MDc3MzY5NDc= | 5,134 | Presubmit failure | {

"login": "Bobgy",

"id": 4957653,

"node_id": "MDQ6VXNlcjQ5NTc2NTM=",

"avatar_url": "https://avatars.githubusercontent.com/u/4957653?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Bobgy",

"html_url": "https://github.com/Bobgy",

"followers_url": "https://api.github.com/users/Bobgy/followers",

"following_url": "https://api.github.com/users/Bobgy/following{/other_user}",

"gists_url": "https://api.github.com/users/Bobgy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Bobgy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Bobgy/subscriptions",

"organizations_url": "https://api.github.com/users/Bobgy/orgs",

"repos_url": "https://api.github.com/users/Bobgy/repos",

"events_url": "https://api.github.com/users/Bobgy/events{/privacy}",

"received_events_url": "https://api.github.com/users/Bobgy/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | "2021-02-13T12:06:23" | "2021-02-14T00:31:58" | "2021-02-14T00:31:58" | CONTRIBUTOR | null | If you look into the test, it says:

```
Traceback (most recent call last):
  File "<string>", line 3, in <module>
  File "/usr/local/lib/python3.6/site-packages/kfp/__init__.py", line 24, in <module>
    from ._client import Client
  File "/usr/local/lib/python3.6/site-packages/kfp/_client.py", line 31, in <module>
    from kfp.compiler import compiler
  File "/usr/local/lib/python3.6/site-packages/kfp/compiler/__init__.py", line 17, in <module>
    from ..containers._component_builder import build_python_component, build_docker_image, VersionedDependency
  File "/usr/local/lib/python3.6/site-packages/kfp/containers/_component_builder.py", line 32, in <module>
    from kfp.containers import entrypoint
  File "/usr/local/lib/python3.6/site-packages/kfp/containers/entrypoint.py", line 23, in <module>
    from kfp.containers import entrypoint_utils
  File "/usr/local/lib/python3.6/site-packages/kfp/containers/entrypoint_utils.py", line 23, in <module>
    from kfp.pipeline_spec import pipeline_spec_pb2
  File "/usr/local/lib/python3.6/site-packages/kfp/pipeline_spec/pipeline_spec_pb2.py", line 23, in <module>
    create_key=_descriptor._internal_create_key,
AttributeError: module 'google.protobuf.descriptor' has no attribute '_internal_create_key'
```

It looks like the `protobuf` version doesn't match in this case. @Bobgy are you aware of this error? Thanks.
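One way to confirm a runtime/generated-code mismatch like this is to check whether the installed `google.protobuf.descriptor` module has the `_internal_create_key` attribute that the generated `pipeline_spec_pb2.py` references. This is a diagnostic sketch only: the helper names are hypothetical, and the exact protobuf release that introduced `_internal_create_key` (around 3.12, to our understanding) should be confirmed against the protobuf changelog.

```python
import importlib
from types import ModuleType
from typing import Optional


def supports_generated_descriptors(descriptor_module: ModuleType) -> bool:
    """Return True if the runtime exposes the hook that newer *_pb2.py files reference."""
    return hasattr(descriptor_module, "_internal_create_key")


def check_installed_protobuf() -> Optional[bool]:
    """Return None if protobuf is missing, otherwise whether its runtime has the hook."""
    try:
        descriptor = importlib.import_module("google.protobuf.descriptor")
    except ImportError:
        return None
    return supports_generated_descriptors(descriptor)


if __name__ == "__main__":
    status = check_installed_protobuf()
    if status is None:
        print("protobuf is not installed")
    elif status:
        print("runtime provides _internal_create_key")
    else:
        # Assumption: the fix is a newer protobuf runtime, not regenerating pipeline_spec_pb2.py.
        print("protobuf runtime predates the generated code; try upgrading protobuf")
```

If the check reports an old runtime, pinning a newer `protobuf` in the test image is the likely fix, though the right minimum version is an assumption to verify.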

_Originally posted by @Tomcli in https://github.com/kubeflow/pipelines/pull/5059#issuecomment-777656530_

/cc @numerology @chensun @Ark-kun

Can you take a look at this issue? I have seen multiple reports, and this failure seems consistent. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/5134/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/5134/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/5133 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/5133/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/5133/comments | https://api.github.com/repos/kubeflow/pipelines/issues/5133/events | https://github.com/kubeflow/pipelines/issues/5133 | 807,511,449 | MDU6SXNzdWU4MDc1MTE0NDk= | 5,133 | Kubeflow Pipelines: Move manifests development upstream | {

"login": "yanniszark",

"id": 6123106,

"node_id": "MDQ6VXNlcjYxMjMxMDY=",

"avatar_url": "https://avatars.githubusercontent.com/u/6123106?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/yanniszark",

"html_url": "https://github.com/yanniszark",

"followers_url": "https://api.github.com/users/yanniszark/followers",

"following_url": "https://api.github.com/users/yanniszark/following{/other_user}",

"gists_url": "https://api.github.com/users/yanniszark/gists{/gist_id}",

"starred_url": "https://api.github.com/users/yanniszark/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/yanniszark/subscriptions",

"organizations_url": "https://api.github.com/users/yanniszark/orgs",

"repos_url": "https://api.github.com/users/yanniszark/repos",

"events_url": "https://api.github.com/users/yanniszark/events{/privacy}",

"received_events_url": "https://api.github.com/users/yanniszark/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | {

"login": "yanniszark",

"id": 6123106,

"node_id": "MDQ6VXNlcjYxMjMxMDY=",

"avatar_url": "https://avatars.githubusercontent.com/u/6123106?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/yanniszark",

"html_url": "https://github.com/yanniszark",

"followers_url": "https://api.github.com/users/yanniszark/followers",

"following_url": "https://api.github.com/users/yanniszark/following{/other_user}",

"gists_url": "https://api.github.com/users/yanniszark/gists{/gist_id}",

"starred_url": "https://api.github.com/users/yanniszark/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/yanniszark/subscriptions",

"organizations_url": "https://api.github.com/users/yanniszark/orgs",

"repos_url": "https://api.github.com/users/yanniszark/repos",

"events_url": "https://api.github.com/users/yanniszark/events{/privacy}",

"received_events_url": "https://api.github.com/users/yanniszark/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "yanniszark",

"id": 6123106,

"node_id": "MDQ6VXNlcjYxMjMxMDY=",

"avatar_url": "https://avatars.githubusercontent.com/u/6123106?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/yanniszark",

"html_url": "https://github.com/yanniszark",

"followers_url": "https://api.github.com/users/yanniszark/followers",

"following_url": "https://api.github.com/users/yanniszark/following{/other_user}",

"gists_url": "https://api.github.com/users/yanniszark/gists{/gist_id}",

"starred_url": "https://api.github.com/users/yanniszark/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/yanniszark/subscriptions",

"organizations_url": "https://api.github.com/users/yanniszark/orgs",

"repos_url": "https://api.github.com/users/yanniszark/repos",

"events_url": "https://api.github.com/users/yanniszark/events{/privacy}",

"received_events_url": "https://api.github.com/users/yanniszark/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"Thank you!\n\n> I understand that the installs folder would probably be transferred under env in this repo.\n\nLet me think about it, there might be too many types and they are not conceptually consistent. Current manifests in env all include mysql and minio, but those in kubeflow/manifest/.../installs don't.\n\n> What about all the other folders? Are they maintained by KFP? Are they abandoned? cc\n\nNo, you can remove the other folders.",

"> Let me think about it, there might be too many types and they are not conceptually consistent\\\r\n\r\n@Bobgy I was wondering if you had any thoughts on this issue. I can start a PR making `installs` into `envs`. What do you think?",

"My current preference is to move installs into kustomize/base/installs/{generic,multi-user}\n(Probably rename base to core, but that can be done later)\n\nMove mysql and minio into kustomize/storage/mysql, kustomize/storage/minio.\n\nAnd, use kustomize/env/xxx to compose them.\n\nWhat do you think?\nThe main motivation is to make component role & boundary clearer.",

"@yanniszark pinging for status update, I want to make sure we don't have duplicate efforts",

"@Bobgy thanks for the ping. I want to ask for more info on your suggestion.\r\nYou proposed:\r\n\r\n> kustomize/base/installs/{generic,multi-user}\r\n\r\nCurrently, the base folder is broken up per-component (application, argo, cache, metadata, pipeline).\r\nThis includes both pipelines apps (pipelines, cache) and non-pipelines apps (argo, application-controller, metadata).\r\nThose are composed into a kustomization in `base/kustomization.yaml`. In addition, `base/kustomization.yaml` only contains namespaced resources.\r\n\r\nThe multi-user pipelines manifests add/edit resources of pipelines components (api-service, cache, metadata-writer, persistence-agent, pipelines-profile-controller, pipelines-ui, scheduled-workflow, viewer-controller).\r\n\r\nSo if I understand correctly:\r\n- `base/kustomization.yaml` will become `base/installs/generic`\r\n- `base/installs/multi-user` will contain the resources for multi-user pipelines. However, these resources are both cluster-scoped and namespace-scoped. Personally, I don't see the reason for the namespaced/non-namespaced distinction, but I see there was quite some effort to structure it this way, so I'd love to know more. Alternatively, we could do `base/installs/multi-user/cluster-scoped` too.\r\n\r\nFinally, what about the tekton installs? Should they go under `env`?\r\n\r\n",

"That reminds me of a few things:\n`application` should now be moved out as optional, because we don't need it in Kubeflow.\n\n> Personally, I don't see the reason for the namespaced/non-namespaced distinction\n\nIn namespaced installation mode, cluster admins can be a separate group of people from namespace admins, so a clear separation of cluster resources is more convenient.\n\nThis requirement isn't necessary for multi-user mode, because that mode is already meant to be shared across namespaces.",

"For tekton KFP, @animeshsingh what do you think?\n\nMy current thinking is that manifests should live next to where development happens, so maintenance is easier if they are in the kfp-tekton repo.",

"Fixed by #5256 "

] | "2021-02-12T20:01:48" | "2021-03-10T01:51:48" | "2021-03-10T01:51:48" | CONTRIBUTOR | null | ### Background

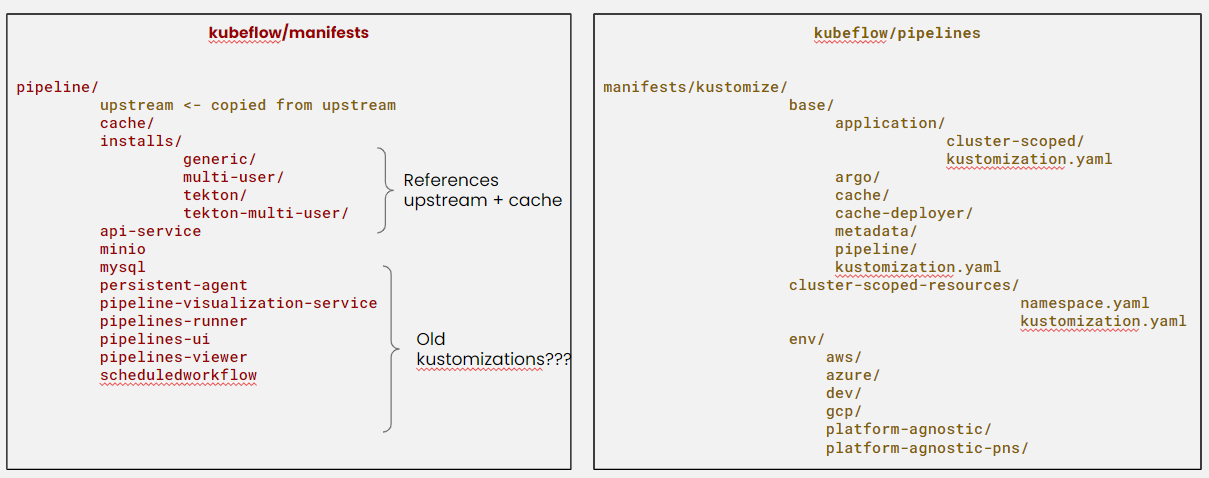

Umbrella-Issue: https://github.com/kubeflow/manifests/issues/1740

As part of the work of wg-manifests for 1.3 (https://github.com/kubeflow/manifests/issues/1735), we are moving manifests development to upstream repos. This gives the application developers full ownership of their manifests, tracked in a single place.

Taking the `notebook-controller` as an example, the diagram shows the current situation: manifests are in two repos, which blurs the separation of responsibilities and makes things harder for application developers.

Instead, we will copy all manifests from the manifests repo back to each upstream repo, from where they will be imported into the manifests repo. The following diagram presents the desired state:

### Current State

Kubeflow Pipelines has manifests:

- In the manifests repo, under folder `apps/pipeline/upstream`.

- In upstream repo (https://github.com/kubeflow/pipelines) under folder `manifests/kustomize`.

The current state of the manifests repo and the upstream repo is the following:

The kubeflow/manifests part of pipelines consists of the following:

- An `upstream` folder, which contains the upstream manifests copied from kubeflow/pipelines.

- A kustomization for the pipelines cache (cache folder).

- The `installs` folder, which contains various installation profiles for pipelines (generic, multi-user, tekton, multi-user-tekton). The kustomizations under `installs` refer to the `cache` folder and the `upstream` folder.

- A bunch of folders (`api-service`, `minio`, `mysql`, `persistent-agent`, `pipeline-visualization-service`, `pipelines-runner`, `pipelines-ui`, `pipelines-viewer`, `scheduledworkflow`) which seem unused, since those manifests already exist upstream (most are in kubeflow/pipelines under `manifests/kustomize/base/pipeline`; `minio` and `mysql` are under `manifests/kustomize/env/platform-agnostic`).

I understand that the `installs` folder would probably be transferred under `env` in this repo.

What about all the other folders? Are they maintained by KFP? Are they abandoned? cc @Bobgy

### Desired State

The proposed folder in the upstream repo to receive the manifests is `manifests/kustomize`.

The goal is to consolidate all manifests development in the upstream repo.

The manifests repo will include a copy of the manifests under `apps/pipeline/upstream`. This copy will be synced periodically.

### Success Criteria

The manifests in `apps/pipeline/upstream` should be a copy of the upstream manifests folder. To do that, the application manifests in `kubeflow/manifests` should be moved to the upstream application repo.

/assign @yanniszark

cc @kubeflow/wg-pipeline-leads | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/5133/reactions",

"total_count": 4,

"+1": 3,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 1,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/5133/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/5215 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/5215/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/5215/comments | https://api.github.com/repos/kubeflow/pipelines/issues/5215/events | https://github.com/kubeflow/pipelines/issues/5215 | 818,615,384 | MDU6SXNzdWU4MTg2MTUzODQ= | 5,215 | Pipeline metrics are always sorted alphabetically | {

"login": "ypitrey",

"id": 17247240,

"node_id": "MDQ6VXNlcjE3MjQ3MjQw",

"avatar_url": "https://avatars.githubusercontent.com/u/17247240?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ypitrey",

"html_url": "https://github.com/ypitrey",

"followers_url": "https://api.github.com/users/ypitrey/followers",

"following_url": "https://api.github.com/users/ypitrey/following{/other_user}",

"gists_url": "https://api.github.com/users/ypitrey/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ypitrey/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ypitrey/subscriptions",

"organizations_url": "https://api.github.com/users/ypitrey/orgs",

"repos_url": "https://api.github.com/users/ypitrey/repos",

"events_url": "https://api.github.com/users/ypitrey/events{/privacy}",

"received_events_url": "https://api.github.com/users/ypitrey/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 930476737,

"node_id": "MDU6TGFiZWw5MzA0NzY3Mzc=",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/help%20wanted",

"name": "help wanted",

"color": "db1203",

"default": true,

"description": "The community is welcome to contribute."

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

},

{

"id": 2186355346,

"node_id": "MDU6TGFiZWwyMTg2MzU1MzQ2",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/good%20first%20issue",

"name": "good first issue",

"color": "fef2c0",

"default": true,

"description": ""

}

] | closed | false | null | [] | null | [

"/area pipelines\r\n@Bobgy maybe you can move this over to the pipelines repo",

"Any updates on this issue? I'll have to name my metrics `1. dropsPerHour` and `2. unscheduled` for them to appear at the top of the list for now, but I fear the gods of software design are going to be angry with me. 😄 \r\n\r\nThanks guys",

"We are open to contributions; here's the frontend development guide: https://github.com/kubeflow/pipelines/tree/master/frontend.\r\n\r\nI believe the code related to this issue is in https://github.com/kubeflow/pipelines/blob/45c5c18716b57fbf9d491d88ab2fe7e345dc7edb/frontend/src/pages/RunDetails.tsx#L584.",

"Hi @Bobgy @ypitrey, a new contributor here looking for a good first issue to work on. \r\nI'd like to work on this issue if it is still relevant, and I have a few questions to clarify the ask.\r\n\r\nIs the ask to simply remove the sorting of metrics, or to create functionality that lets a user choose which metrics show up in the UI? \r\n\r\n> Note: I need to expose more than these two metrics, as I am using these metrics to aggregate results across multiple runs.\r\n\r\nIn addition, I think you're looking for a way to show more than two metrics? Should this be part of this issue?",

"Thank you @annajung !\nThis is still relevant, it seems removing sorting would be enough.",

"Thanks for the clarification @Bobgy \r\nCreated a PR https://github.com/kubeflow/pipelines/pull/5701, any feedback would be appreciated! Thanks"

] | "2021-02-12T16:51:23" | "2021-05-27T02:38:17" | "2021-05-27T02:38:17" | NONE | null | /kind bug

I have a pipeline that exposes pipeline metrics following [this example](https://www.kubeflow.org/docs/pipelines/sdk/pipelines-metrics/). It's working great, but it seems that the metrics always appear sorted alphabetically. I would like to make use of the fact that the first two metrics are displayed next to each run in the run list view, but the two metrics I'm interested in are not the first two in alphabetical order.

Note: I need to expose more than these two metrics, as I am using these metrics to aggregate results across multiple runs.

Am I doing something wrong? Is there a way to not sort the metrics alphabetically?

For context, here is the code I'm using to expose the metrics:

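```python
import json
from pathlib import Path


def write_metrics_data(kpis: dict):
    metrics = {
        'metrics': [
            {
                'name': name,
                'numberValue': value,
                'format': "PERCENTAGE" if 'percent' in name else "RAW",
            }
            for name, value in kpis.items()
        ]
    }
    # The original called a custom `myjson.write` helper; the standard json module is used here instead.
    with Path('/mlpipeline-metrics.json').open('w') as f:
        json.dump(metrics, f)
```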

and this is the header row as displayed in the _Run Output_ tab:

```

activeTrips | dropsPerHour | idleHours | kilometresPerDrop | kilometresPerHour | percentReloads | ...

```

which seems to be in alphabetical order. However, if I do:

```

print(list(kpis.keys()))

```

I get this:

```

['dropsPerHour', 'unscheduled', 'activeTrips', 'totalHours', ...]

```

which isn't in alphabetical order, and the two metrics I'm interested in are the first two in this list.
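A quick way to check that the ordering is lost downstream rather than in the metrics file is to serialize the same structure and read it back. The sketch below reuses the dictionary layout from `write_metrics_data` without the file write; the sample KPI values are made up. Python 3.7+ dicts and the `json` module both preserve insertion order, so the names come back in `kpis` order, which suggests the alphabetical order is applied by the UI.

```python
import json


def build_metrics(kpis: dict) -> dict:
    # Same layout as write_metrics_data, returned instead of written to disk.
    return {
        'metrics': [
            {
                'name': name,
                'numberValue': value,
                'format': "PERCENTAGE" if 'percent' in name else "RAW",
            }
            for name, value in kpis.items()
        ]
    }


def names_in_file_order(serialized: str) -> list:
    """Read metric names back in the order they appear in the JSON text."""
    return [m['name'] for m in json.loads(serialized)['metrics']]


# Hypothetical sample values, in the order the pipeline produces them.
kpis = {'dropsPerHour': 4.2, 'unscheduled': 3, 'activeTrips': 17}
serialized = json.dumps(build_metrics(kpis))
print(names_in_file_order(serialized))          # ['dropsPerHour', 'unscheduled', 'activeTrips']
print(sorted(names_in_file_order(serialized)))  # ['activeTrips', 'dropsPerHour', 'unscheduled']
```

The second line mimics what the run list appears to do; if the UI displayed names in file order, the first two columns would be `dropsPerHour` and `unscheduled`.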

**Environment:**

- Kubeflow version: 1.0.4

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/5215/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/5215/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/5130 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/5130/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/5130/comments | https://api.github.com/repos/kubeflow/pipelines/issues/5130/events | https://github.com/kubeflow/pipelines/issues/5130 | 807,199,813 | MDU6SXNzdWU4MDcxOTk4MTM= | 5,130 | Error pulling gcr.io/ml-pipeline/ml-pipeline-gcp:1.4.0 | {

"login": "BorFour",

"id": 25534385,

"node_id": "MDQ6VXNlcjI1NTM0Mzg1",

"avatar_url": "https://avatars.githubusercontent.com/u/25534385?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/BorFour",

"html_url": "https://github.com/BorFour",

"followers_url": "https://api.github.com/users/BorFour/followers",

"following_url": "https://api.github.com/users/BorFour/following{/other_user}",

"gists_url": "https://api.github.com/users/BorFour/gists{/gist_id}",

"starred_url": "https://api.github.com/users/BorFour/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/BorFour/subscriptions",

"organizations_url": "https://api.github.com/users/BorFour/orgs",

"repos_url": "https://api.github.com/users/BorFour/repos",

"events_url": "https://api.github.com/users/BorFour/events{/privacy}",

"received_events_url": "https://api.github.com/users/BorFour/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"Hey @BorFour, sorry about the inconvenience; there was a short period when the image had not been built yet.\r\n\r\nYou should be able to use it now.\r\n\r\nThe best source is the GitHub releases page: if the release notes haven't been appended yet, the release is not finished."

] | "2021-02-12T12:32:56" | "2021-02-25T08:34:33" | "2021-02-25T08:34:33" | NONE | null | I've been using a component that uses the image `gcr.io/ml-pipeline/ml-pipeline-gcp:1.4.0`. When I try to use it, the component's pod gets stuck in `ImagePullBackOff` status and the pipeline eventually times out. I then tried to pull the image locally:

```bash

docker pull gcr.io/ml-pipeline/ml-pipeline-gcp:1.4.0

```

And then I get this error message:

```

Error response from daemon: manifest for gcr.io/ml-pipeline/ml-pipeline-gcp:1.4.0 not found: manifest unknown: Failed to fetch "1.4.0" from request "/v2/ml-pipeline/ml-pipeline-gcp/manifests/1.4.0".

```

I then tried to pull `gcr.io/ml-pipeline/ml-pipeline-gcp:1.3.0` locally and it worked fine. My workaround was to use the same component at repo tag `1.3.0`, which uses that image, but I can't figure out why 1.4.0 doesn't work.

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/5130/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/5130/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/5129 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/5129/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/5129/comments | https://api.github.com/repos/kubeflow/pipelines/issues/5129/events | https://github.com/kubeflow/pipelines/issues/5129 | 807,145,970 | MDU6SXNzdWU4MDcxNDU5NzA= | 5,129 | Support textarea input for pipeline parameter | {

"login": "kim-sardine",

"id": 8458055,

"node_id": "MDQ6VXNlcjg0NTgwNTU=",

"avatar_url": "https://avatars.githubusercontent.com/u/8458055?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/kim-sardine",

"html_url": "https://github.com/kim-sardine",

"followers_url": "https://api.github.com/users/kim-sardine/followers",

"following_url": "https://api.github.com/users/kim-sardine/following{/other_user}",

"gists_url": "https://api.github.com/users/kim-sardine/gists{/gist_id}",

"starred_url": "https://api.github.com/users/kim-sardine/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/kim-sardine/subscriptions",

"organizations_url": "https://api.github.com/users/kim-sardine/orgs",

"repos_url": "https://api.github.com/users/kim-sardine/repos",

"events_url": "https://api.github.com/users/kim-sardine/events{/privacy}",

"received_events_url": "https://api.github.com/users/kim-sardine/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 930476737,

"node_id": "MDU6TGFiZWw5MzA0NzY3Mzc=",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/help%20wanted",

"name": "help wanted",

"color": "db1203",

"default": true,

"description": "The community is welcome to contribute."

},

{

"id": 930619516,

"node_id": "MDU6TGFiZWw5MzA2MTk1MTY=",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/frontend",

"name": "area/frontend",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

},

{

"id": 2157634204,

"node_id": "MDU6TGFiZWwyMTU3NjM0MjA0",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/stale",

"name": "lifecycle/stale",

"color": "bbbbbb",

"default": false,

"description": "The issue / pull request is stale, any activities remove this label."

},

{

"id": 2186355346,

"node_id": "MDU6TGFiZWwyMTg2MzU1MzQ2",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/good%20first%20issue",

"name": "good first issue",

"color": "fef2c0",

"default": true,

"description": ""

}

] | closed | false | null | [] | null | [

"Hi, this is a nice idea! Sounds useful to me.\r\n\r\nContributions on this are welcome!\r\n\r\nYou can find the frontend contribution guide in https://github.com/kubeflow/pipelines/tree/master/frontend,\r\nand the create run page is implemented in https://github.com/kubeflow/pipelines/blob/master/frontend/src/pages/NewRun.tsx",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n",

"This issue has been automatically closed because it has not had recent activity. Please comment \"/reopen\" to reopen it.\n"

] | "2021-02-12T11:07:27" | "2022-04-18T17:27:55" | "2022-04-18T17:27:55" | CONTRIBUTOR | null | ### What steps did you take:

I'd like to get a multi-line bash script from the user and pass it into a `ContainerOp`.

### What happened:

However, the Pipelines frontend currently supports only two kinds of pipeline parameter input: a one-line text input and a JSON editor.

Of course, I can work around this by joining all lines with semicolons, or by using a list type and joining the lines dynamically, but neither is intuitive.

I'm not sure how pipeline code could tell the frontend to use a textarea input, because there is no type hint for a multi-line string.

Maybe we could use a custom type, or use a textarea input by default.
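The list-based workaround could look roughly like the sketch below. The helpers `encode_script_param` and `decode_script_param` are hypothetical and not part of the KFP SDK: the script travels as a JSON list of lines through a single-line parameter and is joined back into a multi-line script inside the component.

```python
import json
from typing import List


def encode_script_param(lines: List[str]) -> str:
    """Serialize script lines as a one-line JSON list that fits a text input."""
    return json.dumps(lines)


def decode_script_param(param: str) -> str:
    """Rebuild the multi-line bash script inside the component."""
    lines = json.loads(param)
    if not isinstance(lines, list) or not all(isinstance(line, str) for line in lines):
        raise ValueError("expected a JSON list of strings")
    return "\n".join(lines)


if __name__ == "__main__":
    param = encode_script_param(["set -e", "echo hello", "ls -la /tmp"])
    print(param)
    # Inside the container, the result could be run with e.g. ["bash", "-c", script].
    script = decode_script_param(param)
    print(script)
```

This keeps the value on one line for the current text input, at the cost of the user editing JSON, which is part of why a native textarea would be friendlier.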

### Environment:

kfp_istio_dex_1.2.0 on Windows Docker Desktop

/kind feature

/area frontend

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/5129/reactions",

"total_count": 3,

"+1": 2,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 1,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/5129/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/5126 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/5126/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/5126/comments | https://api.github.com/repos/kubeflow/pipelines/issues/5126/events | https://github.com/kubeflow/pipelines/issues/5126 | 806,604,398 | MDU6SXNzdWU4MDY2MDQzOTg= | 5,126 | Can not load pipeline | {

"login": "dwu926",

"id": 52472341,

"node_id": "MDQ6VXNlcjUyNDcyMzQx",

"avatar_url": "https://avatars.githubusercontent.com/u/52472341?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/dwu926",

"html_url": "https://github.com/dwu926",

"followers_url": "https://api.github.com/users/dwu926/followers",

"following_url": "https://api.github.com/users/dwu926/following{/other_user}",

"gists_url": "https://api.github.com/users/dwu926/gists{/gist_id}",

"starred_url": "https://api.github.com/users/dwu926/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/dwu926/subscriptions",

"organizations_url": "https://api.github.com/users/dwu926/orgs",

"repos_url": "https://api.github.com/users/dwu926/repos",

"events_url": "https://api.github.com/users/dwu926/events{/privacy}",

"received_events_url": "https://api.github.com/users/dwu926/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 2157634204,

"node_id": "MDU6TGFiZWwyMTU3NjM0MjA0",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/stale",

"name": "lifecycle/stale",

"color": "bbbbbb",

"default": false,

"description": "The issue / pull request is stale, any activities remove this label."

}

] | closed | false | null | [] | null | [

"Can you answer the environment questions? I need more information to scope down the problem",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n",

"This issue has been automatically closed because it has not had recent activity. Please comment \"/reopen\" to reopen it.\n"

] | "2021-02-11T17:41:17" | "2022-04-28T18:00:33" | "2022-04-28T18:00:33" | NONE | null | ### What steps did you take:

When I try to upload a pipeline packaged as `pipeline.tar.gz`, I always get an error and the pipeline cannot be loaded.

### What happened:

Pipeline version creation failed

upstream connect error or disconnect/reset before headers. reset reason: connection termination

### What did you expect to happen:

### Environment:

<!-- Please fill in those that seem relevant. -->

How did you deploy Kubeflow Pipelines (KFP)?

<!-- If you are not sure, here's [an introduction of all options](https://www.kubeflow.org/docs/pipelines/installation/overview/). -->

KFP version: <!-- If you are not sure, build commit shows on bottom of KFP UI left sidenav. -->

KFP SDK version: <!-- Please attach the output of this shell command: $pip list | grep kfp -->

### Anything else you would like to add:

[Miscellaneous information that will assist in solving the issue.]

/kind bug

<!-- Please include labels by uncommenting them to help us better triage issues, choose from the following -->

<!--

// /area frontend

// /area backend

// /area sdk

// /area testing

// /area engprod

-->

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/5126/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/5126/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/5125 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/5125/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/5125/comments | https://api.github.com/repos/kubeflow/pipelines/issues/5125/events | https://github.com/kubeflow/pipelines/issues/5125 | 806,211,828 | MDU6SXNzdWU4MDYyMTE4Mjg= | 5,125 | Upgrade gorm | {

"login": "Bobgy",

"id": 4957653,

"node_id": "MDQ6VXNlcjQ5NTc2NTM=",

"avatar_url": "https://avatars.githubusercontent.com/u/4957653?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Bobgy",

"html_url": "https://github.com/Bobgy",

"followers_url": "https://api.github.com/users/Bobgy/followers",

"following_url": "https://api.github.com/users/Bobgy/following{/other_user}",

"gists_url": "https://api.github.com/users/Bobgy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Bobgy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Bobgy/subscriptions",

"organizations_url": "https://api.github.com/users/Bobgy/orgs",

"repos_url": "https://api.github.com/users/Bobgy/repos",

"events_url": "https://api.github.com/users/Bobgy/events{/privacy}",

"received_events_url": "https://api.github.com/users/Bobgy/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 930619513,

"node_id": "MDU6TGFiZWw5MzA2MTk1MTM=",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/priority/p1",

"name": "priority/p1",

"color": "cb03cc",

"default": false,

"description": ""

},

{

"id": 1682717397,

"node_id": "MDU6TGFiZWwxNjgyNzE3Mzk3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/process",

"name": "kind/process",

"color": "2515fc",

"default": false,

"description": ""

},

{

"id": 2157634204,

"node_id": "MDU6TGFiZWwyMTU3NjM0MjA0",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/stale",

"name": "lifecycle/stale",

"color": "bbbbbb",

"default": false,

"description": "The issue / pull request is stale, any activities remove this label."

}

] | closed | false | null | [] | null | [

"@Bobgy \r\nBy the way, I noticed that we are not using the main gorm repository ```\"gorm.io/gorm\"```, which will lead to conflicts in the future. It should probably become a future refactoring task for the KFP team. \r\n\r\nFor example, the way to add a composite unique index is shown below, but this gave me a syntax error with the gorm repository currently in use, ```\"github.com/jinzhu/gorm\"```.\r\n\r\n```golang\r\npackage main\r\n\r\nimport (\r\n\t\"gorm.io/driver/mysql\"\r\n\t\"gorm.io/gorm\"\r\n)\r\n\r\n// ALTER TABLE dev.pipelines ADD UNIQUE `unique_index`(`Name`, `Namespace`);\r\ntype Pipeline struct {\r\n\tgorm.Model\r\n\tNamespace string `gorm:\"column:Namespace; size:63; default:''; index:idx_name_ns, unique\"`\r\n\tName string `gorm:\"column:Name; not null; index:idx_name_ns\"`\r\n}\r\n\r\nfunc main() {\r\n\t// db, err := gorm.Open(sqlite.Open(\"test.db\"), &gorm.Config{})\r\n\tdsn := \"root:@tcp(localhost:3306)/dev?charset=utf8mb4&parseTime=True&loc=Local\"\r\n\tdb, err := gorm.Open(mysql.Open(dsn), &gorm.Config{})\r\n\tif err != nil {\r\n\t\tpanic(\"failed to connect database\")\r\n\t}\r\n\r\n\t// Migrate the schema\r\n\tdb.AutoMigrate(&Pipeline{})\r\n\r\n\t// Create\r\n\tdb.Create(&Pipeline{Namespace: \"admin\", Name: \"p2\"})\r\n}\r\n```",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n",

"This issue has been automatically closed because it has not had recent activity. Please comment \"/reopen\" to reopen it.\n"

] | "2021-02-11T09:23:12" | "2022-04-18T17:27:59" | "2022-04-18T17:27:59" | CONTRIBUTOR | null |

@capri-xiyue The unique index on (Namespace, Name) is created in the client manager. We can't do this as part of the Pipeline struct definition because the KFP repository uses an older version of the gorm package, ```"github.com/jinzhu/gorm"```, which does not support it; the refactoring needed for this is out of scope.

_Originally posted by @maganaluis in https://github.com/kubeflow/pipelines/pull/4835#discussion_r571721941_ | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/5125/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/5125/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/5124 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/5124/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/5124/comments | https://api.github.com/repos/kubeflow/pipelines/issues/5124/events | https://github.com/kubeflow/pipelines/issues/5124 | 805,513,534 | MDU6SXNzdWU4MDU1MTM1MzQ= | 5,124 | Data Versioning with Kubeflow | {

"login": "Vindhya-Singh",

"id": 20332927,

"node_id": "MDQ6VXNlcjIwMzMyOTI3",

"avatar_url": "https://avatars.githubusercontent.com/u/20332927?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Vindhya-Singh",

"html_url": "https://github.com/Vindhya-Singh",

"followers_url": "https://api.github.com/users/Vindhya-Singh/followers",

"following_url": "https://api.github.com/users/Vindhya-Singh/following{/other_user}",

"gists_url": "https://api.github.com/users/Vindhya-Singh/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Vindhya-Singh/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Vindhya-Singh/subscriptions",

"organizations_url": "https://api.github.com/users/Vindhya-Singh/orgs",

"repos_url": "https://api.github.com/users/Vindhya-Singh/repos",

"events_url": "https://api.github.com/users/Vindhya-Singh/events{/privacy}",

"received_events_url": "https://api.github.com/users/Vindhya-Singh/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1682717392,

"node_id": "MDU6TGFiZWwxNjgyNzE3Mzky",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/question",

"name": "kind/question",

"color": "2515fc",

"default": false,

"description": ""

}

] | open | false | null | [] | null | [

"Hi @VindhyaSRajan!\r\n\r\nDid you look into https://github.com/pachyderm/pachyderm? I think they also have some integration with KFP.\r\nKFP is very flexible to work with any external system by components.\r\n\r\nAny feedback on gaps?",

"Can KFP be set to take Kubernetes snapshots after each step and then pass the name of that snapshot as the `data_source` for the next step? If not, I think that is a solid place to start for building data versioning into KFP. I have been working on code for Kale that, once working, should create pipelines that take a snapshot after each step: https://github.com/kubeflow-kale/kale/pulls.",

"That's an interesting idea, how do you envision that being a 1st party feature? Does it need to?",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n",

"Sorry, I completely missed your response. I’d need to think a bit about how this could be a first party feature, but I do think it is something people would be interested in. It would allow for data lineage which is needed in some environments (like research) and could help with debugging a pipeline. \r\n\r\nAm I right in thinking the implementation for a feature like this would greatly differ between KFP V1 and V2?",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n",

"Hey, just wanted to comment on this to ask whether there's been any progress. I have a team of researchers, and we're interested in using something like DVC in KFP.",

"+1",

"+1",

"+1",

"+1",

"Any news or comment on this?"

] | "2021-02-10T13:28:33" | "2023-03-10T17:14:53" | null | NONE | null | Hello,

I am working on setting up an in-house ML infrastructure for my company and we decided to go with Kubeflow. We need to ensure that the pipelines have the provision for data versioning as well. I understand from the official documentation of Kubeflow that it is possible with Rok Data Management. However, we are interested in exploring other options as well. Thus, my question comes in two parts:

1. Is there any alternative to using Rok for data versioning with Kubeflow pipelines?

2. Is it possible to use DVC for data versioning with Kubeflow?

Thanks :) | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/5124/reactions",

"total_count": 5,

"+1": 5,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/5124/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/5123 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/5123/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/5123/comments | https://api.github.com/repos/kubeflow/pipelines/issues/5123/events | https://github.com/kubeflow/pipelines/issues/5123 | 805,484,972 | MDU6SXNzdWU4MDU0ODQ5NzI= | 5,123 | [Multi User] failed to call 'kfp.get_run' in in-cluster Jupyter notebook | {

"login": "anneum",

"id": 60262966,

"node_id": "MDQ6VXNlcjYwMjYyOTY2",

"avatar_url": "https://avatars.githubusercontent.com/u/60262966?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/anneum",

"html_url": "https://github.com/anneum",

"followers_url": "https://api.github.com/users/anneum/followers",

"following_url": "https://api.github.com/users/anneum/following{/other_user}",

"gists_url": "https://api.github.com/users/anneum/gists{/gist_id}",

"starred_url": "https://api.github.com/users/anneum/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/anneum/subscriptions",

"organizations_url": "https://api.github.com/users/anneum/orgs",

"repos_url": "https://api.github.com/users/anneum/repos",

"events_url": "https://api.github.com/users/anneum/events{/privacy}",

"received_events_url": "https://api.github.com/users/anneum/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 1682627575,

"node_id": "MDU6TGFiZWwxNjgyNjI3NTc1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/misc",

"name": "kind/misc",

"color": "c2e0c6",

"default": false,

"description": "types beside feature and bug"

}

] | closed | false | null | [] | null | [

"I checked whether the run had been correctly inserted into the `mlpipeline` database. I see both the run and the correct namespace.\r\n```\r\nkubectl -nkubeflow exec -it mysql-7694c6b8b7-nxn2h -- bash\r\nroot@mysql-7694c6b8b7-nxn2h:/# mysql\r\nmysql> use mlpipeline;\r\nmysql> select uuid, DisplayName, namespace, ServiceAccount from run_details where uuid = 'e5b4e73c-2709-41b0-af75-f9b9dcb372f2';\r\n+--------------------------------------+---------------------------------+-------------+----------------+\r\n| uuid                                 | DisplayName                     | namespace   | ServiceAccount |\r\n+--------------------------------------+---------------------------------+-------------+----------------+\r\n| e5b4e73c-2709-41b0-af75-f9b9dcb372f2 | candies-sharing-0s70d_run-13csv | mynamespace | default-editor |\r\n+--------------------------------------+---------------------------------+-------------+----------------+\r\n```",

"Long term solution should be https://github.com/kubeflow/pipelines/issues/5138"

] | "2021-02-10T12:49:34" | "2021-02-26T01:08:10" | "2021-02-26T01:08:10" | NONE | null | ### What steps did you take:

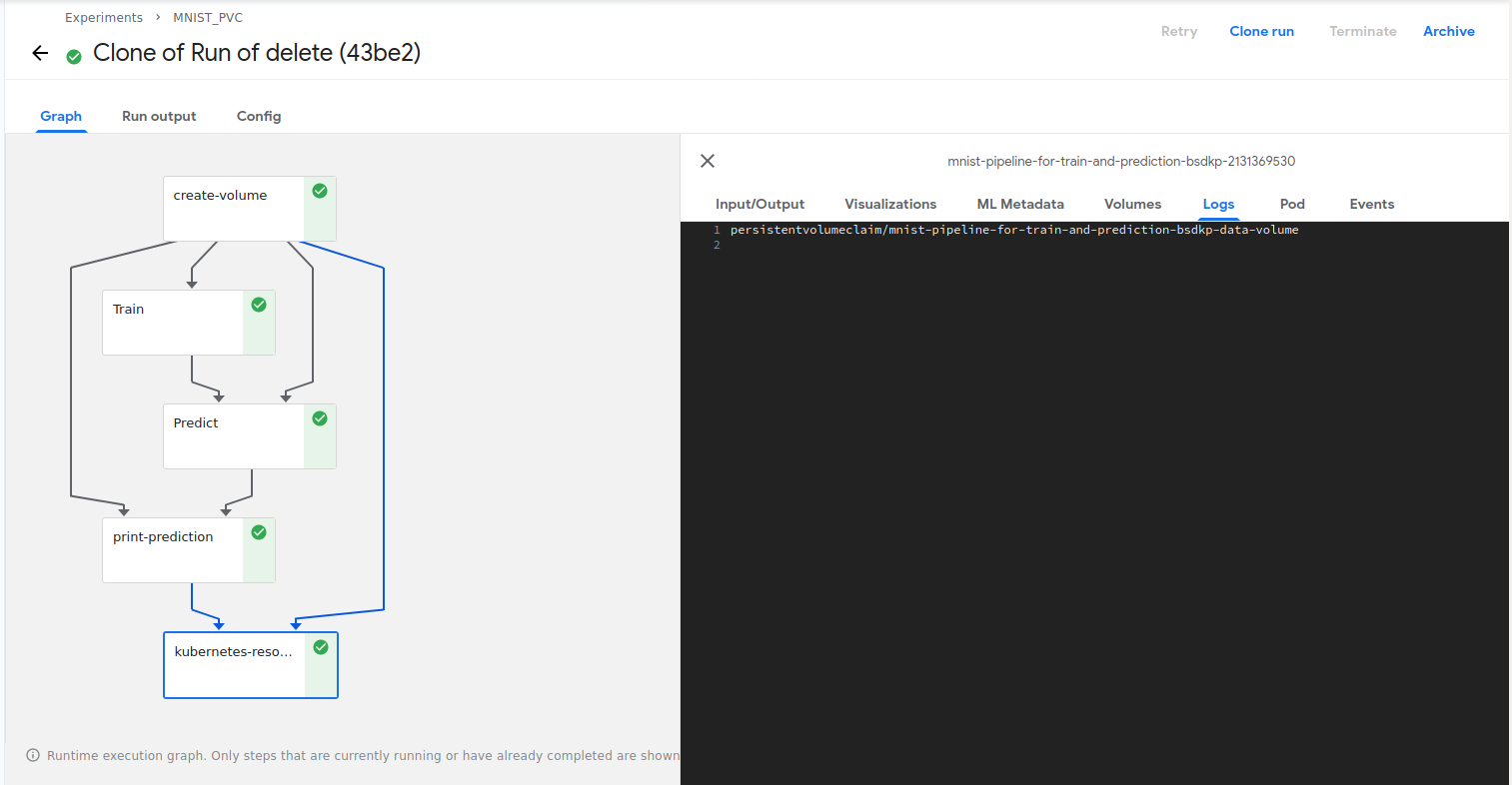

I have a notebook server in a multi-user environment with Kale.

After fixing several bugs based on community comments (see below), I ran into a new issue.

Added `ServiceRoleBinding` and `EnvoyFilter` as mentioned in https://github.com/kubeflow/pipelines/issues/4440#issuecomment-733702980

```
export NAMESPACE=mynamespace
export NOTEBOOK=mynotebook
export USER=myuser@domain.com

cat > ./envoy_filter.yaml << EOM
apiVersion: rbac.istio.io/v1alpha1
kind: ServiceRoleBinding
metadata:
  name: bind-ml-pipeline-nb-${NAMESPACE}
  namespace: kubeflow
spec:
  roleRef:
    kind: ServiceRole
    name: ml-pipeline-services
  subjects:
  - properties:
      source.principal: cluster.local/ns/${NAMESPACE}/sa/default-editor
---
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: add-header
  namespace: ${NAMESPACE}
spec:
  configPatches:
  - applyTo: VIRTUAL_HOST
    match:
      context: SIDECAR_OUTBOUND
      routeConfiguration:
        vhost:
          name: ml-pipeline.kubeflow.svc.cluster.local:8888
          route:
            name: default
    patch:
      operation: MERGE
      value:
        request_headers_to_add:
        - append: true
          header:
            key: kubeflow-userid
            value: ${USER}
  workloadSelector:
    labels:
      notebook-name: ${NOTEBOOK}
EOM
```

Added the namespace to `.config/kfp/context.json` as mentioned in https://github.com/kubeflow-kale/kale/issues/210#issuecomment-727018461

Added `RoleBinding` as mentioned in https://github.com/kubeflow-kale/kale/issues/210#issuecomment-697231513

```

cat <<EOF | kubectl apply -f -

apiVersion: rbac.authorization.k8s.io/v1