repo

stringclasses 147

values | number

int64 1

172k

| title

stringlengths 2

476

| body

stringlengths 0

5k

| url

stringlengths 39

70

| state

stringclasses 2

values | labels

listlengths 0

9

| created_at

timestamp[ns, tz=UTC]date 2017-01-18 18:50:08

2026-01-06 07:33:18

| updated_at

timestamp[ns, tz=UTC]date 2017-01-18 19:20:07

2026-01-06 08:03:39

| comments

int64 0

58

⌀ | user

stringlengths 2

28

|

|---|---|---|---|---|---|---|---|---|---|---|

huggingface/datasets

| 4,101

|

How can I download only the train and test split for full numbers using load_dataset()?

|

How can I download only the train and test split for full numbers using load_dataset()?

I do not need the extra split and it will take 40 mins just to download in Colab. I have very short time in hand. Please help.

|

https://github.com/huggingface/datasets/issues/4101

|

open

|

[

"enhancement"

] | 2022-04-05T16:00:15Z

| 2022-04-06T13:09:01Z

| 1

|

Nakkhatra

|

pytorch/TensorRT

| 960

|

❓ [Question] Problem with cudnn dependency when compiling plugins on windows?

|

## ❓ Question

<!-- Your question -->

I am trying to compile a windows dll for torch-tensorRT, however I get the following traceback:

ERROR: C:/users/48698/source/libraries/torch-tensorrt-1.0.0/core/plugins/BUILD:10:11: Compiling core/plugins/register_plugins.cpp failed: undeclared inclusion(s) in rule '//core/plugins:torch_tensorrt_plugins':

this rule is missing dependency declarations for the following files included by 'core/plugins/register_plugins.cpp':

'external/cuda/cudnn.h'

'external/cuda/cudnn_version.h'

'external/cuda/cudnn_ops_infer.h'

'external/cuda/cudnn_ops_train.h'

'external/cuda/cudnn_adv_infer.h'

'external/cuda/cudnn_adv_train.h'

'external/cuda/cudnn_cnn_infer.h'

'external/cuda/cudnn_cnn_train.h'

'external/cuda/cudnn_backend.h'

which is weird cause I do have the cudnn included, and can find the files under the C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.6 path

I am new to Bazel, is there another way I could link those?

## What you have already tried

<!-- A clear and concise description of what you have already done. -->

Followed this guide: https://github.com/NVIDIA/Torch-TensorRT/issues/856 to a t. I think I am linking cudnn in a weird way?

## Environment

> Build information about Torch-TensorRT can be found by turning on debug messages

- PyTorch Version (e.g., 1.0): 1.11.0

- OS (e.g., Linux): windows

- How you installed PyTorch (`conda`, `pip`, `libtorch`, source): libtorch

- Build command you used (if compiling from source):

- Are you using local sources or building from archives: local

- Python version: 3.9

- CUDA version: 11.6

- GPU models and configuration: 3070

- Any other relevant information:

## Additional context

<!-- Add any other context about the problem here. -->

My torch-tensorrt-1.0.0/core/plugins/BUILD is as follows:

```package(default_visibility = ["//visibility:public"])

config_setting(

name = "use_pre_cxx11_abi",

values = {

"define": "abi=pre_cxx11_abi",

}

)

cc_library(

name = "torch_tensorrt_plugins",

hdrs = [

"impl/interpolate_plugin.h",

"impl/normalize_plugin.h",

"plugins.h",

],

srcs = [

"impl/interpolate_plugin.cpp",

"impl/normalize_plugin.cpp",

"register_plugins.cpp",

],

deps = [

"@tensorrt//:nvinfer",

"@tensorrt//:nvinferplugin",

"//core/util:prelude",

] + select({

":use_pre_cxx11_abi": ["@libtorch_pre_cxx11_abi//:libtorch"],

"//conditions:default": ["@libtorch//:libtorch"],

}),

alwayslink = True,

copts = [

"-pthread"

],

linkopts = [

"-lpthread",

]

)

load("@rules_pkg//:pkg.bzl", "pkg_tar")

pkg_tar(

name = "include",

package_dir = "core/plugins/",

srcs = ["plugins.h"],

)

pkg_tar(

name = "impl_include",

package_dir = "core/plugins/impl",

srcs = ["impl/interpolate_plugin.h",

"impl/normalize_plugin.h"],

)

I could attach more build files if needed, but everything apart from the paths is the same as in the referenced issue.

|

https://github.com/pytorch/TensorRT/issues/960

|

closed

|

[

"question",

"channel: windows"

] | 2022-04-04T00:38:36Z

| 2022-09-02T17:51:14Z

| null |

pepinu

|

huggingface/datasets

| 4,074

|

Error in google/xtreme_s dataset card

|

**Link:** https://huggingface.co/datasets/google/xtreme_s

Not a big deal but Hungarian is considered an Eastern European language, together with Serbian, Slovak, Slovenian (all correctly categorized; Slovenia is mostly to the West of Hungary, by the way).

|

https://github.com/huggingface/datasets/issues/4074

|

closed

|

[

"documentation",

"dataset bug"

] | 2022-03-31T18:07:45Z

| 2022-04-01T08:12:56Z

| 1

|

wranai

|

pytorch/TensorRT

| 947

|

hown to compile model for multi inputs?

|

1)My model : out1, out2 = model(input1, input2)

2)How should i set compile settings, just like this:

trt_ts_module = torch_tensorrt.compile(torch_script_module,

inputs = [example_tensor, # Provide example tensor for input shape or...

torch_tensorrt.Input( # Specify input object with shape and dtype

min_shape=[1, 3, 224, 224],

opt_shape=[1, 3, 512, 512],

max_shape=[1, 3, 1024, 1024],

# For static size shape=[1, 3, 224, 224]

dtype=torch.half) # Datatype of input tensor. Allowed options torch.(float|half|int8|int32|bool)

],

enabled_precisions = {torch.half}, # Run with FP16)

|

https://github.com/pytorch/TensorRT/issues/947

|

closed

|

[

"question"

] | 2022-03-31T08:00:41Z

| 2022-03-31T20:29:39Z

| null |

shuaizzZ

|

pytorch/data

| 339

|

Build the nightlies a little earlier

|

`torchdata` builds the nightlies at 15:00 UTC+0

https://github.com/pytorch/data/blob/198cffe7e65a633509ca36ad744f7c3059ad1190/.github/workflows/nightly_release.yml#L6

and publishes them roughly 30 minutes later. The `torchvision` nightlies are build at 11:00 UTC+0 and also published roughly 30 minutes later.

This creates a 4 hour window where the `torchvision` tests that pull in `torchdata` run on outdated nightlies. For example see [this CI run](https://app.circleci.com/pipelines/github/pytorch/vision/16169/workflows/652e06c3-c941-4520-b6ee-f69b2348dd57/jobs/1309833):

In the step "Install PyTorch from the nightly releases" we have

```

Installing collected packages: typing-extensions, torch

Successfully installed torch-1.12.0.dev20220329+cpu typing-extensions-4.1.1

```

Two steps later in "Install torchdata from nightly releases" we have

```

Installing collected packages: torch, torchdata

Attempting uninstall: torch

Found existing installation: torch 1.12.0.dev20220329+cpu

Uninstalling torch-1.12.0.dev20220329+cpu:

Successfully uninstalled torch-1.12.0.dev20220329+cpu

Successfully installed torch-1.12.0.dev20220328+cpu torchdata-0.4.0.dev20220328

```

Was the release schedule deliberately chosen this way? If not can we maybe move it to four hours earlier?

|

https://github.com/meta-pytorch/data/issues/339

|

closed

|

[] | 2022-03-29T15:42:24Z

| 2022-03-29T19:24:52Z

| 5

|

pmeier

|

pytorch/torchx

| 441

|

[Req] LSF scheduler support

|

## Description

LSF scheduler support

Does torchx team have plan to support LSF scheduler?

Or is there any guide for extension, I would make PR.

## Motivation/Background

Thanks for torchx utils. We can target various scheduler by configure torchxconfig.

## Detailed Proposal

It would be better to support LSF scheduler.

|

https://github.com/meta-pytorch/torchx/issues/441

|

open

|

[

"enhancement",

"module: runner",

"scheduler-request"

] | 2022-03-29T04:47:30Z

| 2022-10-10T22:27:47Z

| 6

|

ckddls1321

|

pytorch/data

| 335

|

[BE] Unify `buffer_size` across datapipes

|

The `buffer_size` parameter is currently fairly inconsistent across datapipes:

| name | default `buffer_size` | infinite `buffer_size` | warn on infinite |

|--------------------|-------------------------|--------------------------|--------------------|

| Demultiplexer | 1e3 | -1 | yes |

| Forker | 1e3 | -1 | yes |

| Grouper | 1e4 | N/A | N/A |

| Shuffler | 1e4 | N/A | N/A |

| MaxTokenBucketizer | 1e3 | N/A | N/A |

| UnZipper | 1e3 | -1 | yes |

| IterKeyZipper | 1e4 | None | no |

Here are my suggestion on how to unify this:

- Use the same default `buffer_size` everywhere. It makes little difference whether we use `1e3` or `1e4` given that it is tightly coupled with the data we know nothing about. Given today's hardware / datasets, I would go with 1e4, but no strong opinion.

- Give every datapipe with buffer the ability for an infinite buffer. Otherwise users will just be annoyed and use a workaround. For example, `torchvision` simply uses [`INFINITE_BUFFER_SIZE = 1_000_000_000`](https://github.com/pytorch/vision/blob/1db8795733b91cd6dd62a0baa7ecbae6790542bc/torchvision/prototype/datasets/utils/_internal.py#L42-L43), which for all intents and purposes lives up to its name. Which sentinel we use, i.e. `-1` or `None`, again makes little difference. I personally would use `None` to have a clear separation, but again no strong opinion other than being consistent.

- Do not warn on infinite buffer sizes. Especially since infinite buffer is not the default behavior, the user is expected to know what they are doing when setting `buffer_size=None`. I'm all for having a warning like this in the documentation, but I'm strongly against a runtime warning. For example, `torchvision` datasets need to use an infinite buffer everywhere. Thus, by using the infinite buffer sentinel, users would always get runtime warnings although neither them nor we did anything wrong.

|

https://github.com/meta-pytorch/data/issues/335

|

open

|

[

"Better Engineering"

] | 2022-03-28T17:36:32Z

| 2022-07-06T18:44:05Z

| 8

|

pmeier

|

huggingface/datasets

| 4,041

|

Add support for IIIF in datasets

|

This is a feature request for support for IIIF in `datasets`. Apologies for the long issue. I have also used a different format to the usual feature request since I think that makes more sense but happy to use the standard template if preferred.

## What is [IIIF](https://iiif.io/)?

IIIF (International Image Interoperability Framework)

> is a set of open standards for delivering high-quality, attributed digital objects online at scale. It’s also an international community developing and implementing the IIIF APIs. IIIF is backed by a consortium of leading cultural institutions.

The tl;dr is that IIIF provides various specifications for implementing useful functionality for:

- Institutions to make available images for various use cases

- Users to have a consistent way of interacting/requesting these images

- For developers to have a common standard for developing tools for working with IIIF images that will work across all institutions that implement a particular IIIF standard (for example the image viewer for the BNF can also work for the Library of Congress if they both use IIIF).

Some institutions that various levels of support IIF include: The British Library, Internet Archive, Library of Congress, Wikidata. There are also many smaller institutions that have IIIF support. An incomplete list can be found here: https://iiif.io/guides/finding_resources/

## IIIF APIs

IIIF consists of a number of APIs which could be integrated with datasets. I think the most obvious candidate for inclusion would be the [Image API](https://iiif.io/api/image/3.0/)

### IIIF Image API

The Image API https://iiif.io/api/image/3.0/ is likely the most suitable first candidate for integration with datasets. The Image API offers a consistent protocol for requesting images via a URL:

```{scheme}://{server}{/prefix}/{identifier}/{region}/{size}/{rotation}/{quality}.{format}```

A concrete example of this:

```https://stacks.stanford.edu/image/iiif/hg676jb4964%2F0380_796-44/full/full/0/default.jpg```

As you can see the scheme offers a number of options that can be specified in the URL, for example, size. Using the example URL we return:

We can change the size to request a size of 250 by 250, this is done by changing the size from `full` to `250,250` i.e. switching the URL to `https://stacks.stanford.edu/image/iiif/hg676jb4964%2F0380_796-44/full/250,250/0/default.jpg`

We can also request the image with max width 250, max height 250 whilst maintaining the aspect ratio using `!w,h`. i.e. change the url to `https://stacks.stanford.edu/image/iiif/hg676jb4964%2F0380_796-44/full/!250,250/0/default.jpg`

A full overview of the options for size can be found here: https://iiif.io/api/image/3.0/#42-size

## Why would/could this be useful for datasets?

There are a few reasons why support for the IIIF Image API could be useful. Broadly the ability to have more control over how an image is returned from a server is useful for many ML workflows:

- images can be requested in the right size, this prevents having to download/stream large images when the actual desired size is much smaller

- can select a subset of an image: it is possible to select a sub-region of an image, this could be useful for example when you already have a bounding box for a subset of an image and then want to use this subset of an image for another task. For example, https://github.com/Living-with-machines/nnanno uses IIIF to request parts of a newspaper image that have been detected as 'photograph', 'illustration' etc for downstream use.

- options for quality, rotation, the format can all be encoded in the URL request.

These may become particularly useful when pre-training models on large image datasets where the cost of downloading images with 1600 pixel width when you actually want 240 has a larger impact.

## What could this look like in datasets?

I think there are various ways in which support for IIIF could potentially be included in `datasets`. These suggestions aren't fully fleshed out but hopefully, give a sense of possible approaches that match existing `datasets` methods in their approach.

### Use through datasets scripts

Loading images via URL is already supported. There are a few possible 'extras' that could be included when using IIIF. One option is to leverage the IIIF protocol in datasets scripts, i.e. the dataset script can expose the IIIF options via the dataset script:

```python

ds = load_dataset("iiif_dataset", image_size="250,250", fmt="jpg")

```

This is already possible. The approach to parsing the IIIF URLs would be left to the person creating the dataset script.

### Sup

|

https://github.com/huggingface/datasets/issues/4041

|

open

|

[

"enhancement"

] | 2022-03-28T15:19:25Z

| 2022-04-05T18:20:53Z

| 1

|

davanstrien

|

pytorch/vision

| 5,686

|

Question on segmentation code

|

### 🚀 The feature

Hello.

I want to ask you a simple question.

I'm not sure if it's right to post a question in this 'Feature request' category.

In train.py code in the reference/segmentation, the get_dataset function is set the coco dataset classes 21.

Why the number of classes is 21?

Is it wrong to set the number of classes to 91 which is the number of classes in the coco dataset?

Here is the reference code.

```python

def get_dataset(dir_path, name, image_set, transform):

def sbd(*args, **kwargs):

return torchvision.datasets.SBDataset(*args, mode="segmentation", **kwargs)

paths = {

"voc": (dir_path, torchvision.datasets.VOCSegmentation, 21),

"voc_aug": (dir_path, sbd, 21),

"coco": (dir_path, get_coco, 21),

}

p, ds_fn, num_classes = paths[name]

ds = ds_fn(p, image_set=image_set, transforms=transform)

return ds, num_classes

cc @vfdev-5 @datumbox @YosuaMichael

|

https://github.com/pytorch/vision/issues/5686

|

closed

|

[

"question",

"topic: semantic segmentation"

] | 2022-03-28T06:05:39Z

| 2022-03-28T07:29:35Z

| null |

kcs6568

|

pytorch/torchx

| 435

|

[torchx/examples] Remove usages of custom components in app/pipeline examples

|

## 📚 Documentation

Since we are making TorchX focused on Job launching and less about authoring components and AppDefs, we need to adjust our app and pipeline examples to demonstrate running the applications with the builtin `dist.ddp` and `utils.python` components rather than showing how to author a component for the application.

For 90% of the launch patterns `dist.ddp` (multi-homogeneous node) and `utils.python` (single node) is sufficient.

There are a couple of things we need to do:

1. Delete `torchx/example/apps/**/component.py`

2. For each application example show how to run it with the existing `dist.ddp` or `utils.python` builtin

3. Link a section on how to copy existing components and further customizing (e.g. `torchx builtins --print dist.ddp > custom.py`)

4. Make adjustments to the integration tests to test the example applications using builtin components (as advertised)

5. Do 1-4 for the pipeline examples too.

|

https://github.com/meta-pytorch/torchx/issues/435

|

closed

|

[

"documentation"

] | 2022-03-25T23:34:26Z

| 2022-05-25T22:52:40Z

| 0

|

kiukchung

|

huggingface/datasets

| 4,027

|

ElasticSearch Indexing example: TypeError: __init__() missing 1 required positional argument: 'scheme'

|

## Describe the bug

I am following the example in the documentation for elastic search step by step (on google colab): https://huggingface.co/docs/datasets/faiss_es#elasticsearch

```

from datasets import load_dataset

squad = load_dataset('crime_and_punish', split='train[:1000]')

```

When I run the line:

`squad.add_elasticsearch_index("context", host="localhost", port="9200")`

I get the error:

`TypeError: __init__() missing 1 required positional argument: 'scheme'`

## Expected results

No error message

## Actual results

```

TypeError Traceback (most recent call last)

[<ipython-input-23-9205593edef3>](https://localhost:8080/#) in <module>()

1 import elasticsearch

----> 2 squad.add_elasticsearch_index("text", host="localhost", port="9200")

6 frames

[/usr/local/lib/python3.7/dist-packages/elasticsearch/_sync/client/utils.py](https://localhost:8080/#) in host_mapping_to_node_config(host)

209 options["path_prefix"] = options.pop("url_prefix")

210

--> 211 return NodeConfig(**options) # type: ignore

212

213

TypeError: __init__() missing 1 required positional argument: 'scheme'

```

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version: 2.2.0

- Platform: Linux, Google Colab

- Python version: Google Colab (probably 3.7)

- PyArrow version: ?

|

https://github.com/huggingface/datasets/issues/4027

|

closed

|

[

"bug",

"duplicate"

] | 2022-03-25T16:22:28Z

| 2022-04-07T10:29:52Z

| 2

|

MoritzLaurer

|

pytorch/tutorials

| 1,872

|

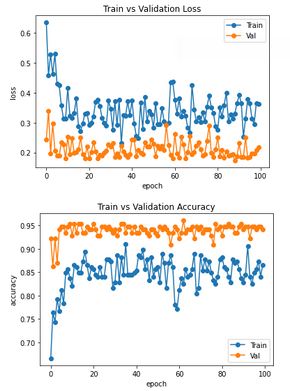

Transfer learning tutorial: Loss and Accuracy curves the wrong way

|

Hey,

I have a question concerning the transfer learning tutorial (https://pytorch.org/tutorials/beginner/transfer_learning_tutorial.html).

For a few days, I've been trying to figure out why the validation and training curves are reversed there. By this, I mean that for general neural networks the training curves are always better than the validation curves (lower loss and higher accuracy). However, as in the tutorial itself, this is not the case (see also values in the tutorial). To make the whole thing clearer, I also ran the tutorial for 100 epochs and plotted the accuracy and loss for training and validation. The graph looks like this:

Unfortunately, I haven't found a real reason for this yet.

It shouldn't be the dataset itself (I tried the same with other data). The only thing is the BatchNorm, which is different for training and validation. But I also suspect that this is not the reason for this big difference and the changing role. In past projects also on neural networks, with batch normalization at least I didn't have these reversed roles of validation and training.

Has anybody an idea, why this happens here and why it has not that effect using other neural networks?

cc @suraj813

|

https://github.com/pytorch/tutorials/issues/1872

|

closed

|

[

"question",

"intro"

] | 2022-03-25T15:23:39Z

| 2023-03-06T21:50:25Z

| null |

AlexanderGeng

|

pytorch/pytorch

| 74,741

|

[FSDP] How to use fsdp in GPT model in Megatron-LM

|

### 🚀 The feature, motivation and pitch

Are there any examples similar to DeepSpeed that can experience the fsdp function of pytorch. It would be nice to provide the GPT model in Megatron-LM.

### Alternatives

I hope to provide examples of benchmarking DeepSpeed to facilitate the in-depth use of the fsdp function.

### Additional context

_No response_

|

https://github.com/pytorch/pytorch/issues/74741

|

closed

|

[] | 2022-03-25T08:30:05Z

| 2022-03-25T21:12:04Z

| null |

Baibaifan

|

pytorch/text

| 1,662

|

How to install LTS (0.9.2)?

|

## ❓ Questions and Help

**Description**

I've found that my PyTorch version is 1.8.2, so according to https://github.com/pytorch/text/#installation , the torchtext version is 0.9.2:

But as I use `conda install -c pytorch torchtext` to install, the version I installed defaultly is 0.6.0. So I wander, is this version also OK for me as the torchtext version 0.9.2 is the highest version I can install, or it's not OK as I can only install 0.9.2 version?

|

https://github.com/pytorch/text/issues/1662

|

closed

|

[] | 2022-03-25T08:12:03Z

| 2024-03-11T00:55:30Z

| null |

PolarisRisingWar

|

pytorch/pytorch

| 74,740

|

How to export onnx with dynamic batch size for models with multiple outputs?

|

## Issue description

I want to export my model to onnx. Following is my code:

torch.onnx._export(

model,

dummy_input,

args.output_name,

input_names=[args.input],

output_names=args.output,

opset_version=args.opset,

)

It works well. But I want to export it with dynamic batch size. So I try this:

torch.onnx._export(

model,

dummy_input,

args.output_name,

input_names=[args.input],

output_names=args.output,

opset_version=args.opset,

dynamic_axes={'input_tensor' : {0 : 'batch_size'},

'classes' : {0 : 'batch_size'},

'boxes' : {0 : 'batch_size'},

'scores' : {0 : 'batch_size'},}

)

It crashed with following message:

``2022-03-25 13:38:11.201 | ERROR | main::114 - An error has been caught in function '', process 'MainProcess' (1376540), thread 'MainThread' (139864366814016):

Traceback (most recent call last):

File "tools/export_onnx.py", line 114, in

main()

└ <function main at 0x7f3434447f70>

File "tools/export_onnx.py", line 107, in main

model_simp, check = simplify(onnx_model)

│ └ ir_version: 7

│ producer_name: "pytorch"

│ producer_version: "1.10"

│ graph {

│ node {

│ output: "607"

│ name: "Constant_0"

│ ...

└ <function simplify at 0x7f3417604dc0>

File "/home/xyz/anaconda3/envs/yolox/lib/python3.8/site-packages/onnxsim/onnx_simplifier.py", line 483, in simplify

model = fixed_point(model, infer_shapes_and_optimize, constant_folding)

│ │ │ └ <function simplify..constant_folding at 0x7f34175d5f70>

│ │ └ <function simplify..infer_shapes_and_optimize at 0x7f342715c160>

│ └ ir_version: 7

│ producer_name: "pytorch"

│ producer_version: "1.10"

│ graph {

│ node {

│ output: "607"

│ name: "Constant_0"

│ ...

└ <function fixed_point at 0x7f3417604d30>

File "/home/xyz/anaconda3/envs/yolox/lib/python3.8/site-packages/onnxsim/onnx_simplifier.py", line 384, in fixed_point

x = func_b(x)

│ └ ir_version: 7

│ producer_name: "pytorch"

│ producer_version: "1.10"

│ graph {

│ node {

│ input: "input_tensor"

│ input: "608"

│ ...

└ <function simplify..constant_folding at 0x7f34175d5f70>

File "/home/xyz/anaconda3/envs/yolox/lib/python3.8/site-packages/onnxsim/onnx_simplifier.py", line 473, in constant_folding

res = forward_for_node_outputs(model,

│ └ ir_version: 7

│ producer_name: "pytorch"

│ producer_version: "1.10"

│ graph {

│ node {

│ input: "input_tensor"

│ input: "608"

│ ...

└ <function forward_for_node_outputs at 0x7f34176048b0>

File "/home/xyz/anaconda3/envs/yolox/lib/python3.8/site-packages/onnxsim/onnx_simplifier.py", line 229, in forward_for_node_outputs

res = forward(model,

│ └ ir_version: 7

│ producer_name: "pytorch"

│ producer_version: "1.10"

│ graph {

│ node {

│ input: "input_tensor"

│ input: "608"

│ ...

└ <function forward at 0x7f3417604820>

File "/home/xyz/anaconda3/envs/yolox/lib/python3.8/site-packages/onnxsim/onnx_simplifier.py", line 210, in forward

inputs.update(generate_specific_rand_input(model, {name: shape}))

│ │ │ │ │ └ [0, 3, 640, 640]

│ │ │ │ └ 'input_tensor'

│ │ │ └ ir_version: 7

│ │ │ producer_name: "pytorch"

│ │ │ producer_version: "1.10"

│ │ │ graph {

│ │ │ node {

│ │ │ input: "input_tensor"

│ │ │ input: "608"

│ │ │ ...

│ │ └ <function generate_specific_rand_input at 0x7f3417604550>

│ └ <method 'update' of 'dict' objects>

└ {}

File "/home/xyz/anaconda3/envs/yolox/lib/python3.8/site-packages/onnxsim/onnx_simplifier.py", line 98, in generate_specific_rand_input

raise RuntimeError(

RuntimeError: The shape of input "input_tensor" has dynamic size "[0, 3, 640, 640]", please determine the input size manually by "--dynamic-input-shape --input-shape xxx" or "--input-shape xxx". Run "python3 -m onnxsim -h" for details

``

My environments:

`pip list

Package Version Editable project location

------------------------- --------------------- ------------------------------------------------------------------

absl-py 1.0.0

albumentations 1.1.0

anykeystore 0.2

apex 0.1

appdirs 1.4.4

cachetools 4.2.4

certifi 2021.10.8

charset-normalizer 2.0.9

cryptacular 1.6.2

cycler 0.11.0

Cython 0.29.25

defusedxml 0.7.1

flatbuffers 2.0

fonttools 4.28.3

google-auth 2.3.3

google-auth-oauthlib 0.4.6

greenlet 1.1.2

grpcio 1.42.0

hupper 1.10.3

idna 3.3

imageio 2.13.3

imgaug 0.4.0

importlib-metadata 4.8.2

joblib 1.1.0

kiwisolver 1.3.2

loguru 0.5.3

Mako 1.1.6

Markdown 3.3.6

MarkupSafe 2.0.1

matplotlib 3.5.1

networkx 2.6.3

ninja 1.10.2.3

numpy 1.2

|

https://github.com/pytorch/pytorch/issues/74740

|

closed

|

[] | 2022-03-25T07:55:45Z

| 2022-03-25T08:15:58Z

| null |

LLsmile

|

pytorch/pytorch

| 74,616

|

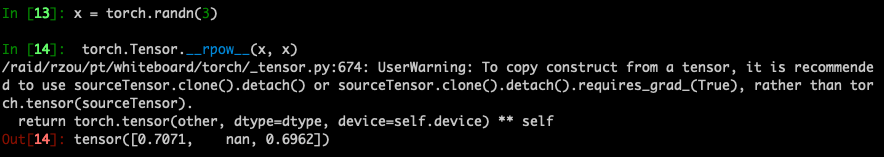

__rpow__(self, other) OpInfo should not test the case where `other` is a Tensor

|

### 🐛 Describe the bug

After https://github.com/pytorch/pytorch/pull/74280 (cc @mruberry), the `__rpow__` OpInfo has a sample input where `other` is a Tensor. This cannot happen during normal execution: to get to `Tensor.__rpow__` a user does the following:

```

# self = some_tensor

# other = not_a_tensor

not_a_tensor ** some_tensor

```

If instead `not_a_tensor` is a Tensor, this ends up calling `__pow__` in Python which will then handle the case.

Are there any legitimate cases where we do want this to happen?

## Context

This caused some functorch tests to fail because we don't support the route where both `self` and `other` are Tensors. pytorch/pytorch also has some cryptic warning in that route:

but it's not clear to me if we want to support this or not.

### Versions

pytorch main branch

|

https://github.com/pytorch/pytorch/issues/74616

|

open

|

[

"module: tests",

"triaged"

] | 2022-03-23T15:28:17Z

| 2022-04-18T02:34:55Z

| null |

zou3519

|

pytorch/TensorRT

| 936

|

❓[Question] RuntimeError: [Error thrown at core/conversion/converters/impl/select.cpp:236] Expected const_layer to be true but got false

|

## ❓ Question

when i convert jit model, got the error

this is my forward code:

input `x` shape is `(batch, 6, height, width)`, first step is to split `x` into two tensors, but failed

```

def forward(self, x):

fg = x[:,0:3,:,:] ## this line got error

bg = x[:,3:,:,:]

fg = self.backbone(fg)

bg = self.backbone(bg)

out = self.heads(fg, bg)

return out

```

complete traceback:

```

ERROR: [Torch-TensorRT TorchScript Conversion Context] - 3: [network.cpp::addConstant::1052] Error Code 3: Internal Error (Parameter check failed at: optimizer/api/network.cpp::addConstant::1052, condition: !weights.values == !weights.count

)

Traceback (most recent call last):

File "model_converter.py", line 263, in <module>

engine = get_engine(model_info.trt_engine_path, calib, int8_mode=int8_mode, optimize_params=optimize_params)

File "model_converter.py", line 173, in get_engine

return build_engine(max_batch_size)

File "model_converter.py", line 95, in build_engine

return build_engine_from_jit(max_batch_size)

File "model_converter.py", line 80, in build_engine_from_jit

tensorrt_engine_model = torch_tensorrt.ts.convert_method_to_trt_engine(traced_model, "forward", **compile_settings)

File "/usr/local/lib/python3.6/dist-packages/torch_tensorrt/ts/_compiler.py", line 211, in convert_method_to_trt_engine

return _C.convert_graph_to_trt_engine(module._c, method_name, _parse_compile_spec(compile_spec))

RuntimeError: [Error thrown at core/conversion/converters/impl/select.cpp:236] Expected const_layer to be true but got false

Unable to create constant layer from node: %575 : Tensor = aten::slice(%570, %13, %12, %14, %13) # /data/small_detection/centernet_pytorch_small_detection/models/low_freeze_comb_net.py:455:0

```

## What you have already tried

try use `fg, bg = x.split(int(x.shape[1] // 2), dim=1)` instead of `fg = x[:,0:3,:,:]` and `bg = x[:,3:,:,:]` but got convert error for op not support

## Environment

> Build information about Torch-TensorRT can be found by turning on debug messages

- PyTorch Version (e.g., 1.0): 1.4.0

- CPU Architecture: arm (nx)

- OS (e.g., Linux):

- How you installed PyTorch: docker of nvidia l4t

- Python version: 3.6.9

- CUDA version: 10.2.300

- Tensorrt version: 8.0.1.6

|

https://github.com/pytorch/TensorRT/issues/936

|

closed

|

[

"question",

"component: converters",

"No Activity"

] | 2022-03-22T02:40:39Z

| 2023-02-10T00:13:18Z

| null |

pupumao

|

pytorch/text

| 1,661

|

what's is the replacement of legacy?

|

## ❓ Questions and Help

**Description**

<!-- Please send questions or ask for help here. -->

in torchtext0.12.0, the module legacy has been removed, so how to implement the same functions as the class legacy.Field?

thanks for your help.

|

https://github.com/pytorch/text/issues/1661

|

closed

|

[] | 2022-03-21T11:03:55Z

| 2022-10-04T01:51:51Z

| null |

1152545264

|

pytorch/serve

| 1,518

|

How to return a dict response, not a list

|

<!--

Thank you for suggesting an idea to improve torchserve model serving experience.

Please fill in as much of the template below as you're able.

-->

## Is your feature request related to a problem? Please describe.

<!-- Please describe the problem you are trying to solve. -->

when I retuan a dict value, serve return a error.

## Describe the solution

<!-- Please describe the desired behavior. -->

## Describe alternatives solution

<!-- Please describe alternative solutions or features you have considered. -->

|

https://github.com/pytorch/serve/issues/1518

|

closed

|

[] | 2022-03-20T10:30:09Z

| 2022-03-25T20:14:17Z

| null |

liuhuiCNN

|

pytorch/data

| 310

|

MapDatapipe Mux/Demux Support

|

### 🚀 The feature

MapDatapipes are missing Mux and Demux pipes as noted in https://github.com/pytorch/pytorch/issues/57031

Talked to @ejguan on https://discuss.pytorch.org/t/mapdatapipe-support-mux-demux/146305, I plan to do a PR with Mux/Demux added. However, I will add rough outlines / ideas here first. I plan to match the same test strategy as the Mux/Demux pipes already in IterDataPipes.

### Motivation, pitch

For Demux: My basic test/goal is to download mnist, and split it into train/validation sets using map.

For Mux: Then attempt to mux them back together (not sure how to come up with a useful example of this).

- Might try a scenario where I split train into k splits and rejoin them?

Not sure when this should be converted to a pr. This would be my first pr into pytorch, so I want the pr to be as clean as possible. Putting code changes ideas here I feel could allow for more dramatic/messy changes/avoid a messy git diff/worry about formatting once code is finalized.

Note: doc strings are removed to make code shorter and will be readded in pr. Not-super-useful comments will be removed in pr.

Note: let me know if a draft pr would be better.

Demux working code:

Draft 1: https://github.com/josiahls/fastrl/blob/848f90d0ed5b0c2cd0dd3e134b0b922dd8a53d7c/fastrl/fastai/data/pipes.py

Demux working code + Basic Test

Draft 1: https://github.com/josiahls/fastrl/blob/848f90d0ed5b0c2cd0dd3e134b0b922dd8a53d7c/nbs/02c_fastai.data.pipes.ipynb

Mux working code:

Draft 1: https://github.com/josiahls/fastrl/blob/30cd47766e9fb1bc75d32de877f54b8de9567c36/fastrl/fastai/data/pipes/mux.py

Basic Test

Draft 1: https://github.com/josiahls/fastrl/blob/30cd47766e9fb1bc75d32de877f54b8de9567c36/nbs/02c_fastai.data.pipes.mux.ipynb

|

https://github.com/meta-pytorch/data/issues/310

|

open

|

[] | 2022-03-19T19:31:49Z

| 2022-03-27T03:31:32Z

| 7

|

josiahls

|

pytorch/data

| 303

|

DataPipe for GCS (Google Cloud Storage)

|

### 🚀 The feature

Build a DataPipe that allows users to connect to GCS (Google Cloud Storage). There is a chance that existing DataPipes may suffice, so we should examine the relevant APIs first.

### Motivation, pitch

GCS (Google Cloud Storage) is one of the commonly used cloud storage for storing data.

### Alternatives

Existing DataPipes are sufficient and we should provide an example of how that can be done instead.

### Additional context

Feel free to react or leave a comment if this feature is important for you or for any other suggestion.

|

https://github.com/meta-pytorch/data/issues/303

|

closed

|

[] | 2022-03-16T19:01:03Z

| 2023-03-07T14:49:15Z

| 2

|

NivekT

|

pytorch/data

| 302

|

Notes on shuffling, sharding, and batchsize

|

(I'm writing this down here to have a written trace, but I'm looking forward to discuss this with you all in our upcoming meetings :) )

I spent some time porting the torchvision training recipes to use datapipes, and I noticed that the model I trained on ImageNet with DPs was much less accurate than the one with regular datasets. After **a lot** of digging I came to the following conclusion:

1. the datapipe must be shuffled **before** it is sharded

2. the DataLoader does not behave in the same way with a datapipe and with a regular indexable dataset, in particular when it comes to size of the last batches in an epoch. This has a **dramatic** effect on accuracy (probably because of batch-norm).

Details below. Note: for sharding, I used [this custom torchvision sharder](https://github.com/pytorch/vision/blob/eb6e39157cf1aaca184b52477cf1e9159bbcbd63/torchvision/prototype/datasets/utils/_internal.py#L120) which takes DDP and dataloader workers into account, + the TakerIterDataPipe below it.

-----

### Shuffle before shard

First, some quick results (training a resnext50_32x4d for 5 epochs with 8 GPUs and 12 workers per GPU):

Shuffle before shard: Acc@1 = 47% -- this is on par with the regular indexable dataset version (phew!!)

Shuffle after shard: Acc@1 = 2%

One way to explain this is that if we shuffle after we shard, then only sub-parts of the dataset get shuffled. Namely, each of the 8 * 12 = 96 dataloader workers receive ~1/96th of the dataset, and each of these parts get shuffled. But that means that the shuffling is far from uniform and for datasets in which the layout is `all_samples_from_class1, all_samples_from_class2, ... all_samples_from_classN`, it's possible that some class i is **never** in the same batch as class j.

So it looks like we need to shuffle before we shard. Now, if we shuffle before sharding, we still need to make sure that all of the 96 workers shuffle the dataset with the same RNG. Otherwise we risk sampling a given sample in more than one worker, or not at all. For that to happen, one can set a random seed in `worker_init_fn`, but that causes a second problem: the random transformations of each worker will also be the same, and this will lead to slightly less accurate results; on top of that, all epochs will start with the same seed, so the shuffling is the same across all epochs. **I do not know how to solve this problem yet.**

Note that TF shuffles the dataset before storing it. We might do something similar, but that would still not solve the issue for custom users datasets.

----

### Size of the batches at the end of an epoch

Some quick results (same experiment as above):

with drop_last=True: Acc@1 = 47%

with drop_last=False: Acc@1 = 11%

Near the end of the epoch, the dataloader with DP will produce a lot of batches with size 1 if drop_last is False. See the last batches of an epoch on indices from `[0, len(imagenet))` with a requested batch size of 32: https://pastebin.com/wjS7YC90. In contrast, this does not happen when using an indexable dataset: https://pastebin.com/Rje0U8Dx.

I'm not too sure of why this has such a dramatic impact, but it's possible that this has to do with batch-norm, as @fmassa pointed out offline. Using `drop_last` will make sure that the 1-sized batches are eliminated, producing a much better accuracy.

I guess the conclusion here is that it's worth unifying the behaviour of the DataLoader both DPs and regular indexable datasets regarding the batch size, because with indexable datasets and drop_last=False we still get ~47% acc.

|

https://github.com/meta-pytorch/data/issues/302

|

open

|

[] | 2022-03-16T18:08:41Z

| 2022-05-24T12:55:18Z

| 28

|

NicolasHug

|

pytorch/data

| 301

|

Add TorchArrow Nightly CI Test

|

### 🚀 The feature

TorchArrow nightly build is now [available for Linux](https://download.pytorch.org/whl/nightly/cpu/torch_nightly.html) (other versions will be next).

We should add TorchArrow nightly CI tests for these [TorchArrow dataframe related unit tests](https://github.com/pytorch/data/blob/main/test/test_dataframe.py).

### Motivation, pitch

This will ensure that our usages remain compatible with TA's APIs.

### Additional context

This is a good first issue for people who want to understand how our CI works. Other [domain CI tests](https://github.com/pytorch/data/blob/main/.github/workflows/domain_ci.yml) (for Vision, Text) can serve as examples on how to set this up.

|

https://github.com/meta-pytorch/data/issues/301

|

closed

|

[

"good first issue"

] | 2022-03-16T17:28:27Z

| 2022-05-09T15:38:31Z

| 1

|

NivekT

|

pytorch/pytorch

| 74,288

|

How to Minimize Rounding Error in torch.autograd.functional.jacobian?

|

### 🐛 Describe the bug

Before I start, let me express my sincerest gratitude to issue #49171, in making it possible to take the jacobian wrt all model parameters! A great functionality indeed!

I am raising an issue about the approximation error when the jacobian function goes to high dimensions. This is necessary when calculating the jacobian wrt parameters using batch inputs. In low dimensions, the following code work fine

```

import torch

from torch.autograd.functional import jacobian

from torch.nn.utils import _stateless

from torch import nn

from torch.nn import functional as F

```

```

model = nn.Conv2d(3,1,1)

input = torch.rand(1, 3, 32, 32)

two_input = torch.cat([input, torch.rand(1, 3, 32, 32)], dim=0)

names = list(n for n, _ in model.named_parameters())

# This is exactly the same code as in issue #49171

jac1 = jacobian(lambda *params: _stateless.functional_call(model, {n: p for n, p in zip(names, params)}, input), tuple(model.parameters()))

jac2 = jacobian(lambda *params: _stateless.functional_call(model, {n: p for n, p in zip(names, params)}, two_input), tuple(model.parameters()))

assert torch.allclose(jac1[0][0], jac2[0][0])

```

However, when I make the model slightly larger the assertion breaks down, which seem like it's due to rounding errors

```

class ResBasicBlock(nn.Module):

def __init__(self, n_channels, n_inner_channels, kernel_size=3):

super().__init__()

self.conv1 = nn.Conv2d(n_channels, n_inner_channels, (kernel_size, kernel_size), padding=kernel_size // 2,

bias=False)

self.conv2 = nn.Conv2d(n_inner_channels, n_channels, (kernel_size, kernel_size), padding=kernel_size // 2,

bias=False)

self.norm1 = nn.BatchNorm2d(n_inner_channels)

self.norm2 = nn.BatchNorm2d(n_channels)

self.norm3 = nn.BatchNorm2d(n_channels)

def forward(self, z, x=None):

if x == None:

x = torch.zeros_like(z)

y = self.norm1(F.relu(self.conv1(z)))

return self.norm3(F.relu(z + self.norm2(x + self.conv2(y))))

model = ResBasicBlock(3, 1)

input = torch.rand(1, 3, 32, 32)

two_input = torch.cat([input, torch.rand(1, 3, 32, 32)], dim=0)

names = list(n for n, _ in model.named_parameters())

# This is exactly the same code as in issue #49171

jac1 = jacobian(lambda *params: _stateless.functional_call(model, {n: p for n, p in zip(names, params)}, input), tuple(model.parameters()))

jac2 = jacobian(lambda *params: _stateless.functional_call(model, {n: p for n, p in zip(names, params)}, two_input), tuple(model.parameters()))

assert torch.allclose(jac1[0][0], jac2[0][0])

```

### Versions

```

Collecting environment information...

PyTorch version: 1.11.0

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: macOS 12.3 (x86_64)

GCC version: Could not collect

Clang version: 13.1.6 (clang-1316.0.21.2)

CMake version: version 3.17.1

Libc version: N/A

Python version: 3.8.12 (default, Oct 12 2021, 06:23:56) [Clang 10.0.0 ] (64-bit runtime)

Python platform: macOS-10.16-x86_64-i386-64bit

Is CUDA available: False

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] functorch==0.1.0

[pip3] numpy==1.21.2

[pip3] torch==1.11.0

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] blas 1.0 mkl defaults

[conda] ffmpeg 4.3 h0a44026_0 pytorch

[conda] functorch 0.1.0 pypi_0 pypi

[conda] mkl 2021.4.0 hecd8cb5_637 defaults

[conda] mkl-service 2.4.0 py38h9ed2024_0 defaults

[conda] mkl_fft 1.3.1 py38h4ab4a9b_0 defaults

[conda] mkl_random 1.2.2 py38hb2f4e1b_0 defaults

[conda] numpy 1.21.2 py38h4b4dc7a_0 defaults

[conda] numpy-base 1.21.2 py38he0bd621_0 defaults

[conda] pytorch 1.11.0 py3.8_0 pytorch

[conda] torchaudio 0.11.0 py38_cpu pytorch

[conda] torchvision 0.12.0 py38_cpu pytorch

```

cc @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7

|

https://github.com/pytorch/pytorch/issues/74288

|

closed

|

[

"module: numerical-stability",

"module: autograd",

"triaged"

] | 2022-03-16T09:25:18Z

| 2022-03-17T14:17:29Z

| null |

QiyaoWei

|

pytorch/pytorch

| 74,256

|

Create secure credential storage for metrics credentials and associated documentation on how to regenerate them if needed

|

cc @seemethere @malfet @pytorch/pytorch-dev-infra

|

https://github.com/pytorch/pytorch/issues/74256

|

open

|

[

"module: ci",

"triaged"

] | 2022-03-15T20:21:20Z

| 2022-03-16T17:30:02Z

| null |

seemethere

|

pytorch/torchx

| 422

|

kubernetes: add support for persistent volume claim volumes

|

## Description

<!-- concise description of the feature/enhancement -->

Add support for PersistentVolumeClaim mounts to Kubernetes scheduler.

## Motivation/Background

<!-- why is this feature/enhancement important? provide background context -->

https://github.com/pytorch/torchx/pull/420 adds bindmounts to K8S, we want to add in persistent volume claims for Kubernetes which will let us support most of the other remote mounts.

## Detailed Proposal

<!-- provide a detailed proposal -->

Add a new mount type to specs:

```

class MountTypes(Enum):

PERSISTENT_CLAIM = "persistent-claim"

BIND = "bind"

class PersistentClaimMount(Mount):

name: str

dst_path: str

read_only: bool = False

class Role:

...

mounts: List[Union[BindMount,PersistentClaimMount]]

```

Add a new format to `parse_mounts`:

```

--mounts bind=persistent-claim,name=foo,dst=/foo[,readonly]

```

## Alternatives

<!-- discuss the alternatives considered and their pros/cons -->

Users can already mount a volume on the host node and then bind mount it into kubernetes pod but this violates some isolation principles and can be an issue from a security perspective. It also is a worse experience for users since the mounts need to be mounted on ALL hosts.

## Additional context/links

<!-- link to code, documentation, etc. -->

* V1Volume https://github.com/kubernetes-client/python/blob/master/kubernetes/docs/V1Volume.md

* V1PersistentVolume https://github.com/kubernetes-client/python/blob/master/kubernetes/docs/V1PersistentVolumeClaimVolumeSource.md

* FSx on EKS https://github.com/kubernetes-sigs/aws-fsx-csi-driver/blob/master/examples/kubernetes/static_provisioning/README.md

|

https://github.com/meta-pytorch/torchx/issues/422

|

closed

|

[] | 2022-03-15T18:21:10Z

| 2022-03-16T22:12:26Z

| 0

|

d4l3k

|

pytorch/TensorRT

| 929

|

❓ [Question] Expected isITensor() to be true but got false Requested ITensor from Var, however Var type is c10::IValue

|

I try to use python trtorch==0.4.1 to compile my own pytorch jit traced model, and I find that it goes wrong with the following information:

`

Traceback (most recent call last):

File "./prerecall_server.py", line 278, in <module>

ModelServing(args),

File "./prerecall_server.py",, line 133, in __init__

self.model = trtorch.compile(self.model, compile_settings)

File "/usr/local/lib/python3.6/dist-packages/trtorch/_compiler.py", line 73, in compile

compiled_cpp_mod = trtorch._C.compile_graph(module._c, _parse_compile_spec(compile_spec))

RuntimeError: [Error thrown at core/conversion/var/Var.cpp:149] Expected isITensor() to be true but got false

Requested ITensor from Var, however Var type is c10::IValue

`

I make debug and find that the module contains the unknown operation.

`

class Causal_Norm_Classifier(nn.Module):

def __init__(self, num_classes=1000, feat_dim=2048, use_effect=False, num_head=2, tau=16.0, alpha=1.0, gamma=0.03125, mu=0.9, *args):

super(Causal_Norm_Classifier, self).__init__()

# default alpha = 3.0

#self.weight = nn.Parameter(torch.Tensor(num_classes, feat_dim).cuda(), requires_grad=True)

self.scale = tau / num_head # 16.0 / num_head

self.norm_scale = gamma # 1.0 / 32.0

self.alpha = alpha # 3.0

self.num_head = num_head

self.feat_dim = feat_dim

self.head_dim = feat_dim // num_head

self.use_effect = use_effect

self.relu = nn.ReLU(inplace=True)

self.mu = mu

self.register_parameter('weight', nn.Parameter(torch.Tensor(num_classes, feat_dim), requires_grad=True))

self.reset_parameters(self.weight)

def reset_parameters(self, weight):

stdv = 1. / math.sqrt(weight.size(1))

weight.data.uniform_(-stdv, stdv)

def forward(self, x, training=True, use_effect=True):

# calculate capsule normalized feature vector and predict

normed_w = self.multi_head_call(self.causal_norm, self.weight, weight=self.norm_scale)

normed_x = self.multi_head_call(self.l2_norm, x)

y = torch.mm(normed_x * self.scale, normed_w.t())

return y

def multi_head_call(self, func, x, weight=None):

assert len(x.shape) == 2

x_list = torch.split(x, self.head_dim, dim=1)

if weight:

y_list = [func(item, weight) for item in x_list]

else:

y_list = [func(item) for item in x_list]

assert len(x_list) == self.num_head

assert len(y_list) == self.num_head

return torch.cat(y_list, dim=1)

def l2_norm(self, x):

normed_x = x / torch.norm(x, 2, 1, keepdim=True)

return normed_x

def causal_norm(self, x, weight):

norm= torch.norm(x, 2, 1, keepdim=True)

normed_x = x / (norm + weight)

return normed_x

`

Can you help me with this?

|

https://github.com/pytorch/TensorRT/issues/929

|

closed

|

[

"question",

"No Activity",

"component: partitioning"

] | 2022-03-15T10:17:07Z

| 2023-04-01T00:02:11Z

| null |

clks-wzz

|

pytorch/tutorials

| 1,860

|

Where is the mnist_sample notebook?

|

In tutorial [WHAT IS TORCH.NN REALLY?](https://pytorch.org/tutorials/beginner/nn_tutorial.html#closing-thoughts), `Closing thoughts` part:

```

To see how simple training a model can now be, take a look at the mnist_sample sample notebook.

```

Does`mnist_sample notebook ` refer to https://github.com/pytorch/tutorials/blob/master/beginner_source/nn_tutorial.py and https://pytorch.org/tutorials/_downloads/5ddab57bb7482fbcc76722617dd47324/nn_tutorial.ipynb ?

Note:

https://github.com/pytorch/tutorials/blob/b1d8993adc3663f0f00d142ac67f6695baaf107a/beginner_source/nn_tutorial.py#L853

|

https://github.com/pytorch/tutorials/issues/1860

|

closed

|

[] | 2022-03-14T12:21:14Z

| 2022-08-18T17:35:34Z

| null |

Yang-Xijie

|

pytorch/torchx

| 421

|

Document usage of .torchxconfig

|

## 📚 Documentation

## Link

Current `.torchxconfig` docs (https://pytorch.org/torchx/main/runner.config.html) explain how it works and its APIs but does not provide any practical guidance on what configs can be put into it and why its useful.

## What does it currently say?

Nothing wrong with what it currently says.

## What should it say?

Should add more practical user guide on what are the supported configs in `.torchxconfig` and under what circumstances it gets picked up with the `torchx` CLI. As well as:

1. Examples

2. Best Practices

## Why?

Current .torchxconfig docs is useful to the programmer but not for the user.

|

https://github.com/meta-pytorch/torchx/issues/421

|

closed

|

[] | 2022-03-12T00:30:59Z

| 2022-03-28T20:58:44Z

| 1

|

kiukchung

|

pytorch/torchx

| 418

|

cli/colors: crash when importing if sys.stdout is closed

|

## 🐛 Bug

<!-- A clear and concise description of what the bug is. -->

Sometimes `sys.stdout` is closed and `isatty()` throws an error at https://github.com/pytorch/torchx/blob/main/torchx/cli/colors.py#L11

Switching to a variant that checks if it's closed should work:

```

not sys.stdout.closed and sys.stdout.isatty()

```

Module (check all that applies):

* [ ] `torchx.spec`

* [ ] `torchx.component`

* [ ] `torchx.apps`

* [ ] `torchx.runtime`

* [x] `torchx.cli`

* [ ] `torchx.schedulers`

* [ ] `torchx.pipelines`

* [ ] `torchx.aws`

* [ ] `torchx.examples`

* [ ] `other`

## To Reproduce

I'm not sure how to repro this externally other than explicitly closing `sys.stdout`

<!-- If you have a code sample, error messages, stack traces, please provide it here as well -->

```

I/O operation on closed file

Stack trace:

...

from torchx.cli.cmd_log import get_logs

File: <"/mnt/xarfuse/uid-27156/4adc7caa-seed-nspid4026533510_cgpid2017229-ns-4026533507/torchx/cli/cmd_log.py">, line 20, in <module>

from torchx.cli.colors import GREEN, ENDC

File: <"/mnt/xarfuse/uid-27156/4adc7caa-seed-nspid4026533510_cgpid2017229-ns-4026533507/torchx/cli/colors.py">, line 11, in <module>

if sys.stdout.isatty():

```

## Expected behavior

<!-- A clear and concise description of what you expected to happen. -->

Doesn't crash

## Environment

- torchx version (e.g. 0.1.0rc1): main

- Python version:

- OS (e.g., Linux):

- How you installed torchx (`conda`, `pip`, source, `docker`):

- Docker image and tag (if using docker):

- Git commit (if installed from source):

- Execution environment (on-prem, AWS, GCP, Azure etc):

- Any other relevant information:

## Additional context

<!-- Add any other context about the problem here. -->

|

https://github.com/meta-pytorch/torchx/issues/418

|

closed

|

[

"bug",

"cli"

] | 2022-03-11T19:24:44Z

| 2022-03-11T23:32:30Z

| 0

|

d4l3k

|

pytorch/extension-cpp

| 76

|

How to debug in cuda-pytorch env?

|

Hi! I am wondering how to debug in such environment? I have tried to insert a "printf("hello wolrd")" sentence in .cu file, but it compiles failure! If I delete it, everything works fine..... So how you debug in such environment? Thank you!!!!

|

https://github.com/pytorch/extension-cpp/issues/76

|

open

|

[] | 2022-03-10T07:45:31Z

| 2022-03-10T07:45:31Z

| null |

Arsmart123

|

huggingface/datasets

| 3,881

|

How to use Image folder

|

Ran this code

```

load_dataset("imagefolder", data_dir="./my-dataset")

```

`https://raw.githubusercontent.com/huggingface/datasets/master/datasets/imagefolder/imagefolder.py` missing

```

---------------------------------------------------------------------------

FileNotFoundError Traceback (most recent call last)

/tmp/ipykernel_33/1648737256.py in <module>

----> 1 load_dataset("imagefolder", data_dir="./my-dataset")

/opt/conda/lib/python3.7/site-packages/datasets/load.py in load_dataset(path, name, data_dir, data_files, split, cache_dir, features, download_config, download_mode, ignore_verifications, keep_in_memory, save_infos, revision, use_auth_token, task, streaming, script_version, **config_kwargs)

1684 revision=revision,

1685 use_auth_token=use_auth_token,

-> 1686 **config_kwargs,

1687 )

1688

/opt/conda/lib/python3.7/site-packages/datasets/load.py in load_dataset_builder(path, name, data_dir, data_files, cache_dir, features, download_config, download_mode, revision, use_auth_token, script_version, **config_kwargs)

1511 download_config.use_auth_token = use_auth_token

1512 dataset_module = dataset_module_factory(

-> 1513 path, revision=revision, download_config=download_config, download_mode=download_mode, data_files=data_files

1514 )

1515

/opt/conda/lib/python3.7/site-packages/datasets/load.py in dataset_module_factory(path, revision, download_config, download_mode, force_local_path, dynamic_modules_path, data_files, **download_kwargs)

1200 f"Couldn't find a dataset script at {relative_to_absolute_path(combined_path)} or any data file in the same directory. "

1201 f"Couldn't find '{path}' on the Hugging Face Hub either: {type(e1).__name__}: {e1}"

-> 1202 ) from None

1203 raise e1 from None

1204 else:

FileNotFoundError: Couldn't find a dataset script at /kaggle/working/imagefolder/imagefolder.py or any data file in the same directory. Couldn't find 'imagefolder' on the Hugging Face Hub either: FileNotFoundError: Couldn't find file at https://raw.githubusercontent.com/huggingface/datasets/master/datasets/imagefolder/imagefolder.py

```

|

https://github.com/huggingface/datasets/issues/3881

|

closed

|

[

"question"

] | 2022-03-09T21:18:52Z

| 2022-03-11T08:45:52Z

| null |

rozeappletree

|

pytorch/examples

| 969

|

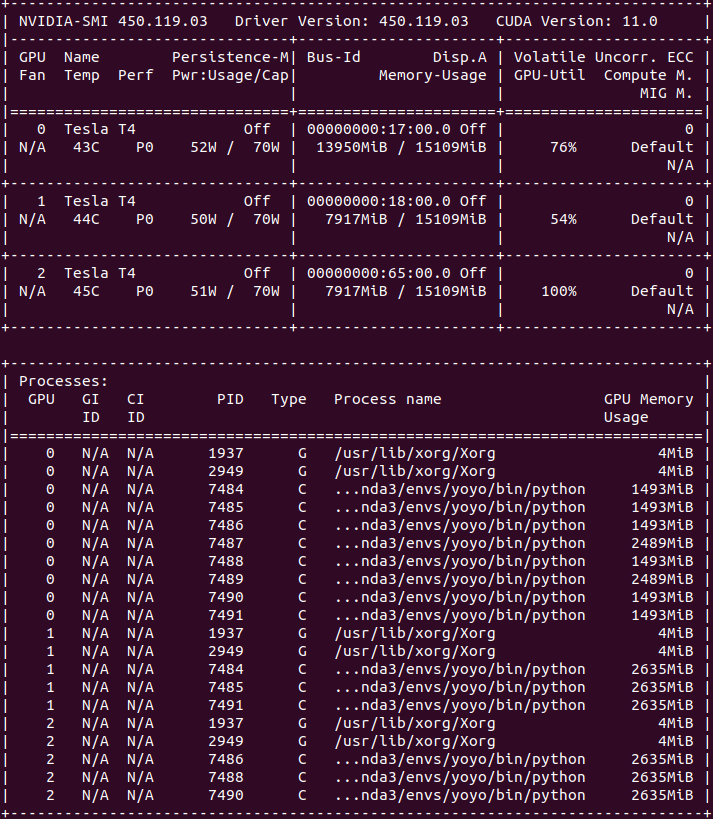

DDP: why does every process allocate memory of GPU 0 and how to avoid it?

|

Run [this](https://github.com/pytorch/examples/tree/main/imagenet) example with 2 GPUs.

process 2 will allocate some memory on GPU 0.

```

python main.py --multiprocessing-distributed --world-size 1 --rank 0

```

I have carefully checked the sample code and there seems to be no obvious error that would cause process 2 to transfer data to GPU 0.

So:

1. Why does process 2 allocate memory of GPU 0?

2. Is this part of the data involved in the calculation? I think if this part of the data is involved in the calculation when the number of processes becomes large, it will cause GPU 0 to be seriously overloaded?

3. Is there any way to avoid it?

Thanks in advance to partners in the PyTorch community for their hard work.

|

https://github.com/pytorch/examples/issues/969

|

open

|

[

"distributed"

] | 2022-03-08T13:41:16Z

| 2024-09-22T11:41:26Z

| null |

siaimes

|

huggingface/datasets

| 3,854

|

load only England English dataset from common voice english dataset

|

training_data = load_dataset("common_voice", "en",split='train[:250]+validation[:250]')

testing_data = load_dataset("common_voice", "en", split="test[:200]")

I'm trying to load only 8% of the English common voice data with accent == "England English." Can somebody assist me with this?

**Typical Voice Accent Proportions:**

- 24% United States English

- 8% England English

- 5% India and South Asia (India, Pakistan, Sri Lanka)

- 3% Australian English

- 3% Canadian English

- 2% Scottish English

- 1% Irish English

- 1% Southern African (South Africa, Zimbabwe, Namibia)

- 1% New Zealand English

Can we replicate this for Age as well?

**Age proportions of the common voice:-**

- 24% 19 - 29

- 14% 30 - 39

- 10% 40 - 49

- 6% < 19

- 4% 50 - 59

- 4% 60 - 69

- 1% 70 – 79

|

https://github.com/huggingface/datasets/issues/3854

|

closed

|

[

"question"

] | 2022-03-08T09:40:52Z

| 2024-03-23T12:40:58Z

| null |

amanjaiswal777

|

pytorch/TensorRT

| 912

|

✨[Feature] New Release for pip

|

Would it be possible to get a new release for use with pip?

There have been quite a few features and bug-fixes added since November, and it would be great to have an up to date version available.

I know that docker containers are often recommended, but that's often not a viable option.

Thank you for all of the great work!!

|

https://github.com/pytorch/TensorRT/issues/912

|

closed

|

[

"question"

] | 2022-03-06T05:27:27Z

| 2022-03-06T21:25:13Z

| null |

dignakov

|

pytorch/torchx

| 405

|

SLURM quality of life improvements

|

## Description

Making a couple of requests to improve QoL on SLURM

## Detailed Proposal

It would be helpful to have -

- [x] The ability to specify the output path. Currently, you need to cd to the right path for this, which generally needs a helper function to set up the directory, cd to it, and then launch via torchx. torchx can ideally handle it for us. #416

- [x] Code isolation and reproducibility. While doing research, we make a change, launch an experiment, and repeat. To make sure each experiment uses the same consistent code, we copy the code to the experiment directory (which also helps with reproducibility). #416

- [ ] Verification of the passed launch script. If I launch from a wrong directory for instance, I would still queue up the job, wait for a few minutes / hours only to crash because of a wrong path (i.e. the launch script does not exist).

- [x] Being able to specify a job name - SLURM shows job details when running the `squeue` command including the job name. If our jobs are all run via torchx, every job will be named `train_app-{i}` which makes it hard to identify which experiment / project the job is from.

- [x] The `time` argument doesn't say what the unit is - maybe we just follow the SLURM API, but it would be nice if we clarified that.

- [ ] torchx submits jobs in [heterogeneous mode](https://slurm.schedmd.com/heterogeneous_jobs.html). This is something FAIR users don't have familiarity with - I'm guessing in terms of execution and command support there should be feature and scheduling speed parity (not sure about the latter)? The `squeue` logs show every node as a separate line - so a 32 node job would take 32 lines instead of 1. This just makes it harder to monitor jobs - not a technical issue, just a QoL one :)

- [x] The job logs are created in `slurm-{job-id}-train_app-{node-id}.out` files (per node) and a single `slurm-{job-id}.out`. Normally, our jobs instead have logs of the form `{job-id}-{node-id}.out` and `{job-id}-{node-id}.err` (per node) - the separation between `stderr` and `stdout` helps find which machine actually crashed more easily. And I'm not sure what `slurm-{job-id}.out` corresponds to - maybe it's a consequence of the heterogeneous jobs? With torchelastic, it becomes harder to debug which node crashed since every node logs a crash (so grepping for `Traceback` will return each log file instead of just the node which originally crashed) - maybe there is a way to figure this out and I just don't know what to look for?

- [ ] The `global_rank` is not equal to `local_rank + node_id * gpus_per_node`, i.e. the global rank 0 can be on node 3.

- [ ] automatically set nomem on pcluster

|

https://github.com/meta-pytorch/torchx/issues/405

|

open

|

[

"slurm"

] | 2022-03-04T17:42:08Z

| 2022-04-14T21:42:21Z

| 5

|

mannatsingh

|

pytorch/serve

| 1,487

|

how to get model.py file ?

|

`https://github.com/pytorch/serve/blob/master/docker/README.md#create-torch-model-archiver-from-container` in

the 4 step ,how to get model.py file?

I followed the doc step by step ,but in step 4

`torch-model-archiver --model-name densenet161 --version 1.0 --model-file /home/model-server/examples/image_classifier/densenet_161/model.py --serialized-file /home/model-server/examples/image_classifier/densenet161-8d451a50.pth --export-path /home/model-server/model-store --extra-files /home/model-server/examples/image_classifier/index_to_name.json --handler image_classifier`

error because no model.py file.

where to get this model.py file

|

https://github.com/pytorch/serve/issues/1487

|

closed

|

[] | 2022-03-04T01:41:59Z

| 2022-03-04T20:03:41Z

| null |

jaffe-fly

|

pytorch/pytorch

| 73,699

|

How to get tolerance override in OpInfo-based test?

|

### 🐛 Describe the bug

The documentation appears to be wrong, it suggests to use self.rtol and self.precision:

https://github.com/pytorch/pytorch/blob/4168c87ed3ba044c9941447579487a2f37eb7973/torch/testing/_internal/common_device_type.py#L1000

self.tol doesn't seem to exist in my tests.

I did find a self.rel_tol, is that the right flag?

### Versions

main

cc @brianjo @mruberry

|

https://github.com/pytorch/pytorch/issues/73699

|

open

|

[

"module: docs",

"triaged",

"module: testing"

] | 2022-03-02T22:48:11Z

| 2022-03-07T14:42:39Z

| null |

zou3519

|

pytorch/vision

| 5,510

|

[RFC] How do we want to deal with images that include alpha channels?

|

This discussion started in https://github.com/pytorch/vision/pull/5500#discussion_r816503203 and @vfdev-5 and I continued offline.

PIL as well as our image reading functions support RGBA images

https://github.com/pytorch/vision/blob/95d418970e6dbf2e4d928a204c4e620da7bccdc0/torchvision/io/image.py#L16-L31

but our color transformations currently only support RGB images ignoring an extra alpha channel. This leads to wrong results. One thing that we agreed upon is that these transforms should fail if anything but 3 channels is detected.

Still, some datasets include non-RGB images so we need to deal with this for a smooth UX. Previously we implicitly converted every image to RGB before returning it from a dataset

https://github.com/pytorch/vision/blob/f9fbc104c02f277f9485d9f8727f3d99a1cf5f0b/torchvision/datasets/folder.py#L245-L249

Since we no longer decode images in the datasets, we need to provide a solution for the users here. I currently see two possible options:

1. We could deal with this on a per-image basis within the dataset. For example, the train split of ImageNet contains a single RGBA image. We could simply perform an appropriate conversion for irregular image modes in the dataset so this issue is abstracted away from the user. `tensorflow-datasets` uses this approach: https://github.com/tensorflow/datasets/blob/a1caff379ed3164849fdefd147473f72a22d3fa7/tensorflow_datasets/image_classification/imagenet.py#L105-L131

2. The most common non-RGB image in datasets are grayscale images. For example, the train split of ImageNet contains 19970 grayscale images. Thus, the users will need a `transforms.ConvertImageColorSpace("rgb")` in most cases anyway. If that would support RGBA to RGB conversions the problem would also be solved. The conversion happens with this formula:

```

pixel_new = (1 - alpha) * background + alpha * pixel_old

```

where `pixel_{old|new}` is a single value from a color channel. Since we don't know `background` we need to either make assumptions or require the user to provide a value for it. I'd wager a guess that in 99% of the cases the background is white. i.e. `background == 1`, but we can't be sure about that.

Another issue with this is that the user has no option to set the background on a per-image basis in the transforms pipeline if that is needed.

In special case for `alpha == 1` everywhere, the equation above simplifies to

```

pixel_new = pixel_old

```

which is equivalent to stripping the alpha channel. We could check for that and only perform the RGBA to RGB transform if the condition holds or the user supplies a background color.

cc @pmeier @vfdev-5 @datumbox @bjuncek

|

https://github.com/pytorch/vision/issues/5510

|

closed

|

[

"module: datasets",

"module: transforms",

"prototype"

] | 2022-03-02T09:43:42Z

| 2023-03-28T13:01:09Z

| null |

pmeier

|

pytorch/pytorch

| 73,600

|

Add a section in DDP tutorial to explain why DDP sometimes is slower than local training and how to improve it

|

### 📚 The doc issue

Add a section in DDP tutorial to explain why DDP sometimes is slower than local training and how to improve it

### Suggest a potential alternative/fix

_No response_

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang

|

https://github.com/pytorch/pytorch/issues/73600

|

open

|

[

"oncall: distributed",

"triaged",

"module: ddp"

] | 2022-03-01T20:34:58Z

| 2022-03-08T22:03:17Z

| null |

zhaojuanmao

|

pytorch/tensorpipe

| 431

|

How to enable CudaGdrChannel registration in tensorpipeAgent when using pytorch's rpc

|

Can we just enable it by define some environment variables or we need to recompile pytorch? Thx!

|

https://github.com/pytorch/tensorpipe/issues/431

|

closed

|

[] | 2022-03-01T08:14:17Z

| 2022-03-01T12:09:53Z

| null |

eedalong

|

pytorch/tutorials

| 1,839

|

Missing 'img/teapot.jpg', 'img/trilobite.jpg' for `MODEL UNDERSTANDING WITH CAPTUM` tutorial.

|

Running this tutorial: https://pytorch.org/tutorials/beginner/introyt/captumyt.html

Could not found 'img/teapot.jpg', 'img/trilobite.jpg' under _static folder.

Could anyone help to provide?

Thanks!

|

https://github.com/pytorch/tutorials/issues/1839

|

closed

|

[

"question"

] | 2022-02-26T10:32:52Z

| 2022-10-17T16:24:06Z

| null |

MonkandMonkey

|

pytorch/data

| 256

|

Support `keep_key` in `Grouper`?

|

`IterKeyZipper` has an option to keep the key that was zipped on:

https://github.com/pytorch/data/blob/2cf1f208e76301f3e013b7569df0d75275f1aaee/torchdata/datapipes/iter/util/combining.py#L53

Is this something we want to support going forward? If yes, it would be nice to have this also on `Grouper` and possibly other similar datapipes. That would come in handy in situations if the key is used multiple times for example if we have a `IterKeyZipper` after an `Grouper`.

### Additional Context for New Contributors

See comment below

|

https://github.com/meta-pytorch/data/issues/256

|

closed

|

[

"good first issue"

] | 2022-02-25T08:39:53Z

| 2023-01-27T19:03:08Z

| 15

|

pmeier

|

pytorch/TensorRT

| 894

|

❓ [Question] Can you convert model that operates on custom classes?

|

## ❓ Question

I have a torch module that creates objects of custom classes that have tensors as fields. It can be torch.jit.scripted but torch.jit.trace can be problematic. When I torch.jit.script module and then torch_tensorrt.compile it I get the following error: `Unable to get schema for Node %317 : __torch__.src.MyClass = prim::CreateObject() (conversion.VerifyCoverterSupportForBlock)`

## What you have already tried

torch.jit.trace avoids the problem but introduces problems with loops in module.

## Environment

> Build information about Torch-TensorRT can be found by turning on debug messages

- PyTorch Version (e.g., 1.0): 1.10.2

- CPU Architecture: intel

- OS (e.g., Linux): linux

- How you installed PyTorch (`conda`, `pip`, `libtorch`, source): pip

- Build command you used (if compiling from source):

- Are you using local sources or building from archives: from archives

- Python version: 3.8

- CUDA version: 11.3

- GPU models and configuration: rtx 3090

- Any other relevant information:

|

https://github.com/pytorch/TensorRT/issues/894

|

closed

|

[

"question"

] | 2022-02-24T09:51:13Z

| 2022-05-18T21:21:05Z

| null |

MarekPokropinski

|

pytorch/xla

| 3,391

|

I want to Multi-Node Multi GPU training, how should I configure the environment

|

## ❓ Questions and Help

Running XLA MultiGPU MultiNode,I know that I need to set XRT_SHARD_WORLD_SIZE and XRT_WORKERS, but I don't know how to configure the variable value of XRT_WORKERS.

Are there some examples that exist for me to refer to?

|

https://github.com/pytorch/xla/issues/3391

|

closed

|

[

"stale",

"xla:gpu"

] | 2022-02-23T06:52:01Z

| 2022-04-28T00:10:36Z

| null |

ZhongYFeng

|

pytorch/TensorRT

| 881

|

❓ [Question] How do you convert part of the model to TRT?

|

## ❓ Question

Is it possible to convert only part of the model to TRT. I have model that cannot be directly converted to trt because it uses custom classes. I wanted to convert only modules that can be converted but as I tried it torch cannot save it.

## What you have already tried

I tried the following:

```

import torch.nn

import torch_tensorrt

class MySubmodule(torch.nn.Module):

def __init__(self):

super(MySubmodule, self).__init__()

self.layer = torch.nn.Linear(10, 10)

def forward(self, x):

return self.layer(x)

class MyMod(torch.nn.Module):

def __init__(self):

super(MyMod, self).__init__()

self.submod = MySubmodule()

self.submod = torch_tensorrt.compile(self.submod, inputs=[

torch_tensorrt.Input(shape=(1, 10))

])

def forward(self, x):

return self.submod(x)

if __name__ == "__main__":

model = MyMod()

scripted = torch.jit.script(model)

scripted(torch.zeros(1, 10).cuda())

scripted.save("test.pt")

```

But it raises exception: `RuntimeError: method.qualname() == QualifiedName(selfClass->name()->qualifiedName(), methodName)INTERNAL ASSERT FAILED at "../torch/csrc/jit/serialization/python_print.cpp":1105, please report a bug to PyTorch.

`

## Environment

- PyTorch Version (e.g., 1.0): 1.10.2

- CPU Architecture: intel

- OS (e.g., Linux): linux

- How you installed PyTorch (`conda`, `pip`, `libtorch`, source): pip

- Build command you used (if compiling from source):

- Are you using local sources or building from archives: from archives

- Python version: 3.8

- CUDA version: 11.3

- GPU models and configuration: rtx 3090

- Any other relevant information:

|

https://github.com/pytorch/TensorRT/issues/881

|

closed

|

[

"question"

] | 2022-02-18T09:00:43Z

| 2022-02-19T23:57:17Z

| null |

MarekPokropinski

|

pytorch/TensorRT

| 880

|

❓ [Question] What is the difference between docker built on PyTorch NGC Container and PyTorch NGC Container?

|

## ❓ Question

Since PyTorch NGC 21.11+ already includes Torch-TensorRT, is it possible to use Torch-TensorRT directly in PyTorch NGC Container?

## What you have already tried