repo (string, 147 classes) | number (int64, 1 to 172k) | title (string, length 2 to 476) | body (string, length 0 to 5k) | url (string, length 39 to 70) | state (string, 2 classes) | labels (list, length 0 to 9) | created_at (timestamp[ns, tz=UTC], 2017-01-18 18:50:08 to 2026-01-06 07:33:18) | updated_at (timestamp[ns, tz=UTC], 2017-01-18 19:20:07 to 2026-01-06 08:03:39) | comments (int64, 0 to 58, nullable) | user (string, length 2 to 28) |
|---|---|---|---|---|---|---|---|---|---|---|

huggingface/datasets

| 4,491

|

Dataset Viewer issue for Pavithree/test

|

### Link

https://huggingface.co/datasets/Pavithree/test

### Description

I extracted a subset of the original eli5 dataset hosted on Hugging Face. However, loading the dataset throws `ArrowNotImplementedError: Unsupported cast from string to null using function cast_null`. Is anything missing on my end? Kindly help.

### Owner

_No response_

|

https://github.com/huggingface/datasets/issues/4491

|

closed

|

[

"dataset-viewer"

] | 2022-06-14T13:23:10Z

| 2022-06-14T14:37:21Z

| 1

|

Pavithree

|

pytorch/examples

| 1,012

|

Using SLURM for Imagenet training on multiple nodes

|

In the pytorch imagenet example of this repo, it says that for multiple nodes we have to run the command on each node like below:

Since I am using a shared HPC cluster with SLURM, I cannot actively know which nodes my training will use so I'm not sure how to run these two commands. How can I run these two commands on the separate nodes using SLURM?
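
One way to avoid hard-coding node addresses is to let each task derive its own settings from the variables SLURM exports. The sketch below is a minimal illustration, assuming one task per node launched with `srun`, and assuming the batch script exports `MASTER_ADDR` (for example from `scontrol show hostnames "$SLURM_JOB_NODELIST" | head -n 1`). The `dist_settings` helper and the default port 29500 are hypothetical names and values chosen for this example, not part of the ImageNet example.

```python
import os
from typing import Dict, Mapping


def dist_settings(env: Mapping[str, str], default_port: int = 29500) -> Dict[str, object]:
    """Derive per-node distributed settings from SLURM-provided variables."""
    return {
        "world_size": int(env["SLURM_NNODES"]),  # number of nodes in the job
        "rank": int(env["SLURM_NODEID"]),        # 0-based index of this node
        # MASTER_ADDR is assumed to be exported by the batch script.
        "dist_url": f"tcp://{env['MASTER_ADDR']}:{env.get('MASTER_PORT', default_port)}",
    }


if __name__ == "__main__":
    # Single-node fallback values so the sketch also runs outside SLURM.
    fallback = {"SLURM_NNODES": "1", "SLURM_NODEID": "0", "MASTER_ADDR": "127.0.0.1"}
    print(dist_settings({**fallback, **os.environ}))
```

The resulting values would then be passed to the training script as its world size, node rank, and rendezvous URL, so the same `srun` line works on whichever nodes the scheduler assigns.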

|

https://github.com/pytorch/examples/issues/1012

|

closed

|

[

"distributed"

] | 2022-06-14T09:39:59Z

| 2022-07-10T20:11:43Z

| 2

|

b0neval

|

pytorch/pytorch

| 79,495

|

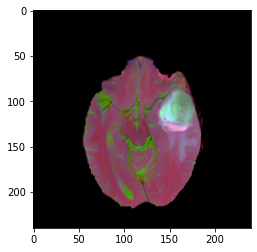

How to stack RGB images

|

### 🚀 The feature, motivation and pitch

Hi, PyTorch support team.

I want to stack RGB images.

I want to construct a 3D or 4D RGB tensor.

Then I want to create a GAN model using these tensors.

How do I create such a tensor?

I would like to stack the attached 2D RGB images.

Alternatively, can each RGB channel be extracted from a 3D image as a 3D tensor?

Kind regards,

yoshimura.

### Alternatives

_No response_

### Additional context

|

https://github.com/pytorch/pytorch/issues/79495

|

closed

|

[] | 2022-06-14T02:40:40Z

| 2022-06-14T18:01:50Z

| null |

kazuma0606

|

pytorch/tutorials

| 1,945

|

Calculating accuracy.

|

How can I calculate the accuracy of the model in the seq2seq chatbot with attention?

|

https://github.com/pytorch/tutorials/issues/1945

|

closed

|

[

"question"

] | 2022-06-13T22:34:03Z

| 2022-08-17T20:26:00Z

| null |

OmarHaitham520

|

pytorch/torchx

| 514

|

Launching hello world job on Kubernetes and getting logs

|

## 📚 Documentation

## Link

<!-- link to the problematic documentation -->

https://pytorch.org/torchx/0.1.0rc2/quickstart.html

## What does it currently say?

<!-- copy paste the section that is wrong -->

`torchx run --scheduler kubernetes my_component.py:greet --image "my_app:latest" --user "your name"`

The documentation lacks information about getting logs for the hello world example with Kubernetes cluster.

## What should it say?

<!-- the proposed new documentation -->

The user should have a kubectl CLI configured. Refer to [this](https://kubernetes.io/docs/reference/kubectl/)

To get the logs of hello world job:

`kubectl logs <pod name>`

|

https://github.com/meta-pytorch/torchx/issues/514

|

open

|

[

"documentation"

] | 2022-06-13T14:20:20Z

| 2022-06-13T16:50:35Z

| 1

|

vishakha-ramani

|

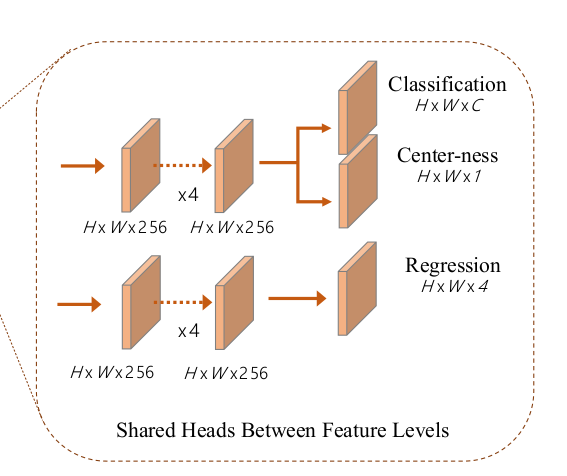

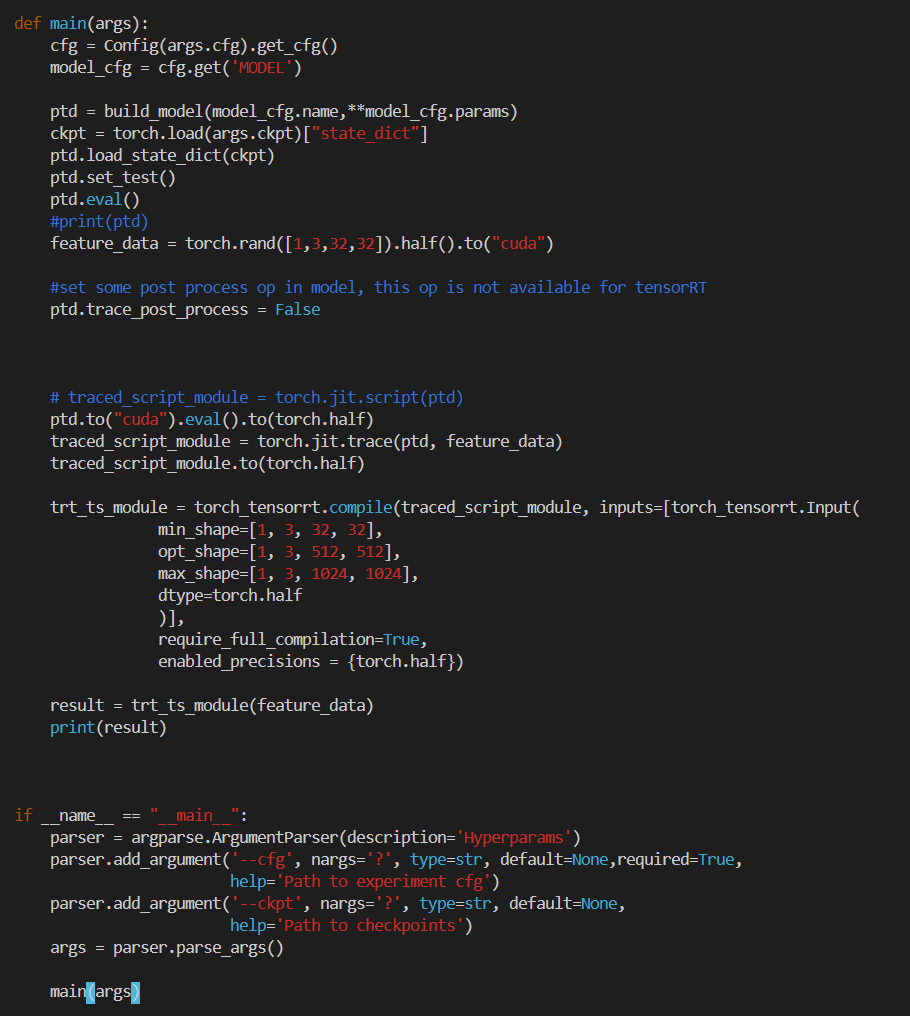

pytorch/TensorRT

| 1,114

|

How can I compile CUDA C in this project?

|

## ❓ Question

I want to compile a TensorRT plugin in this project, but I do not know how to use Bazel to compile the CUDA C code.

## What you have already tried

<!-- A clear and concise description of what you have already done. -->

## Environment

> Build information about Torch-TensorRT can be found by turning on debug messages

- PyTorch Version (e.g., 1.0):

- CPU Architecture:

- OS (e.g., Linux):

- How you installed PyTorch (`conda`, `pip`, `libtorch`, source):

- Build command you used (if compiling from source):

- Are you using local sources or building from archives:

- Python version:

- CUDA version:

- GPU models and configuration:

- Any other relevant information:

## Additional context

<!-- Add any other context about the problem here. -->

|

https://github.com/pytorch/TensorRT/issues/1114

|

closed

|

[

"question"

] | 2022-06-13T11:27:52Z

| 2022-06-20T22:11:37Z

| null |

p517332051

|

pytorch/serve

| 1,684

|

How to decode the gRPC PredictionResponse string efficiently

|

### 📚 The doc issue

There is no documentation on how to efficiently decode the bytes received from `PredictionResponse` into a torch tensor. Currently, the only working solution is `ast.literal_eval`, which is extremely slow.

```

from ast import literal_eval
import torch

response = inference_stub.Predictions(
    inference_pb2.PredictionsRequest(model_name=model_name, input=input_data))
predictions = torch.as_tensor(literal_eval(response.prediction.decode('utf-8')))

```

Using methods like numpy.fromstring, numpy.frombuffer or torch.frombuffer returns the following error:

```

> np.fromstring(response.prediction.decode("utf-8"))

Traceback (most recent call last):

File "<string>", line 1, in <module>

ValueError: string size must be a multiple of element size

```

The following returns incorrect tensor values: the number of elements does not match the expected number of elements.

```

torch.frombuffer(response.prediction, dtype = torch.float32)

```
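
The two failures above are consistent with the payload being text rather than raw float32 bytes, which is also what `literal_eval` succeeding suggests. The sketch below illustrates this under the assumption that the handler returns a JSON-compatible nested list encoded as UTF-8; the `payload` value is made up for the example and does not come from a real TorchServe response. In that case `json.loads` is a drop-in parser that is typically much faster than `ast.literal_eval`.

```python
import json
import struct
from ast import literal_eval

# Made-up stand-in for response.prediction: UTF-8 text of a nested list.
payload = b"[[0.5, 1.5], [2.5, 3.5]]"

# A text payload parses with json.loads; literal_eval gives the same result here.
values = json.loads(payload)
assert values == literal_eval(payload.decode("utf-8"))

# Reinterpreting the same text as raw float32 miscounts the elements:
# 24 bytes of text would be read as 6 "floats" instead of the 4 numbers encoded.
n_as_float32 = len(payload) // struct.calcsize("f")
print(values, n_as_float32)
```

The parsed list can then be handed to `torch.as_tensor`. If the handler can be changed to return raw bytes plus shape and dtype, buffer-based decoding becomes possible, but that depends on the handler and is not shown here.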

### Suggest a potential alternative/fix

_No response_

|

https://github.com/pytorch/serve/issues/1684

|

open

|

[

"documentation"

] | 2022-06-13T10:47:16Z

| 2022-09-20T11:50:44Z

| null |

IamMohitM

|

pytorch/pytorch

| 79,384

|

torch.load() fails on MPS backend ("don't know how to restore data location")

|

### 🐛 Describe the bug

```bash

# warning: 5.8GB file

wget https://huggingface.co/Cene655/ImagenT5-3B/resolve/main/model.pt

```

```python

import torch

torch.load('./model.pt', map_location='mps')

```

Error thrown [from serialization.py](https://github.com/pytorch/pytorch/blob/bd1a35dfc894eced537b825e5569836e6a91266d/torch/serialization.py#L178):

```

Exception has occurred: RuntimeError (note: full exception trace is shown but execution is paused at: _run_module_as_main)

don't know how to restore data location of torch.storage._UntypedStorage (tagged with mps)

File "/Users/birch/git/imagen-pytorch-cene/venv/lib/python3.9/site-packages/torch/serialization.py", line 178, in default_restore_location

raise RuntimeError("don't know how to restore data location of "

File "/Users/birch/git/imagen-pytorch-cene/venv/lib/python3.9/site-packages/torch/serialization.py", line 970, in restore_location

return default_restore_location(storage, map_location)

File "/Users/birch/git/imagen-pytorch-cene/venv/lib/python3.9/site-packages/torch/serialization.py", line 1001, in load_tensor

wrap_storage=restore_location(storage, location),

File "/Users/birch/git/imagen-pytorch-cene/venv/lib/python3.9/site-packages/torch/serialization.py", line 1019, in persistent_load

load_tensor(dtype, nbytes, key, _maybe_decode_ascii(location))

File "/Users/birch/git/imagen-pytorch-cene/venv/lib/python3.9/site-packages/torch/serialization.py", line 1049, in _load

result = unpickler.load()

File "/Users/birch/git/imagen-pytorch-cene/venv/lib/python3.9/site-packages/torch/serialization.py", line 712, in load

return _load(opened_zipfile, map_location, pickle_module, **pickle_load_args)

File "/Users/birch/git/imagen-pytorch-cene/repro.py", line 2, in <module>

torch.load('./ImagenT5-3B/model.pt', map_location='mps')

File "/Users/birch/anaconda3/envs/torch-nightly/lib/python3.9/runpy.py", line 87, in _run_code

exec(code, run_globals)

File "/Users/birch/anaconda3/envs/torch-nightly/lib/python3.9/runpy.py", line 97, in _run_module_code

_run_code(code, mod_globals, init_globals,

File "/Users/birch/anaconda3/envs/torch-nightly/lib/python3.9/runpy.py", line 268, in run_path

return _run_module_code(code, init_globals, run_name,

File "/Users/birch/anaconda3/envs/torch-nightly/lib/python3.9/runpy.py", line 87, in _run_code

exec(code, run_globals)

File "/Users/birch/anaconda3/envs/torch-nightly/lib/python3.9/runpy.py", line 197, in _run_module_as_main (Current frame)

return _run_code(code, main_globals, None,

```

I think the solution will involve adding a [`register_package()` entry](https://github.com/pytorch/pytorch/blob/bd1a35dfc894eced537b825e5569836e6a91266d/torch/serialization.py#L160-L161) for the mps backend.

### Versions

```

PyTorch version: 1.13.0.dev20220610

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: macOS 12.4 (arm64)

GCC version: Could not collect

Clang version: 13.0.0 (clang-1300.0.29.30)

CMake version: version 3.22.1

Libc version: N/A

Python version: 3.9.12 (main, Jun 1 2022, 06:34:44) [Clang 12.0.0 ] (64-bit runtime)

Python platform: macOS-12.4-arm64-64bit

Is CUDA available: False

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] imagen-pytorch==0.0.0

[pip3] numpy==1.22.4

[pip3] torch==1.13.0.dev20220610

[pip3] torchaudio==0.14.0.dev20220603

[pip3] torchvision==0.14.0.dev20220609

[conda] numpy 1.23.0rc2 pypi_0 pypi

[conda] torch 1.13.0.dev20220606 pypi_0 pypi

[conda] torchaudio 0.14.0.dev20220603 pypi_0 pypi

[conda] torchvision 0.14.0a0+f9f721d pypi_0 pypi

```

cc @mruberry @kulinseth @albanD

|

https://github.com/pytorch/pytorch/issues/79384

|

closed

|

[

"module: serialization",

"triaged",

"module: mps"

] | 2022-06-12T19:30:24Z

| 2022-08-06T09:25:21Z

| null |

Birch-san

|

huggingface/datasets

| 4,478

|

Dataset slow during model training

|

## Describe the bug

While migrating towards 🤗 Datasets, I encountered an odd performance degradation: training suddenly slows down dramatically. I train with an image dataset using Keras and execute a `to_tf_dataset` just before training.

First, I have optimized my dataset following https://discuss.huggingface.co/t/solved-image-dataset-seems-slow-for-larger-image-size/10960/6, which actually improved the situation from what I had before but did not completely solve it.

Second, I saved and loaded my dataset using `tf.data.experimental.save` and `tf.data.experimental.load` before training (for which I would have expected no performance change). However, I ended up with the performance I had before tinkering with 🤗 Datasets.

Any idea what causes this and how to speed up training with 🤗 Datasets?

## Steps to reproduce the bug

```python

# Sample code to reproduce the bug

from datasets import load_dataset

import os

dataset_dir = "./dataset"

prep_dataset_dir = "./prepdataset"

model_dir = "./model"

# Load Data

dataset = load_dataset("Lehrig/Monkey-Species-Collection", "downsized")

def read_image_file(example):
    with open(example["image"].filename, "rb") as f:
        example["image"] = {"bytes": f.read()}
    return example

dataset = dataset.map(read_image_file)

dataset.save_to_disk(dataset_dir)

# Preprocess

from datasets import (

Array3D,

DatasetDict,

Features,

load_from_disk,

Sequence,

Value

)

import numpy as np

from transformers import ImageFeatureExtractionMixin

dataset = load_from_disk(dataset_dir)

num_classes = dataset["train"].features["label"].num_classes

one_hot_matrix = np.eye(num_classes)

feature_extractor = ImageFeatureExtractionMixin()

def to_pixels(image):
    image = feature_extractor.resize(image, size=size)
    image = feature_extractor.to_numpy_array(image, channel_first=False)
    image = image / 255.0
    return image

def process(examples):
    examples["pixel_values"] = [
        to_pixels(image) for image in examples["image"]
    ]
    examples["label"] = [
        one_hot_matrix[label] for label in examples["label"]
    ]
    return examples

features = Features({

"pixel_values": Array3D(dtype="float32", shape=(size, size, 3)),

"label": Sequence(feature=Value(dtype="int32"), length=num_classes)

})

prep_dataset = dataset.map(

process,

remove_columns=["image"],

batched=True,

batch_size=batch_size,

num_proc=2,

features=features,

)

prep_dataset = prep_dataset.with_format("numpy")

# Split

train_dev_dataset = prep_dataset['test'].train_test_split(

test_size=test_size,

shuffle=True,

seed=seed

)

train_dev_test_dataset = DatasetDict({

'train': train_dev_dataset['train'],

'dev': train_dev_dataset['test'],

'test': prep_dataset['test'],

})

train_dev_test_dataset.save_to_disk(prep_dataset_dir)

# Train Model

import datetime

import tensorflow as tf

from tensorflow.keras import Sequential

from tensorflow.keras.applications import InceptionV3

from tensorflow.keras.layers import Dense, Dropout, GlobalAveragePooling2D, BatchNormalization

from tensorflow.keras.callbacks import ReduceLROnPlateau, ModelCheckpoint, EarlyStopping

from transformers import DefaultDataCollator

dataset = load_from_disk(prep_dataset_dir)

data_collator = DefaultDataCollator(return_tensors="tf")

train_dataset = dataset["train"].to_tf_dataset(

columns=['pixel_values'],

label_cols=['label'],

shuffle=True,

batch_size=batch_size,

collate_fn=data_collator

)

validation_dataset = dataset["dev"].to_tf_dataset(

columns=['pixel_values'],

label_cols=['label'],

shuffle=False,

batch_size=batch_size,

collate_fn=data_collator

)

print(f'{datetime.datetime.now()} - Saving Data')

tf.data.experimental.save(train_dataset, model_dir+"/train")

tf.data.experimental.save(validation_dataset, model_dir+"/val")

print(f'{datetime.datetime.now()} - Loading Data')

train_dataset = tf.data.experimental.load(model_dir+"/train")

validation_dataset = tf.data.experimental.load(model_dir+"/val")

shape = np.shape(dataset["train"][0]["pixel_values"])

backbone = InceptionV3(

include_top=False,

weights='imagenet',

input_shape=shape

)

for layer in backbone.layers:
    layer.trainable = False

model = Sequential()

model.add(backbone)

model.add(GlobalAveragePooling2D())

model.add(Dense(128, activation='relu'))

model.add(BatchNormalization())

model.add(Dropout(0.3))

model.add(Dense(64, activation='relu'))

model.add(BatchNormalization())

model.add(Dropout(0.3))

model.add(Dense(10, activation='softmax'))

model.compile(

optimizer='adam',

loss='categorical_crossentropy',

metrics=['accuracy']

)

print(model.summary())

earlyStopping = EarlyStopping(

monitor='val_loss',

patience=10,

verbose=0,

mode='min'

)

mcp_save = ModelCheckp

|

https://github.com/huggingface/datasets/issues/4478

|

open

|

[

"bug"

] | 2022-06-11T19:40:19Z

| 2022-06-14T12:04:31Z

| 5

|

lehrig

|

pytorch/pytorch

| 79,332

|

How to reimplement same behavior in AdaptiveAvgPooling2D

|

### 📚 The doc issue

Hi, I am trying to write an op that mimics the behavior of PyTorch's AdaptiveAvgPool2d, but I cannot get the results to match.

Here is what I do:

```

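import numpy as np
import torch
import torch.nn.functional as F

def test_pool():
    a = np.fromfile("in.bin", dtype=np.float32)
    a = np.reshape(a, [1, 12, 25, 25])
    a = torch.as_tensor(a)
    # Adaptive pooling to a 7x7 output
    b = F.adaptive_avg_pool2d(a, [7, 7])
    print(b)
    print(b.shape)
    # Fixed-window pooling: kernel 7, stride 3
    avg_pool = torch.nn.AvgPool2d([7, 7], [3, 3])
    c = avg_pool(a)
    print(c)
    print(c.shape)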

```

`b` and `c` are not equal.

My algorithm was:

```

stride = input_size // output_size

k = input_size - (output_size - 1) * stride

padding = 0

```

I think there may be some gap between this and the real algorithm in PyTorch, but I cannot find it documented anywhere.

Please clarify.
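
For reference, adaptive average pooling does not use one fixed kernel and stride. To the best of my knowledge, output index `i` averages the inputs from `floor(i * in / out)` up to, but not including, `ceil((i + 1) * in / out)`, so window sizes can vary along an axis. The pure-Python 1-D sketch below illustrates that rule; the function names are illustrative and are not PyTorch APIs.

```python
from typing import List, Tuple


def adaptive_windows(input_size: int, output_size: int) -> List[Tuple[int, int]]:
    """Half-open [start, end) input ranges averaged for each output index."""
    return [
        (
            (i * input_size) // output_size,                          # floor(i * in / out)
            ((i + 1) * input_size + output_size - 1) // output_size,  # ceil((i + 1) * in / out)
        )
        for i in range(output_size)
    ]


def adaptive_avg_pool1d(values: List[float], output_size: int) -> List[float]:
    """Average each window; applying this per axis gives the 2-D case."""
    return [
        sum(values[s:e]) / (e - s)
        for s, e in adaptive_windows(len(values), output_size)
    ]


windows = adaptive_windows(25, 7)
print(windows)
print([e - s for s, e in windows])
```

For a 25-wide input and a 7-wide output, the windows alternate between 4 and 5 elements and overlap by one, which a single `AvgPool2d` kernel of 7 with stride 3 cannot reproduce.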

### Suggest a potential alternative/fix

Details in adaptiveavgpool2d

|

https://github.com/pytorch/pytorch/issues/79332

|

closed

|

[] | 2022-06-11T02:06:59Z

| 2022-08-18T11:39:51Z

| null |

lucasjinreal

|

pytorch/functorch

| 867

|

Why is using vmap(jacrev) for BatchNorm2d in non-tracking mode not working?

|

Hi, experts.

I am trying to use vmap(jacrev) to calculate the per-sample jacobian in a batch for my network during inference. However, when there is BatchNorm2d, it does not work. Because during inference, BatchNorm2d is simply applying the statistics previously tracked (and not doing any inter-sample operations), I think it should work just as any other simple operation from my understanding. Is there a way for me to make it work, or is there anything I am misunderstanding?

Below is my minimal code:

```

from functorch import jacrev, vmap

import torch

from torch import nn

layers = nn.Sequential(
    nn.Conv2d(3, 3, kernel_size=(3, 3)),
    nn.BatchNorm2d(3, track_running_stats=False),
)

x = torch.randn(4, 3, 30, 30)

j = vmap(jacrev(layers))(x)

```

And I get this error in the bn layer

`ValueError: expected 4D input (got 3D input)`

I think this should fundamentally be doable, and the failure might just be due to how vmap and jacrev are implemented.

Is there any simple workaround, or am I misunderstanding anything?

Thank you for any help

|

https://github.com/pytorch/functorch/issues/867

|

closed

|

[] | 2022-06-11T00:15:32Z

| 2022-07-18T18:44:14Z

| 6

|

kwmaeng91

|

pytorch/pytorch

| 79,106

|

How to find the code in '...'?

|

https://github.com/pytorch/pytorch/blob/4305f8e9bda34f18eb7aacab51c63651cfc61802/torch/storage.py#L34

Here, I want to read the implementation of the `.cuda` function, but I cannot find any code for this API. 😢

Hope someone could help me!❤

cc @ngimel

|

https://github.com/pytorch/pytorch/issues/79106

|

closed

|

[

"module: cuda",

"triaged"

] | 2022-06-08T02:49:10Z

| 2022-06-13T20:44:10Z

| null |

juinshell

|

pytorch/data

| 574

|

Support offloading data pre-processing to auxiliary devices

|

### 🚀 The feature, motivation and pitch

Occasionally one might find that their GPU is idle due to a bottleneck on the input data pre-processing pipeline (which might include data loading/filtering/manipulation/augmentation/etc). In these cases one could improve resource utilization by offloading some of the pre-processing to auxiliary CPU devices.

I have demonstrated how to do this using gRPC in the following blog post: https://towardsdatascience.com/overcoming-ml-data-preprocessing-bottlenecks-with-grpc-ca30fdc01bee

TensorFlow has built in (experimental) support for this feature (https://www.tensorflow.org/api_docs/python/tf/data/experimental/service) that enables offloading in a few simple steps.

The request here is to include PyTorch APIs for offloading data pre-processing in a manner that is simple and straightforward for the user, similar to the TensorFlow APIs (though preferably without any limitations on the pre-processing workload).

### Alternatives

_No response_

### Additional context

_No response_

cc @SsnL @VitalyFedyunin @ejguan @NivekT

|

https://github.com/meta-pytorch/data/issues/574

|

open

|

[

"feature",

"module: dataloader",

"triaged",

"module: data"

] | 2022-06-07T10:12:00Z

| 2022-07-06T18:12:47Z

| 2

|

czmrand

|

pytorch/kineto

| 615

|

How to limit the scope of the profiler?

|

I am wondering if it is possible to limit the scope of the profiler to a particular part of the neural network. Currently, I am trying to analyze the bottleneck of my model using the following pseudocode:

```

import torch.profiler as profiler

with profiler.profile(
    activities=[
        profiler.ProfilerActivity.CPU,
        profiler.ProfilerActivity.CUDA,
    ],
    profile_memory=True,
    schedule=profiler.schedule(wait=5, warmup=2, active=1, repeat=1),
    on_trace_ready=profiler.tensorboard_trace_handler(tensorboard_logdir)
) as p:
    for sample in dataloader:
        model(sample)
        p.step()  # advance the profiler schedule once per iteration

```

However, the trace I created is still way too large (~800MB) for the tensorboard to function properly. Apparently tensorboard is only able to load the trace if it is smaller than about 500 MB, so I am thinking about limiting the trace of the profiler to only look at part of the neural net that leads to the issue. However, it seems like a warmup is necessary, so inserting the profiler.profile within a network will generate inaccurate results. Is there a way to limit the scope of the profiler without breaking the interface?

|

https://github.com/pytorch/kineto/issues/615

|

closed

|

[] | 2022-06-06T20:34:35Z

| 2022-06-21T17:57:42Z

| null |

hyhuang00

|

pytorch/torchx

| 510

|

Implement an HPO builtin

|

## Description

Add a builtin component for launching HPO (hyper-parameter optimization) jobs. At a high-level something akin to:

```

# for grid search

$ torchx run -s kubernetes hpo.grid_search --paramspacefile=~/parameters.json --component dist.ddp

# for bayesian search

$ torchx run -s kubernetes hpo.bayesian ...

```

In both cases we use the Ax/TorchX integration to run the HPO driver job. (see motivation section below for details)

## Motivation/Background

TorchX already integrates with Ax, which supports both Bayesian and grid-search HPO. Some definitions before we get started:

1. Ax: Experiment - ([docs](https://ax.dev/docs/glossary.html#experiment)) Defines the HPO search space and holds the optimizer state. Vends out the next set of parameters to search based on the observed results (relevant for Bayesian and Bandit optimizations, not so much for grid search).

2. Ax: Trials - ([docs](https://ax.dev/docs/glossary.html#trial)) A step in an experiment, aka a (training) job that runs with a specific set of hyper-parameters as vended out by the optimizer in the experiment

3. Ax: Runner - ([docs](https://ax.dev/docs/glossary.html#runner)) Responsible for launching trials.

Ax/TorchX integration is done at the Runner level. We implemented an [`ax/TorchXRunner`](https://ax.dev/api/runners.html#module-ax.runners.torchx) that implements Ax's `Runner` interface (do not confuse this with the TorchX runner. TorchX itself defines a runner concept). The `ax/TorchXRunner` runs the ax Trials using TorchX.

The [`ax/TorchXRunnerTest`](https://github.com/facebook/Ax/blob/main/ax/runners/tests/test_torchx.py#L72) serves as a full end-to-end example of how everything works. In summary the test runs a bayesian HPO to minimize the ["booth" function](https://en.wikipedia.org/wiki/Test_functions_for_optimization). **Note that in practice this function is replaced by your "trainer"**. The main module that computes the booth function given the parameters `x_1` and `x_2` as inputs is defined in [`torchx.apps.utils.booth`](https://github.com/pytorch/torchx/blob/main/torchx/apps/utils/booth_main.py).

The abridged code looks something like this:

```python

parameters: List[Parameter] = [

RangeParameter(

name="x1",

lower=-10.0,

upper=10.0,

parameter_type=ParameterType.FLOAT,

),

RangeParameter(

name="x2",

lower=-10.0,

upper=10.0,

parameter_type=ParameterType.FLOAT,

),

]

experiment = Experiment(
    name="torchx_booth_sequential_demo",
    search_space=SearchSpace(parameters=self._parameters),
    optimization_config=OptimizationConfig(
        objective=Objective(
            metric=TorchXMetric(name="booth_eval"),
            minimize=True,
        ),
    ),
    runner=TorchXRunner(
        tracker_base=self.test_dir,
        component=utils.booth,
        scheduler="local_cwd",
        cfg={"prepend_cwd": True},
    ),
)

scheduler = Scheduler(

experiment=experiment,

generation_strategy=choose_generation_strategy(search_space=experiment.search_space),

options=SchedulerOptions(),

)

for _ in range(3):
    scheduler.run_n_trials(max_trials=2)

scheduler.report_results()

```

## Detailed Proposal

The task here is essentially to create pre-packaged applications for the code above. We can define two types of HPO apps by the "strategy" used:

1. hpo.grid_search

2. hpo.bayesian

Each application will come with a companion "component" (e.g. `hpo.grid_search` and `hpo.bayesian`). The applications should be designed to take as input:

1. parameter space

2. what the objective function is (e.g. trainer)

3. torchx cfgs (e.g. scheduler, scheduler runcfg, etc)

4. ax experiment configs

The challenge is to "parameterize" the application correctly and sanely, so that the user can pass these arguments from the CLI. For complex parameters such as the parameter space, one might consider taking a file in a specific format rather than conjuring up a complex string encoding to pass as CLI input.

For instance, for the `20 x 20` space over `x_1` and `x_2` in the example above, rather than taking the parameter space as:

```

$ torchx run hpo.bayesian --parameter_space x_1=-10:10,x_2=-10:10

```

One can take it as a well defined python parameter file:

```

# params.py

# just defines the parameters using the regular Ax APIs

parameters: List[Parameter] = [

RangeParameter(

name="x1",

lower=-10.0,

upper=10.0,

parameter_type=ParameterType.FLOAT,

),

RangeParameter(

name="x2",

low

|

https://github.com/meta-pytorch/torchx/issues/510

|

open

|

[

"enhancement",

"module: components"

] | 2022-06-03T20:06:10Z

| 2022-10-27T01:55:08Z

| 0

|

kiukchung

|

huggingface/datasets

| 4,439

|

TIMIT won't load after manual download: Errors about files that don't exist

|

## Describe the bug

I get the message from HuggingFace that it must be downloaded manually. From the URL provided in the message, I got to the UPenn page for manual download. (UPenn apparently wants $250 for the dataset?) So I obtained a copy from a friend and also a smaller version from Kaggle. But in both cases the HF dataloader fails: it is looking for files that don't exist anywhere in the dataset. It looks for files with lower-case letters like `**test*` (all the filenames in both my copies are uppercase) and for certain file extensions that exclude the .DOC which is provided in TIMIT:

## Steps to reproduce the bug

```python

data = load_dataset('timit_asr', 'clean')['train']

```

## Expected results

The dataset should load with no errors.

## Actual results

This error message:

```

File "/home/ubuntu/envs/data2vec/lib/python3.9/site-packages/datasets/data_files.py", line 201, in resolve_patterns_locally_or_by_urls

raise FileNotFoundError(error_msg)

FileNotFoundError: Unable to resolve any data file that matches '['**test*', '**eval*']' at /home/ubuntu/datasets/timit with any supported extension ['csv', 'tsv', 'json', 'jsonl', 'parquet', 'txt', 'blp', 'bmp', 'dib', 'bufr', 'cur', 'pcx', 'dcx', 'dds', 'ps', 'eps', 'fit', 'fits', 'fli', 'flc', 'ftc', 'ftu', 'gbr', 'gif', 'grib', 'h5', 'hdf', 'png', 'apng', 'jp2', 'j2k', 'jpc', 'jpf', 'jpx', 'j2c', 'icns', 'ico', 'im', 'iim', 'tif', 'tiff', 'jfif', 'jpe', 'jpg', 'jpeg', 'mpg', 'mpeg', 'msp', 'pcd', 'pxr', 'pbm', 'pgm', 'ppm', 'pnm', 'psd', 'bw', 'rgb', 'rgba', 'sgi', 'ras', 'tga', 'icb', 'vda', 'vst', 'webp', 'wmf', 'emf', 'xbm', 'xpm', 'zip']

```

But this is a strange sort of error: why is it looking for lower-case file names when all the TIMIT dataset filenames are uppercase? Why does it exclude .DOC files when the only parts of the TIMIT data set with "TEST" in them have ".DOC" extensions? ...I wonder, how was anyone able to get this to work in the first place?

The files in the dataset look like the following:

```

│ PHONCODE.DOC

│ PROMPTS.TXT

│ SPKRINFO.TXT

│ SPKRSENT.TXT

│ TESTSET.DOC

```

...so why are these being excluded by the dataset loader?
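
The error message is consistent with case-sensitive pattern matching combined with an extension allow-list. The sketch below only illustrates that combination with the standard library; it is not the `datasets` resolution code, the `would_match` helper is hypothetical, and `SUPPORTED` is a small subset of the extension list quoted in the error.

```python
from fnmatch import fnmatchcase
from pathlib import PurePath

PATTERNS = ["**test*", "**eval*"]  # patterns quoted in the error message
SUPPORTED = {"csv", "tsv", "json", "jsonl", "parquet", "txt"}  # subset of the quoted list


def would_match(filename: str) -> bool:
    """Case-sensitive pattern match plus extension check (illustrative only)."""
    ext = PurePath(filename).suffix.lstrip(".")
    return ext in SUPPORTED and any(fnmatchcase(filename, p) for p in PATTERNS)


for name in ["TESTSET.DOC", "PROMPTS.TXT", "test.csv"]:
    print(name, would_match(name))
```

Under these assumptions, an upper-case name such as `TESTSET.DOC` fails both checks, while a lower-case `test.csv` passes.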

## Environment info

- `datasets` version: 2.2.2

- Platform: Linux-5.4.0-1060-aws-x86_64-with-glibc2.27

- Python version: 3.9.9

- PyArrow version: 8.0.0

- Pandas version: 1.4.2

|

https://github.com/huggingface/datasets/issues/4439

|

closed

|

[

"bug"

] | 2022-06-02T16:35:56Z

| 2022-06-03T08:44:17Z

| 3

|

drscotthawley

|

pytorch/vision

| 6,124

|

How to time 'model.to(device)' correctly?

|

I am using PyTorch's API in my Python code to measure the time it takes to move different layers of resnet152 to the device (GPU, V100). However, I cannot get a stable result.

Here is my code:

```python

import time

import torch
import torch.nn as nn
import torchvision

device = torch.device('cuda:3' if torch.cuda.is_available() else 'cpu')
model = torchvision.models.resnet152(pretrained=True)

def todevice(_model_, _device_=device):
    T0 = time.perf_counter()
    _model_.to(_device_)
    torch.cuda.synchronize()
    T1 = time.perf_counter()
    print("model to device %s cost:%s ms" % (_device_, ((T1 - T0) * 1000)))

# First six child modules of the loaded ResNet-152
model1 = nn.Sequential(*list(model.children())[:6])
todevice(model1)

```

When I run this code at different times, I get different results, some of them implausible, as high as `200ms`.

Also, there are 4 GPUs (Tesla V100) in my lab machine, and I don't know whether the other GPUs affect my result.

Could you tell me how to time `model.to(device)` correctly? Is there anything wrong with my code or my lab environment?
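
A common cause of unstable numbers like these is that the first CUDA call also pays for one-off setup such as context creation, so a single measurement mixes setup cost with the transfer itself. The harness below is a minimal sketch of the usual remedy: warm up first, synchronize before starting and before stopping the clock, and report a median over several runs. The `time_call` helper and its parameters are hypothetical; on a GPU one would pass `torch.cuda.synchronize` as `sync`, while the demo uses a CPU stand-in so it runs anywhere.

```python
import statistics
import time
from typing import Callable, Dict


def time_call(fn: Callable[[], object],
              sync: Callable[[], None] = lambda: None,
              warmup: int = 2,
              repeats: int = 5) -> Dict[str, float]:
    """Time fn() in milliseconds after warm-up runs, syncing around each run."""
    for _ in range(warmup):
        fn()    # absorbs one-off costs (context creation, caches)
    samples = []
    for _ in range(repeats):
        sync()  # make sure no earlier asynchronous work leaks into the sample
        start = time.perf_counter()
        fn()
        sync()  # wait for the work launched by fn() to finish
        samples.append((time.perf_counter() - start) * 1000.0)
    return {"median_ms": statistics.median(samples),
            "min_ms": min(samples),
            "max_ms": max(samples)}


if __name__ == "__main__":
    print(time_call(lambda: sum(range(100_000))))
```

Because `Module.to` moves parameters in place, each timed run would need to move a fresh CPU copy of the model; otherwise every run after the first measures a no-op.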

|

https://github.com/pytorch/vision/issues/6124

|

closed

|

[

"question"

] | 2022-06-02T11:55:14Z

| 2022-06-06T08:34:34Z

| null |

juinshell

|

pytorch/functorch

| 848

|

AOTAutograd makes unsafe assumptions about what the backward pass will look like

|

## Context: how AOTAutograd works today

Given a function `f`:

- AOTAutograd traces out `run_forward_and_backward_f(*args, *grad_outputs)` to produce `forward_and_backward_trace`

- AOTAutograd partitions `forward_and_backward_trace` into a forward_trace and a backward_trace

- AOTAutograd compiles the forward_trace and backward_trace separately

- The compiled_forward_trace and compiled_backward_trace are stitched into an autograd.Function

## The Problem

In order to trace `run_forward_and_backward_f(*args, *grad_outputs)`, AOTAutograd needs to construct a Proxy for the grad_outputs. This ends up assuming properties of the grad_output: for example, AOTAutograd assumes that the grad_outputs are contiguous.

There are some more adversarial examples that we could construct. If the backward formula of at::sin were instead:

```

def sin_backward(grad_output, input):
    if grad_output.is_sparse():
        return grad_output * input.sin()
    return grad_output * input.cos()

```

then, depending on the properties of the input, the backward that should get executed is different. If AOTAutograd assumes that the Proxy is dense and contiguous, then the backward pass of the generated autograd.Function would be incorrect.

## Potential proposal

Proposal: delay tracing the backward pass until the backward pass is invoked.

So, given a function `f`:

- AOTAutograd constructs a trace of f (that includes intermediates as outputs), `forward_trace`

- AOTAutograd constructs an autograd.Function that has `compiled(forward_trace)` as the forward pass

The autograd.Function's backward pass, when invoked:

- traces out `run_forward_and_backward_f(*args, *grad_outputs)` to produce `forward_and_backward_trace`

- takes the difference of `forward_and_backward_trace` and `forward_trace` to produce `backward_trace`.

- compiles `backward_trace` into `compiled_backward_trace`

- then invokes it.
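
The delayed-tracing idea above can be sketched as a toy in plain Python. This is not AOTAutograd code: `GradMeta`, `LazyBackward`, and `trace_sin_backward` are hypothetical names, scalars stand in for tensors, and "tracing" is reduced to picking a closure based on the observed `grad_output` properties, mirroring the adversarial `sin_backward` example.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class GradMeta:
    """Properties of grad_output that a traced backward may depend on."""
    is_sparse: bool
    is_contiguous: bool = True


Backward = Callable[[float, float], float]


def trace_sin_backward(meta: GradMeta) -> Backward:
    """Stand-in for tracing and compiling; mirrors the adversarial sin_backward."""
    if meta.is_sparse:
        return lambda grad, x: grad * math.sin(x)
    return lambda grad, x: grad * math.cos(x)


class LazyBackward:
    """Traces the backward on first use for each distinct GradMeta."""

    def __init__(self, trace: Callable[[GradMeta], Backward]) -> None:
        self._trace = trace
        self._cache: Dict[GradMeta, Backward] = {}
        self.trace_count = 0

    def __call__(self, meta: GradMeta, grad: float, saved_input: float) -> float:
        if meta not in self._cache:
            self._cache[meta] = self._trace(meta)
            self.trace_count += 1
        return self._cache[meta](grad, saved_input)


backward = LazyBackward(trace_sin_backward)
dense = backward(GradMeta(is_sparse=False), 2.0, 0.0)   # 2 * cos(0)
sparse = backward(GradMeta(is_sparse=True), 2.0, 0.0)   # 2 * sin(0)
backward(GradMeta(is_sparse=False), 1.0, 0.0)           # cache hit, no retrace
print(dense, sparse, backward.trace_count)
```

The point of the toy is that the backward is chosen only once the real `grad_output` properties are known, and the cache key includes those properties, so a dense and a sparse gradient never share a compiled backward.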

Things that we haven't mentioned that will need to be thought about:

- how does AOTAutograd's rematerialization come into play here?

Things that we haven't mentioned that should be orthogonal:

- caching. `compiled(forward_trace)` needs a cache that uses the inputs as keys (among other things), `compiled(backward_trace)` needs a cache that takes the (inputs, grad_outputs) as keys.

- what if the backward is user-defined (e.g., autograd.Function) and isn't traceable? See https://github.com/pytorch/pytorch/issues/93723 for ideas

## Alternatives

Keep the current scheme (AOTAutograd traces out both the forward+backward pass at the time of the forward), but somehow prove to ourselves that the produced trace of the backward pass is always correct.

cc @Chillee @anijain2305 @ezyang @anjali411 @albanD

|

https://github.com/pytorch/functorch/issues/848

|

open

|

[] | 2022-06-01T18:18:28Z

| 2023-02-01T01:10:36Z

| 4

|

zou3519

|

huggingface/dataset-viewer

| 332

|

Change moonlanding app token?

|

Should we replace `dataset-preview-backend` with `datasets-server`:

- here: https://github.com/huggingface/moon-landing/blob/f2ee3896cff3aa97aafb3476e190ef6641576b6f/server/models/App.ts#L16

- and here: https://github.com/huggingface/moon-landing/blob/82e71c10ed0b385e55a29f43622874acfc35a9e3/server/test/end_to_end_apps.ts#L243-L271

What are the consequences then? How to do it without too much downtime?

|

https://github.com/huggingface/dataset-viewer/issues/332

|

closed

|

[

"question"

] | 2022-06-01T09:29:12Z

| 2022-09-19T09:33:33Z

| null |

severo

|

huggingface/dataset-viewer

| 325

|

Test if /valid is a blocking request

|

https://github.com/huggingface/datasets-server/issues/250#issuecomment-1142013300

> > the requests to /valid are very long: do they block the incoming requests?)

> Depends on if your long running query is blocking the GIL or not. If you have async calls, it should be able to switch and take care of other requests, if it's computing something then yeah, probably blocking everything else.

- [ ] find if the long requests like /valid are blocking the concurrent requests

- [ ] if so: fix it

|

https://github.com/huggingface/dataset-viewer/issues/325

|

closed

|

[

"bug",

"question"

] | 2022-05-31T13:43:20Z

| 2022-09-16T17:39:20Z

| null |

severo

|

huggingface/datasets

| 4,419

|

Update `unittest` assertions over tuples from `assertEqual` to `assertTupleEqual`

|

**Is your feature request related to a problem? Please describe.**

This is more of a readability improvement than a proposal: wouldn't it be better to use `assertTupleEqual` rather than `assertEqual` when comparing tuples? `unittest` added that method in Python 3.1, as detailed at https://docs.python.org/3/library/unittest.html#unittest.TestCase.assertTupleEqual, so it may be worth updating.

Find an example of an `assertEqual` over a tuple in 🤗 `datasets` unit tests over an `ArrowDataset` at https://github.com/huggingface/datasets/blob/0bb47271910c8a0b628dba157988372307fca1d2/tests/test_arrow_dataset.py#L570

**Describe the solution you'd like**

Start slowly replacing all the `assertEqual` statements with `assertTupleEqual` if the assertion is done over a Python tuple, as we're doing with the Python lists using `assertListEqual` rather than `assertEqual`.

**Additional context**

If so, please let me know and I'll try to go over the tests and create a PR if applicable, otherwise, if you consider this should stay as `assertEqual` rather than `assertSequenceEqual` feel free to close this issue! Thanks 🤗
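For context, a small sketch of what the change would and would not buy. The test class and values are made up for illustration. When both arguments are tuples, `assertEqual` already dispatches to the tuple-specific comparison, so the practical difference is that `assertTupleEqual` also fails when an argument is not a tuple.

```python
import unittest


class ShapeAssertions(unittest.TestCase):
    def test_tuple_equal(self):
        shape = (4, 3)
        # Both forms pass and produce the same diff on failure for tuples.
        self.assertEqual(shape, (4, 3))
        self.assertTupleEqual(shape, (4, 3))

    def test_rejects_non_tuples(self):
        # Two equal lists pass assertEqual but fail assertTupleEqual,
        # because the latter also checks that both arguments are tuples.
        self.assertEqual([4, 3], [4, 3])
        with self.assertRaises(AssertionError):
            self.assertTupleEqual([4, 3], [4, 3])
```

Saved to a file, the class can be run with `python -m unittest <file>`.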

|

https://github.com/huggingface/datasets/issues/4419

|

closed

|

[

"enhancement"

] | 2022-05-30T12:13:18Z

| 2022-09-30T16:01:37Z

| 3

|

alvarobartt

|

huggingface/datasets

| 4,417

|

how to convert a dict generator into a huggingface dataset.

|

### Link

_No response_

### Description

Hey there, I have used seqio to get a well-distributed mixture of samples from multiple datasets. However, the output from seqio is a Python generator of dicts, which I cannot convert back into a Hugging Face dataset.

The generator contains all the samples needed for training the model, but I cannot convert it into a Hugging Face dataset.

The code looks like this:

```

for ex in seqio_data:
    print(ex["text"])

```

I need to convert the seqio_data (generator) into huggingface dataset.

the complete seqio code goes here:

```

import functools

import seqio
import tensorflow as tf
import t5.data
from datasets import load_dataset
from t5.data import postprocessors
from t5.data import preprocessors
from t5.evaluation import metrics
from seqio import FunctionDataSource, utils

TaskRegistry = seqio.TaskRegistry


def gen_dataset(split, shuffle=False, seed=None, column="text", dataset_params=None):
    dataset = load_dataset(**dataset_params)
    if shuffle:
        if seed:
            dataset = dataset.shuffle(seed=seed)
        else:
            dataset = dataset.shuffle()
    while True:
        for item in dataset[str(split)]:
            yield item[column]


def dataset_fn(split, shuffle_files, seed=None, dataset_params=None):
    return tf.data.Dataset.from_generator(
        functools.partial(gen_dataset, split, shuffle_files, seed, dataset_params=dataset_params),
        output_signature=tf.TensorSpec(shape=(), dtype=tf.string, name=dataset_name)
    )


@utils.map_over_dataset
def target_to_key(x, key_map, target_key):
    """Assign the value from the dataset to target_key in key_map"""
    return {**key_map, target_key: x}


dataset_name = 'oscar-corpus/OSCAR-2109'
subset= 'mr'
dataset_params = {"path": dataset_name, "language":subset, "use_auth_token":True}
dataset_shapes = None

TaskRegistry.add(
    "oscar_marathi_corpus",
    source=seqio.FunctionDataSource(
        dataset_fn=functools.partial(dataset_fn, dataset_params=dataset_params),
        splits=("train", "validation"),
        caching_permitted=False,
        num_input_examples=dataset_shapes,
    ),
    preprocessors=[
        functools.partial(
            target_to_key, key_map={
                "targets": None,
            }, target_key="targets")],
    output_features={"targets": seqio.Feature(vocabulary=seqio.PassThroughVocabulary, add_eos=False, dtype=tf.string, rank=0)},
    metric_fns=[]
)

dataset = seqio.get_mixture_or_task("oscar_marathi_corpus").get_dataset(
    sequence_length=None,
    split="train",
    shuffle=True,
    num_epochs=1,
    shard_info=seqio.ShardInfo(index=0, num_shards=10),
    use_cached=False,
    seed=42
)

for _, ex in zip(range(5), dataset):
    print(ex['targets'].numpy().decode())

```
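A possible approach, sketched with the standard library only: materialize the generator into a column-oriented dict, which `datasets.Dataset.from_dict` accepts. `examples_to_columns` is a hypothetical helper and the toy generator stands in for `seqio_data`. Values coming from seqio are tensors and would first need `.numpy().decode()` as in the loop above, and the infinite `while True` loop in `gen_dataset` needs a bound (here `limit`) before anything can be materialized. Newer `datasets` releases also provide `Dataset.from_generator`, which may be a better fit if your version has it.

```python
from itertools import islice


def examples_to_columns(examples, limit=None):
    """Collect an iterable of dicts into a dict of equal-length lists."""
    columns = {}
    for count, example in enumerate(islice(examples, limit)):
        # Backfill columns that first appear after some rows were read.
        for key in example:
            columns.setdefault(key, [None] * count)
        # Append this row's value (or None) to every known column.
        for key, values in columns.items():
            values.append(example.get(key))
    return columns


def toy_generator():
    """Stand-in for the seqio iterator (hypothetical data)."""
    n = 0
    while True:  # infinite, like gen_dataset above
        yield {"targets": f"sentence {n}"}
        n += 1


columns = examples_to_columns(toy_generator(), limit=3)
print(columns)
# With the `datasets` library installed, the result could then be wrapped:
#   from datasets import Dataset
#   ds = Dataset.from_dict(columns)
```

Materializing everything in memory is only reasonable for data that fits in RAM; for larger mixtures a streaming route would be needed.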

### Owner

_No response_

|

https://github.com/huggingface/datasets/issues/4417

|

closed

|

[

"question"

] | 2022-05-29T16:28:27Z

| 2022-09-16T14:44:19Z

| null |

StephennFernandes

|

pytorch/pytorch

| 78,365

|

How to calculate the gradient of the previous layer when the gradient of the latter layer is given?

|

Hi there. Can someone help me solve this problem? If the gradient of a certain layer is known, how can I use the torch API to calculate the gradient of the previous layer? I would appreciate a timely reply.
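For reference, the relationship being asked about is the chain rule: for a linear layer `y = W x + b` with a known upstream gradient `dL/dy`, the gradient with respect to the layer input is `W^T (dL/dy)`. The sketch below works this out in plain Python with made-up numbers; `input_gradient` is a hypothetical helper. In PyTorch, the same quantity is what `torch.autograd.grad(outputs, inputs, grad_outputs=...)` returns when the known gradient is passed as `grad_outputs`.

```python
def input_gradient(weight, grad_output):
    """Return W^T @ grad_output for a linear layer y = W x + b.

    weight: list of rows, shape (out_features, in_features)
    grad_output: dL/dy, length out_features
    """
    in_features = len(weight[0])
    return [
        sum(weight[j][i] * grad_output[j] for j in range(len(weight)))
        for i in range(in_features)
    ]


# Hypothetical 2x3 layer and upstream gradient.
W = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]
grad_y = [0.5, -1.0]

grad_x = input_gradient(W, grad_y)
print(grad_x)  # [-3.5, -4.0, -4.5]
```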

|

https://github.com/pytorch/pytorch/issues/78365

|

closed

|

[] | 2022-05-26T16:05:40Z

| 2022-05-31T14:46:40Z

| null |

mankasto

|

pytorch/data

| 469

|

Suggestion: Dataloader with RPC-based workers

|

### 🚀 The feature

A dataloader which communicates with its workers via torch.distributed.rpc API.

### Motivation, pitch

Presently, process-based workers for Dataloader mean the workers live on the same server/PC as the process consuming that data. This incurs the following limitations:

- the pre-processing workload cannot scale beyond the GPU server capacity

- with random sampling, each worker might eventually see all the dataset, which is not cache friendly

### Alternatives

_No response_

### Additional context

A proof of concept is available ~~[here](https://github.com/nlgranger/data/blob/rpc_dataloader/torchdata/rpc/dataloader.py)~~ -> https://github.com/CEA-LIST/RPCDataloader

I have not yet tested how efficient this is compared to communicating the preprocessed batch data via process pipes. Obviously the use of shared memory is lost when the worker is remote, but the TensorPipe RPC backend might be able to take advantage of other fast transfer methods (GPUDirect, RDMA?).

The load distribution scheme used in this first implementation is round-robin. I have not yet thought about how to make this configurable, both in terms of implementation and API.

|

https://github.com/meta-pytorch/data/issues/469

|

closed

|

[] | 2022-05-26T11:14:13Z

| 2024-01-30T09:29:17Z

| 2

|

nlgranger

|

pytorch/examples

| 1,010

|

Accessing weights of a pre-trained model

|

Hi,

Can you share how to print weights and biases for each layer of a pre-trained Alexnet model?

Regards,

Nivedita

|

https://github.com/pytorch/examples/issues/1010

|

closed

|

[] | 2022-05-26T06:50:13Z

| 2022-06-02T00:11:56Z

| 1

|

nivi1501

|

pytorch/TensorRT

| 1,091

|

❓ [Question] Linking error with PTQ function

|

## ❓ Question

I am getting a linking error when using `torch_tensorrt::ptq::make_int8_calibrator`. I am using the Windows build based on CMake, so I'm not sure if it's a problem with the way it was built, but I suspect not since I can use functions from ::torchscript just fine.

I am trying to create a barebones program to test ptq based on examples/int8/ptq/main.cpp, and I get this linker error whenever `torch_tensorrt::ptq::make_int8_calibrator` is used. Any help would be greatly appreciated.

## Environment

- PyTorch Version (e.g., 1.0): 1.11+cu113

- OS (e.g., Linux): Windows 10

- How you installed PyTorch (`conda`, `pip`, `libtorch`, source): libtorch from pytorch.org

- CUDA version: 11.3

## Additional context

This is the linker error that I get:

> Severity Code Description Project File Line Suppression State

Error LNK2019 unresolved external symbol "__declspec(dllimport) class torch_tensorrt::ptq::Int8Calibrator<class nvinfer1::IInt8EntropyCalibrator2,class std::unique_ptr<class torch::data::StatelessDataLoader<class torch::data::datasets::MapDataset<class torch::data::datasets::MapDataset<class datasets::CIFAR10,struct torch::data::transforms::Normalize<class at::Tensor> >,struct torch::data::transforms::Stack<struct torch::data::Example<class at::Tensor,class at::Tensor> > >,class torch::data::samplers::RandomSampler>,struct std::default_delete<class torch::data::StatelessDataLoader<class torch::data::datasets::MapDataset<class torch::data::datasets::MapDataset<class datasets::CIFAR10,struct torch::data::transforms::Normalize<class at::Tensor> >,struct torch::data::transforms::Stack<struct torch::data::Example<class at::Tensor,class at::Tensor> > >,class torch::data::samplers::RandomSampler> > > > __cdecl torch_tensorrt::ptq::make_int8_calibrator<class nvinfer1::IInt8EntropyCalibrator2,class std::unique_ptr<class torch::data::StatelessDataLoader<class torch::data::datasets::MapDataset<class torch::data::datasets::MapDataset<class datasets::CIFAR10,struct torch::data::transforms::Normalize<class at::Tensor> >,struct torch::data::transforms::Stack<struct torch::data::Example<class at::Tensor,class at::Tensor> > >,class torch::data::samplers::RandomSampler>,struct std::default_delete<class torch::data::StatelessDataLoader<class torch::data::datasets::MapDataset<class torch::data::datasets::MapDataset<class datasets::CIFAR10,struct torch::data::transforms::Normalize<class at::Tensor> >,struct torch::data::transforms::Stack<struct torch::data::Example<class at::Tensor,class at::Tensor> > >,class torch::data::samplers::RandomSampler> > > >(class std::unique_ptr<class torch::data::StatelessDataLoader<class torch::data::datasets::MapDataset<class torch::data::datasets::MapDataset<class datasets::CIFAR10,struct torch::data::transforms::Normalize<class at::Tensor> >,struct 
torch::data::transforms::Stack<struct torch::data::Example<class at::Tensor,class at::Tensor> > >,class torch::data::samplers::RandomSampler>,struct std::default_delete<class torch::data::StatelessDataLoader<class torch::data::datasets::MapDataset<class torch::data::datasets::MapDataset<class datasets::CIFAR10,struct torch::data::transforms::Normalize<class at::Tensor> >,struct torch::data::transforms::Stack<struct torch::data::Example<class at::Tensor,class at::Tensor> > >,class torch::data::samplers::RandomSampler> > >,class std::basic_string<char,struct std::char_traits<char>,class std::allocator<char> > const &,bool)" (__imp_??$make_int8_calibrator@VIInt8EntropyCalibrator2@nvinfer1@@V?$unique_ptr@V?$StatelessDataLoader@V?$MapDataset@V?$MapDataset@VCIFAR10@datasets@@U?$Normalize@VTensor@at@@@transforms@data@torch@@@datasets@data@torch@@U?$Stack@U?$Example@VTensor@at@@V12@@data@torch@@@transforms@34@@datasets@data@torch@@VRandomSampler@samplers@34@@data@torch@@U?$default_delete@V?$StatelessDataLoader@V?$MapDataset@V?$MapDataset@VCIFAR10@datasets@@U?$Normalize@VTensor@at@@@transforms@data@torch@@@datasets@data@torch@@U?$Stack@U?$Example@VTensor@at@@V12@@data@torch@@@transforms@34@@datasets@data@torch@@VRandomSampler@samplers@34@@data@torch@@@std@@@std@@@ptq@torch_tensorrt@@YA?AV?$Int8Calibrator@VIInt8EntropyCalibrator2@nvinfer1@@V?$unique_ptr@V?$StatelessDataLoader@V?$MapDataset@V?$MapDataset@VCIFAR10@datasets@@U?$Normalize@VTensor@at@@@transforms@data@torch@@@datasets@data@torch@@U?$Stack@U?$Example@VTensor@at@@V12@@data@torch@@@transforms@34@@datasets@data@torch@@VRandomSampler@samplers@34@@data@torch@@U?$default_delete@V?$StatelessDataLoader@V?$MapDataset@V?$MapDataset@VCIFAR10@datasets@@U?$Normalize@VTensor@at@@@transforms@data@torch@@@datasets@data@torch@@U?$Stack@U?$Example@VTensor@at@@V12@@data@torch@@@transforms@34@@datasets@data@torch@@VRandomSampler@samplers@34@@data@torch@@@std@@@std@@@01@V?$unique_ptr@V?$StatelessDataLoader@V?$MapDataset@V?$MapDataset@V
CIFAR10@datasets@@U?$Normalize@VTensor@at@@@transforms@data@torch@@@datasets@data@torch@@U?$Stack@U?$Example@VTensor@at@@V12@@data@torch@@@transforms@34@@

|

https://github.com/pytorch/TensorRT/issues/1091

|

closed

|

[

"question",

"component: quantization",

"channel: windows"

] | 2022-05-26T01:19:17Z

| 2022-09-02T17:45:50Z

| null |

jonahclarsen

|

pytorch/torchx

| 503

|

add `torchx list` command and `Runner.list` APIs

|

## Description

<!-- concise description of the feature/enhancement -->

Add a `torchx list` and `Runner/Scheduler.list` methods. This would allow listing all jobs the user has launched and see their status when tracking multiple different jobs.

## Motivation/Background

<!-- why is this feature/enhancement important? provide background context -->

Currently users have to use the scheduler specific tools like `sacct/vcctl/ray job list` to see all of their jobs. Adding this would allow users to just interact via the torchx interface and not have to worry about interacting with other tools.

## Detailed Proposal

<!-- provide a detailed proposal -->

We'd likely want something similar to https://docker-py.readthedocs.io/en/stable/containers.html#docker.models.containers.ContainerCollection.list

Filters may be hard to support across all schedulers so we probably want to limit it to just a few common ones or none at all initially. We also want to filter so we only return torchx jobs instead of all jobs on the scheduler.

Limiting it to jobs that the user owns would also be nice to have though may not be feasible for all schedulers.

## Alternatives

<!-- discuss the alternatives considered and their pros/cons -->

## Additional context/links

<!-- link to code, documentation, etc. -->

* https://docker-py.readthedocs.io/en/stable/containers.html#docker.models.containers.ContainerCollection.list

* https://slurm.schedmd.com/sacct.html

* https://docs.aws.amazon.com/batch/latest/APIReference/API_ListJobs.html

* https://github.com/kubernetes-client/python/blob/master/kubernetes/docs/CustomObjectsApi.md#list_namespaced_custom_object

* https://docs.ray.io/en/master/cluster/jobs-package-ref.html#jobsubmissionclient

|

https://github.com/meta-pytorch/torchx/issues/503

|

closed

|

[

"enhancement",

"module: runner",

"cli"

] | 2022-05-25T21:02:11Z

| 2022-09-21T21:52:31Z

| 10

|

d4l3k

|

pytorch/TensorRT

| 1,089

|

I wonder if torch_tensorrt support mixed precisions for different layer

|

**Is your feature request related to a problem? Please describe.**

I wrote a converter and a plugin, but the plugin only supports fp32. If I convert with `enabled_precisions: torch.int8`, an error occurs.

**Describe the solution you'd like**

If different layers could use different precisions, I could use fp32 for this plugin layer and int8 for the other layers.

|

https://github.com/pytorch/TensorRT/issues/1089

|

closed

|

[

"question"

] | 2022-05-25T10:07:21Z

| 2022-05-30T06:05:07Z

| null |

pupumao

|

huggingface/dataset-viewer

| 309

|

Scale the worker pods depending on prometheus metrics?

|

We could scale the number of worker pods depending on:

- the size of the job queue

- the available resources

These data are available in prometheus, and we could use them to autoscale the pods.

|

https://github.com/huggingface/dataset-viewer/issues/309

|

closed

|

[

"question"

] | 2022-05-25T09:56:05Z

| 2022-09-19T09:30:49Z

| null |

severo

|

huggingface/dataset-viewer

| 307

|

Add a /metrics endpoint on every worker?

|

https://github.com/huggingface/dataset-viewer/issues/307

|

closed

|

[

"question"

] | 2022-05-25T09:52:28Z

| 2022-09-16T17:40:55Z

| null |

severo

|

|

pytorch/data

| 454

|

Make `IterToMap` loading more lazily

|

### 🚀 The feature

Currently, `IterToMap` starts to load all data from prior `IterDataPipe` when the first `__getitem__` is invoked here.

https://github.com/pytorch/data/blob/13b574c80e8732744fee6ab9cb7e35b5afc34a3c/torchdata/datapipes/iter/util/converter.py#L78

We can stop loading data from prior `IterDataPipe` whenever we find the requested index. And, we might need to add a flag to prevent loading data multiple times.
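A minimal sketch of the idea, assuming the source yields `(key, value)` pairs. `LazyIterToMap` is a hypothetical class written for illustration, not the `torchdata` implementation: it pulls items only until the requested key appears and uses a flag so the source is never read twice.

```python
class LazyIterToMap:
    """Map-style view over an iterable of (key, value) pairs.

    Items are pulled from the source only until the requested key shows up.
    """

    def __init__(self, source):
        self._iterator = iter(source)
        self._cache = {}
        self._exhausted = False  # flag so the source is never re-read

    def __getitem__(self, key):
        while key not in self._cache and not self._exhausted:
            try:
                k, v = next(self._iterator)
            except StopIteration:
                self._exhausted = True
            else:
                self._cache[k] = v
        return self._cache[key]  # raises KeyError if the key never appears


consumed = []


def source():
    for i in range(5):
        consumed.append(i)  # record how far the source has been read
        yield i, i * i


lazy_map = LazyIterToMap(source())
print(lazy_map[1], consumed)  # 1 [0, 1]
```

One design consequence: `__len__` cannot be answered without reading the whole source, so a lazy variant would either load everything on the first `len()` call or leave the length undefined.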

### Motivation, pitch

This would improve the performance if users simply iterate over the `MapDataPipe` as we don't need to pre-load everything at the beginning of the iteration, basically, simulating the behavior of `IterDataPipe`.

### Alternatives

_No response_

### Additional context

_No response_

|

https://github.com/meta-pytorch/data/issues/454

|

open

|

[

"help wanted"

] | 2022-05-24T14:14:30Z

| 2022-06-02T08:24:35Z

| 7

|

ejguan

|

pytorch/data

| 453

|

Fix installation document for nightly and official release

|

### 📚 The doc issue

In https://github.com/pytorch/data#local-pip-or-conda, we say that the commands install nightly pytorch and torchdata, but they actually install the official release.

We should change this part and add another section for nightly installation

### Suggest a potential alternative/fix

_No response_

|

https://github.com/meta-pytorch/data/issues/453

|

closed

|

[

"documentation"

] | 2022-05-24T14:07:13Z

| 2022-05-24T17:33:20Z

| 0

|

ejguan

|

pytorch/torchx

| 498

|

Document .torchxconfig behavior in home directory

|

## 📚 Documentation

## Link

<!-- link to the problematic documentation -->

https://pytorch.org/torchx/main/runner.config.html

Context: https://fb.workplace.com/groups/140700188041197/posts/326515519459662/?comment_id=328106399300574&reply_comment_id=328113552633192

## What does it currently say?

<!-- copy paste the section that is wrong -->

```

The CLI only picks up .torchxconfig files from the current-working-directory (CWD) so chose a directory where you typically run torchx from.

```

## What should it say?

<!-- the proposed new documentation -->

It should explain how it can also be read from home and how the options are merged together.

## Why?

<!-- (if not clear from the proposal) why is the new proposed documentation more correct/improvement over the existing one? -->

Behavior is unclear to users.

|

https://github.com/meta-pytorch/torchx/issues/498

|

open

|

[

"documentation"

] | 2022-05-23T18:39:05Z

| 2022-06-16T00:04:19Z

| 2

|

d4l3k

|

pytorch/serve

| 1,647

|

How to return n images instead of 1?

|

Hi,

I am trying to deploy a DALL-E type model, in which you get as input a text and you receive as output a couple of images.

```

outputs = []

for i, image in enumerate(images):
    byte_output = io.BytesIO()
    # `image` is the loop variable; the original post wrote `output` here
    image.convert('RGB').save(byte_output, format='JPEG')
    bin_img_data = byte_output.getvalue()
    outputs.append(bin_img_data)

return [outputs]

```

This does not work and results in a failure, with the logs from torchserve saying 'object of type bytearray is not json serializable'

However, changing `return [outputs]` into `return [outputs[0]]` makes it work. What can I do regarding this?
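One workaround, sketched under the assumption that the handler must return one JSON-serializable entry per request: base64-encode each image and return them together as a list of strings. `encode_images` is a hypothetical helper, and the byte strings below are placeholders rather than real JPEG data. The client then decodes each string back to bytes.

```python
import base64
import json


def encode_images(image_bytes_list):
    """Turn raw image bytes into base64 text so the list is JSON-serializable."""
    return [base64.b64encode(data).decode("ascii") for data in image_bytes_list]


# Placeholder payloads standing in for the JPEG bytes built in the loop above.
outputs = [b"\xff\xd8first", b"\xff\xd8second"]

response = [encode_images(outputs)]  # one entry for the single request
payload = json.dumps(response)

# Client side: reverse the encoding.
decoded = [base64.b64decode(item) for item in json.loads(payload)[0]]
print(decoded == outputs)  # True
```

Base64 inflates the payload by about a third; returning a single archive or a multipart response would be alternatives if size matters.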

|

https://github.com/pytorch/serve/issues/1647

|

closed

|

[] | 2022-05-23T15:13:07Z

| 2022-05-23T17:21:30Z

| null |

mhashas

|

pytorch/data

| 436

|

Is our handling of open files safe?

|

Our current strategy is to wrap all file handles in a [`StreamWrapper`](https://github.com/pytorch/pytorch/blob/88fca3be5924dd089235c72e651f3709e18f76b8/torch/utils/data/datapipes/utils/common.py#L154). It dispatches all calls to wrapped object and adds a `__del__` method:

```py

class StreamWrapper:
    def __init__(self, file_obj):
        self.file_obj = file_obj

    def __del__(self):
        try:
            self.file_obj.close()
        except Exception:
            pass

```

`__del__` is called as soon as there are no more references to the instance. The rationale is that at this point we can close the wrapped file object. Since the `StreamWrapper` holds a reference to the file object, GC should never try to delete the file object before `__del__` of the `StreamWrapper` is called. Thus, we should never delete an open file object.
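To make the intended behavior concrete, here is a self-contained sketch that adds the call dispatching mentioned above (via `__getattr__`, which is an assumption about how it is done) and checks that dropping the last reference closes the wrapped object. It relies on CPython's reference counting and illustrates the happy path only.

```python
import io


class StreamWrapper:
    def __init__(self, file_obj):
        self.file_obj = file_obj

    def __getattr__(self, name):
        # Only called for attributes not found on the wrapper itself,
        # so read(), seek(), etc. are forwarded to the wrapped object.
        return getattr(self.file_obj, name)

    def __del__(self):
        try:
            self.file_obj.close()
        except Exception:
            pass


raw = io.BytesIO(b"payload")
wrapped = StreamWrapper(raw)
first = wrapped.read(3)   # forwarded to raw.read
del wrapped               # last reference gone, so __del__ runs (CPython)
print(first, raw.closed)  # b'pay' True
```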

Unfortunately, the reasoning above seems not to be correct. In some cases, it seems GC will delete the file object before the `StreamWrapper` is deleted. This emits a warning, which the `torchvision` test suite turns into an error. This was discussed at length in pytorch/vision#5801, which includes the minimum requirements to reproduce the issue. Still, no minimal reproduction outside of the test environment was found. The issue was presumably fixed in pytorch/pytorch#76345, but popped up again in https://github.com/pytorch/data/runs/6500848588#step:9:1977.

Thus, I think it is a valid question to ask whether our approach is safe at all. It would be quite a bad UX if a user gets a lot of unclosed-file warnings although they used `torchdata`, or by extension `torchvision.datasets`, as documented.

|

https://github.com/meta-pytorch/data/issues/436

|

closed

|

[] | 2022-05-23T10:37:11Z

| 2023-01-05T15:05:51Z

| 3

|

pmeier

|

huggingface/sentence-transformers

| 1,562

|

Why is "max_position_embeddings" 514 in sbert where as 512 in bert

|

Why is "max_position_embeddings" different in SBERT than in BERT?

|

https://github.com/huggingface/sentence-transformers/issues/1562

|

open

|

[] | 2022-05-22T17:27:01Z

| 2022-05-22T20:52:40Z

| null |

omerarshad

|

pytorch/TensorRT

| 1,076

|

❓ [Question] What am I missing to install TensorRT v1.1.0 in a Jetson with JetPack 4.6

|

## ❓ Question

I am getting some errors trying to install TensorRT v1.1.0 in a Jetson with JetPack 4.6 for using with Python3

## What you have already tried

I followed the official installation of PyTorch v1.10.0 using the binaries from the [official Nvidia forum](https://forums.developer.nvidia.com/t/pytorch-for-jetson-version-1-10-now-available/72048). Then, I followed the official steps of this repository, which are:

1. Install Bazel - successfully

2. Build Natively on aarch64 (Jetson) - Here I am getting the problem

## Environment

- PyTorch Version :1.10.0

- OS (e.g., Linux): Ubuntu 18.04

- How you installed PyTorch: Using pip3 according to [Nvidia Forum](https://forums.developer.nvidia.com/t/pytorch-for-jetson-version-1-11-now-available/72048)

- Python version: 3.6

- CUDA version: 10.2

- TensorRT version: 8.2.1.8

- CUDNN version: 8.2.0.1

- GPU models and configuration: Jetson NX with JetPack 4.6

- Any other relevant information: Installation is clean. I am using the CUDA and TensorRT that come flashed with JetPack.

## Additional context

Starting from a clean installation of JetPack and Torch 1.10.0 installed by using official binaries, I describe the installation steps I did for using this repository with the errors I am getting.

### 1- Install Bazel

```

git clone -b v1.1.0 https://github.com/pytorch/TensorRT.git

sudo apt-get install openjdk-11-jdk

export BAZEL_VERSION=$(cat /home/tkh/TensorRT.bazelversion)

mkdir bazel

cd bazel

curl -fSsL -O https://github.com/bazelbuild/bazel/releases/download/$BAZEL_VERSION/bazel-$BAZEL_VERSION-dist.zip

unzip bazel-$BAZEL_VERSION-dist.zip

bash ./compile.sh

cp output/bazel /usr/local/bin/

```

At this point I can see `bazel 5.1.1- (@non-git)` with `bazel --version`.

### 2- Build Natively on aarch64 (Jetson)

Then, I modified my WORKSPACE file of this repository in this way

```

workspace(name = "Torch-TensorRT")

load("@bazel_tools//tools/build_defs/repo:http.bzl", "http_archive")

load("@bazel_tools//tools/build_defs/repo:git.bzl", "git_repository")

http_archive(

name = "rules_python",

sha256 = "778197e26c5fbeb07ac2a2c5ae405b30f6cb7ad1f5510ea6fdac03bded96cc6f",

url = "https://github.com/bazelbuild/rules_python/releases/download/0.2.0/rules_python-0.2.0.tar.gz",

)

load("@rules_python//python:pip.bzl", "pip_install")

http_archive(

name = "rules_pkg",

sha256 = "038f1caa773a7e35b3663865ffb003169c6a71dc995e39bf4815792f385d837d",

urls = [

"https://mirror.bazel.build/github.com/bazelbuild/rules_pkg/releases/download/0.4.0/rules_pkg-0.4.0.tar.gz",

"https://github.com/bazelbuild/rules_pkg/releases/download/0.4.0/rules_pkg-0.4.0.tar.gz",

],

)

load("@rules_pkg//:deps.bzl", "rules_pkg_dependencies")

rules_pkg_dependencies()

git_repository(

name = "googletest",

commit = "703bd9caab50b139428cea1aaff9974ebee5742e",

remote = "https://github.com/google/googletest",

shallow_since = "1570114335 -0400",

)

# External dependency for torch_tensorrt if you already have precompiled binaries.

local_repository(

name = "torch_tensorrt",

path = "/opt/conda/lib/python3.8/site-packages/torch_tensorrt"

)

# CUDA should be installed on the system locally

new_local_repository(

name = "cuda",

build_file = "@//third_party/cuda:BUILD",

path = "/usr/local/cuda-10.2/",

)

new_local_repository(

name = "cublas",

build_file = "@//third_party/cublas:BUILD",

path = "/usr",

)

#############################################################################################################

# Tarballs and fetched dependencies (default - use in cases when building from precompiled bin and tarballs)

#############################################################################################################

####################################################################################

# Locally installed dependencies (use in cases of custom dependencies or aarch64)

####################################################################################

# NOTE: In the case you are using just the pre-cxx11-abi path or just the cxx11 abi path

# with your local libtorch, just point deps at the same path to satisfy bazel.

# NOTE: NVIDIA's aarch64 PyTorch (python) wheel file uses the CXX11 ABI unlike PyTorch's standard

# x86_64 python distribution. If using NVIDIA's version just point to the root of the package

# for both versions here and do not use --config=pre-cxx11-abi

new_local_repository(

name = "libtorch",

path = "/home/tkh-ad/.local/lib/python3.6/site-packages/torch",

build_file = "third_party/libtorch/BUILD"

)

new_local_repository(

name = "libtorch_pre_cxx11_abi",

path = "/home/tkh-ad/.local/lib/python3.6/site-packages/torch",

build_file = "third_party/libtorch/BUILD"

)

new_local_repository(

name = "cudnn",

path = "/usr/local/cud

|

https://github.com/pytorch/TensorRT/issues/1076

|

closed

|

[

"question",

"channel: linux-jetpack"

] | 2022-05-20T13:56:30Z

| 2022-05-20T22:35:42Z

| null |

mjack3

|

pytorch/data

| 433

|

HashChecker example is broken

|

https://github.com/pytorch/data/blob/6a8415b1ced33e5653f7a38c93f767ac8e1c7e79/torchdata/datapipes/iter/util/hashchecker.py#L36-L48

Running this will raise a `StopIteration`. The reason is simple: we want to read from a stream that was already exhausted by the hash checking. The docstring tells us that much

https://github.com/pytorch/data/blob/6a8415b1ced33e5653f7a38c93f767ac8e1c7e79/torchdata/datapipes/iter/util/hashchecker.py#L32-L33

and we correctly set `rewind=False`.
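The failure mode can be reproduced without `torchdata`. In this sketch `check_hash` is a hypothetical stand-in for the hash check, with a `rewind` flag modeled on the one named above: hashing consumes the stream, so a later read returns nothing unless the stream is rewound.

```python
import hashlib
import io


def check_hash(stream, expected_sha256, rewind=True):
    """Hash the whole stream; optionally seek back to the start afterwards."""
    digest = hashlib.sha256(stream.read()).hexdigest()
    if digest != expected_sha256:
        raise ValueError("hash mismatch")
    if rewind:
        stream.seek(0)
    return stream


data = b"hello"
expected = hashlib.sha256(data).hexdigest()

exhausted = check_hash(io.BytesIO(data), expected, rewind=False).read()
rewound = check_hash(io.BytesIO(data), expected, rewind=True).read()
print(exhausted, rewound)  # b'' b'hello'
```

Rewinding only works for seekable streams, which is presumably why the flag exists at all; a non-seekable stream would have to be buffered or reopened instead.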

|

https://github.com/meta-pytorch/data/issues/433

|

closed

|

[

"documentation",

"good first issue"

] | 2022-05-20T11:44:59Z

| 2022-05-23T22:29:38Z

| 1

|

pmeier

|

pytorch/functorch

| 823

|

Dynamic shape error in vmap with jacrev of jacrev

|

I'd like to compute the following expression in a vectorized way: first take the derivative with respect to the data, and then take the derivative of this expression with respect to the parameters. I tried implementing it like this:

```

func, params, buffers = make_functional_with_buffers(network)

vmap(jacrev(jacrev(func, 2), 0), (None, None, 0))(params, buffers, data)

```

but this isn't working since I get this error message:

> RuntimeError: vmap: We do not support batching operators that can support dynamic shape. Attempting to batch over indexing with a boolean mask.

I'm a bit surprised since I expected a second application of `jacrev` shouldn't change how `vmap` interacts with the function, but I guess that was incorrect.

**Edit**:

I also tried replacing this expression above using the `hessian` operation (and just ignoring the small computational overhead of computing the double derivatives I'm not interested in)

```

vmap(hessian(func, (0, 2)), (None, None, 0))(params, buffers, data)

```

but that code resulted in the same error.

Can you please point me to information about how to solve this problem?

|

https://github.com/pytorch/functorch/issues/823

|

closed

|

[] | 2022-05-20T10:41:39Z

| 2022-05-25T12:12:20Z

| 5

|

zimmerrol

|

pytorch/data

| 432

|

The developer install instruction are outdated

|

https://github.com/pytorch/data/blob/6a8415b1ced33e5653f7a38c93f767ac8e1c7e79/CONTRIBUTING.md?plain=1#L49-L56

While debugging #418 it took me quite a while to figure out that I need to set

https://github.com/pytorch/data/blob/6a8415b1ced33e5653f7a38c93f767ac8e1c7e79/tools/setup_helpers/extension.py#L41

for the C++ code to be built.

|

https://github.com/meta-pytorch/data/issues/432

|

closed

|

[

"documentation"

] | 2022-05-20T08:35:01Z

| 2022-06-10T20:04:08Z

| 3

|

pmeier

|

huggingface/datasets

| 4,374

|

extremely slow processing when using a custom dataset

|

## processing a custom dataset loaded as .txt file is extremely slow, compared to a dataset of similar volume from the hub

I have a large 22 GB .txt file which I load into an HF dataset:

`lang_dataset = datasets.load_dataset("text", data_files="hi.txt")`

I then use a pre-processing function to clean the dataset:

`lang_dataset["train"] = lang_dataset["train"].map(remove_non_indic_sentences, num_proc=12, batched=True, remove_columns=lang_dataset['train'].column_names, batch_size=64)`

This processing takes an astronomical amount of time while hogging all the RAM.

A similar dataset of the same size that's available on the Hugging Face Hub works completely fine, running the same processing function on the same amount of data:

`lang_dataset = datasets.load_dataset("oscar-corpus/OSCAR-2109", "hi", use_auth_token=True)`

the hours predicted to preprocess are as follows:

huggingface hub dataset: 6.5 hrs

custom loaded dataset: 7000 hrs

Note: the two datasets are almost the same, just provided by different sources with a few samples more or fewer; one is hosted on the HF Hub and the other is downloaded in text format.

## Steps to reproduce the bug

```

import datasets

import psutil

import sys

import glob

from fastcore.utils import listify

import re

import gc

def remove_non_indic_sentences(example):

tmp_ls = []

eng_regex = r'[. a-zA-Z0-9ÖÄÅöäå _.,!"\'\/$]*'

for e in listify(example['text']):

matches = re.findall(eng_regex, e)

for match in (str(match).strip() for match in matches if match not in [""," ", " ", ",", " ,", ", ", " , "]):

if len(list(match.split(" "))) > 2:

e = re.sub(match," ",e,count=1)

tmp_ls.append(e)

gc.collect()

example['clean_text'] = tmp_ls

return example

lang_dataset = datasets.load_dataset("text", data_files="hi.txt")

lang_dataset["train"] = lang_dataset["train"].map(
    remove_non_indic_sentences, num_proc=12, batched=True, remove_columns=lang_dataset['train'].column_names, batch_size=64)

## same thing work much faster when loading similar dataset from hub

lang_dataset = datasets.load_dataset("oscar-corpus/OSCAR-2109", "hi", split="train", use_auth_token=True)

lang_dataset["train"] = lang_dataset["train"].map(
    remove_non_indic_sentences, num_proc=12, batched=True, remove_columns=lang_dataset['train'].column_names, batch_size=64)

```

## Actual results

A similar dataset of the same size that is available on the Hugging Face Hub works completely fine; it runs the same processing function on the same amount of data.

`lang_dataset = datasets.load_dataset("oscar-corpus/OSCAR-2109", "hi", use_auth_token=True)`

**the hours predicted to preprocess are as follows:**

huggingface hub dataset: 6.5 hrs

custom loaded dataset: 7000 hrs

**I even tried the following:**

- sharding the large 22 GB text file into smaller files and loading those

- saving the file to disk and then loading it

- using a smaller `num_proc`

- using a smaller batch size

- processing without batches, i.e. without `batched=True`
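One thing that might be worth ruling out is the per-example cost of the mapping function itself. The sketch below is a hypothetical variant of `remove_non_indic_sentences`, not a confirmed fix: it assumes the same regex, compiles it once, replaces the matched text as a literal string instead of passing it back to `re.sub` as a pattern, and drops the `gc.collect()` call. The names `ENG_REGEX`, `SKIP` and `remove_non_indic_sentences_batch` are made up for illustration.

```python
import re

# Same character class as in the report, compiled once at import time.
ENG_REGEX = re.compile(r'[. a-zA-Z0-9ÖÄÅöäå _.,!"\'\/$]*')

# Matches that are ignored outright (empty strings, lone spaces and commas).
SKIP = {"", " ", ",", " ,", ", ", " , "}


def remove_non_indic_sentences_batch(batch):
    """Batched variant: takes {"text": [...]} and returns {"clean_text": [...]}."""
    cleaned = []
    for text in batch["text"]:
        for raw in ENG_REGEX.findall(text):
            if raw in SKIP:
                continue
            match = raw.strip()
            # Only drop runs of more than two space-separated tokens.
            if len(match.split(" ")) > 2:
                # Literal replacement: the match is plain text, not a pattern.
                text = text.replace(match, " ", 1)
        cleaned.append(text)
    return {"clean_text": cleaned}
```

With `batched=True`, a function of this shape can be passed to `map` in place of the original. Note that it is not strictly equivalent to the original, since characters such as `.` and `$` in a match are no longer interpreted as regex metacharacters. Whether it changes the timing on the text-file dataset is untested here.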

## Environment info


- `datasets` version: 2.2.2.dev0

- Platform: Ubuntu 20.04 LTS

- Python version: 3.9.7

- PyArrow version: 8.0.0

|

https://github.com/huggingface/datasets/issues/4374

|

closed

|

[

"bug",

"question"

] | 2022-05-19T14:18:05Z

| 2023-07-25T15:07:17Z

| null |

StephennFernandes

|

huggingface/optimum

| 198

|

Possibility to load an ORTQuantizer or ORTOptimizer from ONNX

|

First, thanks a lot for this library, it makes work so much easier.

I was wondering if it's possible to quantize and then optimize a model (or the reverse). Looking at the docs, it seems possible only when starting from a vanilla Hugging Face model.

Is it possible to do so with already exported ONNX models?

Like : MyFineTunedModel ---optimize----> MyFineTunedOnnxOptimizedModel -----quantize-----> MyFinalReallyLightModel

```python
# Note that self.model_dir is my local folder with my custom fine-tuned Hugging Face model
onnx_path = self.model_dir.joinpath("model.onnx")
onnx_quantized_path = self.model_dir.joinpath("quantized_model.onnx")
onnx_chad_path = self.model_dir.joinpath("chad_model.onnx")

# Remove any files left over from a previous run
onnx_path.unlink(missing_ok=True)
onnx_quantized_path.unlink(missing_ok=True)
onnx_chad_path.unlink(missing_ok=True)

quantizer = ORTQuantizer.from_pretrained(self.model_dir, feature="token-classification")
quantized_path = quantizer.export(
    onnx_model_path=onnx_path, onnx_quantized_model_output_path=onnx_quantized_path,
    quantization_config=AutoQuantizationConfig.arm64(is_static=False, per_channel=False),
)
quantizer.model.save_pretrained(quantized_path.parent)  # To have the model config.json
quantized_path.parent.joinpath("pytorch_model.bin").unlink()  # To ensure that we're not loading the vanilla pytorch model

# Load an Optimizer from an onnx path...
# optimizer = ORTOptimizer.from_pretrained(quantized_path.parent, feature="token-classification") <-- this fails
# optimizer.export(
#     onnx_model_path=onnx_path,
#     onnx_optimized_model_output_path=onnx_chad_path,
#     optimization_config=OptimizationConfig(optimization_level=99),
# )

model = ORTModelForTokenClassification.from_pretrained(quantized_path.parent, file_name="quantized_model.onnx")
# Ideally would load onnx_chad_path (with chad_model.onnx) if the commented section works.

tokenizer: PreTrainedTokenizer = AutoTokenizer.from_pretrained(self.model_dir)
self.pipeline = cast(TokenClassificationPipeline, pipeline(
    model=model, tokenizer=tokenizer,
    task="token-classification", accelerator="ort",
    aggregation_strategy=AggregationStrategy.SIMPLE,
    device=device_number(self.device),
))
```

Note that optimization alone works perfectly fine, and so does quantization, but I was hoping that combining both would be feasible, unless optimization already does some kind of quantization or otherwise produces a lighter model?

Thanks in advance.

Have a great day

|

https://github.com/huggingface/optimum/issues/198

|

closed

|

[] | 2022-05-18T20:19:23Z

| 2022-06-30T08:33:58Z

| 1

|

ierezell

|

pytorch/pytorch

| 77,732

|

multiprocessing: how to put a model copied from the main process into a shared queue

|

### 🐛 Describe the bug

1. If I share a model that lives on CUDA, it raises

```RuntimeError: Attempted to send CUDA tensor received from another process; this is not currently supported. Consider cloning before sending.```

Specifically, I receive a model from the main process and send back a duplicate created with ```copy.deepcopy(model)```

2. ```torch.multiprocessing.manager.queue.get``` takes a long time to finish. If the queue only passes a file descriptor, I don't think it should take 1/3 of the total time. Is there a faster way?

Here's my script.

I also opened a [thread](https://discuss.pytorch.org/t/how-sharing-memory-actually-worked-in-pytorch/151706) on the PyTorch forum.

I think this is related to #10375 #9996 and #7204

```python
import torch
import torch.multiprocessing as mp
from copy import deepcopy
from functools import partial
from time import *
from torchvision import models
import numpy as np
from tqdm import tqdm

def parallel_produce(
    queue: mp.Queue,
    model_method,
    i
) -> None:
    pure_model: torch.nn.Module = model_method()
    # if you delete this line, model can be passed
    pure_model.to('cuda')
    pure_model.share_memory()
    while True:
        corrupt_model = deepcopy(pure_model)
        dic = corrupt_model.state_dict()
        dic[list(dic.keys())[0]]*=2
        corrupt_model.share_memory()
        queue.put(corrupt_model)

def parallel(
    valid,
    iteration: int = 1000,
    process_size: int=2,
    buffer_size: int=2
):
    pool = mp.Pool(process_size)
    manager = mp.Manager()
    queue = manager.Queue(buffer_size)
    SeedSequence = np.random.SeedSequence()
    model_method = partial(models.squeezenet1_1,True)
    async_result = pool.map_async(
        partial(
            parallel_produce,
            queue,
            model_method,
        ),
        SeedSequence.spawn(process_size),
    )
    time = 0
    for iter_times in tqdm(range(iteration)):
        start = monotonic_ns()
        # this takes a long time
        corrupt_model: torch.nn.Module = queue.get()
        time += monotonic_ns() - start
        corrupt_model.to("cuda")
        corrupt_result = corrupt_model(valid)
        del corrupt_model
    pool.terminate()
    print(time / 1e9)

if __name__ == "__main__":
    valid = torch.randn(1,3,224,224).to('cuda')
    parallel(valid)
```

#total time of queue.get taken

### Versions

Collecting environment information...

PyTorch version: 1.10.0+cu113

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: Microsoft Windows 11 Home

GCC version: Could not collect

Clang version: Could not collect

CMake version: Could not collect

Libc version: N/A