repo

stringclasses 147

values | number

int64 1

172k

| title

stringlengths 2

476

| body

stringlengths 0

5k

| url

stringlengths 39

70

| state

stringclasses 2

values | labels

listlengths 0

9

| created_at

timestamp[ns, tz=UTC]date 2017-01-18 18:50:08

2026-01-06 07:33:18

| updated_at

timestamp[ns, tz=UTC]date 2017-01-18 19:20:07

2026-01-06 08:03:39

| comments

int64 0

58

⌀ | user

stringlengths 2

28

|

|---|---|---|---|---|---|---|---|---|---|---|

pytorch/TensorRT

| 1,351

|

❓ [Question] Not enough inputs provided (runtime.RunCudaEngine)

|

## ❓ Question

<!-- Your question -->

i make a pressure test on my model compiled by torch-tensorrt, it will report errors after 5 minutes, the traceback as blow:

```shell

2022-09-09T09:16:01.618971735Z File "/component/text_detector.py", line 135, in __call__

2022-09-09T09:16:01.618975181Z outputs = self.net(inp)

2022-09-09T09:16:01.618978313Z File "/miniconda/envs/python36/lib/python3.6/site-packages/torch/nn/modules/module.py", line 1102, in _call_impl

2022-09-09T09:16:01.618981965Z return forward_call(*input, **kwargs)

2022-09-09T09:16:01.618985142Z RuntimeError: The following operation failed in the TorchScript interpreter.

2022-09-09T09:16:01.618988457Z Traceback of TorchScript, serialized code (most recent call last):

2022-09-09T09:16:01.618991980Z File "code/__torch__.py", line 8, in forward

2022-09-09T09:16:01.618995305Z input_0: Tensor) -> Tensor:

2022-09-09T09:16:01.618998495Z __torch___ModelWrapper_trt_engine_ = self_1.__torch___ModelWrapper_trt_engine_

2022-09-09T09:16:01.619001820Z _0 = ops.tensorrt.execute_engine([input_0], __torch___ModelWrapper_trt_engine_)

2022-09-09T09:16:01.619005168Z ~~~~~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE

2022-09-09T09:16:01.619008442Z _1, = _0

2022-09-09T09:16:01.619011485Z return _1

2022-09-09T09:16:01.619014563Z

2022-09-09T09:16:01.619017565Z Traceback of TorchScript, original code (most recent call last):

2022-09-09T09:16:01.619020865Z RuntimeError: [Error thrown at core/runtime/register_trt_op.cpp:101] Expected compiled_engine->exec_ctx->allInputDimensionsSpecified() to be true but got false

2022-09-09T09:16:01.619024625Z Not enough inputs provided (runtime.RunCudaEngine)

```

then i get an error about cuda memory illegal access:

```shell

2022-09-13T02:32:46.621963863Z File "/component/text_detector.py", line 136, in __call__

2022-09-13T02:32:46.621966267Z inp = inp.cuda()

2022-09-13T02:32:46.621968419Z RuntimeError: CUDA error: an illegal memory access was encountered

```

## What you have already tried

<!-- A clear and concise description of what you have already done. -->

I have tried upgrade the pytorch version from 1.10.0 to 1.10.2, also tried upgrade torch to 1.11.0 python 3.7, but it didn't works.

## Environment

> Build information about Torch-TensorRT can be found by turning on debug messages

- PyTorch Version (e.g., 1.0): 1.10.2

- CPU Architecture: x86

- OS (e.g., Linux): centos 7

- How you installed PyTorch (`conda`, `pip`, `libtorch`, source): pip

- Build command you used (if compiling from source): /

- Are you using local sources or building from archives: no

- Python version: 3.6

- CUDA version: 11.3

- GPU models and configuration: gpu is nvidia-T4 with 16G memory

- Any other relevant information:

## Additional context

<!-- Add any other context about the problem here. -->

|

https://github.com/pytorch/TensorRT/issues/1351

|

closed

|

[

"question",

"No Activity",

"component: runtime"

] | 2022-09-13T02:39:11Z

| 2023-03-26T00:02:17Z

| null |

Pekary

|

pytorch/TensorRT

| 1,340

|

❓ [Question] No improvement when I use sparse-weights?

|

## ❓ Question

<!-- Your question -->

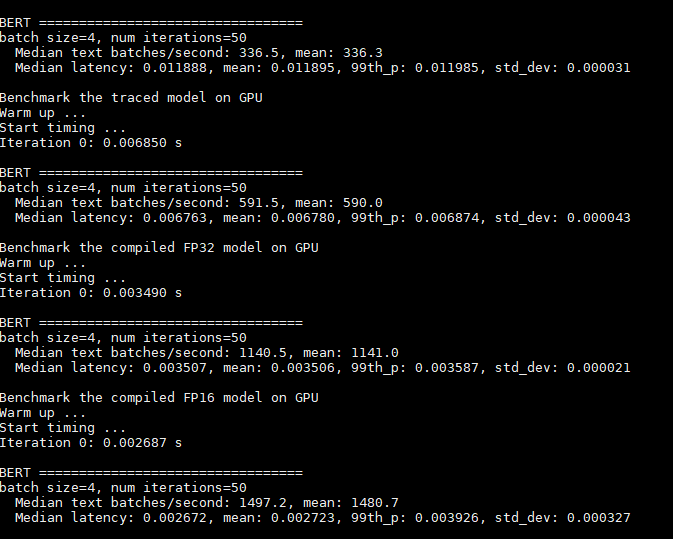

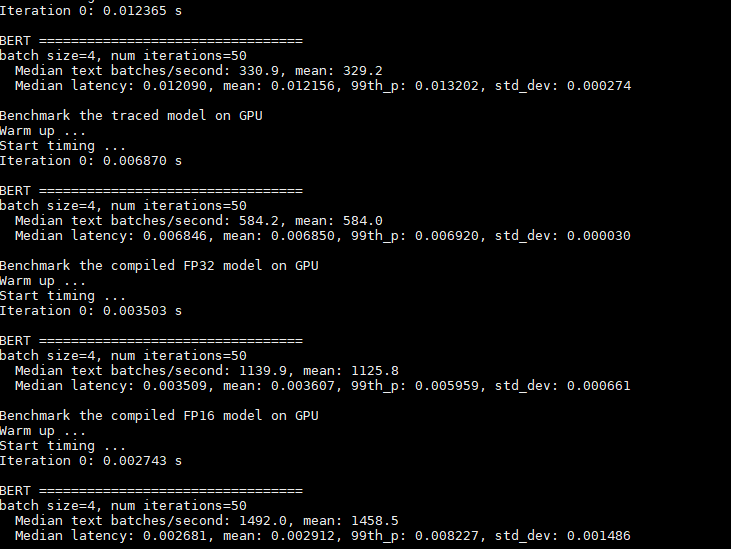

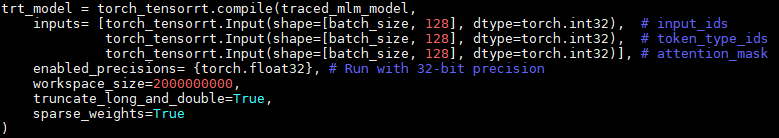

**No speed improvement when I use sparse-weights.**

I just modified this notebook https://github.com/pytorch/TensorRT/blob/master/notebooks/Hugging-Face-BERT.ipynb

And add the sparse_weights=True in the compile part. I also changed the regional bert-base model when I apply 2:4 sparse on most parts of the FC layers.

But whether I set the "sparse_weights=True", the results look like no changes.

Here are some results.

set sparse_weights=False

set sparse_weights=True

<!-- A clear and concise description of what you have already done. -->

## Environment

> Build information about Torch-TensorRT can be found by turning on debug messages

- PyTorch Version (e.g., 1.0): 1.13

- CPU Architecture:x86-64

- OS (e.g., Linux):Ubuntu 18.04

- How you installed PyTorch (`conda`, `pip`, `libtorch`, source):

- Build command you used (if compiling from source):

- Are you using local sources or building from archives:

- Python version: 3.8

- CUDA version: 11.7.1

- GPU models and configuration: Nvidia A100 GPU & CUDA Driver Version 515.65.01

- Any other relevant information:

## Additional context

<!-- Add any other context about the problem here. -->

|

https://github.com/pytorch/TensorRT/issues/1340

|

closed

|

[

"question",

"No Activity",

"performance"

] | 2022-09-09T02:26:48Z

| 2023-03-26T00:02:17Z

| null |

wzywzywzy

|

pytorch/vision

| 6,545

|

add quantized vision transformer model

|

### 🚀 The feature

hi, thanks for your great work. I hope to be able to add quantized vit model (for ptq or qat).

### Motivation, pitch

In 'torchvision/models/quantization', there are several quantized model (Eager Mode Quantization) that is very useful for me to learn quantization. In recent years, Transformer model is very popular. I want to learn how to quantized Transformer model, e.g Vision Transformer, Swin Transformer etc, using pytorch official tools like Eager Mode Quantization. I also tried to modify it myself, but failed. I don't know how to quantify 'pos_embedding' (nn.Parameter) and nn.MultiheadAttention module. look forward to your reply.

### Alternatives

_No response_

### Additional context

_No response_

|

https://github.com/pytorch/vision/issues/6545

|

open

|

[

"question",

"module: models.quantization"

] | 2022-09-08T09:34:33Z

| 2022-09-09T11:17:45Z

| null |

WZMIAOMIAO

|

huggingface/datasets

| 4,944

|

larger dataset, larger GPU memory in the training phase? Is that correct?

|

from datasets import set_caching_enabled

set_caching_enabled(False)

for ds_name in ["squad","newsqa","nqopen","narrativeqa"]:

train_ds = load_from_disk("../../../dall/downstream/processedproqa/{}-train.hf".format(ds_name))

break

train_ds = concatenate_datasets([train_ds,train_ds,train_ds,train_ds]) #operation 1

trainer = QuestionAnsweringTrainer( #huggingface trainer

model=model,

args=training_args,

train_dataset=train_ds,

eval_dataset= None,

eval_examples=None,

answer_column_name=answer_column,

dataset_name="squad",

tokenizer=tokenizer,

data_collator=data_collator,

compute_metrics=compute_metrics if training_args.predict_with_generate else None,

)

with operation 1, the GPU memory increases from 16G to 23G

|

https://github.com/huggingface/datasets/issues/4944

|

closed

|

[

"bug"

] | 2022-09-07T08:46:30Z

| 2022-09-07T12:34:58Z

| 2

|

debby1103

|

pytorch/vision

| 6,543

|

Inconsistent use of FrozenBatchNorm in Faster-RCNN?

|

Hi,

while customizing and training a Faster-RCNN object detection model based on `torchvision.models.detection.faster_rcnn`, I've noticed that the pre-trained model of type `fasterrcnn_resnet50_fpn_v2` always use `nn.BatchNorm2d` normalization layers, while `fasterrcnn_resnet50_fpn` uses `torchvision.models.ops.misc.FrozenBatchNorm2d` when pretrained weights are loaded. I've noticed deteriorating performance of the V2 model when training a COCO pretrained model with low batch size. I am suspecting that this is related to the un-frozen `nn.BatchNorm2d` layers, and indeed, replacing `nn.BatchNorm2d` with `torchvision.models.ops.misc.FrozenBatchNorm2d` improves the performance for my task.

Thus, my question is: Is this discrepancy in normalization layers intentional, and if yes what could be other reasons for V2 model underperforming compared to the V1 model?

I'm using pytorch 1.12, torchvision 0.13.

Thanks!

cc @datumbox

|

https://github.com/pytorch/vision/issues/6543

|

closed

|

[

"question",

"module: models",

"topic: object detection"

] | 2022-09-07T08:16:00Z

| 2024-06-23T16:24:37Z

| null |

MoPl90

|

huggingface/datasets

| 4,942

|

Trec Dataset has incorrect labels

|

## Describe the bug

Both coarse and fine labels seem to be out of line.

## Steps to reproduce the bug

```python

from datasets import load_dataset

dataset = "trec"

raw_datasets = load_dataset(dataset)

df = pd.DataFrame(raw_datasets["test"])

df.head()

```

## Expected results

text (string) | coarse_label (class label) | fine_label (class label)

-- | -- | --

How far is it from Denver to Aspen ? | 5 (NUM) | 40 (NUM:dist)

What county is Modesto , California in ? | 4 (LOC) | 32 (LOC:city)

Who was Galileo ? | 3 (HUM) | 31 (HUM:desc)

What is an atom ? | 2 (DESC) | 24 (DESC:def)

When did Hawaii become a state ? | 5 (NUM) | 39 (NUM:date)

## Actual results

index | label-coarse |label-fine | text

-- |-- | -- | --

0 | 4 | 40 | How far is it from Denver to Aspen ?

1 | 5 | 21 | What county is Modesto , California in ?

2 | 3 | 12 | Who was Galileo ?

3 | 0 | 7 | What is an atom ?

4 | 4 | 8 | When did Hawaii become a state ?

## Environment info

- `datasets` version: 2.4.0

- Platform: Linux-5.4.0-1086-azure-x86_64-with-glibc2.27

- Python version: 3.9.13

- PyArrow version: 8.0.0

- Pandas version: 1.4.3

|

https://github.com/huggingface/datasets/issues/4942

|

closed

|

[

"bug"

] | 2022-09-06T22:13:40Z

| 2022-09-08T11:12:03Z

| 1

|

wmpauli

|

pytorch/data

| 763

|

Online doc for DataLoader2/ReadingService and etc.

|

### 📚 The doc issue

As we are preparing the next release with `DataLoader2`, we might need to add a few pages for `DL2`, `ReadingService` and all other related functionalities in https://pytorch.org/data/main/

- [x] DataLoader2

- [x] ReadingService

- [x] Adapter

- [ ] Linter

- [x] Graph function

- [ ]

### Suggest a potential alternative/fix

_No response_

|

https://github.com/meta-pytorch/data/issues/763

|

open

|

[

"documentation"

] | 2022-09-06T15:37:49Z

| 2022-11-15T15:13:49Z

| 4

|

ejguan

|

pytorch/TensorRT

| 1,335

|

[Question? Bug?] Tried to allocate 166.38 GiB, seems weird

|

## ❓ Question

<!-- Your question -->

I got errors

```

model_new_trt = trt.compile(

File "/opt/conda/lib/python3.8/site-packages/torch_tensorrt/_compile.py", line 109, in compile

return torch_tensorrt.ts.compile(ts_mod, inputs=inputs, enabled_precisions=enabled_precisions, **kwargs)

File "/opt/conda/lib/python3.8/site-packages/torch_tensorrt/ts/_compiler.py", line 113, in compile

compiled_cpp_mod = _C.compile_graph(module._c, _parse_compile_spec(spec))

RuntimeError: The following operation failed in the TorchScript interpreter.

Traceback of TorchScript (most recent call last):

%1 : bool = prim::Constant[value=0]()

%2 : int[] = prim::Constant[value=[0, 0, 0]]()

%4 : Tensor = aten::_convolution(%x, %w, %b, %s, %p, %d, %1, %2, %g, %1, %1, %1, %1)

~~~~ <--- HERE

return (%4)

RuntimeError: CUDA out of memory. Tried to allocate 166.38 GiB (GPU 0; 31.75 GiB total capacity; 1.31 GiB already allocated; 29.14 GiB free; 1.53 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

```

Converting Script

```

model_new_trt = trt.compile(

model_new,

inputs=[trt.Input(

min_shape=[1, 1, 210, 748, 748],

opt_shape=[1, 1, 210, 748, 748],

max_shape=[1, 1, 210, 748, 748],

dtype=torch.float32

)],

)

```

My model takes 28GB on inference forward.

But the 166GB so huge, is this correct memory usage?

## Environment

> Build information about Torch-TensorRT can be found by turning on debug messages

- Docker : nvcr.io/nvidia/pytorch:22.07-py3

- TRT : 1.2.0a0

- GPU models and configuration: V100 32GB

## Additional context

<!-- Add any other context about the problem here. -->

|

https://github.com/pytorch/TensorRT/issues/1335

|

closed

|

[

"question",

"No Activity",

"component: partitioning"

] | 2022-09-06T15:16:41Z

| 2022-12-26T00:02:39Z

| null |

zsef123

|

huggingface/datasets

| 4,936

|

vivos (Vietnamese speech corpus) dataset not accessible

|

## Describe the bug

VIVOS data is not accessible anymore, neither of these links work (at least from France):

* https://ailab.hcmus.edu.vn/assets/vivos.tar.gz (data)

* https://ailab.hcmus.edu.vn/vivos (dataset page)

Therefore `load_dataset` doesn't work.

## Steps to reproduce the bug

```python

ds = load_dataset("vivos")

```

## Expected results

dataset loaded

## Actual results

```

ConnectionError: Couldn't reach https://ailab.hcmus.edu.vn/assets/vivos.tar.gz (ConnectionError(MaxRetryError("HTTPSConnectionPool(host='ailab.hcmus.edu.vn', port=443): Max retries exceeded with url: /assets/vivos.tar.gz (Caused by NewConnectionError('<urllib3.connection.HTTPSConnection object at 0x7f9d8a27d190>: Failed to establish a new connection: [Errno -5] No address associated with hostname'))")))

```

Will try to contact the authors, as we wanted to use Vivos as an example in documentation on how to create scripts for audio datasets (https://github.com/huggingface/datasets/pull/4872), because it's small and straightforward and uses tar archives.

|

https://github.com/huggingface/datasets/issues/4936

|

closed

|

[

"dataset bug"

] | 2022-09-06T13:17:55Z

| 2022-09-21T06:06:02Z

| 3

|

polinaeterna

|

pytorch/data

| 762

|

Allow Header(limit=None) ?

|

Not urgent at all, just a minor suggestion:

In the benchmark scripts I'm currently running I want to limit the number of samples in a data-pipe according to an `args.limit` CLI parameter. I'd be nice to be able to just write:

```py

dp = Header(dp, limit=args.limit)

```

and let `Header` be a no-op when `limit=None`. This might be a bit niche, and the alternative is to just protect the call in a `if` block, so I would totally understand if this isn't in scope (and it's really not urgent in any case)

|

https://github.com/meta-pytorch/data/issues/762

|

closed

|

[

"good first issue"

] | 2022-09-06T11:04:57Z

| 2022-12-06T20:20:58Z

| 4

|

NicolasHug

|

huggingface/datasets

| 4,932

|

Dataset Viewer issue for bigscience-biomedical/biosses

|

### Link

https://huggingface.co/datasets/bigscience-biomedical/biosses

### Description

I've just been working on adding the dataset loader script to this dataset and working with the relative imports. I'm not sure how to interpret the error below (show where the dataset preview used to be) .

```

Status code: 400

Exception: ModuleNotFoundError

Message: No module named 'datasets_modules.datasets.bigscience-biomedical--biosses.ddbd5893bf6c2f4db06f407665eaeac619520ba41f69d94ead28f7cc5b674056.bigbiohub'

```

### Owner

Yes

|

https://github.com/huggingface/datasets/issues/4932

|

closed

|

[] | 2022-09-05T22:40:32Z

| 2022-09-06T14:24:56Z

| 4

|

galtay

|

pytorch/pytorch

| 84,553

|

[ONNX] Change how context is given to symbolic functions

|

Current symbolic functions can take a context as an input, pushing graphs to the second argument. To support these functions, we need to annotate the first argument as symbolic context and tell them part in call time by examining the annotations.

Checking annotations is slow and this process complicates the logic in the caller.

Instead we can wrap the graph object in a GraphContext, exposing all methods used from the graph and include the context in the GraphContext. This way all the old symbolic functions continue to work and we do not need to do the annotation checking if we know the symbolic function is a "new function".

We can edit a private field in the functions at registration time to tag them as "new style" symbolic functions that always takes a wrapped Graph with context object as input.

This also has the added benefit where we no longer need to monkey patch the Graph object to expose the g.op method. Instead the method can be defined in the graph context object.

|

https://github.com/pytorch/pytorch/issues/84553

|

closed

|

[

"module: onnx",

"triaged",

"topic: improvements"

] | 2022-09-05T22:04:52Z

| 2022-09-28T22:56:39Z

| null |

justinchuby

|

pytorch/TensorRT

| 1,332

|

❓ [Question] Using torch-trt to test bert's qat quantitative model

|

## ❓ Question

When using torch-trt to test Bert's qat quantization ( https://zenodo.org/record/4792496#.YxGrdRNBy3J ) model, I encountered many FakeTensorQuantFunction nodes in the pass, and at the same time triggered many nodes that could not convert TRT, and split the graph into many subgraphs

question:

1. Can you tell me how to explain the nodes that appear in the pass, and how to explain the symbols (^) in front of these nodes?

2. How can these quantization nodes be converted into qat nodes corresponding to torch-trt( https://github.com/pytorch/TensorRT/blob/master/core/conversion/converters/impl/quantization.cpp )?

|

https://github.com/pytorch/TensorRT/issues/1332

|

closed

|

[

"question",

"No Activity",

"component: quantization"

] | 2022-09-05T12:35:41Z

| 2023-03-25T00:02:27Z

| null |

lixiaolx

|

pytorch/serve

| 1,851

|

High utilization of hardware

|

HI, I'm trying to use torchserve as a backend with a custom hardware setup. How do you suggest to run such that the hardware is maximally utilized? For example I tried using the benchmarks-ab.py script to test the server for throughput on resnet18 but only achieved ~200 requests per second (tried different batch sizes) while the hardware is capable of crunching at least 10,000 images per second.

Thanks for any help.

|

https://github.com/pytorch/serve/issues/1851

|

closed

|

[

"question",

"triaged"

] | 2022-09-05T05:15:29Z

| 2022-09-08T09:13:40Z

| null |

Vert53

|

pytorch/data

| 761

|

Would TorchData provide GPU support for loading and preprocessing images?

|

### 🚀 The feature

Would TorchData provide GPU support for loading and preprocessing images?

### Motivation, pitch

When I am learning PyTorch, I find, currently, it do not support using GPU to load images or any other transforms of preprocessing and encoding data.

I want to know whether this would be taken into consideration into the design of TorchData.

### Alternatives

Currently, NVIDIA-DALI is an impressive alternative for loading and preprocessing images with GPU.

### Additional context

_No response_

|

https://github.com/meta-pytorch/data/issues/761

|

open

|

[

"topic: new feature",

"triaged"

] | 2022-09-03T09:16:30Z

| 2022-11-21T20:06:25Z

| 5

|

songyuc

|

pytorch/serve

| 1,842

|

initial parameters transmit

|

### 🚀 The feature

how transmit the initial parameters from the first model to laters in workflow.

### Motivation, pitch

how transmit the initial parameters from the first model to laters in workflow.

### Alternatives

_No response_

### Additional context

_No response_

|

https://github.com/pytorch/serve/issues/1842

|

open

|

[

"question",

"triaged_wait",

"workflowx"

] | 2022-09-02T14:51:38Z

| 2022-09-06T10:42:39Z

| null |

jack-gits

|

pytorch/serve

| 1,841

|

how to register a workflow directly when docker is started.

|

### 🚀 The feature

how to register a workflow directly when docker is started.

### Motivation, pitch

how to register a workflow directly when docker is started.

### Alternatives

_No response_

### Additional context

_No response_

|

https://github.com/pytorch/serve/issues/1841

|

open

|

[

"help wanted",

"triaged",

"workflowx"

] | 2022-09-02T14:21:34Z

| 2023-11-15T06:49:21Z

| null |

jack-gits

|

huggingface/datasets

| 4,924

|

Concatenate_datasets loads everything into RAM

|

## Describe the bug

When loading the datasets seperately and saving them on disk, I want to concatenate them. But `concatenate_datasets` is filling up my RAM and the process gets killed. Is there a way to prevent this from happening or is this intended behaviour? Thanks in advance

## Steps to reproduce the bug

```python

gcs = gcsfs.GCSFileSystem(project='project')

datasets = [load_from_disk(f'path/to/slice/of/data/{i}', fs=gcs, keep_in_memory=False) for i in range(10)]

dataset = concatenate_datasets(datasets)

```

## Expected results

A concatenated dataset which is stored on my disk.

## Actual results

Concatenated dataset gets loaded into RAM and overflows it which gets the process killed.

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version: 2.4.0

- Platform: Linux-4.19.0-21-cloud-amd64-x86_64-with-glibc2.10

- Python version: 3.8.13

- PyArrow version: 8.0.1

- Pandas version: 1.4.3

|

https://github.com/huggingface/datasets/issues/4924

|

closed

|

[

"bug"

] | 2022-09-01T10:25:17Z

| 2022-09-01T11:50:54Z

| 0

|

louisdeneve

|

pytorch/TensorRT

| 1,328

|

❓ [Question] How do you ....?

|

## ❓ Question

Hi,

I am trying to use torch-tensorrt to optimize my model for inference. I first compile the model with torch.jit.script and then covnert it to tesnsorrt.

```shell

model = MoViNet(movinet_c.MODEL.MoViNetA0)

model.eval().cuda()

scripted_model = torch.jit.script(model)

trt_model = torch_tensorrt.compile(model,

inputs = [torch_tensorrt.Input((8, 3, 16, 344, 344))],

enabled_precisions= {torch.half}, # Run with FP16

workspace_size= 1 << 20,

truncate_long_and_double=True,

require_full_compilation=True, #True

)

```

However, the tensorrt model has almost the same speed as the regular PyTorch model. And the torchscript model is about 2 times slower:

```shell

cur_time = time.time()

with torch.inference_mode():

for _ in range(100):

x = torch.rand(4, 3, 16, 344, 344).cuda()

detections_batch = model(x)

print(time.time() - cur_time) #11.20 seconds

cur_time = time.time()

with torch.inference_mode():

scripted_model(x)

for _ in range(100):

x = torch.rand(4, 3, 16, 344, 344).cuda()

detections_batch = scripted_model(x)

print(time.time() - cur_time) #23.76 seconds

cur_time = time.time()

with torch.inference_mode():

trt_model(x)

for _ in range(100):

x = torch.rand(4, 3, 16, 344, 344).cuda()

detections_batch = trt_model(x)

print(time.time() - cur_time) #11.01 seconds

```

I'd really appreciate it if someone can help me understand what could be causing this issue.

## What you have already tried

I tried compiling and converting the model layer by layer and it doesn't seem like there is a specific operation or layer that takes too much time, however, each layer adds a little bit (0.5 seconds) to the runtime of the scripted model while it only adds about 0.01 to the runtime of the regular PyTorch model.

## Environment

Torch-TensorRT Version: 1.1.0

PyTorch Version: 1.11.0+cu113

CPU Architecture: x86_64

OS: Ubuntu 20.04

How you installed PyTorch: pip

Python version: 3.8

CUDA version: 11.3

GPU models and configuration: NVIDIA GeForce RTX 3070

## Additional context

This is the model. It's taken from here: [MoViNet-pytorch/models.py at main · Atze00/MoViNet-pytorch · GitHub](https://github.com/Atze00/MoViNet-pytorch/blob/main/movinets/models.py)

I made some changes to resolve the errors I was getting from torch.jit.script and torch-tensorrt.

```shell

class Swish(nn.Module):

def __init__(self) -> None:

super().__init__()

def forward(self, x: Tensor) -> Tensor:

return x * torch.sigmoid(x)

class Conv3DBNActivation(nn.Sequential):

def __init__(

self,

in_planes: int,

out_planes: int,

*,

kernel_size: Union[int, Tuple[int, int, int]],

padding: Union[int, Tuple[int, int, int]],

stride: Union[int, Tuple[int, int, int]] = 1,

groups: int = 1,

norm_layer: Optional[Callable[..., nn.Module]] = None,

activation_layer: Optional[Callable[..., nn.Module]] = None,

**kwargs: Any,

) -> None:

super().__init__()

kernel_size = _triple(kernel_size)

stride = _triple(stride)

padding = _triple(padding)

if norm_layer is None:

norm_layer = nn.Identity

if activation_layer is None:

activation_layer = nn.Identity

self.kernel_size = kernel_size

self.stride = stride

dict_layers = OrderedDict({

"conv3d": nn.Conv3d(in_planes, out_planes,

kernel_size=kernel_size,

stride=stride,

padding=padding,

groups=groups,

**kwargs),

"norm": norm_layer(out_planes, eps=0.001),

"act": activation_layer()

})

self.out_channels = out_planes

self.seq_layer = nn.Sequential(dict_layers)

# super(Conv3DBNActivation, self).__init__(dict_layers)

def forward(self, input):

return self.seq_layer(input)

class ConvBlock3D(nn.Module):

def __init__(

self,

in_planes: int,

out_planes: int,

*,

kernel_size: Union[int, Tuple[int, int, int]],

conv_type: str,

padding: Union[int, Tuple[int, int, int]] = 0,

stride: Union[int, Tuple[int, int, int]] = 1,

norm_layer: Optional[Callable[..., nn.Module]] = None,

activation_layer: Optio

|

https://github.com/pytorch/TensorRT/issues/1328

|

closed

|

[

"question",

"No Activity",

"performance"

] | 2022-08-31T15:06:50Z

| 2022-12-12T00:03:55Z

| null |

ghazalehtrb

|

pytorch/data

| 756

|

[RFC] More support for functionalities from `itertools`

|

### 🚀 The feature

Over time, we have received more and more request for additional `IterDataPipe` (e.g. #648, #754, plus many more). Sometimes, these functionalities are very similar to what is already implemented in [`itertools`](https://docs.python.org/3/library/itertools.html) and [`more-itertools`](https://github.com/more-itertools/more-itertools).

Keep adding more `IterDataPipe` one at a time seems unsustainable(?). Perhaps, we should draw a line somewhere or provide better interface for users to directly use functions from `itertools`. At the same time, providing APIs with names that are already familiar to Python users can improve the user experience. As @msaroufim mentioned, the Core library does aim to match operators with what is available in `numpy`.

We will need to decide on:

1. Coverage - which set of functionalities should we officially in `torchdata`?

2. Implementation - how will users be able to invoke those functions?

### Coverage

0. Arbitrary based on estimated user requests/contributions

1. `itertools` ~20 functions (some of which already exist in `torchdata`)

- **This seems common enough and reasonable?**

2. `more-itertools` ~100 functions?

- This is probably too much.

If we provide a good wrapper, we might not need to worry about the actual coverage too much?

### Implementation

0. Keep adding each function as a new `IterDataPipe`

- This is what we have been doing. We can keep doing that but the cost of maintenance will increase over time.

Currently, you can use `IterableWrapper`, but it doesn't always work well since it accepts an iterable, and an iterable doesn't guarantee to restart if you call `iter()` on it again.

```python

from torchdata.datapipes.iter import IterableWrapper

from itertools import accumulate

source_dp = IterableWrapper(range(10))

dp3 = IterableWrapper(accumulate(source_dp), deepcopy=False)

list(dp3) # [0, 1, 3, 6, 10, 15, 21, 28, 36, 45]

list(dp3) # []

```

One idea to work around that is to:

1. Provide a different wrapper that accepts a `Callable` that returns an `Iterable`, which will be iterated over

- Users can use `functool.partial` to pass in arguments (including `DataPipes` if desired)

- **I personally think we should do this since the cost of doing so is low and unlocks other possibilities.**

2. Create an `Itertools` DataPipe that delegates other DataPipes, it might look some like this:

```python

class ItertoolsIterDataPipe(IterDataPipe):

supported_operations: Dict[str, Callable] = {

"repeat": Repeater,

"chain": Concater,

"filterfalse": filter_false_constructor,

# most/all 20 `itertools` functions here?

}

def __new__(cls, name, *args, **kwargs):

if name not in cls.supported_operations:

raise RuntimeError("Operator is not supported")

constructor = cls.supported_operations[name]

return constructor(*args, **kwargs)

source_dp = IterableWrapper(range(10))

dp1 = source_dp.filter(lambda x: x >= 5)

dp2 = ItertoolsIterDataPipe("filterfalse", source_dp, lambda x: x >= 5)

list(dp1) # [5, 6, 7, 8, 9]

list(dp2) # [0, 1, 2, 3, 4]

```

These options are incomplete. If you have more ideas, please comment below.

### Motivation, pitch

These functionalities are commonly used and can be valuable for users.

### Additional context

Credit to @NicolasHug @msaroufim @pmeier and many others for past feedback and discussion related to this topic.

cc: @VitalyFedyunin @ejguan

|

https://github.com/meta-pytorch/data/issues/756

|

open

|

[] | 2022-08-30T21:30:19Z

| 2022-09-08T06:54:28Z

| 5

|

NivekT

|

pytorch/TensorRT

| 1,322

|

Error when I'm trying to use torch-tensorrt

|

## ❓ Question

Hi

I'm trying to use torch-tensorrt with the pre built ngc container

I built it with 22.04 branch and with 22.04 version of ngc

My versions are:

cuda 10.2

torchvision 0.13.1

torch 1.12.1

But I get that error:

Traceback (most recent call last):

File "main.py", line 31, in <module>

import torch_tensorrt

File "/usr/local/lib/python3.8/dist-packages/torch_tensorrt/__init__.py", line 11, in <module>

from torch_tensorrt._compile import *

File "/usr/local/lib/python3.8/dist-packages/torch_tensorrt/_compile.py", line 2, in <module>

from torch_tensorrt import _enums

File "/usr/local/lib/python3.8/dist-packages/torch_tensorrt/_enums.py", line 1, in <module>

from torch_tensorrt._C import dtype, DeviceType, EngineCapability, TensorFormat

ImportError: /usr/local/lib/python3.8/dist-packages/torch_tensorrt/lib/libtorchtrt.so: undefined symbol: _ZNK3c1010TensorImpl36is_contiguous_nondefault_policy_implENS_12MemoryFormatE

Thank's!!

|

https://github.com/pytorch/TensorRT/issues/1322

|

closed

|

[

"question",

"channel: NGC"

] | 2022-08-30T13:09:18Z

| 2022-12-15T17:43:52Z

| null |

EstherMalam

|

huggingface/diffusers

| 267

|

Non-squared Image shape

|

Is it possible to use diffusers on non-squared images?

That would be a very interesting feature!

|

https://github.com/huggingface/diffusers/issues/267

|

closed

|

[

"question"

] | 2022-08-29T01:29:33Z

| 2022-09-13T15:57:36Z

| null |

LucasSilvaFerreira

|

pytorch/functorch

| 1,011

|

memory_efficient_fusion leads to RuntimeError for higher-order gradients calculation. RuntimeError: You are attempting to call Tensor.requires_grad_()

|

Hi All,

I've tried improving the speed of my code via using `memory_efficient_fusion`, however, it leads to `Tensor.requires_grad_()` error and I have no idea why. The error is as follows,

```

RuntimeError: You are attempting to call Tensor.requires_grad_() (or perhaps using torch.autograd.functional.* APIs) inside of a function being transformed by a functorch transform. This is unsupported, please attempt to use the functorch transforms (e.g. grad, vjp, jacrev, jacfwd, hessian) or call requires_grad_() outside of a function being transformed instead.

```

I've attached a 'minimal' reproducible example of this behaviour below. I've tried a few different things but nothing's seems to have worked. I did see in #840 `memory_efficient_fusion` is done within a context manager, however, when using that I get the same error.

Thanks in advance!

EDIT: When I tried running it, it tried to use the `networkx` package but that wasn't installed by default. So, I had to manually install that (which wasn't a problem), just not sure if installing from source should also include install those packages as well!

```

import torch

from torch import nn

import functorch

from functorch import make_functional, vmap, jacrev, grad

from functorch.compile import memory_efficient_fusion

import time

_ = torch.manual_seed(1234)

#version info

print("PyTorch version: ", torch.__version__)

print("CUDA version: ", torch.version.cuda)

print("FuncTorch version: ", functorch.__version__)

#=============================================#

#time with torch synchronization

def sync_time() -> float:

torch.cuda.synchronize()

return time.perf_counter()

class model(nn.Module):

def __init__(self, num_inputs, num_hidden):

super(model, self).__init__()

self.num_inputs=num_inputs

self.func = nn.Tanh()

self.fc1 = nn.Linear(2, num_hidden)

self.fc2 = nn.Linear(num_hidden, num_inputs)

def forward(self, x):

"""

Takes x in [B,A,1] and maps it to sign/logabsdet value in Tuple([B,], [B,])

"""

idx=len(x.shape) #creates args for repeat if vmap is used or not

rep=[1 for _ in range(idx)]

rep[-2] = self.num_inputs

g = x.mean(dim=(idx-2), keepdim=True).repeat(*rep)

f = torch.cat((x,g), dim=-1)

h = self.func(self.fc1(f))

mat = self.fc2(h)

sgn, logabs = torch.linalg.slogdet(mat)

return sgn, logabs

#=============================================#

B=4096 #batch

N=2 #input nodes

H=64 #number of hidden nodes

device = torch.device('cuda')

x = torch.randn(B, N, 1, device=device) #input data

net = model(N, H) #our model

net=net.to(device)

fnet, params = make_functional(net)

def calc_logabs(params, x):

_, logabs = fnet(params, x)

return logabs

def calc_dlogabs_dx(params, x):

dlogabs_dx = jacrev(func=calc_logabs, argnums=1)(params, x)

return dlogabs_dx, dlogabs_dx #return aux

def local_kinetic_from_log_vmap(params, x):

d2logabs_dx2, dlogabs_dx = jacrev(func=calc_dlogabs_dx, argnums=1, has_aux=True)(params, x)

_local_kinetic = -0.5*(d2logabs_dx2.diagonal(0,-4,-2).sum() + dlogabs_dx.pow(2).sum())

return _local_kinetic

#memory efficient fusion here

#with torch.jit.fuser("fuser2"): is this needed (from functorch/issues/840)

ps_elocal = grad(local_kinetic_from_log_vmap, argnums=0)

ps_elocal_fusion = memory_efficient_fusion(grad(local_kinetic_from_log_vmap, argnums=0))

#ps_elocal_fusion(params, x) #no vmap attempt (throws size mis-match error)

t1=sync_time()

vmap(ps_elocal, in_dims=(None, 0))(params, x) #works fine

t2=sync_time()

vmap(ps_elocal_fusion, in_dims=(None, 0))(params, x) #error (crashes on this line)

t3=sync_time()

print("Laplacian (standard): %4.2e (s)",t2-t1)

print("Laplacian (fusion): %4.2e (s)",t3-t2)

```

|

https://github.com/pytorch/functorch/issues/1011

|

open

|

[] | 2022-08-28T16:56:02Z

| 2022-12-22T19:59:22Z

| 3

|

AlphaBetaGamma96

|

pytorch/functorch

| 1,010

|

Multiple gradient calculation for single sample

|

[According to the README](https://github.com/pytorch/functorch#working-with-nn-modules-make_functional-and-friends), we are able to calculate **per-sample-gradients** with functorch.

But what if we want to get multiple gradients for a **single sample**? For example, imagine that we are calculating multiple losses.

We can split each loss calculation as a different sample, but that implementation is inefficient, especially when the forward pass is expensive. Can we at least re-use forward computations?

|

https://github.com/pytorch/functorch/issues/1010

|

closed

|

[] | 2022-08-28T14:31:11Z

| 2023-01-08T10:23:04Z

| 23

|

JoaoLages

|

pytorch/TensorRT

| 1,317

|

caffe2

|

Why don't you install caffe2 with pytorch in NGC container 22.08?

|

https://github.com/pytorch/TensorRT/issues/1317

|

closed

|

[

"question",

"channel: NGC"

] | 2022-08-27T15:45:17Z

| 2023-01-03T18:30:26Z

| null |

s-mohaghegh97

|

pytorch/serve

| 1,819

|

How to transfer files to a custom handler with curl command

|

I have created a custom handler that inputs and outputs wav files.

The code is as follows

```Python

# custom handler file

# model_handler.py

"""

ModelHandler defines a custom model handler.

"""

import os

import soundfile

from espnet2.bin.enh_inference import *

from ts.torch_handler.base_handler import BaseHandler

class ModelHandler(BaseHandler):

"""

A custom model handler implementation.

"""

def __init__(self):

self._context = None

self.initialized = False

self.model = None

self.device = None

def initialize(self, context):

"""

Invoke by torchserve for loading a model

:param context: context contains model server system properties

:return:

"""

# load the model

self.manifest = context.manifest

properties = context.system_properties

model_dir = properties.get("model_dir")

self.device = torch.device("cuda:" + str(properties.get("gpu_id")) if torch.cuda.is_available() else "cpu")

# Read model serialize/pt file

serialized_file = self.manifest['model']['serializedFile']

model_pt_path = os.path.join(model_dir, serialized_file)

if not os.path.isfile(model_pt_path):

raise RuntimeError("Missing the model.pt file")

self.model = SeparateSpeech("./train_enh_transformer_tf.yaml", "./valid.loss.best.pth", normalize_output_wav=True)

self.initialized = True

def preprocess(self,data):

audio_data, rate = soundfile.read(data)

preprocessed_data = audio_data[np.newaxis, :]

return preprocessed_data

def inference(self, model_input):

model_output = self.model(model_input)

return model_output

def postprocess(self, inference_output):

"""

Return inference result.

:param inference_output: list of inference output

:return: list of predict results

"""

# Take output from network and post-process to desired format

postprocess_output = inference_output

#wav ni suru

return postprocess_output

def handle(self, data, context):

model_input = self.preprocess(data)

model_output = self.inference(model_input)

return self.postprocess(model_output)

```

I transferred the wav file to torhserve with the following command

> curl --data-binary @Mix.wav --noproxy '*' http://127.0.0.1:8080/predictions/denoise_transformer -v

However, I got the following response

```

* Trying 127.0.0.1...

* TCP_NODELAY set

* Connected to 127.0.0.1 (127.0.0.1) port 8080 (#0)

> POST /predictions/denoise_transformer HTTP/1.1

> Host: 127.0.0.1:8080

> User-Agent: curl/7.58.0

> Accept: */*

> Content-Length: 128046

> Content-Type: application/x-www-form-urlencoded

> Expect: 100-continue

>

< HTTP/1.1 100 Continue

* We are completely uploaded and fine

< HTTP/1.1 500 Internal Server Error

< content-type: application/json

< x-request-id: 445155a4-5971-490a-ba7c-206f8eda5ea0

< Pragma: no-cache

< Cache-Control: no-cache; no-store, must-revalidate, private

< Expires: Thu, 01 Jan 1970 00:00:00 UTC

< content-length: 89

< connection: close

<

{

"code": 500,

"type": "ErrorDataDecoderException",

"message": "Bad end of line"

}

* Closing connection 0

```

What is wrong?

I have confirmed that the following command returns the response.

> curl --noproxy '*' http://127.0.0.1:8081/models

```

{

"models": [

{

"modelName": "denoise_transformer",

"modelUrl": "denoise_transformer.mar"

}

]

}

```

|

https://github.com/pytorch/serve/issues/1819

|

closed

|

[

"triaged_wait",

"support"

] | 2022-08-27T10:30:27Z

| 2022-08-30T23:40:53Z

| null |

Shin-ichi-Takayama

|

pytorch/data

| 754

|

A more powerful Mapper than can restrict function application to only part of the datapipe items?

|

We often have datapipes that return tuples `(img, target)` where we just want to call transformations on the img, but not the target. Sometimes it's the opposite: I want to apply a function to the target, and not to the img.

This usually forces us to write wrappers that "passthrough" either the img or the target. For example:

```py

def decode_img_only(data): # boilerplate wrapper

img, target = data

img = decode(img)

return img, data

def resize_img_only(data): # boilerplate wrapper

img, target = data

img = resize(img)

return img, data

def add_label_noise(data): # boilerplate wrapper

img, target = data

target = make_noisy_label(target)

return img, data

dp = ...

dp = dp.map(decode_img_only).map(resize_img_only).map(add_label_noise)

```

Perhaps a more convenient way of doing this would be to implement something similar to WebDataset's `map_dict` and `map_tuple`? This would avoid all the boilerplate wrappers. For example we could imagine the code above to simply be:

```py

dp = ...

dp = dp.map_tuple(decode, None).map(resize, None).map(None, make_noisy_label)

# or even

dp = dp.map_tuple(decode, None).map(resize, make_noisy_label)

# if the datapipes was returning a dict with "img" and "target" keys this could also be

dp = dp.map_dict("img"=decode).map_dict("img"=decode, "target"=make_noisy_label)

```

I even think it might be possible to implement all of `map_dict()` and `map_tuple()` functionalities withing the `.map()` function:

- 1 arg == current `map()`

- 1+ arg == `map_tuple()`

- keyword arg == `map_dict()`

CC @pmeier and @msaroufim to whom this might be of interest

|

https://github.com/meta-pytorch/data/issues/754

|

open

|

[] | 2022-08-26T21:16:32Z

| 2022-08-30T21:48:10Z

| 5

|

NicolasHug

|

huggingface/dataset-viewer

| 534

|

Store the cached responses on the Hub instead of mongodb?

|

The config and split info will be stored in the YAML of the dataset card (see https://github.com/huggingface/datasets/issues/4876), and the idea is to compute them and update the dataset card automatically. This means that storing the responses for `/splits` in the MongoDB is duplication.

If we store the responses for `/first-rows` in the Hub too (maybe in a special git ref), we might get rid of the MongoDB storage, or use another simpler cache mechanism if response time is an issue.

WDYT @huggingface/datasets-server @julien-c ?

|

https://github.com/huggingface/dataset-viewer/issues/534

|

closed

|

[

"question"

] | 2022-08-26T16:24:39Z

| 2022-09-19T09:09:29Z

| null |

severo

|

huggingface/datasets

| 4,902

|

Name the default config `default`

|

Currently, if a dataset has no configuration, a default configuration is created from the dataset name.

For example, for a dataset loaded from the hub repository, such as https://huggingface.co/datasets/user/dataset (repo id is `user/dataset`), the default configuration will be `user--dataset`.

It might be easier to handle to set it to `default`, or another reserved word.

|

https://github.com/huggingface/datasets/issues/4902

|

closed

|

[

"enhancement",

"question"

] | 2022-08-26T16:16:22Z

| 2023-07-24T21:15:31Z

| null |

severo

|

huggingface/optimum

| 362

|

unexpect behavior GPU runtime with ORTModelForSeq2SeqLM

|

### System Info

```shell

OS: Ubuntu 20.04.4 LTS

CARD: RTX 3080

Libs:

python 3.10.4

onnx==1.12.0

onnxruntime-gpu==1.12.1

torch==1.12.1

transformers==4.21.2

```

### Who can help?

@lewtun @michaelbenayoun @JingyaHuang @echarlaix

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [X] My own task or dataset (give details below)

### Reproduction

Steps to reproceduce the behavior:

1. Convert a public translation from here: [vinai-translate-en2vi](https://huggingface.co/vinai/vinai-translate-en2vi)

```

from optimum.onnxruntime import ORTModelForSeq2SeqLM

save_directory = "models/en2vi_onnx"

# Load a model from transformers and export it through the ONNX format

model = ORTModelForSeq2SeqLM.from_pretrained('vinai/vinai-translate-en2vi', from_transformers=True)

# Save the onnx model and tokenizer

model.save_pretrained(save_directory)

```

2. Load model with modified from [example of origin creater model](https://github.com/VinAIResearch/VinAI_Translate#english-to-vietnamese-translation)

```

from transformers import AutoTokenizer, pipeline

from optimum.onnxruntime import ORTModelForSeq2SeqLM

import torch

import time

device = "cuda:0" if torch.cuda.is_available() else "cpu"

tokenizer_en2vi = AutoTokenizer.from_pretrained("vinai/vinai-translate-en2vi", src_lang="en_XX")

model_en2vi = ORTModelForSeq2SeqLM.from_pretrained("models/en2vi_onnx")

model_en2vi.to(device)

# onnx_en2vi = pipeline("translation_en_to_vi", model=model_en2vi, tokenizer=tokenizer_en2vi, device=0)

# en_text = '''It's very cold to go out.'''

# start = time.time()

# outpt = onnx_en2vi(en_text)

# end = time.time()

# print(outpt)

# print("time: ", end - start)

def translate_en2vi(en_text: str) -> str:

start = time.time()

input_ids = tokenizer_en2vi(en_text, return_tensors="pt").input_ids.to(device)

end = time.time()

print("Tokenize time: {:.2f}s".format(end - start))

# print(input_ids.shape)

# print(input_ids)

start = time.time()

output_ids = model_en2vi.generate(

input_ids,

do_sample=True,

top_k=100,

top_p=0.8,

decoder_start_token_id=tokenizer_en2vi.lang_code_to_id["vi_VN"],

num_return_sequences=1,

)

end = time.time()

print("Generate time: {:.2f}s".format(end - start))

vi_text = tokenizer_en2vi.batch_decode(output_ids, skip_special_tokens=True)

vi_text = " ".join(vi_text)

return vi_text

en_text = '''It's very cold to go out.''' # long paragraph

start = time.time()

result = translate_en2vi(en_text)

print(result)

end = time.time()

print('{:.2f} seconds'.format((end - start)))

```

I change [line 167](https://github.com/huggingface/optimum/blob/661f4423097f580a06759ced557ecd638ab6b13a/optimum/onnxruntime/utils.py#L167) in optimum/onnxruntime/utils.py to _**return "CUDAExecutionProvider"**_ to run with GPU instead of an error.

3. run [example of origin creater model](https://github.com/VinAIResearch/VinAI_Translate#english-to-vietnamese-translation) with gpu and compare runtimes

### Expected behavior

The onnx model was expected run faster the result is unexpected:

- Runtime origin model with gpu is 3-5s while take about 3.5GB GPU

- Runtime onnx converted model with gpu is 70-80s while take about 7.7GB GPU

|

https://github.com/huggingface/optimum/issues/362

|

closed

|

[

"bug",

"inference",

"onnxruntime"

] | 2022-08-26T02:11:26Z

| 2022-12-09T09:13:22Z

| 3

|

tranmanhdat

|

huggingface/dataset-viewer

| 528

|

metrics: how to manage variability between the admin pods?

|

The metrics include one entry per uvicorn worker of the `admin` service, but they give different values.

<details>

<summary>Example of a response to https://datasets-server.huggingface.co/admin/metrics</summary>

<pre>

# HELP starlette_requests_in_progress Multiprocess metric

# TYPE starlette_requests_in_progress gauge

starlette_requests_in_progress{method="GET",path_template="/healthcheck",pid="16"} 0.0

starlette_requests_in_progress{method="GET",path_template="/metrics",pid="16"} 0.0

starlette_requests_in_progress{method="GET",path_template="/healthcheck",pid="12"} 0.0

starlette_requests_in_progress{method="GET",path_template="/metrics",pid="12"} 0.0

starlette_requests_in_progress{method="GET",path_template="/healthcheck",pid="15"} 0.0

starlette_requests_in_progress{method="GET",path_template="/metrics",pid="15"} 0.0

starlette_requests_in_progress{method="GET",path_template="/healthcheck",pid="13"} 0.0

starlette_requests_in_progress{method="GET",path_template="/metrics",pid="13"} 0.0

starlette_requests_in_progress{method="GET",path_template="/healthcheck",pid="11"} 0.0

starlette_requests_in_progress{method="GET",path_template="/metrics",pid="11"} 0.0

starlette_requests_in_progress{method="GET",path_template="/healthcheck",pid="18"} 0.0

starlette_requests_in_progress{method="GET",path_template="/metrics",pid="18"} 0.0

starlette_requests_in_progress{method="GET",path_template="/healthcheck",pid="14"} 0.0

starlette_requests_in_progress{method="GET",path_template="/metrics",pid="14"} 1.0

starlette_requests_in_progress{method="GET",path_template="/healthcheck",pid="10"} 0.0

starlette_requests_in_progress{method="GET",path_template="/metrics",pid="10"} 0.0

starlette_requests_in_progress{method="GET",path_template="/healthcheck",pid="17"} 0.0

starlette_requests_in_progress{method="GET",path_template="/metrics",pid="17"} 0.0

# HELP queue_jobs_total Multiprocess metric

# TYPE queue_jobs_total gauge

queue_jobs_total{pid="16",queue="/splits",status="waiting"} 0.0

queue_jobs_total{pid="16",queue="/splits",status="started"} 5.0

queue_jobs_total{pid="16",queue="/splits",status="success"} 71154.0

queue_jobs_total{pid="16",queue="/splits",status="error"} 41640.0

queue_jobs_total{pid="16",queue="/splits",status="cancelled"} 133.0

queue_jobs_total{pid="16",queue="/rows",status="waiting"} 372.0

queue_jobs_total{pid="16",queue="/rows",status="started"} 21.0

queue_jobs_total{pid="16",queue="/rows",status="success"} 300541.0

queue_jobs_total{pid="16",queue="/rows",status="error"} 121306.0

queue_jobs_total{pid="16",queue="/rows",status="cancelled"} 1500.0

queue_jobs_total{pid="16",queue="/splits-next",status="waiting"} 0.0

queue_jobs_total{pid="16",queue="/splits-next",status="started"} 4.0

queue_jobs_total{pid="16",queue="/splits-next",status="success"} 30896.0

queue_jobs_total{pid="16",queue="/splits-next",status="error"} 25611.0

queue_jobs_total{pid="16",queue="/splits-next",status="cancelled"} 92.0

queue_jobs_total{pid="16",queue="/first-rows",status="waiting"} 11406.0

queue_jobs_total{pid="16",queue="/first-rows",status="started"} 52.0

queue_jobs_total{pid="16",queue="/first-rows",status="success"} 142201.0

queue_jobs_total{pid="16",queue="/first-rows",status="error"} 30097.0

queue_jobs_total{pid="16",queue="/first-rows",status="cancelled"} 573.0

queue_jobs_total{pid="12",queue="/splits",status="waiting"} 0.0

queue_jobs_total{pid="12",queue="/splits",status="started"} 5.0

queue_jobs_total{pid="12",queue="/splits",status="success"} 71154.0

queue_jobs_total{pid="12",queue="/splits",status="error"} 41638.0

queue_jobs_total{pid="12",queue="/splits",status="cancelled"} 133.0

queue_jobs_total{pid="12",queue="/rows",status="waiting"} 424.0

queue_jobs_total{pid="12",queue="/rows",status="started"} 21.0

queue_jobs_total{pid="12",queue="/rows",status="success"} 300489.0

queue_jobs_total{pid="12",queue="/rows",status="error"} 121306.0

queue_jobs_total{pid="12",queue="/rows",status="cancelled"} 1500.0

queue_jobs_total{pid="12",queue="/splits-next",status="waiting"} 0.0

queue_jobs_total{pid="12",queue="/splits-next",status="started"} 4.0

queue_jobs_total{pid="12",queue="/splits-next",status="success"} 30896.0

queue_jobs_total{pid="12",queue="/splits-next",status="error"} 25610.0

queue_jobs_total{pid="12",queue="/splits-next",status="cancelled"} 92.0

queue_jobs_total{pid="12",queue="/first-rows",status="waiting"} 11470.0

queue_jobs_total{pid="12",queue="/first-rows",status="started"} 52.0

queue_jobs_total{pid="12",queue="/first-rows",status="success"} 142144.0

queue_jobs_total{pid="12",queue="/first-rows",status="error"} 30090.0

queue_jobs_total{pid="12",queue="/first-rows",status="cancelled"} 573.0

queue_jobs_total{pid="15",queue="/splits",status="waiting"} 0.0

queue_jobs_total{pid="15",queue="/splits",status="started"} 5.0

queue_jobs_total{pid="15",queue="/splits",status="success"} 71154.0

queue_jobs_total{pid="15",queue="/splits",status="error"} 41640.0

queue_jobs

|

https://github.com/huggingface/dataset-viewer/issues/528

|

closed

|

[

"bug",

"question"

] | 2022-08-25T19:48:44Z

| 2022-09-19T09:10:11Z

| null |

severo

|

pytorch/torchx

| 589

|

Add per workspace runopts/config

|

## Description

<!-- concise description of the feature/enhancement -->

## Motivation/Background

<!-- why is this feature/enhancement important? provide background context -->

Currently Workspaces piggyback on the config options for the scheduler. This means that every scheduler is deeply tied to the workspace and we have to copy the options to every runner.

https://github.com/pytorch/torchx/blob/main/torchx/schedulers/kubernetes_scheduler.py#L654-L658

## Detailed Proposal

<!-- provide a detailed proposal -->

1. Add a new method to the Workspace base class that allows specifying runopts from them

```python

@abstractmethod

def workspace_run_opts(self) -> runopts:

...

```

2. Update runner to call the workspace runopts method

3. Migrate all `image_repo` DockerWorkspace runopts to the class.

## Alternatives

<!-- discuss the alternatives considered and their pros/cons -->

## Additional context/links

<!-- link to code, documentation, etc. -->

https://github.com/pytorch/torchx/blob/main/torchx/schedulers/api.py#L187

https://github.com/pytorch/torchx/blob/main/torchx/schedulers/docker_scheduler.py

https://github.com/pytorch/torchx/blob/main/torchx/workspace/docker_workspace.py

|

https://github.com/meta-pytorch/torchx/issues/589

|

open

|

[

"enhancement",

"module: runner",

"docker"

] | 2022-08-25T18:18:35Z

| 2022-08-25T18:18:35Z

| 0

|

d4l3k

|

pytorch/pytorch

| 84,014

|

fill_ OpInfo code not used, also, doesn't test the case where the second argument is a Tensor

|

Two observations:

1. `sample_inputs_fill_` is no longer used. Can be deleted (https://github.com/pytorch/pytorch/blob/master/torch/testing/_internal/common_methods_invocations.py#L1798-L1807)

2. The new OpInfo for fill doesn't actually test the `tensor.fill_(other_tensor)` case. Previously we did test this, as shown by `sample_inputs_fill_`

cc @mruberry

|

https://github.com/pytorch/pytorch/issues/84014

|

open

|

[

"module: tests",

"triaged"

] | 2022-08-24T20:39:11Z

| 2022-08-24T20:40:39Z

| null |

zou3519

|

huggingface/datasets

| 4,881

|

Language names and language codes: connecting to a big database (rather than slow enrichment of custom list)

|

**The problem:**

Language diversity is an important dimension of the diversity of datasets. To find one's way around datasets, being able to search by language name and by standardized codes appears crucial.

Currently the list of language codes is [here](https://github.com/huggingface/datasets/blob/main/src/datasets/utils/resources/languages.json), right? At about 1,500 entries, it is roughly at 1/4th of the world's diversity of extant languages. (Probably less, as the list of 1,418 contains variants that are linguistically very close: 108 varieties of English, for instance.)

Looking forward to ever increasing coverage, how will the list of language names and language codes improve over time?

Enrichment of the custom list by HFT contributors (like [here](https://github.com/huggingface/datasets/pull/4880)) has several issues:

* progress is likely to be slow:

(input required from reviewers, etc.)

* the more contributors, the less consistency can be expected among contributions. No need to elaborate on how much confusion is likely to ensue as datasets accumulate.

* there is no information on which language relates with which: no encoding of the special closeness between the languages of the Northwestern Germanic branch (English+Dutch+German etc.), for instance. Information on phylogenetic closeness can be relevant to run experiments on transfer of technology from one language to its close relatives.

**A solution that seems desirable:**

Connecting to an established database that (i) aims at full coverage of the world's languages and (ii) has information on higher-level groupings, alternative names, etc.

It takes a lot of hard work to do such databases. Two important initiatives are [Ethnologue](https://www.ethnologue.com/) (ISO standard) and [Glottolog](https://glottolog.org/). Both have pros and cons. Glottolog contains references to Ethnologue identifiers, so adopting Glottolog entails getting the advantages of both sets of language codes.

Both seem technically accessible & 'developer-friendly'. Glottolog has a [GitHub repo](https://github.com/glottolog/glottolog). For Ethnologue, harvesting tools have been devised (see [here](https://github.com/lyy1994/ethnologue); I did not try it out).

In case a conversation with linguists seemed in order here, I'd be happy to participate ('pro bono', of course), & to rustle up more colleagues as useful, to help this useful development happen.

With appreciation of HFT,

|

https://github.com/huggingface/datasets/issues/4881

|

open

|

[

"enhancement"

] | 2022-08-23T20:14:24Z

| 2024-04-22T15:57:28Z

| 49

|

alexis-michaud

|

pytorch/examples

| 1,040

|

In example DCGAN, curl timed out

|

Your issue may already be reported!

Please search on the [issue tracker](https://github.com/pytorch/serve/examples) before creating one.

## Context

<!--- How has this issue affected you? What are you trying to accomplish? -->

<!--- Providing context helps us come up with a solution that is most useful in the real world -->

* Pytorch version: 1.12.1

* Operating System and version: 20.04.4 LTS (Focal Fossa)

## Your Environment

<!--- Include as many relevant details about the environment you experienced the bug in -->

* Installed using source? [yes/no]: no

* Are you planning to deploy it using docker container? [yes/no]: yes

* Is it a CPU or GPU environment?: GPU

* Which example are you using: DCGAN

* Link to code or data to repro [if any]:

## Expected Behavior

<!--- If you're describing a bug, tell us what should happen -->

dcgan finishes without errors

## Current Behavior

<!--- If describing a bug, tell us what happens instead of the expected behavior -->

dcgan fails with exceptions

## Possible Solution

<!--- Not obligatory, but suggest a fix/reason for the bug -->

## Steps to Reproduce

<!--- Provide a link to a live example, or an unambiguous set of steps to -->

<!--- reproduce this bug. Include code to reproduce, if relevant -->

1. cd examples

2. bash run_python_examples.sh "install_deps, dcgan"

## Failure Logs [if any]

<!--- Provide any relevant log snippets or files here. -->

```

Downloading classroom train set

--

181 | curl: /opt/conda/lib/libcurl.so.4: no version information available (required by curl)

182 | % Total % Received % Xferd Average Speed Time Time Time Current

183 | Dload Upload Total Spent Left Speed

...

curl: (18) transfer closed with 3277022655 bytes remaining to read

...

Some examples failed:

couldn't unzip classroom

```

I know this is a thrid-party repo issue, which I have already raised in [lsun repo](https://github.com/fyu/lsun/issues/46)

Is it possible that you could have a solution on your end? The request speed of the domain http://dl.yf.io is just slow in general.

Thank you!

|

https://github.com/pytorch/examples/issues/1040

|

open

|

[

"data"

] | 2022-08-23T18:34:09Z

| 2022-08-24T02:46:44Z

| 1

|

ShiboXing

|

huggingface/datasets

| 4,878

|

[not really a bug] `identical_ok` is deprecated in huggingface-hub's `upload_file`

|

In the huggingface-hub dependency, the `identical_ok` argument has no effect in `upload_file` (and it will be removed soon)

See

https://github.com/huggingface/huggingface_hub/blob/43499582b19df1ed081a5b2bd7a364e9cacdc91d/src/huggingface_hub/hf_api.py#L2164-L2169

It's used here:

https://github.com/huggingface/datasets/blob/fcfcc951a73efbc677f9def9a8707d0af93d5890/src/datasets/dataset_dict.py#L1373-L1381

https://github.com/huggingface/datasets/blob/fdcb8b144ce3ef241410281e125bd03e87b8caa1/src/datasets/arrow_dataset.py#L4354-L4362

https://github.com/huggingface/datasets/blob/fdcb8b144ce3ef241410281e125bd03e87b8caa1/src/datasets/arrow_dataset.py#L4197-L4213

We should remove it.

Maybe the third code sample has an unexpected behavior since it uses the non-default value `identical_ok = False`, but the argument is ignored.

|

https://github.com/huggingface/datasets/issues/4878

|

closed

|

[

"help wanted",

"question"

] | 2022-08-23T17:09:55Z

| 2022-09-13T14:00:06Z

| null |

severo

|

pytorch/TensorRT

| 1,303

|

How to correctly format input for Fp16 inference using torch-tensorrt C++

|

## ❓ Question

<!-- Your question -->

## What you have already tried

<!-- A clear and concise description of what you have already done. -->

Hi, I am using the following to export a torch scripted model to Fp16 tensorrt which will then be used in a C++ environment.

`network.load_state_dict(torch.load(path_weights, map_location="cuda:0"))

network.eval().cuda()

dummy_input = torch.rand(1, 6, 320, 224).cuda()

network_traced = torch.jit.trace(network, dummy_input) # converting to plain torchscript

# convert/ compile to trt

compile_settings = {

"inputs": [torchtrt.Input([1, 6, 320, 224])],

"enabled_precisions": {torch.half},

"workspace_size": 6 << 22

}

trt_ts_module = torchtrt.compile(network_traced, inputs=[torchtrt.Input((1, 6, 320, 224), dtype=torch.half)],

enabled_precisions={torch.half},

workspace_size=6<<22)

torch.jit.save(trt_ts_module, trt_ts_save_path)`

Is this correct?

If yes, then what is the correct way to cast the input tensor in C++?

Do I need to convert it to torck::kHalf explicitly? Or can the inputs stay as FP32

Please let me know.

Here is my code for loading the CNN for inference:

`try {

// Deserialize the ScriptModule from a file using torch::jit::load().

trt_ts_mod_cnn = torch::jit::load(trt_ts_module_path);

trt_ts_mod_cnn.to(torch::kCUDA);

cout << trt_ts_mod_cnn.type() << endl;

cout << trt_ts_mod_cnn.dump_to_str(true, true, false) << endl;

} catch (const c10::Error& e) {

std::cerr << "error loading the model from : " << trt_ts_module_path << std::endl;

// return -1;

}

auto inBEVInference = torch::rand({1, bevSettings.N_CHANNELS_BEV, bevSettings.N_ROWS_BEV, bevSettings.N_COLS_BEV},\

{at::kCUDA}).to(torch::kFloat32);

// auto inBEVInference = torch::rand({1, bevSettings.N_CHANNELS_BEV, bevSettings.N_ROWS_BEV, bevSettings.N_COLS_BEV},\

// {at::kCUDA}).to(torch::kFloat16);

std::vector<torch::jit::IValue> trt_inputs_ivalues;

trt_inputs_ivalues.push_back(inBEVInference);

auto outputs = trt_ts_mod_cnn.forward(trt_inputs_ivalues).toTuple();

auto kp = outputs->elements()[0].toTensor();

auto hwl = outputs->elements()[1].toTensor();

auto rot = outputs->elements()[2].toTensor();

auto dxdy = outputs->elements()[3].toTensor();

cout << "Size KP out -> " << kp.sizes() << endl;

cout << "Size HWL out -> " << hwl.sizes() << endl;

cout << "Size ROT out -> " << rot.sizes() << endl;

cout << "Size DXDY out -> " << dxdy.sizes() << endl;`

## Environment

> Build information about Torch-TensorRT can be found by turning on debug messages

- PyTorch Version (e.g., 1.0): 1.11.0+cu113

- CPU Architecture: x86_64

- OS (e.g., Linux): Linux, Ubuntu 20.04, docker container

- How you installed PyTorch (`conda`, `pip`, `libtorch`, source): pip

- Build command you used (if compiling from source):

- Are you using local sources or building from archives: local

- Python version: 3.8.10

- CUDA version: Cuda compilation tools, release 11.4, V11.4.152 (on the linux system)

- GPU models and configuration: RTX2080 MaxQ

- Any other relevant information:

## Additional context

<!-- Add any other context about the problem here. -->

|

https://github.com/pytorch/TensorRT/issues/1303

|

closed

|

[

"question",

"No Activity"

] | 2022-08-23T14:05:05Z

| 2022-12-04T00:02:10Z

| null |

SM1991CODES

|

pytorch/examples

| 1,039

|

FileNotFoundError: Couldn't find any class folder in /content/train2014.

|

Your issue may already be reported!

Please search on the [issue tracker](https://github.com/pytorch/serve/examples) before creating one.

I wanna train new style model

run this cmd

!unzip train2014.zip -d /content

!python /content/examples/fast_neural_style/neural_style/neural_style.py train --dataset /content/train2014 --style-image /content/A.jpg --save-model-dir /content --epochs 2 --cuda 1

## Context

<!--- How has this issue affected you? What are you trying to accomplish? -->

<!--- Providing context helps us come up with a solution that is most useful in the real world -->

* Pytorch version:

* Operating System and version:

## Your Environment

Colab

https://colab.research.google.com/github/pytorch/xla/blob/master/contrib/colab/style_transfer_inference.ipynb#scrollTo=EozMXwIV9iOJ

got this error

Traceback (most recent call last):

File "/content/examples/fast_neural_style/neural_style/neural_style.py", line 249, in <module>

main()

File "/content/examples/fast_neural_style/neural_style/neural_style.py", line 243, in main

train(args)

File "/content/examples/fast_neural_style/neural_style/neural_style.py", line 43, in train

train_dataset = datasets.ImageFolder(args.dataset, transform)

File "/usr/local/lib/python3.7/dist-packages/torchvision/datasets/folder.py", line 316, in __init__

is_valid_file=is_valid_file,

File "/usr/local/lib/python3.7/dist-packages/torchvision/datasets/folder.py", line 145, in __init__

classes, class_to_idx = self.find_classes(self.root)

File "/usr/local/lib/python3.7/dist-packages/torchvision/datasets/folder.py", line 219, in find_classes

return find_classes(directory)

File "/usr/local/lib/python3.7/dist-packages/torchvision/datasets/folder.py", line 43, in find_classes

raise FileNotFoundError(f"Couldn't find any class folder in {directory}.")

FileNotFoundError: Couldn't find any class folder in /content/train2014.

How can I fix it?

thx

|

https://github.com/pytorch/examples/issues/1039

|

open

|

[

"bug",

"data"

] | 2022-08-23T07:33:17Z

| 2023-06-08T03:09:42Z

| 2

|

sevaroy

|

huggingface/diffusers

| 228

|

stable-diffusion-v1-4 link in release v0.2.3 is broken

|

### Describe the bug

@anton-l the link (https://huggingface.co/CompVis/stable-diffusion-v1-4) in the [release v0.2.3](https://github.com/huggingface/diffusers/releases/tag/v0.2.3) returns a 404.

### Reproduction

_No response_

### Logs

_No response_

### System Info

```shell

N/A

```

|

https://github.com/huggingface/diffusers/issues/228

|

closed

|

[

"question"

] | 2022-08-22T09:07:27Z

| 2022-08-22T20:53:00Z

| null |

leszekhanusz

|

huggingface/pytorch-image-models

| 1,424

|

[FEATURE] What hyperparameters is used to get the results stated in the paper with the ViT-B pretrained miil weights on imagenet1k?

|

**Is your feature request related to a problem? Please describe.**

What hyperparameters are used to get the results stated in this paper (https://arxiv.org/pdf/2104.10972.pdf) on ImageNet1k with the ViT-B pretrained miil weights from vision_transformer.py in line 164-167? I tried the hyperparemeters as stated in the paper for TResNet but I'm getting below average results. I'm not sure what other hyperparameter details i'm missing. How is the classifier head initialized? Do they use sgd momentum or without momentum? Do they use Hflip or random erasing? I think the hyperparameters stated in the paper is only applicable for TResNet and the code in https://github.com/Alibaba-MIIL/ImageNet21K is missing a lot of details in finetuning stage.

|

https://github.com/huggingface/pytorch-image-models/issues/1424

|

closed

|

[

"enhancement"

] | 2022-08-21T22:26:48Z

| 2022-08-22T04:17:43Z

| null |

Phuoc-Hoan-Le

|

pytorch/functorch

| 1,006

|

RuntimeError: CUDA error: no kernel image is available for execution on the device

|

Hi, I have cuda 11.7 on my system and I am trying to install functorch, since the stable version of pytorch for cuda 11.7 is not available [here](https://pytorch.org/get-started/previous-versions/), I just run `pip install functorch` which also installs the compatible version of pytorch.

But when I run my code that uses the GPU, I get the following error :

`RuntimeError: CUDA error: no kernel image is available for execution on the device`

Is it possible to use functorch in my case?

|

https://github.com/pytorch/functorch/issues/1006

|

closed

|

[] | 2022-08-21T19:30:34Z

| 2022-08-24T13:58:45Z

| 8

|

ykemiche

|

pytorch/TensorRT

| 1,295

|

Jetpack 5.0.2

|

## ❓ Question

Is it known yet whether Torch TensorRT is compatible with NVIDIA Jetpack 5.0.2 on NVIDIA Jetson devices?

## What you have already tried

I am trying to install torch-tensorrt for Python on my Jetson Xavier NX with Jetpack 5.0.2. Followed the instructions for the Jetpack 5.0 install and have successfully run everything up until ```python3 setup.py install --use-cxx11-abi``` which ran all the way until it got to “Allowing ninja to set a default number of workers” which it hung on for quite some time until eventually erroring out with the output listed below. Any advice would be much appreciated.

## Environment

> Build information about Torch-TensorRT can be found by turning on debug messages

- PyTorch Version (e.g., 1.0): 1.13.0a0+08820cb0.nv22.07

- CPU Architecture: aarch64

- OS (e.g., Linux): Jetson Linux (Ubuntu)

- How you installed PyTorch (`conda`, `pip`, `libtorch`, source): pip

- Build command you used (if compiling from source):

- Are you using local sources or building from archives: Honestly don't know the difference

- Python version: 3.8.10

- CUDA version: 11.4

- GPU models and configuration: Jetson Xavier NX

- Any other relevant information:

## Additional context

```

Allowing ninja to set a default number of workers... (overridable by setting the environment variable MAX_JOBS=N)