| repo (string, 147 values) | number (int64, 1 to 172k) | title (string, 2 to 476 chars) | body (string, 0 to 5k chars) | url (string, 39 to 70 chars) | state (string, 2 values) | labels (list, 0 to 9 items) | created_at (timestamp UTC, 2017-01-18 18:50:08 to 2026-01-06 07:33:18) | updated_at (timestamp UTC, 2017-01-18 19:20:07 to 2026-01-06 08:03:39) | comments (int64, 0 to 58, nullable) | user (string, 2 to 28 chars) |
|---|---|---|---|---|---|---|---|---|---|---|

huggingface/dataset-viewer

| 455

|

what to do with /is-valid?

|

Currently, the endpoint /is-valid is not documented in https://redocly.github.io/redoc/?url=https://datasets-server.huggingface.co/openapi.json (but it is in https://github.com/huggingface/datasets-server/blob/main/services/api/README.md).

It's not used in the dataset viewer in moonlanding, but https://github.com/huggingface/model-evaluator uses it (cc @lewtun).

I have the impression that we could change this endpoint to something more precise, since "valid" is a bit loose and will become less and less precise as other services are added to the dataset server (statistics, random access, parquet files, etc.). Instead, maybe we could create a new endpoint with more details about which services are working for the dataset. Or do we consider a dataset valid if all the services are available?

What should we do?

- [ ] keep it this way

- [ ] create a new endpoint with details of the available services

also cc @lhoestq
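To make the second option concrete, here is a minimal sketch of what a per-service response could look like. The service names, the `ServiceStatus` type and the `build_validity_report` helper are hypothetical (not part of the datasets-server API); the sketch only shows how the old boolean could survive as a derived field under either policy ("any service works" or "all services work").

```python
from dataclasses import dataclass
from typing import Dict, List, Union


@dataclass
class ServiceStatus:
    """Hypothetical availability flag for one dataset server service."""

    name: str
    available: bool


def build_validity_report(
    statuses: List[ServiceStatus], require_all: bool = False
) -> Dict[str, Union[bool, Dict[str, bool]]]:
    """Return per-service details plus a derived, backward-compatible `valid` flag.

    require_all=False: valid if at least one service works for the dataset.
    require_all=True: valid only if every listed service works.
    A dataset with no known services is never reported as valid.
    """
    services = {s.name: s.available for s in statuses}
    flags = list(services.values())
    valid = bool(flags) and (all(flags) if require_all else any(flags))
    return {"valid": valid, "services": services}


report = build_validity_report(
    [ServiceStatus("statistics", True), ServiceStatus("parquet", False)]
)
print(report)  # {'valid': True, 'services': {'statistics': True, 'parquet': False}}
```

Keeping `valid` next to the detailed `services` map would let existing clients such as model-evaluator keep working while new clients read the per-service flags.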

|

https://github.com/huggingface/dataset-viewer/issues/455

|

closed

|

[

"question"

] | 2022-07-22T19:29:08Z

| 2022-08-02T14:16:24Z

| null |

severo

|

pytorch/torchx

| 567

|

[exploratory] TorchX Dashboard

|

## Description

<!-- concise description of the feature/enhancement -->

Add a new `torchx dashboard` command that will launch a local HTTP server that allows users to view all of their jobs with statuses, logs and integration with any ML specific extras such as artifacts, Tensorboard, etc.

## Motivation/Background

<!-- why is this feature/enhancement important? provide background context -->

Currently TorchX can only be used programmatically or via the CLI. It would also be nice to have a UI dashboard that could be used to monitor all of your jobs as well as support deeper integrations such as experiment tracking and metrics.

Right now, users who want a UI have to use their platform-specific one (e.g. the AWS Batch or Ray dashboard), and many platforms don't have one (Slurm, Volcano).

## Detailed Proposal

<!-- provide a detailed proposal -->

This would be a fairly simple interface built on top of something such as Flask (https://flask.palletsprojects.com/en/2.1.x/quickstart/).

Pages:

* `/` the main page with a list of all of the user's jobs and filters

* `/<scheduler>/<jobid>` an overview of the job, the job def and the status with a tab for logs, artifacts and any other URLs that are logged

* `/<scheduler>/<jobid>/logs` - view the logs

* `/<scheduler>/<jobid>/external/<metadata key>` - iframes for external services such as TensorBoard
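The page layout above can be prototyped before committing to Flask. The sketch below is a hypothetical, standard-library-only router (no Flask and no TorchX calls) that maps the proposed URL patterns to page names and extracts `scheduler`, `jobid` and the metadata key; a real implementation would register the same patterns as Flask routes. It assumes job IDs contain no `/`.

```python
import re
from typing import Dict, Optional, Tuple

# Proposed dashboard URL patterns. Page names are hypothetical labels
# for the views described in the proposal.
ROUTES = [
    (re.compile(r"^/$"), "job_list"),
    (re.compile(r"^/(?P<scheduler>[^/]+)/(?P<jobid>[^/]+)/logs$"), "job_logs"),
    (
        re.compile(r"^/(?P<scheduler>[^/]+)/(?P<jobid>[^/]+)/external/(?P<key>[^/]+)$"),
        "job_external",
    ),
    (re.compile(r"^/(?P<scheduler>[^/]+)/(?P<jobid>[^/]+)$"), "job_overview"),
]


def resolve(path: str) -> Optional[Tuple[str, Dict[str, str]]]:
    """Return (page name, URL parameters) for a path, or None if nothing matches."""
    for pattern, page in ROUTES:
        match = pattern.match(path)
        if match:
            return page, match.groupdict()
    return None


print(resolve("/slurm/42/logs"))  # ('job_logs', {'scheduler': 'slurm', 'jobid': '42'})
```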

## Alternatives

<!-- discuss the alternatives considered and their pros/cons -->

Providing a way to view URLs for external services via the terminal.

## Additional context/links

<!-- link to code, documentation, etc. -->

* https://docs.ray.io/en/latest/ray-core/ray-dashboard.html#logical-view

|

https://github.com/meta-pytorch/torchx/issues/567

|

open

|

[

"enhancement",

"RFC",

"cli"

] | 2022-07-22T19:28:51Z

| 2022-08-02T21:23:14Z

| 1

|

d4l3k

|

pytorch/torchx

| 566

|

add a TORCHX_JOB_ID environment variable to all jobs launched via runner

|

## Description

<!-- concise description of the feature/enhancement -->

As part of future experiment tracking work, we want the application to be able to know its own identity. When we launch a job we return the full job ID (e.g. `kubernetes://session/app_id`), but the app itself doesn't have this exact job ID. We do provide an `app_id` macro that can be used in the app def for both env and arguments, but it's up to the app owner to add it manually.

## Motivation/Background

<!-- why is this feature/enhancement important? provide background context -->

Adding a `TORCHX_JOB_ID` environment variable lets us write more standardized integrations for experiment tracking that use the job ID as a key. An extra environment variable adds no cost and will enable deeper automatic integrations with other libraries.

## Detailed Proposal

<!-- provide a detailed proposal -->

Add a new environment variable in `Runner.dryrun`

https://github.com/pytorch/torchx/blob/main/torchx/runner/api.py#L241

that uses `macros.app_id` to add the full job ID, using the scheduler and session information from the runner.

https://github.com/pytorch/torchx/blob/main/torchx/specs/api.py#L156
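Inside the job, an integration could then read the variable and split it back into its parts. This is only a sketch: `TORCHX_JOB_ID` is the variable proposed here (it does not exist yet), and `parse_job_id` / `current_job_id` are hypothetical helpers that assume the `scheduler://session/app_id` shape from the example above.

```python
import os
from typing import NamedTuple, Optional


class JobId(NamedTuple):
    scheduler: str
    session: str
    app_id: str


def parse_job_id(job_id: str) -> JobId:
    """Split 'scheduler://session/app_id' into its three parts.

    str.partition is used instead of urllib.parse because scheduler names such
    as 'local_cwd' contain underscores, which are not valid URL scheme characters.
    """
    scheduler, sep, rest = job_id.partition("://")
    session, _, app_id = rest.partition("/")
    if not (scheduler and sep and app_id):
        raise ValueError(f"not a full job ID: {job_id!r}")
    return JobId(scheduler, session, app_id)


def current_job_id() -> Optional[JobId]:
    """Return this job's own ID if the proposed TORCHX_JOB_ID variable is set."""
    value = os.environ.get("TORCHX_JOB_ID")
    return parse_job_id(value) if value else None


print(parse_job_id("kubernetes://session/app_id"))
# JobId(scheduler='kubernetes', session='session', app_id='app_id')
```

An experiment tracker could then use `current_job_id()` as its run key without the app owner wiring up the `app_id` macro by hand.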

## Alternatives

<!-- discuss the alternatives considered and their pros/cons -->

## Additional context/links

<!-- link to code, documentation, etc. -->

|

https://github.com/meta-pytorch/torchx/issues/566

|

open

|

[

"enhancement",

"module: runner",

"tracking"

] | 2022-07-22T18:22:24Z

| 2022-07-22T21:28:02Z

| 0

|

d4l3k

|

pytorch/functorch

| 979

|

ImportError: ~/.local/lib/python3.9/site-packages/functorch/_C.so: undefined symbol: _ZNK3c1010TensorImpl16sym_sizes_customEv

|

Hi All,

I was running an older version of PyTorch ( - built from source) with FuncTorch ( - built from source), and somehow I've broken the older version of functorch. When I import functorch I get the following error,

```

import functorch

#returns ImportError: ~/.local/lib/python3.9/site-packages/functorch/_C.so: undefined symbol: _ZNK3c1010TensorImpl16sym_sizes_customEv

```

The version of `functorch` I had was `0.2.0a0+9d6ee76`. Is there a way to reinstall it to fix this ImportError? I do have the latest version of PyTorch/FuncTorch in a separate conda environment, but I wanted to check how it compares to the older version in this 'older' conda environment, where PyTorch and FuncTorch were versions 1.12.0a0+git7c2103a and 0.2.0a0+9d6ee76 respectively.

Is there a way to install a specific version of `functorch` from `https://github.com/pytorch/functorch.git`? Or another way to fix this issue?

|

https://github.com/pytorch/functorch/issues/979

|

closed

|

[] | 2022-07-22T14:51:13Z

| 2022-07-25T19:22:04Z

| 24

|

AlphaBetaGamma96

|

huggingface/datasets

| 4,736

|

Dataset Viewer issue for deepklarity/huggingface-spaces-dataset

|

### Link

https://huggingface.co/datasets/deepklarity/huggingface-spaces-dataset/viewer/deepklarity--huggingface-spaces-dataset/train

### Description

Hi Team,

I'm getting the following error on an uploaded dataset, and the status has stayed the same for a couple of hours now. The dataset is `<1MB` and in CSV format, so I'm not sure whether it's supposed to take this long.

```

Status code: 400

Exception: Status400Error

Message: The split is being processed. Retry later.

```

Is there any explicit step to be taken to get the viewer to work?

### Owner

Yes

|

https://github.com/huggingface/datasets/issues/4736

|

closed

|

[

"dataset-viewer"

] | 2022-07-22T12:14:18Z

| 2022-07-22T13:46:38Z

| 1

|

dk-crazydiv

|

pytorch/TensorRT

| 1,199

|

Can't import torch_tensorrt

|

ERROR:

```
from torch.fx.passes.pass_manager import PassManager
ModuleNotFoundError: No module named 'torch.fx.passes.pass_manager'
```

- PyTorch Version: 1.11

- CPU Architecture: Jetson AGX Xavier

- OS (e.g., Linux):

- How you installed PyTorch: NVIDIA forum wheel

- Build command you used (if compiling from source):

- Are you using local sources or building from archives:

- Python version: 3.8

- CUDA version: 11.4

|

https://github.com/pytorch/TensorRT/issues/1199

|

closed

|

[

"question",

"channel: linux-jetpack",

"component: fx"

] | 2022-07-22T08:00:34Z

| 2022-09-02T18:04:29Z

| null |

sanath-tech

|

huggingface/datasets

| 4,732

|

Document better that loading a dataset passing its name does not use the local script

|

As reported by @TrentBrick here https://github.com/huggingface/datasets/issues/4725#issuecomment-1191858596, it could be made clearer that loading a dataset by passing its name does not use its (modified) local script.

What he did:

- he installed `datasets` from source

- he modified the `datasets/the_pile/the_pile.py` loading script locally

- he tried to load it using `load_dataset("the_pile")` instead of `load_dataset("datasets/the_pile")`

- as explained here https://github.com/huggingface/datasets/issues/4725#issuecomment-1191040245:

  - the former does not use the local script; instead, it downloads a copy of `the_pile.py` from our GitHub, caches it locally (inside `~/.cache/huggingface/modules`) and uses that.

He suggests adding a clearer explanation of this, perhaps in [Installation > source](https://huggingface.co/docs/datasets/installation).

CC: @stevhliu

|

https://github.com/huggingface/datasets/issues/4732

|

closed

|

[

"documentation"

] | 2022-07-22T06:07:31Z

| 2022-08-23T16:32:23Z

| 3

|

albertvillanova

|

pytorch/TensorRT

| 1,198

|

❓ [Question] Where can we get VGG-16 checkpoint pretrained on CIFAR-10 ?

|

## ❓ Question

To get $pwd/vgg16_ckpts/ckpt_epoch110.pth, I tried to run the script [python3 finetune_qat.py](https://github.com/pytorch/TensorRT/tree/v1.1.1/examples/int8/training/vgg16#quantization-aware-fine-tuning-for-trying-out-qat-workflows).

However, the script needs a VGG-16 model pretrained for 100 epochs, as shown here:

```bash

Loading from checkpoint $(PATH_TOTensorRT)/examples/int8/training/vgg16/vgg16_ckpts/ckpt_epoch100.pth

```

So where can we download the epoch-100 checkpoint?

I was not able to download it from any other site either.

|

https://github.com/pytorch/TensorRT/issues/1198

|

closed

|

[

"question"

] | 2022-07-22T05:06:34Z

| 2022-07-22T05:13:32Z

| null |

zinuok

|

pytorch/TensorRT

| 1,197

|

❓ [Question] Where can we get 'trained_vgg16_qat.jit.pt' ?

|

## ❓ Question

Where can we get 'trained_vgg16_qat.jit.pt'?

The link in [test_qat_trt_accuracy.py](https://github.com/pytorch/TensorRT/blob/master/tests/py/test_qat_trt_accuracy.py#L74) no longer works.

|

https://github.com/pytorch/TensorRT/issues/1197

|

closed

|

[

"question"

] | 2022-07-22T04:38:53Z

| 2022-07-22T04:46:46Z

| null |

zinuok

|

pytorch/serve

| 1,753

|

How to return the predictions in JSON format (as a JSON string with a JSON header)?

|

I am using TorchServe for a production service. I was able to return the predictions as a JSON string, but I was unable to get a response with a JSON header.

|

https://github.com/pytorch/serve/issues/1753

|

closed

|

[

"triaged_wait",

"support"

] | 2022-07-22T04:04:26Z

| 2022-07-24T16:50:32Z

| null |

Vincentwei1021

|

pytorch/functorch

| 977

|

Hessian (w.r.t inputs) calculation in PyTorch differs from FuncTorch

|

Hi All,

I've been trying to calculate the Hessian of the output of my network with respect to its inputs within FuncTorch. I had a version within PyTorch that supports batches; however, the two disagree and I have no idea why they don't give the same results. Something is clearly wrong: I know my PyTorch version is right, so either there's an issue in my version of FuncTorch or I've implemented it wrong in FuncTorch.

Also, how can I use the `has_aux` flag in `jacrev` to return the jacobian from the first `jacrev` so I don't have to repeat the jacobian calculation?

The only problem with my example is that it uses `torch.linalg.slogdet`, and from what I remember FuncTorch can't vmap over `.item()`. I do have my own fork of PyTorch where I edited the backward to remove the `.item()` call so it works with vmap, although it's not the greatest implementation, as I just set it to the default `nonsingular_case_backward` like so:

```cpp
Tensor slogdet_backward(const Tensor& grad_logabsdet,
                        const Tensor& self,
                        const Tensor& signdet, const Tensor& logabsdet) {
  auto singular_case_backward = [&](const Tensor& grad_logabsdet, const Tensor& self) -> Tensor {
    Tensor u, sigma, vh;
    std::tie(u, sigma, vh) = at::linalg_svd(self, false);
    Tensor v = vh.mH();
    // sigma has all non-negative entries (also with at least one zero entry)
    // so logabsdet = \sum log(abs(sigma))
    // but det = 0, so backward logabsdet = \sum log(sigma)
    auto gsigma = grad_logabsdet.unsqueeze(-1).div(sigma);
    return svd_backward({}, gsigma, {}, u, sigma, vh);
  };

  auto nonsingular_case_backward = [&](const Tensor& grad_logabsdet, const Tensor& self) -> Tensor {
    // TODO: replace self.inverse with linalg_inverse
    return unsqueeze_multiple(grad_logabsdet, {-1, -2}, self.dim()) * self.inverse().mH();
  };

  auto nonsingular = nonsingular_case_backward(grad_logabsdet, self);
  return nonsingular;
}
```

My 'minimal' reproducible script is below with the output shown below that. It computes the Laplacian via a PyTorch method and via FuncTorch for a single sample of size `[A,1]` where `A` is the number of input nodes to the network.
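The log-domain identity in the `kinetic_pytorch` docstring can be checked by writing $f = \operatorname{sign}(f)\,e^{g}$ with $g = \log|f|$, which holds wherever $f \neq 0$ (so the sign is locally constant). Differentiating twice with respect to each input $x_i$:

$$
\frac{\partial f}{\partial x_i} = f\,\frac{\partial g}{\partial x_i},
\qquad
\frac{\partial^2 f}{\partial x_i^2} = f\left[\frac{\partial^2 g}{\partial x_i^2} + \left(\frac{\partial g}{\partial x_i}\right)^2\right],
$$

so the local kinetic energy that `ek_local_per_walker` evaluates is

$$
E_{\mathrm{kin}} = -\frac{1}{2}\sum_i \frac{1}{f}\frac{\partial^2 f}{\partial x_i^2}
= -\frac{1}{2}\sum_i \left[\frac{\partial^2 g}{\partial x_i^2} + \left(\frac{\partial g}{\partial x_i}\right)^2\right].
$$

Only the diagonal of the Hessian of $g$ enters the first term (its trace), so the full output of `jacrev(jacrev(logabs, argnums=1), argnums=1)` has to be reduced to that trace, and the squared gradient added, before it is comparable with the PyTorch result.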

```

import torch
import torch.nn as nn
from torch import Tensor
import functorch
from functorch import jacrev, jacfwd, hessian, make_functional, vmap
import time

_ = torch.manual_seed(0)

print("PyTorch version: ", torch.__version__)
print("CUDA version: ", torch.version.cuda)
print("FuncTorch version: ", functorch.__version__)


def sync_time() -> float:
    torch.cuda.synchronize()
    return time.perf_counter()


B=1 #batch
A=3 #input nodes

device=torch.device("cuda")


class model(nn.Module):

    def __init__(self, num_inputs, num_hidden):
        super(model, self).__init__()
        self.num_inputs=num_inputs
        self.func = nn.Tanh()
        self.fc1 = nn.Linear(2, num_hidden)
        self.fc2 = nn.Linear(num_hidden, num_inputs)

    def forward(self, x):
        """
        Takes x in [B,A,1] and maps it to sign/logabsdet value in Tuple([B,], [B,])
        """
        idx=len(x.shape)
        rep=[1 for _ in range(idx)]
        rep[-2] = self.num_inputs
        g = x.mean(dim=(idx-2), keepdim=True).repeat(*rep)
        f = torch.cat((x,g), dim=-1)
        h = self.func(self.fc1(f))
        mat = self.fc2(h)
        sgn, logabs = torch.linalg.slogdet(mat)
        return sgn, logabs


net = model(A, 64)
net = net.to(device)

fnet, params = make_functional(net)


def logabs(params, x):
    _, logabs = fnet(params, x)
    #print("functorch logabs: ",logabs)
    return logabs


def kinetic_pytorch(xs: Tensor) -> Tensor:
    """Method to calculate the local kinetic energy values of a network function, f, for samples, x.
    The values calculated here are 1/f d2f/dx2 which is equivalent to d2log(|f|)/dx2 + (dlog(|f|)/dx)^2
    within the log-domain (rather than the linear-domain).

    :param xs: The input positions of the many-body particles
    :type xs: class: `torch.Tensor`
    """
    xis = [xi.requires_grad_() for xi in xs.flatten(start_dim=1).t()]
    xs_flat = torch.stack(xis, dim=1)
    _, ys = net(xs_flat.view_as(xs))
    #print("pytorch logabs: ",ys)
    ones = torch.ones_like(ys)
    #df_dx calculation
    (dy_dxs, ) = torch.autograd.grad(ys, xs_flat, ones, retain_graph=True, create_graph=True)
    #d2f_dx2 calculation (diagonal only)
    lay_ys = sum(torch.autograd.grad(dy_dxi, xi, ones, retain_graph=True, create_graph=False)[0] \
                 for xi, dy_dxi in zip(xis, (dy_dxs[..., i] for i in range(len(xis))))
                 )
    #print("(PyTorch): ",lay_ys, dy_dxs)
    ek_local_per_walker = -0.5 * (lay_ys + dy_dxs.pow(2).sum(-1)) #move const out of loop?
    return ek_local_per_walker


jacjaclogabs = jacrev(jacrev(logabs, argnums=1), argnums=1)
jaclogabs = jacrev(logabs, argnums=1)


def kinetic_functorch(params, x):
    d2f_dx2 = vmap(jacjaclogabs, in_dims=(None, 0))(par
```

|

https://github.com/pytorch/functorch/issues/977

|

closed

|

[] | 2022-07-21T12:11:09Z

| 2022-08-01T19:37:18Z

| 18

|

AlphaBetaGamma96

|

pytorch/benchmark

| 1,046

|

How to add a new backend?

|

Hello, I want to add a new backend to run the benchmark **without** modifying this repo's code. In the torchdynamo repo, I use the @create_backend decorator to do this, but I can't find a suitable interface in this repo.

|

https://github.com/pytorch/benchmark/issues/1046

|

closed

|

[] | 2022-07-20T08:45:36Z

| 2022-07-27T22:47:49Z

| null |

zzpmiracle

|

huggingface/datasets

| 4,719

|

Issue loading TheNoob3131/mosquito-data dataset

|

My dataset is public on the Hugging Face Hub, but when I try to load it with the load_dataset command, it shows that it is downloading the files and then throws a ValueError. When I went to my directory to check whether the files had been downloaded, the folder was empty.

Here is the error:

```
ValueError                                Traceback (most recent call last)
Input In [8], in <cell line: 3>()
      1 from datasets import load_dataset
----> 3 dataset = load_dataset("TheNoob3131/mosquito-data", split="train")

File ~\Anaconda3\lib\site-packages\datasets\load.py:1679, in load_dataset(path, name, data_dir, data_files, split, cache_dir, features, download_config, download_mode, ignore_verifications, keep_in_memory, save_infos, revision, use_auth_token, task, streaming, **config_kwargs)
   1676 try_from_hf_gcs = path not in _PACKAGED_DATASETS_MODULES
   1678 # Download and prepare data
-> 1679 builder_instance.download_and_prepare(
   1680     download_config=download_config,
   1681     download_mode=download_mode,
   1682     ignore_verifications=ignore_verifications,
   1683     try_from_hf_gcs=try_from_hf_gcs,
   1684     use_auth_token=use_auth_token,
   1685 )
   1687 # Build dataset for splits
   1688 keep_in_memory = (
   1689     keep_in_memory if keep_in_memory is not None else is_small_dataset(builder_instance.info.dataset_size)
   1690 )
```

Is the dataset in the wrong format or is there some security permission that I should enable?

|

https://github.com/huggingface/datasets/issues/4719

|

closed

|

[] | 2022-07-19T17:47:37Z

| 2022-07-20T06:46:57Z

| 2

|

thenerd31

|

pytorch/TensorRT

| 1,189

|

❓ [Question] Why has the GPU memory doubled when I load a Torch-TensorRT model in PyTorch?

|

## ❓ Question

<!-- Your question -->

When I use PyTorch to load a model from a Torch-TensorRT file (`torch.jit.load(*.ts)`), the GPU memory used by the model doubles (from 1602 MB to 3242 MB according to nvidia-smi). In both cases, gradients are not included for the model tensors. My concern is that PyTorch's existing context memory is not reused, and a new context is created instead.

## Environment

> Build information about Torch-TensorRT can be found by turning on debug messages

- PyTorch Version (e.g., 1.0): 1.10.0

- OS (e.g., Linux): Linux

- How you installed PyTorch (`conda`, `pip`, `libtorch`, source): pip

- Python version: 3.7

- CUDA version: 11.2

- Any other relevant information: torch-tensorrt version: 1.1.0

- NVIDIA GPU: Tesla V100

## Additional context

```python
import torch
import torch_tensorrt

# memory is 1.6G
a= torch.randn()
a= torch.randn([1,1,224,224])
a.cuda()

# memory become 3.2G
model = torch.jit.load()
```

|

https://github.com/pytorch/TensorRT/issues/1189

|

closed

|

[

"question",

"No Activity",

"performance"

] | 2022-07-19T10:21:14Z

| 2023-03-26T00:02:18Z

| null |

Jancapcc

|

huggingface/datasets

| 4,711

|

Document how to create a dataset loading script for audio/vision

|

Currently, in our docs for Audio/Vision/Text, we explain how to:

- Load data

- Process data

However, we only explain how to *Create a dataset loading script* for text data.

I think it would be useful to add the same for Audio/Vision, as these have some specifics that differ from Text.

See, for example:

- #4697

- and comment there: https://github.com/huggingface/datasets/issues/4697#issuecomment-1191502492

CC: @stevhliu

|

https://github.com/huggingface/datasets/issues/4711

|

closed

|

[

"documentation"

] | 2022-07-19T08:03:40Z

| 2023-07-25T16:07:52Z

| 1

|

albertvillanova

|

huggingface/optimum

| 306

|

`ORTModelForConditionalGeneration` does not have a `generate()` method after converting from `T5ForConditionalGeneration`

|

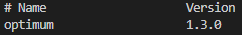

### System Info

```shell
Machine: Apple M1 Pro
Optimum version: 1.3.0
Transformers version: 4.20.1
Onnxruntime version: 1.11.1
```

### Question

How do I run inference with a quantized ONNX model using the `ORTModelForConditionalGeneration` class (I previously used `T5ForConditionalGeneration`)? I've successfully converted a `T5ForConditionalGeneration` PyTorch model to ONNX and then quantized it, but I don't know why `model.generate` is not found on the `ORTModelForConditionalGeneration` model. How can I run inference?

For context, this is a text-to-text generation task: generating a paraphrase from a sentence.

### Who can help?

_No response_

### Information

- [X] The official example scripts

- [X] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

Sample code:

```

import os

from optimum.onnxruntime.modeling_seq2seq import ORTModelForConditionalGeneration

from transformers import T5ForConditionalGeneration,T5Tokenizer

save_directory = "onnx/"

file_name = "model.onnx"

onnx_path = os.path.join(save_directory, "model.onnx")

# Load a model from transformers and export it through the ONNX format

# model_raw = T5ForConditionalGeneration.from_pretrained(f'model_{version}/t5_keyword')

model = ORTModelForConditionalGeneration.from_pretrained(f'model_{version}/t5_keyword', from_transformers=True)

tokenizer = T5Tokenizer.from_pretrained(f'model_{version}/t5_keyword')

# Save the onnx model and tokenizer

model.save_pretrained(save_directory, file_name=file_name)

tokenizer.save_pretrained(save_directory)

```

Quantization code:

```
from optimum.onnxruntime.configuration import AutoQuantizationConfig
from optimum.onnxruntime import ORTQuantizer

# Define the quantization methodology
qconfig = AutoQuantizationConfig.arm64(is_static=False, per_channel=False)
quantizer = ORTQuantizer.from_pretrained(f'model_{version}/t5_keyword', feature="seq2seq-lm")

# Apply dynamic quantization on the model
quantizer.export(
    onnx_model_path=onnx_path,
    onnx_quantized_model_output_path=os.path.join(save_directory, "model-quantized.onnx"),
    quantization_config=qconfig,
)
```

Reader:

```

from optimum.onnxruntime.modeling_seq2seq import ORTModelForConditionalGeneration

from transformers import pipeline, AutoTokenizer

model = ORTModelForConditionalGeneration.from_pretrained(save_directory, file_name="model-quantized.onnx")

tokenizer = AutoTokenizer.from_pretrained(save_directory)

```

Error when:

```
text = "Hotelnya bagus sekali"
encoding = tokenizer.encode_plus(text, padding=True, return_tensors="pt")
input_ids, attention_masks = encoding["input_ids"], encoding["attention_mask"]

beam_outputs = model.generate(
    input_ids=input_ids,
    attention_mask=attention_masks,
)
```

`AttributeError: 'ORTModelForConditionalGeneration' object has no attribute 'generate'`

### Expected behavior

Being able to predict with `generate`, the same way as with the original T5 class.

|

https://github.com/huggingface/optimum/issues/306

|

closed

|

[

"bug"

] | 2022-07-19T07:14:48Z

| 2022-07-19T09:29:09Z

| 2

|

tiketdatailham

|

pytorch/TensorRT

| 1,188

|

❓ [Question] Cannot install torch-tensorrt package

|

Hi! Can someone explain why this error occurs?

```shell

(tf-gpu-11.6) C:\Users\myxzlpltk>pip install torch-tensorrt -f https://github.com/NVIDIA/Torch-TensorRT/releases

Looking in links: https://github.com/NVIDIA/Torch-TensorRT/releases

Collecting torch-tensorrt

Using cached torch-tensorrt-0.0.0.post1.tar.gz (9.0 kB)

Preparing metadata (setup.py) ... error

error: subprocess-exited-with-error

× python setup.py egg_info did not run successfully.

│ exit code: 1

╰─> [13 lines of output]

Traceback (most recent call last):

File "<string>", line 2, in <module>

File "<pip-setuptools-caller>", line 34, in <module>

File "C:\Users\myxzlpltk\AppData\Local\Temp\pip-install-t86xj3rx\torch-tensorrt_a472ada85c9e492d8f4d7d614046053d\setup.py", line 125, in <module>

raise RuntimeError(open("ERROR.txt", "r").read())

RuntimeError:

###########################################################################################

The package you are trying to install is only a placeholder project on PyPI.org repository.

To install Torch-TensorRT please run the following command:

$ pip install torch-tensorrt -f https://github.com/NVIDIA/Torch-TensorRT/releases

###########################################################################################

[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.

error: metadata-generation-failed

× Encountered error while generating package metadata.

╰─> See above for output.

note: This is an issue with the package mentioned above, not pip.

hint: See above for details.

```

|

https://github.com/pytorch/TensorRT/issues/1188

|

closed

|

[

"question",

"channel: windows"

] | 2022-07-19T01:48:13Z

| 2024-02-26T17:16:23Z

| null |

myxzlpltk

|

pytorch/TensorRT

| 1,186

|

❓ [Question] Python Package for V1.1.1 Release?

|

## ❓ Question

Does the latest release include the python package for supporting JP5.0 too?

- PyTorch Version (e.g., 1.0): 1.11

- CPU Architecture: Arm64

- Python version: 3.8

- CUDA version: 11.4

|

https://github.com/pytorch/TensorRT/issues/1186

|

closed

|

[

"question",

"release: patch",

"channel: linux-jetpack"

] | 2022-07-18T15:20:13Z

| 2022-07-18T21:47:06Z

| null |

haichuanwang001

|

huggingface/datasets

| 4,694

|

Distributed data parallel training for streaming datasets

|

### Feature request

Is there any documentation for using `load_dataset(streaming=True)` with (multi-node, multi-GPU) DDP training?

### Motivation

Given a set of data files, we would expect them to be split across the different GPUs. Is there a guide or documentation for this?

### Your contribution

Does it require manually splitting the data files for each worker in `DatasetBuilder._split_generators()`? What is `IterableDatasetShard` expected to do?
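A common approach, sketched here under the assumption that every rank sees the same list of data files, is to give each rank a disjoint, round-robin subset of the files before streaming them. `shard_files` is a hypothetical helper, not a `datasets` API; newer `datasets` releases also provide `datasets.distributed.split_dataset_by_node`, which is worth checking before rolling your own.

```python
from typing import List, Sequence


def shard_files(files: Sequence[str], rank: int, world_size: int) -> List[str]:
    """Return the files this rank should stream, assigned round-robin.

    Every file goes to exactly one rank, so no file is read twice per epoch.
    If len(files) is not divisible by world_size, some ranks get one extra
    file, which can make ranks finish at different times under DDP.
    """
    if world_size < 1 or not 0 <= rank < world_size:
        raise ValueError(f"invalid rank {rank} for world_size {world_size}")
    # Sort so every rank derives the same assignment from the same file list.
    ordered = sorted(files)
    return ordered[rank::world_size]


files = [f"part-{i}.jsonl" for i in range(5)]
print(shard_files(files, rank=1, world_size=2))  # ['part-1.jsonl', 'part-3.jsonl']
```

Each process could then pass its own shard to something like `load_dataset("json", data_files=shard_files(all_files, rank, world_size), streaming=True)`, with `rank` and `world_size` taken from `torch.distributed`.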

|

https://github.com/huggingface/datasets/issues/4694

|

open

|

[

"enhancement"

] | 2022-07-17T01:29:43Z

| 2023-04-26T18:21:09Z

| 6

|

cyk1337

|

pytorch/data

| 661

|

DataLoader2 with reading service

|

For the user dev and onboarding experience of the data component, we will provide examples, tutorials and up-to-date documentation, as well as operational support. We have added a simple training-loop example. This issue further tracks adding the use case and an example of DataLoader2 with different reading services.

|

https://github.com/meta-pytorch/data/issues/661

|

closed

|

[

"documentation"

] | 2022-07-15T17:29:41Z

| 2022-11-10T23:07:24Z

| 2

|

dahsh

|

huggingface/datasets

| 4,684

|

How to assign new values to Dataset?

|

Hi, if I want to change some values of the dataset, or add new columns to it, how can I do it?

For example, I want to change all the labels of the SST2 dataset to `0`:

```python

from datasets import load_dataset

data = load_dataset('glue','sst2')

data['train']['label'] = [0]*len(data)

```

I will get the error:

```

TypeError: 'Dataset' object does not support item assignment

```

|

https://github.com/huggingface/datasets/issues/4684

|

closed

|

[

"enhancement"

] | 2022-07-15T04:17:57Z

| 2023-03-20T15:50:41Z

| 2

|

beyondguo

|

pytorch/data

| 655

|

DataLoader2 with OSS datasets/datapipes

|

For the user dev and onboarding experience of the data component, we will provide examples, tutorials and up-to-date documentation, as well as operational support. We have added a simple training-loop example. This issue further tracks adding the use case and an example of DataLoader2 with open source datasets/datapipes.

|

https://github.com/meta-pytorch/data/issues/655

|

closed

|

[] | 2022-07-14T17:51:13Z

| 2022-11-10T23:06:20Z

| 2

|

dahsh

|

huggingface/datasets

| 4,682

|

weird issue/bug with columns (dataset iterable/stream mode)

|

I have a dataset online (CloverSearch/cc-news-mutlilingual) that has a number of columns, two of which are "score_title_maintext" and "score_title_description". The original files are in JSONL format. I was trying to iterate through it in streaming mode and collect all "score_title_description" values, but I kept getting a key-not-found error after a certain point in the iteration. I found that some JSON objects in the files don't have "score_title_description". In SOME cases this returns `None`, and in others it raises a KeyError. Why is there an inconsistency here, and how can I fix it?
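Whatever causes the inconsistency, the iteration itself can be made robust to records that lack the key by reading it with `dict.get`, so a missing field and an explicit `null` both come back as `None`. A minimal sketch on hypothetical records (the real rows would come from the streamed dataset):

```python
from typing import Iterable, Iterator, Mapping, Optional


def collect_field(rows: Iterable[Mapping], key: str) -> Iterator[Optional[float]]:
    """Yield row[key], or None when the key is missing or its value is null."""
    for row in rows:
        yield row.get(key)


rows = [
    {"score_title_description": 0.8},
    {"score_title_description": None},  # key present, value null
    {"score_title_maintext": 0.5},      # key absent
]
print(list(collect_field(rows, "score_title_description")))  # [0.8, None, None]
```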

|

https://github.com/huggingface/datasets/issues/4682

|

open

|

[] | 2022-07-14T13:26:47Z

| 2022-07-14T13:26:47Z

| 0

|

eunseojo

|

pytorch/torchx

| 557

|

How do I run the script and pass script args?

|

## ❓ Questions and Help

How do I run the script and pass the script_args? When I test DLRM with the following command:

```shell

torchx run --scheduler local_cwd --scheduler_args log_dir=/tmp dist.ddp -j 1x2 --script dlrm_main.py --epoch 30

```

### Question

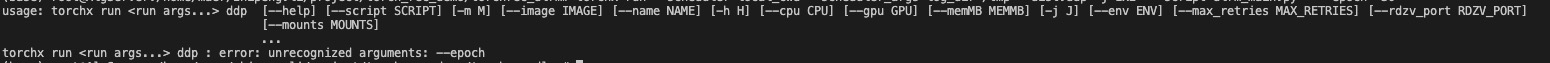

The error is:

```
usage: torchx run <run args...> ddp [--help] [--script SCRIPT] [-m M] [--image IMAGE] [--name NAME] [-h H] [--cpu CPU] [--gpu GPU] [--memMB MEMMB] [-j J] [--env ENV] [--max_retries MAX_RETRIES] [--rdzv_port RDZV_PORT]
                                    [--mounts MOUNTS]
                                    ...
torchx run <run args...> ddp : error: unrecognized arguments: --epoch
```
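The message above is an argparse-style error: the component's parser treats `--epoch` as one of its own options. If `dist.ddp` follows the usual convention of taking trailing script arguments after a `--` separator (worth confirming with `torchx run dist.ddp --help`), the command would end in `--script dlrm_main.py -- --epoch 30`. The toy parser below is plain `argparse`, not TorchX's actual parser, and only illustrates why the separator matters:

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """A toy parser shaped like a component: one option plus pass-through script args."""
    parser = argparse.ArgumentParser(prog="ddp")
    parser.add_argument("--script", required=True)
    # Everything after `--` is treated as positional and collected here untouched.
    parser.add_argument("script_args", nargs="*")
    return parser


args = build_parser().parse_args(["--script", "dlrm_main.py", "--", "--epoch", "30"])
print(args.script, args.script_args)  # dlrm_main.py ['--epoch', '30']

# Without `--`, argparse sees `--epoch` as an unknown option of the parser itself,
# which parse_args() reports as an "unrecognized arguments" error.
_, unknown = build_parser().parse_known_args(["--script", "dlrm_main.py", "--epoch", "30"])
print("--epoch" in unknown)  # True
```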

|

https://github.com/meta-pytorch/torchx/issues/557

|

closed

|

[] | 2022-07-14T08:50:39Z

| 2023-07-03T19:51:50Z

| 3

|

davidxiaozhi

|

pytorch/examples

| 1,022

|

How to build a generator for layout-to-image GANs with images of size 256 and 512

|

Hello, I am new to GANs and I need your help:

Could you please help me make the model accept image sizes of 256x256 and 512x512?

I have included the generator model for 128x128 below:

`import torch

import torch.nn as nn

import torch.nn.functional as F

from math import *

from models.bilinear import crop_bbox_batch

def get_z_random(batch_size, z_dim, random_type='gauss'):

if random_type == 'uni':

z = torch.rand(batch_size, z_dim) * 2.0 - 1.0

elif random_type == 'gauss':

z = torch.randn(batch_size, z_dim)

return z

def transform_z_flat(batch_size, time_step, z_flat, obj_to_img):

# restore z to batch with padding

z = torch.zeros(batch_size, time_step, z_flat.size(1)).to(z_flat.device)

for i in range(batch_size):

idx = (obj_to_img.data == i).nonzero()

if idx.dim() == 0:

continue

idx = idx.view(-1)

n = idx.size(0)

z[i, :n] = z_flat[idx]

return z

class ConditionalBatchNorm2d(nn.Module):

def __init__(self, num_features, num_classes):

super().__init__()

self.num_features = num_features

self.bn = nn.BatchNorm2d(num_features, affine=False)

self.embed = nn.Embedding(num_classes, num_features * 2)

self.embed.weight.data[:, :num_features].normal_(1, 0.02) # Initialise scale at N(1, 0.02)

self.embed.weight.data[:, num_features:].zero_() # Initialise bias at 0

def forward(self, x, y):

out = self.bn(x)

gamma, beta = self.embed(y).chunk(2, 1)

out = gamma.view(-1, self.num_features, 1, 1) * out + beta.view(-1, self.num_features, 1, 1)

return out

class ResidualBlock(nn.Module):

"""Residual Block with instance normalization."""

def __init__(self, dim_in, dim_out):

super(ResidualBlock, self).__init__()

self.main = nn.Sequential(

nn.Conv2d(dim_in, dim_out, kernel_size=3, stride=1, padding=1, bias=False),

nn.BatchNorm2d(dim_out, affine=True, track_running_stats=True),

nn.ReLU(inplace=True),

nn.Conv2d(dim_out, dim_out, kernel_size=3, stride=1, padding=1, bias=False),

nn.BatchNorm2d(dim_out, affine=True, track_running_stats=True))

def forward(self, x):

return x + self.main(x)

class ConvLSTMCell(nn.Module):
    def __init__(self, input_size, input_dim, hidden_dim, kernel_size, bias):
        """
        Initialize ConvLSTM cell.

        Parameters
        ----------
        input_size: (int, int)
            Height and width of input tensor as (height, width).
        input_dim: int
            Number of channels of input tensor.
        hidden_dim: int
            Number of channels of hidden state.
        kernel_size: (int, int)
            Size of the convolutional kernel.
        bias: bool
            Whether or not to add the bias.
        """
        super(ConvLSTMCell, self).__init__()
        self.height, self.width = input_size
        self.input_dim = input_dim
        self.hidden_dim = hidden_dim
        self.kernel_size = kernel_size
        self.padding = kernel_size[0] // 2, kernel_size[1] // 2
        self.bias = bias
        self.conv = nn.Conv2d(in_channels=self.input_dim + self.hidden_dim,
                              out_channels=4 * self.hidden_dim,
                              kernel_size=self.kernel_size,
                              padding=self.padding,
                              bias=self.bias)

    def forward(self, input_tensor, cur_state):
        h_cur, c_cur = cur_state
        combined = torch.cat([input_tensor, h_cur], dim=1)  # concatenate along channel axis
        combined_conv = self.conv(combined)
        cc_i, cc_f, cc_o, cc_g = torch.split(combined_conv, self.hidden_dim, dim=1)
        i = torch.sigmoid(cc_i)
        f = torch.sigmoid(cc_f)
        o = torch.sigmoid(cc_o)
        g = torch.tanh(cc_g)
        c_next = f * c_cur + i * g
        h_next = o * torch.tanh(c_next)
        return h_next, c_next

    def init_hidden(self, batch_size, device):
        return (torch.zeros(batch_size, self.hidden_dim, self.height, self.width).to(device),
                torch.zeros(batch_size, self.hidden_dim, self.height, self.width).to(device))

class ConvLSTM(nn.Module):
    def __init__(self, input_size, input_dim, hidden_dim, kernel_size, batch_first=False, bias=True, return_all_layers=False):
        super(ConvLSTM, self).__init__()
        self._check_kernel_size_consistency(kernel_size)
        if isinstance(hidden_dim, list):
            num_layers = len(hidden_dim)
        elif isinstance(hidden_dim, int):
            num_layers = 1
        # Make sure that both `kernel_size` and `hidden_dim` are lists having len == num_layers
        kernel_size = self._extend_for_multilayer(kernel_size, num_layers)
        hidden_dim = self._extend_for_multilayer(hidden_di

|

https://github.com/pytorch/examples/issues/1022

|

closed

|

[] | 2022-07-13T15:45:09Z

| 2022-07-16T17:13:15Z

| null |

TahaniFennir

|

pytorch/data

| 648

|

Chainer/Concater from single datapipe?

|

The `Concater` datapipe takes multiple DPs as input. Is there a class that would take a **single** datapipe of iterables instead? Something like this:

```py

from torchdata.datapipes.iter import IterDataPipe

class ConcaterIterable(IterDataPipe):
    def __init__(self, source_datapipe):
        self.source_datapipe = source_datapipe

    def __iter__(self):
        for iterable in self.source_datapipe:
            yield from iterable

```

Basically:

[`itertools.chain`](https://docs.python.org/3/library/itertools.html#itertools.chain) == `Concater`

[`itertools.chain.from_iterable`](https://docs.python.org/3/library/itertools.html#itertools.chain.from_iterable) == `ConcaterIterable`

Maybe a neat way of implementing this would be to keep a single `Concater` class, which would fall back to the `ConcaterIterable` behaviour if it's passed only one DP as input?
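The `chain` / `chain.from_iterable` distinction above can be checked without torchdata. The sketch below is a framework-free stand-in: `FlattenIterable` is a hypothetical name, not a torchdata class, and it only mirrors the semantics the proposed `ConcaterIterable` would have.

```python
import itertools
from typing import Generic, Iterable, Iterator, TypeVar

T = TypeVar("T")


class FlattenIterable(Generic[T]):
    """Hypothetical stand-in for the proposed ConcaterIterable (not torchdata's API).

    Takes ONE source whose items are iterables and yields their elements in order,
    like itertools.chain.from_iterable.
    """

    def __init__(self, source: Iterable[Iterable[T]]) -> None:
        self.source = source

    def __iter__(self) -> Iterator[T]:
        # Exhaust each inner iterable in turn, preserving order.
        for iterable in self.source:
            yield from iterable


a, b = [1, 2], [3]
# Concater-style: several sources passed as separate arguments.
print(list(itertools.chain(a, b)))
# ConcaterIterable-style: a single source whose items are themselves iterables.
print(list(itertools.chain.from_iterable([a, b])))
print(list(FlattenIterable([a, b])))
```

All three calls flatten to the same sequence; the difference is only whether the inner iterables arrive as separate arguments or as the items of one source.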

-----

Details: I need this for my benchmarking on manifold where each file is a big pickle archive of multiple images. My DP builder looks like this:

```py

def make_manifold_dp(root, dataset_size):
    handler = ManifoldPathHandler()
    dp = IoPathFileLister(root=root)
    dp.register_handler(handler)
    dp = dp.shuffle(buffer_size=dataset_size).sharding_filter()
    dp = IoPathFileOpener(dp, mode="rb")
    dp.register_handler(handler)
    dp = PickleLoaderDataPipe(dp)
    dp = ConcaterIterable(dp)  # <-- Needed here!
    return dp

```

|

https://github.com/meta-pytorch/data/issues/648

|

closed

|

[

"good first issue"

] | 2022-07-13T14:19:43Z

| 2023-03-14T20:25:01Z

| 9

|

NicolasHug

|

huggingface/optimum

| 290

|

Quantized Model size difference when using Optimum vs. Onnxruntime

|

Package versions

While exporting a question-answering model ("deepset/minilm-uncased-squad2") to ONNX and quantizing it (dynamic quantization) with Optimum, the model size is 68 MB.

The same model exported and quantized with ONNX Runtime is 32 MB.

Why is there a difference between the two exported models when both the model and the quantization are the same?

**Optimum Code to convert the model to ONNX and Quantization.**

```python

from pathlib import Path
from optimum.onnxruntime import ORTModelForQuestionAnswering, ORTOptimizer
from optimum.onnxruntime.configuration import AutoQuantizationConfig, OptimizationConfig
from optimum.onnxruntime import ORTQuantizer
from optimum.pipelines import pipeline
from transformers import AutoTokenizer

model_checkpoint = "deepset/minilm-uncased-squad2"
save_directory = Path.home()/'onnx/optimum/minilm-uncased-squad2'
save_directory.mkdir(exist_ok=True, parents=True)
file_name = "minilm-uncased-squad2.onnx"
onnx_path = save_directory/"minilm-uncased-squad2.onnx"

# Load a model from transformers and export it through the ONNX format
model = ORTModelForQuestionAnswering.from_pretrained(model_checkpoint, from_transformers=True)
tokenizer = AutoTokenizer.from_pretrained(model_checkpoint)

# Save the onnx model and tokenizer
model.save_pretrained(save_directory, file_name=file_name)
tokenizer.save_pretrained(save_directory)

# Define the quantization methodology
qconfig = AutoQuantizationConfig.avx2(is_static=False, per_channel=True)
quantizer = ORTQuantizer.from_pretrained(model_checkpoint, feature="question-answering")

# Apply dynamic quantization on the model
quantizer.export(
    onnx_model_path=onnx_path,
    onnx_quantized_model_output_path=save_directory/"minilm-uncased-squad2-quantized.onnx",
    quantization_config=qconfig,
)
quantizer.model.config.save_pretrained(save_directory)
Path(save_directory/"minilm-uncased-squad2-quantized.onnx").stat().st_size/1024**2

```

**ONNX Runtime Code**

```python

from transformers.convert_graph_to_onnx import convert
from transformers import AutoTokenizer
from pathlib import Path

model_ckpt = "deepset/minilm-uncased-squad2"
onnx_model_path = Path("../../onnx/minilm-uncased-squad2.onnx")
tokenizer = AutoTokenizer.from_pretrained(model_ckpt)
convert(framework="pt", model=model_ckpt, tokenizer=tokenizer,
        output=onnx_model_path, opset=12, pipeline_name="question-answering")

from onnxruntime.quantization import quantize_dynamic, QuantType

onnx_model_path = Path("../../../onnx/minilm-uncased-squad2.onnx")
model_output = "../../onnx/minilm-uncased-squad2.quant.onnx"
quantize_dynamic(onnx_model_path, model_output, weight_type=QuantType.QInt8)
Path(model_output).stat().st_size/1024**2

```
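Note that the two pipelines above are not configured identically: the Optimum run uses `AutoQuantizationConfig.avx2(is_static=False, per_channel=True)`, while the ONNX Runtime run calls `quantize_dynamic` with only `weight_type=QuantType.QInt8`. One way to see where the extra megabytes come from is to compare which operator types each quantized graph contains. The sketch below is a diagnostic under that assumption, not an explanation of the gap; it only inspects top-level graph nodes (subgraphs are not traversed), and the paths in the usage comment are the ones from the snippets above.

```python
from collections import Counter
from pathlib import Path
from typing import Dict, Iterable, List, Tuple


def size_mb(path) -> float:
    """File size in MiB, same formula as the stat().st_size / 1024**2 used above."""
    return Path(path).stat().st_size / 1024**2


def onnx_op_types(path) -> List[str]:
    """Op types of the top-level graph nodes (subgraphs are not traversed)."""
    import onnx  # imported lazily so the pure helpers below work without onnx

    return [node.op_type for node in onnx.load(str(path)).graph.node]


def diff_op_counts(a: Iterable[str], b: Iterable[str]) -> Dict[str, Tuple[int, int]]:
    """Return {op_type: (count_in_a, count_in_b)} for op types whose counts differ."""
    ca, cb = Counter(a), Counter(b)
    return {op: (ca[op], cb[op]) for op in sorted(set(ca) | set(cb)) if ca[op] != cb[op]}


# Usage (paths taken from the snippets above):
# optimum_q = save_directory / "minilm-uncased-squad2-quantized.onnx"
# ort_q = "../../onnx/minilm-uncased-squad2.quant.onnx"
# print(size_mb(optimum_q), size_mb(ort_q))
# print(diff_op_counts(onnx_op_types(optimum_q), onnx_op_types(ort_q)))
```

If the op histograms match, the difference is more likely in the stored initializers (for example weights kept in float32) than in the graph structure.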

Thank you

|

https://github.com/huggingface/optimum/issues/290

|

closed

|

[] | 2022-07-13T10:12:45Z

| 2022-07-14T09:24:23Z

| 3

|

Shamik-07

|

pytorch/pytorch

| 81,395

|

How to Do Semi-Asynchronous or Asynchronous Training with Pytorch

|

### 🚀 The feature, motivation and pitch

When PyTorch is used for distributed training, DDP is normally good enough for most situations. However, if the speed of different nodes differs, the speed of the whole training is determined by the slowest node. E.g. if worker 0 needs 1 second for a forward and backward pass while worker 1 needs 2 seconds, one step takes 2 seconds.

So I am wondering whether there is a way to do semi-asynchronous training with PyTorch?
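To put the example above in numbers, the sketch below counts how many forward/backward passes a synchronous (DDP-style, every step waits for the slowest worker) and a fully asynchronous setup would complete in a fixed wall time. It is an idealized model that ignores communication cost; the extra asynchronous passes come at the price of gradients computed against stale parameters, which is the trade-off semi-asynchronous schemes try to manage.

```python
from typing import Dict, List


def steps_in(wall_time: float, step_times: List[float]) -> Dict[str, int]:
    """Idealized work done in wall_time seconds, ignoring communication cost.

    Synchronous (DDP-style): every step waits for the slowest worker.
    Asynchronous: each worker runs at its own pace and contributes its own updates.
    """
    sync_steps = int(wall_time // max(step_times))
    return {
        "sync_steps": sync_steps,
        "sync_worker_passes": sync_steps * len(step_times),
        "async_worker_passes": sum(int(wall_time // t) for t in step_times),
    }


print(steps_in(10.0, [1.0, 2.0]))
# {'sync_steps': 5, 'sync_worker_passes': 10, 'async_worker_passes': 15}
```

With the 1 s / 2 s workers from the example, the fast worker sits idle for half of every synchronous step, which is where the 10 vs 15 gap comes from.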

### Alternatives

There is a similar library called [hivemind](https://github.com/learning-at-home/hivemind), but it is designed for training over the Internet, while we prefer to run the training job in our own cluster.

### Additional context

_No response_

|

https://github.com/pytorch/pytorch/issues/81395

|

closed

|

[] | 2022-07-13T09:42:48Z

| 2022-07-13T16:50:59Z

| null |

lsy643

|

pytorch/data

| 647

|

Update out-of-date example and colab

|

### 📚 The doc issue

The examples for Text/Vision/Audio are out-of-date: https://github.com/pytorch/data/tree/main/examples

The colab attached in README needs to be updated as well:

- How to install torchdata

- Example needs shuffle + sharding_filter
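The reason an example should shuffle before `sharding_filter` can be illustrated without torchdata. The sketch below assumes round-robin sharding (worker `k` keeps items whose index modulo the number of workers equals `k`) and a shuffle seed shared by all workers; under those assumptions every item is seen by exactly one worker. The function names are illustrative only.

```python
import random
from typing import List, Sequence


def shard(items: Sequence[int], num_workers: int, worker_id: int) -> List[int]:
    """Round-robin sharding: worker k keeps every num_workers-th item starting at k."""
    return [x for i, x in enumerate(items) if i % num_workers == worker_id]


def worker_view(data: Sequence[int], num_workers: int, worker_id: int, seed: int) -> List[int]:
    """Shuffle with a seed shared by all workers, then shard."""
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)
    return shard(shuffled, num_workers, worker_id)


data = list(range(10))
views = [worker_view(data, num_workers=2, worker_id=k, seed=0) for k in range(2)]
# Together the workers cover every item exactly once.
print(sorted(views[0] + views[1]) == data, set(views[0]) & set(views[1]))
```

If each worker shuffled with a different seed before sharding, the shards would no longer be guaranteed to be disjoint, so items could be duplicated or skipped within an epoch.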

### Suggest a potential alternative/fix

None

|

https://github.com/meta-pytorch/data/issues/647

|

closed

|

[] | 2022-07-12T21:09:53Z

| 2023-02-02T14:39:40Z

| 5

|

ejguan

|

huggingface/datasets

| 4,675

|

Unable to use dataset with PyTorch dataloader

|

## Describe the bug

When using `.with_format("torch")`, an arrow table is returned and I am unable to use it by passing it to a PyTorch DataLoader: please see the code below.

## Steps to reproduce the bug

```python

from datasets import load_dataset
from torch.utils.data import DataLoader

ds = load_dataset(
    "para_crawl",
    name="enfr",
    cache_dir="/tmp/test/",
    split="train",
    keep_in_memory=True,
)
dataloader = DataLoader(ds.with_format("torch"), num_workers=32)
print(next(iter(dataloader)))

```

Is there something I am doing wrong? The documentation does not say much about the behavior of `.with_format()` so I feel like I am a bit stuck here :-/

Thanks in advance for your help!

## Expected results

The code should run with no error

## Actual results

```

AttributeError: 'str' object has no attribute 'dtype'

```
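The error appears to come from the `"torch"` format trying to turn string columns into tensors. A possible workaround, sketched below, is to leave string columns in the default Python format: PyTorch's default collate function batches strings into plain lists. The rows are hypothetical stand-ins that assume the dataset exposes a `translation` dict of strings; tokenized numeric columns could instead be selected with `ds.with_format("torch", columns=[...])`.

```python
from torch.utils.data import DataLoader

# Hypothetical rows shaped like a translation dataset: one dict column of strings.
rows = [
    {"translation": {"en": "Hello", "fr": "Bonjour"}},
    {"translation": {"en": "Thanks", "fr": "Merci"}},
]

# Without the "torch" format, default collation keeps the strings as lists.
loader = DataLoader(rows, batch_size=2)
batch = next(iter(loader))
print(batch)  # {'translation': {'en': ['Hello', 'Thanks'], 'fr': ['Bonjour', 'Merci']}}
```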

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version: 2.3.2

- Platform: Linux-4.18.0-348.el8.x86_64-x86_64-with-glibc2.28

- Python version: 3.10.4

- PyArrow version: 8.0.0

- Pandas version: 1.4.3

|

https://github.com/huggingface/datasets/issues/4675

|

open

|

[

"bug"

] | 2022-07-12T15:04:04Z

| 2022-07-14T14:17:46Z

| 1

|

BlueskyFR

|

pytorch/functorch

| 956

|

Batching rule for searchsorted implementation

|

Hi,

Thanks for the great work, really enjoying functorch in my work. I have encountered the following when using vmap on a function which uses torch.searchsorted:

UserWarning: There is a performance drop because we have not yet implemented the batching rule for aten::searchsorted.Tensor. Please file us an issue on GitHub so that we can prioritize its implementation. (Triggered internally at /Users/runner/work/functorch/functorch/functorch/csrc/BatchedFallback.cpp:85.)

Looking forward to the implementation.
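Until a batching rule exists, it may be possible to sidestep `vmap` for this op: `torch.searchsorted` already accepts an N-D `sorted_sequence` whose leading dimensions match those of `values`, searching each row independently. The sketch below shows that batched call on small made-up tensors; whether it fits depends on how the original function is structured.

```python
import torch

# Each row of `boundaries` is an independent sorted sequence; each row of
# `values` holds the queries for the matching row.
boundaries = torch.tensor([[1.0, 3.0, 5.0], [2.0, 4.0, 6.0]])
values = torch.tensor([[3.0, 0.0], [5.0, 7.0]])

idx = torch.searchsorted(boundaries, values)  # shape (2, 2), row-wise search
print(idx)  # tensor([[1, 0], [2, 3]])
```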

|

https://github.com/pytorch/functorch/issues/956

|

closed

|

[

"actionable"

] | 2022-07-12T06:36:04Z

| 2022-07-18T13:49:42Z

| 6

|

mingu6

|

pytorch/data

| 637

|

[TODO] Create dependency on TorchArrow?

|

This issue is generated from the TODO line

https://github.com/pytorch/data/blob/2f29adba451e1b87f1c0c654557d9dd98673fdd8/torchdata/datapipes/iter/util/dataframemaker.py#L15

|

https://github.com/meta-pytorch/data/issues/637

|

open

|

[] | 2022-07-11T17:34:07Z

| 2022-07-11T17:34:07Z

| 0

|

VitalyFedyunin

|

huggingface/datasets

| 4,671

|

Dataset Viewer issue for wmt16

|

### Link

https://huggingface.co/datasets/wmt16

### Description

[Reported](https://huggingface.co/spaces/autoevaluate/model-evaluator/discussions/12#62cb83f14c7f35284e796f9c) by a user of AutoTrain Evaluate. AFAIK this dataset was working 1-2 weeks ago, and I'm not sure how to interpret this error.

```

Status code: 400

Exception: NotImplementedError

Message: This is a abstract method

```

Thanks!

### Owner

No

|

https://github.com/huggingface/datasets/issues/4671

|

closed

|

[

"dataset-viewer"

] | 2022-07-11T08:34:11Z

| 2022-09-13T13:27:02Z

| 6

|

lewtun

|

huggingface/optimum

| 276

|

Force write of vanilla onnx model with `ORTQuantizer.export()`

|

### Feature request

Force write of the non-quantized onnx model with `ORTQuantizer.export()`, or add an option to force write.

### Motivation

Currently, if the `onnx_model_path` already exists, we don't write the non-quantized model to the indicated path.

https://github.com/huggingface/optimum/blob/04a2a6d290ca6ea6949844d1ae9a208ca95a79da/optimum/onnxruntime/quantization.py#L313-L315

Meanwhile, the quantized model is always written, even if there is already a model at the `onnx_quantized_model_output_path` (see https://github.com/onnx/onnx/blob/60d29c10c53ef7aa580291cb2b6360813b4328a3/onnx/__init__.py#L170).

Is there any reason for this different behavior? It led me to unexpected behaviors, where the non-quantized / quantized models don't correspond if I change the model in my script. In this case, the `export()` reuses the old non-quantized model to generate the quantized model, and all the quantizer attributes are ignored!
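The requested behavior amounts to an opt-in overwrite flag on the "write unless it exists" check. The helper below is hypothetical (it is not Optimum's API) and only shows the intended semantics: by default an existing file is kept, and `overwrite=True` forces a fresh export.

```python
import tempfile
from pathlib import Path
from typing import Callable


def export_if_needed(path, export_fn: Callable[[Path], object], overwrite: bool = False) -> bool:
    """Run export_fn(path) unless the file already exists and overwrite is False.

    Hypothetical helper (not Optimum's API). Returns True when export_fn ran.
    """
    path = Path(path)
    if path.exists() and not overwrite:
        return False  # mirrors the reported behavior: the existing (possibly stale) file is kept
    path.parent.mkdir(parents=True, exist_ok=True)
    export_fn(path)
    return True


with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "model.onnx"
    first = export_if_needed(target, lambda p: p.write_text("v1"))                  # writes v1
    second = export_if_needed(target, lambda p: p.write_text("v2"))                 # skipped
    third = export_if_needed(target, lambda p: p.write_text("v3"), overwrite=True)  # forced
    final = target.read_text()

print(first, second, third, final)  # True False True v3
```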

### Your contribution

I can do this if approved

|

https://github.com/huggingface/optimum/issues/276

|

closed

|

[] | 2022-07-09T08:44:27Z

| 2022-07-11T10:38:48Z

| 2

|

fxmarty

|

pytorch/data

| 580

|

[Linter] Ability to disable some lints

|

### 🚀 The feature

There are several options to disable specific linters.

Option 1. Disable with `linter-ignore: code`

Pros:

- Similar to known syntax of various linters

Cons:

- Need to modify code of datasets to disable something

```

datapipe = datapipe.sharding_filter().shuffle() # linter-ignore: shuffle-shard

```

Option 2. Global & Context disables

Pros:

- Can control datasets without modification of the code

Cons:

- Global might disable important errors

- Context requires additional indent

- Syntax feels weird

- Annoying to disable construct time linters (see below)

```

from torchdata import linter

linter.disable('shuffle-shard')  # global
with linter.disable('shuffle-shard'):  # context based
    dl = DataLoader2(...)

```

Option 3. DLv2 argument / ReadingService argument

Pros:

- Local to specific DataLoader

- Can control datasets without modification of the code

Cons:

- Syntax feels weird

- Some linters might trigger/not in various ReadingServices

- Annoying to disable construct time linters (see below)

```

dl = DataLoader2(dp_graph, [adapter], disable_lint = ['shuffle-shard'])

```

Option 4. DataPipe 'attribute'

Pros:

- Can be defined by DataSet developer or by the user

- Can impact construct time error handling

Cons:

- Syntax feels weird

```datapipe = datapipe.sharding_filter().shuffle().disable_lint('shuffle-shard')```

and/or (as we can have an adapter to do the same job)

```dl = DataLoader(dp_graph,[DisableLint('shuffle-shard')], ...)```

Personally, I prefer the last variant, but I'm open to discussion.
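To make Option 4 concrete, here is a toy model of how a suppression recorded on a graph node could be picked up by a linter that walks the graph. Everything here (`ToyPipe`, `then`, `walk`, `lint`) is hypothetical and much simpler than a real DataPipe graph; it flags the `sharding_filter().shuffle()` ordering used in the examples above only to show the mechanics, not to settle whether that ordering should be linted.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Set, Tuple


@dataclass(frozen=True)
class ToyPipe:
    """Hypothetical stand-in for a DataPipe graph node; not torchdata's API."""

    op: str
    source: Optional["ToyPipe"] = None
    disabled: FrozenSet[str] = frozenset()

    def then(self, op: str) -> "ToyPipe":
        # Append a new stage downstream of this one.
        return ToyPipe(op, self)

    def disable_lint(self, *codes: str) -> "ToyPipe":
        # Option 4: record the suppression on the node it is called on.
        return ToyPipe(self.op, self.source, self.disabled | frozenset(codes))


def walk(pipe: ToyPipe) -> Tuple[List[str], Set[str]]:
    """Return the ops from source to sink and every lint code disabled along the way."""
    ops: List[str] = []
    disabled: Set[str] = set()
    node: Optional[ToyPipe] = pipe
    while node is not None:
        ops.append(node.op)
        disabled |= node.disabled
        node = node.source
    return ops[::-1], disabled


def lint(pipe: ToyPipe) -> List[str]:
    ops, disabled = walk(pipe)
    warnings = []
    if ("shuffle-shard" not in disabled and "shuffle" in ops
            and "sharding_filter" in ops
            and ops.index("shuffle") > ops.index("sharding_filter")):
        warnings.append("shuffle-shard: shuffle() comes after sharding_filter()")
    return warnings


src = ToyPipe("source")
flagged = src.then("sharding_filter").then("shuffle")
print(lint(flagged))
print(lint(flagged.disable_lint("shuffle-shard")))
```

Because the suppression travels with the graph, the same silenced pipeline stays silent whichever DataLoader or ReadingService later consumes it, which is the property Option 4 is after.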

|

https://github.com/meta-pytorch/data/issues/580

|

open

|

[] | 2022-07-08T17:25:25Z

| 2022-07-15T21:23:17Z

| 3

|

VitalyFedyunin

|

pytorch/pytorch

| 81,103

|

[Discussion] How to add MPS extension with custom kernel?

|

### 🚀 The feature, motivation and pitch

Hi,

I am working on adding MPS op for MPS backend with a custom kernel.

Here is an example:

https://github.com/grimoire/TorchMPSCustomOpsDemo

I am new to Metal, so I am not sure whether this is a good (or the right) way to add such an op. There are some things I want to discuss:

## Device and CommandQueue

Since PyTorch has not exposed the MPS-related API, I have to copy some headers [from torch csrc](https://github.com/grimoire/TorchMPSCustomOpsDemo/tree/master/csrc/pytorch/mps). The library is built with `MPSDevice::getInstance()->device()` and the command is committed to `getCurrentMPSStream()`. I am not sure whether I should flush on commit or not.

## LibraryFromUrl vs LibraryFromSource

It seems that a Metal library cannot be linked together with the other object files, so I have to:

Either load it at runtime, which leads to the problem of how to find the relative location of the `.metallib`.

```objc

// load from url

NSURL* metal_url = [NSURL fileURLWithPath: utl_str];

library->_library = [at::mps::MPSDevice::getInstance()->device() newLibraryWithURL: metal_url error:&error];

```

Or build it at runtime, which might take a long time to compile the kernel.

```objc

// build library from source string

NSString* code_str = [NSString stringWithCString: sources.c_str()];

library->_library = [at::mps::MPSDevice::getInstance()->device() newLibraryWithSource: code_str options: nil error:&error];

```
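For the first option (loading a prebuilt `.metallib` at runtime), one common way to avoid hard-coding a location is to install the `.metallib` beside the compiled extension module and resolve it relative to that module's `__file__`. The sketch below assumes that packaging layout (for example via setuptools `package_data`); the file names `_custom_ops.so` and `kernels.metallib` are hypothetical, and the returned string would be what the C++ side turns into the URL passed to `newLibraryWithURL`.

```python
import tempfile
from pathlib import Path


def metallib_next_to(module_file: str, name: str) -> str:
    """Absolute path of a .metallib installed in the same directory as module_file."""
    path = Path(module_file).resolve().with_name(name)
    if not path.is_file():
        raise FileNotFoundError(f"{name} not found next to {module_file}")
    return str(path)


# Self-check with throwaway files standing in for the built extension and its metallib.
with tempfile.TemporaryDirectory() as tmp:
    fake_ext = Path(tmp) / "_custom_ops.so"   # hypothetical extension module file
    fake_ext.touch()
    (Path(tmp) / "kernels.metallib").touch()  # hypothetical metallib name
    found = metallib_next_to(str(fake_ext), "kernels.metallib")

print(Path(found).name)  # kernels.metallib
```

In a real package the call would look like `metallib_next_to(_custom_ops.__file__, "kernels.metallib")`, made once from the Python wrapper before the first kernel launch.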

## BuildExtension

If we do not build the Metal kernel at runtime, we need to set up the compiler for it in `setup.py`.

Since the `build_ext` provided by Python and PyTorch does not support building Metal, I patched the `UnixCCompiler` in `BuildExtension` to add support. Both `compile` and `link` need to be updated:

```python

# compile
def darwin_wrap_single_compile(obj, src, ext, cc_args, extra_postargs,
                               pp_opts) -> None:
    cflags = copy.deepcopy(extra_postargs)
    try:
        original_compiler = self.compiler.compiler_so
        if _is_metal_file(src):
            # use xcrun metal to compile metal file to `.air`
            metal = ['xcrun', 'metal']
            self.compiler.set_executable('compiler_so', metal)
            if isinstance(cflags, dict):
                cflags = cflags.get('metal', [])
            else:
                cflags = []
        elif isinstance(cflags, dict):
            cflags = cflags['cxx']
        original_compile(obj, src, ext, cc_args, cflags, pp_opts)
    finally:
        self.compiler.set_executable('compiler_so', original_compiler)

# link
def darwin_wrap_single_link(target_desc,
                            objects,
                            output_filename,
                            output_dir=None,
                            libraries=None,
                            library_dirs=None,
                            runtime_library_dirs=None,
                            export_symbols=None,
                            debug=0,
                            extra_preargs=None,
                            extra_postargs=None,
                            build_temp=None,
                            target_lang=None):
    if osp.splitext(objects[0])[1].lower() == '.air':
        for obj in objects:
            assert osp.splitext(obj)[1].lower(
            ) == '.air', f'Expect .air file, but get {obj}.'
        # link `.air` with xcrun metallib
        linker = ['xcrun', 'metallib']
        self.compiler.spawn(linker + objects + ['-o', output_filename])
    else:
        return original_link(target_desc, objects, output_filename,
                             output_dir, libraries, library_dirs,
                             runtime_library_dirs, export_symbols,
                             debug, extra_preargs, extra_postargs,
                             build_temp, target_lang)

```

The code looks ... ugly. Hope there is a better way to do that.
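A possibly less invasive alternative to patching `UnixCCompiler` is to compile the `.metal` sources in a separate pre-build step (for example from the `run()` of a `build_ext` subclass, before calling the parent's `run()`) and ship the resulting `.metallib` as package data. The sketch assumes that setup; it uses the two `xcrun` steps the patch above already relies on (`metal` to produce `.air`, `metallib` to link them), written with the `-sdk macosx ... -c` form from Apple's command-line build instructions. The file paths are placeholders.

```python
import subprocess
from pathlib import Path
from typing import List, Sequence


def metal_build_commands(sources: Sequence[str], out_lib: str, sdk: str = "macosx") -> List[List[str]]:
    """xcrun commands: compile each .metal source to .air, then link all .air files into one .metallib."""
    airs = [str(Path(src).with_suffix(".air")) for src in sources]
    cmds = [["xcrun", "-sdk", sdk, "metal", "-c", src, "-o", air]
            for src, air in zip(sources, airs)]
    cmds.append(["xcrun", "-sdk", sdk, "metallib", *airs, "-o", out_lib])
    return cmds


def build_metallib(sources: Sequence[str], out_lib: str, sdk: str = "macosx") -> None:
    """Run the commands in order; needs macOS with the Xcode command-line tools."""
    for cmd in metal_build_commands(sources, out_lib, sdk):
        subprocess.run(cmd, check=True)


# Print the commands without running them (placeholder paths).
for cmd in metal_build_commands(["csrc/kernels.metal"], "build/kernels.metallib"):
    print(" ".join(cmd))
```

Keeping the Metal build out of the C++ compile/link path means `BuildExtension` only ever sees ordinary C++/Objective-C++ sources.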

So ... any advice?

### Alternatives

_No response_

### Additional context

_No response_

cc @malfet @zou3519 @kulinseth @albanD

|

https://github.com/pytorch/pytorch/issues/81103

|

closed

|

[

"module: cpp-extensions",

"triaged",

"enhancement",

"topic: docs",

"module: mps"

] | 2022-07-08T12:32:14Z

| 2023-07-28T17:11:42Z

| null |

grimoire

|

pytorch/pytorch.github.io

| 1,071

|

Where is documented the resize and crop in EfficientNet for torchvision v0.12.0

|

## 📚 Documentation

Hello, I cannot find anywhere which resize and center-crop settings were used for training the efficientnet_b* models.

Where is that information?

I can see it in the torchvision v0.13.0 documentation and code ([for example](https://github.com/pytorch/vision/blob/main/torchvision/models/efficientnet.py#L522)).

Many of us still have projects on the older version.
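One workaround is to read the values once in a torchvision 0.13+ environment: each weights enum carries its inference preset, built by `weights.transforms()`, and copy them into the 0.12 pipeline (e.g. `transforms.Resize` followed by `transforms.CenterCrop`). This assumes, as the 0.13 multi-weight API docs describe, that `IMAGENET1K_V1` is the checkpoint `pretrained=True` loaded in 0.12. The attribute names are read with `getattr` in case they differ between versions.

```python
def preset_sizes(weights) -> dict:
    """Read resize/crop settings from a torchvision (>= 0.13) weights enum's preset."""
    preset = weights.transforms()  # builds the preset module; no weight download
    return {
        "resize_size": getattr(preset, "resize_size", None),
        "crop_size": getattr(preset, "crop_size", None),
        "interpolation": getattr(preset, "interpolation", None),
    }


if __name__ == "__main__":
    try:
        from torchvision.models import EfficientNet_B0_Weights
    except ImportError:
        print("torchvision >= 0.13 is needed to read the presets")
    else:
        print(preset_sizes(EfficientNet_B0_Weights.IMAGENET1K_V1))
```

Swap `EfficientNet_B0_Weights` for the B1 to B7 enums to read the other variants.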

Thanks

|

https://github.com/pytorch/pytorch.github.io/issues/1071

|

closed

|

[] | 2022-07-08T12:20:23Z

| 2022-07-22T22:06:23Z

| null |

mjack3

|

pytorch/vision

| 6,249

|

Error when create_feature_extractor in AlexNet

|

### 🐛 Describe the bug

When I try to obtain the features of layer "classifier.4" in AlexNet, the program raises an error. The code is as follows:

```

import torch

from torchvision.models import alexnet, AlexNet_Weights

from torchvision.models.feature_extraction import create_feature_extractor

model = alexnet(weights=AlexNet_Weights.IMAGENET1K_V1)

extractor = create_feature_extractor(model, {'classifier.4': 'feat'})

img = torch.rand(3,224,224)

out = extractor(img)

```

**Error message**

```

RuntimeError: mat1 and mat2 shapes cannot be multiplied (256x36 and 9216x4096)

```

I guess it is because the shape of the output of "flatten" in AlexNet is 256x36 rather than 9216.

### Versions

```

Collecting environment information...

PyTorch version: 1.12.0+cu116

Is debug build: False

CUDA used to build PyTorch: 11.6

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.4 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: Could not collect

Libc version: glibc-2.31

Python version: 3.9.12 (main, Jun 1 2022, 11:38:51) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.4.0-117-generic-x86_64-with-glibc2.31

Is CUDA available: True

CUDA runtime version: 11.6.55

GPU models and configuration:

GPU 0: NVIDIA GeForce RTX 3090

GPU 1: NVIDIA GeForce RTX 3090

GPU 2: NVIDIA GeForce RTX 3090

GPU 3: NVIDIA GeForce RTX 3090

GPU 4: NVIDIA GeForce RTX 3090

GPU 5: NVIDIA GeForce RTX 3090

GPU 6: NVIDIA GeForce RTX 3090

GPU 7: NVIDIA GeForce RTX 3090

Nvidia driver version: 510.73.08

cuDNN version: Probably one of the following:

/usr/local/cuda-11.6/targets/x86_64-linux/lib/libcudnn.so.8.4.0

/usr/local/cuda-11.6/targets/x86_64-linux/lib/libcudnn_adv_infer.so.8.4.0

/usr/local/cuda-11.6/targets/x86_64-linux/lib/libcudnn_adv_train.so.8.4.0

/usr/local/cuda-11.6/targets/x86_64-linux/lib/libcudnn_cnn_infer.so.8.4.0

/usr/local/cuda-11.6/targets/x86_64-linux/lib/libcudnn_cnn_train.so.8.4.0

/usr/local/cuda-11.6/targets/x86_64-linux/lib/libcudnn_ops_infer.so.8.4.0

/usr/local/cuda-11.6/targets/x86_64-linux/lib/libcudnn_ops_train.so.8.4.0

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.22.3

[pip3] torch==1.12.0+cu116

[pip3] torchmetrics==0.9.1

[pip3] torchtext==0.12.0

[pip3] torchvision==0.13.0+cu116

[conda] blas 1.0 mkl defaults

[conda] cudatoolkit 11.3.1 h2bc3f7f_2 defaults

[conda] mkl 2021.4.0 h06a4308_640 defaults

[conda] mkl-service 2.4.0 py39h7f8727e_0 defaults

[conda] mkl_fft 1.3.1 py39hd3c417c_0 defaults

[conda] mkl_random 1.2.2 py39h51133e4_0 defaults

[conda] numpy 1.22.3 py39he7a7128_0 defaults

[conda] numpy-base 1.22.3 py39hf524024_0 defaults

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torch 1.12.0+cu116 pypi_0 pypi

[conda] torchmetrics 0.9.1 pypi_0 pypi

[conda] torchtext 0.12.0 py39 pytorch

[conda] torchvision 0.13.0+cu116 pypi_0 pypi

```

cc @datumbox

|

https://github.com/pytorch/vision/issues/6249

|

closed

|

[

"question",

"module: models",

"topic: feature extraction"

] | 2022-07-08T09:28:06Z

| 2022-07-08T10:11:43Z

| null |

githwd2016

|

pytorch/vision

| 6,247

|

Probable missing argument for swin transformer

|

Hello,

When I inspect the Swin Transformer code in the original Swin repo, mmdetection or detectron2, I notice a parameter called `drop_path_rate` that I cannot find in the torchvision repo. Maybe I am overlooking it. Is there a similar parameter, and is it an important one?
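As far as I can tell from the torchvision source, the corresponding knob is `stochastic_depth_prob` on `SwinTransformer` (drop path and stochastic depth are the same technique), and `swin_t` sets it to 0.2. In both codebases the configured value is the maximum: the per-block rate rises linearly from 0 at the first block to that value at the last one. The sketch below reproduces that schedule in plain Python; the depths `[2, 2, 6, 2]` are the Swin-T configuration.

```python
from typing import List, Sequence


def drop_path_schedule(max_rate: float, depths: Sequence[int]) -> List[float]:
    """Per-block drop-path (stochastic depth) rates rising linearly from 0 to max_rate."""
    total = sum(depths)
    if total <= 1:
        return [0.0] * total
    return [max_rate * i / (total - 1) for i in range(total)]


rates = drop_path_schedule(0.2, [2, 2, 6, 2])  # Swin-T depths, maximum rate 0.2
print([round(r, 3) for r in rates])
```

It is a regularizer, so it matters mostly when training from scratch; it has no effect in eval mode.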

Thanks in advance

cc @datumbox

|

https://github.com/pytorch/vision/issues/6247

|

closed

|

[

"question",

"module: models"

] | 2022-07-08T08:21:58Z

| 2022-07-11T13:17:40Z

| null |

artest08

|

pytorch/functorch

| 940

|

Question on how to batch over both: inputs and tangent vectors

|

I want to compute the Jacobian-vector product of a function F from R^d to R^D. But I need to do this at a batch of points x_1, ..., x_n in R^d and a batch of tangent vectors v_1, ..., v_m in R^d. Namely, for all i = 1, ..., n and j = 1, ..., m, I need to compute the n x m Jacobian-vector products J_F(x_i) * v_j.

Is there a way to do this by using vmap twice to loop over the batches x_i and v_j?
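Nesting two `vmap`s around `jvp` should give all n x m products at once: the inner `vmap` batches over the tangents with the point held fixed (`in_dims=(None, 0)`), and the outer one batches over the points with the tangents held fixed (`in_dims=(0, None)`). The sketch uses `torch.func` (PyTorch 2.x) and falls back to the standalone `functorch` package; the map `F(x) = tanh(W x)` is just an example.

```python
import torch

try:
    from torch.func import jvp, vmap  # PyTorch >= 2.0
except ImportError:
    from functorch import jvp, vmap   # standalone functorch


def batched_jvp(F, xs: torch.Tensor, vs: torch.Tensor) -> torch.Tensor:
    """Return J_F(x_i) v_j for all i, j as a tensor of shape (n, m, D).

    xs: (n, d) points, vs: (m, d) tangent vectors, F maps a (d,) tensor to a (D,) tensor.
    """
    def single(x, v):
        # jvp returns (F(x), J_F(x) v); keep only the tangent output.
        return jvp(F, (x,), (v,))[1]

    over_v = vmap(single, in_dims=(None, 0))   # fixed x, batch over v -> (m, D)
    over_xv = vmap(over_v, in_dims=(0, None))  # batch over x -> (n, m, D)
    return over_xv(xs, vs)


W = torch.randn(5, 3)
F = lambda x: torch.tanh(W @ x)  # example map from R^3 to R^5
xs, vs = torch.randn(4, 3), torch.randn(2, 3)
out = batched_jvp(F, xs, vs)
print(out.shape)  # torch.Size([4, 2, 5])
```

Entry `out[i, j]` is `J_F(x_i) v_j`; for this `F` it equals `(1 - tanh(W x_i)**2) * (W v_j)`.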

|

https://github.com/pytorch/functorch/issues/940

|

open

|

[] | 2022-07-07T14:57:28Z

| 2022-07-12T17:47:23Z

| null |

sgstepaniants

|

pytorch/serve

| 1,725

|

Serving other framework models with Torchserve?

|

Hi everyone.

As in the title, I want to ask whether TorchServe can serve models from other frameworks, or only PyTorch models.

For example, I have a model written in mxnet. This is the snippet code of `initialize` method in my custom handler.

```python

def initialize(self, context):
    properties = context.system_properties
    if (torch.cuda.is_available() and
            properties.get("gpu_id") is not None):
        ctx_id = properties.get("gpu_id")
    else:
        ctx_id = -1
    self.manifest = context.manifest
    model_dir = properties.get("model_dir")
    prefix = os.path.join(model_dir, "model/resnet-50")
    # load model
    sym, arg_params, aux_params = mx.model.load_checkpoint(prefix, 0)
    if ctx_id >= 0:
        self.ctx = mx.gpu(ctx_id)
    else:
        self.ctx = mx.cpu()
    self.model = mx.mod.Module(symbol=sym,
                               context=self.ctx,
                               label_names=None)
    self.model.bind(
        data_shapes=[('data', (1, 3, 640, 640))],
        for_training=False
    )
    self.model.set_params(arg_params, aux_params)
    self.initialized = True

```

For some reason, the pretrained MXNet model can't be loaded, although the same model works fine in my training and inference scripts. This is the error log:

```

2022-07-06T15:48:07,468 [INFO ] W-9000-face_detect_1.0-stdout MODEL_LOG - File "/apps/conda/huyvd/envs/insightface/lib/python3.8/site-packages/ts/model_loader.py", line 151, in load

2022-07-06T15:48:07,468 [INFO ] W-9000-face_detect_1.0-stdout MODEL_LOG - initialize_fn(service.context)

2022-07-06T15:48:07,469 [INFO ] W-9000-face_detect_1.0-stdout MODEL_LOG - File "/tmp/models/4b6bbba5e16445ffbe70f89282a0d30a/handler.py", line 34, in initialize

2022-07-06T15:48:07,469 [INFO ] W-9000-face_detect_1.0-stdout MODEL_LOG - sym, arg_params, aux_params = mx.model.load_checkpoint(prefix, 0)

2022-07-06T15:48:07,469 [INFO ] W-9000-face_detect_1.0-stdout MODEL_LOG - File "/apps/conda/huyvd/envs/insightface/lib/python3.8/site-packages/mxnet/model.py", line 476, in load_checkpoint

2022-07-06T15:48:07,470 [INFO ] W-9000-face_detect_1.0-stdout MODEL_LOG - symbol = sym.load('%s-symbol.json' % prefix)

2022-07-06T15:48:07,470 [INFO ] W-9000-face_detect_1.0-stdout MODEL_LOG - File "/apps/conda/huyvd/envs/insightface/lib/python3.8/site-packages/mxnet/symbol/symbol.py", line 3054, in load

2022-07-06T15:48:07,470 [INFO ] W-9000-face_detect_1.0-stdout MODEL_LOG - check_call(_LIB.MXSymbolCreateFromFile(c_str(fname), ctypes.byref(handle)))

2022-07-06T15:48:07,471 [INFO ] W-9000-face_detect_1.0-stdout MODEL_LOG - File "/apps/conda/huyvd/envs/insightface/lib/python3.8/site-packages/mxnet/base.py", line 246, in check_call

2022-07-06T15:48:07,471 [INFO ] W-9000-face_detect_1.0-stdout MODEL_LOG - raise get_last_ffi_error()

2022-07-06T15:48:07,471 [INFO ] W-9000-face_detect_1.0-stdout MODEL_LOG - mxnet.base.MXNetError: Traceback (most recent call last):

2022-07-06T15:48:07,472 [INFO ] W-9000-face_detect_1.0-stdout MODEL_LOG - File "../include/dmlc/././json.h", line 718

2022-07-06T15:48:07,472 [INFO ] W-9000-face_detect_1.0-stdout MODEL_LOG - MXNetError: Check failed: !is_->fail(): Error at Line 32, around ^``, Expect number

```
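The traceback shows MXNet failing while parsing `<prefix>-symbol.json` ("Error at Line 32 ... Expect number"), before any model code runs. One way to narrow it down is to check whether the file packaged with the model parses at all, and compare it with the copy used in training. The sketch below parses it with Python's `json` module and reports the failing position. Python's parser is more permissive in places (it accepts `NaN`, for example), so `"ok"` does not rule out an MXNet-specific problem.

```python
import json
import tempfile
from pathlib import Path


def check_symbol_json(prefix: str) -> str:
    """Parse <prefix>-symbol.json and report where parsing fails, or "ok"."""
    path = Path(f"{prefix}-symbol.json")
    try:
        json.loads(path.read_text(encoding="utf-8"))
    except json.JSONDecodeError as err:
        return f"{path}: line {err.lineno}, column {err.colno}: {err.msg}"
    return "ok"


# Demo on a deliberately broken file (trailing comma inside a list).
with tempfile.TemporaryDirectory() as tmp:
    demo_prefix = str(Path(tmp) / "resnet-50")
    Path(f"{demo_prefix}-symbol.json").write_text('{"nodes": [1, 2,]}')
    report = check_symbol_json(demo_prefix)

print(report)
```

Inside the handler, the same call could be logged with the real `prefix` just before `mx.model.load_checkpoint(prefix, 0)`.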

|

https://github.com/pytorch/serve/issues/1725

|

closed

|

[

"help wanted",

"question"

] | 2022-07-06T09:08:44Z

| 2022-07-13T07:58:10Z

| null |

vuongdanghuy

|

huggingface/optimum

| 262

|

How can i set number of threads for Optimum exported model?

|

### System Info

```shell

optimum==1.2.3

onnxruntime==1.11.1

onnx==1.12.0

transformers==4.20.1

python version 3.7.13

```

### Who can help?

@JingyaHuang @echarlaix

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [X] My own task or dataset (give details below)

### Reproduction

Hi!

I can't specify the number of threads for inference with Optimum ONNX models.

I didn't have such a problem with the default transformers model before.

Is there any Configuration in Optimum?

### Optimum doesn't have a config for assigning the number of threads

```

from onnxruntime import SessionOptions

SessionOptions().intra_op_num_threads = 1

```

### also limiting on OS level doesn't work:

```bash

taskset -c 0-16 python inference_onnx.py

```

```bash

taskset -c 0 python inference_onnx.py

```

|

https://github.com/huggingface/optimum/issues/262

|

closed

|

[

"bug"

] | 2022-07-06T06:53:30Z

| 2022-09-19T11:25:23Z

| 1

|

MiladMolazadeh

|

huggingface/optimum

| 257

|

Optimum Inference next steps

|

# What is this issue for?

This issue is a list of potential next steps for improving the inference experience with `optimum`. The current list applies to the main namespace of optimum but should soon be extended to other namespaces, including `intel`, `habana`, `graphcore`.

## Next Steps/Features

- [x] #199

- [x] #254

- [x] #213

- [x] #258

- [x] #259

- [x] #260

- [x] #261

- [ ] add new accelerators: INC, OpenVINO, ...

---

_Note: this issue will be continuously updated to keep track of the developments. If you are part of the community and interested in contributing, feel free to pick one and open a PR._

|

https://github.com/huggingface/optimum/issues/257

|

closed

|

[

"inference",

"Stale"

] | 2022-07-06T05:02:12Z

| 2025-09-13T02:01:29Z

| 1

|

philschmid

|

pytorch/TensorRT

| 1,166

|

❓ [Question] How to run Torch-Tensorrt on JETSON AGX ORIN?

|

## ❓ Question

**Not able to run Torch-Tensorrt on Jetson AGX ORIN**

As per the [release notes](https://github.com/pytorch/TensorRT/discussions/1043), the current release does not support JetPack 5.0 DP, but Orin only supports JetPack 5.0 DP (I might be wrong, but I am inferring this from the [JetPack archive](https://developer.nvidia.com/embedded/jetpack-archive)). **Is there a way to run Torch-TensorRT on Orin?** If not, what is the possible timeline for a new release with this support?

## What you have already tried

I tried building for Python, as suggested in the repo. It enables `import torch_tensorrt`, but the module doesn't expose any of the expected attributes.

## Environment

- PyTorch Version (e.g., 1.0): 1.11

- CPU Architecture: arm64

- OS (e.g., Linux): Ubuntu 20.04

- How you installed PyTorch (`conda`, `pip`, `libtorch`, source): tried both, the wheels provided [here](https://forums.developer.nvidia.com/t/pytorch-for-jetson-version-1-11-now-available/72048) and building from source (instructions from [here](https://forums.developer.nvidia.com/t/pytorch-for-jetson-version-1-11-now-available/72048)).

- Build command you used (if compiling from source): python3 setup.py --use_cxx11_abi (however, this refers to jetpack 4.6 by default)

- Python version: 3.8

- CUDA version: 11.4

- GPU models and configuration: Jetson ORIN

- Any other relevant information:

|

https://github.com/pytorch/TensorRT/issues/1166

|

closed

|

[

"question",

"channel: linux-jetpack"

] | 2022-07-05T19:46:00Z

| 2022-08-11T02:55:46Z

| null |

krmayankb

|

pytorch/functorch

| 933

|

Cannot import vmap after new release

|

I am installing functorch on Google Colab. When I don't specify the version, it installs functorch 0.2.2 and PyTorch 1.12.0, uninstalling the PyTorch 1.11.0 that is preinstalled on Colab. But at the line where I import vmap, it throws an error saying that functorch is not compatible with PyTorch 1.12.0:

```

RuntimeError Traceback (most recent call last)

[<ipython-input-1-0691ca18293b>](https://localhost:8080/#) in <module>()

3

4 from torchsummary import summary

----> 5 from functorch import vmap

6 import torch

7 import torch.nn as nn

[/usr/local/lib/python3.7/dist-packages/functorch/__init__.py](https://localhost:8080/#) in <module>()

20 if torch_cuda_version not in pytorch_cuda_restrictions:

21 raise RuntimeError(

---> 22 f"We've detected an installation of PyTorch 1.12 with {verbose_torch_cuda_version} support. "

23 "This functorch 0.2.0 binary is not compatible with the PyTorch installation. "

24 "Please see our install page for suggestions on how to resolve this: "

RuntimeError: We've detected an installation of PyTorch 1.12 with CUDA 10.2 support. This functorch 0.2.0 binary is not compatible with the PyTorch installation. Please see our install page for suggestions on how to resolve this: https://pytorch.org/functorch/stable/install.html

```

I tried the older version, functorch 0.1.1 with PyTorch 1.11.0, but it also gives some errors during the import:

```
ImportError                               Traceback (most recent call last)
<ipython-input-3-abbd2ba6241c> in <module>()
      3
      4 from torchsummary import summary
----> 5 from functorch import vmap
      6 import torch
      7 import torch.nn as nn

/usr/local/lib/python3.7/dist-packages/functorch/__init__.py in <module>()
      5 # LICENSE file in the root directory of this source tree.
      6 import torch
----> 7 from . import _C
      8
      9 # Monkey patch PyTorch. This is a hack, we should try to upstream

ImportError: /usr/local/lib/python3.7/dist-packages/functorch/_C.so: undefined symbol: _ZNK3c1010TensorImpl5sizesEv
```

Note: I was able to use `vmap` with the older version just a few hours ago. When I came back to the notebook and started it again, it stopped working.
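Both failures look like a mismatch between the installed functorch and the PyTorch that actually gets loaded, so checking the pair before importing functorch can save time. The sketch below uses hypothetical helper names (`major_minor`, `is_compatible`) and assumes functorch 0.1.x pairs with PyTorch 1.11 and 0.2.x with PyTorch 1.12, the pairings discussed in this report. As the first error message shows, the CUDA build variant also matters, and this check ignores it.

```python
# Assumed pairings of functorch minor releases to PyTorch minor releases.
COMPATIBLE = {"0.1": "1.11", "0.2": "1.12"}


def major_minor(version: str) -> str:
    """Return 'MAJOR.MINOR' from strings such as '1.12.0+cu102'."""
    public = version.split("+", 1)[0]  # drop a local build tag like '+cu102'
    major, minor = public.split(".")[:2]
    return f"{major}.{minor}"


def is_compatible(functorch_version: str, torch_version: str) -> bool:
    """True if the two versions match one of the assumed pairings."""
    expected = COMPATIBLE.get(major_minor(functorch_version))
    return expected is not None and expected == major_minor(torch_version)


print(is_compatible("0.2.0", "1.12.0+cu102"))  # True
print(is_compatible("0.1.1", "1.12.0"))        # False
```

On Python 3.8+, `importlib.metadata.version("functorch")` reads the installed functorch version without importing the package, and `torch.__version__` gives the PyTorch side. In Colab, if PyTorch was already imported before reinstalling it, restarting the runtime ensures the newly installed version is the one that gets loaded.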

|

https://github.com/pytorch/functorch/issues/933

|

open

|

[] | 2022-07-05T18:47:06Z

| 2022-08-08T14:31:27Z

| 4

|

KananMahammadli

|

pytorch/vision

| 6,239

|

n classes in ConvNeXt model

|

### 🐛 Describe the bug

Hi,

I'm trying to train a ConvNeXt-Tiny model as a binary classifier by loading the model architecture and pretrained weights from `torchvision.models`.

I use the following code to load the model and change the number of output nodes:

```python
from torchvision import models
from torchvision.models import ConvNeXt_Tiny_Weights

num_classes = 2
model_ft = models.convnext_tiny(weights=ConvNeXt_Tiny_Weights.DEFAULT)
model_ft.classifier[2].out_features = num_classes
```

When I print this layer of the model, I get:

```python
print(model_ft.classifier[2])
# Linear(in_features=768, out_features=2, bias=True)
```

This suggests that the change has been applied. However, when I train the model, the output has shape 42 x 1000, i.e. batch size x the number of ImageNet classes:

```python
batch_size = 42
outputs = model(inputs)
print(outputs.size())
# torch.Size([42, 1000])
```

Any thoughts on how to solve this problem?

Cheers,

Jamie

P.S. It seems the issue might be that the number of classes is hard-coded as 1000 in pytorch/vision/tree/main/torchvision/models/convnext.py, lines 90-100:

```python
class ConvNeXt(nn.Module):
    def __init__(
        self,
        block_setting: List[CNBlockConfig],
        stochastic_depth_prob: float = 0.0,
        layer_scale: float = 1e-6,
        num_classes: int = 1000,
        block: Optional[Callable[..., nn.Module]] = None,
        norm_layer: Optional[Callable[..., nn.Module]] = None,
        **kwargs: Any,
    ) -> None:
        ...  # rest of the constructor omitted
```
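The `num_classes: int = 1000` in that signature is only a default argument. A more likely cause is that `nn.Linear` allocates its weight as a tensor of shape `(out_features, in_features)` when it is constructed, and `forward` uses that tensor. Assigning `out_features` afterwards changes what `print` shows but not the weight. The sketch below uses a stand-in for the classifier head rather than the real model (assumption: index 2 is the final `nn.Linear(768, 1000)`, as printed in this report, and index 0 is an `nn.Identity` placeholder for the real normalization layer). It compares editing the attribute with replacing the layer.

```python
import torch
from torch import nn

num_classes = 2

# Stand-in for ConvNeXt-Tiny's classifier head (assumption, not the real module):
# index 0 replaces the real normalization layer, index 2 is the final Linear(768, 1000).
classifier = nn.Sequential(nn.Identity(), nn.Flatten(1), nn.Linear(768, 1000))
features = torch.randn(42, 768, 1, 1)  # a batch of 42 pooled feature maps

# Editing the attribute only changes the repr; the weight is still (1000, 768).
classifier[2].out_features = num_classes
out_after_attr_edit = classifier(features)
print(classifier[2].weight.shape)  # torch.Size([1000, 768])
print(out_after_attr_edit.shape)   # torch.Size([42, 1000])

# Replacing the layer allocates a fresh (num_classes, 768) weight.
classifier[2] = nn.Linear(classifier[2].in_features, num_classes)
out_after_replace = classifier(features)
print(out_after_replace.shape)     # torch.Size([42, 2])
```

On the real model, the equivalent line would be `model_ft.classifier[2] = nn.Linear(model_ft.classifier[2].in_features, num_classes)`. This keeps the pretrained backbone and trains a newly initialized 2-way head.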

### Versions

PyTorch version: 1.13.0.dev20220624

Python 3.8

cc @datumbox

|

https://github.com/pytorch/vision/issues/6239

|

closed

|

[

"question",

"module: models"

] | 2022-07-05T17:47:40Z

| 2022-07-06T08:13:15Z

| null |

jrsykes

|

pytorch/vision

| 6,235

|

Creating a `cache-dataset` for Video classification.

|

Hello, I am trying to test the R(2+1)D video classification model on Kinetics400, but data loading is very slow. I believe caching the data could speed up loading, and caching is also mentioned in the reference code, but I am not sure how to cache video files. How can I cache them? Is a feature for creating a cached dataset planned for a future update?

Thank you!

cc @datumbox
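As far as I recall, the `--cache-dataset` option in the torchvision video-classification reference script saves the constructed dataset object (including its precomputed clip index) to a file named after a hash of the data directory, then reloads it on later runs. That skips the slow initial scan of every video. It does not cache decoded frames, so per-batch decoding speed stays the same. Below is a minimal sketch of the same idea. The helper names `cache_path_for` and `load_or_build` are hypothetical, and it uses `pickle` where the reference script uses `torch.save`.

```python
import hashlib
import os
import pickle
from typing import Any, Callable


def cache_path_for(data_dir: str, cache_root: str) -> str:
    """Derive a stable cache file name from the dataset directory path."""
    digest = hashlib.sha1(data_dir.encode()).hexdigest()[:10]
    return os.path.join(cache_root, digest + ".pkl")


def load_or_build(data_dir: str, cache_root: str, build_fn: Callable[[str], Any]) -> Any:
    """Return the cached dataset object if one exists, else build it and cache it."""
    path = cache_path_for(data_dir, cache_root)
    if os.path.exists(path):
        with open(path, "rb") as f:
            return pickle.load(f)
    dataset = build_fn(data_dir)  # the expensive step, e.g. scanning every video for clips
    os.makedirs(cache_root, exist_ok=True)
    with open(path, "wb") as f:
        pickle.dump(dataset, f)
    return dataset
```

With torchvision, `build_fn` would construct the Kinetics dataset. Because the object is pickled along with its transform, transforms that cannot be pickled (such as lambdas) will fail, and you may want to reassign the transform after loading from the cache.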

|

https://github.com/pytorch/vision/issues/6235

|

closed

|

[

"question",

"module: reference scripts",

"module: video"

] | 2022-07-05T04:27:54Z

| 2022-07-05T08:28:20Z

| null |

yakhyo

|

huggingface/datasets

| 4,621

|

ImageFolder raises an error with parameters drop_metadata=True and drop_labels=False when metadata.jsonl is present

|

## Describe the bug

If you pass `drop_metadata=True` and `drop_labels=False` when `data_dir` contains at least one `metadata.jsonl` file, you get a `KeyError`. This is probably not a very useful combination, but it still shouldn't raise an error. Asking users to manually move metadata files out of `data_dir`, or to pass features manually when there is a tool that can infer them automatically, doesn't look like a good idea to me either.

## Steps to reproduce the bug

### Clone an example dataset from the Hub

```bash
git clone https://huggingface.co/datasets/nateraw/test-imagefolder-metadata
```

### Try to load it

```python
from datasets import load_dataset

ds = load_dataset("test-imagefolder-metadata", drop_metadata=True, drop_labels=False)
```

or even just

```python
ds = load_dataset("test-imagefolder-metadata", drop_metadata=True)
```

since `drop_labels=False` is the default value.

## Expected results

A DatasetDict object with two features: `"image"` and `"label"`.

## Actual results

```
Traceback (most recent call last):
  File "/home/polina/workspace/datasets/debug.py", line 18, in <module>
    ds = load_dataset(
  File "/home/polina/workspace/datasets/src/datasets/load.py", line 1732, in load_dataset
    builder_instance.download_and_prepare(
  File "/home/polina/workspace/datasets/src/datasets/builder.py", line 704, in download_and_prepare
    self._download_and_prepare(
  File "/home/polina/workspace/datasets/src/datasets/builder.py", line 1227, in _download_and_prepare
    super()._download_and_prepare(dl_manager, verify_infos, check_duplicate_keys=verify_infos)
  File "/home/polina/workspace/datasets/src/datasets/builder.py", line 793, in _download_and_prepare
    self._prepare_split(split_generator, **prepare_split_kwargs)
  File "/home/polina/workspace/datasets/src/datasets/builder.py", line 1218, in _prepare_split
    example = self.info.features.encode_example(record)
  File "/home/polina/workspace/datasets/src/datasets/features/features.py", line 1596, in encode_example
    return encode_nested_example(self, example)
  File "/home/polina/workspace/datasets/src/datasets/features/features.py", line 1165, in encode_nested_example
    {
  File "/home/polina/workspace/datasets/src/datasets/features/features.py", line 1165, in <dictcomp>
    {
  File "/home/polina/workspace/datasets/src/datasets/utils/py_utils.py", line 249, in zip_dict
    yield key, tuple(d[key] for d in dicts)
  File "/home/polina/workspace/datasets/src/datasets/utils/py_utils.py", line 249, in <genexpr>
    yield key, tuple(d[key] for d in dicts)
KeyError: 'label'
```
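The last frames of the traceback are in `zip_dict`, which walks several dicts in lockstep by key. The simplified stand-in below (written for illustration, not the library's exact code) shows why this surfaces as `KeyError: 'label'`. The encoder pairs the feature schema with each example by iterating over the union of their keys, so if `'label'` is present in one dict and missing from the other, the lookup fails. The traceback alone does not show which side lacks `'label'`. The demo assumes, for illustration only, that the schema has it and the record does not, and its dict values are placeholders.

```python
import itertools
from typing import Any, Dict, Iterator, Tuple


def zip_dict(*dicts: Dict[str, Any]) -> Iterator[Tuple[str, Tuple[Any, ...]]]:
    """Group values from several dicts by key; raises KeyError if any dict lacks a key."""
    seen = set()
    for key in itertools.chain(*dicts):  # union of keys, in first-seen order
        if key in seen:
            continue
        seen.add(key)
        yield key, tuple(d[key] for d in dicts)


# Placeholder schema and record (hypothetical values, for illustration only).
features = {"image": "Image()", "label": "ClassLabel(...)"}
record = {"image": "cat.png"}

try:
    dict(zip_dict(features, record))
except KeyError as err:
    print("KeyError:", err)  # KeyError: 'label'
```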

## Environment info

`datasets` master branch

- `datasets` version: 2.3.3.dev0

- Platform: Linux-5.14.0-1042-oem-x86_64-with-glibc2.17