| repo | number | title | body | url | state | labels | created_at | updated_at | comments | user |

|---|---|---|---|---|---|---|---|---|---|---|

pytorch/pytorch

| 93,516

|

[Question] How to debug "munmap_chunk(): invalid pointer" when compiling to triton?

|

I'm trying to use torchdynamo to compile a function to Triton. My logs indicate that the function optimizes without issue, but when I run the function on a given input, I just get "munmap_chunk(): invalid pointer" without a stack trace or any other useful debugging information.

I'm wondering how to go about debugging such an error. Are any developers familiar with what this indicates?

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh
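One low-effort first step, sketched below under the assumption that the crash happens inside the Python process running the compiled function: glibc prints `munmap_chunk(): invalid pointer` right before calling `abort()`, so the process dies from `SIGABRT`, and the standard-library `faulthandler` module can dump the Python traceback of every thread when that happens. It does not show native (C++/CUDA) frames; running under `gdb` is the usual next step for those. `enable_crash_tracebacks` is an illustrative helper, and `run_compiled_function` is a hypothetical placeholder for the crashing call.

```python
import faulthandler
import sys


def enable_crash_tracebacks() -> bool:
    """Dump the Python traceback of all threads on a fatal signal.

    faulthandler handles SIGSEGV, SIGFPE, SIGABRT, SIGBUS and SIGILL, so an
    abort() triggered by glibc's heap checks should print which Python line
    was executing when the process died.
    """
    try:
        faulthandler.enable(file=sys.stderr, all_threads=True)
    except (AttributeError, ValueError, OSError):
        # sys.stderr may have been replaced (e.g. by a test runner) with an
        # object that has no real file descriptor; use the original stream.
        faulthandler.enable(file=sys.__stderr__, all_threads=True)
    return faulthandler.is_enabled()


if __name__ == "__main__":
    enable_crash_tracebacks()
    # run_compiled_function()  # hypothetical placeholder for the crashing call
```

The same effect is available without code changes via `python -X faulthandler script.py` or by setting `PYTHONFAULTHANDLER=1`.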

|

https://github.com/pytorch/pytorch/issues/93516

|

closed

|

[

"oncall: pt2"

] | 2023-01-22T03:36:25Z

| 2023-02-01T14:19:30Z

| null |

vedantroy

|

pytorch/TensorRT

| 1,600

|

❓ [Question] How do you compile for Jetson 5.0?

|

## ❓ Question

Hi, since there seems to be no prebuilt Python binary, is there any way to install this package on Jetson 5.0?

## What you have already tried

I tried the normal installation for Jetson 4.6, which fails. I also tried the steps in https://forums.developer.nvidia.com/t/installing-building-torch-tensorrt-for-jetpack-5-0-1-dp-l4t-ml-r34-1-1-py3/220565/6, which give me this error:

```
user@ubuntu:/mnt/Data/home/ParentCode/TensorRT$ bazel build //:libtorchtrt --platforms //toolchains:jetpack_5.0
Starting local Bazel server and connecting to it...
INFO: Analyzed target //:libtorchtrt (71 packages loaded, 9773 targets configured).
INFO: Found 1 target...
ERROR: /mnt/Data/home/ParentCode/TensorRT/cpp/lib/BUILD:5:10: Linking cpp/lib/libtorchtrt_plugins.so failed: (Exit 1): gcc failed: error executing command /usr/bin/gcc @bazel-out/aarch64-fastbuild/bin/cpp/lib/libtorchtrt_plugins.so-2.params
Use --sandbox_debug to see verbose messages from the sandbox and retain the sandbox build root for debugging
/usr/bin/ld.gold: warning: skipping incompatible bazel-out/aarch64-fastbuild/bin/_solib_aarch64/_U@libtorch_S_S_Ctorch___Ulib/libtorch.so while searching for torch
/usr/bin/ld.gold: error: cannot find -ltorch
/usr/bin/ld.gold: warning: skipping incompatible bazel-out/aarch64-fastbuild/bin/_solib_aarch64/_U@libtorch_S_S_Ctorch___Ulib/libtorch_cuda.so while searching for torch_cuda
/usr/bin/ld.gold: error: cannot find -ltorch_cuda
/usr/bin/ld.gold: warning: skipping incompatible bazel-out/aarch64-fastbuild/bin/_solib_aarch64/_U@libtorch_S_S_Ctorch___Ulib/libtorch_cpu.so while searching for torch_cpu
/usr/bin/ld.gold: error: cannot find -ltorch_cpu
/usr/bin/ld.gold: warning: skipping incompatible bazel-out/aarch64-fastbuild/bin/_solib_aarch64/_U@libtorch_S_S_Ctorch___Ulib/libtorch_global_deps.so while searching for torch_global_deps
/usr/bin/ld.gold: error: cannot find -ltorch_global_deps
/usr/bin/ld.gold: warning: skipping incompatible bazel-out/aarch64-fastbuild/bin/_solib_aarch64/_U@libtorch_S_S_Cc10_Ucuda___Ulib/libc10_cuda.so while searching for c10_cuda
/usr/bin/ld.gold: error: cannot find -lc10_cuda
/usr/bin/ld.gold: warning: skipping incompatible bazel-out/aarch64-fastbuild/bin/_solib_aarch64/_U@libtorch_S_S_Cc10___Ulib/libc10.so while searching for c10
/usr/bin/ld.gold: error: cannot find -lc10
collect2: error: ld returned 1 exit status
Target //:libtorchtrt failed to build
Use --verbose_failures to see the command lines of failed build steps.
INFO: Elapsed time: 19.738s, Critical Path: 6.94s
INFO: 12 processes: 10 internal, 2 linux-sandbox.
FAILED: Build did NOT complete successfully
```
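The `skipping incompatible ... while searching for torch` warnings mean the linker found the libraries but rejected their binary format, which usually points to an architecture mismatch (for example x86_64 libtorch binaries on an aarch64 Jetson). A minimal sketch for checking that, relying only on the ELF header layout: it reads the `e_machine` field of a shared library. `parse_elf_machine` and `elf_machine` are illustrative helpers, not part of Torch-TensorRT or Bazel.

```python
import struct

# A few e_machine values from the ELF specification.
EM_NAMES = {3: "x86", 40: "arm", 62: "x86_64", 183: "aarch64"}


def parse_elf_machine(header: bytes) -> str:
    """Return the architecture recorded in the first 20 bytes of an ELF file."""
    if len(header) < 20 or header[:4] != b"\x7fELF":
        raise ValueError("not an ELF header")
    # EI_DATA (byte 5): 1 = little-endian, 2 = big-endian.
    endian = "<" if header[5] == 1 else ">"
    # e_machine is a 16-bit field at offset 18 in both 32- and 64-bit ELF.
    (machine,) = struct.unpack_from(endian + "H", header, 18)
    return EM_NAMES.get(machine, f"unknown ({machine})")


def elf_machine(path: str) -> str:
    """Read the ELF header of `path` and report its target architecture."""
    with open(path, "rb") as f:
        return parse_elf_machine(f.read(20))
```

Running `elf_machine()` on the `libtorch.so` that the log says was skipped should report `aarch64` on a Jetson; anything else (such as `x86_64`) suggests the Bazel workspace points at the wrong libtorch. The shell command `file <path>` reports the same information.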

## Environment

> Build information about Torch-TensorRT can be found by turning on debug messages

- PyTorch Version: 1.13.0a0+d0d6b1f2.nv22.10

- CPU Architecture: aarch64

- OS (e.g., Linux): Linux, NVIDIA's version of Ubuntu 20.04 for Jetson

- Python version: Python 3.8.10

- CUDA version: 11.4 (nvcc reports: Cuda compilation tools, release 11.4, V11.4.239; Build cuda_11.4.r11.4/compiler.31294910_0; built on Wed_May__4_00:02:26_PDT_2022)

|

https://github.com/pytorch/TensorRT/issues/1600

|

closed

|

[

"question",

"No Activity",

"channel: linux-jetpack"

] | 2023-01-20T17:08:42Z

| 2023-09-15T11:33:27Z

| null |

arnaghizadeh

|

huggingface/setfit

| 282

|

Loading a trained SetFit model without setfit?

|

SetFit team, first off, thanks for the awesome library!

I'm running into trouble trying to load and run inference on a trained SetFit model without using `SetFitModel.from_pretrained()`. Instead, I'd like to load the model using torch, transformers, sentence_transformers, or some combination thereof. Is there a clear-cut example anywhere of how to do this?

Here's my current code, which does not return clean predictions. Thank you in advance for the help. For reference, this was trained as a multiclass classification model with 18 potential classes:

```python
from sentence_transformers import SentenceTransformer
from transformers import AutoTokenizer, AutoModel
import torch
import torch.nn.functional as F

tokenizer = AutoTokenizer.from_pretrained('sentence-transformers/all-MiniLM-L6-v2')
inputs = ['xxx', 'yyy', 'zzz']
encoded_inputs = tokenizer(inputs,
                           padding=True,
                           truncation=True,
                           return_tensors='pt')
model = AutoModel.from_pretrained('/path/to/trained/setfit/model/')
with torch.no_grad():
    preds = model(**encoded_inputs)
preds
```

|

https://github.com/huggingface/setfit/issues/282

|

closed

|

[

"question"

] | 2023-01-20T01:08:00Z

| 2024-05-21T08:11:08Z

| null |

ZQ-Dev8

|

huggingface/datasets

| 5,442

|

OneDrive Integrations with HF Datasets

|

### Feature request

First of all, I would like to thank the whole community that developed the dataset storage and made it freely available.

How can we integrate a OneDrive account, or other cloud storage services (like Google Drive), with the **HF** datasets section?

For example, if I have **50GB** on my **OneDrive** account, I want to move data between the drive and a Hugging Face repo, or vice versa.

### Motivation

Make the dataset section more flexible by supporting other storage providers, similar to the integration between Google Colab and Google Drive storage.

### Your contribution

This could be done using the Hugging Face CLI.

|

https://github.com/huggingface/datasets/issues/5442

|

closed

|

[

"enhancement"

] | 2023-01-19T23:12:08Z

| 2023-02-24T16:17:51Z

| 2

|

Mohammed20201991

|

pytorch/xla

| 4,482

|

How to save checkpoints in XLA

|

Hello

I have training scripts running on CPUs and GPUs without error.

I am trying to make the scripts compatible with TPUs.

I was using the following lines to save checkpoints:

```
torch.save(checkpoint, path_checkpoints_file)
```

and the following lines to load them:

```
checkpoint = torch.load(path_checkpoints_file, map_location=torch.device('cpu'))
lastEpoch = checkpoint['lastEpoch']
activeChunk = checkpoint['activeChunk']
chunk_count = checkpoint['chunk_count']
model.load_state_dict(checkpoint['model_state_dict'])
model.to(device)
optimizer.load_state_dict(checkpoint['optimizer_state_dict'])
lr_scheduler.load_state_dict(checkpoint['lr_scheduler_state_dict'])
```

For TPUs, I replaced the saving operation with:

```
xm.save(checkpoint, path_checkpoints_file)
```

and the loading part with:

```
checkpoint = xser.load(path_checkpoints_file)
lastEpoch = checkpoint['lastEpoch']
activeChunk = checkpoint['activeChunk']
chunk_count = checkpoint['chunk_count']
model.load_state_dict(checkpoint['model_state_dict'])
model.to(device)
optimizer.load_state_dict(checkpoint['optimizer_state_dict'])
lr_scheduler.load_state_dict(checkpoint['lr_scheduler_state_dict'])
```

But during training, the loss remains almost constant.

Is there a template for saving and loading checkpoints that contain models, optimizers, and learning-rate schedulers?

Best regards

|

https://github.com/pytorch/xla/issues/4482

|

open

|

[] | 2023-01-19T21:50:05Z

| 2023-02-15T22:58:13Z

| null |

mfatih7

|

pytorch/functorch

| 1,106

|

Vmap and backward hook problem

|

I am trying to get the gradient of an intermediate layer of the model, so I use a backward hook together with `functorch.grad` to get the gradient for each image. When I used a for loop to iterate over the images, I successfully obtained 5000 gradients (the dataset size). However, when I use `vmap` to do the same thing, I only get 40 gradients (40 batches in one epoch). Is there any way to solve this, or do I have to use a for loop?

|

https://github.com/pytorch/functorch/issues/1106

|

open

|

[] | 2023-01-19T21:25:02Z

| 2023-01-23T05:08:49Z

| 1

|

pmzzs

|

pytorch/tutorials

| 2,175

|

OSError: Missing: valgrind, callgrind_control, callgrind_annotate

|

The error occurs at the following step of the tutorial, "8. Collecting instruction counts with Callgrind":

    Traceback (most recent call last):
      File "benchmark.py", line 805, in <module>
        stats_v0 = t0.collect_callgrind()
      File "/usr/local/lib/python3.8/dist-packages/torch/utils/benchmark/utils/timer.py", line 486, in collect_callgrind
        result = valgrind_timer_interface.wrapper_singleton().collect_callgrind(
      File "/usr/local/lib/python3.8/dist-packages/torch/utils/benchmark/utils/valgrind_wrapper/timer_interface.py", line 526, in collect_callgrind
        self._validate()
      File "/usr/local/lib/python3.8/dist-packages/torch/utils/benchmark/utils/valgrind_wrapper/timer_interface.py", line 512, in _validate
        raise OSError("Missing: " + ", ".join(missing_cmds))
    OSError: Missing: valgrind, callgrind_control, callgrind_annotate
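The traceback shows the benchmark utilities refusing to run because three executables are missing. A rough stand-in for that check (an approximation for illustration, not PyTorch's `_validate` code) is to look each tool up on `PATH` before calling `collect_callgrind()`; on Debian/Ubuntu images all three usually come from the `valgrind` package. `missing_tools` is an illustrative helper name.

```python
import shutil
from typing import Iterable, List

# The executables named in the OSError above.
CALLGRIND_TOOLS = ("valgrind", "callgrind_control", "callgrind_annotate")


def missing_tools(tools: Iterable[str] = CALLGRIND_TOOLS) -> List[str]:
    """Return the tools that cannot be found on PATH, in the given order."""
    return [name for name in tools if shutil.which(name) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Install these before running collect_callgrind():", ", ".join(missing))
    else:
        print("All Callgrind tools found.")
```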

|

https://github.com/pytorch/tutorials/issues/2175

|

open

|

[

"question"

] | 2023-01-19T16:00:52Z

| 2023-01-24T10:47:08Z

| null |

ghost

|

pytorch/data

| 949

|

`torchdata` not available through `pytorch-nightly` conda channel

|

### 🐛 Describe the bug

The nightly version of torchdata does not seem available through the corresponding conda channel.

**Command:**

```
$ conda install torchdata -c pytorch-nightly --override-channels
```

**Result:**

```
Collecting package metadata (current_repodata.json): done
Solving environment: failed with initial frozen solve. Retrying with flexible solve.
Collecting package metadata (repodata.json): done
Solving environment: failed with initial frozen solve. Retrying with flexible solve.

PackagesNotFoundError: The following packages are not available from current channels:

  - torchdata

Current channels:

  - https://conda.anaconda.org/pytorch-nightly/osx-arm64
  - https://conda.anaconda.org/pytorch-nightly/noarch

To search for alternate channels that may provide the conda package you're
looking for, navigate to

    https://anaconda.org

and use the search bar at the top of the page.
```

### Versions

```
PyTorch version: N/A
Is debug build: N/A
CUDA used to build PyTorch: N/A
ROCM used to build PyTorch: N/A

OS: macOS 13.1 (arm64)
GCC version: Could not collect
Clang version: Could not collect
CMake version: version 3.22.4
Libc version: N/A

Python version: 3.10.8 | packaged by conda-forge | (main, Nov 22 2022, 08:25:29) [Clang 14.0.6 ] (64-bit runtime)
Python platform: macOS-13.1-arm64-arm-64bit
Is CUDA available: N/A
CUDA runtime version: Could not collect
CUDA_MODULE_LOADING set to: N/A
GPU models and configuration: Could not collect
Nvidia driver version: Could not collect
cuDNN version: Could not collect
HIP runtime version: N/A
MIOpen runtime version: N/A
Is XNNPACK available: N/A

Versions of relevant libraries:
[pip3] numpy==1.23.5
[conda] numpy 1.23.5 py310h5d7c261_0 conda-forge
```

|

https://github.com/meta-pytorch/data/issues/949

|

closed

|

[

"high priority"

] | 2023-01-18T15:49:29Z

| 2023-01-18T17:11:32Z

| 4

|

PierreGtch

|

pytorch/tutorials

| 2,173

|

Area calculation in TorchVision Object Detection Finetuning Tutorial

|

I see that in https://pytorch.org/tutorials/intermediate/torchvision_tutorial.html the area is computed as `area = (boxes[:, 3] - boxes[:, 1]) * (boxes[:, 2] - boxes[:, 0])`, but shouldn't it be something like `area = ((boxes[:, 3] - boxes[:, 1]) + 1) * ((boxes[:, 2] - boxes[:, 0]) + 1)`? If I am wrong, can someone explain why? Thanks in advance!
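Which formula is right depends on what the coordinates mean. If `x1..x2` are continuous corner positions (the convention `torchvision.ops.box_area` uses, with no `+ 1`), the width is just `x2 - x1`; if they are integer indices of pixels that both belong to the box (the older Pascal VOC-style convention), each side spans one extra pixel. A minimal plain-Python sketch of the two conventions; `box_area` and `pixel_inclusive` are illustrative names, not a torchvision API:

```python
from typing import Sequence


def box_area(box: Sequence[float], pixel_inclusive: bool = False) -> float:
    """Area of an (x1, y1, x2, y2) box.

    pixel_inclusive=False: coordinates are continuous corners, width = x2 - x1.
    pixel_inclusive=True: x1..x2 and y1..y2 are inclusive pixel indices,
    so each side covers (x2 - x1 + 1) pixels.
    """
    x1, y1, x2, y2 = box
    offset = 1 if pixel_inclusive else 0
    return (x2 - x1 + offset) * (y2 - y1 + offset)


# The same 10x10-pixel patch described in both conventions:
print(box_area((0, 0, 10, 10)))                      # corners -> 100
print(box_area((0, 0, 9, 9), pixel_inclusive=True))  # inclusive indices -> 100
```

Mixing the conventions (adding `+ 1` to continuous coordinates) inflates small boxes the most: a 2x2 box would be counted as 9.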

cc @datumbox @nairbv

|

https://github.com/pytorch/tutorials/issues/2173

|

closed

|

[

"module: vision"

] | 2023-01-18T08:55:55Z

| 2023-02-15T16:55:24Z

| 1

|

Himanshunitrr

|

pytorch/torchx

| 684

|

Docker workspace: How to specify "latest" (nightly) base image?

|

## ❓ Questions and Help

For my docker workspace (e.g. scheduler == "local_docker" or "aws_batch"), I'd like to use a base image that is published on a nightly cadence. So I have this `Dockerfile.torchx`

```
# Dockerfile.torchx
ARG IMAGE
FROM $IMAGE
# an ARG declared before FROM is not visible after FROM, so redeclare it here
ARG WORKSPACE
WORKDIR /workspace/mfive
COPY $WORKSPACE .
# installs my workspace (has setup.py)
RUN pip install -e .[dev]
```

In my `.torchxconfig` I've specified the latest default image as:

```
# .torchxconfig
[dist.ddp]
image = registry.gitlab.aws.dev/mfive/mfive-nightly:latest
```

The nightly build tags the nightly image as `latest` in addition to `YYYY.MM.DD` (e.g. `mfive-nightly:2023.01.15`), but because [`DockerWorkspace`'s build argument has `pull=False`](https://github.com/pytorch/torchx/blob/main/torchx/workspace/docker_workspace.py#L126), this doesn't work: the locally cached `latest` image is used instead of the newest one.

Is there a better way for me to specify a "use-the-latest" base image from the repo policy when building a docker workspace?
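One possible workaround, sketched under the assumption that the dated tags described above (`mfive-nightly:YYYY.MM.DD`) are always published: reference the dated tag instead of `latest`, so each day's image has a new name that a stale local cache cannot satisfy. `nightly_image` is a hypothetical helper; how the resulting string is fed to TorchX (for example by regenerating `.torchxconfig` in a nightly job) is left open.

```python
import datetime
from typing import Optional


def nightly_image(repo: str, day: Optional[datetime.date] = None) -> str:
    """Build a `repo:YYYY.MM.DD` image reference, defaulting to today (UTC)."""
    if day is None:
        day = datetime.datetime.now(datetime.timezone.utc).date()
    return f"{repo}:{day:%Y.%m.%d}"


if __name__ == "__main__":
    print(nightly_image("registry.gitlab.aws.dev/mfive/mfive-nightly"))
```

Using the same timezone as the nightly job keeps the tag aligned with what the build pushed; falling back to the previous day's tag covers the window before today's build finishes.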

cc @d4l3k

|

https://github.com/meta-pytorch/torchx/issues/684

|

closed

|

[] | 2023-01-17T22:03:19Z

| 2023-03-17T22:06:25Z

| 3

|

kiukchung

|

pytorch/PiPPy

| 723

|

How to reduce memory costs when running on CPU

|

I am running HF_inference.py on my CPU and it works well: pipeline parallelism is applied successfully on CPU. However, when I apply pipeline parallelism, I found that each rank loads the whole model, which seems unnecessary since each rank only executes part of the model. There must be some way to address this, and I would love to help solve it. It would be great if the developers of TAU could give me some advice; we can discuss it further if you have any ideas. Thanks!

|

https://github.com/pytorch/PiPPy/issues/723

|

closed

|

[] | 2023-01-17T07:54:31Z

| 2025-06-10T02:40:11Z

| null |

jiqing-feng

|

huggingface/diffusers

| 2,012

|

Reduce Imagic Pipeline Memory Consumption

|

I'm running the [Imagic Stable Diffusion community pipeline](https://github.com/huggingface/diffusers/blob/main/examples/community/imagic_stable_diffusion.py) and it routinely allocates 25-38 GiB of GPU VRAM, which seems excessively high.

@MarkRich any ideas on how to reduce memory usage? xformers and attention slicing bring it down to 20-25 GiB, but fp16 doesn't work, and memory consumption in general still seems excessively high (I'm trying to deploy on serverless GPUs).

|

https://github.com/huggingface/diffusers/issues/2012

|

closed

|

[

"question",

"stale"

] | 2023-01-16T23:43:03Z

| 2023-02-24T15:03:35Z

| null |

andreemic

|

huggingface/optimum

| 697

|

Custom model output

|

### System Info

```shell
Copy-and-paste the text below in your GitHub issue:
- `optimum` version: 1.6.1
- `transformers` version: 4.25.1
- Platform: Linux-5.19.0-29-generic-x86_64-with-glibc2.36
- Python version: 3.10.8
- Huggingface_hub version: 0.11.1
- PyTorch version (GPU?): 1.13.1 (cuda availabe: True)
```

### Who can help?

@michaelbenayoun @lewtun @fxm

### Information

- [ ] The official example scripts

- [ ] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

no code

### Expected behavior

Sorry in advance if this already exists, but I didn't find any docs on it.

In transformers, it is possible to customize a model's outputs by passing boolean input arguments such as `output_attentions` and `output_hidden_states`. How can I make them available in my exported ONNX model?

If it is not possible yet, I will turn this thread into a feature request :)

Thanks in advance.

|

https://github.com/huggingface/optimum/issues/697

|

open

|

[

"bug"

] | 2023-01-16T14:08:12Z

| 2023-04-11T12:30:04Z

| 3

|

jplu

|

huggingface/datasets

| 5,424

|

When applying `ReadInstruction` to custom load it's not DatasetDict but list of Dataset?

|

### Describe the bug

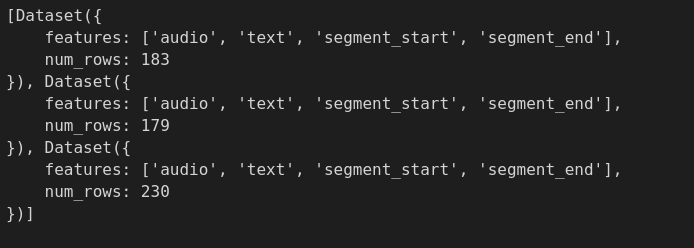

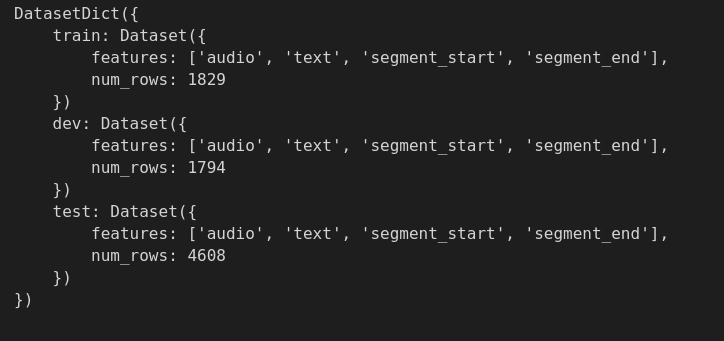

I am loading datasets from custom `tsv` files stored locally and applying a split instruction to each split. The ReadInstruction is applied correctly, but I expected the result to be a `DatasetDict`; instead it is a list of `Dataset` objects.

### Steps to reproduce the bug

Steps to reproduce the behaviour:

1. Import

`from datasets import load_dataset, ReadInstruction`

2. Instruction to load the dataset

```
instructions = [
    ReadInstruction(split_name="train", from_=0, to=10, unit='%', rounding='closest'),
    ReadInstruction(split_name="dev", from_=0, to=10, unit='%', rounding='closest'),
    ReadInstruction(split_name="test", from_=0, to=5, unit='%', rounding='closest')
]
```

3. Load

`dataset = load_dataset('csv', data_dir="data/", data_files={"train":"train.tsv", "dev":"dev.tsv", "test":"test.tsv"}, delimiter="\t", split=instructions)`

### Expected behavior

**Current behaviour:**

**Expected behaviour:**

### Environment info

- `datasets==2.8.0`
- `Python==3.8.5`
- Platform: Ubuntu 20.04.4 LTS

|

https://github.com/huggingface/datasets/issues/5424

|

closed

|

[] | 2023-01-16T06:54:28Z

| 2023-02-24T16:19:00Z

| 1

|

macabdul9

|

pytorch/pytorch

| 92,202

|

Generator: when I want to use a new backend, how to create a Generator with the new backend?

|

### 🐛 Describe the bug

I want to add a new backend, so I added my backend by following this example: https://github.com/bdhirsh/pytorch_open_registration_example

But how do I create a Generator with my new backend?

The code related to `Generator` is in https://github.com/pytorch/pytorch/blob/master/torch/csrc/Generator.cpp:

    static PyObject* THPGenerator_pynew(
        PyTypeObject* type,
        PyObject* args,
        PyObject* kwargs) {
      HANDLE_TH_ERRORS
      static torch::PythonArgParser parser({"Generator(Device device=None)"});
      torch::ParsedArgs<1> parsed_args;
      auto r = parser.parse(args, kwargs, parsed_args);
      auto device = r.deviceWithDefault(0, at::Device(at::kCPU));
      THPGeneratorPtr self((THPGenerator*)type->tp_alloc(type, 0));
      if (device.type() == at::kCPU) {
        self->cdata = make_generator<CPUGeneratorImpl>();
      }
    #ifdef USE_CUDA
      else if (device.type() == at::kCUDA) {
        self->cdata = make_generator<CUDAGeneratorImpl>(device.index());
      }
    #elif USE_MPS
      else if (device.type() == at::kMPS) {
        self->cdata = make_generator<MPSGeneratorImpl>();
      }
    #endif
      else {
        AT_ERROR(
            "Device type ",
            c10::DeviceTypeName(device.type()),
            " is not supported for torch.Generator() api.");
      }
      return (PyObject*)self.release();
      END_HANDLE_TH_ERRORS
    }

So how can I create a Generator for my new backend, named "privateuseone"?

### Versions

- Backend: new backend
- Python: 3.7.5
- PyTorch: 2.0.0
- CUDA: None

|

https://github.com/pytorch/pytorch/issues/92202

|

closed

|

[

"triaged",

"module: backend"

] | 2023-01-14T08:11:04Z

| 2023-10-28T15:02:10Z

| null |

heidongxianhua

|

pytorch/TensorRT

| 1,592

|

❓ [Question] How should recompilation in Torch Dynamo + `fx2trt` be handled?

|

## ❓ Question

Given that Torch Dynamo compiles models lazily, how should benchmarking/usage of Torch Dynamo models, especially in cases where the inputs have a dynamic batch dimension, be handled?

## What you have already tried

Based on compiling and running inference using `fx2trt` with Torch Dynamo on the [BERT base-uncased model](https://huggingface.co/bert-base-uncased), and other similar networks, it seems that Torch Dynamo recompiles the model for each different batch-size input provided. Additionally, once the object has encountered a particular batch size, it does not recompile the model from scratch upon seeing another input of the same shape. It seems that Dynamo may be caching statically-shaped model compilations and dynamically selecting among these at inference time. The code used to generate the dynamo model and outputs is:

```python
dynamo_model = torchdynamo.optimize("fx2trt")(model)
output = dynamo_model(input)
```

While Dynamo does have a flag which allows users to specify dynamic shape prior to compilation (`torchdynamo.config.dynamic_shapes=True`), for BERT this seems to break compilation with `fx2trt`.

Recompiling the model for each new batch size makes benchmarking and general usage challenging: each time the model encounters an input of a new shape, inference takes much longer than it otherwise would.
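The behaviour described above (one compilation per distinct batch size, reused on later calls with the same shape) can be modelled with a shape-keyed cache. This is a toy illustration, not Dynamo's actual guard mechanism; `PerBatchSizeCache` and `compile_for` are hypothetical names. It also shows why a benchmark should warm up every batch size it plans to time before starting the clock.

```python
from typing import Callable, Dict, List, Sequence

Model = Callable[[Sequence[float]], List[float]]


class PerBatchSizeCache:
    """Toy model of lazy, shape-specialised compilation."""

    def __init__(self, compile_for: Callable[[int], Model]):
        self.compile_for = compile_for        # builds a model for one batch size
        self.compiled: Dict[int, Model] = {}  # batch size -> compiled model
        self.compilations = 0

    def __call__(self, batch: Sequence[float]) -> List[float]:
        size = len(batch)
        if size not in self.compiled:         # first time this shape is seen
            self.compilations += 1
            self.compiled[size] = self.compile_for(size)
        return self.compiled[size](batch)


def compile_for(batch_size: int) -> Model:
    """Stand-in for an expensive compile step specialised to one batch size."""
    def run(batch: Sequence[float]) -> List[float]:
        assert len(batch) == batch_size
        return [2 * x for x in batch]
    return run


model = PerBatchSizeCache(compile_for)
for batch in ([1.0], [1.0] * 8, [2.0], [3.0] * 8):
    model(batch)
print(model.compilations)  # 2: one for batch size 1, one for batch size 8
```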

## Environment

- PyTorch Version (e.g., 1.0): 1.14.0.dev20221114+cu116

- Torch-TRT Version: dc570e47

- How you installed PyTorch (`conda`, `pip`, `libtorch`, source): pip

- Python version: 3.8

- CUDA version: 11.6

## Additional context

Dynamo provides many benefits when used in conjunction with fx2trt, as it enables accelerated inference even when the graph might not normally be traceable due to control flow constraints. It would be beneficial to understand the dynamic batch/recompilation issue so Dynamo can be more effectively integrated into benchmarking for Torch-TRT.

Question #1569 could be relevant to this issue as it also relates to Dynamic Batch + FX.

|

https://github.com/pytorch/TensorRT/issues/1592

|

closed

|

[

"question",

"No Activity",

"component: fx"

] | 2023-01-14T02:18:53Z

| 2023-05-09T00:02:14Z

| null |

gs-olive

|

pytorch/functorch

| 1,101

|

How to get only the last few layers' gradient?

|

```
from functorch import make_functional_with_buffers, vmap, grad

fmodel, params, buffers = make_functional_with_buffers(net, disable_autograd_tracking=True)

def compute_loss_stateless_model(params, buffers, sample, target):
    batch = sample.unsqueeze(0)
    targets = target.unsqueeze(0)
    predictions = fmodel(params, buffers, batch)
    loss = criterion(predictions, targets)
    return loss

ft_compute_grad = grad(compute_loss_stateless_model)
gradinet = ft_compute_grad(params, buffers, train_poi_set[0][0].cuda(), torch.tensor(train_poi_set[0][1]).cuda())
```

This returns the gradient for the whole model. However, I only want the gradient of the second-to-last layer, like:

```
gradinet = ft_compute_grad(params, buffers, train_poi_set[0][0].cuda(), torch.tensor(train_poi_set[0][1]).cuda())[-2]
```

Although this approach does obtain the required gradient, it causes a lot of unnecessary overhead. Is there any way to turn off `requires_grad` for all the preceding layers? Thanks for your answer!

|

https://github.com/pytorch/functorch/issues/1101

|

open

|

[] | 2023-01-13T21:48:42Z

| 2024-04-05T03:02:41Z

| null |

pmzzs

|

pytorch/functorch

| 1,099

|

Will pmap be supported in functorch?

|

Greetings, I am very grateful that vmap is supported in functorch. Is there any plan to include support for pmap in the future? Thank you. Additionally, what are the ways that I can contribute to the development of this project?

|

https://github.com/pytorch/functorch/issues/1099

|

open

|

[] | 2023-01-11T17:32:48Z

| 2024-06-05T16:32:36Z

| 2

|

shixun404

|

pytorch/TensorRT

| 1,585

|

❓ [Question] How can I make deserialized targets compatible with Torch-TensorRT ABI?

|

## ❓ Question

When I load my compiled model:

`model = torch.jit.load('model.ts')`

**I keep getting the error:**

`RuntimeError: [Error thrown at core/runtime/TRTEngine.cpp:250] Expected serialized_info.size() == SERIALIZATION_LEN to be true but got false`

`Program to be deserialized targets an incompatible Torch-TensorRT ABI`

## What you have already tried

It works when I run the model inside the official [Nvidia PyTorch Release 22.12](https://docs.nvidia.com/deeplearning/frameworks/pytorch-release-notes/rel-22-12.html#rel-22-12) docker image:

```
model = torch.jit.load('model.ts')
model.eval()
```

However, I want to run the model in my normal environment for debugging purposes.

I've installed torch-tensorrt with: pip install torch-tensorrt==1.3.0 --find-links https://github.com/pytorch/TensorRT/releases/expanded_assets/v1.3.0

And I've compiled my model using docker: nvcr.io/nvidia/pytorch:22.12-py3

The [1.3.0 Release](https://github.com/pytorch/TensorRT/releases/tag/v1.3.0) says that it's based on TensorRT 8.5, and the Docker image ships [TensorRT™ 8.5.1](https://docs.nvidia.com/deeplearning/frameworks/pytorch-release-notes/rel-22-12.html#rel-22-12). I've also tried the 22.09 image, which specifies NVIDIA TensorRT™ [8.5.0.12](https://docs.nvidia.com/deeplearning/frameworks/pytorch-release-notes/rel-22-09.html#rel-22-09), but I'm still getting the same error.
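Since the error says the serialized program targets an incompatible Torch-TensorRT ABI, a first check is whether the environment that compiled `model.ts` (the NGC container) and the one loading it report the same package versions. A small standard-library sketch for that comparison; the distribution names listed are assumptions (inside NGC containers Torch-TensorRT may be installed under a different name or without pip metadata, in which case it shows up as `None`):

```python
from importlib import metadata
from typing import Dict, Iterable, Optional

# Distribution names to look up; adjust to what each environment actually has.
PACKAGES = ("torch", "torch-tensorrt", "tensorrt", "nvidia-tensorrt")


def installed_versions(packages: Iterable[str] = PACKAGES) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if absent."""
    versions: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name:>16}: {version}")
```

Running it in both environments and diffing the output shows whether the local `torch-tensorrt==1.3.0` wheel and the container's build line up.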

## Environment

> Build information about Torch-TensorRT can be found by turning on debug messages

- PyTorch Version: 1.13.0

- CPU Architecture: AMD Rome

- OS: Ubuntu 22.04 LTS

- How you installed PyTorch (`conda`, `pip`, `libtorch`, source):
  - `pip install torch==1.13.0+cu117 torchvision==0.14.0+cu117 --extra-index-url https://download.pytorch.org/whl/cu117 https://download.pytorch.org/whl/cu113`
  - `pip install nvidia-pyindex`
  - `pip install nvidia-tensorrt`
  - `pip install torch-tensorrt==1.3.0 --find-links https://github.com/pytorch/TensorRT/releases/expanded_assets/v1.3.0`
  - `import torch_tensorrt`

- Build command you used (if compiling from source):

- Are you using local sources or building from archives:

- Python version: 3.9

- CUDA version: cu117

- GPU models and configuration: Nvidia A6000 (Ampere)

- Any other relevant information:

|

https://github.com/pytorch/TensorRT/issues/1585

|

closed

|

[

"question",

"No Activity",

"component: runtime"

] | 2023-01-11T12:44:58Z

| 2023-05-04T00:02:16Z

| null |

emilwallner

|

pytorch/kineto

| 713

|

How to get the CPU utilization by Pytorch Profiler?

|

According to the code in `gpu_metrics_parser.py` of torch-tb-profiler, my understanding is that GPU utilization is the total duration of `EventTypes.KERNEL` events within a time window divided by the window's length. So is CPU utilization the total duration of `EventTypes.OPERATOR` events within a time window divided by the window's length?

However, the result of this calculation looks abnormal.
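One likely reason the naive sum looks wrong: operator events nest (a high-level op such as `aten::linear` contains the ops it calls) and can run concurrently on several threads, so adding up their durations counts the same wall-clock time more than once and can even exceed 100%. A sketch of a busy-time ratio that merges overlapping intervals first; this is an illustration under that assumption, not how torch-tb-profiler defines CPU utilization, and `busy_fraction` is an illustrative name.

```python
from typing import Iterable, List, Optional, Tuple

Interval = Tuple[float, float]


def busy_fraction(events: Iterable[Interval], start: float, end: float) -> float:
    """Fraction of [start, end) covered by at least one (begin, finish) event."""
    # Clip events to the window and drop those entirely outside it.
    clipped: List[Interval] = sorted(
        (max(b, start), min(f, end)) for b, f in events if f > start and b < end
    )
    busy = 0.0
    cur_begin: Optional[float] = None
    cur_finish: Optional[float] = None
    for b, f in clipped:
        if cur_finish is None or b > cur_finish:
            # Disjoint from the current run: close it and start a new one.
            if cur_finish is not None:
                busy += cur_finish - cur_begin
            cur_begin, cur_finish = b, f
        else:
            # Overlapping or nested: extend the current run.
            cur_finish = max(cur_finish, f)
    if cur_finish is not None:
        busy += cur_finish - cur_begin
    return busy / (end - start)


# A parent op (0-10 us) containing a child op (2-5 us), in a 20 us window:
events = [(0, 10), (2, 5)]
print(sum(f - b for b, f in events) / 20)  # naive sum: 0.65
print(busy_fraction(events, 0, 20))        # merged: 0.5
```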

|

https://github.com/pytorch/kineto/issues/713

|

closed

|

[] | 2023-01-11T09:46:20Z

| 2023-02-15T03:53:40Z

| null |

young-chao

|

pytorch/TensorRT

| 1,580

|

I am deleting some layers of ResNet152 (for example, `del resnet152.fc` and `del resnet152.layer4`) and saving the model locally in order to get 1024-dimensional outputs. Later, when I load this saved model, it complains about the missing `layer4`. What might be the reason? Does it still try to access the original model? How can I get 1024-dimensional feature vectors for a given image using ResNet152?

|

## ❓ Question

<!-- Your question -->

## What you have already tried

<!-- A clear and concise description of what you have already done. -->

## Environment

> Build information about Torch-TensorRT can be found by turning on debug messages

- PyTorch Version (e.g., 1.0):

- CPU Architecture:

- OS (e.g., Linux):

- How you installed PyTorch (`conda`, `pip`, `libtorch`, source):

- Build command you used (if compiling from source):

- Are you using local sources or building from archives:

- Python version:

- CUDA version:

- GPU models and configuration:

- Any other relevant information:

## Additional context

<!-- Add any other context about the problem here. -->
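A likely explanation (hedged, since the saving and loading code isn't shown): `del resnet152.layer4` removes the submodule, but the model's `forward` still calls `self.layer4`, so any later forward pass, or loading the weights into a freshly built ResNet152, fails on the missing layer. Replacing the layer with an identity keeps `forward` intact; in PyTorch that would be `model.layer4 = nn.Identity()` and `model.fc = nn.Identity()`, which should leave the 1024-channel output of `layer3` after average pooling. The plain-Python toy below only mirrors that mechanism; `TinyNet`, `Identity`, and `make_layer` are hypothetical stand-ins, not torchvision classes.

```python
from typing import List


class Identity:
    """Pass-through layer, analogous to torch.nn.Identity."""

    def __call__(self, x: List[str]) -> List[str]:
        return x


def make_layer(name: str):
    """Hypothetical layer that records that it ran."""
    return lambda x: x + [name]


class TinyNet:
    """Toy network whose forward hard-codes its layers, like torchvision's ResNet."""

    def __init__(self) -> None:
        self.layer3 = make_layer("layer3")
        self.layer4 = make_layer("layer4")
        self.fc = make_layer("fc")

    def forward(self, x: List[str]) -> List[str]:
        return self.fc(self.layer4(self.layer3(x)))


deleted = TinyNet()
del deleted.layer4            # forward() still refers to self.layer4
try:
    deleted.forward([])
except AttributeError as err:
    print("fails:", err)

replaced = TinyNet()
replaced.layer4 = Identity()  # keep the attribute, make it a no-op
replaced.fc = Identity()
print(replaced.forward([]))   # ['layer3']
```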

|

https://github.com/pytorch/TensorRT/issues/1580

|

closed

|

[

"question",

"No Activity"

] | 2023-01-09T15:22:23Z

| 2023-04-22T00:02:19Z

| null |

pradeep10kumar

|

pytorch/TensorRT

| 1,579

|

When I delete some layers from Resnet152 for example

|

## ❓ Question

<!-- Your question -->

## What you have already tried

<!-- A clear and concise description of what you have already done. -->

## Environment

> Build information about Torch-TensorRT can be found by turning on debug messages

- PyTorch Version (e.g., 1.0):

- CPU Architecture:

- OS (e.g., Linux):

- How you installed PyTorch (`conda`, `pip`, `libtorch`, source):

- Build command you used (if compiling from source):

- Are you using local sources or building from archives:

- Python version:

- CUDA version:

- GPU models and configuration:

- Any other relevant information:

## Additional context

<!-- Add any other context about the problem here. -->

|

https://github.com/pytorch/TensorRT/issues/1579

|

closed

|

[

"question"

] | 2023-01-09T15:17:50Z

| 2023-01-09T15:18:31Z

| null |

pradeep10kumar

|

pytorch/TensorRT

| 1,578

|

❓ [Question] Failed to compile EfficientNet: Error: Segmentation fault (core dumped)

|

I followed the steps in the demo notebook [EfficientNet-example.ipynb](https://github.com/pytorch/TensorRT/blob/main/notebooks/EfficientNet-example.ipynb).

When I try to compile EfficientNet, an error occurs: `Segmentation fault (core dumped)`

I have traced the error to this call:

`trt_model_fp32 = torch_tensorrt.compile(model, inputs=[torch_tensorrt.Input((1, 3, 512, 512), dtype=torch.float32)], enabled_precisions=torch.float32, workspace_size=1 << 22)`

Full code:

```python
import os
import numpy as np
from PIL import Image
from torchvision import transforms
import sys
import timm
import torch.nn as nn
import torch
import io
import torch.backends.cudnn as cudnn
import torch_tensorrt

SIZE = 512
cudnn.benchmark = True

preprocess_transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Resize((SIZE, SIZE)),
    transforms.Normalize(
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225],
    )])

def preprocess(byteImage):
    image = Image.open(io.BytesIO(byteImage))
    image = Image.fromarray(np.array(image)[:, :, :3])
    return preprocess_transform(image).unsqueeze(0)

class CustomModel(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = timm.create_model('tf_efficientnet_b0_ns', pretrained=False)
        self.n_features = self.model.classifier.in_features
        self.model.classifier = nn.Identity()
        self.fc = nn.Linear(self.n_features, 1)

    def feature(self, image):
        feature = self.model(image)
        return feature

    def forward(self, image):
        feature = self.feature(image)
        output = self.fc(feature)
        return output

def predict(tensorImage):
    tensorImage = tensorImage.to('cuda')
    with torch.no_grad():  # no_grad must be instantiated to act as a context manager
        pred = trt_model_fp32(tensorImage)
    torch.cuda.synchronize()
    return pred.cpu().detach().numpy()

model = CustomModel().to('cuda')
# my_weight_path: path to the trained weights (defined elsewhere)
state_dict = torch.load(my_weight_path, map_location='cuda')
model.load_state_dict(state_dict['model'])
model.eval()

trt_model_fp32 = torch_tensorrt.compile(model, inputs = [torch_tensorrt.Input((1, 3, 512, 512), dtype=torch.float32)],
    enabled_precisions = torch.float32, # Run with FP32
    workspace_size = 1 << 22
)

input_data = torch.randn(1, 3, 512, 512).to('cuda')
pred = predict(input_data)
print(pred.shape)
print(pred)
```

Please help❗

|

https://github.com/pytorch/TensorRT/issues/1578

|

closed

|

[

"question"

] | 2023-01-09T14:44:25Z

| 2023-02-28T23:40:20Z

| null |

Tonyboy999

|

huggingface/setfit

| 260

|

How to use .predict() function

|

Hi,

I am new to SetFit. I will be running many tuning experiments and have already managed to get evaluation metrics using `trainer.evaluate()`.

However, is there any way to do something like the following to save the trained model's predictions?

trainer = SetFitTrainer(......)

trainer.train()

**predictions=trainer.predict(testX)**

However, `SetFitTrainer` has no `predict` function.

I can achieve this with `trainer.push_to_hub()` and then downloading the model again with `SetFitModel.from_pretrained()`, but is there a better way that does not involve publishing to the Hub?
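A minimal sketch, assuming `trainer.model` is the trained `SetFitModel`, that calling it on a list of strings returns predicted labels, and that it supports `save_pretrained(path)` for a local copy (my understanding of SetFit 0.x, not verified here). `predict_and_save` is a hypothetical helper name, not part of SetFit.

```python
def predict_and_save(trainer, texts, save_dir=None):
    """Predict with the model held by a trained SetFitTrainer, optionally saving it locally.

    Assumptions: `trainer.model` is a SetFitModel, calling it on a list of strings
    returns predicted labels, and `save_pretrained(path)` writes it to a local directory.
    """
    model = trainer.model
    predictions = model(texts)
    if save_dir is not None:
        # Local save only, nothing is pushed to the Hub.
        # Reload later with SetFitModel.from_pretrained(save_dir).
        model.save_pretrained(save_dir)
    return predictions
```

After `trainer.train()`, something like `preds = predict_and_save(trainer, test_texts, "my-setfit-model")` would give predictions without a round trip through the Hub.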

|

https://github.com/huggingface/setfit/issues/260

|

closed

|

[

"question"

] | 2023-01-08T23:18:56Z

| 2023-01-09T10:00:38Z

| null |

yafsin

|

huggingface/setfit

| 256

|

Contrastive training number of epochs

|

The `SentenceTransformer` number of epochs is the same as the number of epochs for the classification head.

Even when `SetFitTrainer` is initialized with `num_epochs=1` and then `trainer.train(num_epochs=10)` is called, the sentence transformer runs for 10 epochs. Ideally, the sentence transformer should run for 1 epoch and the classifier for 10.

The reason for this is that in `trainer.py`, `model_body.fit()` is called with `num_epochs` rather than `self.num_epochs`. Is this intended?

I can write a PR to fix this if needed.

|

https://github.com/huggingface/setfit/issues/256

|

closed

|

[

"question"

] | 2023-01-06T02:26:30Z

| 2023-01-09T10:54:45Z

| null |

abhinav-kashyap-asus

|

pytorch/serve

| 2,057

|

what is my_tc ?

|

### 🐛 Describe the bug

torchserve --start --model-store model_store --models my_tc=BERTTokenClassification.mar --ncs

curl -X POST http://127.0.0.1:8080/predictions/my_tc -T Token_classification_artifacts/sample_text_captum_input.txt

### Error logs

2023-01-05T15:51:41,260 [INFO ] W-9001-my_tc_1.0-stdout MODEL_LOG - model_name: my_tc, batchSize: 1

2023-01-05T15:51:41,628 [INFO ] W-9003-my_tc_1.0-stdout MODEL_LOG - Listening on port: /tmp/.ts.sock.9003

2023-01-05T15:51:41,633 [INFO ] W-9003-my_tc_1.0-stdout MODEL_LOG - Successfully loaded /data//python3.9/site-packages/ts/configs/metrics.yaml.

So is **model_name** `my_tc` and not `BERTTokenClassification`?

### Installation instructions

conda install -c pytorch torchserve torch-model-archiver torch-workflow-archiver

### Model Packaging

torch-model-archiver --model-name BERTTokenClassification --version 1.0 --serialized-file Transformer_model/pytorch_model.bin --handler ./Transformer_handler_generalized.py --extra-files "Transformer_model/config.json,./setup_config.json,./Token_classification_artifacts/index_to_name.json"

What is model_name here?

### config.properties

_No response_

### Versions

------------------------------------------------------------------------------------------

Environment headers

------------------------------------------------------------------------------------------

Torchserve branch:

torchserve==0.7.0b20221212

torch-model-archiver==0.7.0b20221212

Python version: 3.9 (64-bit runtime)

Python executable: /data/python

Versions of relevant python libraries:

captum==0.6.0

future==0.18.2

numpy==1.24.1

nvgpu==0.9.0

psutil==5.9.4

requests==2.28.1

sentence-transformers==2.2.2

sentencepiece==0.1.97

torch==1.13.1+cu116

torch-model-archiver==0.7.0b20221212

torch-workflow-archiver==0.2.6b20221212

torchaudio==0.13.1+cu116

torchserve==0.7.0b20221212

torchvision==0.14.1+cu116

transformers==4.26.0.dev0

wheel==0.37.1

torch==1.13.1+cu116

**Warning: torchtext not present ..

torchvision==0.14.1+cu116

torchaudio==0.13.1+cu116

Java Version:

OS: CentOS Linux release 7.9.2009 (Core)

GCC version: (GCC) 4.8.5 20150623 (Red Hat 4.8.5-44)

Clang version: N/A

CMake version: version 2.8.12.2

Is CUDA available: Yes

CUDA runtime version: 11.6.124

GPU models and configuration:

GPU 0: Tesla T4

GPU 1: Tesla T4

GPU 2: Tesla T4

Nvidia driver version: 510.108.03

cuDNN version: Probably one of the following:

/usr/local/cuda-11.6/targets/x86_64-linux/lib/libcudnn.so.8

/usr/local/cuda-11.6/targets/x86_64-linux/lib/libcudnn_adv_infer.so.8

/usr/local/cuda-11.6/targets/x86_64-linux/lib/libcudnn_adv_train.so.8

/usr/local/cuda-11.6/targets/x86_64-linux/lib/libcudnn_cnn_infer.so.8

/usr/local/cuda-11.6/targets/x86_64-linux/lib/libcudnn_cnn_train.so.8

### Repro instructions

I just want to know what `my_tc` is.

### Possible Solution

_No response_

|

https://github.com/pytorch/serve/issues/2057

|

open

|

[

"triaged"

] | 2023-01-05T07:57:46Z

| 2023-01-05T15:00:14Z

| null |

ucas010

|

huggingface/diffusers

| 1,921

|

How to finetune inpainting model for object removal? What is the input prompt for object removal for both training and inference?

|

Hi team,

Thanks for your great work!

I am trying to get object-removal functionality out of Stable Diffusion inpainting.

How to finetune inpainting model for object removal?

What is the input prompt for object removal for both training and inference?

Thanks

|

https://github.com/huggingface/diffusers/issues/1921

|

closed

|

[

"stale"

] | 2023-01-05T01:23:20Z

| 2023-04-03T14:50:38Z

| null |

hdjsjyl

|

pytorch/functorch

| 1,094

|

batching over model parameters

|

I have a use case for `functorch`. I would like to evaluate many variations of the model parameters very efficiently (I want to eliminate the loop). Here is example code for a simplified case that I got working:

```python

import torch
from functorch import vmap

linear = torch.nn.Linear(10,2)
default_weight = linear.weight.data
sample_input = torch.rand(3,10)
sample_add = torch.rand_like(default_weight)

def interpolate_weights(alpha):
    with torch.no_grad():
        res_weight = torch.nn.Parameter(default_weight + alpha*sample_add)
        linear.weight = res_weight
        return linear(sample_input)

```

Now I could do `for alpha in np.linspace(0.0, 1.0, 100)`, but I want to vectorize this loop since my code is prohibitively slow. Is functorch applicable here? Executing:

```python

alphas = torch.linspace(0.0, 1.0, 100)

vmap(interpolate_weights)(alphas)

```

works, but doing something similar for a simple ResNet does not. I've tried using `load_state_dict`, but that fails:

```python

from torchvision import models

model_resnet = models.resnet18(pretrained=True)
named_params = list(model_resnet.named_parameters())
named_params_data = [(n,p.data.clone()) for (n,p) in named_params]
sample_data = torch.rand(10,3,224,244)

def test_resnet(new_params):
    def interpolate(alpha):
        with torch.no_grad():
            p_dict = {name:(old + alpha*new_params[i]) for i,(name, old) in enumerate(named_params_data)}
            model_resnet.load_state_dict(p_dict, strict=False)
            out = model_resnet(sample_data)
            return out
    return interpolate

rand_tensor = [torch.rand_like(p) for n,p in named_params_data]
to_vamp_resnet = test_resnet(rand_tensor)
vmap(to_vamp_resnet)(alphas)

```

results in:

`

While copying the parameter named "fc.bias", whose dimensions in the model are torch.Size([1000]) and whose dimensions in the checkpoint are torch.Size([1000]), an exception occurred : ('vmap: inplace arithmetic(self, *extra_args) is not possible because there exists a Tensor `other` in extra_args that has more elements than `self`. This happened due to `other` being vmapped over but `self` not being vmapped over in a vmap. Please try to use out-of-place operators instead of inplace arithmetic. If said operator is being called inside the PyTorch framework, please file a bug report instead.',).

`
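A sketch of one way around the in-place `load_state_dict` copy: leave the module untouched and pass the interpolated parameters functionally with `torch.func.functional_call`, so `vmap` only ever sees out-of-place arithmetic. This assumes PyTorch 2.0+, where functorch's API lives in `torch.func`. It uses a small MLP to stay fast; I have not checked it on ResNet, whose BatchNorm buffers would simply stay the module's own here.

```python
import torch
from torch.func import functional_call, vmap

torch.manual_seed(0)
model = torch.nn.Sequential(torch.nn.Linear(10, 8), torch.nn.ReLU(), torch.nn.Linear(8, 2))
model.eval()

# Frozen copies of the starting parameters and a random direction to move along.
base = {name: p.detach().clone() for name, p in model.named_parameters()}
direction = {name: torch.rand_like(p) for name, p in base.items()}
sample_input = torch.rand(3, 10)

def interpolate(alpha):
    # Build the interpolated parameters out of place; nothing is copied into the module.
    params = {name: base[name] + alpha * direction[name] for name in base}
    return functional_call(model, params, (sample_input,))

alphas = torch.linspace(0.0, 1.0, 5)
batched = vmap(interpolate)(alphas)  # one forward pass per alpha, batched: shape [5, 3, 2]
```

The same pattern should carry over to `model_resnet` by building `params` from `named_params_data` and `rand_tensor`, but that is untested.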

|

https://github.com/pytorch/functorch/issues/1094

|

open

|

[] | 2023-01-04T17:59:59Z

| 2023-01-04T21:42:36Z

| 2

|

LeanderK

|

huggingface/setfit

| 254

|

Why are the models fine-tuned with CosineSimilarity between 0 and 1?

|

Hi everyone,

This is a small question related to how models are fine-tuned during the first step of training. I see that the default loss function is `losses.CosineSimilarityLoss`. But when generating sentence pairs [here](https://github.com/huggingface/setfit/blob/35c0511fa9917e653df50cb95a22105b397e14c0/src/setfit/modeling.py#L546), negative ones are assigned a 0 label.

I understand that having scores between 0 and 1 is ideal, because they can be interpreted as probabilities. But cosine similarity ranges from -1 to 1, so shouldn't we expect the full range to be used? The model head can then make predictions on a more isotropic embedding space.

Is this related to how Sentence Transformers are pre-trained?

Thanks for your clarifications!
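To make the question concrete: assuming `CosineSimilarityLoss` regresses cos(u, v) onto the pair label with mean squared error (my understanding of the Sentence Transformers default), a label of 0 asks negative pairs to be orthogonal rather than opposite. The toy sketch below re-implements that per-pair loss on hand-picked vectors; `cosine_similarity_loss` is a hypothetical stand-in, not the library class.

```python
import torch
import torch.nn.functional as F

def cosine_similarity_loss(u, v, labels):
    # Squared error between cos(u, v) and the pair label, kept per pair.
    cos = F.cosine_similarity(u, v, dim=-1)
    return F.mse_loss(cos, labels, reduction="none")

u = torch.tensor([[1.0, 0.0]] * 3)
v = torch.tensor([[-1.0, 0.0], [0.0, 1.0], [1.0, 0.0]])  # cos = -1, 0, 1
labels = torch.tensor([0.0, 0.0, 1.0])  # negative, negative, positive
per_pair = cosine_similarity_loss(u, v, labels)
# per_pair -> tensor([1., 0., 0.])
```

Under this loss, an opposite pair (cos = -1) labeled 0 is penalized just as much as an identical pair would be, so the [-1, 0) range is actively discouraged rather than used.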

|

https://github.com/huggingface/setfit/issues/254

|

open

|

[

"question"

] | 2023-01-03T09:47:11Z

| 2023-03-14T10:24:17Z

| null |

EdouardVilain-Git

|

pytorch/TensorRT

| 1,570

|

❓ [Question] When I use fx2trt, can an unsupported op fallback to pytorch like the TorchScript compiler?

|

## ❓ Question

<!-- Your question -->

When I use fx2trt, can an unsupported op fall back to PyTorch, as it does with the TorchScript compiler?

|

https://github.com/pytorch/TensorRT/issues/1570

|

closed

|

[

"question"

] | 2023-01-03T05:26:00Z

| 2023-01-06T22:22:12Z

| null |

chenzhengda

|

pytorch/TensorRT

| 1,569

|

❓ [Question] How do you use dynamic shape when using fx as ir and the model is not fully lowerable

|

## ❓ Question

I have a PyTorch model that contains a pixel shuffle operation (which is not fully supported), and I would like to convert it to TensorRT while specifying a dynamic input shape. The "ts" path does not work because of an open issue, the "fx" path has problems too, and I am not able to use a split model with dynamic shapes.

## What you have already tried

* The conversion using TorchScript as "ir" is not working (see Issue #1568)

* The conversion using `torch_tensorrt.fx.compile` succeeds when I use a static shape, however there is no way of specifying a dynamic shape

* Using a manual approach (manually tracing with `acc_tracer`, then constructing the `TRTInterpreter` and finally the `TRTModule`) fails because there is an unsupported operation (a pixel shuffle layer). (Maybe I should open an issue for this too?)

* Using the manual approach with a `TRTSplitter` is maybe the way to go but I don't know how to specify the dynamic shape constraints in this situation.

The "manual" approach that I mentioned is the one specified in [examples/fx/fx2trt_example.py](https://github.com/pytorch/TensorRT/blob/master/examples/fx/fx2trt_example.py) and in the docs.

Here is the code as I have it now. Note that the branch with the splitter is the one executed, and running the resulting TRT model with different input shapes produces errors. If `do_split` is set to `False`, the conversion fails because `nn.PixelShuffle` is not supported.

```python

import tensorrt as trt
import torch.fx
import torch.nn as nn
import torch_tensorrt.fx.tracer.acc_tracer.acc_tracer as acc_tracer
import torchvision.models as models
from torch_tensorrt.fx import InputTensorSpec, TRTInterpreter, TRTModule
from torch_tensorrt.fx.utils import LowerPrecision
from torch_tensorrt.fx.tools.trt_splitter import TRTSplitter


class MyModel(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(3, 16, kernel_size=3, padding=1)
        self.shuffle = nn.PixelShuffle(2)

    def forward(self, x):
        return self.shuffle(self.conv(x))


torch.set_grad_enabled(False)

# inputs
inputs = [torch.rand(1, 3, 224, 224).cuda()]
factory_kwargs = {"dtype": torch.float32, "device": torch.device("cuda:0")}
model = MyModel().to(**factory_kwargs)
model = model.eval()
out = model(inputs[0])

# symbolic trace
acc_model = acc_tracer.trace(model, inputs)

do_split = True

if do_split:
    # split
    splitter = TRTSplitter(acc_model, inputs)
    splitter.node_support_preview(dump_graph=False)
    split_mod = splitter()
    print(split_mod.graph)

    def get_submod_inputs(mod, submod, inputs):
        acc_inputs = None

        def get_input(self, inputs):
            nonlocal acc_inputs
            acc_inputs = inputs

        handle = submod.register_forward_pre_hook(get_input)
        mod(*inputs)
        handle.remove()
        return acc_inputs

    for name, _ in split_mod.named_children():
        if "_run_on_acc" in name:
            submod = getattr(split_mod, name)
            # Get submodule inputs for fx2trt
            acc_inputs = get_submod_inputs(split_mod, submod, inputs)
            # fx2trt replacement
            interp = TRTInterpreter(
                submod,
                InputTensorSpec.from_tensors(acc_inputs),
                explicit_batch_dimension=True,
            )
            r = interp.run(lower_precision=LowerPrecision.FP32)
            trt_mod = TRTModule(*r)
            setattr(split_mod, name, trt_mod)

    trt_model = split_mod
else:
    # input specs
    input_specs = [
        InputTensorSpec(
            shape=(1, 3, -1, -1),
            dtype=torch.float32,
            device="cuda:0",
            shape_ranges=[((1, 3, 112, 112), (1, 3, 224, 224), (1, 3, 512, 512))],
        ),
    ]
    # input_specs = [
    #     InputTensorSpec(
    #         shape=(1, 3, 224, 224),
    #         dtype=torch.float32,
    #         device="cuda:0",
    #     ),
    # ]

    # TRT interpreter
    interp = TRTInterpreter(
        acc_model,
        input_specs,
        explicit_batch_dimension=True,
        explicit_precision=True,
        logger_level=trt.Logger.INFO,
    )
    interpreter_result = interp.run(
        max_batch_size=4, lower_precision=LowerPrecision.FP32
    )

    # TRT module
    trt_model = TRTModule(
        interpreter_result.engine,
        interpreter_result.input_names,
        interpreter_result.output_names,
    )

trt_out = trt_model(inputs[0])
trt_model(torch.rand(1, 3, 112, 112).cuda())
trt_model(torch.rand(1, 3, 150, 150).cuda())
trt_model(torch.rand(1, 3, 400, 400).cuda())
trt_model(torch.rand(1, 3, 512, 512).cuda())
print((trt_out - out).max())

```

## Environment

The official NVIDIA Pytorch Docker image version 22.12 is used.

> Build information about Torch-TensorRT can be found by turning on debug

|

https://github.com/pytorch/TensorRT/issues/1569

|

closed

|

[

"question",

"No Activity",

"component: fx"

] | 2023-01-02T14:44:52Z

| 2023-04-15T00:02:10Z

| null |

ivan94fi

|

pytorch/pytorch

| 91,537

|

Unclear how to change compiler used by `torch.compile`

|

### 📚 The doc issue

It is not clear from https://pytorch.org/tutorials//intermediate/torch_compile_tutorial.html, nor from the docs in `torch.compile`, nor even from looking through `_dynamo/config.py`, how one can change the compiler used by PyTorch.

Right now I am seeing the following issue. My code:

```python

import torch

@torch.compile
def f(x):
    return 0.5 * x

f(torch.tensor(1.0))

```
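One way to sidestep the failing C++ build is to choose a different backend through the `backend` argument of `torch.compile`; the default is `"inductor"`, which is what invokes `g++` here. The sketch below assumes PyTorch 2.0+ and uses the built-in `"eager"` and `"aot_eager"` backends, which generate no C++ code (`torch._dynamo.list_backends()` lists the registered names). This swaps the backend rather than the C++ compiler itself.

```python
import torch

def f(x):
    return 0.5 * x

# "eager": TorchDynamo captures the graph, then runs it with regular PyTorch ops.
f_eager = torch.compile(f, backend="eager")
result = f_eager(torch.tensor(1.0))

# "aot_eager": additionally traces through AOTAutograd, still without C++ codegen.
f_aot = torch.compile(f, backend="aot_eager")
result_aot = f_aot(torch.tensor(1.0))
```

These backends are mainly useful for checking whether a failure comes from graph capture or from Inductor's code generation; they do not bring Inductor's speedups.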

<details><summary>This produces the following error message (click to toggle):</summary>

```

---------------------------------------------------------------------------

CalledProcessError Traceback (most recent call last)

File ~/venvs/main/lib/python3.10/site-packages/torch/_inductor/codecache.py:445, in CppCodeCache.load(cls, source_code)

444 try:

--> 445 subprocess.check_output(cmd, stderr=subprocess.STDOUT)

446 except subprocess.CalledProcessError as e:

File /opt/homebrew/Cellar/python@3.10/3.10.9/Frameworks/Python.framework/Versions/3.10/lib/python3.10/subprocess.py:421, in check_output(timeout, *popenargs, **kwargs)

419 kwargs['input'] = empty

--> 421 return run(*popenargs, stdout=PIPE, timeout=timeout, check=True,

422 **kwargs).stdout

File /opt/homebrew/Cellar/python@3.10/3.10.9/Frameworks/Python.framework/Versions/3.10/lib/python3.10/subprocess.py:526, in run(input, capture_output, timeout, check, *popenargs, **kwargs)

525 if check and retcode:

--> 526 raise CalledProcessError(retcode, process.args,

527 output=stdout, stderr=stderr)

528 return CompletedProcess(process.args, retcode, stdout, stderr)

CalledProcessError: Command '['g++', '/tmp/torchinductor_mcranmer/p4/cp42uf272g2qggmogzazkui7he4vnm4ftyfi2ghvyudtmaxxi25x.cpp', '-shared', '-fPIC', '-Wall', '-std=c++17', '-Wno-unused-variable', '-I/Users/mcranmer/venvs/main/lib/python3.10/site-packages/torch/include', '-I/Users/mcranmer/venvs/main/lib/python3.10/site-packages/torch/include/torch/csrc/api/include', '-I/Users/mcranmer/venvs/main/lib/python3.10/site-packages/torch/include/TH', '-I/Users/mcranmer/venvs/main/lib/python3.10/site-packages/torch/include/THC', '-I/opt/homebrew/opt/python@3.10/Frameworks/Python.framework/Versions/3.10/include/python3.10', '-lgomp', '-march=native', '-O3', '-ffast-math', '-fno-finite-math-only', '-fopenmp', '-D', 'C10_USING_CUSTOM_GENERATED_MACROS', '-o/tmp/torchinductor_mcranmer/p4/cp42uf272g2qggmogzazkui7he4vnm4ftyfi2ghvyudtmaxxi25x.so']' returned non-zero exit status 1.

The above exception was the direct cause of the following exception:

CppCompileError Traceback (most recent call last)

File ~/venvs/main/lib/python3.10/site-packages/torch/_dynamo/output_graph.py:676, in OutputGraph.call_user_compiler(self, gm)

675 else:

--> 676 compiled_fn = compiler_fn(gm, self.fake_example_inputs())

677 _step_logger()(logging.INFO, f"done compiler function {name}")

File ~/venvs/main/lib/python3.10/site-packages/torch/_dynamo/debug_utils.py:1032, in wrap_backend_debug.<locals>.debug_wrapper(gm, example_inputs, **kwargs)

1031 else:

-> 1032 compiled_gm = compiler_fn(gm, example_inputs, **kwargs)

1034 return compiled_gm

File ~/venvs/main/lib/python3.10/site-packages/torch/__init__.py:1190, in _TorchCompileInductorWrapper.__call__(self, model_, inputs_)

1189 with self.cm:

-> 1190 return self.compile_fn(model_, inputs_)

File ~/venvs/main/lib/python3.10/site-packages/torch/_inductor/compile_fx.py:398, in compile_fx(model_, example_inputs_, inner_compile)

393 with overrides.patch_functions():

394

395 # TODO: can add logging before/after the call to create_aot_dispatcher_function

396 # in torch._functorch/aot_autograd.py::aot_module_simplified::aot_function_simplified::new_func

397 # once torchdynamo is merged into pytorch

--> 398 return aot_autograd(

399 fw_compiler=fw_compiler,

400 bw_compiler=bw_compiler,

401 decompositions=select_decomp_table(),

402 partition_fn=functools.partial(

403 min_cut_rematerialization_partition, compiler="inductor"

404 ),

405 )(model_, example_inputs_)

File ~/venvs/main/lib/python3.10/site-packages/torch/_dynamo/optimizations/training.py:78, in aot_autograd.<locals>.compiler_fn(gm, example_inputs)

77 with enable_aot_logging():

---> 78 cg = aot_module_simplified(gm, example_inputs, **kwargs)

79 counters["aot_autograd"]["ok"] += 1

File ~/venvs/main/lib/python3.10/site-packages/torch/_functorch/aot_autograd.py:2355, in aot_module_simplified(mod, args, fw_compiler, bw_compiler, partition_fn, decompositions, hasher_type, static_argnums)

2353 full_args.extend(args)

-> 2355 compiled_fn = create_aot_dispatcher_function(

2356 functional_call,

2357 full_args,

2358 aot_config,

2359 )

2361 # TODO: Th

|

https://github.com/pytorch/pytorch/issues/91537

|

closed

|

[

"module: docs",

"triaged",

"oncall: pt2",

"module: dynamo"

] | 2022-12-30T15:40:11Z

| 2023-12-01T19:00:48Z

| null |

MilesCranmer

|

pytorch/pytorch

| 91,498

|

how to Wrap normalization layers like LayerNorm in FP32 when use FSDP

|

In the blog post https://pytorch.org/blog/scaling-vision-model-training-platforms-with-pytorch/:

<img width="904" alt="image" src="https://user-images.githubusercontent.com/16861194/209910992-619704cd-0ef4-42ec-9d5c-ec7b42005b8b.png">

How do I wrap normalization layers like LayerNorm in FP32 when using FSDP? Is there example code?

cc @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @H-Huang @kwen2501 @awgu
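A plain-PyTorch sketch of the idea, without FSDP itself: keep every `LayerNorm` in float32, cast activations up before normalizing and back down afterwards, and let the rest of the model run in bfloat16. `FP32LayerNorm` and `wrap_norms_fp32` are hypothetical names. Under FSDP, these modules would presumably also need their own FSDP units with a float32 `MixedPrecision` policy; that wiring is an assumption and is not shown.

```python
import torch
import torch.nn as nn

class FP32LayerNorm(nn.Module):
    """Run the wrapped LayerNorm with float32 parameters and math; return the input dtype."""

    def __init__(self, norm: nn.LayerNorm):
        super().__init__()
        self.norm = norm.float()

    def forward(self, x):
        return self.norm(x.float()).to(x.dtype)

def wrap_norms_fp32(module: nn.Module) -> nn.Module:
    # Recursively replace every nn.LayerNorm child with the float32 wrapper.
    for name, child in module.named_children():
        if isinstance(child, nn.LayerNorm):
            setattr(module, name, FP32LayerNorm(child))
        else:
            wrap_norms_fp32(child)
    return module

model = nn.Sequential(nn.Linear(16, 16), nn.LayerNorm(16), nn.Linear(16, 4))
model = model.to(torch.bfloat16)   # low-precision everywhere first...
model = wrap_norms_fp32(model)     # ...then lift the norms back to float32
out = model(torch.randn(2, 16, dtype=torch.bfloat16))
```

Calling `.to(torch.bfloat16)` on the whole model after wrapping would cast the norms back down, so the wrapping has to happen last.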

|

https://github.com/pytorch/pytorch/issues/91498

|

closed

|

[

"oncall: distributed",

"triaged",

"module: fsdp"

] | 2022-12-29T06:13:34Z

| 2023-08-04T17:17:32Z

| null |

xiaohu2015

|

huggingface/setfit

| 251

|

Using setfit with the Hugging Face API

|

Hi, thank you so much for this amazing library!

I have trained my model and pushed it to the Hugging Face hub.

Since the task is text classification, but the uploaded model card is for Sentence Transformers, how should I run the classification model through the Hugging Face API?

Thank you!

|

https://github.com/huggingface/setfit/issues/251

|

open

|

[

"question"

] | 2022-12-29T01:46:37Z

| 2023-01-01T07:53:43Z

| null |

kwen1510

|

huggingface/setfit

| 249

|

Sentence Pairs generation: is possible to parallelize it?

|

My dataset has 20k samples, 200 labels, and 32 iterations, so that means around 128 million samples, right?

Is there some way to parallelize the creation of sentence pairs?

Or at least to save these pairs, so they are created once and reused multiple times (e.g. to train with different numbers of epochs)?

Thanks
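Assuming SetFit creates one positive and one negative pair per sample per iteration (my reading of the 0.x pair generator, not verified here), the count would be 20,000 × 32 × 2 = 1,280,000 pairs rather than 128 million. The sketch below is a hypothetical stand-alone generator with that behavior plus a pickle cache, so pairs are built once and reused across runs; `generate_pairs` and `load_or_generate` are illustrative names and do not hook into `SetFitTrainer`.

```python
import os
import pickle
import random

def generate_pairs(texts, labels, num_iterations, seed=0):
    """Per sample and iteration: one positive pair (label 1.0) and one negative pair (label 0.0).

    Needs at least two distinct labels; the positive partner may be the anchor itself.
    """
    rng = random.Random(seed)
    by_label = {}
    for t, y in zip(texts, labels):
        by_label.setdefault(y, []).append(t)
    # Precompute the "other labels" list once per label instead of once per sample.
    others = {y: [l for l in by_label if l != y] for y in by_label}
    pairs = []
    for _ in range(num_iterations):
        for t, y in zip(texts, labels):
            pairs.append((t, rng.choice(by_label[y]), 1.0))
            neg_label = rng.choice(others[y])
            pairs.append((t, rng.choice(by_label[neg_label]), 0.0))
    return pairs

def load_or_generate(path, texts, labels, num_iterations):
    # Reuse cached pairs if present, otherwise build and cache them.
    if os.path.exists(path):
        with open(path, "rb") as fh:
            return pickle.load(fh)
    pairs = generate_pairs(texts, labels, num_iterations)
    with open(path, "wb") as fh:
        pickle.dump(pairs, fh)
    return pairs
```

Parallelizing would mean splitting `range(num_iterations)` across worker processes with different seeds; the cache alone already avoids regenerating pairs for every epoch setting.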

|

https://github.com/huggingface/setfit/issues/249

|

open

|

[

"question"

] | 2022-12-28T17:50:02Z

| 2023-02-14T20:04:29Z

| null |

info2000

|

huggingface/setfit

| 245

|

extracting embeddings from a trained SetFit model.

|

Hey, first of all, thank you for this great package!

My task relates to semantic similarity: I measure the 'closeness' of a query sentence to a list of candidate sentences, something like [shown here](https://www.sbert.net/docs/usage/semantic_textual_similarity.html).

I wanted to know if there is a way to extract embeddings from a trained SetFit model and then, instead of using the classification head, just compute the similarity of a given query sentence to those embeddings.

Awaiting your answer,

Thanks again
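A minimal sketch of the similarity step, assuming the trained `SetFitModel` exposes its Sentence Transformer as `model_body`, so that `model.model_body.encode(...)` returns embeddings (an assumption; check your SetFit version). The ranking itself is plain NumPy cosine similarity, shown here on toy vectors; `rank_by_cosine` is a hypothetical helper.

```python
import numpy as np

def rank_by_cosine(query_emb, candidate_embs):
    """Return candidate indices sorted by cosine similarity to the query, plus the sorted scores."""
    q = query_emb / np.linalg.norm(query_emb)
    c = candidate_embs / np.linalg.norm(candidate_embs, axis=1, keepdims=True)
    scores = c @ q
    order = np.argsort(-scores)
    return order, scores[order]

# With a trained model (assumed attribute, not verified):
#   query_emb = model.model_body.encode("query sentence")
#   candidate_embs = model.model_body.encode(candidate_sentences)
query_emb = np.array([1.0, 0.0])
candidate_embs = np.array([[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
order, scores = rank_by_cosine(query_emb, candidate_embs)
```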

|

https://github.com/huggingface/setfit/issues/245

|

closed

|

[

"question"

] | 2022-12-26T12:27:50Z

| 2023-12-06T13:21:04Z

| null |

moonisali

|

huggingface/optimum

| 640

|

Improve documentations around ONNX export

|

### Feature request

* Document `-with-past` and `--for-ort`, and why one would use them

* Add more details in `optimum-cli export onnx --help` directly

### Motivation

/

### Your contribution

/

|

https://github.com/huggingface/optimum/issues/640

|

closed

|

[

"documentation",

"onnx",

"exporters"

] | 2022-12-23T15:54:32Z

| 2023-01-03T16:34:56Z

| 0

|

fxmarty

|

huggingface/datasets

| 5,385

|

Is `fs=` deprecated in `load_from_disk()` as well?

|

### Describe the bug

The `fs=` argument was deprecated from `Dataset.save_to_disk` and `Dataset.load_from_disk` in favor of automagically figuring it out via fsspec:

https://github.com/huggingface/datasets/blob/9a7272cd4222383a5b932b0083a4cc173fda44e8/src/datasets/arrow_dataset.py#L1339-L1340

Is there a reason the same thing shouldn't also apply to `datasets.load.load_from_disk()` as well ?

https://github.com/huggingface/datasets/blob/9a7272cd4222383a5b932b0083a4cc173fda44e8/src/datasets/load.py#L1779

### Steps to reproduce the bug

n/a

### Expected behavior

n/a

### Environment info

n/a

|

https://github.com/huggingface/datasets/issues/5385

|

closed

|

[] | 2022-12-22T21:00:45Z

| 2023-01-23T10:50:05Z

| 3

|

dconathan

|

pytorch/examples

| 1,105

|

MNIST Hogwild on Apple Silicon

|

Any help would be appreciated! I am unable to run multiprocessing with the MPS device.

## Context

<!--- How has this issue affected you? What are you trying to accomplish? -->

<!--- Providing context helps us come up with a solution that is most useful in the real world -->

* Pytorch version: 2.0.0.dev20221220

* Operating System and version: macOS 13.1

## Your Environment

<!--- Include as many relevant details about the environment you experienced the bug in -->

* Installed using source? [yes/no]: no

* Are you planning to deploy it using docker container? [yes/no]: no

* Is it a CPU or GPU environment?: Trying to use GPU

* Which example are you using: MNIST Hogwild

* Link to code or data to repro [if any]: https://github.com/pytorch/examples/tree/main/mnist_hogwild

## Expected Behavior

<!--- If you're describing a bug, tell us what should happen -->

Adding the `--mps` argument should result in training on the GPU.

## Current Behavior

<!--- If describing a bug, tell us what happens instead of the expected behavior -->

RuntimeError: _share_filename_: only available on CPU

```

Traceback (most recent call last):

File "/Volumes/Main/pytorch/main.py", line 87, in <module>

model.share_memory() # gradients are allocated lazily, so they are not shared here

File "/Users/jeffreythomas/opt/anaconda3/envs/pytorch/lib/python3.9/site-packages/torch/nn/modules/module.py", line 2340, in share_memory

return self._apply(lambda t: t.share_memory_())

File "/Users/jeffreythomas/opt/anaconda3/envs/pytorch/lib/python3.9/site-packages/torch/nn/modules/module.py", line 784, in _apply

module._apply(fn)

File "/Users/jeffreythomas/opt/anaconda3/envs/pytorch/lib/python3.9/site-packages/torch/nn/modules/module.py", line 807, in _apply

param_applied = fn(param)

File "/Users/jeffreythomas/opt/anaconda3/envs/pytorch/lib/python3.9/site-packages/torch/nn/modules/module.py", line 2340, in <lambda>

return self._apply(lambda t: t.share_memory_())

File "/Users/jeffreythomas/opt/anaconda3/envs/pytorch/lib/python3.9/site-packages/torch/_tensor.py", line 616, in share_memory_

self._typed_storage()._share_memory_()

File "/Users/jeffreythomas/opt/anaconda3/envs/pytorch/lib/python3.9/site-packages/torch/storage.py", line 701, in _share_memory_

self._untyped_storage.share_memory_()

File "/Users/jeffreythomas/opt/anaconda3/envs/pytorch/lib/python3.9/site-packages/torch/storage.py", line 209, in share_memory_

self._share_filename_cpu_()

RuntimeError: _share_filename_: only available on CPU

```

## Possible Solution

<!--- Not obligatory, but suggest a fix/reason for the bug -->

## Steps to Reproduce

<!--- Provide a link to a live example, or an unambiguous set of steps to -->

<!--- reproduce this bug. Include code to reproduce, if relevant -->

1. Clone repo

2. Run with --mps on Apple M1 Ultra

...
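The traceback shows `share_memory()` ending in a CPU-only storage routine, so Hogwild-style sharing of an MPS model fails before training starts. A minimal guard, sketched under that reading: only share on CPU and fail early with a clear message otherwise. `prepare_hogwild_model` is a hypothetical helper, not part of the example.

```python
import torch
import torch.nn as nn

def prepare_hogwild_model(model: nn.Module, device: torch.device) -> nn.Module:
    """Move the model to `device` and share its parameters across processes (CPU only)."""
    if device.type != "cpu":
        raise ValueError(
            f"share_memory() needs CPU tensors, got device {device}. "
            "Use the CPU for Hogwild, or train in a single process on this device."
        )
    model = model.to(device)
    model.share_memory()  # gradients are allocated lazily, so only parameters are shared here
    return model
```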

|

https://github.com/pytorch/examples/issues/1105

|

open

|

[

"help wanted"

] | 2022-12-22T06:25:48Z

| 2023-12-09T09:43:08Z

| 4

|

jeffreykthomas

|

pytorch/functorch

| 1,088

|

Add vmap support for PyTorch operators

|

We're looking for more motivated open-source developers to help build out functorch (and PyTorch, since functorch is now just a part of PyTorch). Below is a selection of good first issues.

- [x] https://github.com/pytorch/pytorch/issues/91174

- [x] https://github.com/pytorch/pytorch/issues/91175

- [x] https://github.com/pytorch/pytorch/issues/91176

- [x] https://github.com/pytorch/pytorch/issues/91177

- [x] https://github.com/pytorch/pytorch/issues/91402

- [x] https://github.com/pytorch/pytorch/issues/91403

- [x] https://github.com/pytorch/pytorch/issues/91404

- [x] https://github.com/pytorch/pytorch/issues/91415

- [ ] https://github.com/pytorch/pytorch/issues/91700

In general there's a high barrier to developing PyTorch and/or functorch. We've collected topics and information over at the [PyTorch Developer Wiki](https://github.com/pytorch/pytorch/wiki/Core-Frontend-Onboarding)

|

https://github.com/pytorch/functorch/issues/1088

|

open

|

[

"good first issue"

] | 2022-12-20T18:51:16Z

| 2023-04-19T23:40:06Z

| 2

|

zou3519

|

huggingface/optimum

| 625

|

Add support for Speech Encoder Decoder models in `optimum.exporters.onnx`

|

### Feature request

Add support for [Speech Encoder Decoder Models](https://huggingface.co/docs/transformers/v4.25.1/en/model_doc/speech-encoder-decoder#speech-encoder-decoder-models)

### Your contribution

Me or other members can implement it (cc @mht-sharma @fxmarty )

|

https://github.com/huggingface/optimum/issues/625

|

open

|

[

"feature-request",

"onnx"

] | 2022-12-20T16:48:49Z

| 2023-11-15T10:02:54Z

| 4

|

michaelbenayoun

|

huggingface/optimum

| 615

|

Shall we set diffusers as soft dependency for onnxruntime module?

|

It seems a bit strange to me that diffusers must be installed to do sequence classification.

### System Info

```shell

Dev branch of Optimum

```

### Who can help?

@echarlaix @JingyaHuang

### Reproduction

```python

from optimum.onnxruntime import ORTModelForSequenceClassification

```

### Error message

```

RuntimeError: Failed to import optimum.onnxruntime.modeling_ort because of the following error (look up to see its traceback):

No module named 'diffusers'

```

### Expected behavior

Be able to do sequence classification without diffusers.

### Contribution

I can open a PR to make diffusers a soft dependency
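A common soft-dependency pattern is to check for the package with `importlib.util.find_spec` and import it lazily, only inside the code path that needs it (for example, a diffusion pipeline class). The helpers below are hypothetical names, not Optimum's actual utilities.

```python
import importlib
import importlib.util

def is_available(package: str) -> bool:
    """True if a top-level package can be imported, without importing it."""
    return importlib.util.find_spec(package) is not None

def require(package: str, feature: str):
    """Import `package` only when `feature` actually needs it."""
    if not is_available(package):
        raise ImportError(f"{feature} requires `{package}`; install it with `pip install {package}`.")
    return importlib.import_module(package)

# Module level: only the cheap check runs, so importing sequence-classification
# classes never touches diffusers.
#   if is_available("diffusers"): ...register the diffusion classes...
```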

|

https://github.com/huggingface/optimum/issues/615

|

closed

|

[

"bug"

] | 2022-12-19T11:23:34Z

| 2022-12-21T14:02:45Z

| 1

|

JingyaHuang

|

pytorch/android-demo-app

| 287

|

How to convert live camera to landscape object detection with correct camera aspect ratio?

|

https://github.com/pytorch/android-demo-app/issues/287

|

open

|

[] | 2022-12-19T10:01:47Z

| 2022-12-19T10:05:06Z

| null |

pratheeshsuvarna

|

|

pytorch/vision

| 7,043

|

How to generate the score for a determined region of an image using Mask R-CNN

|

### 🐛 Describe the bug

I want to change the RegionProposalNetwork of Mask R-CNN to generate the score for a determined region of an image using Mask R-CNN.

```python
import torch
from torch import nn
import torchvision.models as models
import torchvision
from torchvision.models.detection import MaskRCNN
from torchvision.models.detection.anchor_utils import AnchorGenerator

model = models.detection.maskrcnn_resnet50_fpn(pretrained=True)

class rpn_help(nn.Module):
    def __init__(self,) -> None:
        super().__init__()

    def forward(self,) :
        proposals=torch.tensor([ 78.0753, 12.7310, 165.6465, 153.7253])
        proposal_losses=0
        return proposals, proposal_losses

model.rpn= rpn_help
model.eval()
model(input_tensor) # input_tensor is an image

```

It raises an error like this:

<img width="786" alt="WeChatbb686829d6c0f06106e53c1e3feecb55" src="https://user-images.githubusercontent.com/98499594/208374946-137eb9b2-6a64-4a06-8d4e-57caf1bb72b3.png">

Does anyone know how to generate the score for a predetermined region of an image using Mask R-CNN?

### Versions

python 3.8

|

https://github.com/pytorch/vision/issues/7043

|

open

|

[] | 2022-12-19T08:04:50Z

| 2022-12-19T08:04:50Z

| null |

mingqiJ

|

pytorch/serve

| 2,039

|

how to load models at startup for docker

|

First, I created a Docker container by following https://github.com/pytorch/serve/tree/master/docker#create-torchserve-docker-image. I left all configs at their defaults, except that I removed `--rm` from `docker run ...` and made the container start automatically with

```docker update --restart unless-stopped mytorchserve```

Then I registered some models via the Management API.

Now, how can I make these models register automatically when my PC reboots?

|

https://github.com/pytorch/serve/issues/2039

|

closed

|

[] | 2022-12-17T02:55:23Z

| 2022-12-18T01:00:29Z

| null |

hungtooc

|

huggingface/transformers

| 20,794

|

When I use the following code on tpuvm and use model.generate() to infer, the speed is very slow. It seems that the tpu is not used. What is the problem?

|

### System Info

When I use the following code on tpuvm and use model.generate() to infer, the speed is very slow. It seems that the tpu is not used. What is the problem?

The JAX TPU device exists:

```python

import jax

num_devices = jax.device_count()

device_type = jax.devices()[0].device_kind

assert "TPU" in device_type

from transformers import FlaxMT5ForConditionalGeneration, T5Tokenizer

model = FlaxMT5ForConditionalGeneration.from_pretrained("google/mt5-small")

tokenizer = T5Tokenizer.from_pretrained("google/mt5-small")

input_context = "The dog"

# encode input context

input_ids = tokenizer(input_context, return_tensors="np").input_ids

# generate candidates using sampling

outputs = model.generate(input_ids=input_ids, max_length=20, top_k=30, do_sample=True)

print(outputs)

```

### Who can help?

_No response_

### Information

- [ ] The official example scripts

- [ ] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

```python

import jax

num_devices = jax.device_count()

device_type = jax.devices()[0].device_kind

assert "TPU" in device_type

from transformers import FlaxMT5ForConditionalGeneration, T5Tokenizer

model = FlaxMT5ForConditionalGeneration.from_pretrained("google/mt5-small")

tokenizer = T5Tokenizer.from_pretrained("google/mt5-small")

input_context = "The dog"

# encode input context

input_ids = tokenizer(input_context, return_tensors="np").input_ids

# generate candidates using sampling

outputs = model.generate(input_ids=input_ids, max_length=20, top_k=30, do_sample=True)

print(outputs)

```

### Expected behavior

Expect it to be fast

|

https://github.com/huggingface/transformers/issues/20794

|

closed

|

[] | 2022-12-16T09:15:32Z

| 2023-05-21T15:03:06Z

| null |

joytianya

|

huggingface/optimum

| 595

|

Document and (possibly) improve the `use_past`, `use_past_in_inputs`, `use_present_in_outputs` API

|

### Feature request

As the title says.

Basically, for `OnnxConfigWithPast` there are three attributes:

- `use_past_in_inputs`: to specify that the exported model should have `past_key_values` as inputs

- `use_present_in_outputs`: to specify that the exported model should have `past_key_values` as outputs

- `use_past`, which is basically used for either of the previous attributes when those are left unspecified
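The fallback described in the last bullet can be illustrated with a minimal sketch. It assumes a simple dataclass in which `None` means "left unspecified". `PastConfigSketch` is a hypothetical name and does not reproduce optimum's actual `OnnxConfigWithPast` code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PastConfigSketch:
    """Hypothetical stand-in for the three flags; not the optimum implementation."""

    use_past: bool = False
    # None means "not specified by the caller"
    use_past_in_inputs: Optional[bool] = None
    use_present_in_outputs: Optional[bool] = None

    def __post_init__(self) -> None:
        # Fall back to `use_past` only for the flags that were left unspecified.
        if self.use_past_in_inputs is None:
            self.use_past_in_inputs = self.use_past
        if self.use_present_in_outputs is None:
            self.use_present_in_outputs = self.use_past


# use_past=True alone enables past_key_values on both the input and output side
both = PastConfigSketch(use_past=True)
# an explicit flag overrides the fallback: outputs only, no past inputs
outputs_only = PastConfigSketch(use_past=True, use_past_in_inputs=False)
```

Under this reading, `use_past` acts only as a default. An explicitly set per-side flag always wins, which is what allows a model that emits `past_key_values` without consuming them.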

These attributes are not currently documented, and their meaning may be unclear to users.

It may also be possible to find a better way of handling them.

cc @mht-sharma @fxmarty

### Motivation

The current approach works, but it might not be the best way of solving the problem, and it could confuse potential contributors.

### Your contribution

I can work on this.

|

https://github.com/huggingface/optimum/issues/595

|

closed

|

[

"documentation",

"Stale"

] | 2022-12-15T13:57:43Z

| 2025-07-03T02:16:51Z

| 2

|

michaelbenayoun

|

huggingface/datasets

| 5,362

|

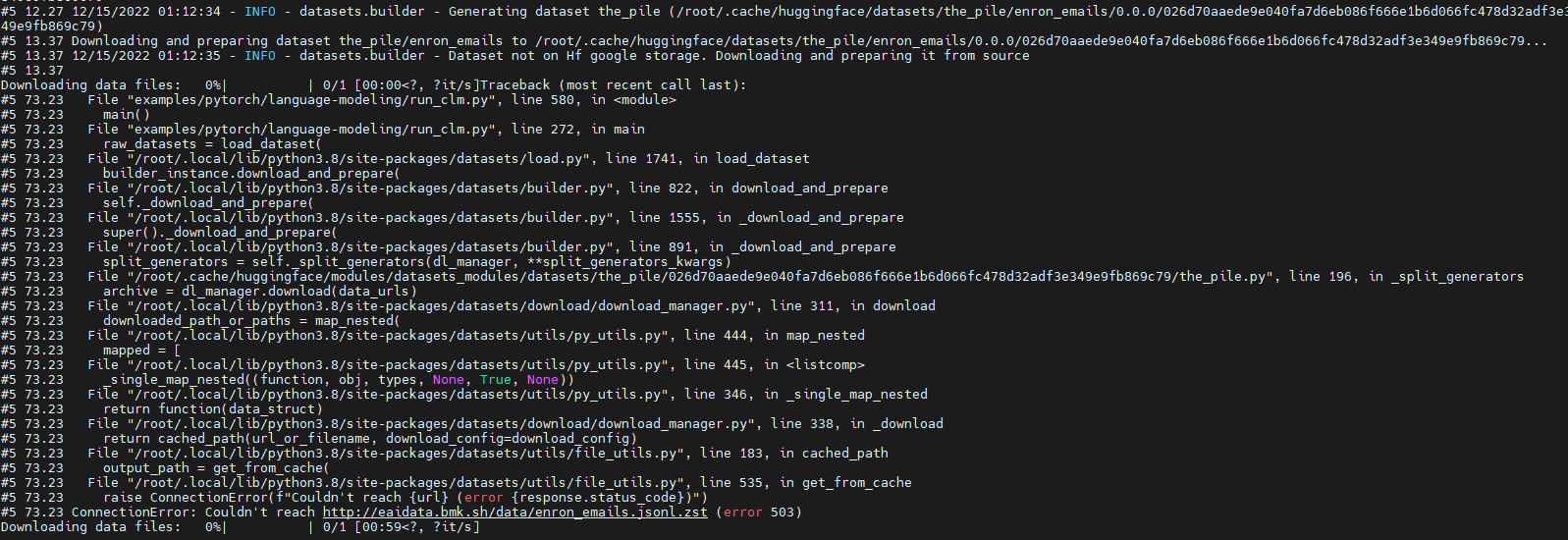

Running 'GPT-J' fails because the dataset download fails ('ConnectionError: Couldn't reach http://eaidata.bmk.sh/data/enron_emails.jsonl.zst')

|

### Describe the bug

Running the model "GPT-J" with the dataset "the_pile" fails.

The failure output is as follows:

It looks like this is because "http://eaidata.bmk.sh/data/enron_emails.jsonl.zst" is unreachable.

### Steps to reproduce the bug

Steps to reproduce this issue:

git clone https://github.com/huggingface/transformers

cd transformers

python examples/pytorch/language-modeling/run_clm.py --model_name_or_path EleutherAI/gpt-j-6B --dataset_name the_pile --dataset_config_name enron_emails --do_eval --output_dir /tmp/output --overwrite_output_dir

### Expected behavior

This issue appears to be caused by "http://eaidata.bmk.sh/data/enron_emails.jsonl.zst" being unreachable.

Is there another way to download the dataset "the_pile"?

Is there a way to cache the dataset "the_pile" so that Hugging Face does not need to download it at runtime?

### Environment info

huggingface_hub version: 0.11.1

Platform: Linux-5.15.0-52-generic-x86_64-with-glibc2.35

Python version: 3.9.12

Running in iPython ?: No

Running in notebook ?: No

Running in Google Colab ?: No

Token path ?: /home/taosy/.huggingface/token

Has saved token ?: False

Configured git credential helpers:

FastAI: N/A

Tensorflow: N/A

Torch: N/A

Jinja2: N/A

Graphviz: N/A

Pydot: N/A

|

https://github.com/huggingface/datasets/issues/5362

|

closed

|

[] | 2022-12-15T01:23:03Z

| 2022-12-15T07:45:54Z

| 2

|

shaoyuta

|

pytorch/TensorRT

| 1,547

|

❓ [Question] How can I load a TensorRT model generated with `trtexec`?

|

## ❓ Question

How can I load a TensorRT model engine (.trt or .plan) generated with `trtexec` into PyTorch?

I generated the following TensorRT model engine from an ONNX file using the `trtexec` tool provided by NVIDIA:

```
trtexec --onnx=../2.\ ONNX/CLIP-B32-image.onnx \
--saveEngine=../4.\ TensorRT/CLIP-B32-image.trt \
--minShapes=input:1x3x224x224 \
--optShapes=input:1x3x224x224 \
--maxShapes=input:32x3x224x224 \
--fp16
```

I want to load it into PyTorch so that I can use PyTorch's DataLoader for fast batch inference.
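The `--minShapes`/`--maxShapes` flags above define one optimization profile in which only the batch dimension varies, from 1 to 32. Any DataLoader batch fed to the engine therefore has to stay inside those bounds. The following is a minimal sketch, assuming batch shapes are available as plain tuples. `fits_profile` and `batch_sizes` are hypothetical helpers and are not part of TensorRT or Torch-TensorRT. Loading the engine itself is not shown here.

```python
from typing import List, Tuple

Shape = Tuple[int, ...]

# Profile bounds copied from the trtexec flags (--minShapes / --maxShapes)
PROFILE_MIN: Shape = (1, 3, 224, 224)
PROFILE_MAX: Shape = (32, 3, 224, 224)


def fits_profile(shape: Shape, min_shape: Shape = PROFILE_MIN,
                 max_shape: Shape = PROFILE_MAX) -> bool:
    """Return True if every dimension of `shape` lies within the profile's [min, max]."""
    if len(shape) != len(min_shape):
        return False
    return all(lo <= dim <= hi for dim, lo, hi in zip(shape, min_shape, max_shape))


def batch_sizes(num_samples: int, max_batch: int = PROFILE_MAX[0]) -> List[int]:
    """Batch sizes a sequential DataLoader with batch_size=max_batch, drop_last=False yields."""
    full, rest = divmod(num_samples, max_batch)
    return [max_batch] * full + ([rest] if rest else [])
```

Because the profile's minimum batch is 1, a DataLoader with `batch_size=32` stays valid even for its final, partial batch. A larger `batch_size` would need an engine rebuilt with a bigger `--maxShapes`.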

|

https://github.com/pytorch/TensorRT/issues/1547

|

closed

|

[

"question"

] | 2022-12-13T11:47:49Z

| 2022-12-13T17:49:06Z

| null |

javiabellan

|

huggingface/datasets

| 5,354

|

Consider using "Sequence" instead of "List"

|

### Feature request

Hi, please consider using `Sequence` type annotation instead of `List` in function arguments such as in [`Dataset.from_parquet()`](https://github.com/huggingface/datasets/blob/main/src/datasets/arrow_dataset.py#L1088). It leads to type checking errors, see below.

**How to reproduce**

```py
from datasets import Dataset

list_of_filenames = ["foo.parquet", "bar.parquet"]
ds = Dataset.from_parquet(list_of_filenames)
```

**Expected mypy output:**

```
Success: no issues found
```

**Actual mypy output:**

```
test.py:19: error: Argument 1 to "from_parquet" of "Dataset" has incompatible type "List[str]"; expected "Union[Union[str, bytes, PathLike[Any]], List[Union[str, bytes, PathLike[Any]]]]" [arg-type]
test.py:19: note: "List" is invariant -- see https://mypy.readthedocs.io/en/stable/common_issues.html#variance
test.py:19: note: Consider using "Sequence" instead, which is covariant
```

**Env:** mypy 0.991, Python 3.10.0, datasets 2.7.1
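The mypy note above is the crux of the request. `List` is invariant, so `List[str]` is not a `List[Union[str, bytes, PathLike]]`. A read-only `Sequence` is covariant, so `Sequence[str]` does satisfy `Sequence[Union[str, bytes, PathLike]]`. The following is a minimal sketch of a signature in that spirit. `normalize_paths` is a hypothetical helper for illustration and is not the `datasets` implementation.

```python
import os
from typing import List, Sequence, Union

# Mirrors the element type from the mypy message: str, bytes, or os.PathLike
PathLike = Union[str, bytes, os.PathLike]


def normalize_paths(path_or_paths: Union[PathLike, Sequence[PathLike]]) -> List[PathLike]:
    """Accept a single path or any sequence of paths (list, tuple, ...)."""
    # str and bytes are themselves Sequences, so single paths must be checked first.
    if isinstance(path_or_paths, (str, bytes, os.PathLike)):
        return [path_or_paths]
    return list(path_or_paths)


# With the Sequence annotation, mypy accepts a List[str] argument
list_of_filenames = ["foo.parquet", "bar.parquet"]
paths = normalize_paths(list_of_filenames)
```

The `isinstance` check comes before the sequence branch because a bare `"foo.parquet"` would otherwise be split into single characters.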

|

https://github.com/huggingface/datasets/issues/5354

|

open

|

[

"enhancement",

"good first issue"

] | 2022-12-12T15:39:45Z

| 2025-11-21T22:35:10Z

| 13

|

tranhd95

|

huggingface/transformers

| 20,733

|

Verify that a test in `LayoutLMv3` 's tokenizer is checking what we want

|

I'm taking the liberty of opening an issue to share a question that has been in the back of my mind. Now that I'll have less time to devote to `transformers`, I'd rather share it before it's forgotten.