repo

stringclasses 147

values | number

int64 1

172k

| title

stringlengths 2

476

| body

stringlengths 0

5k

| url

stringlengths 39

70

| state

stringclasses 2

values | labels

listlengths 0

9

| created_at

timestamp[ns, tz=UTC]date 2017-01-18 18:50:08

2026-01-06 07:33:18

| updated_at

timestamp[ns, tz=UTC]date 2017-01-18 19:20:07

2026-01-06 08:03:39

| comments

int64 0

58

⌀ | user

stringlengths 2

28

|

|---|---|---|---|---|---|---|---|---|---|---|

huggingface/datasets

| 5,665

|

Feature request: IterableDataset.push_to_hub

|

### Feature request

It'd be great to have a lazy push to hub, similar to the lazy loading we have with `IterableDataset`.

Suppose you'd like to filter [LAION](https://huggingface.co/datasets/laion/laion400m) based on certain conditions, but as LAION doesn't fit into your disk, you'd like to leverage streaming:

```

from datasets import load_dataset

dataset = load_dataset("laion/laion400m", streaming=True, split="train")

```

Then you could filter the dataset based on certain conditions:

```

filtered_dataset = dataset.filter(lambda example: example['HEIGHT'] > 400)

```

In order to persist this dataset and push it back to the hub, one currently needs to first load the entire filtered dataset on disk and then push:

```

from datasets import Dataset

Dataset.from_generator(filtered_dataset.__iter__).push_to_hub(...)

```

It would be great if we can instead lazy push to the data to the hub (basically stream the data to the hub), not being limited by our disk size:

```

filtered_dataset.push_to_hub("my-filtered-dataset")

```

### Motivation

This feature would be very useful for people that want to filter huge datasets without having to load the entire dataset or a filtered version thereof on their local disk.

### Your contribution

Happy to test out a PR :)

|

https://github.com/huggingface/datasets/issues/5665

|

closed

|

[

"enhancement"

] | 2023-03-23T09:53:04Z

| 2025-06-06T16:13:22Z

| 13

|

NielsRogge

|

pytorch/examples

| 1,128

|

Question about the difference between at::Tensor and torch::Tensor in PyTorch c++

|

I think the document of the PyTorch c++ library is not quite complete.

I noticed that there are some codes in the cppdoc use torch::Tensor, especially in the “Tensor Basics” and “Tensor Creation API”. I can’t find “torch::Tensor” in “Library API” but the “at::Tensor “.

I want to know is there any difference between them, and where can I find a more complete document about “PyTorch cpp”

|

https://github.com/pytorch/examples/issues/1128

|

closed

|

[] | 2023-03-23T06:59:46Z

| 2023-03-25T01:56:58Z

| 1

|

Ningreka

|

pytorch/pytorch

| 97,364

|

Confused as to where a script is.

|

According to pytorch/torch/_C/__init__.pyi.in there's supposed to be a torch/aten script but I can't find it, has this been phased out, because if it has is it in an older version of PyTorch? It's just without it, it completely stops one of the programs I downloaded from working, called Colossalai. It tries to call from aten.upsample_nearest2d_backward.vec and can't. According to ChatGPT the last version of PyTorch it saw, PyTorch 1.9.0 has aten in it, but both versions that you can download from the get started on the PyTorch website don't have it. Any recommendations would be great thanks.

|

https://github.com/pytorch/pytorch/issues/97364

|

closed

|

[] | 2023-03-22T18:04:44Z

| 2023-03-24T17:03:18Z

| null |

Shikamaru5

|

huggingface/datasets

| 5,660

|

integration with imbalanced-learn

|

### Feature request

Wouldn't it be great if the various class balancing operations from imbalanced-learn were available as part of datasets?

### Motivation

I'm trying to use imbalanced-learn to balance a dataset, but it's not clear how to get the two to interoperate - what would be great would be some examples. I've looked online, asked gpt-4, but so far not making much progress.

### Your contribution

If I can get this working myself I can submit a PR with example code to go in the docs

|

https://github.com/huggingface/datasets/issues/5660

|

closed

|

[

"enhancement",

"wontfix"

] | 2023-03-22T11:05:17Z

| 2023-07-06T18:10:15Z

| 1

|

tansaku

|

pytorch/TensorRT

| 1,758

|

❓ [Question] The compilation process does not display errors, but the program does not continue...

|

With resnet it works fine, but with my model it compiles but doesn't output the result. I don't know if there is a problem with Input.

|

https://github.com/pytorch/TensorRT/issues/1758

|

closed

|

[

"question",

"No Activity"

] | 2023-03-22T02:30:28Z

| 2023-07-02T00:02:37Z

| null |

AllesOderNicht

|

huggingface/safetensors

| 202

|

`safetensor.torch.save_file()` throws `RuntimeError` - any recommended way to enforce?

|

was confronted with `RuntimeError: Some tensors share memory, this will lead to duplicate memory on disk and potential differences when loading them again`.

Can we explicitly disregard "**potential** differences"?

|

https://github.com/huggingface/safetensors/issues/202

|

closed

|

[] | 2023-03-21T21:24:38Z

| 2024-06-06T02:29:48Z

| 26

|

drahnreb

|

pytorch/text

| 2,125

|

How to install torchtext for cmake c++?

|

https://github.com/pytorch/text/issues/2125

|

open

|

[] | 2023-03-21T19:07:38Z

| 2023-06-06T22:01:16Z

| null |

Toocic

|

|

pytorch/data

| 1,104

|

Add documentation about custom Shuffle and Sharding DataPipe

|

### 📚 The doc issue

TorchData has a few special graph functions to handle Shuffle and Sharding DataPipe. But, we never document what is expected for those graph functions, which leads users to extend custom shuffle and sharding by diving into our code base.

We should add clear document about the expected methods attached to Shuffle or Sharding DataPipe.

This problem has been discussed in the #1081 as well, but I want to track documentation issue separately

### Suggest a potential alternative/fix

_No response_

|

https://github.com/meta-pytorch/data/issues/1104

|

open

|

[] | 2023-03-21T18:14:29Z

| 2023-03-21T21:47:32Z

| 0

|

ejguan

|

huggingface/optimum

| 906

|

Optimum export of whisper raises ValueError: There was an error while processing timestamps, we haven't found a timestamp as last token. Was WhisperTimeStampLogitsProcessor used?

|

### System Info

```shell

optimum: 1.7.1

Python: 3.8.3

transformers: 4.27.2

platform: Windows 10

```

### Who can help?

@philschmid @michaelbenayoun

### Information

- [ ] The official example scripts

- [x] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [x] My own task or dataset (give details below)

### Reproduction

1. Convert the model to ONNX:

```

python -m optimum.exporters.onnx --model openai/whisper-tiny.en whisper_onnx/

```

2. Due to [another bug in the pipeline function](https://github.com/huggingface/optimum/issues/905), you may need to comment out the lines in the generate function which raises an error for unused model kwargs:

https://github.com/huggingface/transformers/blob/48327c57182fdade7f7797d1eaad2d166de5c55b/src/transformers/generation/utils.py#L1104-L1108

3. Try to transcribe longer audio clip:

```python

import onnxruntime

from transformers import pipeline, AutoProcessor

from optimum.onnxruntime import ORTModelForSpeechSeq2Seq

whisper_model_name = './whisper_onnx/'

processor = AutoProcessor.from_pretrained(whisper_model_name)

session_options = onnxruntime.SessionOptions()

model_ort = ORTModelForSpeechSeq2Seq.from_pretrained(

whisper_model_name,

use_io_binding=True,

session_options=session_options

)

generator_ort = pipeline(

task="automatic-speech-recognition",

model=model_ort,

feature_extractor=processor.feature_extractor,

tokenizer=processor.tokenizer,

)

out = generator_ort(

'https://xenova.github.io/transformers.js/assets/audio/ted_60.wav',

return_timestamps=True,

chunk_length_s=30,

stride_length_s=5

)

print(f'{out=}')

```

4. This raises the error:

```python

│ 878 │ │ if return_timestamps: │

│ 879 │ │ │ # Last token should always be timestamps, so there shouldn't be │

│ 880 │ │ │ # leftover │

│ ❱ 881 │ │ │ raise ValueError( │

│ 882 │ │ │ │ "There was an error while processing timestamps, we haven't found a time │

│ 883 │ │ │ │ " WhisperTimeStampLogitsProcessor used?" │

│ 884 │ │ │ ) │

╰──────────────────────────────────────────────────────────────────────────────────────────────────╯

ValueError: There was an error while processing timestamps, we haven't found a timestamp as last token. Was WhisperTimeStampLogitsProcessor used?

```

### Expected behavior

The program should act like the transformers version and not crash:

```python

from transformers import pipeline

transcriber = pipeline('automatic-speech-recognition', 'openai/whisper-tiny.en')

text = transcriber(

'https://xenova.github.io/transformers.js/assets/audio/ted_60.wav',

return_timestamps=True,

chunk_length_s=30,

stride_length_s=5

)

print(f'{text=}')

# outputs correctly

```

|

https://github.com/huggingface/optimum/issues/906

|

closed

|

[

"bug"

] | 2023-03-21T13:45:10Z

| 2023-03-24T18:26:17Z

| 3

|

xenova

|

pytorch/vision

| 7,438

|

Feedback on Video APIs

|

### Feedback request

With torchaudio's recent success in getting a clean FFMPEG build with a full support for FFMPEG 5 and 6 (something we can't replicate in torchvision easily yet), we are thinking of adopting their API and joining efforts to have a better support for video reading.

With that in mind, we were hoping to gather a some feedback from TV users who rely on video reader (or would like to use it but find it hard to do so):

1. What are your main pain points with our current API?

2. What do you wish was supported?

3. What are the most important features of a video IO for you?

We can't promise we'll support everything (of course), but we'd love to gather as much feedback as possible and get as much of it incorporated as possible.

|

https://github.com/pytorch/vision/issues/7438

|

open

|

[

"question",

"needs discussion",

"module: io",

"module: video"

] | 2023-03-21T13:20:36Z

| 2024-05-20T14:50:59Z

| null |

bjuncek

|

huggingface/datasets

| 5,653

|

Doc: save_to_disk, `num_proc` will affect `num_shards`, but it's not documented

|

### Describe the bug

[`num_proc`](https://huggingface.co/docs/datasets/main/en/package_reference/main_classes#datasets.DatasetDict.save_to_disk.num_proc) will affect `num_shards`, but it's not documented

### Steps to reproduce the bug

Nothing to reproduce

### Expected behavior

[document of `num_shards`](https://huggingface.co/docs/datasets/main/en/package_reference/main_classes#datasets.DatasetDict.save_to_disk.num_shards) explicitly says that it depends on `max_shard_size`, it should also mention `num_proc`.

### Environment info

datasets main document

|

https://github.com/huggingface/datasets/issues/5653

|

closed

|

[

"documentation",

"good first issue"

] | 2023-03-21T05:25:35Z

| 2023-03-24T16:36:23Z

| 1

|

RmZeta2718

|

pytorch/kineto

| 743

|

Questions about ROCm profiler

|

Hi @mwootton @aaronenyeshi ,

I found some interesting results for the models running on NVIDIA A100 and AMD MI210 GPUs. For example, I tested model resnext50_32x4d in [TorchBench](https://github.com/pytorch/benchmark). resnext50_32x4d obtains about 4.89X speedup on MI210. However, when I use PyTorch Profiler to profile models on MI210, the profile trace is strange. The total execution time of resnext50_32x4d is about 32ms on A100 and 7ms on MI210. But in the profile traces, the execution time is about 117ms on A100 and 106ms on MI210. I tested PyTorch 1.13.1 with CUDA 11.7 and ROCm 5.2. And the profile traces have been attached. Do you have any ideas?

Another question is that what do the GPU kernels do before the `Profiler Step` in ROCm profiling trace? These kernels take about 45s but no python calling context is shown in the trace view.

[resnext50.zip](https://github.com/pytorch/kineto/files/11021559/resnext50.zip)

|

https://github.com/pytorch/kineto/issues/743

|

closed

|

[

"question"

] | 2023-03-20T18:11:16Z

| 2023-10-24T17:39:57Z

| null |

FindHao

|

huggingface/dataset-viewer

| 965

|

Change the limit of started jobs? all kinds -> per kind

|

Currently, the `QUEUE_MAX_JOBS_PER_NAMESPACE` parameter limits the number of started jobs for the same namespace (user or organization). Maybe we should enforce this limit **per job kind** instead of **globally**.

|

https://github.com/huggingface/dataset-viewer/issues/965

|

closed

|

[

"question",

"improvement / optimization"

] | 2023-03-20T17:40:45Z

| 2023-04-29T15:03:57Z

| null |

severo

|

huggingface/dataset-viewer

| 964

|

Kill a job after a maximum duration?

|

The heartbeat already allows to detect if a job has crashed and to generate an error in that case. But some jobs can take forever, while not crashing. Should we set a maximum duration for the jobs, in order to save resources and free the queue? I imagine that we could automatically kill a job that takes more than 20 minutes to run, and insert an error in the cache.

|

https://github.com/huggingface/dataset-viewer/issues/964

|

closed

|

[

"question",

"improvement / optimization"

] | 2023-03-20T17:37:35Z

| 2023-03-23T13:16:33Z

| null |

severo

|

huggingface/optimum

| 903

|

Support transformers export to ggml format

|

### Feature request

ggml is gaining traction (e.g. llama.cpp has 10k stars), and it would be great to extend optimum.exporters and enable the community to export PyTorch/Tensorflow transformers weights to the format expected by ggml, having a more streamlined and single-entry export.

This could avoid duplicates as

https://github.com/ggerganov/llama.cpp/blob/master/convert-pth-to-ggml.py

https://github.com/ggerganov/whisper.cpp/blob/master/models/convert-pt-to-ggml.py

https://github.com/ggerganov/ggml/blob/master/examples/gpt-j/convert-h5-to-ggml.py

### Motivation

/

### Your contribution

I could have a look at it and submit a POC, cc @NouamaneTazi @ggerganov

Open to contribution as well, I don't expect it to be too much work

|

https://github.com/huggingface/optimum/issues/903

|

open

|

[

"feature-request",

"help wanted",

"exporters"

] | 2023-03-20T12:51:51Z

| 2023-07-03T04:51:18Z

| 2

|

fxmarty

|

pytorch/TensorRT

| 1,749

|

How to import after compilation

|

Show me that I don't have this package when I import torch_tensorrt

|

https://github.com/pytorch/TensorRT/issues/1749

|

closed

|

[

"question",

"No Activity"

] | 2023-03-20T10:35:37Z

| 2023-06-29T00:02:42Z

| null |

AllesOderNicht

|

pytorch/rl

| 977

|

[Feature Request] How to implement algorithms with multiple optimise phase like PPG?

|

## Motivation

I'm trying to implement [PPG](https://proceedings.mlr.press/v139/cobbe21a) and [DNA](https://arxiv.org/pdf/2206.10027.pdf) algorithms with torchrl, and both algorithms have more than one optimise phase in a single training loop. However, I suggest the [Trainer class](https://pytorch.org/rl/reference/trainers.html) doesn't support multiple loss modules or optimisers.

## Solution

I wish there will be an example code of how to implement the aforementioned algorithms, or alternatively, good guidance on how to customise the Trainer.

## Checklist

- [ x] I have checked that there is no similar issue in the repo

|

https://github.com/pytorch/rl/issues/977

|

closed

|

[

"enhancement"

] | 2023-03-20T04:57:59Z

| 2023-03-21T06:44:16Z

| null |

levilovearch

|

huggingface/datasets

| 5,650

|

load_dataset can't work correct with my image data

|

I have about 20000 images in my folder which divided into 4 folders with class names.

When i use load_dataset("my_folder_name", split="train") this function create dataset in which there are only 4 images, the remaining 19000 images were not added there. What is the problem and did not understand. Tried converting images and the like but absolutely nothing worked

|

https://github.com/huggingface/datasets/issues/5650

|

closed

|

[] | 2023-03-18T13:59:13Z

| 2023-07-24T14:13:02Z

| 21

|

WiNE-iNEFF

|

pytorch/pytorch

| 97,026

|

How to get list of all valid devices?

|

### 📚 The doc issue

`torch.testing.get_all_device_types()`

yields all valid devices on the current machine however unlike `torch._tensor_classes` , `torch.testing.get_all_dtypes()`, and `import typing; typing.get_args(torch.types.Device)`, there doesn't seem to be a comprehensive list of all valid device types, which gets listed when I force an error

```

torch.devcie('asdasjdfas')

RuntimeError: Expected one of cpu, cuda, ipu, xpu, mkldnn, opengl, opencl, ideep, hip, ve, fpga, ort, xla, lazy, vulkan, mps, meta, hpu, privateuseone device type at start of device string: asdasjdfas

```

### Suggest a potential alternative/fix

```

torch._device_names = cpu, cuda, ipu, xpu, mkldnn, opengl, opencl, ideep, hip, ve, fpga, ort, xla, lazy, vulkan, mps, meta, hpu, privateuseone

```

cc @svekars @carljparker

|

https://github.com/pytorch/pytorch/issues/97026

|

open

|

[

"module: docs",

"triaged"

] | 2023-03-17T15:37:00Z

| 2023-03-20T23:49:13Z

| null |

dsm-72

|

pytorch/kineto

| 742

|

How can I get detailed aten::op name like add.Tensor/abs.out?

|

I wonder if I could trace detailed op name like add.Tensor、add.Scalar、sin.out、abs.out

Currently the profiler only gives me add/sin/abs, etc.

Is there a method to acquire detailed dispatched op name?

|

https://github.com/pytorch/kineto/issues/742

|

closed

|

[

"question"

] | 2023-03-17T05:25:42Z

| 2024-04-23T15:31:20Z

| null |

Hurray0

|

pytorch/cppdocs

| 16

|

How to set up pytorch for c++ (with g++) via commandline not cmake

|

I have a Lapop with a nvidia graphicscard and I'm trying to use pytorch for cuda with g++. But i couldn't find any good information about dependecies e.g and my compiler always trohws errors, I'm currently using this command I found on the internet: "g++ -std=c++14 main.cpp -I ${TORCH_DIR}/include/torch/csrc/api/include/ -I ${TORCH_DIR}/include/ -L ${TORCH_DIR}/lib/ -L /usr/local/cuda/lib64 -L /usr/local/cuda/nvvm/lib64 -ltorch -lc10 -lc10_cuda -lnvrtc -lcudart_static -ldl -lrt -pthread -o out", but it just says: "torch/torch.h: file not found"

|

https://github.com/pytorch/cppdocs/issues/16

|

closed

|

[] | 2023-03-13T21:15:08Z

| 2023-03-18T22:36:04Z

| null |

usr577

|

huggingface/dataset-viewer

| 924

|

Support webhook version 3?

|

The Hub provides different formats for the webhooks. The current version, used in the public feature (https://huggingface.co/docs/hub/webhooks) is version 3. Maybe we should support version 3 soon.

|

https://github.com/huggingface/dataset-viewer/issues/924

|

closed

|

[

"question",

"refactoring / architecture"

] | 2023-03-13T13:39:59Z

| 2023-04-21T15:03:54Z

| null |

severo

|

huggingface/datasets

| 5,632

|

Dataset cannot convert too large dictionnary

|

### Describe the bug

Hello everyone!

I tried to build a new dataset with the command "dict_valid = datasets.Dataset.from_dict({'input_values': values_array})".

However, I have a very large dataset (~400Go) and it seems that dataset cannot handle this.

Indeed, I can create the dataset until a certain size of my dictionnary, and then I have the error "OverflowError: Python int too large to convert to C long".

Do you know how to solve this problem?

Unfortunately I cannot give a reproductible code because I cannot share a so large file, but you can find the code below (it's a test on only a part of the validation data ~10Go, but it's already the case).

Thank you!

### Steps to reproduce the bug

SAVE_DIR = './data/'

features = h5py.File(SAVE_DIR+'features.hdf5','r')

valid_data = features["validation"]["data/features"]

v_array_values = [np.float32(item[()]) for item in valid_data.values()]

for i in range(len(v_array_values)):

v_array_values[i] = v_array_values[i].round(decimals=5)

dict_valid = datasets.Dataset.from_dict({'input_values': v_array_values})

### Expected behavior

The code is expected to give me a Huggingface dataset.

### Environment info

python: 3.8.15

numpy: 1.22.3

datasets: 2.3.2

pyarrow: 8.0.0

|

https://github.com/huggingface/datasets/issues/5632

|

open

|

[] | 2023-03-13T10:14:40Z

| 2023-03-16T15:28:57Z

| 1

|

MaraLac

|

pytorch/pytorch

| 96,655

|

What is the state of support for AD of BatchNormalization and DropOut layers?

|

I have come to this issue from this post.

https://pytorch.org/functorch/stable/notebooks/per_sample_grads.html

## Background

What I am doing requires per-sample gradient (in fact, I migrated from TF, so I do not have much experience with pytorch, but I have a sufficient understanding of NN training).

When reading the post, I could not figure out whether functorch's `vmap` supports AD function of BN and DropOut layers.

In my understanding, these layers are relatively popular.

Because they are not pure functions (different behaviors in training and testing modes), and also not pure (e.g., BN layer accumulates the average across the different forward pass in training mode), which makes me wonder:

## My questions

1. Does functorch's `vmap` support AD function of BN and DropOut layers?

2. If yes, how does it do that?

I tried searching for issues with BatchNormalization or DropOut keywords, but the results were fragmented and I still do not know what is the current state now.

Opacus says that only `EmbeddingBag` is not supported (https://github.com/pytorch/opacus/blob/5aa378ea98df9caf8ca1987ee4d636219267d17e/opacus/grad_sample/functorch.py#L22).

Could anyone tell me the answer?

If possible, updating the docs to clarify the supports for these layers would be great.

Thank you very much.

cc @zou3519 @Chillee @samdow @soumith @kshitij12345 @janeyx99

|

https://github.com/pytorch/pytorch/issues/96655

|

closed

|

[

"triaged",

"module: functorch"

] | 2023-03-10T01:47:20Z

| 2023-03-15T16:02:05Z

| null |

tranvansang

|

huggingface/ethics-education

| 1

|

What is AI Ethics?

|

With the amount of hype around things like ChatGPT, AI art, etc., there are a lot of misunderstandings being propagated through the media! Additionally, many people are not aware of the ethical impacts of AI, and they're even less aware about the work that folks in academia + industry are doing to ensure that AI systems are being developed and deployed in ways that are equitable, sustainable, etc.

This is a great opportunity for us to put together a simple explainer, with some very high-level information aimed at non-technical people, that runs through what AI Ethics is and why people should care. Format-wise, I'm aiming towards something like a light blog post.

More specifically, it would be really cool to have something that ties into the categories outlined on [hf.co/ethics](https://hf.co/ethics). A more detailed description is available here on [Google Docs](https://docs.google.com/document/d/19Ga4PX0xbRxMlAwoK-q7Xjuy9B9Z0jFvFuVYdhfcKiY/edit).

If you're interested in helping out with this, a great first step would be to collect some resources and start outlining a bullet-point draft on a Google Doc that I can share with you 😄

I've also got plans for the actual distribution of it (e.g. design-wise, distribution), which I'll follow up with soon.

|

https://github.com/huggingface/ethics-education/issues/1

|

open

|

[

"help wanted",

"explainer",

"audience: non-technical"

] | 2023-03-09T20:58:02Z

| 2023-03-17T14:50:39Z

| null |

NimaBoscarino

|

huggingface/diffusers

| 2,633

|

Asymmetric tiling

|

Hello. I'm trying to achieve tiling asymmetrically using Diffusers, in a similar fashion to the asymmetric tiling in Automatic1111's extension https://github.com/tjm35/asymmetric-tiling-sd-webui.

My understanding is that I must traverse all layers to alter the padding, in my case circular in X and constant in Y, but I would love to get advice on how to make a such change to the conv2d system in DIffusers.

Your advice is highly appreciated, as it may also help others down the road facing the same need.

|

https://github.com/huggingface/diffusers/issues/2633

|

closed

|

[

"good first issue",

"question"

] | 2023-03-09T19:09:34Z

| 2025-07-29T08:48:27Z

| null |

alejobrainz

|

huggingface/optimum

| 874

|

Assistance exporting git-large to ONNX

|

Hello! I am looking to export an image captioning Hugging Face model to ONNX (specifically I was playing with the [git-large](https://huggingface.co/microsoft/git-large) model but if anyone knows of one that might be easier to deal with in terms of exporting that is great too)

I'm trying to follow [these](https://huggingface.co/docs/transformers/serialization#exporting-a-model-for-an-unsupported-architecture) instructions for exporting an unsupported architecture, and I am a bit stuck on figuring out what base class to inherit from and how to define the custom ONNX Configuration since I'm not sure what examples to look at (the model card says this is a transformer decoder model, but it looks like i that it has both encoding and decoding so I am a bit confused)

I also found [this](https://github.com/huggingface/notebooks/blob/main/examples/onnx-export.ipynb) notebook but I am again not sure if it would work with this sort of model.

Any comments, advice, or suggestions would be so helpful -- I am feeling a bit stuck with how to proceed in deploying this model in the school capstone project I'm working on. In a worst-case scenario, can I use `from_pretrained` in my application?

|

https://github.com/huggingface/optimum/issues/874

|

closed

|

[

"Stale"

] | 2023-03-09T18:25:57Z

| 2025-06-22T02:17:24Z

| 3

|

gracemcgrath

|

huggingface/safetensors

| 190

|

Rust save ndarray using safetensors

|

I've been loving this library!

I have a question, how can I save an ndarray using safetensors?

https://docs.rs/ndarray/latest/ndarray/

For context: I am preprocessing data in rust and would like to then load it in python to do machine learning with pytorch.

|

https://github.com/huggingface/safetensors/issues/190

|

closed

|

[

"Stale"

] | 2023-03-08T22:29:11Z

| 2024-01-10T16:48:07Z

| 7

|

StrongChris

|

huggingface/optimum

| 867

|

Auto-detect framework for large models at ONNX export

|

### System Info

- `transformers` version: 4.26.1

- Platform: Linux-4.4.0-142-generic-x86_64-with-glibc2.23

- Python version: 3.9.15

- Huggingface_hub version: 0.11.1

- PyTorch version (GPU?): 1.13.0 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: no

- Using distributed or parallel set-up in script?: no

### Who can help?

@sgugger @muellerzr

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

```python

import torch

import torch.nn as nn

from transformers import GPT2Config, GPT2Tokenizer, GPT2Model

num_attention_heads = 40

num_layers = 40

hidden_size = 5120

configuration = GPT2Config(

n_embd=hidden_size,

n_layer=num_layers,

n_head=num_attention_heads

)

tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

model = GPT2Model(configuration)

tokenizer.save_pretrained('gpt2_checkpoint')

model.save_pretrained('gpt2_checkpoint')

```

```shell

python -m transformers.onnx --model=gpt2_checkpoint onnx/

```

### Expected behavior

I created a GPT2 with a parameter volume of 13B. Just for testing, refer to https://huggingface.co/docs/transformers/serialization, I save it to gpt2_checkpoint. Then convert it to onnx using transformers.onnx. Due to the large amount of parameters, `save_pretrained` saves the model as *-0001.bin, *-0002.bin and so on. Later, when running ‘python -m transformers.onnx --model=gpt2_checkpoint onnx/’, an error `FileNotFoundError: Cannot determine framework from given checkpoint location. There should be a pytorch_model.bin for PyTorch or tf_model.h5 for TensorFlow.` So, I would like to ask how to convert a model with a large number of parameters into onnx for inference.

|

https://github.com/huggingface/optimum/issues/867

|

closed

|

[

"feature-request",

"onnx"

] | 2023-03-08T03:43:53Z

| 2023-03-16T15:52:39Z

| 3

|

WangYizhang01

|

pytorch/TensorRT

| 1,730

|

❓ [Question] Does torch-tensorrt support seq2seq models?

|

## ❓ Question

Does torch-tensorrt support seq2seq models? Are there any documentation/examples?

## What you have already tried

Previously, when I tried to use TensorRT, I need to convert the original torch seq2seq model to 2 onnx files, then convert them separately to TensorRT using trtexec. Not sure if this has changed with torch-tensorrt.

Thanks!

|

https://github.com/pytorch/TensorRT/issues/1730

|

closed

|

[

"question",

"No Activity"

] | 2023-03-08T00:51:31Z

| 2023-06-19T00:02:34Z

| null |

brevity2021

|

pytorch/TensorRT

| 1,727

|

complie model failed

|

## compile model failed with torchtrt-fp32 opt

#### ERROR INFO

WARNING: [Torch-TensorRT TorchScript Conversion Context] - Tensor DataType is determined at build time for tensors not marked as input or output.

ERROR: [Torch-TensorRT TorchScript Conversion Context] - 4: [graphShapeAnalyzer.cpp::analyzeShapes::1285] Error Code 4: Miscellaneous (IShuffleLayer (Unnamed Layer* 84) [Shuffle]: reshape changes volume. Reshaping [1,512,1,(+ (CEIL_DIV (+ (# 3 (SHAPE input_0)) -4) 4) 1)] to [1,512,0].)

ERROR: [Torch-TensorRT TorchScript Conversion Context] - 2: [builder.cpp::buildSerializedNetwork::609] Error Code 2: Internal Error (Assertion enginePtr != nullptr failed. )

terminate called after throwing an instance of 'torch_tensorrt::Error'

what(): [Error thrown at core/conversion/conversionctx/ConversionCtx.cpp:147] Building serialized network failed in TensorRT

Aborted

code:

std::string min_input_shape = "1 3 32 32";

std::string opt_input_shape = "1 3 32 512";

std::string max_input_shape = "1 3 32 1024";

## Environment

> Build information about Torch-TensorRT can be found by turning on debug messages

- PyTorch Version (e.g., 1.11.0):

- CPU Architecture: x86_64

- OS (Linux):

- How you installed PyTorch (`libtorch`):

- Python version:3.8

- CUDA version:11.3

|

https://github.com/pytorch/TensorRT/issues/1727

|

closed

|

[

"question",

"component: conversion",

"No Activity"

] | 2023-03-07T04:09:45Z

| 2023-06-18T00:02:24Z

| null |

f291400

|

pytorch/audio

| 3,153

|

Google colab notebook pointing to PyTorch 1.13.1

|

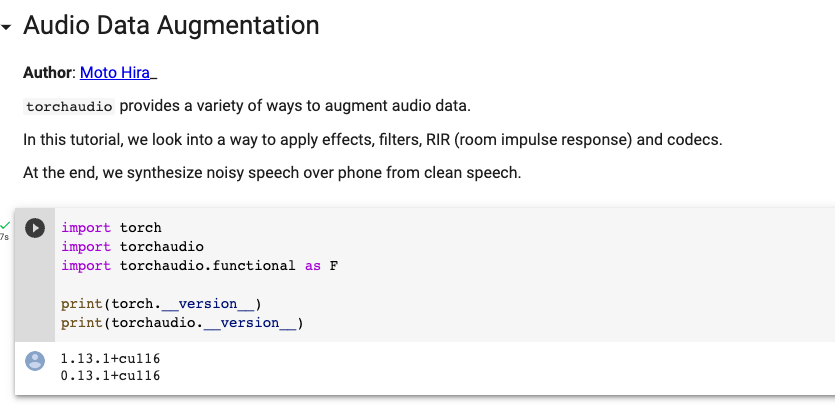

### 📚 The doc issue

When I open https://pytorch.org/audio/main/tutorials/audio_data_augmentation_tutorial.html in google colab and try running the notebook, I see that the PyTorch version is 1.13.1

### Suggest a potential alternative/fix

_No response_

|

https://github.com/pytorch/audio/issues/3153

|

closed

|

[

"question",

"triaged"

] | 2023-03-07T02:19:06Z

| 2023-03-07T15:41:03Z

| null |

agunapal

|

huggingface/datasets

| 5,615

|

IterableDataset.add_column is unable to accept another IterableDataset as a parameter.

|

### Describe the bug

`IterableDataset.add_column` occurs an exception when passing another `IterableDataset` as a parameter.

The method seems to accept only eager evaluated values.

https://github.com/huggingface/datasets/blob/35b789e8f6826b6b5a6b48fcc2416c890a1f326a/src/datasets/iterable_dataset.py#L1388-L1391

I wrote codes below to make it.

```py

def add_column(dataset: IterableDataset, name: str, add_dataset: IterableDataset, key: str) -> IterableDataset:

iter_add_dataset = iter(add_dataset)

def add_column_fn(example):

if name in example:

raise ValueError(f"Error when adding {name}: column {name} is already in the dataset.")

return {name: next(iter_add_dataset)[key]}

return dataset.map(add_column_fn)

```

Is there other way to do it? Or is it intended?

### Steps to reproduce the bug

Thie codes below occurs `NotImplementedError`

```py

from datasets import IterableDataset

def gen(num):

yield {f"col{num}": 1}

yield {f"col{num}": 2}

yield {f"col{num}": 3}

ids1 = IterableDataset.from_generator(gen, gen_kwargs={"num": 1})

ids2 = IterableDataset.from_generator(gen, gen_kwargs={"num": 2})

new_ids = ids1.add_column("new_col", ids1)

for row in new_ids:

print(row)

```

### Expected behavior

`IterableDataset.add_column` is able to task `IterableDataset` and lazy evaluated values as a parameter since IterableDataset is lazy evalued.

### Environment info

- `datasets` version: 2.8.0

- Platform: Linux-3.10.0-1160.36.2.el7.x86_64-x86_64-with-glibc2.17

- Python version: 3.9.7

- PyArrow version: 11.0.0

- Pandas version: 1.5.3

|

https://github.com/huggingface/datasets/issues/5615

|

closed

|

[

"wontfix"

] | 2023-03-07T01:52:00Z

| 2023-03-09T15:24:05Z

| 1

|

zsaladin

|

huggingface/safetensors

| 188

|

How to extract weights from onnx to safetensors

|

How to extract weights from onnx to safetensors in rust?

|

https://github.com/huggingface/safetensors/issues/188

|

closed

|

[] | 2023-03-06T09:21:31Z

| 2023-03-07T14:23:16Z

| 2

|

oovm

|

huggingface/datasets

| 5,609

|

`load_from_disk` vs `load_dataset` performance.

|

### Describe the bug

I have downloaded `openwebtext` (~12GB) and filtered out a small amount of junk (it's still huge). Now, I would like to use this filtered version for future work. It seems I have two choices:

1. Use `load_dataset` each time, relying on the cache mechanism, and re-run my filtering.

2. `save_to_disk` and then use `load_from_disk` to load the filtered version.

The performance of these two approaches is wildly different:

* Using `load_dataset` takes about 20 seconds to load the dataset, and a few seconds to re-filter (thanks to the brilliant filter/map caching)

* Using `load_from_disk` takes 14 minutes! And the second time I tried, the session just crashed (on a machine with 32GB of RAM)

I don't know if you'd call this a bug, but it seems like there shouldn't need to be two methods to load from disk, or that they should not take such wildly different amounts of time, or that one should not crash. Or maybe that the docs could offer some guidance about when to pick which method and why two methods exist, or just how do most people do it?

Something I couldn't work out from reading the docs was this: can I modify a dataset from the hub, save it (locally) and use `load_dataset` to load it? This [post seemed to suggest that the answer is no](https://discuss.huggingface.co/t/save-and-load-datasets/9260).

### Steps to reproduce the bug

See above

### Expected behavior

Load times should be about the same.

### Environment info

- `datasets` version: 2.9.0

- Platform: Linux-5.10.102.1-microsoft-standard-WSL2-x86_64-with-glibc2.31

- Python version: 3.10.8

- PyArrow version: 11.0.0

- Pandas version: 1.5.3

|

https://github.com/huggingface/datasets/issues/5609

|

open

|

[] | 2023-03-05T05:27:15Z

| 2023-07-13T18:48:05Z

| 4

|

davidgilbertson

|

huggingface/sentence-transformers

| 1,856

|

What it is the ideal sentence size to train with TSDAE?

|

I have an unlabeled data that contains 80k texts, with about 250 tokens on average(with bert-base-multilingual-uncased tokenizer). I want to pre-train the model on my dataset, but I'm not sure if the texts are too large. It's possible to break in small sentences, but I'm afraid that some sentences lose context.

What it is the ideal sentence size to train with TSDAE?

|

https://github.com/huggingface/sentence-transformers/issues/1856

|

open

|

[] | 2023-03-04T21:16:14Z

| 2023-03-04T21:16:14Z

| null |

Diegobm99

|

huggingface/transformers

| 21,950

|

auto_find_batch_size should say what batch size it is using

|

### Feature request

When using `auto_find_batch_size=True` in the trainer I believe it identifies the right batch size but then it doesn't log it to the console anywhere?

It would be good if it could log what batch size it is using?

### Motivation

I'd like to know what batch size it is using because then I will know roughly how big a batch can fit in memory - this info would be useful elsewhere.

### Your contribution

N/A

|

https://github.com/huggingface/transformers/issues/21950

|

closed

|

[] | 2023-03-04T08:53:25Z

| 2023-06-28T15:03:39Z

| null |

p-christ

|

huggingface/datasets

| 5,604

|

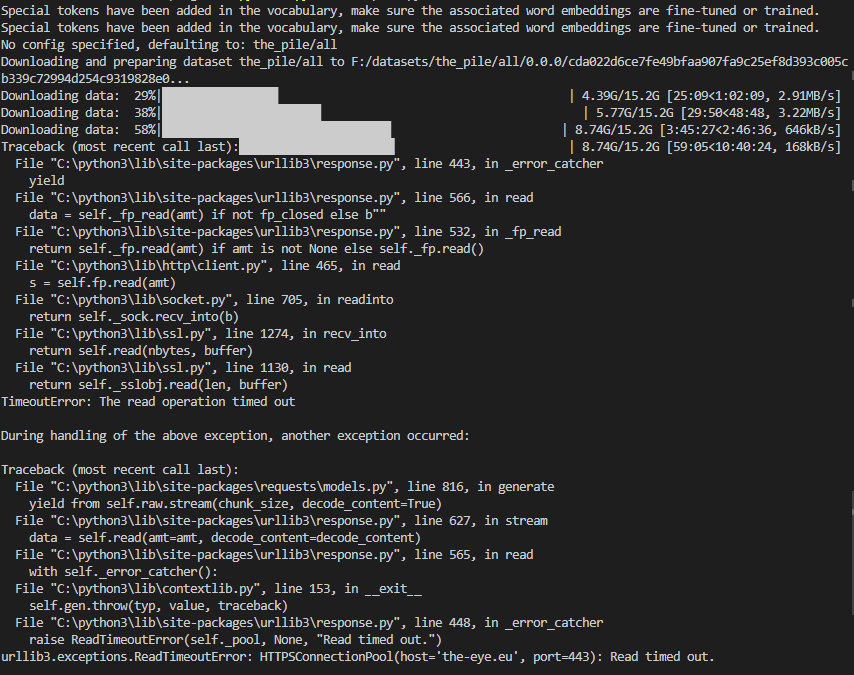

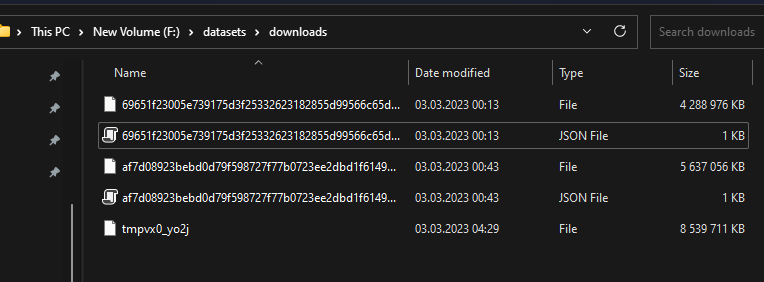

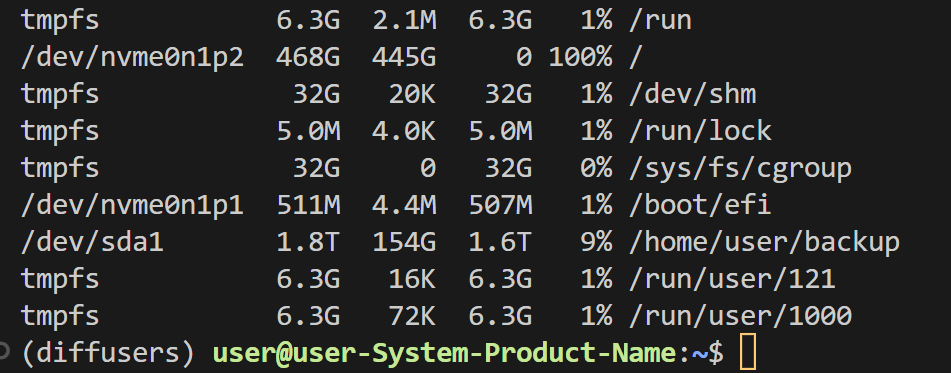

Problems with downloading The Pile

|

### Describe the bug

The downloads in the screenshot seem to be interrupted after some time and the last download throws a "Read timed out" error.

Here are the downloaded files:

They should be all 14GB like here (https://the-eye.eu/public/AI/pile/train/).

Alternatively, can I somehow download the files by myself and use the datasets preparing script?

### Steps to reproduce the bug

dataset = load_dataset('the_pile', split='train', cache_dir='F:\datasets')

### Expected behavior

The files should be downloaded correctly.

### Environment info

- `datasets` version: 2.10.1

- Platform: Windows-10-10.0.22623-SP0

- Python version: 3.10.5

- PyArrow version: 9.0.0

- Pandas version: 1.4.2

|

https://github.com/huggingface/datasets/issues/5604

|

closed

|

[] | 2023-03-03T09:52:08Z

| 2023-10-14T02:15:52Z

| 7

|

sentialx

|

huggingface/optimum

| 842

|

Auto-TensorRT engine compilation, or improved documentation for it

|

### Feature request

For decoder models with cache, it can be painful to manually compile the TensorRT engine as ONNX Runtime does not give options to specify shapes. The engine build could maybe be done automatically.

The current doc is only for `use_cache=False`, which is not very interesting. It could be improved to show how to pre-build the TRT with use_cache=True.

References:

https://huggingface.co/docs/optimum/main/en/onnxruntime/usage_guides/gpu#tensorrt-engine-build-and-warmup

https://github.com/microsoft/onnxruntime/issues/13559

### Motivation

TensorRT is fast

### Your contribution

will work on it sometime

|

https://github.com/huggingface/optimum/issues/842

|

open

|

[

"feature-request",

"onnxruntime"

] | 2023-03-02T13:50:17Z

| 2023-05-31T12:47:40Z

| 4

|

fxmarty

|

pytorch/tutorials

| 2,230

|

model.train(False) affects gradient tracking?

|

In this tutorial here it says in the comment that "# We don't need gradients on to do reporting". From what I understand the train flag only affects layers such as dropout and batch-normalization. Does it also affect gradient calculations, or is this comment wrong?

https://github.com/pytorch/tutorials/blob/6bd30cf214bf541a1c5d35cc45d10a381f57af1b/beginner_source/introyt/trainingyt.py#L293

cc @suraj813

|

https://github.com/pytorch/tutorials/issues/2230

|

closed

|

[

"question",

"intro",

"docathon-h1-2023",

"easy"

] | 2023-03-02T12:09:13Z

| 2023-06-01T01:19:02Z

| null |

MaverickMeerkat

|

huggingface/datasets

| 5,600

|

Dataloader getitem not working for DreamboothDatasets

|

### Describe the bug

Dataloader getitem is not working as before (see example of [DreamboothDatasets](https://github.com/huggingface/peft/blob/main/examples/lora_dreambooth/train_dreambooth.py#L451C14-L529))

moving Datasets to 2.8.0 solved the issue.

### Steps to reproduce the bug

1- using DreamBoothDataset to load some images

2- error after loading when trying to visualise the images

### Expected behavior

I was expecting a numpy array of the image

### Environment info

- Platform: Linux-5.10.147+-x86_64-with-glibc2.29

- Python version: 3.8.10

- PyArrow version: 9.0.0

- Pandas version: 1.3.5

|

https://github.com/huggingface/datasets/issues/5600

|

closed

|

[] | 2023-03-02T11:00:27Z

| 2023-03-13T17:59:35Z

| 1

|

salahiguiliz

|

huggingface/trl

| 180

|

what is AutoModelForCausalLMWithValueHead?

|

trl use `AutoModelForCausalLMWithValueHead`,which is base_model(eg: GPT2LMHeadModel) + fc layer,but I can't understand why need a fc head layer?

|

https://github.com/huggingface/trl/issues/180

|

closed

|

[] | 2023-02-28T07:46:49Z

| 2025-02-21T11:29:04Z

| null |

akk-123

|

pytorch/serve

| 2,162

|

How to run torchserver without log printing?

|

How to run torchserver without log printing?I didn't see the relevant command line. Could someone tell me, thank you!

|

https://github.com/pytorch/serve/issues/2162

|

closed

|

[

"triaged",

"support"

] | 2023-02-28T02:22:02Z

| 2023-03-09T20:06:37Z

| null |

mqy9787

|

huggingface/datasets

| 5,585

|

Cache is not transportable

|

### Describe the bug

I would like to share cache between two machines (a Windows host machine and a WSL instance).

I run most my code in WSL. I have just run out of space in the virtual drive. Rather than expand the drive size, I plan to move to cache to the host Windows machine, thereby sharing the downloads.

I'm hoping that I can just copy/paste the cache files, but I notice that a lot of the file names start with the path name, e.g. `_home_davidg_.cache_huggingface_datasets_conll2003_default-451...98.lock` where `home/davidg` is where the cache is in WSL.

This seems to suggest that the cache is not portable/cannot be centralised or shared. Is this the case, or are the files that start with path names not integral to the caching mechanism? Because copying the cache files _seems_ to work, but I'm not filled with confidence that something isn't going to break.

A related issue, when trying to load a dataset that should come from cache (running in WSL, pointing to cache on the Windows host) it seemed to work fine, but it still uses a WSL directory for `.cache\huggingface\modules\datasets_modules`. I see nothing in the docs about this, or how to point it to a different place.

I have asked a related question on the forum: https://discuss.huggingface.co/t/is-datasets-cache-operating-system-agnostic/32656

### Steps to reproduce the bug

View the cache directory in WSL/Windows.

### Expected behavior

Cache can be shared between (virtual) machines and be transportable.

It would be nice to have a simple way to say "Dear Hugging Face packages, please put ALL your cache in `blah/de/blah`" and have all the Hugging Face packages respect that single location.

### Environment info

```

- `datasets` version: 2.9.0

- Platform: Linux-5.10.102.1-microsoft-standard-WSL2-x86_64-with-glibc2.31

- Python version: 3.10.8

- PyArrow version: 11.0.0

- Pandas version: 1.5.3

- ```

|

https://github.com/huggingface/datasets/issues/5585

|

closed

|

[] | 2023-02-28T00:53:06Z

| 2023-02-28T21:26:52Z

| 2

|

davidgilbertson

|

pytorch/examples

| 1,121

|

About fast_neural_style

|

How many rounds did you train in the fast neural style transfer experiment? I operate according to your steps, but the effect of the model I trained is not as good as the model you provided, and why is the model file I trained less than the file you provided by 3kb? I would like to know the reason and look forward to your reply!

|

https://github.com/pytorch/examples/issues/1121

|

closed

|

[

"help wanted"

] | 2023-02-27T15:53:38Z

| 2023-08-17T09:26:17Z

| 2

|

TOUBH

|

pytorch/functorch

| 1,113

|

How to get the jacobian matrix in GCNs?

|

Hi, I'm trying to use `jacrev` to get the jacobians in graph convolution networks, but it seems like I've called the function incorrectly.

```python

import torch.nn.functional as F

import functorch

import torch_geometric

from torch_geometric.data import Data

class GCN(torch.nn.Module):

def __init__(self, input_dim, hidden_dim, output_dim):

super().__init__()

torch.manual_seed(12345)

self.conv1 = torch_geometric.nn.GCNConv(input_dim, hidden_dim, aggr='add')

self.conv2 = torch_geometric.nn.GCNConv(hidden_dim, output_dim, aggr='add')

def forward(self, x, edge_index):

x = self.conv1(x, edge_index)

x = x.relu()

x = F.dropout(x, p=0.5, training=self.training)

x = self.conv2(x, edge_index)

return x

adj_matrix = torch.ones(3,3)

edge_index = adj_matrix .nonzero().t().contiguous()

gcn = GCN(input_dim=5, hidden_dim=64, output_dim=5)

N = (128,3, 5)

x =torch.randn(N, requires_grad=True) # batch_size:128, node_num:10 , node_feature: 5

graph = Data(x=x, edge_index=edge_index)

gcn_out = gcn(graph.x, graph.edge_index)

```

Then I try to compute the jacobians of the input data `x` based on the tutorial,

```python

jacobian = functorch.vmap(functorch.jacrev(gcn))(graph.x, graph.edge_index)

```

and get the following error message:

```python

ValueError: vmap: Expected all tensors to have the same size in the mapped dimension, got sizes [128, 2] for the mapped dimension

```

|

https://github.com/pytorch/functorch/issues/1113

|

open

|

[] | 2023-02-27T13:23:50Z

| 2023-02-27T13:24:15Z

| null |

pcheng2

|

huggingface/dataset-viewer

| 857

|

Contribute to https://github.com/huggingface/huggingface.js?

|

https://github.com/huggingface/huggingface.js is a JS client for the Hub and inference. We could propose to add a client for the datasets-server.

|

https://github.com/huggingface/dataset-viewer/issues/857

|

closed

|

[

"question"

] | 2023-02-27T12:27:43Z

| 2023-04-08T15:04:09Z

| null |

severo

|

huggingface/dataset-viewer

| 852

|

Store the parquet metadata in their own file?

|

See https://github.com/huggingface/datasets/issues/5380#issuecomment-1444281177

> From looking at Arrow's source, it seems Parquet stores metadata at the end, which means one needs to iterate over a Parquet file's data before accessing its metadata. We could mimic Dask to address this "limitation" and write metadata in a _metadata/_common_metadata file in to_parquet/push_to_hub, which we could then use to optimize reads (if present). Plus, it's handy that PyArrow can also parse these metadata files.

|

https://github.com/huggingface/dataset-viewer/issues/852

|

closed

|

[

"question"

] | 2023-02-27T08:29:12Z

| 2023-05-01T15:04:07Z

| null |

severo

|

pytorch/functorch

| 1,112

|

Error about using a grad transform with in-place operation is inconsistent with and without DDP

|

Hi,

I was using `torch.func` in pytorch 2.0 to compute the Hessian-vector product of a neural network.

I first used `torch.func.functional_call` to define a functional version of the neural network model, and then proceeded to use `torch.func.jvp` and `torch.func.grad` to compute the hvp.

The above works when I was using one gpu without parallel processing. However, when I wrapped the model with Distributed Data Parallel (DDP), it gave the following error:

`*** RuntimeError: During a grad (vjp, jvp, grad, etc) transform, the function provided attempted to call in-place operation (aten::copy_) that would mutate a captured Tensor. This is not supported; please rewrite the function being transformed to explicitly accept the mutated Tensor(s) as inputs.`

I am confused about this error, because if there were indeed such in-place operations (which I couldn't find in my model.forward() code), I'd expect this error to occur regardless of DDP. Given the inconsistent behaviour, can I still trust the hvp result when I wasn't using DDP?

My torch version: is `2.0.0.dev20230119+cu117`

|

https://github.com/pytorch/functorch/issues/1112

|

open

|

[] | 2023-02-24T23:09:30Z

| 2023-03-14T13:56:55Z

| 1

|

XuchanBao

|

huggingface/datasets

| 5,570

|

load_dataset gives FileNotFoundError on imagenet-1k if license is not accepted on the hub

|

### Describe the bug

When calling ```load_dataset('imagenet-1k')``` FileNotFoundError is raised, if not logged in and if logged in with huggingface-cli but not having accepted the licence on the hub. There is no error once accepting.

### Steps to reproduce the bug

```

from datasets import load_dataset

imagenet = load_dataset("imagenet-1k", split="train", streaming=True)

FileNotFoundError: Couldn't find a dataset script at /content/imagenet-1k/imagenet-1k.py or any data file in the same directory. Couldn't find 'imagenet-1k' on the Hugging Face Hub either: FileNotFoundError: Dataset 'imagenet-1k' doesn't exist on the Hub

```

tested on a colab notebook.

### Expected behavior

I would expect a specific error indicating that I have to login then accept the dataset licence.

I find this bug very relevant as this code is on a guide on the [Huggingface documentation for Datasets](https://huggingface.co/docs/datasets/about_mapstyle_vs_iterable)

### Environment info

google colab cpu-only instance

|

https://github.com/huggingface/datasets/issues/5570

|

closed

|

[] | 2023-02-23T16:44:32Z

| 2023-07-24T15:18:50Z

| 2

|

buoi

|

huggingface/optimum

| 810

|

ORTTrainer using DataParallel instead of DistributedDataParallel causes downstream errors

|

### System Info

```shell

optimum 1.6.4

python 3.8

```

### Who can help?

@JingyaHuang @echarlaix

### Information

- [X] The official example scripts

- [ ] My own modified scripts

### Tasks

- [X] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

FROM mcr.microsoft.com/azureml/aifx/stable-ubuntu2004-cu117-py38-torch1131:latest

RUN git clone https://github.com/huggingface/optimum.git && cd optimum && python setup.py install

RUN python examples/onnxruntime/training/summarization/run_summarization.py --model_name_or_path t5-small --do_train --dataset_name cnn_dailymail --dataset_config "3.0.0" --source_prefix "summarize: " --predict_with_generate --fp16

### Expected behavior

This is expected to run t5-small with ONNXRuntime, however the model defaults to pytorch execution. I believe this is due to optimum's usage of torch.nn.DataParallel in trainer.py [here](https://github.com/huggingface/optimum/blob/dbb43fb622727f2206fa2a2b3b479f6efe82945b/optimum/onnxruntime/trainer.py#L1576) which is incompatible with ONNXRuntime.

PyTorch's [official documentation](https://pytorch.org/docs/stable/generated/torch.nn.DataParallel.html) recommends using DistributedDataParallel over DataParallel for multi-gpu training. Is there a reason why DataParallel is used here and, if not, can it be changed to use DistributedDataParallel?

|

https://github.com/huggingface/optimum/issues/810

|

closed

|

[

"bug"

] | 2023-02-22T22:15:41Z

| 2023-03-19T19:01:32Z

| 2

|

prathikr

|

huggingface/optimum

| 809

|

Better Transformer with QA pipeline returns padding issue

|

### System Info

```shell

Optimum version: 1.6.4

Platform: Linux

Python version: 3.10

Transformers version: 4.26.1

Accelerate version: 0.16.0

Torch version: 1.13.1+cu117

```

### Who can help?

@philschmid

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [X] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

Notebook link to reproduce the same: https://colab.research.google.com/drive/1g-EzDtvEMIO1VjYBFJDlYWuKoBjaHxDd?usp=sharing

Code snippet:

```python

from optimum.pipelines import pipeline

qa_model = "bert-large-uncased-whole-word-masking-finetuned-squad"

reader = pipeline("question-answering", qa_model, accelerator="bettertransformer")

reader(question=["What is your name?", "What do you like to do in your free time?"] * 10, context=["My name is Bookworm and I like to read books."] * 20, batch_size=16)

```

Error persists on both cpu and cuda device. Works as expected if batches passed in require no padding.

Error received:

```

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/app/.venv/lib/python3.10/site-packages/transformers/pipelines/question_answering.py", line 393, in __call__

return super().__call__(examples, **kwargs)

File "/app/.venv/lib/python3.10/site-packages/transformers/pipelines/base.py", line 1065, in __call__

outputs = [output for output in final_iterator]

File "/app/.venv/lib/python3.10/site-packages/transformers/pipelines/base.py", line 1065, in <listcomp>

outputs = [output for output in final_iterator]

File "/app/.venv/lib/python3.10/site-packages/transformers/pipelines/pt_utils.py", line 124, in __next__

item = next(self.iterator)

File "/app/.venv/lib/python3.10/site-packages/transformers/pipelines/pt_utils.py", line 266, in __next__

processed = self.infer(next(self.iterator), **self.params)

File "/app/.venv/lib/python3.10/site-packages/torch/utils/data/dataloader.py", line 628, in __next__

data = self._next_data()

File "/app/.venv/lib/python3.10/site-packages/torch/utils/data/dataloader.py", line 671, in _next_data

data = self._dataset_fetcher.fetch(index) # may raise StopIteration

File "/app/.venv/lib/python3.10/site-packages/torch/utils/data/_utils/fetch.py", line 44, in fetch

return self.collate_fn(data)

File "/app/.venv/lib/python3.10/site-packages/transformers/pipelines/base.py", line 169, in inner

padded[key] = _pad(items, key, _padding_value, padding_side)

File "/app/.venv/lib/python3.10/site-packages/transformers/pipelines/base.py", line 92, in _pad

tensor = torch.zeros((batch_size, max_length), dtype=dtype) + padding_value

TypeError: unsupported operand type(s) for +: 'Tensor' and 'NoneType'

```

The system versions mentioned above are from my server setup although it seems reproducible from the notebook with different torch/cuda installations!

### Expected behavior

Expected behavior is to produce correct results without error.

|

https://github.com/huggingface/optimum/issues/809

|

closed

|

[

"bug"

] | 2023-02-22T18:51:23Z

| 2023-02-27T11:29:09Z

| 2

|

vrdn-23

|

pytorch/text

| 2,072

|

how to build torchtext in cpp wiht cmake?

|

HI, guys, I want to use torchtext with liborch in cpp like cmake build torchvision in cpp, but I has try ,but meet some error in windows system,I don't know why some dependency subdirectory is empty, how to build it then include with cpp ?

thanks

````

-- Building for: Visual Studio 17 2022

-- Selecting Windows SDK version 10.0.20348.0 to target Windows 10.0.22621.

-- The C compiler identification is MSVC 19.34.31942.0

-- The CXX compiler identification is MSVC 19.34.31942.0

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Check for working C compiler: C:/Program Files/Microsoft Visual Studio/2022/Professional/VC/Tools/MSVC/14.34.31933/bin/Hostx64/x64/cl.exe - skipped

-- Detecting C compile features

-- Detecting C compile features - done

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Check for working CXX compiler: C:/Program Files/Microsoft Visual Studio/2022/Professional/VC/Tools/MSVC/14.34.31933/bin/Hostx64/x64/cl.exe - skipped

-- Detecting CXX compile features

-- Detecting CXX compile features - done

CMake Error at third_party/CMakeLists.txt:8 (add_subdirectory):

The source directory

C:/Apps/text-main/third_party/re2

does not contain a CMakeLists.txt file.

CMake Error at third_party/CMakeLists.txt:9 (add_subdirectory):

The source directory

C:/Apps/text-main/third_party/double-conversion

does not contain a CMakeLists.txt file.

CMake Error at third_party/CMakeLists.txt:10 (add_subdirectory):

The source directory

C:/Apps/text-main/third_party/sentencepiece

does not contain a CMakeLists.txt file.

CMake Error at third_party/CMakeLists.txt:11 (add_subdirectory):

The source directory

C:/Apps/text-main/third_party/utf8proc

does not contain a CMakeLists.txt file.

-- Configuring incomplete, errors occurred!

PS C:\Apps\text-main\build>

````

|

https://github.com/pytorch/text/issues/2072

|

closed

|

[] | 2023-02-22T15:33:37Z

| 2023-02-23T02:53:05Z

| null |

mullerhai

|

pytorch/xla

| 4,666

|

Got error when build xla from source

|

Hi! I am trying to build xla wheel by following the setup guide here: https://github.com/pytorch/xla/blob/master/CONTRIBUTING.md

I skipped building torch by `pip install torch==1.13.0` into virtualenv, and then run `env BUILD_CPP_TESTS=0 python setup.py bdist_wheel` under pytorch/xla. I got the following error:

```bash

ERROR: /home/ubuntu/pytorch/xla/third_party/tensorflow/tensorflow/compiler/xla/xla_client/BUILD:42:20: Linking tensorflow/compiler/xla/xla_client/libxla_computation_client.so failed: (Exit 1): gcc failed: error executing command /usr/bin/gcc @bazel-out/k8-opt/bin/tensorflow/compiler/xla/xla_client/libxla_computation_client.so-2.params

bazel-out/k8-opt/bin/tensorflow/core/profiler/convert/_objs/xplane_to_tools_data/xplane_to_tools_data.pic.o:xplane_to_tools_data.cc:function tensorflow::profiler::ConvertMultiXSpacesToToolData(tensorflow::profiler::SessionSnapshot const&, std::basic_string_view<char, std::char_traits<char> >, absl::lts_20220623::flat_hash_map<std::string, std::variant<int, std::string>, absl::lts_20220623::container_internal::StringHash, absl::lts_20220623::container_internal::StringEq, std::allocator<std::pair<std::string const, std::variant<int, std::string> > > > const&): error: undefined reference to 'tensorflow::profiler::ConvertHloProtoToToolData(tensorflow::profiler::SessionSnapshot const&, std::basic_string_view<char, std::char_traits<char> >, absl::lts_20220623::flat_hash_map<std::string, std::variant<int, std::string>, absl::lts_20220623::container_internal::StringHash, absl::lts_20220623::container_internal::StringEq, std::allocator<std::pair<std::string const, std::variant<int, std::string> > > > const&)'

collect2: error: ld returned 1 exit status

Target //tensorflow/compiler/xla/xla_client:libxla_computation_client.so failed to build

Use --verbose_failures to see the command lines of failed build steps.

INFO: Elapsed time: 1657.036s, Critical Path: 351.63s

INFO: 9274 processes: 746 internal, 8528 local.

FAILED: Build did NOT complete successfully

Failed to build external libraries: ['/home/ubuntu/pytorch/xla/build_torch_xla_libs.sh', '-O', '-D_GLIBCXX_USE_CXX11_ABI=0', 'bdist_wheel']

```

|

https://github.com/pytorch/xla/issues/4666

|

closed

|

[

"question",

"build"

] | 2023-02-21T19:56:52Z

| 2025-05-06T13:32:43Z

| null |

aws-bowencc

|

pytorch/xla

| 4,662

|

CUDA momery:how can i control xla reserved in total by PyTorch with GPU

|

## ❓ Questions and Help

I see xla will reserve almost all memory on GPU,but when i run code both with xla and cuda, it will be error of `torch.cuda.OutOfMemoryError`。

```python

File "/workspace/volume/hqp-nas/xla/mmdetection/mmdet/models/backbones/resnet.py", line 298, in forward

out = _inner_forward(x)

File "/workspace/volume/hqp-nas/xla/mmdetection/mmdet/models/backbones/resnet.py", line 275, in _inner_forward

out = self.conv2(out)

File "/root/anaconda3/envs/pytorch/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1194, in _call_impl

return forward_call(*input, **kwargs)

File "/root/anaconda3/envs/pytorch/lib/python3.8/site-packages/mmcv/ops/modulated_deform_conv.py", line 338, in forward

output = modulated_deform_conv2d(x, offset, mask, weight1, bias1,

File "/root/anaconda3/envs/pytorch/lib/python3.8/site-packages/mmcv/ops/modulated_deform_conv.py", line 142, in forward

ext_module.modulated_deform_conv_forward(

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 52.00 MiB (GPU 0; 79.20 GiB total capacity; 752.52 MiB already allocated; 27.25 MiB free; 886.00 MiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

```

We can see there is 80GB in the single card of GPU-A100。but CUDA of Pytorch only 886.00 MiB reserved,and xla does reserve almost all memory on GPU。if i need cuda to exec operators that xla is not supported,it need more memory。

```markdown

Tue Feb 21 12:39:45 2023

+-----------------------------------------------------------+

| NVIDIA-SMI 520.61.05 Driver Version: 520.61.05 CUDA Version: 11.8 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA A100-SXM... Off | 00000000:16:00.0 Off | 0 |

| N/A 33C P0 88W / 400W | 81073MiB / 81920MiB | 0% Default |

| | | Disabled |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

+-----------------------------------------------------------------------------+

```

If i can contorl the size of XLA reserved, it will be nice。any answer will be helpful。

|

https://github.com/pytorch/xla/issues/4662

|

closed

|

[

"question",

"xla:gpu"

] | 2023-02-21T12:43:09Z

| 2025-05-07T12:13:34Z

| null |

qipengh

|

pytorch/kineto

| 727

|

How to trace torch cuda time in C++ using kineto?

|

**The problem**

Hi, I am using the pytorch profile to trace the gpu performance of models, and it works well in python.

For example:

```

import torch

from torch.autograd.profiler import profile, record_function

with profile(record_shapes=True, use_cuda=True, use_kineto=True, with_stack=False) as prof:

with record_function("model_inference"):

a = torch.randn(128, 128, device=torch.device('cuda:0'))

b = torch.randn(128, 128, device=torch.device('cuda:0'))

c = a + b

print(prof.key_averages().table(sort_by="cuda_time_total", row_limit=50))

```

Now, I want to implement the above code in C++ and get each operator's cuda (kernel) time. But I found very few relevant examples. So I implemented a C++ program against the python interface.

```

#include <torch/csrc/autograd/profiler_kineto.h>

...

...

const std::set<torch::autograd::profiler::ActivityType> activities(

{torch::autograd::profiler::ActivityType::CPU, torch::autograd::profiler::ActivityType::CUDA});

torch::autograd::profiler::prepareProfiler(

torch::autograd::profiler::ProfilerConfig(

torch::autograd::profiler::ProfilerState::KINETO, false, false), activities);

torch::autograd::profiler::enableProfiler(

torch::autograd::profiler::ProfilerConfig(

torch::autograd::profiler::ProfilerState::KINETO, false, false), activities);

auto a = torch::rand({128, 128}, {at::kCUDA});

auto b = torch::rand({128, 128}, {at::kCUDA});

auto c = a + b;

auto profiler_results_ptr = torch::autograd::profiler::disableProfiler();

const auto& kineto_events = profiler_results_ptr->events();

for (const auto e : kineto_events) {

std::cout << e.name() << " " << e.cudaElapsedUs() << " " << e.durationUs()<<std::endl;

}

```

But the printed cuda time is all equal to -1 like:

```

aten::empty -1 847

aten::uniform_ -1 3005641

aten::rand -1 3006600

aten::empty -1 21

aten::uniform_ -1 53

aten::rand -1 82

aten::add -1 156

cudaStreamIsCapturing -1 8

_ZN2at6native90_GLOBAL__N__66_tmpxft_000055e0_00000000_13_DistributionUniform_compute_86_cpp1_ii_f2fea07d43distribution_elementwise_grid_stride_kernelIfLi4EZNS0_9templates4cuda21uniform_and_transformIffLm4EPNS_17CUDAGeneratorImplEZZZNS4_14uniform_kernelIS7_EEvRNS_18TensorIteratorBaseEddT_ENKUlvE_clEvENKUlvE2_clEvEUlfE_EEvSA_T2_T3_EUlP24curandStatePhilox4_32_10E0_ZNS1_27distribution_nullary_kernelIffLi4ES7_SJ_SE_EEvSA_SF_RKSG_T4_EUlifE_EEviNS_15PhiloxCudaStateET1_SF_ -1 2

cudaLaunchKernel -1 3005499

cudaStreamIsCapturing -1 4

_ZN2at6native90_GLOBAL__N__66_tmpxft_000055e0_00000000_13_DistributionUniform_compute_86_cpp1_ii_f2fea07d43distribution_elementwise_grid_stride_kernelIfLi4EZNS0_9templates4cuda21uniform_and_transformIffLm4EPNS_17CUDAGeneratorImplEZZZNS4_14uniform_kernelIS7_EEvRNS_18TensorIteratorBaseEddT_ENKUlvE_clEvENKUlvE2_clEvEUlfE_EEvSA_T2_T3_EUlP24curandStatePhilox4_32_10E0_ZNS1_27distribution_nullary_kernelIffLi4ES7_SJ_SE_EEvSA_SF_RKSG_T4_EUlifE_EEviNS_15PhiloxCudaStateET1_SF_ -1 1

cudaLaunchKernel -1 14

void at::native::vectorized_elementwise_kernel<4, at::native::AddFunctor<float>, at::detail::Array<char*, 3> >(int, at::native::AddFunctor<float>, at::detail::Array<char*, 3>) -1 1

cudaLaunchKernel -1 16

```

I carefully compared the differences between the above two programs (python and C++) but did not find the cause of the problem. I also tried other parameter combinations and couldn't get the real cuda time.

**Expected behavior**

It can output cuda time of each operator in C++ program like python.

**Environment version**

OS: CentOS release 7.5 (Final)

nvidia driver version: 460.32.03

CUDA version: 11.2

PyTorch version: 1.9.0+cu111

Python version: 3.6.5

GPU: A10

|

https://github.com/pytorch/kineto/issues/727

|

closed

|

[

"question"

] | 2023-02-21T11:37:24Z

| 2023-10-10T15:07:23Z

| null |

TianShaoqing

|

pytorch/kineto

| 726

|

How to remove log output similar to “ActivityProfilerController.cpp:294] Completed Stage: Warm Up”

|

## What I encounter

when I use torch.profie to profie a large model, I found my log file have many lines like:

```

STAGE:2023-02-21 15:15:48 101902:101902 ActivityProfilerController.cpp:294] Completed Stage: Warm Up

STAGE:2023-02-21 15:15:48 101903:101903 ActivityProfilerController.cpp:294] Completed Stage: Warm Up

STAGE:2023-02-21 15:15:48 101898:101898 ActivityProfilerController.cpp:300] Completed Stage: Collection

STAGE:2023-02-21 15:15:48 101899:101899 ActivityProfilerController.cpp:300] Completed Stage: Collection

STAGE:2023-02-21 15:15:48 101903:101903 ActivityProfilerController.cpp:300] Completed Stage: Collection

STAGE:2023-02-21 15:15:48 101902:101902 ActivityProfilerController.cpp:300] Completed Stage: Collection

STAGE:2023-02-21 15:15:48 101898:101898 ActivityProfilerController.cpp:294] Completed Stage: Warm Up

STAGE:2023-02-21 15:15:48 101899:101899 ActivityProfilerController.cpp:294] Completed Stage: Warm Up

STAGE:2023-02-21 15:15:48 101903:101903 ActivityProfilerController.cpp:294] Completed Stage: Warm Up

STAGE:2023-02-21 15:15:48 101902:101902 ActivityProfilerController.cpp:294] Completed Stage: Warm Up

STAGE:2023-02-21 15:15:48 101898:101898 ActivityProfilerController.cpp:300] Completed Stage: Collection

STAGE:2023-02-21 15:15:48 101899:101899 ActivityProfilerController.cpp:300] Completed Stage: Collection

STAGE:2023-02-21 15:15:48 101903:101903 ActivityProfilerController.cpp:300] Completed Stage: Collection

STAGE:2023-02-21 15:15:48 101902:101902 ActivityProfilerController.cpp:300] Completed Stage: Collection

STAGE:2023-02-21 15:15:48 101898:101898 ActivityProfilerController.cpp:294] Completed Stage: Warm Up

STAGE:2023-02-21 15:15:48 101903:101903 ActivityProfilerController.cpp:294] Completed Stage: Warm Up

STAGE:2023-02-21 15:15:48 101899:101899 ActivityProfilerController.cpp:294] Completed Stage: Warm Up

STAGE:2023-02-21 15:15:48 101902:101902 ActivityProfilerController.cpp:294] Completed Stage: Warm Up

STAGE:2023-02-21 15:15:48 101903:101903 ActivityProfilerController.cpp:300] Completed Stage: Collection

STAGE:2023-02-21 15:15:48 101902:101902 ActivityProfilerController.cpp:300] Completed Stage: Collection

STAGE:2023-02-21 15:15:48 101903:101903 ActivityProfilerController.cpp:294] Completed Stage: Warm Up

STAGE:2023-02-21 15:15:48 101898:101898 ActivityProfilerController.cpp:300] Completed Stage: Collection

STAGE:2023-02-21 15:15:48 101899:101899 ActivityProfilerController.cpp:300] Completed Stage: Collection

STAGE:2023-02-21 15:15:48 101902:101902 ActivityProfilerController.cpp:294] Completed Stage: Warm Up

STAGE:2023-02-21 15:15:48 101903:101903 ActivityProfilerController.cpp:300] Completed Stage: Collection

STAGE:2023-02-21 15:15:48 101902:101902 ActivityProfilerController.cpp:300] Completed Stage: Collection

STAGE:2023-02-21 15:15:48 101903:101903 ActivityProfilerController.cpp:294] Completed Stage: Warm Up

STAGE:2023-02-21 15:15:48 101902:101902 ActivityProfilerController.cpp:294] Completed Stage: Warm Up

STAGE:2023-02-21 15:15:48 101898:101898 ActivityProfilerController.cpp:294] Completed Stage: Warm Up

```

## What I expect

Is there any way to ignore or turn off the output of these useless logs? I tried to set the environment variable `KINETO_LOG_LEVEL` equal to 99, but it didn't work. thanks you all.

```python

import os

os.environ.update({'KINETO_LOG_LEVEL' : '99'})

```

## Version and platform

CentOS-7 Linux

torch 1.13.1+cu117 <pip>

torch-tb-profiler 0.4.1 <pip>

torchaudio 0.13.1+cu117 <pip>

torchvision 0.14.1+cu117 <pip>

|

https://github.com/pytorch/kineto/issues/726

|

closed

|

[

"enhancement"

] | 2023-02-21T07:46:59Z

| 2023-06-22T02:37:44Z

| null |

SolenoidWGT

|

pytorch/data

| 1,033

|

Accessing DataPipe state with MultiProcessingReadingService

|

Hi TorchData team,

I'm wondering how to access the state of the datapipe in the multi-processing context with DataLoader2 + MultiProcessingReadingService. When using no reading service, we can simply access the graph using `dataloader.datapipe`, then I can easily access the state of my datapipe using the code shown below.

However, in the multi processing case, the datapipe graph is replaced with QueueWrapper instances, and I cannot find any way to communicate with the workers to get access to the state of the data pipe (and I get the error that my StatefulIterator cannot be found on the datapipe). If I access `dl2._datapipe_before_reading_service_adapt` I do get the initial state only which makes sense since there is no state sync between the main and worker processes.

As far as I understand, this will also be a blocker for state capturing for proper DataLoader checkpointing when the MultiProcessingReadingService is being used.

Potentially, could we add a `getstate` communication primitive in `communication.messages` in order to capture the state (via getstate) of a datapipe in a worker process?

We're also open to using `sharding_round_robin_dispatch` in order to keep more information in the main process but I'm a bit confused on how to use it, if you have some sample code for me for the following case?

Running against today's master (commit a3b34a00e7d2b6694ea0d5e21fcc084080a3abae):

```python

import torchdata.datapipes as dp