File size: 4,898 Bytes

5030a4b 5187ddb 24e1e82 9af33e0 24e1e82 5e50a8c 24e1e82 5e50a8c 24e1e82 5e50a8c 24e1e82 5e50a8c 24e1e82 b749b80 24e1e82 30fc18d 24e1e82 30fc18d 24e1e82 d6ed572 24e1e82 d6ed572 24e1e82 30fbf4e 24e1e82 30fbf4e 24e1e82 5e50a8c 24e1e82 5e50a8c 24e1e82 5e50a8c 24e1e82 |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 |

---

language:

- en

tags:

- counting

- object-counting

---

# Dataset Card for Dataset Name

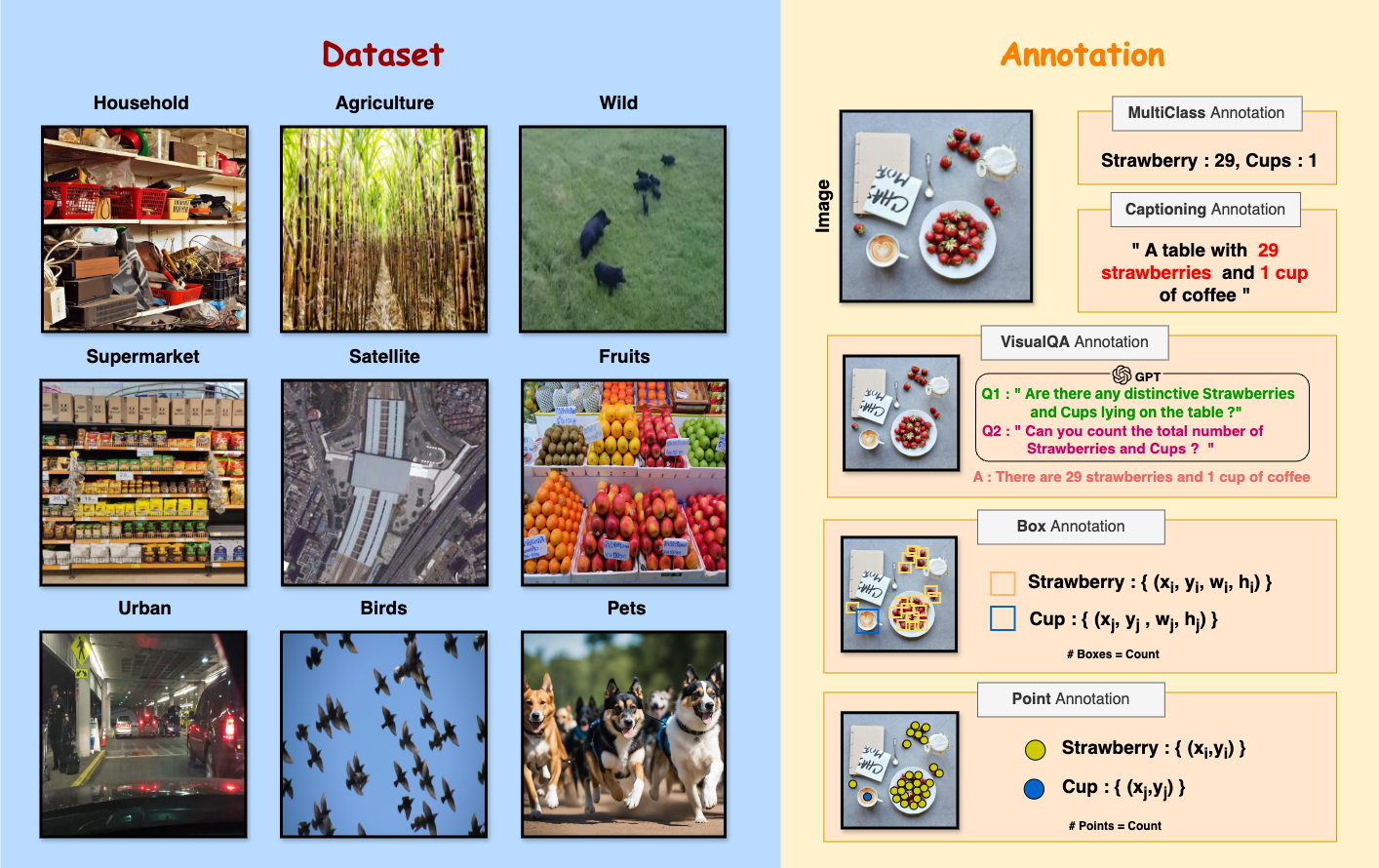

OmniCount-191 is a first-of-its-kind dataset with multi-label object counts, including points, bounding boxes, and VQA annotations.

## Dataset Details

### Dataset Description

Omnicount-191 is a dataset that caters to a broad spectrum of visual categories and instances featuring various visual categories with multiple instances and classes per image. The current datasets, primarily designed for object counting, focusing on singular object categories like humans and vehicles, fall short for multi-label object counting tasks. Despite the presence of multi-class datasets like MS COCO their utility is limited for counting due to the sparse nature of object appearance. Addressing this gap, we created a new dataset with 30,230 images spanning 191 diverse categories, including kitchen utensils, office supplies, vehicles, and animals. This dataset, featuring a wide range of object instance counts per image ranging from 1 to 160 and an average count of 10, bridges the existing void and establishes a benchmark for assessing counting models in varied scenarios.

- **Curated by:** [Anindya Mondal](https://mondalanindya.github.io), [Sauradip Nag](http://sauradip.github.io/), [Xiatian Zhu](https://surrey-uplab.github.io), [Anjan Dutta](https://www.surrey.ac.uk/people/anjan-dutta)

- **License:** [OpenRAIL]

### Dataset Sources

- **Paper :**[OmniCount: Multi-label Object Counting with Semantic-Geometric Priors](https://arxiv.org/pdf/2403.05435.pdf)

- **Demo :** [https://mondalanindya.github.io/OmniCount/](https://mondalanindya.github.io/OmniCount/)

## Uses

### Direct Use

Object Counting

### Out-of-Scope Use

Visual Question Answering (VQA), Object Detection (OD)

#### Data Collection and Processing

The data collection process for OmniCount-191 involved a team of 13 members who manually curated images from the web, released under Creative Commons (CC) licenses. The images were sourced using relevant keywords such as “Aerial Images”, “Supermarket Shelf”, “Household Fruits”, and “Many Birds and Ani- mals”. Initially, 40,000 images were considered, from which 30,230 images were selected based on the following criteria:

1. **Object instances**: Each image must contain at least five object instances, aiming to challenge object enumeration in complex scenarios;

2. **Image quality**: High-resolution images were selected to ensure clear object identification and counting;

3. **Severe occlusion**: We excluded images with significant occlusion to maintain accuracy in object counting;

4. **Object dimensions**: Images with objects too small or too distant for accurate counting or annotation were removed, ensuring all objects are adequately sized for analysis.

The selected images were annotated using the [Labelbox](https://labelbox.com) annotation platform.

### Statistics

The OmniCount-191 benchmark presents images with small, densely packed objects from multiple classes, reflecting real-world object counting scenarios. This dataset encompasses 30,230 images, with dimensions averaging 700 × 580 pixels. Each image contains an average of 10 objects, totaling 302,300 objects, with individual images ranging from 1 to 160 objects. To ensure diversity, the dataset is split into training and testing sets, with no overlap in object categories – 118 categories for training and 73 for testing, corresponding to a 60%-40% split. This results in 26,978 images for training and 3,252 for testing.

### Splits

We have prepared dedicated splits within the OmniCount-191 dataset to facilitate the assessment of object counting models under zero-shot and few-shot learning conditions. Please refer to the [technical report](https://arxiv.org/pdf/2403.05435.pdf) (Sec. 9.1, 9.2) for more detais.

## Citation

<!-- If there is a paper or blog post introducing the dataset, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

```

@article{mondal2024omnicount,

title={OmniCount: Multi-label Object Counting with Semantic-Geometric Priors},

author={Mondal, Anindya and Nag, Sauradip and Zhu, Xiatian and Dutta, Anjan},

journal={arXiv preprint arXiv:2403.05435},

year={2024}

}

```

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the dataset or dataset card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Dataset Card Authors

[Anindya Mondal](https://mondalanindya.github.io), [Sauradip Nag](http://sauradip.github.io/), [Xiatian Zhu](https://surrey-uplab.github.io), [Anjan Dutta](https://www.surrey.ac.uk/people/anjan-dutta)

## Dataset Card Contact

[More Information Needed] |