---
license: apache-2.0
datasets:
- datajuicer/redpajama-wiki-refined-by-data-juicer
- datajuicer/redpajama-arxiv-refined-by-data-juicer
- datajuicer/redpajama-c4-refined-by-data-juicer
- datajuicer/redpajama-book-refined-by-data-juicer
- datajuicer/redpajama-cc-2019-30-refined-by-data-juicer
- datajuicer/redpajama-cc-2020-05-refined-by-data-juicer
- datajuicer/redpajama-cc-2021-04-refined-by-data-juicer
- datajuicer/redpajama-cc-2022-05-refined-by-data-juicer
- datajuicer/redpajama-cc-2023-06-refined-by-data-juicer
- datajuicer/redpajama-pile-stackexchange-refined-by-data-juicer
- datajuicer/redpajama-stack-code-refined-by-data-juicer
- datajuicer/the-pile-nih-refined-by-data-juicer
- datajuicer/the-pile-europarl-refined-by-data-juicer
- datajuicer/the-pile-philpaper-refined-by-data-juicer
- datajuicer/the-pile-pubmed-abstracts-refined-by-data-juicer
- datajuicer/the-pile-pubmed-central-refined-by-data-juicer
- datajuicer/the-pile-freelaw-refined-by-data-juicer
- datajuicer/the-pile-hackernews-refined-by-data-juicer
- datajuicer/the-pile-uspto-refined-by-data-juicer
---

News

Our first data-centric LLM competition begins! Please visit the competition's official websites, FT-Data Ranker (1B Track, 7B Track), for more information.

Introduction

This is a reference LLM from Data-Juicer.

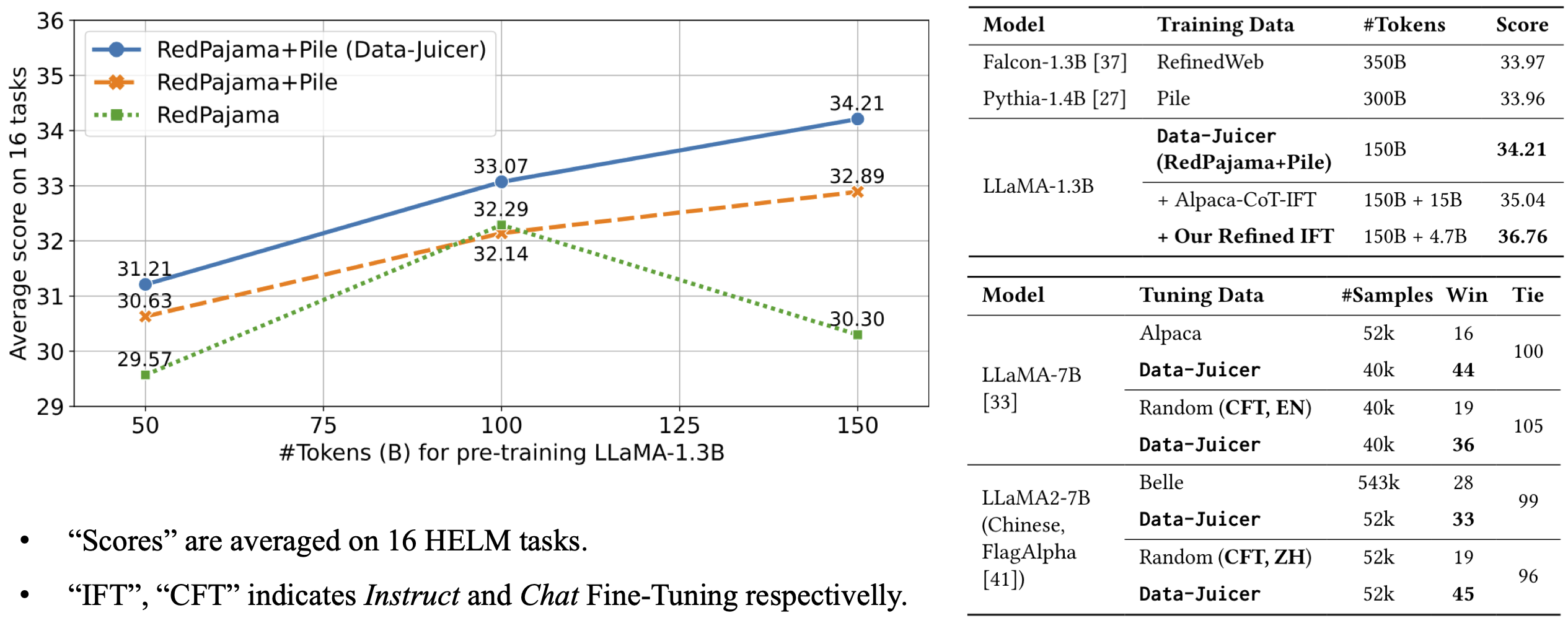

The model uses the LLaMA-1.3B architecture, and we adopt the OpenLLaMA implementation. It is pre-trained on 150B tokens of Data-Juicer's refined RedPajama and Pile. It achieves an average score of 34.21 over 16 HELM tasks, outperforming Falcon-1.3B (trained on 350B tokens from RefinedWeb), Pythia-1.4B (trained on 300B tokens from the original Pile), and Open-LLaMA-1.3B (trained on 150B tokens from the original RedPajama and Pile).
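As a rough back-of-the-envelope illustration of the comparison above (not part of the original evaluation), the sketch below uses only the token counts quoted in this card to compute training tokens per parameter and each model's token budget relative to this one. The parameter counts are assumptions: they are the nominal sizes taken from the model names (e.g. "1.3B"), not exact parameter counts, and the dictionary keys are illustrative labels.

```python
# Token budgets as quoted in this model card. Parameter counts are the
# nominal sizes from the model names (an approximation, not exact counts).
MODELS = {
    "this model (LLaMA-1.3B)": (150e9, 1.3e9),
    "Falcon-1.3B": (350e9, 1.3e9),
    "Pythia-1.4B": (300e9, 1.4e9),
    "Open-LLaMA-1.3B": (150e9, 1.3e9),
}


def tokens_per_param(tokens: float, params: float) -> float:
    """Return the number of training tokens seen per (nominal) parameter."""
    return tokens / params


def token_budget_vs_reference(reference: str) -> dict:
    """Return each model's training-token count divided by the reference's."""
    ref_tokens, _ = MODELS[reference]
    return {name: tokens / ref_tokens for name, (tokens, _) in MODELS.items()}


if __name__ == "__main__":
    ratios = token_budget_vs_reference("this model (LLaMA-1.3B)")
    for name, (tokens, params) in MODELS.items():
        print(
            f"{name:26s} {tokens / 1e9:4.0f}B tokens  "
            f"~{tokens_per_param(tokens, params):6.1f} tokens/param  "
            f"{ratios[name]:.2f}x this model's budget"
        )
```

Under these assumptions, Falcon-1.3B and Pythia-1.4B see roughly 2.3x and 2x as many training tokens as this model, while Open-LLaMA-1.3B sees the same 150B tokens, which isolates the effect of Data-Juicer's refinement in that comparison.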

For more details, please refer to our paper.