---

license: apache-2.0

language:

- en

base_model:

- fal/AuraFlow-v0.3

pipeline_tag: text-to-image

tags:

- aura

- gguf-node

widget:

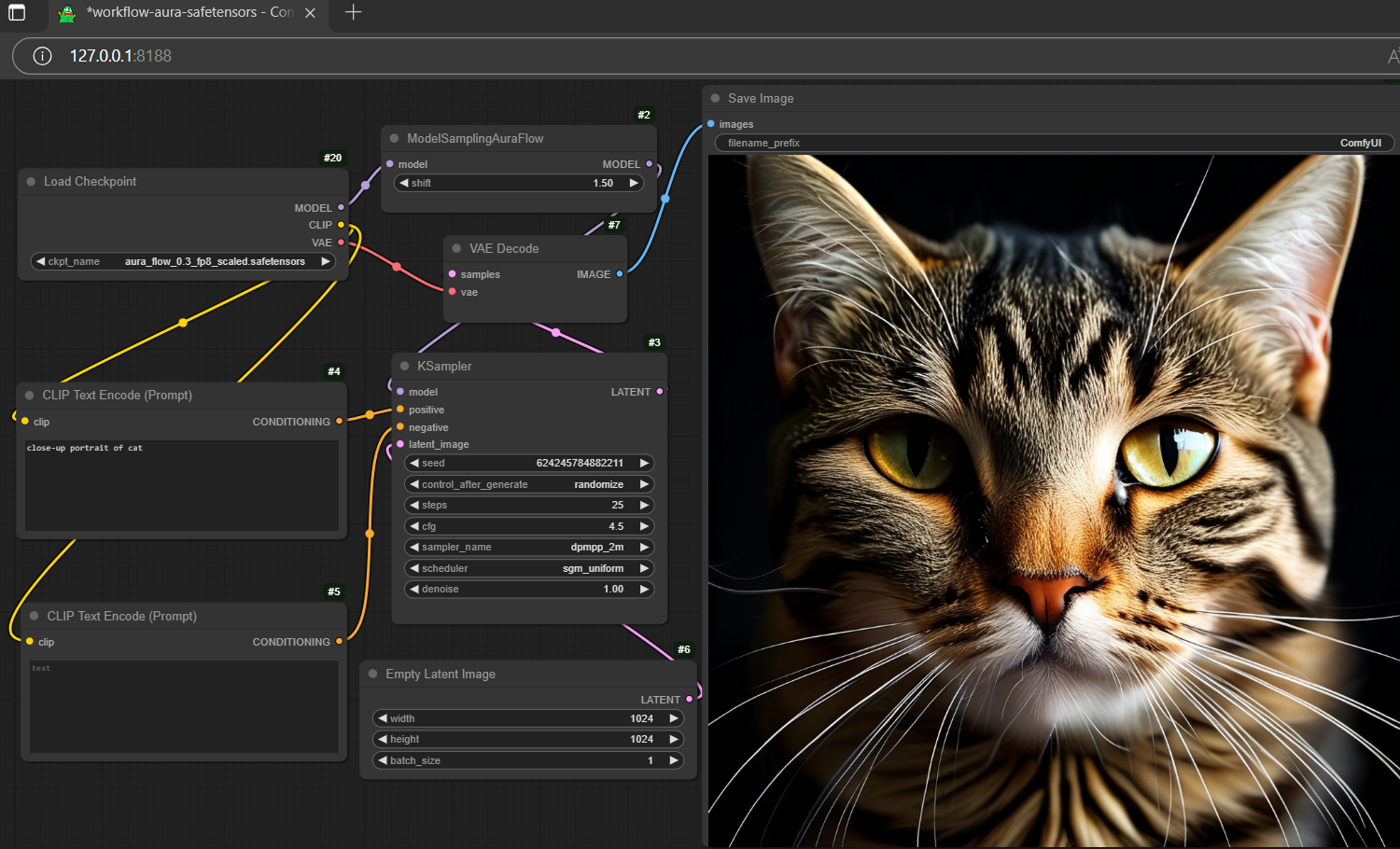

- text: close-up portrait of cat

output:

url: samples\ComfyUI_00003_.png

- text: close-up portrait of blooming daisy

output:

url: samples\ComfyUI_00002_.png

- text: close-up portrait of young lady

output:

url: samples\ComfyUI_00001_.png

---

# gguf quantized and fp8 scaled aura

## setup (once)

- drag aura_flow_0.3-q4_0.gguf [[3.95GB](https://huggingface.co/calcuis/aura/blob/main/aura_flow_0.3-q4_0.gguf)] to > ./ComfyUI/models/diffusion_models

- drag aura_flow_0.3_fp8_scaled.safetensors [[9.66GB](https://huggingface.co/calcuis/aura/blob/main/aura_flow_0.3_fp8_scaled.safetensors)] to > ./ComfyUI/models/checkpoints

- drag aura_vae.safetensors [[167MB](https://huggingface.co/calcuis/aura/blob/main/aura_vae.safetensors)] to > ./ComfyUI/models/vae
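To double-check the setup before launching ComfyUI, a small script can report which files are still missing. This is a minimal sketch that assumes the default `./ComfyUI` folder layout and the filenames used in the download links above; `EXPECTED_FILES` and `missing_files` are hypothetical names for illustration, not part of ComfyUI or the gguf node.

```python
from pathlib import Path

# Hypothetical mapping: target subfolder for each file, taken from the setup list.
# Filenames follow the download links; adjust them if your copies are named differently.
EXPECTED_FILES = {
    "aura_flow_0.3-q4_0.gguf": "models/diffusion_models",
    "aura_flow_0.3_fp8_scaled.safetensors": "models/checkpoints",
    "aura_vae.safetensors": "models/vae",
}


def missing_files(comfyui_root):
    """Return the expected files (relative paths) not yet present under comfyui_root."""
    root = Path(comfyui_root)
    return [
        str(Path(folder) / name)
        for name, folder in EXPECTED_FILES.items()
        if not (root / folder / name).is_file()
    ]


if __name__ == "__main__":
    missing = missing_files("./ComfyUI")  # assumed install location
    if missing:
        print("still missing:")
        for path in missing:
            print("  " + path)
    else:
        print("all files in place")
```

Note that the gguf workflow still uses the fp8 scaled safetensors for its text encoder, so all three files are worth having in place either way.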

## run it straight (no installation needed)

- run the .bat file in the main directory (assuming you are using the full pack below)

- drag the workflow json file (below) to > your browser

### workflows

- drag any workflow json file to the activated browser; or

- drag any generated picture (which contains the workflow metadata) to the activated browser

- workflow for [safetensors](https://huggingface.co/calcuis/aura/blob/main/workflow-aura-safetensors.json) (fp8 scaled version above is recommended)

- workflow for [gguf](https://huggingface.co/calcuis/aura/blob/main/workflow-gguf-aura.json) (optionally use the aura vae; for the text encoder, since ComfyUI does not yet support the unique format of that separate clip, use the one embedded in the fp8 scaled safetensors for now; this won't affect speed, as the diffusion model itself is still loaded from the gguf)
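Dragging a generated picture works because the workflow travels inside the PNG as text metadata. The sketch below reads that metadata with the Python standard library only. It assumes the image was saved by ComfyUI's standard save node, which (to our understanding) stores the UI graph as JSON under a `workflow` text key; `read_png_text` and `load_workflow` are hypothetical helper names, not part of ComfyUI.

```python
import json
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text(path):
    """Collect tEXt/zTXt/iTXt key/value pairs from a PNG file."""
    with open(path, "rb") as f:
        data = f.read()
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    texts = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte length, 4-byte type, body, 4-byte CRC.
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        pos += 12 + length
        if ctype == b"tEXt":
            key, _, value = body.partition(b"\x00")
            texts[key.decode("latin-1")] = value.decode("latin-1")
        elif ctype == b"zTXt":
            key, _, rest = body.partition(b"\x00")
            # rest[0] is the compression method (0 = zlib); the text follows it.
            texts[key.decode("latin-1")] = zlib.decompress(rest[1:]).decode("latin-1")
        elif ctype == b"iTXt":
            key, _, rest = body.partition(b"\x00")
            compressed = rest[0]
            # Skip the compression-method byte, then the language tag and translated keyword.
            _lang, _, rest = rest[2:].partition(b"\x00")
            _translated, _, text = rest.partition(b"\x00")
            if compressed:
                text = zlib.decompress(text)
            texts[key.decode("latin-1")] = text.decode("utf-8")
        elif ctype == b"IEND":
            break
    return texts


def load_workflow(path):
    """Return the embedded workflow as a dict, or None if the PNG carries none."""
    raw = read_png_text(path).get("workflow")
    return json.loads(raw) if raw is not None else None
```

For example, `load_workflow("samples/ComfyUI_00001_.png")` would return the graph as a dict if that sample still carries its metadata; images re-encoded by other tools often lose these chunks.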

<Gallery />

### references

- base model from [fal](https://huggingface.co/fal/AuraFlow-v0.3)

- comfyui from [comfyanonymous](https://github.com/comfyanonymous/ComfyUI)

- gguf-node [beta](https://github.com/calcuis/gguf/releases)