Update README.md

README.md

CHANGED

|

@@ -1,3 +1,123 @@

|

|

| 1 |

---

|

| 2 |

-

| 3 |

---

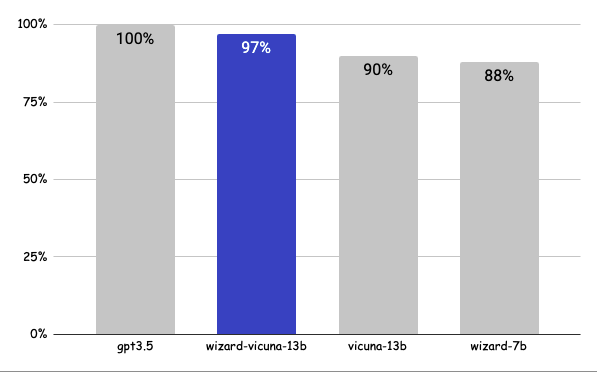

| 1 |

---

|

| 2 |

+

language:

|

| 3 |

+

- en

|

| 4 |

+

tags:

|

| 5 |

+

- causal-lm

|

| 6 |

+

- llama

|

| 7 |

+

inference: false

|

| 8 |

---

|

| 9 |

+

|

| 10 |

+

# Wizard-Vicuna-13B-GPTQ

|

| 11 |

+

|

| 12 |

+

This repo contains 4bit GPTQ format quantised models of [junelee's wizard-vicuna 13B](https://huggingface.co/junelee/wizard-vicuna-13b).

|

| 13 |

+

|

| 14 |

+

It is the result of quantising to 4bit using [GPTQ-for-LLaMa](https://github.com/qwopqwop200/GPTQ-for-LLaMa).

|

| 15 |

+

|

| 16 |

+

## Repositories available

|

| 17 |

+

|

| 18 |

+

* [4bit GPTQ models for GPU inference](https://huggingface.co/TheBloke/wizard-vicuna-13B-GPTQ).

|

| 19 |

+

* [4bit and 5bit GGML models for CPU inference](https://huggingface.co/TheBloke/wizard-vicuna-13B-GGML).

|

| 20 |

+

* [Unquantised 16bit model in HF format](https://huggingface.co/TheBloke/wizard-vicuna-13B-HF).

|

| 21 |

+

|

| 22 |

+

## How to easily download and use this model in text-generation-webui

|

| 23 |

+

|

| 24 |

+

Open the text-generation-webui UI as normal.

|

| 25 |

+

|

| 26 |

+

1. Click the **Model tab**.

|

| 27 |

+

2. Under **Download custom model or LoRA**, enter `TheBloke/wizard-vicuna-13B-GPTQ`.

|

| 28 |

+

3. Click **Download**.

|

| 29 |

+

4. Wait until it says it's finished downloading.

|

| 30 |

+

5. Click the **Refresh** icon next to **Model** in the top left.

|

| 31 |

+

6. In the **Model drop-down**, choose the model you just downloaded, `wizard-vicuna-13B-GPTQ`.

|

| 32 |

+

7. If you see an error in the bottom right, ignore it - it's temporary.

|

| 33 |

+

8. Fill out the `GPTQ parameters` on the right: `Bits = 4`, `Groupsize = 128`, `model_type = Llama`.

|

| 34 |

+

9. Click **Save settings for this model** in the top right.

|

| 35 |

+

10. Click **Reload the Model** in the top right.

|

| 36 |

+

11. Once it says it's loaded, click the **Text Generation tab** and enter a prompt!
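For scripted setups, the download in steps 2 to 4 can also be done outside the UI. The following is a minimal sketch, assuming the third-party `huggingface_hub` package is installed and that the web UI reads models from a `models/` folder. The helper names `local_model_dir` and `download_model` are hypothetical and are not part of text-generation-webui.

```python
from pathlib import Path

# Repo name taken from step 2 above.
REPO_ID = "TheBloke/wizard-vicuna-13B-GPTQ"


def local_model_dir(models_root: str, repo_id: str = REPO_ID) -> Path:
    """Return <models_root>/<repo name>, e.g. models/wizard-vicuna-13B-GPTQ.

    This folder layout is an assumption made for illustration.
    """
    return Path(models_root) / repo_id.split("/")[-1]


def download_model(models_root: str = "models", repo_id: str = REPO_ID) -> Path:
    """Fetch the files of the repo's default branch into the local folder."""
    # Imported lazily so the helper above works without the package installed.
    from huggingface_hub import snapshot_download

    dest = local_model_dir(models_root, repo_id)
    snapshot_download(repo_id=repo_id, local_dir=dest)
    return dest
```

Calling `download_model()` needs network access and several gigabytes of free disk space. Once it returns, continue from step 5 in the list above.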

|

| 37 |

+

|

| 38 |

+

## Provided files

|

| 39 |

+

|

| 40 |

+

**Compatible file - wizard-vicuna-13B-GPTQ-4bit.compat.no-act-order.safetensors**

|

| 41 |

+

|

| 42 |

+

In the `main` branch (the default one) you will find `wizard-vicuna-13B-GPTQ-4bit.compat.no-act-order.safetensors`.

|

| 43 |

+

|

| 44 |

+

This will work with all versions of GPTQ-for-LLaMa. It has maximum compatibility.

|

| 45 |

+

|

| 46 |

+

It was created without the `--act-order` parameter. It may have slightly lower inference quality than a file quantised with `--act-order`, but it is guaranteed to work on all versions of GPTQ-for-LLaMa and text-generation-webui.

|

| 47 |

+

|

| 48 |

+

* `wizard-vicuna-13B-GPTQ-4bit.compat.no-act-order.safetensors`

|

| 49 |

+

* Works with all versions of GPTQ-for-LLaMa code, both Triton and CUDA branches

|

| 50 |

+

* Works with text-generation-webui one-click-installers

|

| 51 |

+

  * Parameters: Groupsize = 128. No act-order.

|

| 52 |

+

* Command used to create the GPTQ:

|

| 53 |

+

```

|

| 54 |

+

CUDA_VISIBLE_DEVICES=0 python3 llama.py wizard-vicuna-13B-HF c4 --wbits 4 --true-sequential --groupsize 128 --save_safetensors wizard-vicuna-13B-GPTQ-4bit.compat.no-act-order.safetensors

|

| 55 |

+

```
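To give a feel for what `--wbits 4` and `--groupsize 128` mean for the stored weights, here is a minimal sketch of plain round-to-nearest quantisation with one scale and offset per group of 128 values. It is not the GPTQ algorithm, which additionally adjusts the not-yet-quantised weights to compensate for rounding error. The function names and the toy data are hypothetical.

```python
def quantize_group(weights, bits=4):
    """Round-to-nearest quantisation of one group to integers in [0, 2**bits - 1]."""
    levels = (1 << bits) - 1                 # 15 steps for 4-bit codes
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / levels or 1.0        # constant group: any non-zero scale works
    codes = [round((w - lo) / scale) for w in weights]
    return codes, scale, lo


def dequantize_group(codes, scale, lo):
    """Map integer codes back to approximate float weights."""
    return [lo + c * scale for c in codes]


def quantize(weights, bits=4, groupsize=128):
    """Split a flat weight list into groups and quantise each one separately."""
    return [
        quantize_group(weights[i:i + groupsize], bits)
        for i in range(0, len(weights), groupsize)
    ]


# Toy data, not real model weights.
weights = [((i * 37) % 101) / 50 - 1 for i in range(256)]
groups = quantize(weights)
restored = [w for g in groups for w in dequantize_group(*g)]
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(len(groups), "groups, max reconstruction error", max_error)
```

Each group keeps its own scale and offset, so a smaller group size tracks local weight ranges more closely at the cost of storing more per-group metadata.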

|

| 56 |

+

|

| 57 |

+

# Original wizard-vicuna-13B model card

|

| 58 |

+

|

| 59 |

+

# WizardVicunaLM

|

| 60 |

+

### Wizard's dataset + ChatGPT's conversation extension + Vicuna's tuning method

|

| 61 |

+

I am a big fan of the ideas behind WizardLM and VicunaLM. I particularly like the idea of WizardLM handling the dataset itself more deeply and broadly, as well as VicunaLM overcoming the limitations of single-turn conversations by introducing multi-round conversations. As a result, I combined these two ideas to create WizardVicunaLM. This project is highly experimental and designed for proof of concept, not for actual usage.

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

## Benchmark

|

| 65 |

+

### Approximately 7% performance improvement over VicunaLM

|

| 66 |

+

|

| 67 |

+

|

| 68 |

+

|

| 69 |

+

### Detail

|

| 70 |

+

|

| 71 |

+

These scores do not come from rigorous testing: I asked each model a few questions and had GPT-4 score the answers. The models compared were ChatGPT 3.5, WizardVicunaLM, VicunaLM, and WizardLM, in that order.

|

| 72 |

+

|

| 73 |

+

| Question | gpt3.5 | wizard-vicuna-13b | vicuna-13b | wizard-7b | Link |

|

| 74 |

+

|-----|--------|-------------------|------------|-----------|----------|

|

| 75 |

+

| Q1 | 95 | 90 | 85 | 88 | [link](https://sharegpt.com/c/YdhIlby) |

|

| 76 |

+

| Q2 | 95 | 97 | 90 | 89 | [link](https://sharegpt.com/c/YOqOV4g) |

|

| 77 |

+

| Q3 | 85 | 90 | 80 | 65 | [link](https://sharegpt.com/c/uDmrcL9) |

|

| 78 |

+

| Q4 | 90 | 85 | 80 | 75 | [link](https://sharegpt.com/c/XBbK5MZ) |

|

| 79 |

+

| Q5 | 90 | 85 | 80 | 75 | [link](https://sharegpt.com/c/AQ5tgQX) |

|

| 80 |

+

| Q6 | 92 | 85 | 87 | 88 | [link](https://sharegpt.com/c/eVYwfIr) |

|

| 81 |

+

| Q7 | 95 | 90 | 85 | 92 | [link](https://sharegpt.com/c/Kqyeub4) |

|

| 82 |

+

| Q8 | 90 | 85 | 75 | 70 | [link](https://sharegpt.com/c/M0gIjMF) |

|

| 83 |

+

| Q9 | 92 | 85 | 70 | 60 | [link](https://sharegpt.com/c/fOvMtQt) |

|

| 84 |

+

| Q10 | 90 | 80 | 75 | 85 | [link](https://sharegpt.com/c/YYiCaUz) |

|

| 85 |

+

| Q11 | 90 | 85 | 75 | 65 | [link](https://sharegpt.com/c/HMkKKGU) |

|

| 86 |

+

| Q12 | 85 | 90 | 80 | 88 | [link](https://sharegpt.com/c/XbW6jgB) |

|

| 87 |

+

| Q13 | 90 | 95 | 88 | 85 | [link](https://sharegpt.com/c/JXZb7y6) |

|

| 88 |

+

| Q14 | 94 | 89 | 90 | 91 | [link](https://sharegpt.com/c/cTXH4IS) |

|

| 89 |

+

| Q15 | 90 | 85 | 88 | 87 | [link](https://sharegpt.com/c/GZiM0Yt) |

|

| 90 |

+

| Average | 91 | 88 | 82 | 80 | |
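The bottom row of the table and the roughly 7% figure in the heading can be reproduced from the per-question scores. The sketch below copies the scores from the table; the variable names are illustrative only.

```python
# Scores for Q1..Q15, copied column by column from the table above.
scores = {
    "gpt3.5":            [95, 95, 85, 90, 90, 92, 95, 90, 92, 90, 90, 85, 90, 94, 90],
    "wizard-vicuna-13b": [90, 97, 90, 85, 85, 85, 90, 85, 85, 80, 85, 90, 95, 89, 85],
    "vicuna-13b":        [85, 90, 80, 80, 80, 87, 85, 75, 70, 75, 75, 80, 88, 90, 88],
    "wizard-7b":         [88, 89, 65, 75, 75, 88, 92, 70, 60, 85, 65, 88, 85, 91, 87],
}

# Mean score per model across the 15 questions.
averages = {name: sum(vals) / len(vals) for name, vals in scores.items()}

# Relative improvement of wizard-vicuna-13b over vicuna-13b, in percent.
improvement = (averages["wizard-vicuna-13b"] / averages["vicuna-13b"] - 1) * 100

for name, avg in averages.items():
    print(f"{name}: {avg:.1f}")
print(f"improvement over vicuna-13b: {improvement:.1f}%")
```

With only 15 questions and a single GPT-4 grading pass, the difference should be read as indicative rather than statistically established.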

|

| 91 |

+

|

| 92 |

+

|

| 93 |

+

## Principle

|

| 94 |

+

|

| 95 |

+

We adopted WizardLM's approach of extending a single problem in greater depth. However, instead of using individual instructions, we expanded each one using Vicuna's conversation format and applied Vicuna's fine-tuning techniques.

|

| 96 |

+

|

| 97 |

+

An example of turning a single command into a rich conversation is available [here](https://sharegpt.com/c/6cmxqq0).

|

| 98 |

+

|

| 99 |

+

After creating the training data, I trained the model following the Vicuna v1.1 [training method](https://github.com/lm-sys/FastChat/blob/main/scripts/train_vicuna_13b.sh).

|

| 100 |

+

|

| 101 |

+

|

| 102 |

+

## Detailed Method

|

| 103 |

+

|

| 104 |

+

First, we explored and expanded various areas within the same topic using the 7K conversations created by WizardLM. However, we produced a continuous conversation format instead of the instruction format. That is, each conversation starts with a WizardLM instruction and then expands into various areas within one conversation using ChatGPT 3.5.

|

| 105 |

+

|

| 106 |

+

After that, we fine-tuned the model on this data using Vicuna's fine-tuning format.
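As a rough illustration of the data shape, the sketch below wraps one instruction and its follow-up turns into a ShareGPT-style record with alternating `human` and `gpt` turns, which is the conversation layout that FastChat's Vicuna training code reads. The example turns, the record id, and the function name are hypothetical. In the project described here the follow-up turns were generated with ChatGPT 3.5, which this sketch does not call.

```python
import json


def to_sharegpt_record(record_id, instruction, first_answer, follow_ups):
    """Build one multi-turn record from an instruction and (question, answer) pairs."""
    turns = [("human", instruction), ("gpt", first_answer)]
    for question, answer in follow_ups:
        turns.append(("human", question))
        turns.append(("gpt", answer))
    return {
        "id": record_id,
        "conversations": [{"from": role, "value": text} for role, text in turns],
    }


# Made-up example content, for illustration only.
record = to_sharegpt_record(
    "example-0",
    "Explain what quantisation does to a neural network.",
    "It stores the weights with fewer bits to save memory.",
    [("Does that reduce accuracy?", "Usually slightly, depending on the method.")],
)
print(json.dumps(record, indent=2))
```

Keeping the original instruction as the first `human` turn preserves the starting point from WizardLM, while each extra pair adds a round of the conversation.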

|

| 107 |

+

|

| 108 |

+

## Training Process

|

| 109 |

+

|

| 110 |

+

Trained with 8 A100 GPUs for 35 hours.

|

| 111 |

+

|

| 112 |

+

## Weights

|

| 113 |

+

You can find the [dataset](https://huggingface.co/datasets/junelee/wizard_vicuna_70k) we used for training and the [13b model](https://huggingface.co/junelee/wizard-vicuna-13b) on Hugging Face.

|

| 114 |

+

|

| 115 |

+

## Conclusion

|

| 116 |

+

If we extend the conversations using GPT-4 32K, we can expect a dramatic improvement, as we could generate 8x more conversation content that is also more accurate and richer.

|

| 117 |

+

|

| 118 |

+

## License

|

| 119 |

+

The model is licensed under the LLaMA model license, and the dataset is subject to OpenAI's terms because it was generated with ChatGPT. Everything else is free.

|

| 120 |

+

|

| 121 |

+

## Author

|

| 122 |

+

|

| 123 |

+

[JUNE LEE](https://github.com/melodysdreamj) - He is active in Songdo Artificial Intelligence Study and GDG Songdo.

|