---

language:

- en

tags:

- causal-lm

- llama

inference: false

---

# Wizard-Vicuna-13B-GGML

This repository contains GGML format 4bit and 5bit quantised models of [junelee's wizard-vicuna 13B](https://huggingface.co/junelee/wizard-vicuna-13b).

It is the result of quantising to 4bit and 5bit GGML for CPU inference using [llama.cpp](https://github.com/ggerganov/llama.cpp).

## Repositories available

* [4bit GPTQ models for GPU inference](https://huggingface.co/TheBloke/wizard-vicuna-13B-GPTQ).

* [4bit and 5bit GGML models for CPU inference](https://huggingface.co/TheBloke/wizard-vicuna-13B-GGML).

* [float16 HF format model for GPU inference](https://huggingface.co/TheBloke/wizard-vicuna-13B-HF).

## Provided files

| Name | Quant method | Bits | Size | RAM required | Use case |
| ---- | ---- | ---- | ---- | ---- | ----- |
| `wizard-vicuna-13B.ggml.q4_0.bin` | q4_0 | 4bit | 8.14GB | 10.5GB | Maximum compatibility |
| `wizard-vicuna-13B.ggml.q4_2.bin` | q4_2 | 4bit | 8.14GB | 10.5GB | Best compromise between resources, speed and quality |
| `wizard-vicuna-13B.ggml.q5_0.bin` | q5_0 | 5bit | 8.95GB | 11.0GB | Brand new 5bit method. Potentially higher quality than 4bit, at cost of slightly higher resources. |
| `wizard-vicuna-13B.ggml.q5_1.bin` | q5_1 | 5bit | 9.76GB | 12.25GB | Brand new 5bit method. Slightly higher resource usage than q5_0. |

* The q4_0 file provides lower quality, but maximal compatibility. It will work with past and future versions of llama.cpp.

* The q4_2 file offers the best combination of performance and quality. This format is still subject to change and there may be compatibility issues, see below.

* The q5_0 file uses the brand new 5bit method released on 26th April. This is the 5bit equivalent of q4_0.

* The q5_1 file uses the brand new 5bit method released on 26th April. This is the 5bit equivalent of q4_1.
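As a rough aid for picking a file, the sketch below filters the provided files by the "RAM required" figures from the table. The helper name `files_that_fit` is hypothetical, and the RAM numbers are simply the values listed above, not measurements on your system.

```python
# File names and RAM figures (in GB) are copied from the "Provided files" table.
PROVIDED_FILES = [
    ("wizard-vicuna-13B.ggml.q4_0.bin", 10.5),
    ("wizard-vicuna-13B.ggml.q4_2.bin", 10.5),
    ("wizard-vicuna-13B.ggml.q5_0.bin", 11.0),
    ("wizard-vicuna-13B.ggml.q5_1.bin", 12.25),
]


def files_that_fit(available_ram_gb):
    """Return the provided files whose listed RAM requirement fits the budget.

    Hypothetical helper: it only compares against the table values.
    """
    return [name for name, ram_gb in PROVIDED_FILES if ram_gb <= available_ram_gb]


if __name__ == "__main__":
    for name in files_that_fit(11.0):
        print(name)
```

With an 11GB budget this leaves the two 4bit files and q5_0; q5_1 needs more headroom.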

## q4_2 compatibility

q4_2 is a relatively new 4bit quantisation method offering improved quality. However, it is still under development and its format is subject to change.

To use these files you will need recent llama.cpp code. It is also possible that future updates to llama.cpp will require these files to be re-generated.

If and when the q4_2 file no longer works with recent versions of llama.cpp I will endeavour to update it.

If you want guaranteed compatibility with a wide range of llama.cpp versions, use the q4_0 file.

## q5_0 and q5_1 compatibility

These new methods were released to llama.cpp on 26th April. You will need to pull the latest llama.cpp code and rebuild to be able to use them.

Third party tools/UIs may or may not support them. Check you're using the latest version of any such tools and ask the devs for advice if you find you can't load q5 files.

## How to run in `llama.cpp`

I use the following command line; adjust for your tastes and needs:

```

./main -t 18 -m wizard-vicuna-13B.ggml.q4_2.bin --color -c 2048 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "### Instruction: write a story about llamas ### Response:"

```

Change `-t 18` to the number of physical CPU cores you have. For example if your system has 8 cores/16 threads, use `-t 8`.
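If you launch llama.cpp from a script, the same command line can be assembled programmatically. This is a minimal sketch: `build_main_command` is a hypothetical helper, and it only reuses the flags and prompt template from the command above. The thread count is an explicit argument because Python's `os.cpu_count()` reports logical CPUs, not physical cores.

```python
import shlex


def build_main_command(model_path, instruction, threads):
    """Assemble the ./main invocation shown above as an argument list.

    Hypothetical helper; flags and prompt template are taken from the
    example command, nothing else is added.
    """
    prompt = f"### Instruction: {instruction} ### Response:"
    return [
        "./main",
        "-t", str(threads),          # number of physical CPU cores
        "-m", model_path,            # path to the GGML model file
        "--color",
        "-c", "2048",
        "--temp", "0.7",
        "--repeat_penalty", "1.1",
        "-n", "-1",
        "-p", prompt,
    ]


if __name__ == "__main__":
    cmd = build_main_command(
        "wizard-vicuna-13B.ggml.q4_2.bin",
        "write a story about llamas",
        threads=8,  # e.g. an 8 core / 16 thread system
    )
    # Print a shell-quoted version; pass `cmd` to subprocess.run to execute it.
    print(shlex.join(cmd))
```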

## How to run in `text-generation-webui`

GGML models can be loaded into text-generation-webui by installing the llama.cpp module, then placing the ggml model file in a model folder as usual.

Further instructions here: [text-generation-webui/docs/llama.cpp-models.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/llama.cpp-models.md).

Note: at this time text-generation-webui may not support the new q5 quantisation methods.

**Thireus** has written a [great guide on how to update it to the latest llama.cpp code](https://huggingface.co/TheBloke/wizardLM-7B-GGML/discussions/5) so that these files can be used in the UI.

# Original WizardVicuna-13B model card

Github page: https://github.com/melodysdreamj/WizardVicunaLM

# WizardVicunaLM

### Wizard's dataset + ChatGPT's conversation extension + Vicuna's tuning method

I am a big fan of the ideas behind WizardLM and VicunaLM. I particularly like the idea of WizardLM handling the dataset itself more deeply and broadly, as well as VicunaLM overcoming the limitations of single-turn conversations by introducing multi-round conversations. As a result, I combined these two ideas to create WizardVicunaLM. This project is highly experimental and designed for proof of concept, not for actual usage.

## Benchmark

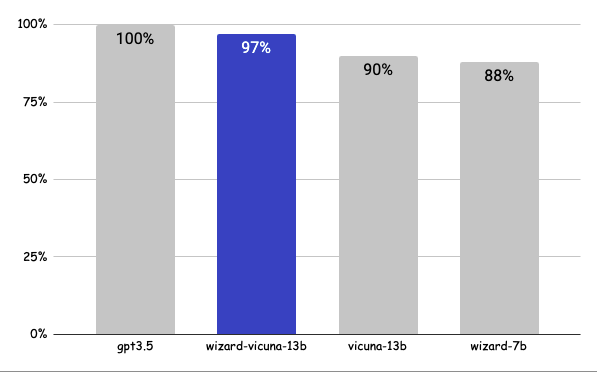

### Approximately 7% performance improvement over VicunaLM

### Detail

The questions presented here are not from rigorous tests, but rather, I asked a few questions and requested GPT-4 to score them. The models compared were ChatGPT 3.5, WizardVicunaLM, VicunaLM, and WizardLM, in that order.

| | gpt3.5 | wizard-vicuna-13b | vicuna-13b | wizard-7b | link |
|-----|--------|-------------------|------------|-----------|----------|
| Q1 | 95 | 90 | 85 | 88 | [link](https://sharegpt.com/c/YdhIlby) |
| Q2 | 95 | 97 | 90 | 89 | [link](https://sharegpt.com/c/YOqOV4g) |
| Q3 | 85 | 90 | 80 | 65 | [link](https://sharegpt.com/c/uDmrcL9) |
| Q4 | 90 | 85 | 80 | 75 | [link](https://sharegpt.com/c/XBbK5MZ) |
| Q5 | 90 | 85 | 80 | 75 | [link](https://sharegpt.com/c/AQ5tgQX) |
| Q6 | 92 | 85 | 87 | 88 | [link](https://sharegpt.com/c/eVYwfIr) |
| Q7 | 95 | 90 | 85 | 92 | [link](https://sharegpt.com/c/Kqyeub4) |
| Q8 | 90 | 85 | 75 | 70 | [link](https://sharegpt.com/c/M0gIjMF) |
| Q9 | 92 | 85 | 70 | 60 | [link](https://sharegpt.com/c/fOvMtQt) |
| Q10 | 90 | 80 | 75 | 85 | [link](https://sharegpt.com/c/YYiCaUz) |
| Q11 | 90 | 85 | 75 | 65 | [link](https://sharegpt.com/c/HMkKKGU) |
| Q12 | 85 | 90 | 80 | 88 | [link](https://sharegpt.com/c/XbW6jgB) |
| Q13 | 90 | 95 | 88 | 85 | [link](https://sharegpt.com/c/JXZb7y6) |
| Q14 | 94 | 89 | 90 | 91 | [link](https://sharegpt.com/c/cTXH4IS) |
| Q15 | 90 | 85 | 88 | 87 | [link](https://sharegpt.com/c/GZiM0Yt) |
| Average | 91 | 88 | 82 | 80 | |
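The final row and the "approximately 7%" figure can be reproduced from the per-question scores. The sketch below recomputes them; the scores are copied from the table, and the variable names are only illustrative.

```python
# Scores copied from the table above, one list per model (Q1 to Q15).
scores = {
    "gpt3.5": [95, 95, 85, 90, 90, 92, 95, 90, 92, 90, 90, 85, 90, 94, 90],
    "wizard-vicuna-13b": [90, 97, 90, 85, 85, 85, 90, 85, 85, 80, 85, 90, 95, 89, 85],
    "vicuna-13b": [85, 90, 80, 80, 80, 87, 85, 75, 70, 75, 75, 80, 88, 90, 88],
    "wizard-7b": [88, 89, 65, 75, 75, 88, 92, 70, 60, 85, 65, 88, 85, 91, 87],
}

# Mean score per model across the 15 questions.
averages = {model: sum(vals) / len(vals) for model, vals in scores.items()}

# Relative improvement of WizardVicunaLM over VicunaLM.
improvement = averages["wizard-vicuna-13b"] / averages["vicuna-13b"] - 1

if __name__ == "__main__":
    for model, avg in averages.items():
        print(f"{model}: {avg:.1f}")
    print(f"wizard-vicuna-13b vs vicuna-13b: {improvement:.1%}")
```

Rounded to whole numbers the means come out as 91, 88, 82 and 80, matching the last row, and the ratio of 88 to 82 gives the roughly 7% improvement quoted in the heading.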

## Principle

We adopted the approach of WizardLM, which is to explore a single problem in greater depth. However, instead of using individual instructions, we expanded each one using Vicuna's conversation format and applied Vicuna's fine-tuning techniques.

Turning a single command into a rich conversation is what we've done [here](https://sharegpt.com/c/6cmxqq0).

After creating the training data, I trained the model according to the Vicuna v1.1 [training method](https://github.com/lm-sys/FastChat/blob/main/scripts/train_vicuna_13b.sh).

## Detailed Method

First, we explore and expand various areas in the same topic using the 7K conversations created by WizardLM. However, we produced the data in a continuous conversation format instead of the instruction format. That is, each conversation starts with WizardLM's instruction and then expands into various areas within that one conversation using ChatGPT 3.5.
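To make the idea concrete, here is a minimal sketch of how one instruction plus its follow-up exchanges could be stored as a single multi-turn record. The `to_conversation` helper is hypothetical, and the `id` / `conversations` / `from` / `value` layout is an assumption based on the ShareGPT-style JSON that FastChat's training scripts read; the actual dataset may differ in detail. The example texts are placeholders, not real training data.

```python
import json


def to_conversation(record_id, instruction, first_answer, follow_ups):
    """Wrap one instruction and its follow-up exchanges into a multi-turn record.

    Hypothetical helper. The "from"/"value" keys mirror ShareGPT-style
    conversation data (an assumption, not the author's confirmed schema).
    """
    turns = [
        {"from": "human", "value": instruction},
        {"from": "gpt", "value": first_answer},
    ]
    # Each follow-up is a (question, answer) pair that extends the same topic.
    for question, answer in follow_ups:
        turns.append({"from": "human", "value": question})
        turns.append({"from": "gpt", "value": answer})
    return {"id": record_id, "conversations": turns}


if __name__ == "__main__":
    record = to_conversation(
        "example-0",
        "<instruction taken from the WizardLM data>",
        "<first answer>",
        [("<follow-up question on the same topic>", "<follow-up answer>")],
    )
    print(json.dumps(record, indent=2))
```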

After that, we fine-tuned the model on this data using Vicuna's fine-tuning format.

## Training Process

Trained with 8 A100 GPUs for 35 hours.

## Weights

You can find the [dataset](https://huggingface.co/datasets/junelee/wizard_vicuna_70k) we used for training and the [13b model](https://huggingface.co/junelee/wizard-vicuna-13b) on Hugging Face.

## Conclusion

If we extend the conversations using gpt4 32K, we can expect a dramatic improvement, as we could generate 8x more conversation content that is also more accurate and richer.

## License

The model is licensed under the LLaMA model license, and the dataset is subject to OpenAI's terms because it uses ChatGPT. Everything else is free.

## Author

[JUNE LEE](https://github.com/melodysdreamj) - He is active in Songdo Artificial Intelligence Study and GDG Songdo.
