---
library_name: diffusers
---

SPRIGHT-T2I Model Card

The SPRIGHT-T2I model is a text-to-image diffusion model with high spatial coherency. It was first introduced in Getting it Right: Improving Spatial Consistency in Text-to-Image Models, authored by Agneet Chatterjee, Gabriela Ben Melech Stan, Estelle Aflalo, Sayak Paul, Dhruba Ghosh, Tejas Gokhale, Ludwig Schmidt, Hannaneh Hajishirzi, Vasudev Lal, Chitta Baral, and Yezhou Yang.

The SPRIGHT-T2I model was fine-tuned from Stable Diffusion v2.1 on a subset of the SPRIGHT dataset, which contains images paired with spatially focused captions. By leveraging SPRIGHT along with efficient training techniques, we achieve state-of-the-art performance in generating spatially accurate images from text.

The training code and more details are available in the SPRIGHT-T2I GitHub repository.

A demo is available on Spaces.

Use SPRIGHT-T2I with 🧨 diffusers.

Model Details

- Developed by: Agneet Chatterjee, Gabriela Ben Melech Stan, Estelle Aflalo, Sayak Paul, Dhruba Ghosh, Tejas Gokhale, Ludwig Schmidt, Hannaneh Hajishirzi, Vasudev Lal, Chitta Baral, and Yezhou Yang

- Model type: Diffusion-based text-to-image generation model with spatial coherency

- Language(s) (NLP): English

- License: [More Information Needed]

- Finetuned from model: Stable Diffusion v2-1

Usage

Use the code below to run SPRIGHT-T2I with the 🤗 Diffusers library. First, install the dependencies:

```bash
pip install diffusers transformers accelerate scipy safetensors
```

Running the pipeline:

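```python
import torch
from diffusers import DiffusionPipeline

pipe_id = "SPRIGHT-T2I/spright-t2i-sd2"

# Load the pipeline in half precision from safetensors weights and move it to the GPU.
pipe = DiffusionPipeline.from_pretrained(
    pipe_id,
    torch_dtype=torch.float16,
    use_safetensors=True,
).to("cuda")

prompt = "a cute kitten is sitting in a dish on a table"

# Generate one image for the prompt and save it to disk.
image = pipe(prompt).images[0]
image.save("kitten_sitting_in_a_dish.png")
```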

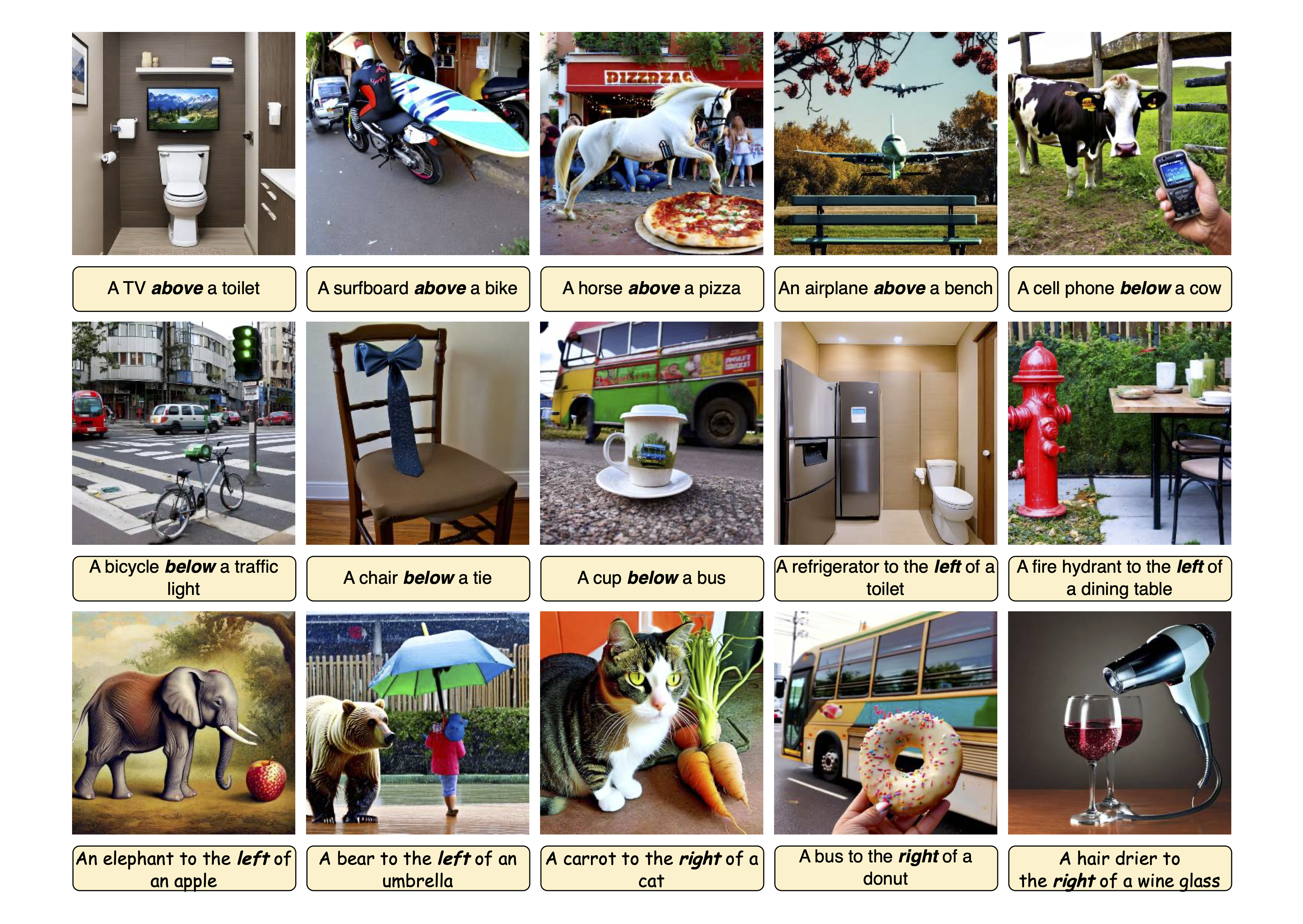

Additional examples that emphasize spatial coherence:

Bias and Limitations

The biases and limitations documented for Stable Diffusion v2-1 apply here as well.

Training

Training Data

Our training and validation sets are subsets of the SPRIGHT dataset and consist of 444 and 50 images, respectively, randomly sampled in a 50:50 split between LAION-Aesthetics and Segment Anything. Each image is paired with both a general and a spatial caption (from SPRIGHT). During fine-tuning, we randomly choose one of the two caption types for each image with equal (50:50) probability.
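The 50:50 caption sampling described above can be sketched as follows. This is a minimal illustration, not the training code from the SPRIGHT-T2I repository: the record layout and the `general_caption`/`spatial_caption` keys are hypothetical, and drawing a fresh choice every time an image is loaded is an assumption.

```python
import random

# Hypothetical record layout: every training image is assumed to carry both a
# general caption and a spatial caption under these keys.
GENERAL_KEY = "general_caption"
SPATIAL_KEY = "spatial_caption"


def pick_caption(sample: dict, rng: random.Random) -> str:
    """Return the spatial or the general caption with equal (50:50) probability."""
    key = SPATIAL_KEY if rng.random() < 0.5 else GENERAL_KEY
    return sample[key]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the demo is reproducible
    sample = {
        GENERAL_KEY: "a kitten in a dish",                     # illustrative text
        SPATIAL_KEY: "a kitten sits inside a dish on a table",  # illustrative text
    }
    for _ in range(4):
        print(pick_caption(sample, rng))
```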

We find that SPRIGHT substantially improves upon existing datasets in capturing spatial relationships. Additionally, we find that training on images containing a large number of objects leads to substantial improvements in spatial consistency. To construct our dataset, we selected images containing more than 18 objects, using the open-world image tagging model Recognize Anything to enforce this constraint.
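A minimal sketch of the object-count filter, assuming each image's Recognize Anything output has already been collected as a list of tags. Treating the number of distinct tags as the object count is an assumption made for illustration and may differ from how SPRIGHT counted objects; `count_objects` and `filter_by_object_count` are hypothetical names.

```python
from typing import Dict, Iterable, List

# Images must contain MORE than 18 objects (strictly greater).
MIN_OBJECTS = 18


def count_objects(tags: Iterable[str]) -> int:
    """Hypothetical proxy: count each distinct tag from the tagger as one object."""
    return len(set(tags))


def filter_by_object_count(
    images: Dict[str, List[str]], min_objects: int = MIN_OBJECTS
) -> List[str]:
    """Return the IDs of images whose object count is strictly greater than `min_objects`."""
    return [img_id for img_id, tags in images.items() if count_objects(tags) > min_objects]


if __name__ == "__main__":
    # Hypothetical tagger output keyed by image ID.
    tagged = {
        "img_001": [f"object_{i}" for i in range(25)],  # 25 distinct tags, kept
        "img_002": ["cat", "dish", "table"],            # 3 distinct tags, dropped
    }
    print(filter_by_object_count(tagged))
```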

Training Procedure

Our base model is Stable Diffusion v2.1. We fine-tune both the U-Net and the OpenCLIP-ViT/H text encoder for 10,000 steps, using a separate learning rate for each.

- Training regime: fp16 mixed precision

- Optimizer: AdamW

- Gradient accumulation steps: 1

- Batch size: 4 x 8 = 32

- UNet learning rate: 0.00005

- CLIP text-encoder learning rate: 0.000001

- Hardware: Training was performed using NVIDIA RTX A6000 GPUs and Intel® Gaudi® 2 AI accelerators.
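The hyperparameters above can be collected into a small configuration sketch. Reading "4 x 8" as a per-device batch of 4 on 8 devices is an assumption, and `build_param_groups` is a hypothetical helper: it returns parameter groups in the list-of-dicts form that PyTorch optimizers such as `torch.optim.AdamW` accept, which lets the U-Net and the text encoder each get their own learning rate.

```python
# Hyperparameters listed in this model card.
UNET_LR = 5e-5            # 0.00005
TEXT_ENCODER_LR = 1e-6    # 0.000001
GRAD_ACCUM_STEPS = 1
MAX_TRAIN_STEPS = 10_000

# ASSUMPTION: "4 x 8" is read as a per-device batch of 4 on 8 devices.
PER_DEVICE_BATCH = 4
NUM_DEVICES = 8


def effective_batch_size(per_device: int, num_devices: int, grad_accum: int) -> int:
    """Number of samples contributing to each optimizer step."""
    return per_device * num_devices * grad_accum


def build_param_groups(unet_params, text_encoder_params) -> list:
    """Hypothetical helper: one parameter group per module, each with its own
    learning rate, in the list-of-dicts format accepted by torch.optim optimizers."""
    return [
        {"params": list(unet_params), "lr": UNET_LR},
        {"params": list(text_encoder_params), "lr": TEXT_ENCODER_LR},
    ]


if __name__ == "__main__":
    print("effective batch size:",
          effective_batch_size(PER_DEVICE_BATCH, NUM_DEVICES, GRAD_ACCUM_STEPS))
```

With real models, the groups would be passed as `torch.optim.AdamW(build_param_groups(unet.parameters(), text_encoder.parameters()))`, where `unet` and `text_encoder` stand for the loaded Stable Diffusion v2.1 modules. Because every group sets its own `lr`, the optimizer's default learning rate is not used.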

Evaluation

Compared to the baseline model, SD 2.1, SPRIGHT-T2I substantially improves spatial accuracy while also improving non-spatial aspects of text-to-image generation.

The following table compares SPRIGHT-T2I with SD 2.1 across multiple spatial reasoning and image quality metrics:

| Method | OA (%) ↑ | VISOR-4 (%) ↑ | T2I-CompBench ↑ | FID ↓ | CMMD ↓ |
|---|---|---|---|---|---|
| SD v2.1 | 47.83 | 4.70 | 0.1507 | 27.39 | 1.060 |
| SPRIGHT-T2I (ours) | 60.68 | 16.15 | 0.2133 | 27.82 | 0.512 |
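The relative improvements quoted in the findings can be recomputed from the table. This sketch uses a hypothetical `relative_change` helper; its OA and CMMD results agree with the quoted 26.86% and 51.69% to within rounding.

```python
def relative_change(baseline: float, new: float) -> float:
    """Percentage change of `new` relative to `baseline` (positive = increase)."""
    return (new - baseline) / baseline * 100.0


# Values from the table (SD v2.1 -> SPRIGHT-T2I).
oa_gain = relative_change(47.83, 60.68)     # OA: higher is better
cmmd_drop = -relative_change(1.060, 0.512)  # CMMD: lower is better, so flip the sign

print(f"OA: +{oa_gain:.2f}%  CMMD: -{cmmd_drop:.2f}%")
```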

Our key findings are:

- Increases the Object Accuracy (OA) score by 26.86% relative to SD 2.1, indicating that the model is much better at generating the objects mentioned in the input prompt.

- Achieves a VISOR-4 score of 16.15% (vs. 4.70% for SD 2.1), indicating that the model generates spatially accurate images for a given prompt far more consistently.

- Improves all aspects of the VISOR score while also improving the ZS-FID and CMMD scores on COCO-30K images by 23.74% and 51.69%, respectively.

- Improves the ability to generate single objects and pairs of objects, as well as the correct number of objects, as measured on the GenEval benchmark.
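To make OA and VISOR-4 concrete, the sketch below computes them from per-image detection results. It assumes the usual VISOR definitions: OA is the share of images in which all objects named in the prompt are detected, and VISOR-n is the share of prompts for which at least n of the generated images (four per prompt for VISOR-4) are spatially correct. This is an illustration under those assumptions, not the official VISOR evaluation code, and the boolean inputs stand in for hypothetical object-detector outputs.

```python
from typing import Sequence


def object_accuracy(per_image_all_objects: Sequence[bool]) -> float:
    """Percentage of images in which every object named in the prompt is detected."""
    return 100.0 * sum(per_image_all_objects) / len(per_image_all_objects)


def visor_n(per_prompt_correct: Sequence[Sequence[bool]], n: int) -> float:
    """Percentage of prompts with at least `n` spatially correct generated images."""
    hits = sum(1 for images in per_prompt_correct if sum(images) >= n)
    return 100.0 * hits / len(per_prompt_correct)


if __name__ == "__main__":
    # Hypothetical spatial-correctness flags for 4 images per prompt.
    results = [
        [True, True, True, True],
        [True, True, False, True],
        [False, False, False, False],
    ]
    print("VISOR-4:", visor_n(results, 4))
    print("VISOR-1:", visor_n(results, 1))
```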

Model Sources

- Repository: SPRIGHT-T2I GitHub Repository

- Paper: Getting it Right: Improving Spatial Consistency in Text-to-Image Models

- Demo: SPRIGHT-T2I on Spaces

Citation

Coming soon