
Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

StructLM-7B - GGUF

- Model creator: https://huggingface.co/TIGER-Lab/

- Original model: https://huggingface.co/TIGER-Lab/StructLM-7B/

| Name | Quant method | Size |
| ---- | ---- | ---- |
| [StructLM-7B.Q2_K.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q2_K.gguf) | Q2_K | 2.36GB |
| [StructLM-7B.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.IQ3_XS.gguf) | IQ3_XS | 2.6GB |
| [StructLM-7B.IQ3_S.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.IQ3_S.gguf) | IQ3_S | 2.75GB |
| [StructLM-7B.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q3_K_S.gguf) | Q3_K_S | 2.75GB |
| [StructLM-7B.IQ3_M.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.IQ3_M.gguf) | IQ3_M | 2.9GB |
| [StructLM-7B.Q3_K.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q3_K.gguf) | Q3_K | 3.07GB |
| [StructLM-7B.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q3_K_M.gguf) | Q3_K_M | 3.07GB |
| [StructLM-7B.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q3_K_L.gguf) | Q3_K_L | 3.35GB |
| [StructLM-7B.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.IQ4_XS.gguf) | IQ4_XS | 3.4GB |
| [StructLM-7B.Q4_0.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q4_0.gguf) | Q4_0 | 3.56GB |
| [StructLM-7B.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.IQ4_NL.gguf) | IQ4_NL | 3.58GB |
| [StructLM-7B.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q4_K_S.gguf) | Q4_K_S | 3.59GB |
| [StructLM-7B.Q4_K.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q4_K.gguf) | Q4_K | 3.8GB |
| [StructLM-7B.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q4_K_M.gguf) | Q4_K_M | 3.8GB |
| [StructLM-7B.Q4_1.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q4_1.gguf) | Q4_1 | 3.95GB |
| [StructLM-7B.Q5_0.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q5_0.gguf) | Q5_0 | 4.33GB |
| [StructLM-7B.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q5_K_S.gguf) | Q5_K_S | 4.33GB |
| [StructLM-7B.Q5_K.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q5_K.gguf) | Q5_K | 4.45GB |
| [StructLM-7B.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q5_K_M.gguf) | Q5_K_M | 4.45GB |
| [StructLM-7B.Q5_1.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q5_1.gguf) | Q5_1 | 4.72GB |
| [StructLM-7B.Q6_K.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q6_K.gguf) | Q6_K | 5.15GB |
| [StructLM-7B.Q8_0.gguf](https://huggingface.co/RichardErkhov/TIGER-Lab_-_StructLM-7B-gguf/blob/main/StructLM-7B.Q8_0.gguf) | Q8_0 | 6.67GB |

Original model description:

---

license: mit

datasets:

- TIGER-Lab/SKGInstruct

language:

- en

---

# 🏗️ StructLM: Towards Building Generalist Models for Structured Knowledge Grounding

<span style="color:red">This checkpoint seems to have some issues; please use https://huggingface.co/TIGER-Lab/StructLM-7B-Mistral instead.</span>

Project Page: [https://tiger-ai-lab.github.io/StructLM/](https://tiger-ai-lab.github.io/StructLM/)

Paper: [https://arxiv.org/pdf/2402.16671.pdf](https://arxiv.org/pdf/2402.16671.pdf)

Code: [https://github.com/TIGER-AI-Lab/StructLM](https://github.com/TIGER-AI-Lab/StructLM)

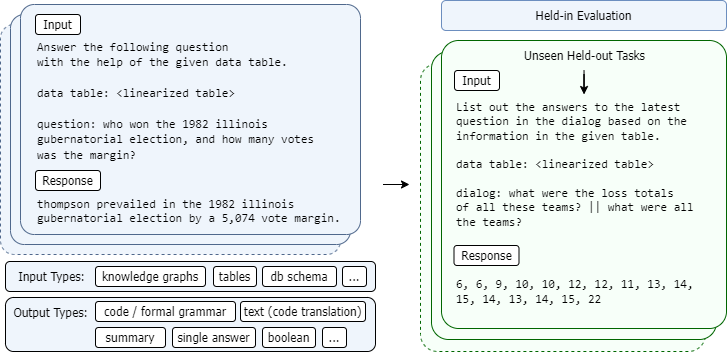

## Introduction

StructLM is a series of open-source large language models (LLMs) fine-tuned for structured knowledge grounding (SKG) tasks. We release 3 models:

| Size | Model |
| ---- | ---- |
| 7B | [StructLM-7B](https://huggingface.co/TIGER-Lab/StructLM-7B) |
| 13B | [StructLM-13B](https://huggingface.co/TIGER-Lab/StructLM-13B) |
| 34B | [StructLM-34B](https://huggingface.co/TIGER-Lab/StructLM-34B) |

## Training Data

These models are trained on the 🤗 [SKGInstruct Dataset](https://huggingface.co/datasets/TIGER-Lab/SKGInstruct), an instruction-tuning dataset containing a mixture of 19 SKG tasks combined with 🤗 [SlimOrca](https://huggingface.co/datasets/Open-Orca/SlimOrca). Check out the dataset card for more details.

## Training Procedure

The models are fine-tuned from CodeLlama-Instruct-hf base models. Each model is trained for 3 epochs, and the best checkpoint is selected.

## Evaluation

Here is a subset of the model evaluation results:

### Held in

| **Model** | **ToTTo** | **GrailQA** | **CompWebQ** | **MMQA** | **Feverous** | **Spider** | **TabFact** | **Dart** |
|-----------------------|--------------|----------|----------|----------|----------|----------|----------|----------|
| **StructLM-7B** | 49.4 | 80.4 | 78.3 | 85.2 | 84.4 | 72.4 | 80.8 | 62.2 |
| **StructLM-13B** | 49.3 | 79.2 | 80.4 | 86.0 | 85.0 | 74.1 | 84.7 | 61.4 |
| **StructLM-34B** | 50.2 | 82.2 | 81.9 | 88.1 | 85.7 | 74.6 | 86.6 | 61.8 |

### Held out

| **Model** | **BIRD** | **InfoTabs** | **FinQA** | **SQA** |
|-----------------------|--------------|----------|----------|----------|
| **StructLM-7B** | 22.3 | 55.3 | 27.3 | 49.7 |
| **StructLM-13B** | 22.8 | 58.1 | 25.6 | 36.1 |
| **StructLM-34B** | 24.7 | 61.8 | 36.2 | 44.2 |

## Usage

You can use the models through Hugging Face's Transformers library.

Check our Github repo for the evaluation code: [https://github.com/TIGER-AI-Lab/StructLM](https://github.com/TIGER-AI-Lab/StructLM)

## Prompt Format

**For this 7B model, the prompt format (which differs from that of the 13B and 34B models) is:**

```

[INST] <<SYS>>

You are an AI assistant that specializes in analyzing and reasoning over structured information. You will be given a task, optionally with some structured knowledge input. Your answer must strictly adhere to the output format, if specified.

<</SYS>>

{instruction} [/INST]

```
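The template above can be filled in with a small helper. This is a minimal sketch, not code from the StructLM repository: the names `SYSTEM_PROMPT` and `build_prompt` are hypothetical, and the exact placement of newlines between the template lines is an assumption (single newlines, following the layout shown above), so check it against the official evaluation code before relying on it.

```python
# System prompt copied from the prompt format shown above.
SYSTEM_PROMPT = (
    "You are an AI assistant that specializes in analyzing and reasoning over "
    "structured information. You will be given a task, optionally with some "
    "structured knowledge input. Your answer must strictly adhere to the "
    "output format, if specified."
)


def build_prompt(instruction: str, system_prompt: str = SYSTEM_PROMPT) -> str:
    """Wrap an instruction in the 7B prompt template.

    Assumption: one newline separates each template line; the model card
    does not spell out the exact whitespace.
    """
    return (
        "[INST] <<SYS>>\n"
        f"{system_prompt}\n"
        "<</SYS>>\n"
        f"{instruction} [/INST]"
    )


if __name__ == "__main__":
    print(build_prompt("Summarize the table below. table: col : a | b row 1 : 1 | 2"))
```

Keeping the system prompt as a default argument makes it easy to experiment with a different one without touching the template itself.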

To see concrete examples of how structured inputs are linearized, you can directly reference the 🤗 [SKGInstruct Dataset](https://huggingface.co/datasets/TIGER-Lab/SKGInstruct) (coming soon).

We will provide code for linearizing this data shortly.

A few examples:

**Tabular data**

```

col : day | kilometers row 1 : tuesday | 0 row 2 : wednesday | 0 row 3 : thursday | 4 row 4 : friday | 0 row 5 : saturday | 0

```
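The tabular format above appears to place the header after `col :` and each row after `row N :`, with cells separated by ` | `. The following sketch reproduces that layout; it is inferred from the single example shown here, and `linearize_table` is a hypothetical helper rather than the project's official linearization code.

```python
from typing import Sequence


def linearize_table(columns: Sequence[object], rows: Sequence[Sequence[object]]) -> str:
    """Flatten a table into 'col : ... row 1 : ... row 2 : ...' form.

    Assumption: cells are joined with ' | ' and rows are numbered from 1,
    as in the example on this page.
    """
    parts = ["col : " + " | ".join(str(c) for c in columns)]
    for index, row in enumerate(rows, start=1):
        parts.append(f"row {index} : " + " | ".join(str(v) for v in row))
    return " ".join(parts)


if __name__ == "__main__":
    table_rows = [
        ["tuesday", 0],
        ["wednesday", 0],
        ["thursday", 4],
        ["friday", 0],
        ["saturday", 0],
    ]
    print(linearize_table(["day", "kilometers"], table_rows))
```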

**Knowledge triples (dart)**

```

Hawaii Five-O : notes : Episode: The Flight of the Jewels | [TABLECONTEXT] : [title] : Jeff Daniels | [TABLECONTEXT] : title : Hawaii Five-O

```
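In the DART-style example above, each triple seems to be written as `subject : relation : object`, with triples separated by ` | `. A minimal sketch of that layout, assuming the single example is representative (`linearize_triples` is a hypothetical name, not part of the StructLM codebase):

```python
from typing import Iterable, Tuple

Triple = Tuple[str, str, str]


def linearize_triples(triples: Iterable[Triple]) -> str:
    """Join (subject, relation, object) triples into one line.

    Assumption: fields are joined with ' : ' and triples with ' | ',
    as inferred from the example on this page.
    """
    return " | ".join(f"{s} : {r} : {o}" for s, r, o in triples)


if __name__ == "__main__":
    example = [
        ("Hawaii Five-O", "notes", "Episode: The Flight of the Jewels"),
        ("[TABLECONTEXT]", "[title]", "Jeff Daniels"),
        ("[TABLECONTEXT]", "title", "Hawaii Five-O"),
    ]
    print(linearize_triples(example))
```

Because an object such as `Episode: The Flight of the Jewels` can itself contain a colon, this format is easy to produce but not always unambiguous to parse back.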

**Knowledge graph schema (grailqa)**

```

top antiquark: m.094nrqp | physics.particle_antiparticle.self_antiparticle physics.particle_family physics.particle.antiparticle physics.particle_family.subclasses physics.subatomic_particle_generation physics.particle_family.particles physics.particle common.image.appears_in_topic_gallery physics.subatomic_particle_generation.particles physics.particle.family physics.particle_family.parent_class physics.particle_antiparticle physics.particle_antiparticle.particle physics.particle.generation

```

**Example input**

```

[INST] <<SYS>>

You are an AI assistant that specializes in analyzing and reasoning over structured information. You will be given a task, optionally with some structured knowledge input. Your answer must strictly adhere to the output format, if specified.

<</SYS>>

Use the information in the following table to solve the problem, choose between the choices if they are provided. table:

col : day | kilometers row 1 : tuesday | 0 row 2 : wednesday | 0 row 3 : thursday | 4 row 4 : friday | 0 row 5 : saturday | 0

question:

Allie kept track of how many kilometers she walked during the past 5 days. What is the range of the numbers? [/INST]

```
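To sanity-check a model's answer to the example question, the linearized table can be parsed back into rows and the range (maximum minus minimum) computed directly. This is an illustrative sketch only: `parse_linearized_table` and `column_range` are hypothetical helpers, and the parser assumes that no cell contains text resembling a `row N :` marker or the ` | ` separator.

```python
import re
from typing import List, Tuple


def parse_linearized_table(text: str) -> Tuple[List[str], List[List[str]]]:
    """Split 'col : ... row 1 : ... row 2 : ...' back into header and rows."""
    head, *body = re.split(r"\s+row \d+ : ", text.strip())
    if not head.startswith("col : "):
        raise ValueError("expected the table to start with 'col : '")
    columns = [c.strip() for c in head[len("col : "):].split(" | ")]
    rows = [[v.strip() for v in row.split(" | ")] for row in body]
    return columns, rows


def column_range(text: str, column: str) -> int:
    """Return max minus min of an integer-valued column."""
    columns, rows = parse_linearized_table(text)
    position = columns.index(column)
    values = [int(row[position]) for row in rows]
    return max(values) - min(values)


EXAMPLE_TABLE = (
    "col : day | kilometers row 1 : tuesday | 0 row 2 : wednesday | 0 "
    "row 3 : thursday | 4 row 4 : friday | 0 row 5 : saturday | 0"
)

if __name__ == "__main__":
    # The values are 0, 0, 4, 0, 0, so the range is 4 - 0 = 4.
    print(column_range(EXAMPLE_TABLE, "kilometers"))
```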

## Intended Uses

These models are trained for research purposes. They are designed to be proficient in interpreting linearized structured input. Downstream uses can potentially include various applications requiring the interpretation of structured data.

## Limitations

While we've tried to build an SKG-specialized model capable of generalizing, we have shown that this is a challenging domain, and the model may lack the performance characteristics needed for direct use in chat or other applications.

## Citation

If you use the models, data, or code from this project, please cite the original paper:

```

@misc{zhuang2024structlm,

title={StructLM: Towards Building Generalist Models for Structured Knowledge Grounding},

author={Alex Zhuang and Ge Zhang and Tianyu Zheng and Xinrun Du and Junjie Wang and Weiming Ren and Stephen W. Huang and Jie Fu and Xiang Yue and Wenhu Chen},

year={2024},

eprint={2402.16671},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```
