---

language:
- en
license: apache-2.0
tags:
- mistral
- instruct
- finetune
- chatml
- gpt4
- synthetic data
- distillation
- multimodal
- llava
base_model: mistralai/Mistral-7B-v0.1
pipeline_tag: image-text-to-text
model-index:
- name: Nous-Hermes-2-Vision
  results: []

---

GGUF quants by Twobob. Thanks to @jartine and @cmp-nct for the assists.

The prompt format is Vicuna (reference [here](https://github.com/qnguyen3/hermes-llava/blob/173b4ef441b5371c1e7d99da7a2e7c14c77ad12f/llava/conversation.py#L252)).

Caveat emptor: there is still a bug in inference that will likely be fixed upstream.

# Nous-Hermes-2-Vision - Mistral 7B

*In the tapestry of Greek mythology, Hermes reigns as the eloquent Messenger of the Gods, a deity who deftly bridges the realms through the art of communication. It is in homage to this divine mediator that I name this advanced LLM "Hermes," a system crafted to navigate the complex intricacies of human discourse with celestial finesse.*

## Model description

Nous-Hermes-2-Vision is a Vision-Language Model built on **OpenHermes-2.5-Mistral-7B** by teknium. It adds two key enhancements:

- **SigLIP-400M Integration**: Instead of the larger 3B vision encoders used in some other approaches, Nous-Hermes-2-Vision uses SigLIP-400M. This keeps the architecture more lightweight while drawing on SigLIP's strong visual representations, and according to the authors it also yields a notable performance boost.

- **Custom Dataset Enriched with Function Calling**: The training data includes function-calling examples, which turns Nous-Hermes-2-Vision into a **Vision-Language Action Model**: a tool developers can use to build a wide range of automations.
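To put "more lightweight" in rough numbers, the sketch below adds up the nominal parameter counts implied by the names (a 7B language model plus either a 400M or a 3B vision encoder). These are nominal figures, not counts read from the actual checkpoints, and the vision-language connector is ignored.

```python
# Rough parameter arithmetic using the nominal sizes from the model names.
# ASSUMPTION: 7B LLM, 0.4B (SigLIP-400M) vs. 3B vision encoder; the real
# checkpoints differ slightly and the small connector/projector is ignored.
LLM_PARAMS = 7.0e9
SIGLIP_PARAMS = 0.4e9
LARGE_ENCODER_PARAMS = 3.0e9


def total_params(encoder_params: float, llm_params: float = LLM_PARAMS) -> float:
    """Vision encoder plus language model, ignoring the connector."""
    return encoder_params + llm_params


with_siglip = total_params(SIGLIP_PARAMS)
with_large = total_params(LARGE_ENCODER_PARAMS)
saving = 1 - with_siglip / with_large

print(f"SigLIP-400M + 7B LLM: {with_siglip / 1e9:.1f}B parameters")
print(f"3B encoder + 7B LLM:  {with_large / 1e9:.1f}B parameters")
print(f"reduction:            {saving:.0%}")
```

Under these nominal sizes the smaller encoder removes roughly a quarter of the total parameter count.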

This project is led by [qnguyen3](https://twitter.com/stablequan) and [teknium](https://twitter.com/Teknium1).

## Training

### Dataset

- 220K from **LVIS-INSTRUCT4V**

- 60K from **ShareGPT4V**

- 150K private **Function Calling Data**

- 50K conversations from teknium's **OpenHermes-2.5**
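The mixture above sums to 480K examples. Assuming each count is a number of training samples (the card says "conversations" only for OpenHermes-2.5), this short snippet computes each source's share of the mix:

```python
# Training mixture from the dataset list (counts in thousands of samples).
# ASSUMPTION: all four counts are comparable per-sample counts.
DATASET_MIX = {
    "LVIS-INSTRUCT4V": 220,
    "ShareGPT4V": 60,
    "Function Calling Data (private)": 150,
    "OpenHermes-2.5": 50,
}

total = sum(DATASET_MIX.values())
shares = {name: count / total for name, count in DATASET_MIX.items()}

print(f"total: {total}K samples")
for name, count in DATASET_MIX.items():
    print(f"{name:<32} {count:>4}K  {shares[name]:6.1%}")
```

Function-calling data makes up close to a third of the mixture, which fits its role as one of the model's two headline features.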

## Usage

### Prompt Format

- Like other LLaVA variants, this model uses Vicuna-V1 as its prompt template. Please refer to `conv_llava_v1` in [this file](https://github.com/qnguyen3/hermes-llava/blob/main/llava/conversation.py).

- For a Gradio UI, please visit this [GitHub repo](https://github.com/qnguyen3/hermes-llava).
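For readers who want to build prompts outside the hermes-llava code, here is a minimal sketch of a Vicuna-V1-style layout. It assumes the template follows upstream LLaVA's two-separator style (roles `USER`/`ASSISTANT`, separators `" "` and `"</s>"`, an `<image>` placeholder on its own line before the first user message). Those values, the helper name `build_vicuna_v1_prompt`, and the system-prompt placeholder are assumptions; check them against `conv_llava_v1` before relying on them.

```python
from typing import List, Optional, Tuple

# ASSUMED template values, modeled on upstream LLaVA's two-separator style;
# verify against `conv_llava_v1` in hermes-llava's conversation.py.
ROLES = ("USER", "ASSISTANT")
SEP, SEP2 = " ", "</s>"
IMAGE_TOKEN = "<image>"


def build_vicuna_v1_prompt(
    system: str,
    turns: List[Tuple[str, Optional[str]]],
    with_image: bool = True,
) -> str:
    """Hypothetical helper: render (user, assistant) turns into one prompt.

    Pass None as the last assistant reply to leave the prompt open for generation.
    """
    seps = (SEP, SEP2)
    messages = []
    for i, (user_msg, assistant_msg) in enumerate(turns):
        if i == 0 and with_image:
            # The image placeholder goes on its own line before the first question.
            user_msg = f"{IMAGE_TOKEN}\n{user_msg}"
        messages.append((ROLES[0], user_msg))
        messages.append((ROLES[1], assistant_msg))

    prompt = system + seps[0]
    for i, (role, message) in enumerate(messages):
        if message:
            # User turns end with SEP, assistant turns end with SEP2.
            prompt += f"{role}: {message}{seps[i % 2]}"
        else:
            prompt += f"{role}:"
    return prompt


if __name__ == "__main__":
    system_prompt = "<system string from conv_llava_v1>"  # placeholder, not the real text
    print(build_vicuna_v1_prompt(system_prompt, [("What color is the bus?", None)]))
```

For a single image question this yields `<system> USER: <image>\nWhat color is the bus? ASSISTANT:`, leaving the assistant turn open for the model to complete.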

### Function Calling

- For function calling, the message should start with a `<fn_call>` tag followed by a JSON schema describing the desired output. Here is an example:

```json
<fn_call>{
  "type": "object",
  "properties": {
    "bus_colors": {
      "type": "array",
      "description": "The colors of the bus in the image.",
      "items": {
        "type": "string",
        "enum": ["red", "blue", "green", "white"]
      }
    },
    "bus_features": {
      "type": "string",
      "description": "The features seen on the back of the bus."
    },
    "bus_location": {
      "type": "string",
      "description": "The location of the bus (driving or pulled off to the side).",
      "enum": ["driving", "pulled off to the side"]
    }
  }
}
```
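Writing the schema by hand makes it easy to slip in trailing commas, which JSON does not allow. One option is to build it as a Python dict and serialize it with the standard `json` module. `build_fn_call_message` is a hypothetical helper, and the `indent=2` formatting is an arbitrary choice; the card does not say whether the model is sensitive to whitespace in the schema.

```python
import json


def build_fn_call_message(schema: dict) -> str:
    """Hypothetical helper: serialize a JSON-schema dict and prefix the <fn_call> tag."""
    return "<fn_call>" + json.dumps(schema, indent=2)


# The bus schema from the example, written as a Python dict.
bus_schema = {
    "type": "object",
    "properties": {
        "bus_colors": {
            "type": "array",
            "description": "The colors of the bus in the image.",
            "items": {"type": "string", "enum": ["red", "blue", "green", "white"]},
        },
        "bus_features": {
            "type": "string",
            "description": "The features seen on the back of the bus.",
        },
        "bus_location": {
            "type": "string",
            "description": "The location of the bus (driving or pulled off to the side).",
            "enum": ["driving", "pulled off to the side"],
        },
    },
}

message = build_fn_call_message(bus_schema)
print(message)
```

The resulting string is what goes in the user message, with the image attached as usual.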

Output:

```json
{
  "bus_colors": ["red", "white"],
  "bus_features": "An advertisement",
  "bus_location": "driving"
}
```

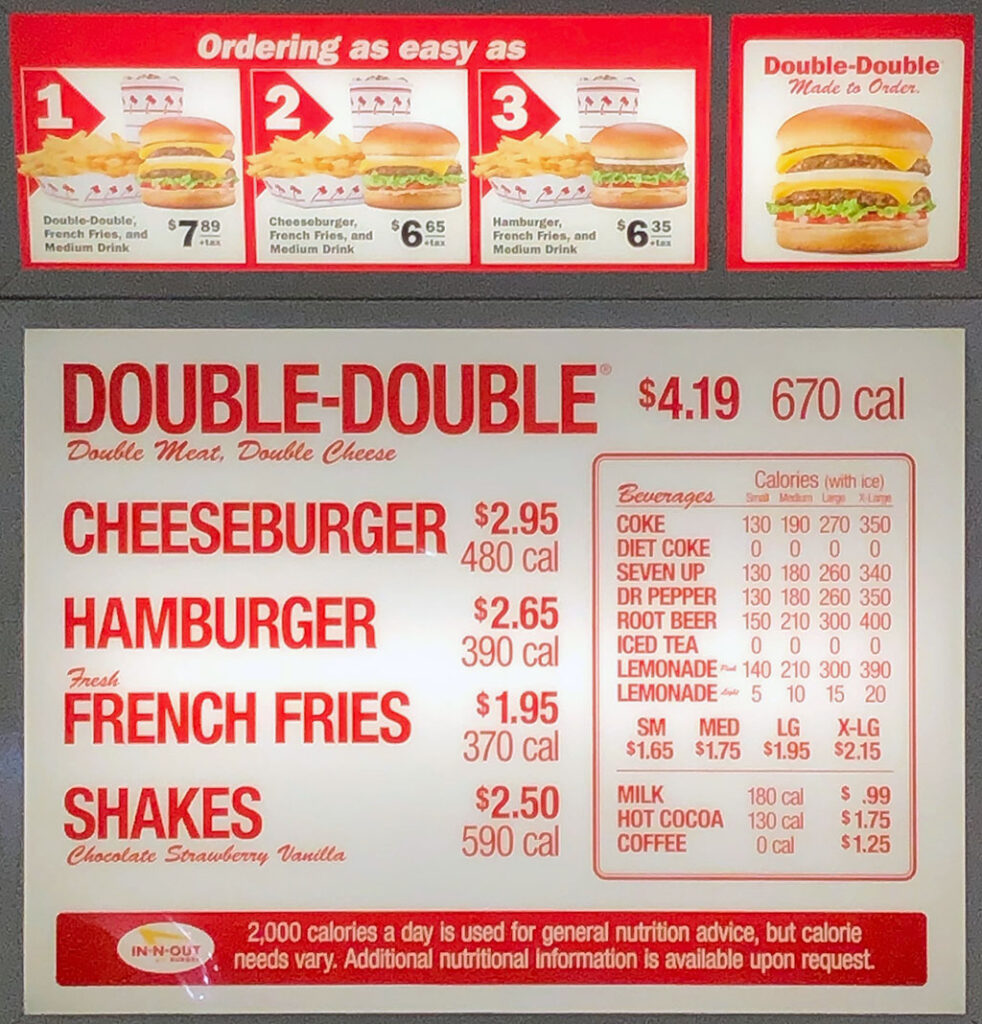
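Nothing guarantees that a generated reply conforms to the schema, so checking it before use is prudent. The sketch below is a hypothetical checker, `check_against_schema`, that handles only the keywords used in these examples (`type` of `"string"` or `"array"` of strings, plus `enum`). For full JSON Schema validation, a dedicated library such as `jsonschema` is the more robust choice.

```python
import json
from typing import Any, Dict, List


def check_against_schema(output: Dict[str, Any], schema: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the output passed.

    Hypothetical helper covering only the schema subset used in this card.
    Missing properties are not flagged, since the examples declare no "required" list.
    """
    problems = []
    props = schema.get("properties", {})
    for key, value in output.items():
        spec = props.get(key)
        if spec is None:
            problems.append(f"unexpected key: {key}")
            continue
        if spec.get("type") == "string":
            if not isinstance(value, str):
                problems.append(f"{key}: expected string")
            elif "enum" in spec and value not in spec["enum"]:
                problems.append(f"{key}: {value!r} not in enum")
        elif spec.get("type") == "array":
            if not isinstance(value, list):
                problems.append(f"{key}: expected array")
                continue
            item_spec = spec.get("items", {})
            for item in value:
                if item_spec.get("type") == "string" and not isinstance(item, str):
                    problems.append(f"{key}: item {item!r} is not a string")
                elif "enum" in item_spec and item not in item_spec["enum"]:
                    problems.append(f"{key}: item {item!r} not in enum")
    return problems


# Condensed copy of the bus schema (descriptions omitted; they do not affect checking).
bus_schema = {
    "type": "object",
    "properties": {
        "bus_colors": {"type": "array", "items": {"type": "string", "enum": ["red", "blue", "green", "white"]}},
        "bus_features": {"type": "string"},
        "bus_location": {"type": "string", "enum": ["driving", "pulled off to the side"]},
    },
}

reply = '{"bus_colors": ["red", "white"], "bus_features": "An advertisement", "bus_location": "driving"}'
print(check_against_schema(json.loads(reply), bus_schema))  # []
```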

## Example

### Chat

### Function Calling

Input image:

Input message:

```json
<fn_call>{
  "type": "object",
  "properties": {
    "food_list": {
      "type": "array",
      "description": "List of all the food",
      "items": {
        "type": "string"
      }
    }
  }
}
```

Output:

```json
{
  "food_list": [
    "Double Burger",
    "Cheeseburger",
    "French Fries",
    "Shakes",
    "Coffee"
  ]
}
```
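The card does not say whether replies always consist of the bare JSON object. If a reply can include extra text (for example, a short lead-in sentence or a trailing end-of-sequence token such as `</s>`), a tolerant parser helps. This sketch relies on that assumption and uses only the standard library's `json.JSONDecoder.raw_decode`; `extract_first_json_object` is a hypothetical name.

```python
import json
from typing import Any, Optional


def extract_first_json_object(reply: str) -> Optional[Any]:
    """Return the first JSON object that can be decoded from `reply`, or None.

    Tries each "{" in turn and lets raw_decode parse from that position,
    ignoring any text before the object and anything after it.
    """
    decoder = json.JSONDecoder()
    start = reply.find("{")
    while start != -1:
        try:
            obj, _end = decoder.raw_decode(reply, start)
            return obj
        except json.JSONDecodeError:
            start = reply.find("{", start + 1)
    return None


reply = (
    "Here is the result:\n"
    '{"food_list": ["Double Burger", "Cheeseburger", "French Fries", "Shakes", "Coffee"]}</s>'
)
print(extract_first_json_object(reply)["food_list"])
```

Scanning from each `{` rather than stripping known prefixes keeps the parser independent of whatever wording surrounds the object.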