Upload folder using huggingface_hub (#1)

- c4271efb8b34156b208ac18688c9bb1865f22810ee14f973918b406b89986d17 (9043ce3fe9beaf372475cfc02be6590d2348593f)

- README.md +84 -0

- config.json +42 -0

- configuration_gpt_refact.py +51 -0

- generation_config.json +6 -0

- model.safetensors +3 -0

- modeling_gpt_refact.py +602 -0

- plots.png +0 -0

- smash_config.json +27 -0

README.md

ADDED

@@ -0,0 +1,84 @@

| 1 |

+

---

|

| 2 |

+

thumbnail: "https://assets-global.website-files.com/646b351987a8d8ce158d1940/64ec9e96b4334c0e1ac41504_Logo%20with%20white%20text.svg"

|

| 3 |

+

metrics:

|

| 4 |

+

- memory_disk

|

| 5 |

+

- memory_inference

|

| 6 |

+

- inference_latency

|

| 7 |

+

- inference_throughput

|

| 8 |

+

- inference_CO2_emissions

|

| 9 |

+

- inference_energy_consumption

|

| 10 |

+

tags:

|

| 11 |

+

- pruna-ai

|

| 12 |

+

---

|

| 13 |

+

<!-- header start -->

|

| 14 |

+

<!-- 200823 -->

|

| 15 |

+

<div style="width: auto; margin-left: auto; margin-right: auto">

|

| 16 |

+

<a href="https://www.pruna.ai/" target="_blank" rel="noopener noreferrer">

|

| 17 |

+

<img src="https://i.imgur.com/eDAlcgk.png" alt="PrunaAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

|

| 18 |

+

</a>

|

| 19 |

+

</div>

|

| 20 |

+

<!-- header end -->

|

| 21 |

+

|

| 22 |

+

[](https://twitter.com/PrunaAI)

|

| 23 |

+

[](https://github.com/PrunaAI)

|

| 24 |

+

[](https://www.linkedin.com/company/93832878/admin/feed/posts/?feedType=following)

|

| 25 |

+

[](https://discord.gg/CP4VSgck)

|

| 26 |

+

|

| 27 |

+

# Simply make AI models cheaper, smaller, faster, and greener!

|

| 28 |

+

|

| 29 |

+

- Give a thumbs up if you like this model!

|

| 30 |

+

- Contact us and tell us which model to compress next [here](https://www.pruna.ai/contact).

|

| 31 |

+

- Request access to easily compress your *own* AI models [here](https://z0halsaff74.typeform.com/pruna-access?typeform-source=www.pruna.ai).

|

| 32 |

+

- Read the documentations to know more [here](https://pruna-ai-pruna.readthedocs-hosted.com/en/latest/)

|

| 33 |

+

- Join Pruna AI community on Discord [here](https://discord.gg/CP4VSgck) to share feedback/suggestions or get help.

|

| 34 |

+

|

| 35 |

+

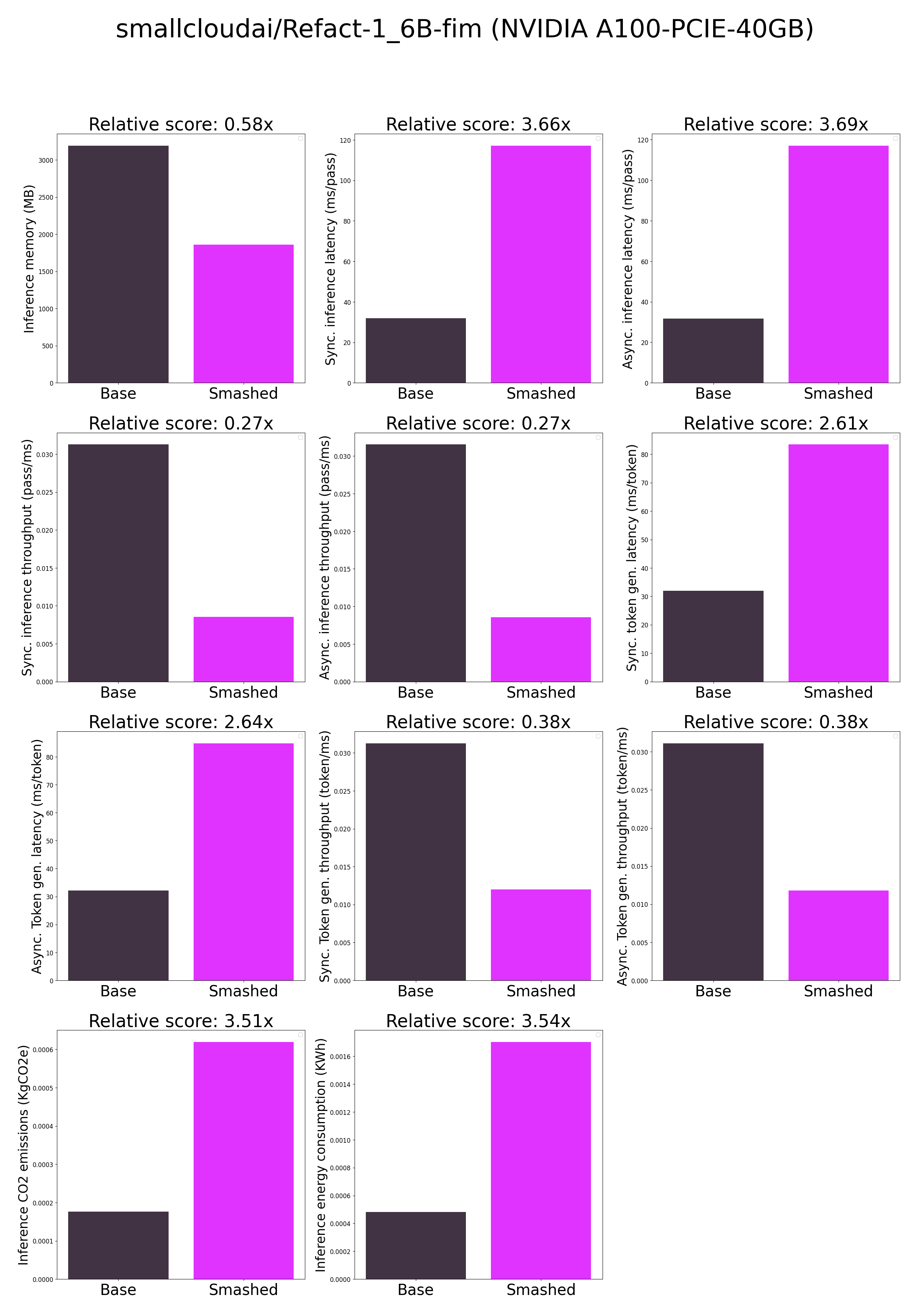

## Results

|

| 36 |

+

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

**Frequently Asked Questions**

|

| 40 |

+

- ***How does the compression work?*** The model is compressed with llm-int8.

|

| 41 |

+

- ***How does the model quality change?*** The quality of the model output might vary compared to the base model.

|

| 42 |

+

- ***How is the model efficiency evaluated?*** These results were obtained on NVIDIA A100-PCIE-40GB with configuration described in `model/smash_config.json` and are obtained after a hardware warmup. The smashed model is directly compared to the original base model. Efficiency results may vary in other settings (e.g. other hardware, image size, batch size, ...). We recommend to directly run them in the use-case conditions to know if the smashed model can benefit you.

|

| 43 |

+

- ***What is the model format?*** We use safetensors.

|

| 44 |

+

- ***What calibration data has been used?*** If needed by the compression method, we used WikiText as the calibration data.

|

| 45 |

+

- ***What is the naming convention for Pruna Huggingface models?*** We take the original model name and append "turbo", "tiny", or "green" if the smashed model has a measured inference speed, inference memory, or inference energy consumption which is less than 90% of the original base model.

|

| 46 |

+

- ***How to compress my own models?*** You can request premium access to more compression methods and tech support for your specific use-cases [here](https://z0halsaff74.typeform.com/pruna-access?typeform-source=www.pruna.ai).

|

| 47 |

+

- ***What are "first" metrics?*** Results mentioning "first" are obtained after the first run of the model. The first run might take more memory or be slower than the subsequent runs due cuda overheads.

|

| 48 |

+

- ***What are "Sync" and "Async" metrics?*** "Sync" metrics are obtained by syncing all GPU processes and stop measurement when all of them are executed. "Async" metrics are obtained without syncing all GPU processes and stop when the model output can be used by the CPU. We provide both metrics since both could be relevant depending on the use-case. We recommend to test the efficiency gains directly in your use-cases.
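The naming rule in the FAQ can be read as a simple threshold test. The sketch below is only an illustration: it assumes every metric is "lower is better" (so speed is measured as latency), and `earned_suffixes`, `SUFFIX_BY_METRIC` and the numbers are made up for this example rather than taken from any Pruna API or benchmark.

```python
# Hypothetical helper: which suffixes a smashed model would earn under the
# "less than 90% of the base model" rule described in the FAQ.
# Assumption: all metrics are "lower is better" (latency, memory, energy).
SUFFIX_BY_METRIC = {
    "inference_latency": "turbo",
    "memory_inference": "tiny",
    "inference_energy_consumption": "green",
}


def earned_suffixes(base: dict, smashed: dict, threshold: float = 0.9) -> list:
    """Return the suffixes whose metric dropped below threshold * base."""
    suffixes = []
    for metric, suffix in SUFFIX_BY_METRIC.items():
        if smashed[metric] < threshold * base[metric]:
            suffixes.append(suffix)
    return suffixes


# Made-up measurements, for illustration only.
base = {"inference_latency": 100.0, "memory_inference": 4000.0,
        "inference_energy_consumption": 10.0}
smashed = {"inference_latency": 95.0, "memory_inference": 2200.0,
           "inference_energy_consumption": 8.5}

print(earned_suffixes(base, smashed))
```

With these invented numbers the latency only drops to 95% of the base value, so "turbo" is not earned, while memory and energy both fall below the 90% line.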


## Setup

You can run the smashed model with these steps:

0. Check that the requirements of the original repo smallcloudai/Refact-1_6B-fim are installed. In particular, check the Python, CUDA, and transformers versions.
1. Make sure that you have installed the quantization-related packages.
```bash
# Quote the version specifier so the shell does not treat ">" as a redirect.
pip install transformers accelerate "bitsandbytes>0.37.0"
```
2. Load & run the model.
```python
from transformers import AutoModelForCausalLM, AutoTokenizer

# trust_remote_code=True is needed because the repo ships its own modeling code.
model = AutoModelForCausalLM.from_pretrained("PrunaAI/smallcloudai-Refact-1_6B-fim-bnb-8bit-smashed",
    trust_remote_code=True)
# The tokenizer comes from the original base model.
tokenizer = AutoTokenizer.from_pretrained("smallcloudai/Refact-1_6B-fim")

input_ids = tokenizer("What is the color of prunes?,", return_tensors='pt').to(model.device)["input_ids"]

outputs = model.generate(input_ids, max_new_tokens=216)
tokenizer.decode(outputs[0])
```

## Configurations

The configuration info is in `smash_config.json`.

## Credits & License

The license of the smashed model follows the license of the original model. Please check the license of the original model, smallcloudai/Refact-1_6B-fim, which provided the base model, before using this model. The license of the `pruna-engine` is [here](https://pypi.org/project/pruna-engine/) on PyPI.

## Want to compress other models?

- Contact us and tell us which model to compress next [here](https://www.pruna.ai/contact).
- Request access to easily compress your own AI models [here](https://z0halsaff74.typeform.com/pruna-access?typeform-source=www.pruna.ai).

config.json

ADDED

@@ -0,0 +1,42 @@

{
  "_name_or_path": "/tmp/tmphei5qhy9",
  "architectures": [
    "GPTRefactForCausalLM"
  ],
  "attention_bias_in_fp32": true,
  "attention_softmax_in_fp32": true,
  "auto_map": {
    "AutoConfig": "configuration_gpt_refact.GPTRefactConfig",
    "AutoModelForCausalLM": "modeling_gpt_refact.GPTRefactForCausalLM"
  },
  "do_sample": true,
  "eos_token_id": 0,
  "initializer_range": 0.02,
  "layer_norm_epsilon": 1e-05,
  "model_type": "gpt_refact",
  "multi_query": true,
  "n_embd": 2048,
  "n_head": 32,
  "n_inner": null,
  "n_layer": 32,
  "n_positions": 4096,
  "quantization_config": {
    "bnb_4bit_compute_dtype": "bfloat16",
    "bnb_4bit_quant_type": "fp4",
    "bnb_4bit_use_double_quant": true,
    "llm_int8_enable_fp32_cpu_offload": false,
    "llm_int8_has_fp16_weight": false,
    "llm_int8_skip_modules": [
      "lm_head"
    ],
    "llm_int8_threshold": 6.0,
    "load_in_4bit": false,
    "load_in_8bit": true,
    "quant_method": "bitsandbytes"
  },
  "scale_attention_softmax_in_fp32": true,
  "torch_dtype": "float16",
  "transformers_version": "4.37.1",
  "use_cache": true,
  "vocab_size": 49216
}
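In the `quantization_config` above, `load_in_8bit` is true and `load_in_4bit` is false, so the `bnb_4bit_*` entries are probably serialized defaults rather than active settings. The sketch below reads this from a trimmed copy of the JSON; `quantization_mode` is an illustrative helper written for this note, not a transformers function.

```python
import json

# Trimmed copy of the config.json shown above (only the keys used here).
CONFIG_TEXT = """
{
  "n_embd": 2048,
  "n_head": 32,
  "quantization_config": {
    "llm_int8_skip_modules": ["lm_head"],
    "llm_int8_threshold": 6.0,
    "load_in_4bit": false,
    "load_in_8bit": true,
    "quant_method": "bitsandbytes"
  }
}
"""


def quantization_mode(config: dict) -> str:
    """Hypothetical helper: summarise which bitsandbytes mode is switched on."""
    quant = config.get("quantization_config")
    if quant is None:
        return "none"
    if quant.get("load_in_8bit"):
        return "int8"
    if quant.get("load_in_4bit"):
        return "4bit"
    return "none"


config = json.loads(CONFIG_TEXT)
mode = quantization_mode(config)
skipped = config["quantization_config"]["llm_int8_skip_modules"]
head_dim = config["n_embd"] // config["n_head"]  # per-head width used by the attention code

print(mode, skipped, head_dim)
```

The `llm_int8_skip_modules` entry lists modules that are left out of the 8-bit conversion, here the output head.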

configuration_gpt_refact.py

ADDED

@@ -0,0 +1,51 @@

from transformers.configuration_utils import PretrainedConfig
from transformers.utils import logging

logger = logging.get_logger(__name__)


class GPTRefactConfig(PretrainedConfig):
    model_type = "gpt_refact"
    keys_to_ignore_at_inference = ["past_key_values"]
    attribute_map = {
        "hidden_size": "n_embd",
        "max_position_embeddings": "n_positions",
        "num_attention_heads": "n_head",
        "num_hidden_layers": "n_layer",
    }

    def __init__(
        self,
        vocab_size: int = 49216,
        n_positions: int = 4096,
        n_embd: int = 1024,
        n_layer: int = 32,
        n_head: int = 64,
        max_position_embeddings: int = 4096,
        multi_query: bool = True,
        layer_norm_epsilon: float = 1e-5,
        initializer_range: float = 0.02,
        use_cache: bool = True,
        eos_token_id: int = 0,
        attention_softmax_in_fp32: bool = True,
        scale_attention_softmax_in_fp32: bool = True,
        attention_bias_in_fp32: bool = True,
        torch_dtype: str = 'bfloat16',
        **kwargs,
    ):
        self.vocab_size = vocab_size
        self.n_positions = n_positions
        self.n_embd = n_embd
        self.n_layer = n_layer
        self.n_head = n_head
        self.n_inner = None
        self.layer_norm_epsilon = layer_norm_epsilon
        self.initializer_range = initializer_range
        self.use_cache = use_cache
        self.attention_softmax_in_fp32 = attention_softmax_in_fp32
        self.scale_attention_softmax_in_fp32 = scale_attention_softmax_in_fp32
        self.attention_bias_in_fp32 = attention_bias_in_fp32
        self.multi_query = multi_query
        self.max_position_embeddings = max_position_embeddings
        self.torch_dtype = torch_dtype
        super().__init__(eos_token_id=eos_token_id, **kwargs)
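`attribute_map` lets other code read the GPT-2 style fields under the names most transformers code expects, for example `config.hidden_size` for `n_embd`. The class below is a standalone imitation of that aliasing idea, written only for illustration; it assumes an alias simply redirects reads and writes, it is not how `PretrainedConfig` itself is implemented, and its defaults are the values from `config.json` rather than the class defaults above.

```python
class AliasedConfig:
    """Toy stand-in that redirects alias names to the stored attribute names."""

    attribute_map = {
        "hidden_size": "n_embd",
        "max_position_embeddings": "n_positions",
        "num_attention_heads": "n_head",
        "num_hidden_layers": "n_layer",
    }

    def __init__(self, n_embd=2048, n_positions=4096, n_head=32, n_layer=32):
        self.n_embd = n_embd
        self.n_positions = n_positions
        self.n_head = n_head
        self.n_layer = n_layer

    def __getattr__(self, name):
        # Only reached when normal lookup fails, i.e. for alias names.
        target = type(self).attribute_map.get(name)
        if target is None:
            raise AttributeError(name)
        return getattr(self, target)

    def __setattr__(self, name, value):
        # Writes through an alias land on the real attribute.
        real_name = type(self).attribute_map.get(name, name)
        super().__setattr__(real_name, value)


cfg = AliasedConfig()
print(cfg.hidden_size, cfg.num_attention_heads)   # reads n_embd and n_head
print(cfg.hidden_size // cfg.num_attention_heads)  # head width, as in Attention.__init__
```

This is why `modeling_gpt_refact.py` can use `config.hidden_size` and `config.num_attention_heads` even though the JSON only stores `n_embd` and `n_head`.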

generation_config.json

ADDED

@@ -0,0 +1,6 @@

{
  "_from_model_config": true,
  "do_sample": true,
  "eos_token_id": 0,
  "transformers_version": "4.37.1"
}

model.safetensors

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:607541c1f1a222648dcf86c104f7c7e49d0e61eb17c54e4e82cfbd3cd5d2c2c2
size 1789848296
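The three lines above are a Git LFS pointer, which is why the diff reports only `+3` lines for the weights file; the tensor data itself is stored in LFS. A short sketch that parses such a pointer and converts the recorded size to GiB; `parse_lfs_pointer` is a name made up for this example.

```python
POINTER_TEXT = """\
version https://git-lfs.github.com/spec/v1
oid sha256:607541c1f1a222648dcf86c104f7c7e49d0e61eb17c54e4e82cfbd3cd5d2c2c2
size 1789848296
"""


def parse_lfs_pointer(text: str) -> dict:
    """Split each 'key value' line of a pointer file into a dict entry."""
    fields = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        key, _, value = line.partition(" ")
        fields[key] = value
    fields["size"] = int(fields["size"])  # size is recorded in bytes
    return fields


pointer = parse_lfs_pointer(POINTER_TEXT)
size_gib = pointer["size"] / 2**30

print(pointer["oid"])
print(f"{size_gib:.2f} GiB")
```

The recorded size of roughly 1.67 GiB looks consistent with a model of about 1.6B parameters stored mostly in 8-bit form, though the pointer itself says nothing about the tensor layout.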

modeling_gpt_refact.py

ADDED

@@ -0,0 +1,602 @@

import math
import torch
import torch.nn.functional as F
import torch.utils.checkpoint
from torch import nn
from torch.nn import CrossEntropyLoss
from transformers.modeling_outputs import (
    BaseModelOutputWithPastAndCrossAttentions,
    CausalLMOutputWithCrossAttentions,
)
from transformers.modeling_utils import PreTrainedModel
from transformers.utils import (
    logging,
)
from typing import List, Optional, Tuple, Union

from .configuration_gpt_refact import GPTRefactConfig

logger = logging.get_logger(__name__)


@torch.jit.script
def upcast_masked_softmax(
        x: torch.Tensor, mask: torch.Tensor, mask_value: torch.Tensor, softmax_dtype: torch.dtype
):
    input_dtype = x.dtype
    x = x.to(softmax_dtype)
    x = torch.where(mask, x, mask_value)
    x = torch.nn.functional.softmax(x, dim=-1).to(input_dtype)
    return x


@torch.jit.script
def upcast_softmax(x: torch.Tensor, softmax_dtype: torch.dtype):
    input_dtype = x.dtype
    x = x.to(softmax_dtype)
    x = torch.nn.functional.softmax(x, dim=-1).to(input_dtype)
    return x


@torch.jit.script
def _get_slopes(attn_heads: int, dev: torch.device) -> torch.Tensor:
    """
    ## Get head-specific slope $m$ for each head
    * `attn_heads` is the number of heads in the attention layer $n$
    The slope for the first head is
    $$\frac{1}{2^{\frac{8}{n}}} = 2^{-\frac{8}{n}}$$
    The slopes for the rest of the heads are in a geometric series with the same ratio as above.
    For instance, when the number of heads is $8$ the slopes are
    $$\frac{1}{2^1}, \frac{1}{2^2}, \dots, \frac{1}{2^8}$$
    """

    # Get the closest power of 2 to `attn_heads`.
    # If `attn_heads` is not a power of 2, then we first calculate slopes to the closest (smaller) power of 2,
    # and then add the remaining slopes.
    n = 2 ** math.floor(math.log(attn_heads, 2))
    # $2^{-\frac{8}{n}}$
    m_0 = 2.0 ** (-8.0 / n)
    # $2^{-1\frac{8}{n}}, 2^{-2 \frac{8}{n}}, 2^{-3 \frac{8}{n}}, \dots$
    m = torch.pow(m_0, torch.arange(1, 1 + n, device=dev))

    # If `attn_heads` is not a power of 2, then we add the remaining slopes.
    # We calculate the remaining slopes for $n * 2$ (avoiding slopes added previously).
    # And pick the slopes up to `attn_heads`.
    if n < attn_heads:
        # $2^{-\frac{8}{2n}}$
        m_hat_0 = 2.0 ** (-4.0 / n)
        # $2^{-1\frac{8}{2n}}, 2^{-3 \frac{8}{2n}}, 2^{-5 \frac{8}{2n}}, \dots$
        # Note that we take steps by $2$ to avoid slopes added previously.
        m_hat = torch.pow(m_hat_0, torch.arange(1, 1 + 2 * (attn_heads - n), 2, device=dev))
        # Concatenate the slopes with the remaining slopes.
        m = torch.cat([m, m_hat])
    return m
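The slope schedule in `_get_slopes` can be checked without torch. The sketch below mirrors the same arithmetic with plain Python lists; `alibi_slopes` is a name chosen for this note, and it finds the largest power of two with a bit-length trick instead of `floor(log2(...))`, which is a swapped-in detail rather than what the file does.

```python
def alibi_slopes(attn_heads: int) -> list:
    """Pure-Python mirror of the slope computation, for inspection only."""
    # Largest power of two <= attn_heads (bit trick instead of floor(log2)).
    n = 1 << (attn_heads.bit_length() - 1)
    # First slope 2^(-8/n); the others follow as a geometric series.
    m_0 = 2.0 ** (-8.0 / n)
    slopes = [m_0 ** k for k in range(1, n + 1)]
    if n < attn_heads:
        # Extra heads take the odd powers of 2^(-8/(2n)), which skips the
        # values already produced above.
        m_hat_0 = 2.0 ** (-4.0 / n)
        slopes += [m_hat_0 ** k for k in range(1, 1 + 2 * (attn_heads - n), 2)]
    return slopes


print(alibi_slopes(8))        # 1/2, 1/4, ..., 1/256 as in the docstring
print(len(alibi_slopes(12)))  # 8 regular slopes plus 4 interleaved ones
```

For the 32 heads in `config.json` the power-of-two branch is the only one taken, so the slopes run from `2 ** -0.25` down to `2 ** -8`.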

@torch.jit.script
def get_alibi_biases(
        B: int,
        T: int,
        attn_heads: int,
        dev: torch.device,
        dtype: torch.dtype) -> torch.Tensor:
    """
    ## Calculate the attention biases matrix
    * `attn_heads` is the number of heads in the attention layer
    * `T` is the sequence length; an all-ones mask of shape `[T, T]` is built internally
    This returns a tensor of shape `[1, attn_heads, T, T]` with ALiBi attention biases.
    """

    # All-ones mask used below to derive the distances
    mask = torch.ones((T, T), device=dev, dtype=torch.bool)

    # Get slopes $m$ for each head
    m = _get_slopes(attn_heads, dev).to(dtype)

    # Calculate distances $[0, 1, \dots, N]$
    # Here we calculate the distances using the mask.
    #
    # Since it's causal mask we can just use $[0, 1, \dots, N]$ too.
    # `distance = torch.arange(mask.shape[1], dtype=torch.long, device=mask.device)[None, :]`
    distance = mask.cumsum(dim=-1).to(dtype)

    # Multiply them pair-wise to get the AliBi bias matrix
    biases = distance[:, :, None] * m[None, None, :]
    biases = biases.permute(2, 0, 1)[None, :, :T, :T]
    return biases.contiguous()
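Because `mask` is all ones, `distance` is `[1, 2, ..., T]` in every row, so for a head with slope `m` the bias at key position `j` is `m * (j + 1)` regardless of the query position. That differs from the relative form `m * (j - i)` only by a constant within each query row, and softmax is unchanged by such a shift. A pure-Python check of that claim, using made-up scores and an arbitrary slope:

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


m = 0.25                        # arbitrary slope for one head
i = 3                           # query position (0-based), sees keys 0..3
scores = [0.3, -1.2, 0.8, 2.0]  # made-up scaled q.k scores for that query

# Bias as produced by the cumsum construction: m * (j + 1).
absolute = softmax([s + m * (j + 1) for j, s in enumerate(scores)])
# Relative form: m * (j - i), zero for the current token, negative for older ones.
relative = softmax([s + m * (j - i) for j, s in enumerate(scores)])

max_gap = max(abs(a - b) for a, b in zip(absolute, relative))
print(max_gap)
```

The two probability rows agree up to floating-point noise, which is why the code can build one position-only bias tensor and reuse it for every query row.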


class Attention(nn.Module):

    def __init__(self, config, layer_idx=None):
        super().__init__()
        self.mask_value = None

        self.embed_dim = config.hidden_size
        self.num_heads = config.num_attention_heads
        self.head_dim = self.embed_dim // self.num_heads
        self.kv_attn_heads = 1

        self.scale_factor = self.head_dim ** -0.5

        if self.head_dim * self.num_heads != self.embed_dim:
            raise ValueError(
                f"`embed_dim` must be divisible by num_heads (got `embed_dim`: {self.embed_dim} and `num_heads`:"
                f" {self.num_heads})."
            )

        self.layer_idx = layer_idx
        self.attention_softmax_in_fp32 = config.attention_softmax_in_fp32
        self.scale_attention_softmax_in_fp32 = (
            config.scale_attention_softmax_in_fp32 and config.attention_softmax_in_fp32
        )
        self.attention_bias_in_fp32 = config.attention_bias_in_fp32

        self.q = nn.Linear(self.embed_dim, self.embed_dim, bias=False)
        self.kv = nn.Linear(self.embed_dim, self.head_dim * 2, bias=False)
        self.c_proj = nn.Linear(self.embed_dim, self.embed_dim, bias=False)

    def _get_mask_value(self, device, dtype):
        # torch.where expects a tensor. We use a cache to avoid recreating it every time.
        if self.mask_value is None or self.mask_value.dtype != dtype or self.mask_value.device != device:
            self.mask_value = torch.full([], torch.finfo(dtype).min, dtype=dtype, device=device)
        return self.mask_value

    def _attn(self, query, key, value, attention_mask=None, alibi=None):
        dtype = query.dtype
        softmax_dtype = torch.float32 if self.attention_softmax_in_fp32 else dtype
        mask_value = self._get_mask_value(query.device, softmax_dtype)
        upcast = dtype != softmax_dtype

        query_shape = query.shape
        batch_size = query_shape[0]
        key_length = key.size(-1)

        # (batch_size, query_length, num_heads, head_dim) x (batch_size, head_dim, key_length)
        # -> (batch_size, query_length, num_heads, key_length)
        query_length = query_shape[1]
        attn_shape = (batch_size, query_length, self.num_heads, key_length)
        attn_view = (batch_size, query_length * self.num_heads, key_length)
        # No copy needed for MQA 2, or when layer_past is provided.
        query = query.reshape(batch_size, query_length * self.num_heads, self.head_dim)

        alibi = alibi.transpose(2, 1).reshape(alibi.shape[0], -1, alibi.shape[-1])
        initial_dtype = query.dtype
        new_dtype = torch.float32 if self.attention_bias_in_fp32 else initial_dtype
        attn_weights = alibi.baddbmm(
            batch1=query.to(new_dtype),
            batch2=key.to(new_dtype),
            beta=1,
            alpha=self.scale_factor
        ).view(attn_shape).to(initial_dtype)

        if upcast:
            # Use a fused kernel to prevent a large overhead from casting and scaling.
            # Sub-optimal when the key length is not a multiple of 8.
            if attention_mask is None:
                attn_weights = upcast_softmax(attn_weights, softmax_dtype)
            else:
                attn_weights = upcast_masked_softmax(attn_weights, attention_mask, mask_value, softmax_dtype)
        else:
            if attention_mask is not None:
                # The fused kernel is very slow when the key length is not a multiple of 8, so we skip fusion.
                attn_weights = torch.where(attention_mask, attn_weights, mask_value)
            attn_weights = torch.nn.functional.softmax(attn_weights, dim=-1)

        attn_output = torch.bmm(attn_weights.view(attn_view), value).view(query_shape)

        return attn_output, attn_weights

    def forward(
        self,
        hidden_states: torch.Tensor,
        layer_past: Optional[torch.Tensor] = None,
        attention_mask: Optional[torch.Tensor] = None,
        alibi: Optional[torch.Tensor] = None,
        use_cache: Optional[bool] = False,
        output_attentions: Optional[bool] = False,
    ) -> Union[
        Tuple[torch.Tensor, Optional[torch.Tensor]],
        Tuple[torch.Tensor, Optional[torch.Tensor], Tuple[torch.Tensor, ...]],
    ]:
        query = self.q(hidden_states)
        kv = self.kv(hidden_states)
        key, value = kv.split(self.head_dim, dim=-1)

        if layer_past is not None:
            past_key, past_value = layer_past
            key = torch.cat((past_key, key), dim=-2)
            value = torch.cat((past_value, value), dim=-2)

        if use_cache is True:
            present = (key, value)
        else:
            present = None

        attn_output, attn_weights = self._attn(query, key.transpose(-1, -2), value, attention_mask, alibi)
        attn_output = self.c_proj(attn_output)

        outputs = (attn_output, present)
        if output_attentions:
            attn_weights = attn_weights.transpose(1, 2)
            outputs += (attn_weights,)

        return outputs  # a, present, (attentions)
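`self.kv` projects to `2 * head_dim` features, that is one key and one value vector shared by all query heads, so the cached `(key, value)` pair is much smaller than it would be with per-head keys and values. A back-of-the-envelope sketch using the sizes from `config.json`; it assumes 2-byte (float16) cache entries and a batch of one, and `kv_cache_bytes` is an illustrative name, not something defined in this file.

```python
def kv_cache_bytes(n_layer, seq_len, head_dim, kv_heads, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # Keys and values each hold seq_len * kv_heads * head_dim entries per layer.
    return 2 * n_layer * seq_len * kv_heads * head_dim * bytes_per_value


# Sizes from config.json.
n_embd, n_head, n_layer, n_positions = 2048, 32, 32, 4096
head_dim = n_embd // n_head

mqa = kv_cache_bytes(n_layer, n_positions, head_dim, kv_heads=1)       # multi-query
mha = kv_cache_bytes(n_layer, n_positions, head_dim, kv_heads=n_head)  # per-head K/V

print(mqa // 2**20, "MiB with a shared K/V head")
print(mha // 2**20, "MiB with one K/V head per query head")
```

Under these assumptions a full 4096-token context needs 32 MiB of cache instead of 1 GiB, a factor equal to the number of query heads.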


class MLP(nn.Module):

    def __init__(self, intermediate_size, config, multiple_of: int = 256):
        super().__init__()
        embed_dim = config.hidden_size
        hidden_dim = intermediate_size
        hidden_dim = int(2 * hidden_dim / 3)
        self.hidden_dim = multiple_of * ((hidden_dim + multiple_of - 1) // multiple_of)
        self.gate_up_proj = nn.Linear(embed_dim, self.hidden_dim * 2, bias=False)
        self.c_proj = nn.Linear(self.hidden_dim, embed_dim, bias=False)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        up_proj = self.gate_up_proj(x)
        x1, x2 = torch.split(up_proj, self.hidden_dim, dim=-1)
        x = self.c_proj(F.silu(x1) * x2)
        return x
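The `MLP` constructor shrinks the requested width to two thirds and then rounds it up to a multiple of `multiple_of`. The same arithmetic in isolation, assuming `intermediate_size = 4 * 2048` (the `4 * hidden_size` fallback used when `n_inner` is null, with `n_embd` from `config.json`); `mlp_hidden_dim` is a helper written for this note.

```python
def mlp_hidden_dim(intermediate_size: int, multiple_of: int = 256) -> int:
    """Reproduce the width computation from MLP.__init__."""
    hidden_dim = int(2 * intermediate_size / 3)  # two thirds, truncated
    # Round up to the next multiple of `multiple_of`.
    return multiple_of * ((hidden_dim + multiple_of - 1) // multiple_of)


hidden = mlp_hidden_dim(4 * 2048)
gate_up_out = 2 * hidden  # gate and up projections are fused in one Linear

print(hidden, gate_up_out)
```

So with these sizes the gated MLP works at a width of 5632, and the fused `gate_up_proj` outputs 11264 features that `forward` splits into the two halves `x1` and `x2`.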


class LayerNormNoBias(nn.Module):

    def __init__(self, shape: int, eps: float = 1e-5):
        super().__init__()
        self.shape = (shape,)
        self.eps = eps
        self.weight = nn.Parameter(torch.empty(self.shape))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return F.layer_norm(x, self.shape, self.weight, None, self.eps)


class GPTRefactBlock(nn.Module):
    def __init__(self, config, layer_idx=None):
        super().__init__()
        hidden_size = config.hidden_size
        self.inner_dim = config.n_inner if config.n_inner is not None else 4 * hidden_size

        self.ln_1 = LayerNormNoBias(hidden_size, eps=config.layer_norm_epsilon)
        self.attn = Attention(config, layer_idx=layer_idx)
        self.ln_2 = LayerNormNoBias(hidden_size, eps=config.layer_norm_epsilon)
        self.mlp = MLP(self.inner_dim, config)

    def forward(
        self,
        hidden_states: Optional[Tuple[torch.Tensor]],
        layer_past: Optional[torch.Tensor] = None,
        attention_mask: Optional[torch.Tensor] = None,
        alibi: Optional[torch.Tensor] = None,
        use_cache: Optional[bool] = False,
        output_attentions: Optional[bool] = False,
    ) -> Union[
        Tuple[torch.Tensor], Tuple[torch.Tensor, torch.Tensor], Tuple[torch.Tensor, torch.Tensor, torch.Tensor]
    ]:
        hidden_states_norm = self.ln_1(hidden_states)
        attn_outputs = self.attn(
            hidden_states_norm,
            layer_past=layer_past,
            attention_mask=attention_mask,
            alibi=alibi,
            use_cache=use_cache,
            output_attentions=output_attentions,
        )
        attn_output = attn_outputs[0]  # output_attn: a, present, (attentions)
        outputs = attn_outputs[1:]
        # residual connection
        mix = attn_output + hidden_states

        norm_mix = self.ln_2(mix)
        feed_forward_hidden_states = self.mlp(norm_mix)
        # residual connection
        hidden_states = mix + feed_forward_hidden_states

        if use_cache:
            outputs = (hidden_states,) + outputs
        else:
            outputs = (hidden_states,) + outputs[1:]

        return outputs  # hidden_states, present, (attentions, cross_attentions)


class GPTRefactPreTrainedModel(PreTrainedModel):

    config_class = GPTRefactConfig
    base_model_prefix = "transformer"
    supports_gradient_checkpointing = True
    _no_split_modules = ["GPTRefactBlock"]
    _skip_keys_device_placement = "past_key_values"

    def __init__(self, *inputs, **kwargs):
        super().__init__(*inputs, **kwargs)

    def _init_weights(self, module):
        if isinstance(module, (MLP, Attention)):
            # Reinitialize selected weights subject to the OpenAI GPT-2 Paper Scheme:
            #   > A modified initialization which accounts for the accumulation on the residual path with model depth. Scale
            #   > the weights of residual layers at initialization by a factor of 1/√N where N is the # of residual layers.
            #   >   -- GPT-2 :: https://openai.com/blog/better-language-models/
            #
            # Reference (Megatron-LM): https://github.com/NVIDIA/Megatron-LM/blob/main/megatron/model/gpt_model.py

|

| 323 |

+

module.c_proj.weight.data.normal_(

|

| 324 |

+

mean=0.0, std=(self.config.initializer_range / math.sqrt(2 * self.config.n_layer))

|

| 325 |

+

)

|

| 326 |

+

module.c_proj._is_hf_initialized = True

|

| 327 |

+

elif isinstance(module, nn.Linear):

|

| 328 |

+

# Slightly different from the TF version which uses truncated_normal for initialization

|

| 329 |

+

# cf https://github.com/pytorch/pytorch/pull/5617

|

| 330 |

+

module.weight.data.normal_(mean=0.0, std=self.config.initializer_range)

|

| 331 |

+

if module.bias is not None:

|

| 332 |

+

module.bias.data.zero_()

|

| 333 |

+

elif isinstance(module, nn.Embedding):

|

| 334 |

+

module.weight.data.normal_(mean=0.0, std=self.config.initializer_range)

|

| 335 |

+

if module.padding_idx is not None:

|

| 336 |

+

module.weight.data[module.padding_idx].zero_()

|

| 337 |

+

elif isinstance(module, LayerNormNoBias):

|

| 338 |

+

module.weight.data.fill_(1.0)

|

| 339 |

+

|

| 340 |

+

|

| 341 |

+

class GPTRefactModel(GPTRefactPreTrainedModel):

|

| 342 |

+

|

| 343 |

+

def __init__(self, config):

|

| 344 |

+

super().__init__(config)

|

| 345 |

+

self.embed_dim = config.hidden_size

|

| 346 |

+

self.num_heads = config.num_attention_heads

|

| 347 |

+

self.multi_query = config.multi_query

|

| 348 |

+

self.wte = nn.Embedding(config.vocab_size, self.embed_dim)

|

| 349 |

+

|

| 350 |

+

self.h = nn.ModuleList([GPTRefactBlock(config, layer_idx=i) for i in range(config.num_hidden_layers)])

|

| 351 |

+

|

| 352 |

+

self.max_positions = config.max_position_embeddings

|

| 353 |

+

self.attention_bias_in_fp32 = config.attention_bias_in_fp32

|

| 354 |

+

self.register_buffer(

|

| 355 |

+

"bias", torch.tril(torch.ones((self.max_positions, self.max_positions), dtype=torch.bool)),

|

| 356 |

+

persistent=False

|

| 357 |

+

)

|

| 358 |

+

|

| 359 |

+

self.gradient_checkpointing = False

|

| 360 |

+

|

| 361 |

+

# Initialize weights and apply final processing

|

| 362 |

+

self.post_init()

|

| 363 |

+

|

| 364 |

+

def get_input_embeddings(self):

|

| 365 |

+

return self.wte

|

| 366 |

+

|

| 367 |

+

def forward(

|

| 368 |

+

self,

|

| 369 |

+

input_ids: Optional[torch.Tensor] = None,

|

| 370 |

+

past_key_values: Optional[List[torch.Tensor]] = None,

|

| 371 |

+

attention_mask: Optional[torch.Tensor] = None,

|

| 372 |

+

inputs_embeds: Optional[torch.Tensor] = None,

|

| 373 |

+

use_cache: Optional[bool] = None,

|

| 374 |

+

output_attentions: Optional[bool] = None,

|

| 375 |

+

output_hidden_states: Optional[bool] = None,

|

| 376 |

+

return_dict: Optional[bool] = None,

|

| 377 |

+

) -> Union[Tuple, BaseModelOutputWithPastAndCrossAttentions]:

|

| 378 |

+

output_attentions = output_attentions if output_attentions is not None else self.config.output_attentions

|

| 379 |

+

output_hidden_states = (

|

| 380 |

+

output_hidden_states if output_hidden_states is not None else self.config.output_hidden_states

|

| 381 |

+

)

|

| 382 |

+

use_cache = use_cache if use_cache is not None else self.config.use_cache

|

| 383 |

+

return_dict = return_dict if return_dict is not None else self.config.use_return_dict

|

| 384 |

+

|

| 385 |

+

if input_ids is not None and inputs_embeds is not None:

|

| 386 |

+

raise ValueError("You cannot specify both input_ids and inputs_embeds at the same time")

|

| 387 |

+

elif input_ids is not None:

|

| 388 |

+

input_shape = input_ids.size()

|

| 389 |

+

input_ids = input_ids.view(-1, input_shape[-1])

|

| 390 |

+

batch_size = input_ids.shape[0]

|

| 391 |

+

elif inputs_embeds is not None:

|

| 392 |

+

input_shape = inputs_embeds.size()[:-1]

|

| 393 |

+

batch_size = inputs_embeds.shape[0]

|

| 394 |

+

else:

|

| 395 |

+

raise ValueError("You have to specify either input_ids or inputs_embeds")

|

| 396 |

+

|

| 397 |

+

if batch_size <= 0:

|

| 398 |

+

raise ValueError("batch_size has to be defined and > 0")

|

| 399 |

+

|

| 400 |

+

device = input_ids.device if input_ids is not None else inputs_embeds.device

|

| 401 |

+

|

| 402 |

+

if past_key_values is None:

|

| 403 |

+

past_length = 0

|

| 404 |

+

past_key_values = tuple([None] * len(self.h))

|

| 405 |

+

else:

|

| 406 |

+

past_length = past_key_values[0][0].size(-2)

|

| 407 |

+

|

| 408 |

+

query_length = input_shape[-1]

|

| 409 |

+

seq_length_with_past = past_length + query_length

|

| 410 |

+

|

| 411 |

+

# Self-attention mask.

|

| 412 |

+

key_length = past_length + query_length

|

| 413 |

+

self_attention_mask = self.bias[None, key_length - query_length : key_length, :key_length]

|

| 414 |

+

if attention_mask is not None:

|

| 415 |

+

self_attention_mask = self_attention_mask * attention_mask.view(batch_size, 1, -1).to(

|

| 416 |

+

dtype=torch.bool, device=self_attention_mask.device

|

| 417 |

+

)

|

| 418 |

+

|

| 419 |

+

# MQA models: (batch_size, query_length, n_heads, key_length)

|

| 420 |

+

attention_mask = self_attention_mask.unsqueeze(2)

|

| 421 |

+

|

| 422 |

+

hidden_states = self.wte(input_ids) if inputs_embeds is None else inputs_embeds

|

| 423 |

+

|

| 424 |

+

alibi_dtype = torch.float32 if self.attention_bias_in_fp32 else self.wte.weight.dtype

|

| 425 |

+

alibi = get_alibi_biases(hidden_states.shape[0], seq_length_with_past,

|

| 426 |

+

self.num_heads, device, alibi_dtype)[:, :, -query_length:, :]

|

| 427 |

+

|

| 428 |

+

output_shape = input_shape + (hidden_states.size(-1),)

|

| 429 |

+

|

| 430 |

+

presents = [] if use_cache else None

|

| 431 |

+

all_self_attentions = () if output_attentions else None

|

| 432 |

+

all_cross_attentions = () if output_attentions and self.config.add_cross_attention else None

|

| 433 |

+

all_hidden_states = () if output_hidden_states else None

|

| 434 |

+

for i, (block, layer_past) in enumerate(zip(self.h, past_key_values)):

|

| 435 |

+

if output_hidden_states:

|

| 436 |

+

all_hidden_states = all_hidden_states + (hidden_states,)

|

| 437 |

+

|

| 438 |

+

if self.gradient_checkpointing and self.training:

|

| 439 |

+

|

| 440 |

+

def create_custom_forward(module):

|

| 441 |

+

def custom_forward(*inputs):

|

| 442 |

+

# None for past_key_value

|

| 443 |

+

return module(*inputs, use_cache, output_attentions)

|

| 444 |

+

|

| 445 |

+

return custom_forward

|

| 446 |

+

|

| 447 |

+

outputs = torch.utils.checkpoint.checkpoint(

|

| 448 |

+

create_custom_forward(block),

|

| 449 |

+

hidden_states,

|

| 450 |

+

None,

|

| 451 |

+

attention_mask,

|

| 452 |

+

alibi

|

| 453 |

+

)

|

| 454 |

+

else:

|

| 455 |

+

outputs = block(

|

| 456 |

+

hidden_states,

|

| 457 |

+

layer_past=layer_past,

|

| 458 |

+

attention_mask=attention_mask,

|

| 459 |

+

alibi=alibi,

|

| 460 |

+

use_cache=use_cache,

|

| 461 |

+

output_attentions=output_attentions,

|

| 462 |

+

)

|

| 463 |

+

|

| 464 |

+

hidden_states = outputs[0]

|

| 465 |

+

if use_cache:

|

| 466 |

+

presents.append(outputs[1])

|

| 467 |

+

|

| 468 |

+

if output_attentions:

|

| 469 |

+

all_self_attentions = all_self_attentions + (outputs[2 if use_cache else 1],)

|

| 470 |

+

if self.config.add_cross_attention:

|

| 471 |

+

all_cross_attentions = all_cross_attentions + (outputs[3 if use_cache else 2],)

|

| 472 |

+

|

| 473 |

+

hidden_states = hidden_states.view(output_shape)

|

| 474 |

+

# Add last hidden state

|

| 475 |

+

if output_hidden_states:

|

| 476 |

+

all_hidden_states = all_hidden_states + (hidden_states,)

|

| 477 |

+

|

| 478 |

+

if not return_dict:

|

| 479 |

+

return tuple(

|

| 480 |

+

v

|

| 481 |

+

for v in [hidden_states, presents, all_hidden_states, all_self_attentions, all_cross_attentions]

|

| 482 |

+

if v is not None

|

| 483 |

+

)

|

| 484 |

+

|

| 485 |

+

return BaseModelOutputWithPastAndCrossAttentions(

|

| 486 |

+

last_hidden_state=hidden_states,

|

| 487 |

+

past_key_values=presents,

|

| 488 |

+

hidden_states=all_hidden_states,

|

| 489 |

+

attentions=all_self_attentions,

|

| 490 |

+

cross_attentions=all_cross_attentions,

|

| 491 |

+

)

|

| 492 |

+

|

| 493 |

+

|

| 494 |

+

class GPTRefactForCausalLM(GPTRefactPreTrainedModel):

|

| 495 |

+

|

| 496 |

+

_tied_weights_keys = ["lm_head.weight", "ln_f.weight"]

|

| 497 |

+

|

| 498 |

+

def __init__(self, config):

|

| 499 |

+

super().__init__(config)

|

| 500 |

+

self.transformer = GPTRefactModel(config)

|

| 501 |

+

self.ln_f = LayerNormNoBias(self.transformer.embed_dim, eps=config.layer_norm_epsilon)

|

| 502 |

+

self.lm_head = nn.Linear(config.n_embd, config.vocab_size, bias=False)

|

| 503 |

+

|

| 504 |

+

# Initialize weights and apply final processing

|

| 505 |

+

self.post_init()

|

| 506 |

+

|

| 507 |

+

# gradient checkpointing support for lower versions of transformers

|

| 508 |

+

import transformers

|

| 509 |

+

from packaging import version

|

| 510 |

+

|

| 511 |

+

def _set_gradient_checkpointing(module, value=False):

|

| 512 |

+

if isinstance(module, GPTRefactModel):

|

| 513 |

+

module.gradient_checkpointing = value

|

| 514 |

+

|

| 515 |

+

v = version.parse(transformers.__version__)

|

| 516 |

+

if v.major <= 4 and v.minor < 35:

|

| 517 |

+

self._set_gradient_checkpointing = _set_gradient_checkpointing

|

| 518 |

+

|

| 519 |

+

def prepare_inputs_for_generation(self, input_ids, past_key_values=None, inputs_embeds=None, **kwargs):

|

| 520 |

+

if inputs_embeds is not None and past_key_values is None:

|

| 521 |

+

model_inputs = {"inputs_embeds": inputs_embeds}

|

| 522 |

+

else:

|

| 523 |

+

if past_key_values is not None:

|

| 524 |

+

model_inputs = {"input_ids": input_ids[..., -1:]}

|

| 525 |

+

else:

|

| 526 |

+

model_inputs = {"input_ids": input_ids}

|

| 527 |

+

|

| 528 |

+

model_inputs.update(

|

| 529 |

+

{

|

| 530 |

+

"past_key_values": past_key_values,

|

| 531 |

+

"use_cache": kwargs.get("use_cache"),

|

| 532 |

+

}

|

| 533 |

+

)

|

| 534 |

+

return model_inputs

|

| 535 |

+

|

| 536 |

+

def forward(

|

| 537 |

+

self,

|

| 538 |

+

input_ids: Optional[torch.Tensor] = None,

|

| 539 |

+

past_key_values: Optional[Tuple[Tuple[torch.Tensor]]] = None,

|

| 540 |

+

attention_mask: Optional[torch.Tensor] = None,

|

| 541 |

+

inputs_embeds: Optional[torch.Tensor] = None,

|

| 542 |

+

labels: Optional[torch.Tensor] = None,

|

| 543 |

+

use_cache: Optional[bool] = None,

|

| 544 |

+

output_attentions: Optional[bool] = None,

|

| 545 |

+

output_hidden_states: Optional[bool] = None,

|

| 546 |

+

return_dict: Optional[bool] = None,

|

| 547 |

+

) -> Union[Tuple, CausalLMOutputWithCrossAttentions]:

|

| 548 |

+

r"""

|

| 549 |

+

labels (`torch.Tensor` of shape `(batch_size, sequence_length)`, *optional*):

|

| 550 |

+

Labels for language modeling. Note that the labels **are shifted** inside the model, i.e. you can set

|

| 551 |

+

`labels = input_ids`. Indices are selected in `[-100, 0, ..., config.vocab_size]`. All labels set to `-100`

|

| 552 |

+

are ignored (masked); the loss is only computed for labels in `[0, ..., config.vocab_size]`.

|

| 553 |

+

"""

|

| 554 |

+

return_dict = return_dict if return_dict is not None else self.config.use_return_dict

|

| 555 |

+

|

| 556 |

+

transformer_outputs = self.transformer(

|

| 557 |

+

input_ids,

|

| 558 |

+

past_key_values=past_key_values,

|

| 559 |

+

attention_mask=attention_mask,

|

| 560 |

+

inputs_embeds=inputs_embeds,

|

| 561 |

+

use_cache=use_cache,

|

| 562 |

+

output_attentions=output_attentions,

|

| 563 |

+

output_hidden_states=output_hidden_states,

|

| 564 |

+

return_dict=return_dict,

|

| 565 |

+

)

|

| 566 |

+

hidden_states = transformer_outputs[0]

|

| 567 |

+

|

| 568 |

+

x = self.ln_f(hidden_states)

|

| 569 |

+

lm_logits = self.lm_head(x)

|

| 570 |

+

|

| 571 |

+

loss = None

|

| 572 |

+

if labels is not None:

|

| 573 |

+

# Shift so that tokens < n predict n

|

| 574 |

+

shift_logits = lm_logits[..., :-1, :].contiguous()

|

| 575 |

+

shift_labels = labels[..., 1:].contiguous().to(shift_logits.device)

|

| 576 |

+

# Flatten the tokens

|

| 577 |

+

loss_fct = CrossEntropyLoss()

|

| 578 |

+

loss = loss_fct(shift_logits.view(-1, shift_logits.size(-1)), shift_labels.view(-1))

|

| 579 |

+

|

| 580 |

+

if not return_dict:

|

| 581 |

+

output = (lm_logits,) + transformer_outputs[1:]

|

| 582 |

+

return ((loss,) + output) if loss is not None else output

|

| 583 |

+

|

| 584 |

+

return CausalLMOutputWithCrossAttentions(

|

| 585 |

+

loss=loss,

|

| 586 |

+

logits=lm_logits,

|

| 587 |

+

past_key_values=transformer_outputs.past_key_values,

|

| 588 |

+

hidden_states=transformer_outputs.hidden_states,

|

| 589 |

+

attentions=transformer_outputs.attentions,

|

| 590 |

+

cross_attentions=transformer_outputs.cross_attentions,

|

| 591 |

+

)

|

| 592 |

+

|

| 593 |

+

@staticmethod

|

| 594 |

+

def _reorder_cache(

|

| 595 |

+

past_key_values: Tuple[Tuple[torch.Tensor]], beam_idx: torch.Tensor

|

| 596 |

+

) -> Tuple[Tuple[torch.Tensor]]:

|

| 597 |

+

"""

|

| 598 |

+

This function is used to re-order the `past_key_values` cache if [`~PreTrainedModel.beam_search`] or

|

| 599 |

+

[`~PreTrainedModel.beam_sample`] is called. This is required to match `past_key_values` with the correct

|

| 600 |

+

beam_idx at every generation step.

|

| 601 |

+

"""

|

| 602 |

+

return tuple(layer_past.index_select(0, beam_idx.to(layer_past.device)) for layer_past in past_key_values)

|
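Note on the self-attention mask in `GPTRefactModel.forward`: the slice `self.bias[None, key_length - query_length : key_length, :key_length]` keeps only the rows of the lower-triangular buffer that belong to the new query tokens, so the mask stays causal when a key/value cache is in use. The sketch below illustrates the same indexing with plain Python lists instead of tensors. The helper name `causal_mask_slice` and the small `max_positions` default are assumptions made for illustration and are not part of the commit.

```python
from typing import List


def causal_mask_slice(past_length: int, query_length: int, max_positions: int = 8) -> List[List[bool]]:
    """Return the causal-mask rows that apply to the new query tokens.

    Hypothetical helper: mirrors the slicing in the diff, without the batch axis.
    """
    # Lower-triangular table standing in for the registered `bias` buffer.
    bias = [[col <= row for col in range(max_positions)] for row in range(max_positions)]
    key_length = past_length + query_length
    # Rows: positions of the new queries. Columns: every key seen so far.
    rows = bias[key_length - query_length:key_length]
    return [row[:key_length] for row in rows]


# Prefill: no cache yet, three prompt tokens attend causally to each other.
prefill = causal_mask_slice(past_length=0, query_length=3)

# Decode step: three cached tokens, one new token that may attend to all four keys.
decode = causal_mask_slice(past_length=3, query_length=1)

for name, mask in (("prefill", prefill), ("decode", decode)):
    print(name)
    for row in mask:
        print("  ", ["x" if allowed else "." for allowed in row])
```

During prefill the slice is the usual square lower-triangular mask; during a cached decode step it collapses to a single row covering all keys, which is why the model can index one precomputed buffer rather than rebuild the mask on every call.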

plots.png

ADDED

|

smash_config.json

ADDED

|

@@ -0,0 +1,27 @@
