Update README.md

Browse files

README.md

CHANGED

|

@@ -34,6 +34,8 @@ https://AlignmentLab.ai

|

|

| 34 |

|

| 35 |

# Evaluation

|

| 36 |

|

|

|

|

|

|

|

| 37 |

|

| 38 |

|

| 39 |

| Metric | Value |

|

|

@@ -47,6 +49,19 @@ https://AlignmentLab.ai

|

|

| 47 |

We use [Language Model Evaluation Harness](https://github.com/EleutherAI/lm-evaluation-harness) to run the benchmark tests above, using the same version as the HuggingFace LLM Leaderboard. Please see below for detailed instructions on reproducing benchmark results.

|

| 48 |

|

| 49 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 50 |

# Model Details

|

| 51 |

|

| 52 |

* **Trained by**: **Platypus2-13B** trained by Cole Hunter & Ariel Lee; **OpenOrcaxOpenChat-Preview2-13B** trained by Open-Orca

|

|

@@ -87,7 +102,7 @@ Please see our [paper](https://platypus-llm.github.io/Platypus.pdf) and [project

|

|

| 87 |

For training details and inference instructions please see the [Platypus](https://github.com/arielnlee/Platypus) GitHub repo.

|

| 88 |

|

| 89 |

|

| 90 |

-

# Reproducing Evaluation Results

|

| 91 |

|

| 92 |

Install LM Evaluation Harness:

|

| 93 |

```

|

|

|

|

| 34 |

|

| 35 |

# Evaluation

|

| 36 |

|

| 37 |

+

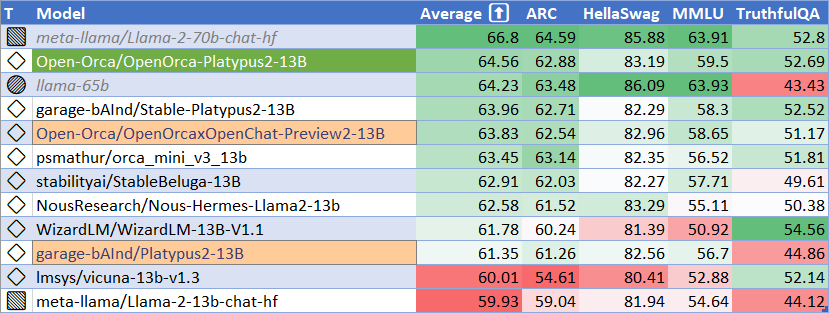

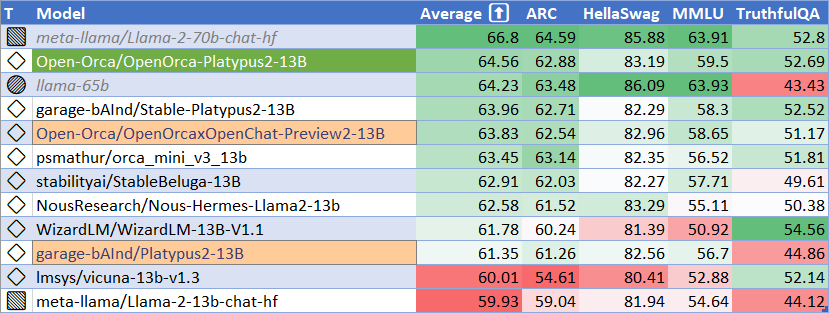

## HuggingFace Leaderboard Performance

|

| 38 |

+

|

| 39 |

|

| 40 |

|

| 41 |

| Metric | Value |

|

|

|

|

| 49 |

We use [Language Model Evaluation Harness](https://github.com/EleutherAI/lm-evaluation-harness) to run the benchmark tests above, using the same version as the HuggingFace LLM Leaderboard. Please see below for detailed instructions on reproducing benchmark results.

|

| 50 |

|

| 51 |

|

| 52 |

+

## AGIEval Performance

|

| 53 |

+

|

| 54 |

+

We compare our results to our base Preview2 model, and find **112%** of the base model's performance on AGI Eval, averaging **0.463**.

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

## BigBench-Hard Performance

|

| 59 |

+

|

| 60 |

+

We compare our results to our base Preview2 model, and find **105%** of the base model's performance on BigBench-Hard, averaging **0.442**.

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

|

| 65 |

# Model Details

|

| 66 |

|

| 67 |

* **Trained by**: **Platypus2-13B** trained by Cole Hunter & Ariel Lee; **OpenOrcaxOpenChat-Preview2-13B** trained by Open-Orca

|

|

|

|

| 102 |

For training details and inference instructions please see the [Platypus](https://github.com/arielnlee/Platypus) GitHub repo.

|

| 103 |

|

| 104 |

|

| 105 |

+

# Reproducing Evaluation Results (for HuggingFace Leaderboard Eval)

|

| 106 |

|

| 107 |

Install LM Evaluation Harness:

|

| 108 |

```

|