---
license: apache-2.0
datasets:
- ETDataset/ett
language:
- en
metrics:
- mse
- mae
library_name: transformers
pipeline_tag: time-series-forecasting
tags:
- Time-series
- foundation-model
- forecasting
---

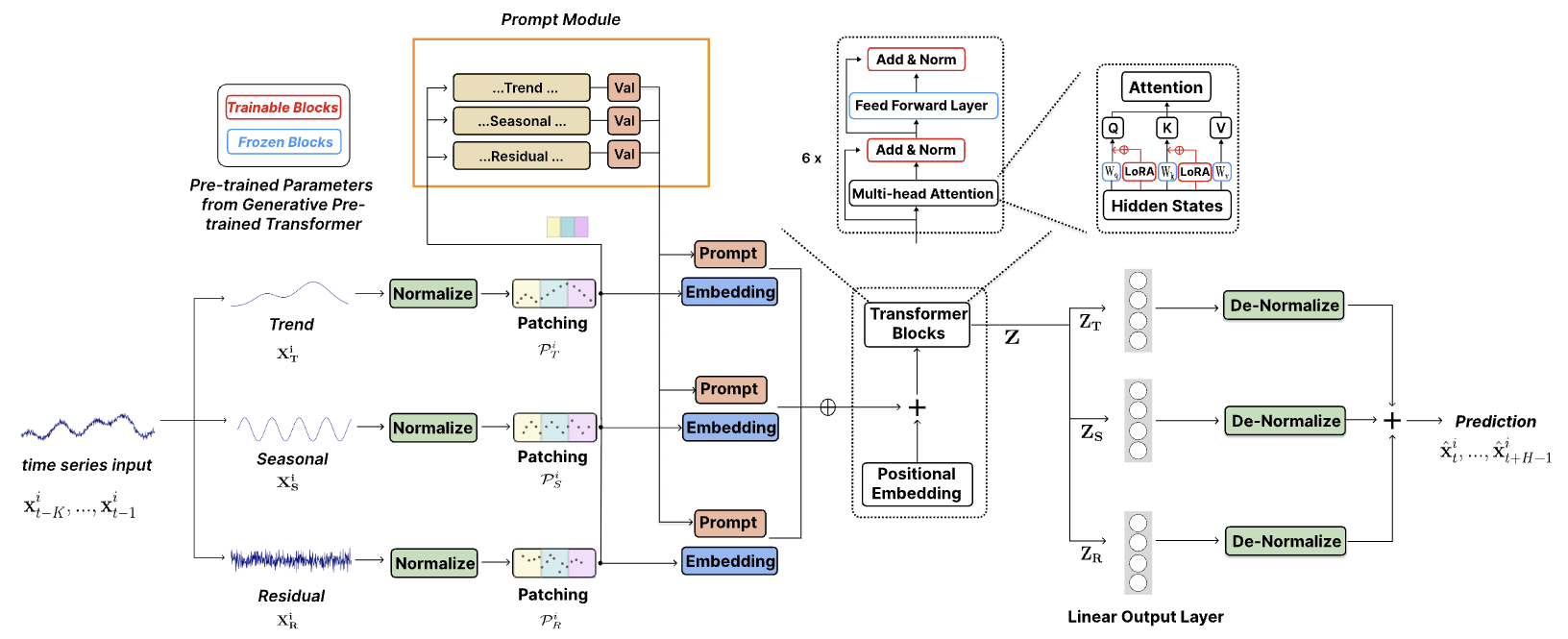

TEMPO: Prompt-based Generative Pre-trained Transformer for Time Series Forecasting

This is the official code for the ICLR 2024 paper "TEMPO: Prompt-based Generative Pre-trained Transformer for Time Series Forecasting".

TEMPO (v1.0) is one of the first open-source time series foundation models for forecasting.

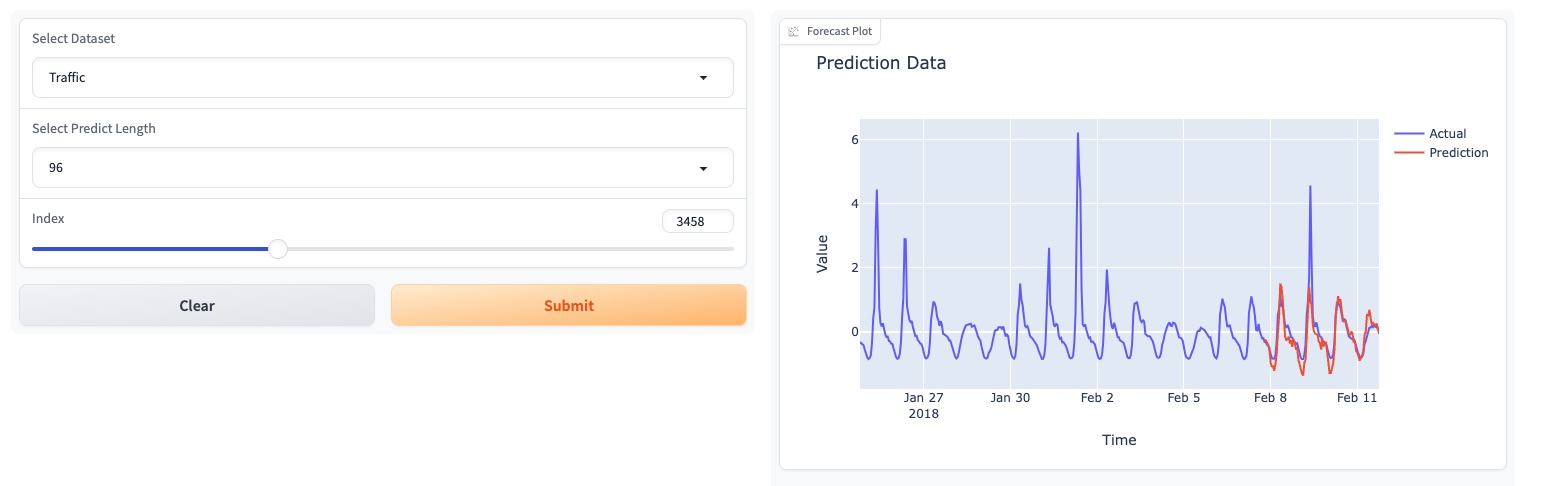

Please try our foundation model demo [here].

Build the environment

conda create -n tempo python=3.8

conda activate tempo

pip install -r requirements.txt

Get Data

Download the data from [Google Drive] or [Baidu Drive] and place it in the ./dataset folder. You can also download the STL results from [Google Drive] and place them in the ./stl folder.
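STL (Seasonal-Trend decomposition using Loess) splits a series into trend, seasonal, and residual components, and the files in ./stl hold precomputed decompositions. The sketch below does not reproduce STL or this repository's preprocessing. It uses a simpler classical additive decomposition (moving-average trend, per-phase seasonal means) only to show what the three components are. The function name `additive_decompose` and the period of 24 (hourly data with a daily cycle) are assumptions for illustration.

```python
import numpy as np


def additive_decompose(x, period):
    """Split a 1-D series into trend + seasonal + residual.

    Classical additive decomposition, used here as a simplified stand-in
    for STL (it does NOT use Loess smoothing or robustness iterations).
    """
    x = np.asarray(x, dtype=float)
    n = x.shape[0]
    if period < 2 or n < 2 * period:
        raise ValueError("need period >= 2 and at least two full periods")

    # Trend: centered moving average over 2 * (period // 2) + 1 points.
    # Near the edges the window is truncated so the trend keeps length n.
    half = period // 2
    trend = np.array([x[max(0, t - half):min(n, t + half + 1)].mean() for t in range(n)])

    # Seasonal: mean detrended value at each phase of the cycle, centered
    # so that one full period of the seasonal component sums to zero.
    detrended = x - trend
    phase_means = np.array([detrended[p::period].mean() for p in range(period)])
    phase_means -= phase_means.mean()
    seasonal = phase_means[np.arange(n) % period]

    # Residual: whatever the trend and seasonal parts do not explain.
    resid = x - trend - seasonal
    return trend, seasonal, resid


if __name__ == "__main__":
    # Synthetic hourly series: linear trend + daily cycle + noise.
    t = np.arange(24 * 14)
    rng = np.random.default_rng(0)
    series = 0.01 * t + np.sin(2 * np.pi * t / 24) + 0.1 * rng.standard_normal(t.size)
    trend, seasonal, resid = additive_decompose(series, period=24)
    print(np.allclose(trend + seasonal + resid, series))  # True
```

If statsmodels is installed, `statsmodels.tsa.seasonal.STL(series, period=24).fit()` returns an object with `trend`, `seasonal`, and `resid` attributes and gives an actual STL decomposition in place of this simplified one.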

Run TEMPO

Training Stage

Run the script for the dataset you want (one of ecl, etth1, etth2, ettm1, ettm2, traffic, weather), for example:

bash etth1.sh

Test

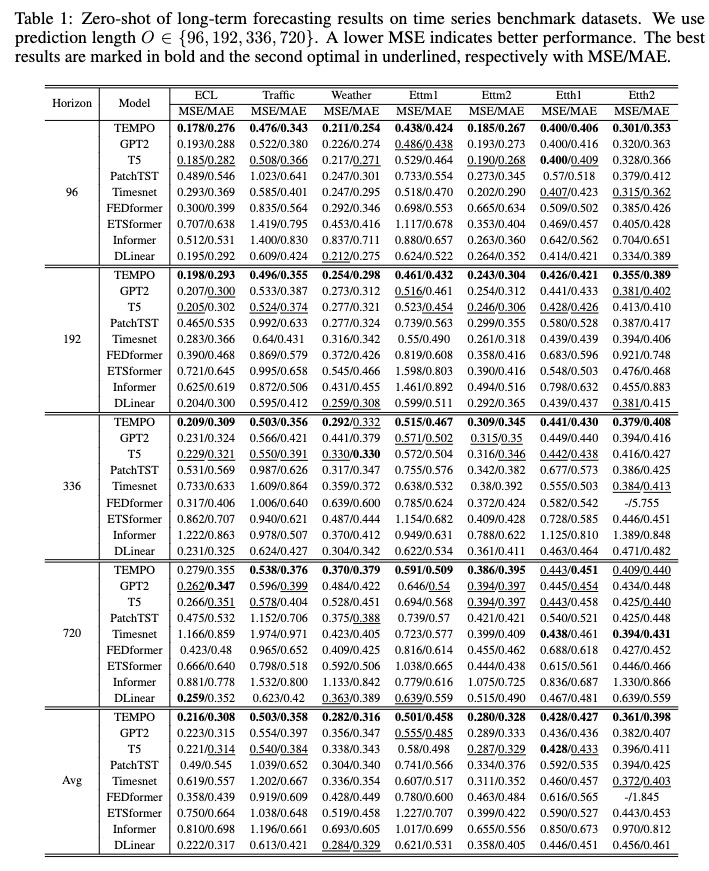

After training, you can test the TEMPO model in the zero-shot setting with the matching test script (one of ecl, etth1, etth2, ettm1, ettm2, traffic, weather), for example:

bash etth1_test.sh
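The card's metadata lists MSE and MAE as the evaluation metrics. As a minimal sketch, assuming forecasts and ground truth are arrays of identical shape (for example windows × horizon × channels), the two metrics can be computed as below. Whether the test scripts evaluate on normalized or raw values is not stated here, so the helper names and shapes are assumptions, not the repository's code.

```python
import numpy as np


def _as_pair(pred, target):
    """Convert inputs to float arrays and check they line up point for point."""
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    if pred.shape != target.shape:
        raise ValueError(f"shape mismatch: {pred.shape} vs {target.shape}")
    return pred, target


def mse(pred, target):
    """Mean squared error, averaged over every forecast point and channel."""
    pred, target = _as_pair(pred, target)
    return float(np.mean((pred - target) ** 2))


def mae(pred, target):
    """Mean absolute error, averaged over every forecast point and channel."""
    pred, target = _as_pair(pred, target)
    return float(np.mean(np.abs(pred - target)))


if __name__ == "__main__":
    # Hypothetical batch: 2 windows x horizon 3 x 1 channel.
    target = np.array([[[1.0], [2.0], [3.0]], [[0.0], [0.0], [0.0]]])
    pred = target + 0.5  # every point is off by exactly 0.5
    print(mse(pred, target), mae(pred, target))  # 0.25 0.5
```

MSE penalizes large errors more heavily than MAE, which is why forecasting benchmarks usually report both.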

Pre-trained Models

You can download the pre-trained model from [Google Drive] and then run the test scripts to try it out.

Multi-modality dataset: TETS dataset

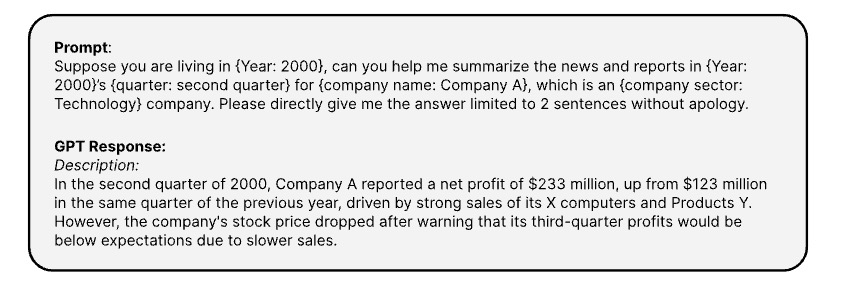

Here is the prompt used to generate the corresponding textual information for the time series via the [OpenAI ChatGPT-3.5 API]:

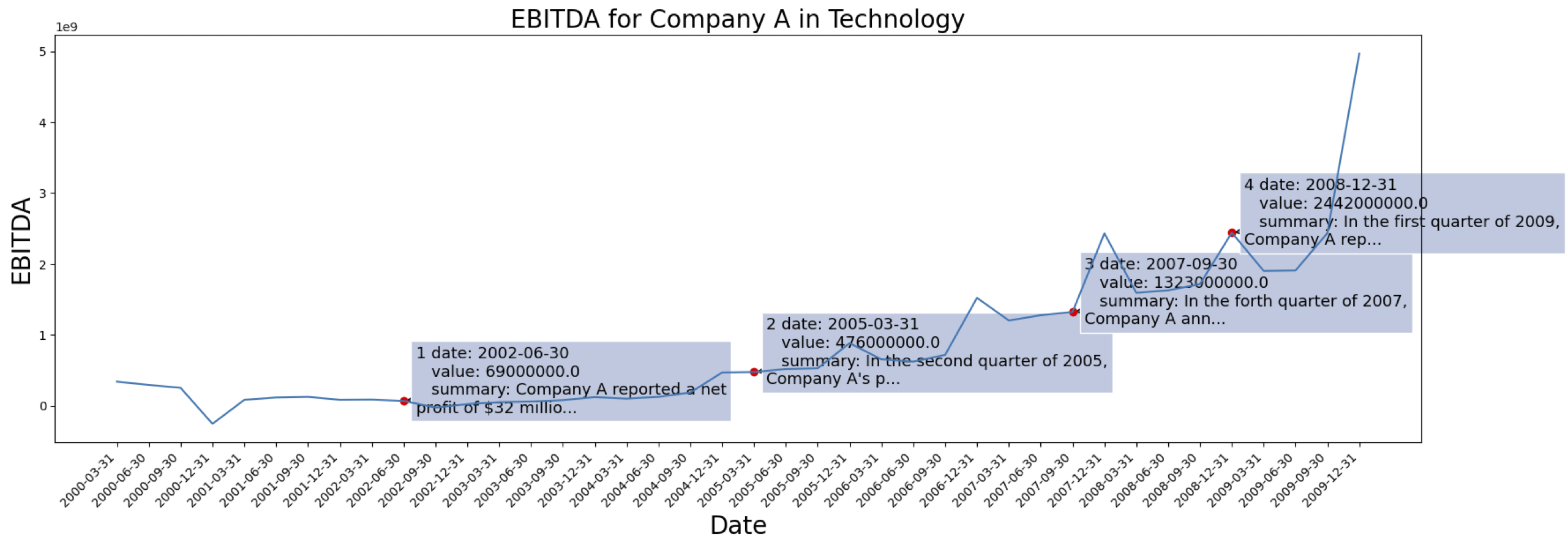

The time series data come from the [S&P 500]. Here is the EBITDA case for one company from the dataset:

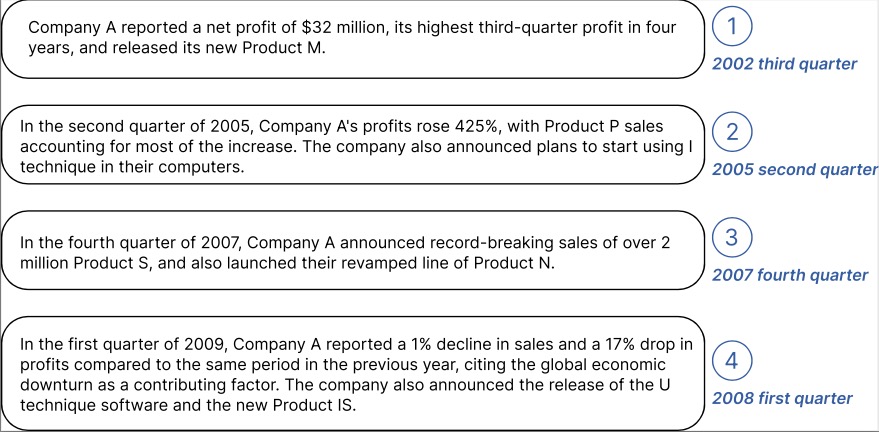

Example of generated contextual information for the company shown above:

You can download the processed data, including text embeddings from GPT-2, from [TETS].

Cite

@inproceedings{cao2024tempo,
  title={{TEMPO}: Prompt-based Generative Pre-trained Transformer for Time Series Forecasting},
  author={Defu Cao and Furong Jia and Sercan O Arik and Tomas Pfister and Yixiang Zheng and Wen Ye and Yan Liu},
  booktitle={The Twelfth International Conference on Learning Representations},
  year={2024},
  url={https://openreview.net/forum?id=YH5w12OUuU}
}