base_model: mistralai/Mixtral-8x7B-Instruct-v0.1

license: apache-2.0

Introduction

Cerebrum 8x7b is a large language model (LLM) created specifically for reasoning tasks. It is based on the Mixtral 8x7b model. Like its smaller counterpart, Cerebrum 7b, it is fine-tuned on a small custom dataset of native chain of thought data and further improved with targeted RLHF (tRLHF), a novel technique for sample-efficient LLM alignment. Unlike many other recent fine-tuning approaches, our training pipeline uses fewer than 5000 training prompts and even fewer labeled datapoints for tRLHF.

The native chain of thought approach means that Cerebrum is trained to devise a tactical plan before tackling problems that require thinking. For brainstorming, knowledge-intensive, and creative tasks, Cerebrum will typically omit unnecessarily verbose considerations.

Cerebrum 8x7b offers performance competitive with Gemini 1.0 Pro and GPT-3.5 Turbo on a range of tasks that require reasoning.

Benchmarking

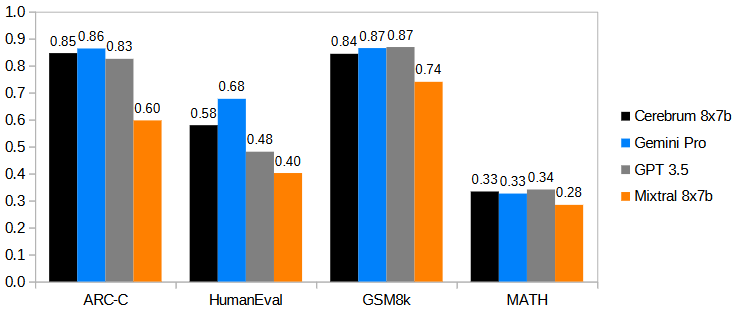

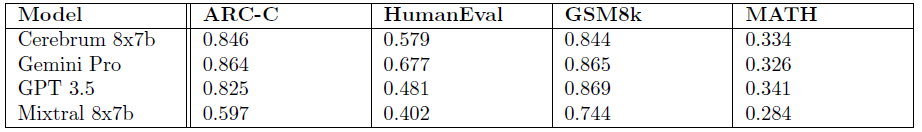

An overview of Cerebrum 8x7b performance compared to Gemini 1.0 Pro, GPT-3.5 and Mixtral 8x7b on selected benchmarks:

Evaluation details:

- ARC-C: all models evaluated zero-shot. Gemini 1.0 Pro and GPT-3.5 (gpt-3.5-turbo-0125) were evaluated via API; the reported number is used for Mixtral 8x7b.

- HumanEval: all models evaluated zero-shot; reported numbers are used.

- GSM8k: Cerebrum, GPT-3.5, and Mixtral 8x7b evaluated with maj@8; Gemini evaluated with maj@32. GPT-3.5 (gpt-3.5-turbo-0125) was evaluated via API; reported numbers are used for Gemini 1.0 Pro and Mixtral 8x7b.

- MATH: Cerebrum evaluated zero-shot; GPT-3.5 and Gemini evaluated 4-shot; Mixtral 8x7b evaluated with maj@4. Reported numbers are used.
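The maj@k settings above mean that k completions are sampled for each problem and the most frequent final answer is the one that gets scored. Below is a minimal sketch of that voting step. It is not the evaluation harness used for these numbers: the `majority_vote` helper and the sample answers are hypothetical, and extracting a final answer from each completion is left out.

```python
from collections import Counter

def majority_vote(answers):
    """Return the most frequent answer among k sampled answers (maj@k).

    Ties are broken in favor of the answer that was seen first.
    """
    if not answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(answers)
    # most_common keeps first-seen order among equal counts (Python 3.7+).
    return counts.most_common(1)[0][0]

# Hypothetical final answers extracted from 8 sampled completions (maj@8).
sampled = ["18", "18", "20", "18", "17", "18", "20", "18"]
print(majority_vote(sampled))  # 18
```

Voting requires sampled (non-greedy) completions, since k greedy runs would all return the same answer.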

Usage

For optimal performance, Cerebrum should be prompted with an Alpaca-style template that requests the description of the "thought process". Here is what a conversation should look like from the model's point of view:

<s>A chat between a user and a thinking artificial intelligence assistant. The assistant describes its thought process and gives helpful and detailed answers to the user's questions.

User: Are you conscious?

AI:

This prompt is also available as a chat template. Here is how you could use it:

import torch

# Assumes `tokenizer` and `model` have already been loaded for this checkpoint.
messages = [
    {'role': 'user', 'content': 'What is self-consistency decoding?'},
    {'role': 'assistant', 'content': 'Self-consistency decoding is a technique used in natural language processing to improve the performance of language models. It works by generating multiple outputs for a given input and then selecting the most consistent output based on a set of criteria.'},
    {'role': 'user', 'content': 'Why does self-consistency work?'}
]

# Build the prompt with the bundled chat template, ending at the assistant turn.
input_ids = tokenizer.apply_chat_template(messages, add_generation_prompt=True, return_tensors='pt')

# Greedy decoding; gradients are not needed for inference.
with torch.no_grad():
    out = model.generate(input_ids=input_ids, max_new_tokens=100, do_sample=False)

The model ends its turn by generating the EOS token. Importantly, this token should be removed from the model answer in a multi-turn dialogue.
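One way to drop the EOS token before adding an answer to the conversation history is sketched below. It works on plain lists of token ids, so it runs without a model. The helper names and the toy ids are made up for illustration, and `2` only stands in for the real EOS id, which should be read from the tokenizer.

```python
def strip_trailing_eos(token_ids, eos_token_id):
    """Drop any EOS tokens at the end of a generated answer."""
    end = len(token_ids)
    while end > 0 and token_ids[end - 1] == eos_token_id:
        end -= 1
    return token_ids[:end]

def extract_answer_ids(output_ids, prompt_length, eos_token_id):
    """Slice off the prompt, then remove the trailing EOS token."""
    return strip_trailing_eos(output_ids[prompt_length:], eos_token_id)

# Toy example with made-up token ids; 2 stands in for the EOS id.
prompt = [1, 733, 16289]
generated = prompt + [4903, 349, 264, 2]
answer_ids = extract_answer_ids(generated, len(prompt), eos_token_id=2)
print(answer_ids)  # [4903, 349, 264]
```

With a real tokenizer, decoding the answer ids with `skip_special_tokens=True` is another common way to get the answer text without the EOS token. The cleaned text can then be appended to `messages` as the next `assistant` turn.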

Cerebrum can be operated at very low temperatures (and specifically temperature 0), which improves performance on tasks that require precise answers. The alignment should be sufficient to avoid repetitions in most cases without a repetition penalty.
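To illustrate what lowering the temperature does, the sketch below applies a temperature-scaled softmax to a few made-up next-token scores. As the temperature shrinks, the probability mass concentrates on the highest-scoring token, and temperature 0 corresponds to always picking that token (greedy decoding). The function names and logits are illustrative assumptions, not part of the model's code.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert logits to sampling probabilities at a given temperature > 0."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; use argmax for temperature 0")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_choice(logits):
    """Index of the largest logit: the temperature-0 limit of sampling."""
    return max(range(len(logits)), key=lambda i: logits[i])

logits = [2.0, 1.0, 0.5]  # made-up next-token scores
for t in (1.0, 0.5, 0.05):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
print("greedy pick:", greedy_choice(logits))
```

In the `generate` call shown earlier, `do_sample=False` selects this greedy behavior directly, so no temperature value needs to be passed.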