34d8b59e470cb8bb29febe9216fd3aad5fab95fd4ca35aa41b47b4eb754cdc8b

- extensions/microsoftexcel-supermerger/elemental_en.md +118 -0

- extensions/microsoftexcel-supermerger/elemental_ja.md +119 -0

- extensions/microsoftexcel-supermerger/install.py +7 -0

- extensions/microsoftexcel-supermerger/sample.txt +95 -0

- extensions/microsoftexcel-supermerger/scripts/__pycache__/supermerger.cpython-310.pyc +0 -0

- extensions/microsoftexcel-supermerger/scripts/mbwpresets.txt +39 -0

- extensions/microsoftexcel-supermerger/scripts/mbwpresets_master.txt +39 -0

- extensions/microsoftexcel-supermerger/scripts/mergers/__pycache__/mergers.cpython-310.pyc +0 -0

- extensions/microsoftexcel-supermerger/scripts/mergers/__pycache__/model_util.cpython-310.pyc +0 -0

- extensions/microsoftexcel-supermerger/scripts/mergers/__pycache__/pluslora.cpython-310.pyc +0 -0

- extensions/microsoftexcel-supermerger/scripts/mergers/__pycache__/xyplot.cpython-310.pyc +0 -0

- extensions/microsoftexcel-supermerger/scripts/mergers/mergers.py +699 -0

- extensions/microsoftexcel-supermerger/scripts/mergers/model_util.py +928 -0

- extensions/microsoftexcel-supermerger/scripts/mergers/pluslora.py +1298 -0

- extensions/microsoftexcel-supermerger/scripts/mergers/xyplot.py +513 -0

- extensions/microsoftexcel-supermerger/scripts/supermerger.py +552 -0

- extensions/microsoftexcel-tunnels/.gitignore +176 -0

- extensions/microsoftexcel-tunnels/.pre-commit-config.yaml +25 -0

- extensions/microsoftexcel-tunnels/LICENSE.md +22 -0

- extensions/microsoftexcel-tunnels/README.md +21 -0

- extensions/microsoftexcel-tunnels/__pycache__/preload.cpython-310.pyc +0 -0

- extensions/microsoftexcel-tunnels/install.py +4 -0

- extensions/microsoftexcel-tunnels/preload.py +21 -0

- extensions/microsoftexcel-tunnels/pyproject.toml +25 -0

- extensions/microsoftexcel-tunnels/scripts/__pycache__/ssh_tunnel.cpython-310.pyc +0 -0

- extensions/microsoftexcel-tunnels/scripts/__pycache__/try_cloudflare.cpython-310.pyc +0 -0

- extensions/microsoftexcel-tunnels/scripts/ssh_tunnel.py +81 -0

- extensions/microsoftexcel-tunnels/scripts/try_cloudflare.py +15 -0

- extensions/microsoftexcel-tunnels/ssh_tunnel.py +86 -0

- extensions/put extensions here.txt +0 -0

- extensions/sd-webui-lora-block-weight/README.md +350 -0

- extensions/sd-webui-lora-block-weight/scripts/Roboto-Regular.ttf +0 -0

- extensions/sd-webui-lora-block-weight/scripts/__pycache__/lora_block_weight.cpython-310.pyc +0 -0

- extensions/sd-webui-lora-block-weight/scripts/elempresets.txt +7 -0

- extensions/sd-webui-lora-block-weight/scripts/lbwpresets.txt +10 -0

- extensions/sd-webui-lora-block-weight/scripts/lora_block_weight.py +744 -0

- extensions/stable-diffusion-webui-composable-lora/.gitignore +129 -0

- extensions/stable-diffusion-webui-composable-lora/LICENSE +21 -0

- extensions/stable-diffusion-webui-composable-lora/README.md +25 -0

- extensions/stable-diffusion-webui-composable-lora/__pycache__/composable_lora.cpython-310.pyc +0 -0

- extensions/stable-diffusion-webui-composable-lora/composable_lora.py +165 -0

- extensions/stable-diffusion-webui-composable-lora/scripts/__pycache__/composable_lora_script.cpython-310.pyc +0 -0

- extensions/stable-diffusion-webui-composable-lora/scripts/composable_lora_script.py +57 -0

- extensions/stable-diffusion-webui-images-browser/.gitignore +6 -0

- extensions/stable-diffusion-webui-images-browser/README.md +61 -0

- extensions/stable-diffusion-webui-images-browser/install.py +9 -0

- extensions/stable-diffusion-webui-images-browser/javascript/image_browser.js +770 -0

- extensions/stable-diffusion-webui-images-browser/req_IR.txt +1 -0

- extensions/stable-diffusion-webui-images-browser/scripts/__pycache__/image_browser.cpython-310.pyc +0 -0

- extensions/stable-diffusion-webui-images-browser/scripts/image_browser.py +1717 -0

extensions/microsoftexcel-supermerger/elemental_en.md

ADDED

|

@@ -0,0 +1,118 @@


| 1 |

+

# Elemental Merge

|

| 2 |

+

- A merge that goes beyond block-by-block merging.

|

| 3 |

+

|

| 4 |

+

In a block-by-block merge, the merge ratio can be changed for each of the 25 blocks. Each block, however, consists of multiple elements, and in principle the ratio can be changed for each element. There are more than 600 elements, so it was doubtful whether this could be managed by hand, but we implemented it anyway. Starting with element-by-element merging is not recommended; use it as a final adjustment when a problem cannot be solved by block-by-block merging.

|

| 5 |

+

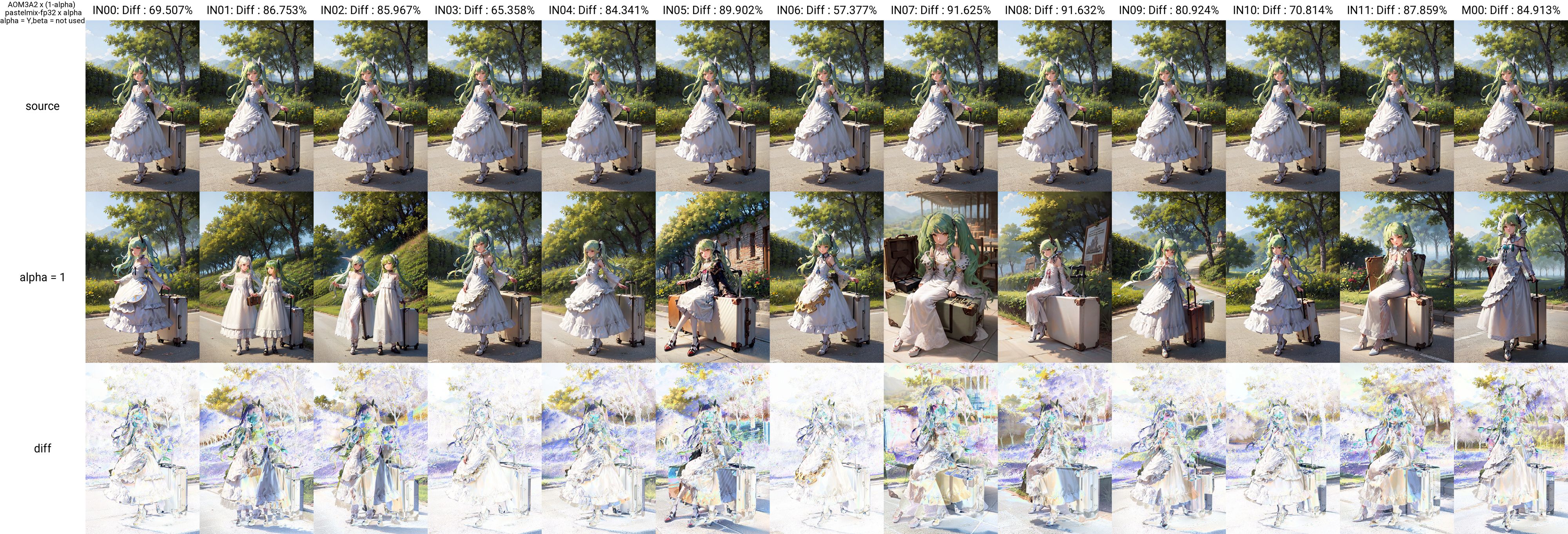

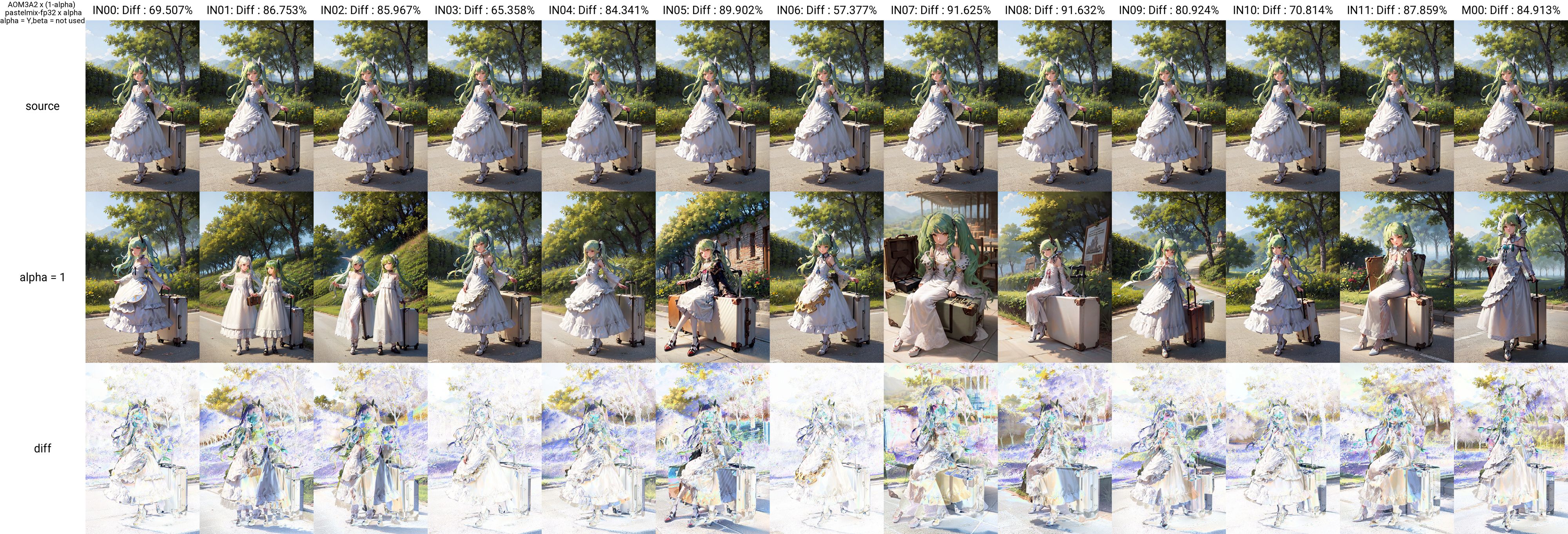

The images show the result of changing elements in the OUT05 layer. The leftmost image is without merging, the second changes the whole OUT05 layer (i.e., a normal block-by-block merge), and the rest are element merges. As the table below shows, attn2 and similar entries contain several further elements.

|

| 6 |

+

|

| 7 |

+

## Usage

|

| 8 |

+

Note that elemental merging applies to both normal and block-by-block merges and is computed last, so it overwrites any values specified for block-by-block merging.

|

| 9 |

+

|

| 10 |

+

Enter the settings in the Elemental Merge field. Note that any text entered here is applied automatically. The elements are listed in the table below, but you do not need to enter the full name of an element.

|

| 11 |

+

You can check whether the settings take effect by enabling the "print change" checkbox. When it is enabled, the applied elements are printed to the command prompt during the merge.

|

| 12 |

+

|

| 13 |

+

### Format

|

| 14 |

+

Blocks:Element:Ratio, Blocks:Element:Ratio,...

|

| 15 |

+

or

|

| 16 |

+

Blocks:Element:Ratio

|

| 17 |

+

Blocks:Element:Ratio

|

| 18 |

+

Blocks:Element:Ratio

|

| 19 |

+

|

| 20 |

+

Multiple specifications can be given by separating them with commas or newlines. Commas and newlines may be mixed.
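As a rough illustration of the separator rule, the following Python sketch splits an input string on commas and newlines and then into its three colon-separated fields. The function name `split_specs` is hypothetical, and this is not the extension's own parser.

```python
import re


def split_specs(text):
    """Split elemental-merge text into (blocks, elements, ratio) tuples.

    Hypothetical helper for illustration; not taken from the extension.
    """
    specs = []
    # Commas and newlines are interchangeable separators and may be mixed.
    for item in re.split(r"[,\n]", text):
        item = item.strip()
        if not item:
            continue  # skip empty pieces such as the gap after ",\n"
        blocks, elements, ratio = item.split(":")
        # Blocks and elements are space-separated lists; blank means "all".
        specs.append((blocks.split(), elements.split(), float(ratio)))
    return specs


print(split_specs("OUT03 OUT04:attn2:0.5,\nOUT06::0.2"))
```

A blank field comes back as an empty list, which a caller could treat as "applies to everything".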

|

| 21 |

+

Blocks are specified in uppercase: BASE, IN00 to IN11, M00, and OUT00 to OUT11. If left blank, the specification applies to all blocks. Multiple blocks can be specified by separating them with spaces.

|

| 22 |

+

Similarly, multiple elements can be specified by separating them with a space.

|

| 23 |

+

Partial matching is used, so for example, typing "attn" will change both attn1 and attn2, and typing "attn2" will change only attn2. If you want to specify more details, enter "attn2.to_out" and so on.

|

| 24 |

+

|

| 25 |

+

OUT03 OUT04 OUT05:attn2 attn1.to_out:0.5

|

| 26 |

+

|

| 27 |

+

With this input, the ratio of elements containing attn2 and attn1.to_out in the OUT03, OUT04, and OUT05 layers is set to 0.5.

|

| 28 |

+

If the element column is left blank, all elements in the specified Blocks will change, and the effect will be the same as a block-by-block merge.

|

| 29 |

+

If there are duplicate specifications, the one entered later takes precedence.

|

| 30 |

+

|

| 31 |

+

OUT06:attn:0.5,OUT06:attn2.to_k:0.2

|

| 32 |

+

|

| 33 |

+

If this is entered, every attn element in the OUT06 layer other than attn2.to_k is set to 0.5, and only attn2.to_k is set to 0.2.
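The partial-match and "later entry wins" rules can be sketched in a few lines of Python. This is only an illustration under assumptions: `resolve_ratio` and the `default` ratio are hypothetical names, and the extension's real logic may differ.

```python
def resolve_ratio(key, rules, default):
    """Return the ratio for one weight name.

    `rules` is a list of (pattern, ratio) pairs in input order. A pattern
    matches when it is a substring of `key`; the last match wins.
    Hypothetical helper, not the extension's implementation.
    """
    ratio = default
    for pattern, value in rules:
        if pattern in key:
            ratio = value  # later rules overwrite earlier ones
    return ratio


# Mirrors the example OUT06:attn:0.5,OUT06:attn2.to_k:0.2 for one block.
rules = [("attn", 0.5), ("attn2.to_k", 0.2)]
for key in (
    "transformer_blocks.0.attn1.to_q.weight",
    "transformer_blocks.0.attn2.to_k.weight",
    "transformer_blocks.0.norm1.weight",
):
    print(key, resolve_ratio(key, rules, default=1.0))
```

Here `default` stands in for whatever ratio the block would have had without an elemental entry.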

|

| 34 |

+

|

| 35 |

+

You can invert the selection by entering NOT first.

|

| 36 |

+

NOT can be applied to the blocks and to the elements separately.

|

| 37 |

+

|

| 38 |

+

NOT OUT04:attn:1

|

| 39 |

+

|

| 40 |

+

This sets the ratio of attn to 1 in all blocks except the OUT04 layer.

|

| 41 |

+

|

| 42 |

+

OUT05:NOT attn proj:0.2

|

| 43 |

+

|

| 44 |

+

This sets everything in the OUT05 layer except attn and proj to 0.2.
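One possible reading of the NOT rule, written as a Python sketch. The helper names are hypothetical, and two details are assumptions rather than documented behavior: blocks are compared exactly while elements use partial matching, and a blank field selects everything.

```python
def selects(field, name, partial):
    """Check whether one field of a spec selects `name`.

    `field` is the raw Blocks or Element text, e.g. "OUT04", "NOT OUT04"
    or "NOT attn proj". Hypothetical helper for illustration only.
    """
    tokens = field.split()
    inverted = bool(tokens) and tokens[0] == "NOT"
    if inverted:
        tokens = tokens[1:]
    if not tokens:
        hit = True  # assumption: a blank field selects everything
    elif partial:
        hit = any(token in name for token in tokens)  # substring match
    else:
        hit = name in tokens  # assumption: blocks must match exactly
    return hit != inverted  # NOT flips the result


def spec_applies(spec, block, element):
    """Return True if a Blocks:Element:Ratio spec covers this weight."""
    blocks_field, elements_field, _ratio = spec.split(":")
    return (selects(blocks_field, block, partial=False)
            and selects(elements_field, element, partial=True))


print(spec_applies("NOT OUT04:attn:1", "OUT05", "transformer_blocks.0.attn1.to_q.weight"))
print(spec_applies("OUT05:NOT attn proj:0.2", "OUT05", "proj_in.weight"))
```

The first call covers an attn weight outside OUT04; the second excludes a proj weight inside OUT05.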

|

| 45 |

+

|

| 46 |

+

## XY plot

|

| 47 |

+

Several XY plots for elemental merge are available. Input examples can be found in sample.txt.

|

| 48 |

+

#### elemental

|

| 49 |

+

Creates XY plots for multiple elemental merges. Elements should be separated from each other by blank lines.

|

| 50 |

+

The following image is the result of executing sample1 of sample.txt.
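The blank-line convention for this plot type might be handled along the following lines. This is a minimal sketch with a hypothetical function name, and it deliberately ignores the leading-blank-line convention noted in sample.txt.

```python
import re


def split_groups(text):
    """Split XY-plot input into groups separated by blank lines.

    Hypothetical helper; each returned group is one elemental merge
    setting, which may itself contain commas or single newlines.
    """
    groups = re.split(r"\n\s*\n", text.strip())
    return [group.strip() for group in groups if group.strip()]


xy_input = """OUT05::1

OUT05:attn1:1

OUT05:attn2:1
"""
print(split_groups(xy_input))
```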

|

| 51 |

+

|

| 52 |

+

#### pinpoint element

|

| 53 |

+

Creates an XY plot with different values for a specific element. This does for elements what Pinpoint Blocks does for blocks. Specify alpha on the opposite axis. Separate elements with a newline or comma.

|

| 54 |

+

The following image shows the result of running sample3 of sample.txt.

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

#### effective elemental checker

|

| 58 |

+

Outputs the influence of each element as a difference. Optionally, an animated gif and a csv file can be output. The gif and csv files are created in a diff folder, inside a folder named after ModelA and ModelB, under the output folder. If file names collide, files are saved under a different name, but this gets confusing as files accumulate, so renaming the diff folder to something suitable is recommended.

|

| 59 |

+

Separate entries with a newline or comma. Use alpha for the opposite axis and enter a single value. This is useful for seeing the effect of an element, but leaving the element unspecified shows the effect of a whole block, and that may be the more common use.

|

| 60 |

+

The following image shows the result of running sample5 of sample.txt.

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

### List of elements

|

| 64 |

+

Broadly, attn appears to carry face and clothing information. The IN07, OUT03, OUT04, and OUT05 layers seem to have a particularly strong influence. Because the degree of influence often differs from block to block, changing the same element in several blocks at once does not seem useful.

|

| 65 |

+

Cells marked null mean that the element does not exist in that block.

|

| 66 |

+

|

| 67 |

+

||IN00|IN01|IN02|IN03|IN04|IN05|IN06|IN07|IN08|IN09|IN10|IN11|M00|M00|OUT00|OUT01|OUT02|OUT03|OUT04|OUT05|OUT06|OUT07|OUT08|OUT09|OUT10|OUT11

|

| 68 |

+

|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|

|

| 69 |

+

op.bias|null|null|null||null|null||null|null||null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null

|

| 70 |

+

op.weight|null|null|null||null|null||null|null||null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null

|

| 71 |

+

emb_layers.1.bias|null|||null|||null|||null|null|||||||||||||||

|

| 72 |

+

emb_layers.1.weight|null|||null|||null|||null|null|||||||||||||||

|

| 73 |

+

in_layers.0.bias|null|||null|||null|||null|null|||||||||||||||

|

| 74 |

+

in_layers.0.weight|null|||null|||null|||null|null|||||||||||||||

|

| 75 |

+

in_layers.2.bias|null|||null|||null|||null|null|||||||||||||||

|

| 76 |

+

in_layers.2.weight|null|||null|||null|||null|null|||||||||||||||

|

| 77 |

+

out_layers.0.bias|null|||null|||null|||null|null|||||||||||||||

|

| 78 |

+

out_layers.0.weight|null|||null|||null|||null|null|||||||||||||||

|

| 79 |

+

out_layers.3.bias|null|||null|||null|||null|null|||||||||||||||

|

| 80 |

+

out_layers.3.weight|null|||null|||null|||null|null|||||||||||||||

|

| 81 |

+

skip_connection.bias|null|null|null|null||null|null||null|null|null|null|null|null||||||||||||

|

| 82 |

+

skip_connection.weight|null|null|null|null||null|null||null|null|null|null|null|null||||||||||||

|

| 83 |

+

norm.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 84 |

+

norm.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 85 |

+

proj_in.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 86 |

+

proj_in.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 87 |

+

proj_out.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 88 |

+

proj_out.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 89 |

+

transformer_blocks.0.attn1.to_k.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 90 |

+

transformer_blocks.0.attn1.to_out.0.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 91 |

+

transformer_blocks.0.attn1.to_out.0.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 92 |

+

transformer_blocks.0.attn1.to_q.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 93 |

+

transformer_blocks.0.attn1.to_v.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 94 |

+

transformer_blocks.0.attn2.to_k.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 95 |

+

transformer_blocks.0.attn2.to_out.0.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 96 |

+

transformer_blocks.0.attn2.to_out.0.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 97 |

+

transformer_blocks.0.attn2.to_q.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 98 |

+

transformer_blocks.0.attn2.to_v.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 99 |

+

transformer_blocks.0.ff.net.0.proj.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 100 |

+

transformer_blocks.0.ff.net.0.proj.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 101 |

+

transformer_blocks.0.ff.net.2.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 102 |

+

transformer_blocks.0.ff.net.2.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 103 |

+

transformer_blocks.0.norm1.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 104 |

+

transformer_blocks.0.norm1.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 105 |

+

transformer_blocks.0.norm2.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 106 |

+

transformer_blocks.0.norm2.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 107 |

+

transformer_blocks.0.norm3.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 108 |

+

transformer_blocks.0.norm3.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 109 |

+

conv.bias|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null||null|null||null|null||null|null|null

|

| 110 |

+

conv.weight|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null||null|null||null|null||null|null|null

|

| 111 |

+

0.bias||null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|

|

| 112 |

+

0.weight||null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|

|

| 113 |

+

2.bias|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|

|

| 114 |

+

2.weight|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|

|

| 115 |

+

time_embed.0.weight||null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|

|

| 116 |

+

time_embed.0.bias||null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|

|

| 117 |

+

time_embed.2.weight||null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|

|

| 118 |

+

time_embed.2.bias||null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|

|

extensions/microsoftexcel-supermerger/elemental_ja.md

ADDED

|

@@ -0,0 +1,119 @@


| 1 |

+

# Elemental Merge

|

| 2 |

+

- A merge that goes beyond block-by-block merging.

|

| 3 |

+

|

| 4 |

+

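In a block-by-block merge, the merge ratio can be changed for each of the 25 blocks. Each block, however, consists of multiple elements, and in principle the ratio can be changed for each element. There are more than 600 elements, so it was doubtful whether this could be managed by hand, but we implemented it anyway. Starting with element-by-element merging is not recommended; use it as a final adjustment when a problem cannot be solved by block-by-block merging.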

|

| 5 |

+

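The following images show the result of changing elements in the OUT05 layer. The leftmost image is without merging, the second changes the whole OUT05 layer (that is, an ordinary block-by-block merge), and the rest are element merges. As the table below shows, attn2 and similar entries contain several further elements.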

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

## Usage

|

| 9 |

+

Note that elemental merging applies to both normal and block-by-block merges and is computed last, so it overwrites any values specified for block-by-block merging.

|

| 10 |

+

|

| 11 |

+

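Enter the settings in the Elemental Merge field. Note that any text entered here is applied automatically. The elements are listed in the table below, but you do not need to enter the full name of an element.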

|

| 12 |

+

You can check whether the settings take effect by enabling the "print change" checkbox. When it is enabled, the applied elements are printed to the command prompt during the merge.

|

| 13 |

+

Elements can be specified by partial match.

|

| 14 |

+

### Format

|

| 15 |

+

Blocks:Element:Ratio,Blocks:Element:Ratio,...

|

| 16 |

+

or

|

| 17 |

+

Blocks:Element:Ratio

|

| 18 |

+

Blocks:Element:Ratio

|

| 19 |

+

Blocks:Element:Ratio

|

| 20 |

+

|

| 21 |

+

Multiple specifications can be given by separating them with commas or newlines. Commas and newlines may be mixed.

|

| 22 |

+

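Blocks are specified in uppercase: BASE, IN00 to IN11, M00, and OUT00 to OUT11. If left blank, the specification applies to all blocks. Multiple blocks can be specified by separating them with spaces.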

|

| 23 |

+

Similarly, multiple elements can be specified by separating them with spaces.

|

| 24 |

+

Matching is partial, so for example entering "attn" changes both attn1 and attn2, while entering "attn2" changes only attn2. To be more specific, enter "attn2.to_out" and so on.

|

| 25 |

+

|

| 26 |

+

OUT03 OUT04 OUT05:attn2 attn1.to_out:0.5

|

| 27 |

+

|

| 28 |

+

With this input, the ratio of elements containing attn2, and of attn1.to_out, in the OUT03, OUT04, and OUT05 layers becomes 0.5.

|

| 29 |

+

If the element field is left blank, all elements in the specified blocks change, and the effect is the same as a block-by-block merge.

|

| 30 |

+

If specifications overlap, the one entered later takes precedence.

|

| 31 |

+

|

| 32 |

+

OUT06:attn:0.5,OUT06:attn2.to_k:0.2

|

| 33 |

+

|

| 34 |

+

If this is entered, every attn element in the OUT06 layer other than attn2.to_k becomes 0.5, and only attn2.to_k becomes 0.2.

|

| 35 |

+

|

| 36 |

+

You can invert the selection by entering NOT first.

|

| 37 |

+

NOT can be applied to the blocks and to the elements separately.

|

| 38 |

+

|

| 39 |

+

NOT OUT04:attn:1

|

| 40 |

+

|

| 41 |

+

This sets the ratio of attn to 1 in every layer except OUT04.

|

| 42 |

+

|

| 43 |

+

OUT05:NOT attn proj:0.2

|

| 44 |

+

|

| 45 |

+

This sets everything in the OUT05 layer except attn and proj to 0.2.

|

| 46 |

+

|

| 47 |

+

## XY plot

|

| 48 |

+

Several XY plots for elemental merge are available. Input examples can be found in sample.txt.

|

| 49 |

+

#### elemental

|

| 50 |

+

Creates XY plots for multiple elemental merges. Separate the entries from each other with blank lines.

|

| 51 |

+

The image at the top is the result of running sample1 of sample.txt.

|

| 52 |

+

|

| 53 |

+

#### pinpoint element

|

| 54 |

+

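Creates an XY plot with different values for a specific element. This does for elements what pinpoint Blocks does for blocks. Specify alpha on the opposite axis. Separate elements with a newline or comma.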

|

| 55 |

+

The following image shows the result of running sample3 of sample.txt.

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

#### effective elemental checker

|

| 59 |

+

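Outputs the influence of each element as a difference. Optionally, an animated gif and a csv file can be output. The gif and csv files are created in a diff folder, inside a folder named after ModelA and ModelB, under the output folder. If file names collide, files are saved under a different name, but this gets confusing as files accumulate, so renaming the diff folder to something suitable is recommended.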

|

| 60 |

+

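Separate entries with a newline or comma. Use alpha for the opposite axis and enter a single value. This is useful for seeing the effect of an element, but leaving the element unspecified shows the effect of a whole block, and that may be the more common use.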

|

| 61 |

+

The following image shows the result of running sample5 of sample.txt.

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

### List of elements

|

| 65 |

+

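Broadly, attn appears to carry face and clothing information. The IN07, OUT03, OUT04, and OUT05 layers seem to have a particularly strong influence. Because the degree of influence often differs from block to block, changing the same element in several layers at once does not seem useful.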

|

| 66 |

+

Cells marked null mean that the element does not exist in that block.

|

| 67 |

+

|

| 68 |

+

||IN00|IN01|IN02|IN03|IN04|IN05|IN06|IN07|IN08|IN09|IN10|IN11|M00|M00|OUT00|OUT01|OUT02|OUT03|OUT04|OUT05|OUT06|OUT07|OUT08|OUT09|OUT10|OUT11

|

| 69 |

+

|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|-|

|

| 70 |

+

op.bias|null|null|null||null|null||null|null||null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null

|

| 71 |

+

op.weight|null|null|null||null|null||null|null||null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null

|

| 72 |

+

emb_layers.1.bias|null|||null|||null|||null|null|||||||||||||||

|

| 73 |

+

emb_layers.1.weight|null|||null|||null|||null|null|||||||||||||||

|

| 74 |

+

in_layers.0.bias|null|||null|||null|||null|null|||||||||||||||

|

| 75 |

+

in_layers.0.weight|null|||null|||null|||null|null|||||||||||||||

|

| 76 |

+

in_layers.2.bias|null|||null|||null|||null|null|||||||||||||||

|

| 77 |

+

in_layers.2.weight|null|||null|||null|||null|null|||||||||||||||

|

| 78 |

+

out_layers.0.bias|null|||null|||null|||null|null|||||||||||||||

|

| 79 |

+

out_layers.0.weight|null|||null|||null|||null|null|||||||||||||||

|

| 80 |

+

out_layers.3.bias|null|||null|||null|||null|null|||||||||||||||

|

| 81 |

+

out_layers.3.weight|null|||null|||null|||null|null|||||||||||||||

|

| 82 |

+

skip_connection.bias|null|null|null|null||null|null||null|null|null|null|null|null||||||||||||

|

| 83 |

+

skip_connection.weight|null|null|null|null||null|null||null|null|null|null|null|null||||||||||||

|

| 84 |

+

norm.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 85 |

+

norm.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 86 |

+

proj_in.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 87 |

+

proj_in.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 88 |

+

proj_out.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 89 |

+

proj_out.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 90 |

+

transformer_blocks.0.attn1.to_k.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 91 |

+

transformer_blocks.0.attn1.to_out.0.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 92 |

+

transformer_blocks.0.attn1.to_out.0.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 93 |

+

transformer_blocks.0.attn1.to_q.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 94 |

+

transformer_blocks.0.attn1.to_v.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 95 |

+

transformer_blocks.0.attn2.to_k.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 96 |

+

transformer_blocks.0.attn2.to_out.0.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 97 |

+

transformer_blocks.0.attn2.to_out.0.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 98 |

+

transformer_blocks.0.attn2.to_q.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 99 |

+

transformer_blocks.0.attn2.to_v.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 100 |

+

transformer_blocks.0.ff.net.0.proj.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 101 |

+

transformer_blocks.0.ff.net.0.proj.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 102 |

+

transformer_blocks.0.ff.net.2.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 103 |

+

transformer_blocks.0.ff.net.2.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 104 |

+

transformer_blocks.0.norm1.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 105 |

+

transformer_blocks.0.norm1.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 106 |

+

transformer_blocks.0.norm2.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 107 |

+

transformer_blocks.0.norm2.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 108 |

+

transformer_blocks.0.norm3.bias|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 109 |

+

transformer_blocks.0.norm3.weight|null|||null|||null|||null|null|null||null|null|null|null|||||||||

|

| 110 |

+

conv.bias|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null||null|null||null|null||null|null|null

|

| 111 |

+

conv.weight|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null||null|null||null|null||null|null|null

|

| 112 |

+

0.bias||null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|

|

| 113 |

+

0.weight||null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|

|

| 114 |

+

2.bias|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|

|

| 115 |

+

2.weight|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|

|

| 116 |

+

time_embed.0.weight||null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|

|

| 117 |

+

time_embed.0.bias||null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|

|

| 118 |

+

time_embed.2.weight||null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|

|

| 119 |

+

time_embed.2.bias||null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|null|

|

extensions/microsoftexcel-supermerger/install.py

ADDED

|

@@ -0,0 +1,7 @@


| 1 |

+

import launch

|

| 2 |

+

|

| 3 |

+

if not launch.is_installed("sklearn"):

|

| 4 |

+

    launch.run_pip("install scikit-learn", "scikit-learn")

|

| 5 |

+

|

| 6 |

+

if not launch.is_installed("diffusers"):

|

| 7 |

+

    launch.run_pip("install diffusers", "diffusers")
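For readers without the web UI's `launch` module at hand, the same check-before-install pattern can be sketched with the standard library alone. The `is_installed` helper and the `requirements` mapping below are hypothetical stand-ins, not the web UI's implementation, and the sketch only reports what is missing instead of running pip.

```python
import importlib.util


def is_installed(package):
    """Return True if `package` can be found by the import system."""
    try:
        return importlib.util.find_spec(package) is not None
    except ModuleNotFoundError:
        return False


# Import name -> pip distribution name; the two can differ,
# as with "sklearn" and "scikit-learn".
requirements = {"sklearn": "scikit-learn", "diffusers": "diffusers"}
missing = [
    pip_name
    for module, pip_name in requirements.items()
    if not is_installed(module)
]
print("would install:", missing)
```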

|

extensions/microsoftexcel-supermerger/sample.txt

ADDED

|

@@ -0,0 +1,95 @@


| 1 |

+

examples of XY plot

|

| 2 |

+

***************************************************************

|

| 3 |

+

for elemental

|

| 4 |

+

Each value is separated by a blank line

|

| 5 |

+

Element merge values are comma or newline delimited

|

| 6 |

+

Commas and newlines can also be mixed

|

| 7 |

+

Inserting two blank lines at the beginning adds an entry with no elemental merge

|

| 8 |

+

|

| 9 |

+

**sample1*******************************************************

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

OUT05::1

|

| 13 |

+

|

| 14 |

+

OUT05:layers:1

|

| 15 |

+

|

| 16 |

+

OUT05:attn1:1

|

| 17 |

+

|

| 18 |

+

OUT05:attn2:1

|

| 19 |

+

|

| 20 |

+

OUT05:ff.net:1

|

| 21 |

+

**sample2*******************************************************

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

IN07:attn1:0.5,IN07:attn2:0.2

|

| 25 |

+

OUT03:NOT attn1.to_k.weight:0.5

|

| 26 |

+

OUT03:attn1.to_k.weight:0.3

|

| 27 |

+

|

| 28 |

+

OUT04:NOT ff.net:0.5

|

| 29 |

+

OUT04:attn1.to_k.weight:0.3

|

| 30 |

+

OUT04:attn1.to_out.0.weight:0.3

|

| 31 |

+

|

| 32 |

+

OUT05:NOT ff.net:0.5

|

| 33 |

+

OUT05:attn1.to_k.weight:0.3

|

| 34 |

+

OUT05:attn1.to_out.0.weight:0.3

|

| 35 |

+

***************************************************************

|

| 36 |

+

***************************************************************

|

| 37 |

+

for pinpoint element

|

| 38 |

+

Each value is separated by a comma or a newline

|

| 39 |

+

Do not enter a ratio

|

| 40 |

+

**sample3******************************************************

|

| 41 |

+

IN07:,IN07:layers,IN07:attn1,IN07:attn2,IN07:ff.net

|

| 42 |

+

**sample4******************************************************

|

| 43 |

+

OUT04:NOT attn2.to_q

|

| 44 |

+

OUT04:attn1.to_k.weight

|

| 45 |

+

OUT04:ff.net

|

| 46 |

+

OUT04:attn

|

| 47 |

+

***************************************************************

|

| 48 |

+

***************************************************************

|

| 49 |

+

for effective elemental checker

|

| 50 |

+

|

| 51 |

+

Examine the effect of each block

|

| 52 |

+

Output results can be split by inserting "|"

|

| 53 |

+

**sample5*************************************************************

|

| 54 |

+

IN00:,IN01:,IN02:,IN03:,IN04:,IN05:,IN06:,IN07:,IN08:,IN09:,IN10:,IN11:,M00:|OUT00:,OUT01:,OUT02:,OUT03:,OUT04:,OUT05:,OUT06:,OUT07:,OUT08:,OUT09:,OUT10:,OUT11:

|

| 55 |

+

|

| 56 |

+

Examine the effect of all elements in IN01

|

| 57 |

+

The lines below it correspond to IN02 and later

|

| 58 |

+

**sample6*************************************************************

|

| 59 |

+

IN01:emb_layers.1.bias,IN01:emb_layers.1.weight,IN01:in_layers.0.bias,IN01:in_layers.0.weight,IN01:in_layers.2.bias,IN01:in_layers.2.weight,IN01:out_layers.0.bias,IN01:out_layers.0.weight,IN01:out_layers.3.bias,IN01:out_layers.3.weight,IN01:skip_connection.bias,IN01:skip_connection.weight,IN01:norm.bias,IN01:norm.weight,IN01:proj_in.bias,IN01:proj_in.weight,IN01:proj_out.bias,IN01:proj_out.weight,IN01:transformer_blocks.0.attn1.to_k.weight,IN01:transformer_blocks.0.attn1.to_out.0.bias,IN01:transformer_blocks.0.attn1.to_out.0.weight,IN01:transformer_blocks.0.attn1.to_q.weight,IN01:transformer_blocks.0.attn1.to_v.weight,IN01:transformer_blocks.0.attn2.to_k.weight,IN01:transformer_blocks.0.attn2.to_out.0.bias,IN01:transformer_blocks.0.attn2.to_out.0.weight,IN01:transformer_blocks.0.attn2.to_q.weight,IN01:transformer_blocks.0.attn2.to_v.weight,IN01:transformer_blocks.0.ff.net.0.proj.bias,IN01:transformer_blocks.0.ff.net.0.proj.weight,IN01:transformer_blocks.0.ff.net.2.bias,IN01:transformer_blocks.0.ff.net.2.weight,IN01:transformer_blocks.0.norm1.bias,IN01:transformer_blocks.0.norm1.weight,IN01:transformer_blocks.0.norm2.bias,IN01:transformer_blocks.0.norm2.weight,IN01:transformer_blocks.0.norm3.bias,IN01:transformer_blocks.0.norm3.weight

|

| 60 |

+

|

| 61 |

+

IN02:emb_layers.1.bias,IN02:emb_layers.1.weight,IN02:in_layers.0.bias,IN02:in_layers.0.weight,IN02:in_layers.2.bias,IN02:in_layers.2.weight,IN02:out_layers.0.bias,IN02:out_layers.0.weight,IN02:out_layers.3.bias,IN02:out_layers.3.weight,IN02:skip_connection.bias,IN02:skip_connection.weight,IN02:norm.bias,IN02:norm.weight,IN02:proj_in.bias,IN02:proj_in.weight,IN02:proj_out.bias,IN02:proj_out.weight,IN02:transformer_blocks.0.attn1.to_k.weight,IN02:transformer_blocks.0.attn1.to_out.0.bias,IN02:transformer_blocks.0.attn1.to_out.0.weight,IN02:transformer_blocks.0.attn1.to_q.weight,IN02:transformer_blocks.0.attn1.to_v.weight,IN02:transformer_blocks.0.attn2.to_k.weight,IN02:transformer_blocks.0.attn2.to_out.0.bias,IN02:transformer_blocks.0.attn2.to_out.0.weight,IN02:transformer_blocks.0.attn2.to_q.weight,IN02:transformer_blocks.0.attn2.to_v.weight,IN02:transformer_blocks.0.ff.net.0.proj.bias,IN02:transformer_blocks.0.ff.net.0.proj.weight,IN02:transformer_blocks.0.ff.net.2.bias,IN02:transformer_blocks.0.ff.net.2.weight,IN02:transformer_blocks.0.norm1.bias,IN02:transformer_blocks.0.norm1.weight,IN02:transformer_blocks.0.norm2.bias,IN02:transformer_blocks.0.norm2.weight,IN02:transformer_blocks.0.norm3.bias,IN02:transformer_blocks.0.norm3.weight

|

| 62 |

+

|

| 63 |

+

IN00:bias,IN00:weight,IN03:op.bias,IN03:op.weight,IN06:op.bias,IN06:op.weight,IN09:op.bias,IN09:op.weight

|

| 64 |

+

|

| 65 |

+

IN04:emb_layers.1.bias,IN04:emb_layers.1.weight,IN04:in_layers.0.bias,IN04:in_layers.0.weight,IN04:in_layers.2.bias,IN04:in_layers.2.weight,IN04:out_layers.0.bias,IN04:out_layers.0.weight,IN04:out_layers.3.bias,IN04:out_layers.3.weight,IN04:skip_connection.bias,IN04:skip_connection.weight,IN04:norm.bias,IN04:norm.weight,IN04:proj_in.bias,IN04:proj_in.weight,IN04:proj_out.bias,IN04:proj_out.weight,IN04:transformer_blocks.0.attn1.to_k.weight,IN04:transformer_blocks.0.attn1.to_out.0.bias,IN04:transformer_blocks.0.attn1.to_out.0.weight,IN04:transformer_blocks.0.attn1.to_q.weight,IN04:transformer_blocks.0.attn1.to_v.weight,IN04:transformer_blocks.0.attn2.to_k.weight,IN04:transformer_blocks.0.attn2.to_out.0.bias,IN04:transformer_blocks.0.attn2.to_out.0.weight,IN04:transformer_blocks.0.attn2.to_q.weight,IN04:transformer_blocks.0.attn2.to_v.weight,IN04:transformer_blocks.0.ff.net.0.proj.bias,IN04:transformer_blocks.0.ff.net.0.proj.weight,IN04:transformer_blocks.0.ff.net.2.bias,IN04:transformer_blocks.0.ff.net.2.weight,IN04:transformer_blocks.0.norm1.bias,IN04:transformer_blocks.0.norm1.weight,IN04:transformer_blocks.0.norm2.bias,IN04:transformer_blocks.0.norm2.weight,IN04:transformer_blocks.0.norm3.bias,IN04:transformer_blocks.0.norm3.weight

|

| 66 |

+

|

| 67 |

+

IN05:emb_layers.1.bias,IN05:emb_layers.1.weight,IN05:in_layers.0.bias,IN05:in_layers.0.weight,IN05:in_layers.2.bias,IN05:in_layers.2.weight,IN05:out_layers.0.bias,IN05:out_layers.0.weight,IN05:out_layers.3.bias,IN05:out_layers.3.weight,IN05:skip_connection.bias,IN05:skip_connection.weight,IN05:norm.bias,IN05:norm.weight,IN05:proj_in.bias,IN05:proj_in.weight,IN05:proj_out.bias,IN05:proj_out.weight,IN05:transformer_blocks.0.attn1.to_k.weight,IN05:transformer_blocks.0.attn1.to_out.0.bias,IN05:transformer_blocks.0.attn1.to_out.0.weight,IN05:transformer_blocks.0.attn1.to_q.weight,IN05:transformer_blocks.0.attn1.to_v.weight,IN05:transformer_blocks.0.attn2.to_k.weight,IN05:transformer_blocks.0.attn2.to_out.0.bias,IN05:transformer_blocks.0.attn2.to_out.0.weight,IN05:transformer_blocks.0.attn2.to_q.weight,IN05:transformer_blocks.0.attn2.to_v.weight,IN05:transformer_blocks.0.ff.net.0.proj.bias,IN05:transformer_blocks.0.ff.net.0.proj.weight,IN05:transformer_blocks.0.ff.net.2.bias,IN05:transformer_blocks.0.ff.net.2.weight,IN05:transformer_blocks.0.norm1.bias,IN05:transformer_blocks.0.norm1.weight,IN05:transformer_blocks.0.norm2.bias,IN05:transformer_blocks.0.norm2.weight,IN05:transformer_blocks.0.norm3.bias,IN05:transformer_blocks.0.norm3.weight

|

| 68 |

+

|

| 69 |

+

IN07:emb_layers.1.bias,IN07:emb_layers.1.weight,IN07:in_layers.0.bias,IN07:in_layers.0.weight,IN07:in_layers.2.bias,IN07:in_layers.2.weight,IN07:out_layers.0.bias,IN07:out_layers.0.weight,IN07:out_layers.3.bias,IN07:out_layers.3.weight,IN07:skip_connection.bias,IN07:skip_connection.weight,IN07:norm.bias,IN07:norm.weight,IN07:proj_in.bias,IN07:proj_in.weight,IN07:proj_out.bias,IN07:proj_out.weight,IN07:transformer_blocks.0.attn1.to_k.weight,IN07:transformer_blocks.0.attn1.to_out.0.bias,IN07:transformer_blocks.0.attn1.to_out.0.weight,IN07:transformer_blocks.0.attn1.to_q.weight,IN07:transformer_blocks.0.attn1.to_v.weight,IN07:transformer_blocks.0.attn2.to_k.weight,IN07:transformer_blocks.0.attn2.to_out.0.bias,IN07:transformer_blocks.0.attn2.to_out.0.weight,IN07:transformer_blocks.0.attn2.to_q.weight,IN07:transformer_blocks.0.attn2.to_v.weight,IN07:transformer_blocks.0.ff.net.0.proj.bias,IN07:transformer_blocks.0.ff.net.0.proj.weight,IN07:transformer_blocks.0.ff.net.2.bias,IN07:transformer_blocks.0.ff.net.2.weight,IN07:transformer_blocks.0.norm1.bias,IN07:transformer_blocks.0.norm1.weight,IN07:transformer_blocks.0.norm2.bias,IN07:transformer_blocks.0.norm2.weight,IN07:transformer_blocks.0.norm3.bias,IN07:transformer_blocks.0.norm3.weight

|

| 70 |

+

|

| 71 |

+

IN08:emb_layers.1.bias,IN08:emb_layers.1.weight,IN08:in_layers.0.bias,IN08:in_layers.0.weight,IN08:in_layers.2.bias,IN08:in_layers.2.weight,IN08:out_layers.0.bias,IN08:out_layers.0.weight,IN08:out_layers.3.bias,IN08:out_layers.3.weight,IN08:skip_connection.bias,IN08:skip_connection.weight,IN08:norm.bias,IN08:norm.weight,IN08:proj_in.bias,IN08:proj_in.weight,IN08:proj_out.bias,IN08:proj_out.weight,IN08:transformer_blocks.0.attn1.to_k.weight,IN08:transformer_blocks.0.attn1.to_out.0.bias,IN08:transformer_blocks.0.attn1.to_out.0.weight,IN08:transformer_blocks.0.attn1.to_q.weight,IN08:transformer_blocks.0.attn1.to_v.weight,IN08:transformer_blocks.0.attn2.to_k.weight,IN08:transformer_blocks.0.attn2.to_out.0.bias,IN08:transformer_blocks.0.attn2.to_out.0.weight,IN08:transformer_blocks.0.attn2.to_q.weight,IN08:transformer_blocks.0.attn2.to_v.weight,IN08:transformer_blocks.0.ff.net.0.proj.bias,IN08:transformer_blocks.0.ff.net.0.proj.weight,IN08:transformer_blocks.0.ff.net.2.bias,IN08:transformer_blocks.0.ff.net.2.weight,IN08:transformer_blocks.0.norm1.bias,IN08:transformer_blocks.0.norm1.weight,IN08:transformer_blocks.0.norm2.bias,IN08:transformer_blocks.0.norm2.weight,IN08:transformer_blocks.0.norm3.bias,IN08:transformer_blocks.0.norm3.weight

|

| 72 |

+

|

| 73 |

+

IN10:emb_layers.1.bias,IN10:emb_layers.1.weight,IN10:in_layers.0.bias,IN10:in_layers.0.weight,IN10:in_layers.2.bias,IN10:in_layers.2.weight,IN10:out_layers.0.bias,IN10:out_layers.0.weight,IN10:out_layers.3.bias,IN10:out_layers.3.weight,IN11:emb_layers.1.bias,IN11:emb_layers.1.weight,IN11:in_layers.0.bias,IN11:in_layers.0.weight,IN11:in_layers.2.bias,IN11:in_layers.2.weight,IN11:out_layers.0.bias,IN11:out_layers.0.weight,IN11:out_layers.3.bias,IN11:out_layers.3.weight

|

| 74 |

+

|

| 75 |

+

M00:0.emb_layers.1.bias,M00:0.emb_layers.1.weight,M00:0.in_layers.0.bias,M00:0.in_layers.0.weight,M00:0.in_layers.2.bias,M00:0.in_layers.2.weight,M00:0.out_layers.0.bias,M00:0.out_layers.0.weight,M00:0.out_layers.3.bias,M00:0.out_layers.3.weight,M00:1.norm.bias,M00:1.norm.weight,M00:1.proj_in.bias,M00:1.proj_in.weight,M00:1.proj_out.bias,M00:1.proj_out.weight,M00:1.transformer_blocks.0.attn1.to_k.weight,M00:1.transformer_blocks.0.attn1.to_out.0.bias,M00:1.transformer_blocks.0.attn1.to_out.0.weight,M00:1.transformer_blocks.0.attn1.to_q.weight,M00:1.transformer_blocks.0.attn1.to_v.weight,M00:1.transformer_blocks.0.attn2.to_k.weight,M00:1.transformer_blocks.0.attn2.to_out.0.bias,M00:1.transformer_blocks.0.attn2.to_out.0.weight,M00:1.transformer_blocks.0.attn2.to_q.weight,M00:1.transformer_blocks.0.attn2.to_v.weight,M00:1.transformer_blocks.0.ff.net.0.proj.bias,M00:1.transformer_blocks.0.ff.net.0.proj.weight,M00:1.transformer_blocks.0.ff.net.2.bias,M00:1.transformer_blocks.0.ff.net.2.weight,M00:1.transformer_blocks.0.norm1.bias,M00:1.transformer_blocks.0.norm1.weight,M00:1.transformer_blocks.0.norm2.bias,M00:1.transformer_blocks.0.norm2.weight,M00:1.transformer_blocks.0.norm3.bias,M00:1.transformer_blocks.0.norm3.weight,M00:2.emb_layers.1.bias,M00:2.emb_layers.1.weight,M00:2.in_layers.0.bias,M00:2.in_layers.0.weight,M00:2.in_layers.2.bias,M00:2.in_layers.2.weight,M00:2.out_layers.0.bias,M00:2.out_layers.0.weight,M00:2.out_layers.3.bias,M00:2.out_layers.3.weight

|

| 76 |

+

|

| 77 |

+

OUT00:emb_layers.1.bias,OUT00:emb_layers.1.weight,OUT00:in_layers.0.bias,OUT00:in_layers.0.weight,OUT00:in_layers.2.bias,OUT00:in_layers.2.weight,OUT00:out_layers.0.bias,OUT00:out_layers.0.weight,OUT00:out_layers.3.bias,OUT00:out_layers.3.weight,OUT00:skip_connection.bias,OUT00:skip_connection.weight,OUT01:emb_layers.1.bias,OUT01:emb_layers.1.weight,OUT01:in_layers.0.bias,OUT01:in_layers.0.weight,OUT01:in_layers.2.bias,OUT01:in_layers.2.weight,OUT01:out_layers.0.bias,OUT01:out_layers.0.weight,OUT01:out_layers.3.bias,OUT01:out_layers.3.weight,OUT01:skip_connection.bias,OUT01:skip_connection.weight,OUT02:emb_layers.1.bias,OUT02:emb_layers.1.weight,OUT02:in_layers.0.bias,OUT02:in_layers.0.weight,OUT02:in_layers.2.bias,OUT02:in_layers.2.weight,OUT02:out_layers.0.bias,OUT02:out_layers.0.weight,OUT02:out_layers.3.bias,OUT02:out_layers.3.weight,OUT02:skip_connection.bias,OUT02:skip_connection.weight,OUT02:conv.bias,OUT02:conv.weight

|

| 78 |

+

|

| 79 |

+

OUT03:emb_layers.1.bias,OUT03:emb_layers.1.weight,OUT03:in_layers.0.bias,OUT03:in_layers.0.weight,OUT03:in_layers.2.bias,OUT03:in_layers.2.weight,OUT03:out_layers.0.bias,OUT03:out_layers.0.weight,OUT03:out_layers.3.bias,OUT03:out_layers.3.weight,OUT03:skip_connection.bias,OUT03:skip_connection.weight,OUT03:norm.bias,OUT03:norm.weight,OUT03:proj_in.bias,OUT03:proj_in.weight,OUT03:proj_out.bias,OUT03:proj_out.weight,OUT03:transformer_blocks.0.attn1.to_k.weight,OUT03:transformer_blocks.0.attn1.to_out.0.bias,OUT03:transformer_blocks.0.attn1.to_out.0.weight,OUT03:transformer_blocks.0.attn1.to_q.weight,OUT03:transformer_blocks.0.attn1.to_v.weight,OUT03:transformer_blocks.0.attn2.to_k.weight,OUT03:transformer_blocks.0.attn2.to_out.0.bias,OUT03:transformer_blocks.0.attn2.to_out.0.weight,OUT03:transformer_blocks.0.attn2.to_q.weight,OUT03:transformer_blocks.0.attn2.to_v.weight,OUT03:transformer_blocks.0.ff.net.0.proj.bias,OUT03:transformer_blocks.0.ff.net.0.proj.weight,OUT03:transformer_blocks.0.ff.net.2.bias,OUT03:transformer_blocks.0.ff.net.2.weight,OUT03:transformer_blocks.0.norm1.bias,OUT03:transformer_blocks.0.norm1.weight,OUT03:transformer_blocks.0.norm2.bias,OUT03:transformer_blocks.0.norm2.weight,OUT03:transformer_blocks.0.norm3.bias,OUT03:transformer_blocks.0.norm3.weight

|

| 80 |

+

|

| 81 |

+

OUT04:emb_layers.1.bias,OUT04:emb_layers.1.weight,OUT04:in_layers.0.bias,OUT04:in_layers.0.weight,OUT04:in_layers.2.bias,OUT04:in_layers.2.weight,OUT04:out_layers.0.bias,OUT04:out_layers.0.weight,OUT04:out_layers.3.bias,OUT04:out_layers.3.weight,OUT04:skip_connection.bias,OUT04:skip_connection.weight,OUT04:norm.bias,OUT04:norm.weight,OUT04:proj_in.bias,OUT04:proj_in.weight,OUT04:proj_out.bias,OUT04:proj_out.weight,OUT04:transformer_blocks.0.attn1.to_k.weight,OUT04:transformer_blocks.0.attn1.to_out.0.bias,OUT04:transformer_blocks.0.attn1.to_out.0.weight,OUT04:transformer_blocks.0.attn1.to_q.weight,OUT04:transformer_blocks.0.attn1.to_v.weight,OUT04:transformer_blocks.0.attn2.to_k.weight,OUT04:transformer_blocks.0.attn2.to_out.0.bias,OUT04:transformer_blocks.0.attn2.to_out.0.weight,OUT04:transformer_blocks.0.attn2.to_q.weight,OUT04:transformer_blocks.0.attn2.to_v.weight,OUT04:transformer_blocks.0.ff.net.0.proj.bias,OUT04:transformer_blocks.0.ff.net.0.proj.weight,OUT04:transformer_blocks.0.ff.net.2.bias,OUT04:transformer_blocks.0.ff.net.2.weight,OUT04:transformer_blocks.0.norm1.bias,OUT04:transformer_blocks.0.norm1.weight,OUT04:transformer_blocks.0.norm2.bias,OUT04:transformer_blocks.0.norm2.weight,OUT04:transformer_blocks.0.norm3.bias,OUT04:transformer_blocks.0.norm3.weight

|

| 82 |

+

|

| 83 |

+

OUT05:emb_layers.1.bias,OUT05:emb_layers.1.weight,OUT05:in_layers.0.bias,OUT05:in_layers.0.weight,OUT05:in_layers.2.bias,OUT05:in_layers.2.weight,OUT05:out_layers.0.bias,OUT05:out_layers.0.weight,OUT05:out_layers.3.bias,OUT05:out_layers.3.weight,OUT05:skip_connection.bias,OUT05:skip_connection.weight,OUT05:norm.bias,OUT05:norm.weight,OUT05:proj_in.bias,OUT05:proj_in.weight,OUT05:proj_out.bias,OUT05:proj_out.weight,OUT05:transformer_blocks.0.attn1.to_k.weight,OUT05:transformer_blocks.0.attn1.to_out.0.bias,OUT05:transformer_blocks.0.attn1.to_out.0.weight,OUT05:transformer_blocks.0.attn1.to_q.weight,OUT05:transformer_blocks.0.attn1.to_v.weight,OUT05:transformer_blocks.0.attn2.to_k.weight,OUT05:transformer_blocks.0.attn2.to_out.0.bias,OUT05:transformer_blocks.0.attn2.to_out.0.weight,OUT05:transformer_blocks.0.attn2.to_q.weight,OUT05:transformer_blocks.0.attn2.to_v.weight,OUT05:transformer_blocks.0.ff.net.0.proj.bias,OUT05:transformer_blocks.0.ff.net.0.proj.weight,OUT05:transformer_blocks.0.ff.net.2.bias,OUT05:transformer_blocks.0.ff.net.2.weight,OUT05:transformer_blocks.0.norm1.bias,OUT05:transformer_blocks.0.norm1.weight,OUT05:transformer_blocks.0.norm2.bias,OUT05:transformer_blocks.0.norm2.weight,OUT05:transformer_blocks.0.norm3.bias,OUT05:transformer_blocks.0.norm3.weight,OUT05:conv.bias,OUT05:conv.weight

|

| 84 |

+

|

| 85 |

+

,OUT06:emb_layers.1.bias,OUT06:emb_layers.1.weight,OUT06:in_layers.0.bias,OUT06:in_layers.0.weight,OUT06:in_layers.2.bias,OUT06:in_layers.2.weight,OUT06:out_layers.0.bias,OUT06:out_layers.0.weight,OUT06:out_layers.3.bias,OUT06:out_layers.3.weight,OUT06:skip_connection.bias,OUT06:skip_connection.weight,OUT06:norm.bias,OUT06:norm.weight,OUT06:proj_in.bias,OUT06:proj_in.weight,OUT06:proj_out.bias,OUT06:proj_out.weight,OUT06:transformer_blocks.0.attn1.to_k.weight,OUT06:transformer_blocks.0.attn1.to_out.0.bias,OUT06:transformer_blocks.0.attn1.to_out.0.weight,OUT06:transformer_blocks.0.attn1.to_q.weight,OUT06:transformer_blocks.0.attn1.to_v.weight,OUT06:transformer_blocks.0.attn2.to_k.weight,OUT06:transformer_blocks.0.attn2.to_out.0.bias,OUT06:transformer_blocks.0.attn2.to_out.0.weight,OUT06:transformer_blocks.0.attn2.to_q.weight,OUT06:transformer_blocks.0.attn2.to_v.weight,OUT06:transformer_blocks.0.ff.net.0.proj.bias,OUT06:transformer_blocks.0.ff.net.0.proj.weight,OUT06:transformer_blocks.0.ff.net.2.bias,OUT06:transformer_blocks.0.ff.net.2.weight,OUT06:transformer_blocks.0.norm1.bias,OUT06:transformer_blocks.0.norm1.weight,OUT06:transformer_blocks.0.norm2.bias,OUT06:transformer_blocks.0.norm2.weight,OUT06:transformer_blocks.0.norm3.bias,OUT06:transformer_blocks.0.norm3.weight

|

| 86 |

+

|

| 87 |

+

OUT07:emb_layers.1.bias,OUT07:emb_layers.1.weight,OUT07:in_layers.0.bias,OUT07:in_layers.0.weight,OUT07:in_layers.2.bias,OUT07:in_layers.2.weight,OUT07:out_layers.0.bias,OUT07:out_layers.0.weight,OUT07:out_layers.3.bias,OUT07:out_layers.3.weight,OUT07:skip_connection.bias,OUT07:skip_connection.weight,OUT07:norm.bias,OUT07:norm.weight,OUT07:proj_in.bias,OUT07:proj_in.weight,OUT07:proj_out.bias,OUT07:proj_out.weight,OUT07:transformer_blocks.0.attn1.to_k.weight,OUT07:transformer_blocks.0.attn1.to_out.0.bias,OUT07:transformer_blocks.0.attn1.to_out.0.weight,OUT07:transformer_blocks.0.attn1.to_q.weight,OUT07:transformer_blocks.0.attn1.to_v.weight,OUT07:transformer_blocks.0.attn2.to_k.weight,OUT07:transformer_blocks.0.attn2.to_out.0.bias,OUT07:transformer_blocks.0.attn2.to_out.0.weight,OUT07:transformer_blocks.0.attn2.to_q.weight,OUT07:transformer_blocks.0.attn2.to_v.weight,OUT07:transformer_blocks.0.ff.net.0.proj.bias,OUT07:transformer_blocks.0.ff.net.0.proj.weight,OUT07:transformer_blocks.0.ff.net.2.bias,OUT07:transformer_blocks.0.ff.net.2.weight,OUT07:transformer_blocks.0.norm1.bias,OUT07:transformer_blocks.0.norm1.weight,OUT07:transformer_blocks.0.norm2.bias,OUT07:transformer_blocks.0.norm2.weight,OUT07:transformer_blocks.0.norm3.bias,OUT07:transformer_blocks.0.norm3.weight

|

| 88 |

+

|

| 89 |

+

,OUT08:emb_layers.1.bias,OUT08:emb_layers.1.weight,OUT08:in_layers.0.bias,OUT08:in_layers.0.weight,OUT08:in_layers.2.bias,OUT08:in_layers.2.weight,OUT08:out_layers.0.bias,OUT08:out_layers.0.weight,OUT08:out_layers.3.bias,OUT08:out_layers.3.weight,OUT08:skip_connection.bias,OUT08:skip_connection.weight,OUT08:norm.bias,OUT08:norm.weight,OUT08:proj_in.bias,OUT08:proj_in.weight,OUT08:proj_out.bias,OUT08:proj_out.weight,OUT08:transformer_blocks.0.attn1.to_k.weight,OUT08:transformer_blocks.0.attn1.to_out.0.bias,OUT08:transformer_blocks.0.attn1.to_out.0.weight,OUT08:transformer_blocks.0.attn1.to_q.weight,OUT08:transformer_blocks.0.attn1.to_v.weight,OUT08:transformer_blocks.0.attn2.to_k.weight,OUT08:transformer_blocks.0.attn2.to_out.0.bias,OUT08:transformer_blocks.0.attn2.to_out.0.weight,OUT08:transformer_blocks.0.attn2.to_q.weight,OUT08:transformer_blocks.0.attn2.to_v.weight,OUT08:transformer_blocks.0.ff.net.0.proj.bias,OUT08:transformer_blocks.0.ff.net.0.proj.weight,OUT08:transformer_blocks.0.ff.net.2.bias,OUT08:transformer_blocks.0.ff.net.2.weight,OUT08:transformer_blocks.0.norm1.bias,OUT08:transformer_blocks.0.norm1.weight,OUT08:transformer_blocks.0.norm2.bias,OUT08:transformer_blocks.0.norm2.weight,OUT08:transformer_blocks.0.norm3.bias,OUT08:transformer_blocks.0.norm3.weight,OUT08:conv.bias,OUT08:conv.weight

|

| 90 |

+

|

| 91 |

+

OUT09:emb_layers.1.bias,OUT09:emb_layers.1.weight,OUT09:in_layers.0.bias,OUT09:in_layers.0.weight,OUT09:in_layers.2.bias,OUT09:in_layers.2.weight,OUT09:out_layers.0.bias,OUT09:out_layers.0.weight,OUT09:out_layers.3.bias,OUT09:out_layers.3.weight,OUT09:skip_connection.bias,OUT09:skip_connection.weight,OUT09:norm.bias,OUT09:norm.weight,OUT09:proj_in.bias,OUT09:proj_in.weight,OUT09:proj_out.bias,OUT09:proj_out.weight,OUT09:transformer_blocks.0.attn1.to_k.weight,OUT09:transformer_blocks.0.attn1.to_out.0.bias,OUT09:transformer_blocks.0.attn1.to_out.0.weight,OUT09:transformer_blocks.0.attn1.to_q.weight,OUT09:transformer_blocks.0.attn1.to_v.weight,OUT09:transformer_blocks.0.attn2.to_k.weight,OUT09:transformer_blocks.0.attn2.to_out.0.bias,OUT09:transformer_blocks.0.attn2.to_out.0.weight,OUT09:transformer_blocks.0.attn2.to_q.weight,OUT09:transformer_blocks.0.attn2.to_v.weight,OUT09:transformer_blocks.0.ff.net.0.proj.bias,OUT09:transformer_blocks.0.ff.net.0.proj.weight,OUT09:transformer_blocks.0.ff.net.2.bias,OUT09:transformer_blocks.0.ff.net.2.weight,OUT09:transformer_blocks.0.norm1.bias,OUT09:transformer_blocks.0.norm1.weight,OUT09:transformer_blocks.0.norm2.bias,OUT09:transformer_blocks.0.norm2.weight,OUT09:transformer_blocks.0.norm3.bias,OUT09:transformer_blocks.0.norm3.weight

|

| 92 |

+

|

| 93 |

+

OUT10:emb_layers.1.bias,OUT10:emb_layers.1.weight,OUT10:in_layers.0.bias,OUT10:in_layers.0.weight,OUT10:in_layers.2.bias,OUT10:in_layers.2.weight,OUT10:out_layers.0.bias,OUT10:out_layers.0.weight,OUT10:out_layers.3.bias,OUT10:out_layers.3.weight,OUT10:skip_connection.bias,OUT10:skip_connection.weight,OUT10:norm.bias,OUT10:norm.weight,OUT10:proj_in.bias,OUT10:proj_in.weight,OUT10:proj_out.bias,OUT10:proj_out.weight,OUT10:transformer_blocks.0.attn1.to_k.weight,OUT10:transformer_blocks.0.attn1.to_out.0.bias,OUT10:transformer_blocks.0.attn1.to_out.0.weight,OUT10:transformer_blocks.0.attn1.to_q.weight,OUT10:transformer_blocks.0.attn1.to_v.weight,OUT10:transformer_blocks.0.attn2.to_k.weight,OUT10:transformer_blocks.0.attn2.to_out.0.bias,OUT10:transformer_blocks.0.attn2.to_out.0.weight,OUT10:transformer_blocks.0.attn2.to_q.weight,OUT10:transformer_blocks.0.attn2.to_v.weight,OUT10:transformer_blocks.0.ff.net.0.proj.bias,OUT10:transformer_blocks.0.ff.net.0.proj.weight,OUT10:transformer_blocks.0.ff.net.2.bias,OUT10:transformer_blocks.0.ff.net.2.weight,OUT10:transformer_blocks.0.norm1.bias,OUT10:transformer_blocks.0.norm1.weight,OUT10:transformer_blocks.0.norm2.bias,OUT10:transformer_blocks.0.norm2.weight,OUT10:transformer_blocks.0.norm3.bias,OUT10:transformer_blocks.0.norm3.weight

|

| 94 |

+

|

| 95 |

+

OUT11:emb_layers.1.bias,OUT11:emb_layers.1.weight,OUT11:in_layers.0.bias,OUT11:in_layers.0.weight,OUT11:in_layers.2.bias,OUT11:in_layers.2.weight,OUT11:out_layers.0.bias,OUT11:out_layers.0.weight,OUT11:out_layers.3.bias,OUT11:out_layers.3.weight,OUT11:skip_connection.bias,OUT11:skip_connection.weight,OUT11:norm.bias,OUT11:norm.weight,OUT11:proj_in.bias,OUT11:proj_in.weight,OUT11:proj_out.bias,OUT11:proj_out.weight,OUT11:transformer_blocks.0.attn1.to_k.weight,OUT11:transformer_blocks.0.attn1.to_out.0.bias,OUT11:transformer_blocks.0.attn1.to_out.0.weight,OUT11:transformer_blocks.0.attn1.to_q.weight,OUT11:transformer_blocks.0.attn1.to_v.weight,OUT11:transformer_blocks.0.attn2.to_k.weight,OUT11:transformer_blocks.0.attn2.to_out.0.bias,OUT11:transformer_blocks.0.attn2.to_out.0.weight,OUT11:transformer_blocks.0.attn2.to_q.weight,OUT11:transformer_blocks.0.attn2.to_v.weight,OUT11:transformer_blocks.0.ff.net.0.proj.bias,OUT11:transformer_blocks.0.ff.net.0.proj.weight,OUT11:transformer_blocks.0.ff.net.2.bias,OUT11:transformer_blocks.0.ff.net.2.weight,OUT11:transformer_blocks.0.norm1.bias,OUT11:transformer_blocks.0.norm1.weight,OUT11:transformer_blocks.0.norm2.bias,OUT11:transformer_blocks.0.norm2.weight,OUT11:transformer_blocks.0.norm3.bias,OUT11:transformer_blocks.0.norm3.weight,OUT11:0.bias,OUT11:0.weight,OUT11:2.bias,OUT11:2.weight

|

extensions/microsoftexcel-supermerger/scripts/__pycache__/supermerger.cpython-310.pyc

ADDED

|

Binary file (22.2 kB).

|

|

|

extensions/microsoftexcel-supermerger/scripts/mbwpresets.txt

ADDED

|

@@ -0,0 +1,39 @@

|

| 1 |

+

preset_name preset_weights

|

| 2 |

+

GRAD_V 0,1,0.9166666667,0.8333333333,0.75,0.6666666667,0.5833333333,0.5,0.4166666667,0.3333333333,0.25,0.1666666667,0.0833333333,0,0.0833333333,0.1666666667,0.25,0.3333333333,0.4166666667,0.5,0.5833333333,0.6666666667,0.75,0.8333333333,0.9166666667,1.0

|

| 3 |

+

GRAD_A 0,0,0.0833333333,0.1666666667,0.25,0.3333333333,0.4166666667,0.5,0.5833333333,0.6666666667,0.75,0.8333333333,0.9166666667,1.0,0.9166666667,0.8333333333,0.75,0.6666666667,0.5833333333,0.5,0.4166666667,0.3333333333,0.25,0.1666666667,0.0833333333,0

|

| 4 |

+

FLAT_25 0,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25,0.25

|

| 5 |

+

FLAT_75 0,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75,0.75

|

| 6 |

+

WRAP08 0,1,1,1,1,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,1,1,1,1

|

| 7 |

+

WRAP12 0,1,1,1,1,1,1,0,0,0,0,0,0,0,0,0,0,0,0,0,1,1,1,1,1,1

|

| 8 |

+

WRAP14 0,1,1,1,1,1,1,1,0,0,0,0,0,0,0,0,0,0,0,1,1,1,1,1,1,1

|

| 9 |

+

WRAP16 0,1,1,1,1,1,1,1,1,0,0,0,0,0,0,0,0,0,1,1,1,1,1,1,1,1

|

| 10 |

+

MID12_50 0,0,0,0,0,0,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0.5,0,0,0,0,0,0

|

| 11 |

+

OUT07 0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,1,1,1,1,1,1,1

|

| 12 |

+

OUT12 0,0,0,0,0,0,0,0,0,0,0,0,0,0,1,1,1,1,1,1,1,1,1,1,1,1

|

| 13 |

+

OUT12_5 0,0,0,0,0,0,0,0,0,0,0,0,0,0.5,1,1,1,1,1,1,1,1,1,1,1,1

|

| 14 |

+

RING08_SOFT 0,0,0,0,0,0,0.5,1,1,1,0.5,0,0,0,0,0,0.5,1,1,1,0.5,0,0,0,0,0

|

| 15 |

+

RING08_5 0,0,0,0,0,0,0,1,1,1,1,0,0,0,0,0,1,1,1,1,0,0,0,0,0,0

|

| 16 |

+

RING10_5 0,0,0,0,0,0,1,1,1,1,1,0,0,0,0,0,1,1,1,1,1,0,0,0,0,0

|

| 17 |

+

RING10_3 0,0,0,0,0,0,0,1,1,1,1,1,0,0,0,1,1,1,1,1,0,0,0,0,0,0

|

| 18 |

+

SMOOTHSTEP 0,0,0.00506365740740741,0.0196759259259259,0.04296875,0.0740740740740741,0.112123842592593,0.15625,0.205584490740741,0.259259259259259,0.31640625,0.376157407407407,0.437644675925926,0.5,0.562355324074074,0.623842592592592,0.68359375,0.740740740740741,0.794415509259259,0.84375,0.887876157407408,0.925925925925926,0.95703125,0.980324074074074,0.994936342592593,1

|

| 19 |

+

REVERSE-SMOOTHSTEP 0,1,0.994936342592593,0.980324074074074,0.95703125,0.925925925925926,0.887876157407407,0.84375,0.794415509259259,0.740740740740741,0.68359375,0.623842592592593,0.562355324074074,0.5,0.437644675925926,0.376157407407408,0.31640625,0.259259259259259,0.205584490740741,0.15625,0.112123842592592,0.0740740740740742,0.0429687499999996,0.0196759259259258,0.00506365740740744,0

|

| 20 |

+

SMOOTHSTEP*2 0,0,0.0101273148148148,0.0393518518518519,0.0859375,0.148148148148148,0.224247685185185,0.3125,0.411168981481482,0.518518518518519,0.6328125,0.752314814814815,0.875289351851852,1.,0.875289351851852,0.752314814814815,0.6328125,0.518518518518519,0.411168981481481,0.3125,0.224247685185184,0.148148148148148,0.0859375,0.0393518518518512,0.0101273148148153,0

|

| 21 |

+

R_SMOOTHSTEP*2 0,1,0.989872685185185,0.960648148148148,0.9140625,0.851851851851852,0.775752314814815,0.6875,0.588831018518519,0.481481481481481,0.3671875,0.247685185185185,0.124710648148148,0.,0.124710648148148,0.247685185185185,0.3671875,0.481481481481481,0.588831018518519,0.6875,0.775752314814816,0.851851851851852,0.9140625,0.960648148148149,0.989872685185185,1

|

| 22 |

+

SMOOTHSTEP*3 0,0,0.0151909722222222,0.0590277777777778,0.12890625,0.222222222222222,0.336371527777778,0.46875,0.616753472222222,0.777777777777778,0.94921875,0.871527777777778,0.687065972222222,0.5,0.312934027777778,0.128472222222222,0.0507812500000004,0.222222222222222,0.383246527777778,0.53125,0.663628472222223,0.777777777777778,0.87109375,0.940972222222222,0.984809027777777,1

|

| 23 |

+

R_SMOOTHSTEP*3 0,1,0.984809027777778,0.940972222222222,0.87109375,0.777777777777778,0.663628472222222,0.53125,0.383246527777778,0.222222222222222,0.05078125,0.128472222222222,0.312934027777778,0.5,0.687065972222222,0.871527777777778,0.94921875,0.777777777777778,0.616753472222222,0.46875,0.336371527777777,0.222222222222222,0.12890625,0.0590277777777777,0.0151909722222232,0

|

| 24 |

+

SMOOTHSTEP*4 0,0,0.0202546296296296,0.0787037037037037,0.171875,0.296296296296296,0.44849537037037,0.625,0.822337962962963,0.962962962962963,0.734375,0.49537037037037,0.249421296296296,0.,0.249421296296296,0.495370370370371,0.734375000000001,0.962962962962963,0.822337962962962,0.625,0.448495370370369,0.296296296296297,0.171875,0.0787037037037024,0.0202546296296307,0

|

| 25 |

+

R_SMOOTHSTEP*4 0,1,0.97974537037037,0.921296296296296,0.828125,0.703703703703704,0.55150462962963,0.375,0.177662037037037,0.0370370370370372,0.265625,0.50462962962963,0.750578703703704,1.,0.750578703703704,0.504629629629629,0.265624999999999,0.0370370370370372,0.177662037037038,0.375,0.551504629629631,0.703703703703703,0.828125,0.921296296296298,0.979745370370369,1

|

| 26 |

+

SMOOTHSTEP/2 0,0,0.0196759259259259,0.0740740740740741,0.15625,0.259259259259259,0.376157407407407,0.5,0.623842592592593,0.740740740740741,0.84375,0.925925925925926,0.980324074074074,1.,0.980324074074074,0.925925925925926,0.84375,0.740740740740741,0.623842592592593,0.5,0.376157407407407,0.259259259259259,0.15625,0.0740740740740741,0.0196759259259259,0

|

| 27 |

+

R_SMOOTHSTEP/2 0,1,0.980324074074074,0.925925925925926,0.84375,0.740740740740741,0.623842592592593,0.5,0.376157407407407,0.259259259259259,0.15625,0.0740740740740742,0.0196759259259256,0.,0.0196759259259256,0.0740740740740742,0.15625,0.259259259259259,0.376157407407407,0.5,0.623842592592593,0.740740740740741,0.84375,0.925925925925926,0.980324074074074,1

|

| 28 |

+

SMOOTHSTEP/3 0,0,0.04296875,0.15625,0.31640625,0.5,0.68359375,0.84375,0.95703125,1.,0.95703125,0.84375,0.68359375,0.5,0.31640625,0.15625,0.04296875,0.,0.04296875,0.15625,0.31640625,0.5,0.68359375,0.84375,0.95703125,1

|

| 29 |

+

R_SMOOTHSTEP/3 0,1,0.95703125,0.84375,0.68359375,0.5,0.31640625,0.15625,0.04296875,0.,0.04296875,0.15625,0.31640625,0.5,0.68359375,0.84375,0.95703125,1.,0.95703125,0.84375,0.68359375,0.5,0.31640625,0.15625,0.04296875,0

|

| 30 |

+

SMOOTHSTEP/4 0,0,0.0740740740740741,0.259259259259259,0.5,0.740740740740741,0.925925925925926,1.,0.925925925925926,0.740740740740741,0.5,0.259259259259259,0.0740740740740741,0.,0.0740740740740741,0.259259259259259,0.5,0.740740740740741,0.925925925925926,1.,0.925925925925926,0.740740740740741,0.5,0.259259259259259,0.0740740740740741,0

|

| 31 |

+

R_SMOOTHSTEP/4 0,1,0.925925925925926,0.740740740740741,0.5,0.259259259259259,0.0740740740740742,0.,0.0740740740740742,0.259259259259259,0.5,0.740740740740741,0.925925925925926,1.,0.925925925925926,0.740740740740741,0.5,0.259259259259259,0.0740740740740742,0.,0.0740740740740742,0.259259259259259,0.5,0.740740740740741,0.925925925925926,1

|

| 32 |

+

COSINE 0,1,0.995722430686905,0.982962913144534,0.961939766255643,0.933012701892219,0.896676670145617,0.853553390593274,0.80438071450436,0.75,0.691341716182545,0.62940952255126,0.565263096110026,0.5,0.434736903889974,0.37059047744874,0.308658283817455,0.25,0.195619285495639,0.146446609406726,0.103323329854382,0.0669872981077805,0.0380602337443566,0.0170370868554658,0.00427756931309475,0

|

| 33 |

+

REVERSE_COSINE 0,0,0.00427756931309475,0.0170370868554659,0.0380602337443566,0.0669872981077808,0.103323329854383,0.146446609406726,0.19561928549564,0.25,0.308658283817455,0.37059047744874,0.434736903889974,0.5,0.565263096110026,0.62940952255126,0.691341716182545,0.75,0.804380714504361,0.853553390593274,0.896676670145618,0.933012701892219,0.961939766255643,0.982962913144534,0.995722430686905,1

|

| 34 |

+

TRUE_CUBIC_HERMITE 0,0,0.199031876929012,0.325761959876543,0.424641927083333,0.498456790123457,0.549991560570988,0.58203125,0.597360869984568,0.598765432098765,0.589029947916667,0.570939429012346,0.547278886959876,0.520833333333333,0.49438777970679,0.470727237654321,0.45263671875,0.442901234567901,0.444305796682099,0.459635416666667,0.491675106095678,0.543209876543211,0.617024739583333,0.715904706790124,0.842634789737655,1

|

| 35 |

+

TRUE_REVERSE_CUBIC_HERMITE 0,1,0.800968123070988,0.674238040123457,0.575358072916667,0.501543209876543,0.450008439429012,0.41796875,0.402639130015432,0.401234567901235,0.410970052083333,0.429060570987654,0.452721113040124,0.479166666666667,0.50561222029321,0.529272762345679,0.54736328125,0.557098765432099,0.555694203317901,0.540364583333333,0.508324893904322,0.456790123456789,0.382975260416667,0.284095293209876,0.157365210262345,0

|

| 36 |

+

FAKE_CUBIC_HERMITE 0,0,0.157576195987654,0.28491512345679,0.384765625,0.459876543209877,0.512996720679012,0.546875,0.564260223765432,0.567901234567901,0.560546875,0.544945987654321,0.523847415123457,0.5,0.476152584876543,0.455054012345679,0.439453125,0.432098765432099,0.435739776234568,0.453125,0.487003279320987,0.540123456790124,0.615234375,0.71508487654321,0.842423804012347,1

|

| 37 |

+

FAKE_REVERSE_CUBIC_HERMITE 0,1,0.842423804012346,0.71508487654321,0.615234375,0.540123456790123,0.487003279320988,0.453125,0.435739776234568,0.432098765432099,0.439453125,0.455054012345679,0.476152584876543,0.5,0.523847415123457,0.544945987654321,0.560546875,0.567901234567901,0.564260223765432,0.546875,0.512996720679013,0.459876543209876,0.384765625,0.28491512345679,0.157576195987653,0

|

| 38 |

+

ALL_A 0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0

|

| 39 |

+

ALL_B 1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1
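Each preset row above is a name followed by 26 comma-separated weights. The sketch below parses one row and shows that a few of the curves can be reproduced from simple formulas. The helper names `parse_preset` and `curve` are hypothetical. The reading of the 26 values as one leading value followed by 25 per-block weights (IN00 to IN11, M00, OUT00 to OUT11) is an assumption, and the formulas were inferred from the listed numbers rather than taken from the extension's code.

```python
import math


def parse_preset(line: str) -> tuple[str, list[float]]:
    """Parse one 'name weights' row; hypothetical helper for illustration."""
    name, weights = line.split(None, 1)  # split on the first whitespace run
    values = [float(w) for w in weights.split(",")]
    if len(values) != 26:
        raise ValueError(f"{name}: expected 26 weights, got {len(values)}")
    return name, values


def curve(fn) -> list[float]:
    """Leading 0, then fn sampled at 25 evenly spaced points on [0, 1]."""
    return [0.0] + [fn(i / 24) for i in range(25)]


# Formulas inferred from the listed values (assumption, not the source code).
grad_v = curve(lambda t: abs(2 * t - 1))
smoothstep = curve(lambda t: 3 * t**2 - 2 * t**3)
cosine = curve(lambda t: 0.5 * (1 + math.cos(math.pi * t)))

row = ("GRAD_V 0,1,0.9166666667,0.8333333333,0.75,0.6666666667,0.5833333333,"
       "0.5,0.4166666667,0.3333333333,0.25,0.1666666667,0.0833333333,0,"
       "0.0833333333,0.1666666667,0.25,0.3333333333,0.4166666667,0.5,"
       "0.5833333333,0.6666666667,0.75,0.8333333333,0.9166666667,1.0")
name, values = parse_preset(row)
# Largest deviation between the listed row and the regenerated curve.
print(name, max(abs(a - b) for a, b in zip(values, grad_v)))
```

Under these assumptions, GRAD_A would be `1 - abs(2 * t - 1)` and REVERSE_COSINE would be `0.5 * (1 - cos(pi * t))`, since both rows mirror the curves shown here.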

|

extensions/microsoftexcel-supermerger/scripts/mbwpresets_master.txt

ADDED

|

@@ -0,0 +1,39 @@

|