7bd1acf8301721733c956a62ee480dc281b7213caae348c6d8690ec8224ac24f

- repositories/stable-diffusion-stability-ai/ldm/modules/karlo/kakao/template.py +141 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/__init__.py +0 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/__pycache__/__init__.cpython-310.pyc +0 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/__pycache__/api.cpython-310.pyc +0 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/api.py +170 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__init__.py +0 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__pycache__/__init__.cpython-310.pyc +0 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__pycache__/base_model.cpython-310.pyc +0 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__pycache__/blocks.cpython-310.pyc +0 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__pycache__/dpt_depth.cpython-310.pyc +0 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__pycache__/midas_net.cpython-310.pyc +0 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__pycache__/midas_net_custom.cpython-310.pyc +0 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__pycache__/transforms.cpython-310.pyc +0 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__pycache__/vit.cpython-310.pyc +0 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/base_model.py +16 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/blocks.py +342 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/dpt_depth.py +109 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/midas_net.py +76 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/midas_net_custom.py +128 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/transforms.py +234 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/vit.py +491 -0

- repositories/stable-diffusion-stability-ai/ldm/modules/midas/utils.py +189 -0

- repositories/stable-diffusion-stability-ai/ldm/util.py +207 -0

- repositories/stable-diffusion-stability-ai/modelcard.md +153 -0

- repositories/stable-diffusion-stability-ai/requirements.txt +19 -0

- repositories/stable-diffusion-stability-ai/scripts/gradio/depth2img.py +184 -0

- repositories/stable-diffusion-stability-ai/scripts/gradio/inpainting.py +195 -0

- repositories/stable-diffusion-stability-ai/scripts/gradio/superresolution.py +197 -0

- repositories/stable-diffusion-stability-ai/scripts/img2img.py +279 -0

- repositories/stable-diffusion-stability-ai/scripts/streamlit/depth2img.py +157 -0

- repositories/stable-diffusion-stability-ai/scripts/streamlit/inpainting.py +195 -0

- repositories/stable-diffusion-stability-ai/scripts/streamlit/stableunclip.py +416 -0

- repositories/stable-diffusion-stability-ai/scripts/streamlit/superresolution.py +170 -0

- repositories/stable-diffusion-stability-ai/scripts/tests/test_watermark.py +18 -0

- repositories/stable-diffusion-stability-ai/scripts/txt2img.py +388 -0

- repositories/stable-diffusion-stability-ai/setup.py +13 -0

- requirements-test.txt +3 -0

- requirements.txt +33 -0

- requirements_versions.txt +31 -0

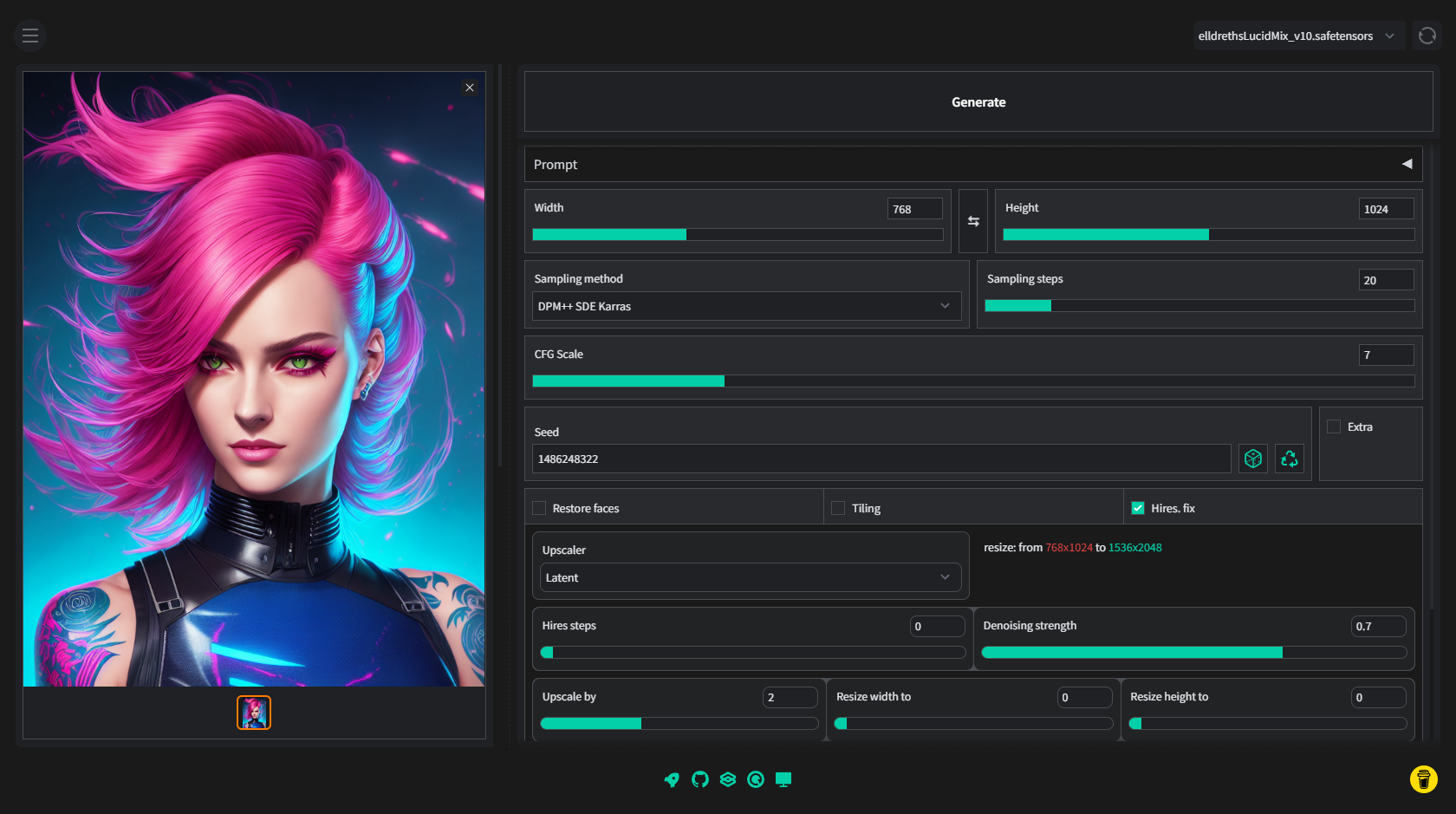

- screenshot.png +0 -0

- script.js +163 -0

- scripts/__pycache__/custom_code.cpython-310.pyc +0 -0

- scripts/__pycache__/img2imgalt.cpython-310.pyc +0 -0

- scripts/__pycache__/loopback.cpython-310.pyc +0 -0

- scripts/__pycache__/outpainting_mk_2.cpython-310.pyc +0 -0

- scripts/__pycache__/poor_mans_outpainting.cpython-310.pyc +0 -0

- scripts/__pycache__/postprocessing_codeformer.cpython-310.pyc +0 -0

- scripts/__pycache__/postprocessing_gfpgan.cpython-310.pyc +0 -0

- scripts/__pycache__/postprocessing_upscale.cpython-310.pyc +0 -0

- scripts/__pycache__/prompt_matrix.cpython-310.pyc +0 -0

repositories/stable-diffusion-stability-ai/ldm/modules/karlo/kakao/template.py

ADDED

@@ -0,0 +1,141 @@

+# ------------------------------------------------------------------------------------
+# Karlo-v1.0.alpha
+# Copyright (c) 2022 KakaoBrain. All Rights Reserved.
+# ------------------------------------------------------------------------------------
+
+import os
+import logging
+import torch
+
+from omegaconf import OmegaConf
+
+from ldm.modules.karlo.kakao.models.clip import CustomizedCLIP, CustomizedTokenizer
+from ldm.modules.karlo.kakao.models.prior_model import PriorDiffusionModel
+from ldm.modules.karlo.kakao.models.decoder_model import Text2ImProgressiveModel
+from ldm.modules.karlo.kakao.models.sr_64_256 import ImprovedSupRes64to256ProgressiveModel
+
+
+SAMPLING_CONF = {
+    "default": {
+        "prior_sm": "25",
+        "prior_n_samples": 1,
+        "prior_cf_scale": 4.0,
+        "decoder_sm": "50",
+        "decoder_cf_scale": 8.0,
+        "sr_sm": "7",
+    },
+    "fast": {
+        "prior_sm": "25",
+        "prior_n_samples": 1,
+        "prior_cf_scale": 4.0,
+        "decoder_sm": "25",
+        "decoder_cf_scale": 8.0,
+        "sr_sm": "7",
+    },
+}
+
+CKPT_PATH = {
+    "prior": "prior-ckpt-step=01000000-of-01000000.ckpt",
+    "decoder": "decoder-ckpt-step=01000000-of-01000000.ckpt",
+    "sr_256": "improved-sr-ckpt-step=1.2M.ckpt",
+}
+
+
+class BaseSampler:
+    _PRIOR_CLASS = PriorDiffusionModel
+    _DECODER_CLASS = Text2ImProgressiveModel
+    _SR256_CLASS = ImprovedSupRes64to256ProgressiveModel
+
+    def __init__(
+        self,
+        root_dir: str,
+        sampling_type: str = "fast",
+    ):
+        self._root_dir = root_dir
+
+        sampling_type = SAMPLING_CONF[sampling_type]
+        self._prior_sm = sampling_type["prior_sm"]
+        self._prior_n_samples = sampling_type["prior_n_samples"]
+        self._prior_cf_scale = sampling_type["prior_cf_scale"]
+
+        assert self._prior_n_samples == 1
+
+        self._decoder_sm = sampling_type["decoder_sm"]
+        self._decoder_cf_scale = sampling_type["decoder_cf_scale"]
+
+        self._sr_sm = sampling_type["sr_sm"]
+
+    def __repr__(self):
+        line = ""
+        line += f"Prior, sampling method: {self._prior_sm}, cf_scale: {self._prior_cf_scale}\n"
+        line += f"Decoder, sampling method: {self._decoder_sm}, cf_scale: {self._decoder_cf_scale}\n"
+        line += f"SR(64->256), sampling method: {self._sr_sm}"
+
+        return line
+
+    def load_clip(self, clip_path: str):
+        clip = CustomizedCLIP.load_from_checkpoint(
+            os.path.join(self._root_dir, clip_path)
+        )
+        clip = torch.jit.script(clip)
+        clip.cuda()
+        clip.eval()
+
+        self._clip = clip
+        self._tokenizer = CustomizedTokenizer()
+
+    def load_prior(
+        self,
+        ckpt_path: str,
+        clip_stat_path: str,
+        prior_config: str = "configs/prior_1B_vit_l.yaml"
+    ):
+        logging.info(f"Loading prior: {ckpt_path}")
+
+        config = OmegaConf.load(prior_config)
+        clip_mean, clip_std = torch.load(
+            os.path.join(self._root_dir, clip_stat_path), map_location="cpu"
+        )
+
+        prior = self._PRIOR_CLASS.load_from_checkpoint(
+            config,
+            self._tokenizer,
+            clip_mean,
+            clip_std,
+            os.path.join(self._root_dir, ckpt_path),
+            strict=True,
+        )
+        prior.cuda()
+        prior.eval()
+        logging.info("done.")
+
+        self._prior = prior
+
+    def load_decoder(self, ckpt_path: str, decoder_config: str = "configs/decoder_900M_vit_l.yaml"):
+        logging.info(f"Loading decoder: {ckpt_path}")
+
+        config = OmegaConf.load(decoder_config)
+        decoder = self._DECODER_CLASS.load_from_checkpoint(
+            config,
+            self._tokenizer,
+            os.path.join(self._root_dir, ckpt_path),
+            strict=True,
+        )
+        decoder.cuda()
+        decoder.eval()
+        logging.info("done.")
+
+        self._decoder = decoder
+
+    def load_sr_64_256(self, ckpt_path: str, sr_config: str = "configs/improved_sr_64_256_1.4B.yaml"):
+        logging.info(f"Loading SR(64->256): {ckpt_path}")
+
+        config = OmegaConf.load(sr_config)
+        sr = self._SR256_CLASS.load_from_checkpoint(
+            config, os.path.join(self._root_dir, ckpt_path), strict=True
+        )
+        sr.cuda()
+        sr.eval()
+        logging.info("done.")
+
+        self._sr_64_256 = sr
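The `BaseSampler` constructor in `template.py` indexes `SAMPLING_CONF` directly, so an unknown `sampling_type` surfaces as a bare `KeyError`, and the `prior_n_samples == 1` requirement is enforced with an `assert`. The following is a minimal sketch of a more explicit lookup, not part of the committed code: `resolve_sampling_preset` is a hypothetical helper name, and the table is copied from the diff so the example runs on its own.

```python
# Hypothetical helper for illustration; not part of the Karlo code in this commit.
# The preset table mirrors SAMPLING_CONF from template.py.
SAMPLING_CONF = {
    "default": {
        "prior_sm": "25",
        "prior_n_samples": 1,
        "prior_cf_scale": 4.0,
        "decoder_sm": "50",
        "decoder_cf_scale": 8.0,
        "sr_sm": "7",
    },
    "fast": {
        "prior_sm": "25",
        "prior_n_samples": 1,
        "prior_cf_scale": 4.0,
        "decoder_sm": "25",
        "decoder_cf_scale": 8.0,
        "sr_sm": "7",
    },
}


def resolve_sampling_preset(name: str) -> dict:
    """Return a copy of the named preset, or raise a descriptive ValueError."""
    try:
        preset = SAMPLING_CONF[name]
    except KeyError:
        known = ", ".join(sorted(SAMPLING_CONF))
        raise ValueError(
            f"unknown sampling_type {name!r}; expected one of: {known}"
        ) from None
    # The sampler in the diff only supports a single prior sample.
    if preset["prior_n_samples"] != 1:
        raise ValueError("only prior_n_samples == 1 is supported")
    # Copy so callers cannot mutate the shared module-level table.
    return dict(preset)
```

In the presets shown in the diff, the only difference between `"default"` and `"fast"` is `decoder_sm` (`"50"` versus `"25"`).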

repositories/stable-diffusion-stability-ai/ldm/modules/midas/__init__.py

ADDED

File without changes

repositories/stable-diffusion-stability-ai/ldm/modules/midas/__pycache__/__init__.cpython-310.pyc

ADDED

Binary file (189 Bytes).

repositories/stable-diffusion-stability-ai/ldm/modules/midas/__pycache__/api.cpython-310.pyc

ADDED

Binary file (3.63 kB).

repositories/stable-diffusion-stability-ai/ldm/modules/midas/api.py

ADDED

@@ -0,0 +1,170 @@

+# based on https://github.com/isl-org/MiDaS
+
+import cv2
+import torch
+import torch.nn as nn
+from torchvision.transforms import Compose
+
+from ldm.modules.midas.midas.dpt_depth import DPTDepthModel
+from ldm.modules.midas.midas.midas_net import MidasNet
+from ldm.modules.midas.midas.midas_net_custom import MidasNet_small
+from ldm.modules.midas.midas.transforms import Resize, NormalizeImage, PrepareForNet
+
+
+ISL_PATHS = {
+    "dpt_large": "midas_models/dpt_large-midas-2f21e586.pt",
+    "dpt_hybrid": "midas_models/dpt_hybrid-midas-501f0c75.pt",
+    "midas_v21": "",
+    "midas_v21_small": "",
+}
+
+
+def disabled_train(self, mode=True):
+    """Overwrite model.train with this function to make sure train/eval mode
+    does not change anymore."""
+    return self
+
+
+def load_midas_transform(model_type):
+    # https://github.com/isl-org/MiDaS/blob/master/run.py
+    # load transform only
+    if model_type == "dpt_large":  # DPT-Large
+        net_w, net_h = 384, 384
+        resize_mode = "minimal"
+        normalization = NormalizeImage(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])
+
+    elif model_type == "dpt_hybrid":  # DPT-Hybrid
+        net_w, net_h = 384, 384
+        resize_mode = "minimal"
+        normalization = NormalizeImage(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])
+
+    elif model_type == "midas_v21":
+        net_w, net_h = 384, 384
+        resize_mode = "upper_bound"
+        normalization = NormalizeImage(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
+
+    elif model_type == "midas_v21_small":
+        net_w, net_h = 256, 256
+        resize_mode = "upper_bound"
+        normalization = NormalizeImage(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
+
+    else:
+        assert False, f"model_type '{model_type}' not implemented, use: --model_type large"
+
+    transform = Compose(
+        [
+            Resize(
+                net_w,
+                net_h,
+                resize_target=None,
+                keep_aspect_ratio=True,
+                ensure_multiple_of=32,
+                resize_method=resize_mode,
+                image_interpolation_method=cv2.INTER_CUBIC,
+            ),
+            normalization,
+            PrepareForNet(),
+        ]
+    )
+
+    return transform
+
+
+def load_model(model_type):
+    # https://github.com/isl-org/MiDaS/blob/master/run.py
+    # load network
+    model_path = ISL_PATHS[model_type]
+    if model_type == "dpt_large":  # DPT-Large
+        model = DPTDepthModel(
+            path=model_path,
+            backbone="vitl16_384",
+            non_negative=True,
+        )
+        net_w, net_h = 384, 384
+        resize_mode = "minimal"
+        normalization = NormalizeImage(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])
+
+    elif model_type == "dpt_hybrid":  # DPT-Hybrid
+        model = DPTDepthModel(
+            path=model_path,
+            backbone="vitb_rn50_384",
+            non_negative=True,
+        )
+        net_w, net_h = 384, 384
+        resize_mode = "minimal"
+        normalization = NormalizeImage(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])
+
+    elif model_type == "midas_v21":
+        model = MidasNet(model_path, non_negative=True)
+        net_w, net_h = 384, 384
+        resize_mode = "upper_bound"
+        normalization = NormalizeImage(
+            mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]
+        )
+
+    elif model_type == "midas_v21_small":
+        model = MidasNet_small(model_path, features=64, backbone="efficientnet_lite3", exportable=True,
+                               non_negative=True, blocks={'expand': True})
+        net_w, net_h = 256, 256
+        resize_mode = "upper_bound"
+        normalization = NormalizeImage(
+            mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]
+        )
+
+    else:
+        print(f"model_type '{model_type}' not implemented, use: --model_type large")
+        assert False
+
+    transform = Compose(
+        [
+            Resize(
+                net_w,
+                net_h,
+                resize_target=None,
+                keep_aspect_ratio=True,
+                ensure_multiple_of=32,
+                resize_method=resize_mode,
+                image_interpolation_method=cv2.INTER_CUBIC,
+            ),
+            normalization,
+            PrepareForNet(),
+        ]
+    )
+
+    return model.eval(), transform
+
+
+class MiDaSInference(nn.Module):
+    MODEL_TYPES_TORCH_HUB = [
+        "DPT_Large",
+        "DPT_Hybrid",
+        "MiDaS_small"
+    ]
+    MODEL_TYPES_ISL = [
+        "dpt_large",
+        "dpt_hybrid",
+        "midas_v21",
+        "midas_v21_small",
+    ]
+
+    def __init__(self, model_type):
+        super().__init__()
+        assert (model_type in self.MODEL_TYPES_ISL)
+        model, _ = load_model(model_type)
+        self.model = model
+        self.model.train = disabled_train
+
+    def forward(self, x):
+        # x in 0..1 as produced by calling self.transform on a 0..1 float64 numpy array
+        # NOTE: we expect that the correct transform has been called during dataloading.
+        with torch.no_grad():
+            prediction = self.model(x)
+            prediction = torch.nn.functional.interpolate(
+                prediction.unsqueeze(1),
+                size=x.shape[2:],
+                mode="bicubic",
+                align_corners=False,
+            )
+        assert prediction.shape == (x.shape[0], 1, x.shape[2], x.shape[3])
+        return prediction
+
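In `api.py`, `load_midas_transform` and `load_model` repeat the same per-model settings (network size, resize mode, normalization constants) in two separate `if`/`elif` chains. One possible way to keep them in sync is a single lookup table, sketched below. This is an illustration only, not the committed code: `TransformSettings`, `TRANSFORM_SETTINGS`, and `transform_settings` are hypothetical names, while the values are taken from the diff.

```python
from typing import Dict, List, NamedTuple


class TransformSettings(NamedTuple):
    """Per-model preprocessing settings (hypothetical container)."""
    net_w: int
    net_h: int
    resize_mode: str
    mean: List[float]
    std: List[float]


# Constants as they appear in api.py.
_HALF = [0.5, 0.5, 0.5]
_MEAN_V21 = [0.485, 0.456, 0.406]
_STD_V21 = [0.229, 0.224, 0.225]

TRANSFORM_SETTINGS: Dict[str, TransformSettings] = {
    "dpt_large": TransformSettings(384, 384, "minimal", _HALF, _HALF),
    "dpt_hybrid": TransformSettings(384, 384, "minimal", _HALF, _HALF),
    "midas_v21": TransformSettings(384, 384, "upper_bound", _MEAN_V21, _STD_V21),
    "midas_v21_small": TransformSettings(256, 256, "upper_bound", _MEAN_V21, _STD_V21),
}


def transform_settings(model_type: str) -> TransformSettings:
    """Look up settings for a model type, raising ValueError if it is unknown."""
    try:
        return TRANSFORM_SETTINGS[model_type]
    except KeyError:
        known = ", ".join(sorted(TRANSFORM_SETTINGS))
        raise ValueError(
            f"model_type {model_type!r} not implemented; expected one of: {known}"
        ) from None
```

With such a table, both functions could build the `Resize` and `NormalizeImage` steps from the same entry, and an unknown `model_type` would raise an exception that still fires when Python runs with assertions disabled (`-O`).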

repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__init__.py

ADDED

File without changes

repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__pycache__/__init__.cpython-310.pyc

ADDED

Binary file (195 Bytes).

repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__pycache__/base_model.cpython-310.pyc

ADDED

Binary file (723 Bytes).

repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__pycache__/blocks.cpython-310.pyc

ADDED

Binary file (7.24 kB).

repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__pycache__/dpt_depth.cpython-310.pyc

ADDED

Binary file (2.95 kB).

repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__pycache__/midas_net.cpython-310.pyc

ADDED

Binary file (2.63 kB).

repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__pycache__/midas_net_custom.cpython-310.pyc

ADDED

Binary file (3.75 kB).

repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__pycache__/transforms.cpython-310.pyc

ADDED

Binary file (5.71 kB).

repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/__pycache__/vit.cpython-310.pyc

ADDED

Binary file (9.4 kB).

repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/base_model.py

ADDED

@@ -0,0 +1,16 @@

+import torch
+
+
+class BaseModel(torch.nn.Module):
+    def load(self, path):
+        """Load model from file.
+
+        Args:
+            path (str): file path
+        """
+        parameters = torch.load(path, map_location=torch.device('cpu'))
+
+        if "optimizer" in parameters:
+            parameters = parameters["model"]
+
+        self.load_state_dict(parameters)
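`BaseModel.load` accepts two checkpoint layouts: a bare state dict, or a training checkpoint that stores the weights under `"model"` next to an `"optimizer"` entry. The sketch below isolates that unwrapping step, using plain dictionaries as stand-ins for what `torch.load` would return. `extract_state_dict` is a hypothetical name used only for this illustration.

```python
from typing import Any, Mapping


def extract_state_dict(checkpoint: Mapping[str, Any]) -> Mapping[str, Any]:
    """Return the weights from either checkpoint layout.

    Mirrors the check in BaseModel.load: the presence of an "optimizer" key
    is treated as the signal that the weights live under "model".
    """
    if "optimizer" in checkpoint:
        return checkpoint["model"]
    return checkpoint


# Stand-ins for loaded checkpoints; real ones would map names to tensors.
bare = {"conv.weight": [1.0, 2.0], "conv.bias": [0.0]}
wrapped = {"model": bare, "optimizer": {"lr": 1e-4}}

weights_from_bare = extract_state_dict(bare)
weights_from_wrapped = extract_state_dict(wrapped)
```

Note that the check keys off `"optimizer"`, not `"model"`, so a checkpoint that has a `"model"` entry but no `"optimizer"` entry would be passed to `load_state_dict` unchanged.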

repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/blocks.py

ADDED

@@ -0,0 +1,342 @@

+import torch
+import torch.nn as nn
+
+from .vit import (
+    _make_pretrained_vitb_rn50_384,
+    _make_pretrained_vitl16_384,
+    _make_pretrained_vitb16_384,
+    forward_vit,
+)
+
+def _make_encoder(backbone, features, use_pretrained, groups=1, expand=False, exportable=True, hooks=None, use_vit_only=False, use_readout="ignore",):
+    if backbone == "vitl16_384":
+        pretrained = _make_pretrained_vitl16_384(
+            use_pretrained, hooks=hooks, use_readout=use_readout
+        )
+        scratch = _make_scratch(
+            [256, 512, 1024, 1024], features, groups=groups, expand=expand
+        )  # ViT-L/16 - 85.0% Top1 (backbone)
+    elif backbone == "vitb_rn50_384":
+        pretrained = _make_pretrained_vitb_rn50_384(
+            use_pretrained,
+            hooks=hooks,
+            use_vit_only=use_vit_only,
+            use_readout=use_readout,
+        )
+        scratch = _make_scratch(
+            [256, 512, 768, 768], features, groups=groups, expand=expand
+        )  # ViT-H/16 - 85.0% Top1 (backbone)
+    elif backbone == "vitb16_384":
+        pretrained = _make_pretrained_vitb16_384(
+            use_pretrained, hooks=hooks, use_readout=use_readout
+        )
+        scratch = _make_scratch(
+            [96, 192, 384, 768], features, groups=groups, expand=expand
+        )  # ViT-B/16 - 84.6% Top1 (backbone)
+    elif backbone == "resnext101_wsl":
+        pretrained = _make_pretrained_resnext101_wsl(use_pretrained)
+        scratch = _make_scratch([256, 512, 1024, 2048], features, groups=groups, expand=expand)  # efficientnet_lite3
+    elif backbone == "efficientnet_lite3":
+        pretrained = _make_pretrained_efficientnet_lite3(use_pretrained, exportable=exportable)
+        scratch = _make_scratch([32, 48, 136, 384], features, groups=groups, expand=expand)  # efficientnet_lite3
+    else:
+        print(f"Backbone '{backbone}' not implemented")
+        assert False
+
+    return pretrained, scratch
+
+
+def _make_scratch(in_shape, out_shape, groups=1, expand=False):
+    scratch = nn.Module()
+
+    out_shape1 = out_shape
+    out_shape2 = out_shape
+    out_shape3 = out_shape
+    out_shape4 = out_shape
+    if expand==True:
+        out_shape1 = out_shape
+        out_shape2 = out_shape*2
+        out_shape3 = out_shape*4
+        out_shape4 = out_shape*8
+
+    scratch.layer1_rn = nn.Conv2d(
+        in_shape[0], out_shape1, kernel_size=3, stride=1, padding=1, bias=False, groups=groups
+    )
+    scratch.layer2_rn = nn.Conv2d(
+        in_shape[1], out_shape2, kernel_size=3, stride=1, padding=1, bias=False, groups=groups
+    )
+    scratch.layer3_rn = nn.Conv2d(
+        in_shape[2], out_shape3, kernel_size=3, stride=1, padding=1, bias=False, groups=groups
+    )
+    scratch.layer4_rn = nn.Conv2d(
+        in_shape[3], out_shape4, kernel_size=3, stride=1, padding=1, bias=False, groups=groups
+    )
+
+    return scratch
+
+
+def _make_pretrained_efficientnet_lite3(use_pretrained, exportable=False):
+    efficientnet = torch.hub.load(
+        "rwightman/gen-efficientnet-pytorch",
+        "tf_efficientnet_lite3",
+        pretrained=use_pretrained,
+        exportable=exportable
+    )
+    return _make_efficientnet_backbone(efficientnet)
+
+
+def _make_efficientnet_backbone(effnet):
+    pretrained = nn.Module()
+
+    pretrained.layer1 = nn.Sequential(
+        effnet.conv_stem, effnet.bn1, effnet.act1, *effnet.blocks[0:2]
+    )
+    pretrained.layer2 = nn.Sequential(*effnet.blocks[2:3])
+    pretrained.layer3 = nn.Sequential(*effnet.blocks[3:5])
+    pretrained.layer4 = nn.Sequential(*effnet.blocks[5:9])
+
+    return pretrained
+
+
+def _make_resnet_backbone(resnet):
+    pretrained = nn.Module()
+    pretrained.layer1 = nn.Sequential(
+        resnet.conv1, resnet.bn1, resnet.relu, resnet.maxpool, resnet.layer1
+    )
+
+    pretrained.layer2 = resnet.layer2
+    pretrained.layer3 = resnet.layer3
+    pretrained.layer4 = resnet.layer4
+
+    return pretrained
+
+
+def _make_pretrained_resnext101_wsl(use_pretrained):
+    resnet = torch.hub.load("facebookresearch/WSL-Images", "resnext101_32x8d_wsl")
+    return _make_resnet_backbone(resnet)
+
+
+
+class Interpolate(nn.Module):
+    """Interpolation module.
+    """
+
+    def __init__(self, scale_factor, mode, align_corners=False):
+        """Init.
+
+        Args:
+            scale_factor (float): scaling
+            mode (str): interpolation mode
+        """
+        super(Interpolate, self).__init__()
+
+        self.interp = nn.functional.interpolate
+        self.scale_factor = scale_factor
+        self.mode = mode
+        self.align_corners = align_corners
+
+    def forward(self, x):
+        """Forward pass.
+
+        Args:
+            x (tensor): input
+
+        Returns:
+            tensor: interpolated data
+        """
+
+        x = self.interp(
+            x, scale_factor=self.scale_factor, mode=self.mode, align_corners=self.align_corners
+        )
+
+        return x
+
+
+class ResidualConvUnit(nn.Module):
+    """Residual convolution module.
+    """
+
+    def __init__(self, features):
+        """Init.
+
+        Args:
+            features (int): number of features
+        """
+        super().__init__()
+
+        self.conv1 = nn.Conv2d(
+            features, features, kernel_size=3, stride=1, padding=1, bias=True
+        )
+
+        self.conv2 = nn.Conv2d(
+            features, features, kernel_size=3, stride=1, padding=1, bias=True
+        )
+
+        self.relu = nn.ReLU(inplace=True)
+
+    def forward(self, x):
+        """Forward pass.
+
+        Args:
+            x (tensor): input
+
+        Returns:
+            tensor: output
+        """
+        out = self.relu(x)
+        out = self.conv1(out)
+        out = self.relu(out)
+        out = self.conv2(out)
+
+        return out + x
+
+
+class FeatureFusionBlock(nn.Module):
+    """Feature fusion block.
+    """
+
+    def __init__(self, features):
+        """Init.
+
+        Args:
+            features (int): number of features
+        """
+        super(FeatureFusionBlock, self).__init__()
+
+        self.resConfUnit1 = ResidualConvUnit(features)
+        self.resConfUnit2 = ResidualConvUnit(features)
+
+    def forward(self, *xs):
+        """Forward pass.
+
+        Returns:
+            tensor: output
+        """
+        output = xs[0]
+
+        if len(xs) == 2:
+            output += self.resConfUnit1(xs[1])
+
+        output = self.resConfUnit2(output)
+
+        output = nn.functional.interpolate(
+            output, scale_factor=2, mode="bilinear", align_corners=True
+        )
+
+        return output
+
+
+
+

| 231 |

+

class ResidualConvUnit_custom(nn.Module):

|

| 232 |

+

"""Residual convolution module.

|

| 233 |

+

"""

|

| 234 |

+

|

| 235 |

+

def __init__(self, features, activation, bn):

|

| 236 |

+

"""Init.

|

| 237 |

+

|

| 238 |

+

Args:

|

| 239 |

+

features (int): number of features

|

| 240 |

+

"""

|

| 241 |

+

super().__init__()

|

| 242 |

+

|

| 243 |

+

self.bn = bn

|

| 244 |

+

|

| 245 |

+

self.groups=1

|

| 246 |

+

|

| 247 |

+

self.conv1 = nn.Conv2d(

|

| 248 |

+

features, features, kernel_size=3, stride=1, padding=1, bias=True, groups=self.groups

|

| 249 |

+

)

|

| 250 |

+

|

| 251 |

+

self.conv2 = nn.Conv2d(

|

| 252 |

+

features, features, kernel_size=3, stride=1, padding=1, bias=True, groups=self.groups

|

| 253 |

+

)

|

| 254 |

+

|

| 255 |

+

if self.bn==True:

|

| 256 |

+

self.bn1 = nn.BatchNorm2d(features)

|

| 257 |

+

self.bn2 = nn.BatchNorm2d(features)

|

| 258 |

+

|

| 259 |

+

self.activation = activation

|

| 260 |

+

|

| 261 |

+

self.skip_add = nn.quantized.FloatFunctional()

|

| 262 |

+

|

| 263 |

+

def forward(self, x):

|

| 264 |

+

"""Forward pass.

|

| 265 |

+

|

| 266 |

+

Args:

|

| 267 |

+

x (tensor): input

|

| 268 |

+

|

| 269 |

+

Returns:

|

| 270 |

+

tensor: output

|

| 271 |

+

"""

|

| 272 |

+

|

| 273 |

+

out = self.activation(x)

|

| 274 |

+

out = self.conv1(out)

|

| 275 |

+

if self.bn==True:

|

| 276 |

+

out = self.bn1(out)

|

| 277 |

+

|

| 278 |

+

out = self.activation(out)

|

| 279 |

+

out = self.conv2(out)

|

| 280 |

+

if self.bn==True:

|

| 281 |

+

out = self.bn2(out)

|

| 282 |

+

|

| 283 |

+

if self.groups > 1:

|

| 284 |

+

out = self.conv_merge(out)

|

| 285 |

+

|

| 286 |

+

return self.skip_add.add(out, x)

|

| 287 |

+

|

| 288 |

+

# return out + x

|

| 289 |

+

|

| 290 |

+

|

| 291 |

+

class FeatureFusionBlock_custom(nn.Module):

|

| 292 |

+

"""Feature fusion block.

|

| 293 |

+

"""

|

| 294 |

+

|

| 295 |

+

def __init__(self, features, activation, deconv=False, bn=False, expand=False, align_corners=True):

|

| 296 |

+

"""Init.

|

| 297 |

+

|

| 298 |

+

Args:

|

| 299 |

+

features (int): number of features

|

| 300 |

+

"""

|

| 301 |

+

super(FeatureFusionBlock_custom, self).__init__()

|

| 302 |

+

|

| 303 |

+

self.deconv = deconv

|

| 304 |

+

self.align_corners = align_corners

|

| 305 |

+

|

| 306 |

+

self.groups=1

|

| 307 |

+

|

| 308 |

+

self.expand = expand

|

| 309 |

+

out_features = features

|

| 310 |

+

if self.expand==True:

|

| 311 |

+

out_features = features//2

|

| 312 |

+

|

| 313 |

+

self.out_conv = nn.Conv2d(features, out_features, kernel_size=1, stride=1, padding=0, bias=True, groups=1)

|

| 314 |

+

|

| 315 |

+

self.resConfUnit1 = ResidualConvUnit_custom(features, activation, bn)

|

| 316 |

+

self.resConfUnit2 = ResidualConvUnit_custom(features, activation, bn)

|

| 317 |

+

|

| 318 |

+

self.skip_add = nn.quantized.FloatFunctional()

|

| 319 |

+

|

| 320 |

+

def forward(self, *xs):

|

| 321 |

+

"""Forward pass.

|

| 322 |

+

|

| 323 |

+

Returns:

|

| 324 |

+

tensor: output

|

| 325 |

+

"""

|

| 326 |

+

output = xs[0]

|

| 327 |

+

|

| 328 |

+

if len(xs) == 2:

|

| 329 |

+

res = self.resConfUnit1(xs[1])

|

| 330 |

+

output = self.skip_add.add(output, res)

|

| 331 |

+

# output += res

|

| 332 |

+

|

| 333 |

+

output = self.resConfUnit2(output)

|

| 334 |

+

|

| 335 |

+

output = nn.functional.interpolate(

|

| 336 |

+

output, scale_factor=2, mode="bilinear", align_corners=self.align_corners

|

| 337 |

+

)

|

| 338 |

+

|

| 339 |

+

output = self.out_conv(output)

|

| 340 |

+

|

| 341 |

+

return output

|

| 342 |

+

|
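The fusion blocks in `blocks.py` rest on simple shape arithmetic: a 3x3 convolution with stride 1 and padding 1 preserves the spatial size, and bilinear interpolation with `scale_factor=2` doubles it. The following is a minimal pure-Python sketch of that arithmetic, not code from the repository. The helper names `conv2d_out_size` and `fusion_block_out_shape` are hypothetical, and the formula is the standard convolution output-size formula for dilation 1.

```python
def conv2d_out_size(size, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution (dilation 1)."""
    return (size + 2 * padding - kernel_size) // stride + 1


def fusion_block_out_shape(channels, height, width, expand=False):
    """Trace the (C, H, W) shape through a FeatureFusionBlock_custom-style block.

    Hypothetical helper: it mirrors the layer settings shown in the diff
    (3x3 convs with padding 1, x2 bilinear upsampling, 1x1 out_conv).
    """
    # Up to two residual units sit on a path, each with two
    # 3x3 / stride 1 / padding 1 convs; all of them preserve the size.
    for _ in range(4):
        height = conv2d_out_size(height, kernel_size=3, stride=1, padding=1)
        width = conv2d_out_size(width, kernel_size=3, stride=1, padding=1)
    # Bilinear interpolation with scale_factor=2 doubles both spatial dims.
    height, width = height * 2, width * 2
    # The 1x1 out_conv halves the channel count only when expand=True.
    out_channels = channels // 2 if expand else channels
    height = conv2d_out_size(height, kernel_size=1)
    width = conv2d_out_size(width, kernel_size=1)
    return out_channels, height, width


print(fusion_block_out_shape(256, 12, 12))
print(fusion_block_out_shape(256, 12, 12, expand=True))
```

Under these settings each fusion block changes only the resolution (and, with `expand=True`, the channel count), which is what lets consecutive blocks be chained in a decoder.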

repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/dpt_depth.py

ADDED

|

@@ -0,0 +1,109 @@

|

| 1 |

+

import torch

|

| 2 |

+

import torch.nn as nn

|

| 3 |

+

import torch.nn.functional as F

|

| 4 |

+

|

| 5 |

+

from .base_model import BaseModel

|

| 6 |

+

from .blocks import (

|

| 7 |

+

FeatureFusionBlock,

|

| 8 |

+

FeatureFusionBlock_custom,

|

| 9 |

+

Interpolate,

|

| 10 |

+

_make_encoder,

|

| 11 |

+

forward_vit,

|

| 12 |

+

)

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

def _make_fusion_block(features, use_bn):

|

| 16 |

+

return FeatureFusionBlock_custom(

|

| 17 |

+

features,

|

| 18 |

+

nn.ReLU(False),

|

| 19 |

+

deconv=False,

|

| 20 |

+

bn=use_bn,

|

| 21 |

+

expand=False,

|

| 22 |

+

align_corners=True,

|

| 23 |

+

)

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

class DPT(BaseModel):

|

| 27 |

+

def __init__(

|

| 28 |

+

self,

|

| 29 |

+

head,

|

| 30 |

+

features=256,

|

| 31 |

+

backbone="vitb_rn50_384",

|

| 32 |

+

readout="project",

|

| 33 |

+

channels_last=False,

|

| 34 |

+

use_bn=False,

|

| 35 |

+

):

|

| 36 |

+

|

| 37 |

+

super(DPT, self).__init__()

|

| 38 |

+

|

| 39 |

+

self.channels_last = channels_last

|

| 40 |

+

|

| 41 |

+

hooks = {

|

| 42 |

+

"vitb_rn50_384": [0, 1, 8, 11],

|

| 43 |

+

"vitb16_384": [2, 5, 8, 11],

|

| 44 |

+

"vitl16_384": [5, 11, 17, 23],

|

| 45 |

+

}

|

| 46 |

+

|

| 47 |

+

# Instantiate backbone and reassemble blocks

|

| 48 |

+

self.pretrained, self.scratch = _make_encoder(

|

| 49 |

+

backbone,

|

| 50 |

+

features,

|

| 51 |

+

False,  # Set to True if you want to train from scratch (uses ImageNet weights)

|

| 52 |

+

groups=1,

|

| 53 |

+

expand=False,

|

| 54 |

+

exportable=False,

|

| 55 |

+

hooks=hooks[backbone],

|

| 56 |

+

use_readout=readout,

|

| 57 |

+

)

|

| 58 |

+

|

| 59 |

+

self.scratch.refinenet1 = _make_fusion_block(features, use_bn)

|

| 60 |

+

self.scratch.refinenet2 = _make_fusion_block(features, use_bn)

|

| 61 |

+

self.scratch.refinenet3 = _make_fusion_block(features, use_bn)

|

| 62 |

+

self.scratch.refinenet4 = _make_fusion_block(features, use_bn)

|

| 63 |

+

|

| 64 |

+

self.scratch.output_conv = head

|

| 65 |

+

|

| 66 |

+

|

| 67 |

+

def forward(self, x):

|

| 68 |

+

if self.channels_last == True:

|

| 69 |

+

x = x.contiguous(memory_format=torch.channels_last)

|

| 70 |

+

|

| 71 |

+

layer_1, layer_2, layer_3, layer_4 = forward_vit(self.pretrained, x)

|

| 72 |

+

|

| 73 |

+

layer_1_rn = self.scratch.layer1_rn(layer_1)

|

| 74 |

+

layer_2_rn = self.scratch.layer2_rn(layer_2)

|

| 75 |

+

layer_3_rn = self.scratch.layer3_rn(layer_3)

|

| 76 |

+

layer_4_rn = self.scratch.layer4_rn(layer_4)

|

| 77 |

+

|

| 78 |

+

path_4 = self.scratch.refinenet4(layer_4_rn)

|

| 79 |

+

path_3 = self.scratch.refinenet3(path_4, layer_3_rn)

|

| 80 |

+

path_2 = self.scratch.refinenet2(path_3, layer_2_rn)

|

| 81 |

+

path_1 = self.scratch.refinenet1(path_2, layer_1_rn)

|

| 82 |

+

|

| 83 |

+

out = self.scratch.output_conv(path_1)

|

| 84 |

+

|

| 85 |

+

return out

|

| 86 |

+

|

| 87 |

+

|

| 88 |

+

class DPTDepthModel(DPT):

|

| 89 |

+

def __init__(self, path=None, non_negative=True, **kwargs):

|

| 90 |

+

features = kwargs["features"] if "features" in kwargs else 256

|

| 91 |

+

|

| 92 |

+

head = nn.Sequential(

|

| 93 |

+

nn.Conv2d(features, features // 2, kernel_size=3, stride=1, padding=1),

|

| 94 |

+

Interpolate(scale_factor=2, mode="bilinear", align_corners=True),

|

| 95 |

+

nn.Conv2d(features // 2, 32, kernel_size=3, stride=1, padding=1),

|

| 96 |

+

nn.ReLU(True),

|

| 97 |

+

nn.Conv2d(32, 1, kernel_size=1, stride=1, padding=0),

|

| 98 |

+

nn.ReLU(True) if non_negative else nn.Identity(),

|

| 99 |

+

nn.Identity(),

|

| 100 |

+

)

|

| 101 |

+

|

| 102 |

+

super().__init__(head, **kwargs)

|

| 103 |

+

|

| 104 |

+

if path is not None:

|

| 105 |

+

self.load(path)

|

| 106 |

+

|

| 107 |

+

def forward(self, x):

|

| 108 |

+

return super().forward(x).squeeze(dim=1)

|

| 109 |

+

|
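`DPT.forward` fuses the four feature maps top-down: `refinenet4` processes the coarsest map alone, and each later block adds the next finer map to the previous output, which has already been upsampled by 2. The sketch below traces only the spatial sizes in plain Python. It assumes the four reassembled maps sit at 1/4, 1/8, 1/16, and 1/32 of a 384-pixel input. That stride layout is an assumption for illustration and is not stated in this diff, and `trace_decoder_sizes` is a hypothetical helper.

```python
def trace_decoder_sizes(input_size=384, strides=(4, 8, 16, 32)):
    """Trace spatial sizes through a DPT-style top-down decoder.

    Assumption (not stated in the diff): layer_1..layer_4 have the given
    strides relative to the input. Every fusion block upsamples by 2.
    """
    layer_sizes = [input_size // s for s in strides]  # layer_1 .. layer_4
    sizes = {}

    # refinenet4 takes only the coarsest map, then upsamples x2.
    path = layer_sizes[3] * 2
    sizes["path_4"] = path

    # refinenet3..1 add the skip feature to the incoming path, so both
    # must already have the same spatial size before the x2 upsampling.
    for level in (3, 2, 1):
        skip = layer_sizes[level - 1]
        if skip != path:
            raise ValueError(f"size mismatch at refinenet{level}: {path} vs {skip}")
        path = path * 2
        sizes[f"path_{level}"] = path

    # The depth head contains one more x2 Interpolate layer.
    sizes["out"] = path * 2
    return sizes


print(trace_decoder_sizes())
```

With the assumed strides, the final size equals the input size, which is consistent with the head's extra `Interpolate(scale_factor=2, ...)` layer. A stride layout whose neighbouring levels do not differ by a factor of 2 raises the `ValueError`, mirroring the shape mismatch the element-wise add would hit.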

repositories/stable-diffusion-stability-ai/ldm/modules/midas/midas/midas_net.py

ADDED

|

@@ -0,0 +1,76 @@

|

| 1 |

+

"""MidasNet: Network for monocular depth estimation trained by mixing several datasets.

|

| 2 |

+

This file contains code that is adapted from

|

| 3 |

+

https://github.com/thomasjpfan/pytorch_refinenet/blob/master/pytorch_refinenet/refinenet/refinenet_4cascade.py

|

| 4 |

+

"""

|

| 5 |

+

import torch

|

| 6 |

+

import torch.nn as nn

|

| 7 |

+

|

| 8 |

+

from .base_model import BaseModel

|

| 9 |

+

from .blocks import FeatureFusionBlock, Interpolate, _make_encoder

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

class MidasNet(BaseModel):

|

| 13 |

+

"""Network for monocular depth estimation.

|

| 14 |

+

"""

|

| 15 |

+

|

| 16 |

+

def __init__(self, path=None, features=256, non_negative=True):

|

| 17 |

+

"""Init.

|

| 18 |

+

|

| 19 |

+

Args:

|

| 20 |

+

path (str, optional): Path to saved model. Defaults to None.

|

| 21 |

+

features (int, optional): Number of features. Defaults to 256.

|

| 22 |

+

non_negative (bool, optional): If True, apply a final ReLU so the output is non-negative. Defaults to True.

|

| 23 |

+

"""

|

| 24 |

+

print("Loading weights: ", path)

|

| 25 |

+

|

| 26 |

+

super(MidasNet, self).__init__()

|

| 27 |

+

|

| 28 |

+

use_pretrained = False if path is None else True

|

| 29 |

+

|

| 30 |

+

self.pretrained, self.scratch = _make_encoder(backbone="resnext101_wsl", features=features, use_pretrained=use_pretrained)

|

| 31 |

+

|

| 32 |

+

self.scratch.refinenet4 = FeatureFusionBlock(features)

|

| 33 |

+

self.scratch.refinenet3 = FeatureFusionBlock(features)

|

| 34 |

+

self.scratch.refinenet2 = FeatureFusionBlock(features)

|

| 35 |

+

self.scratch.refinenet1 = FeatureFusionBlock(features)

|

| 36 |

+

|

| 37 |

+

self.scratch.output_conv = nn.Sequential(

|

| 38 |

+

nn.Conv2d(features, 128, kernel_size=3, stride=1, padding=1),

|

| 39 |

+

Interpolate(scale_factor=2, mode="bilinear"),

|

| 40 |

+

nn.Conv2d(128, 32, kernel_size=3, stride=1, padding=1),

|

| 41 |

+

nn.ReLU(True),

|

| 42 |

+

nn.Conv2d(32, 1, kernel_size=1, stride=1, padding=0),

|

| 43 |

+

nn.ReLU(True) if non_negative else nn.Identity(),

|

| 44 |

+

)

|

| 45 |

+

|

| 46 |