---
license: apache-2.0
tags:
- object-detection
- vision
widget:
- src: >-
    https://huggingface.co/thejagstudio/TrolexFabricDay2Model/resolve/main/results.png
  example_title: Result
pipeline_tag: image-segmentation
---

Model Card for YOLOv8 Defect Segmentation Model

Model Details

Model Description

This YOLOv8 model segments defects on fabric. It detects and outlines defects such as tears, holes, stains, and surface irregularities. It is built on the YOLO (You Only Look Once) architecture, a single-stage design that is fast enough for real-time object detection and segmentation.

- Developed by: Ebest

- Model type: Object Detection and Segmentation

- Language(s): Python, PyTorch

- License: apache-2.0

- Finetuned from model: YOLOv8

Model Sources

- Repository: https://github.com/TheJagStudio/pipeliner

Uses

Direct Use

This model can be used directly to detect and segment defects on fabric surfaces, either in real time (for example, on a video stream) or on static images.

Downstream Use

This model can be fine-tuned for specific fabric types or defect categories, and integrated into quality control systems in textile industries.
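As one illustration of quality-control integration, the sketch below turns segmentation polygons into a pass/fail decision based on the fraction of the image covered by defects. The function names, the `max_defect_fraction` threshold, and the decision rule itself are hypothetical; a real inspection line would set its own acceptance criteria.

```python
def polygon_area(points):
    """Area of a simple polygon given as (x, y) vertices (shoelace formula)."""
    area = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        area += x1 * y2 - x2 * y1
    return abs(area) / 2.0


def inspect(polygons, image_width, image_height, max_defect_fraction=0.01):
    """Pass or reject one image from its defect polygons.

    max_defect_fraction is a hypothetical threshold, not a value taken from
    this model card. Overlapping polygons are counted twice, so the fraction
    is an upper bound on the true defect coverage.
    """
    defect_area = sum(polygon_area(p) for p in polygons)
    fraction = defect_area / (image_width * image_height)
    return {"defect_fraction": fraction, "passed": fraction <= max_defect_fraction}
```

The polygons are expected in pixel coordinates, one list of vertices per detected defect, which is the form most segmentation runtimes can export.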

Out-of-Scope Use

This model is not intended for non-textile surfaces, and it may perform poorly on images with highly complex backgrounds.

Bias, Risks, and Limitations

The model's performance may vary based on factors such as lighting conditions, fabric texture, and defect severity. It may struggle with detecting subtle defects or distinguishing defects from intricate fabric patterns.

Recommendations

Users should validate the model's performance on their specific dataset and consider augmenting the training data with diverse examples to improve generalization.

How to Get Started with the Model

To get started, install the dependencies listed under Software, load the trained weights into a YOLOv8-compatible runtime such as the `ultralytics` Python package, and run inference on fabric images. If you plan to fine-tune the model, see Training Data for the kind of annotated images it was trained on.
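A minimal inference sketch follows, assuming the `ultralytics` package is installed and the trained weights have been downloaded locally. The file names `best.pt` and `fabric_sample.jpg` are placeholders, and the class labels depend on the trained weights; none of these are confirmed by this card.

```python
from collections import Counter


def count_defects(class_ids, names):
    """Count detections per class name.

    class_ids: iterable of class indices (floats are accepted, because
    Ultralytics stores them in a float tensor).
    names: dict mapping class index to label.
    """
    return dict(Counter(names[int(c)] for c in class_ids))


def segment_defects(weights_path, image_path, conf=0.25):
    """Run the segmentation model on one image and summarize the output."""
    # Imported lazily so count_defects works without ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(weights_path)
    result = model.predict(source=image_path, conf=conf)[0]

    # One polygon (array of pixel coordinates) per detected defect;
    # result.masks is None when nothing is detected.
    polygons = result.masks.xy if result.masks is not None else []
    counts = count_defects(result.boxes.cls.tolist(), result.names)
    return polygons, counts


# Example call (both paths are placeholders):
# polygons, counts = segment_defects("best.pt", "fabric_sample.jpg")
```

The `conf` argument is the confidence threshold below which detections are discarded; 0.25 is only a starting point and should be tuned on your own fabric images.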

Training Details

Training Data

The model was trained on a dataset comprising images of various fabric types with annotated defect regions. The dataset includes examples of tears, holes, stains, and other common fabric defects.

Training Procedure

Training used data augmentation (random rotations, flips, and scaling) to improve robustness. The training set combined labeled images with synthetically generated defect images.

Training Hyperparameters

- Training regime: YOLOv8 architecture with stochastic gradient descent (SGD) optimizer

- Learning rate: 0.005

- Batch size: 16

- Epochs: 300
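The hyperparameters above could map onto an Ultralytics training call roughly as sketched below. The optimizer, learning rate, batch size, and epoch count come from this card; the augmentation magnitudes, the base checkpoint `yolov8n-seg.pt`, and the dataset file `fabric_defects.yaml` are assumptions, since the card does not state them.

```python
def build_train_args(data_yaml, device=0):
    """Collect training arguments for an Ultralytics YOLOv8 run."""
    return {
        "data": data_yaml,
        # Values stated in the model card.
        "optimizer": "SGD",
        "lr0": 0.005,  # initial learning rate
        "batch": 16,
        "epochs": 300,
        # Augmentation settings: the card names rotations, flips, and
        # scaling, but these magnitudes are assumed, not documented.
        "degrees": 10.0,  # random rotation range in degrees
        "fliplr": 0.5,    # probability of a horizontal flip
        "flipud": 0.5,    # probability of a vertical flip
        "scale": 0.5,     # random scaling gain
        "device": device,
    }


def train(base_weights="yolov8n-seg.pt", data_yaml="fabric_defects.yaml"):
    """Fine-tune a YOLOv8 segmentation checkpoint (names are placeholders)."""
    # Imported lazily so build_train_args works without ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(base_weights)
    return model.train(**build_train_args(data_yaml))
```

Keeping the arguments in a plain dictionary makes it easy to log the exact configuration alongside the resulting weights.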

Evaluation

Testing Data, Factors & Metrics

Testing Data

The model was evaluated on a separate test set comprising fabric images with ground truth defect annotations.

Metrics

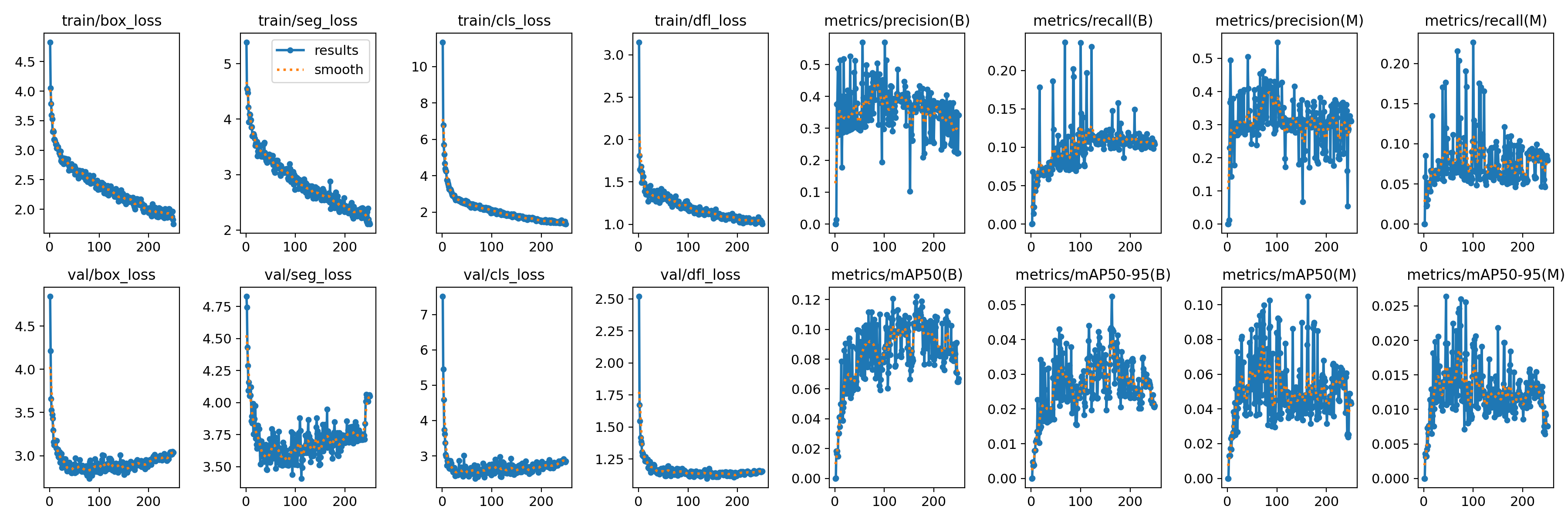

Evaluation metrics include precision, recall, and intersection over union (IoU) for defect segmentation accuracy.
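To make the three metrics concrete, the sketch below computes them pixel by pixel for a predicted binary mask against a ground-truth mask. This is an illustrative definition only; the reported numbers may have been computed per instance rather than per pixel, and the function name is hypothetical.

```python
def mask_metrics(pred, truth):
    """Pixel-level precision, recall, and IoU for two binary masks.

    pred and truth are equally sized 2D lists of 0/1 values.
    """
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1  # defect pixel correctly predicted
            elif p:
                fp += 1  # predicted defect where there is none
            elif t:
                fn += 1  # missed defect pixel
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    union = tp + fp + fn
    # Two empty masks agree perfectly, so IoU is defined as 1.0 here.
    iou = tp / union if union else 1.0
    return precision, recall, iou


pred = [[1, 1, 0],
        [0, 1, 0]]
truth = [[1, 0, 0],
         [0, 1, 1]]
print(mask_metrics(pred, truth))  # precision 2/3, recall 2/3, IoU 0.5
```

In this example two pixels overlap, one predicted pixel is wrong, and one true pixel is missed, so the intersection is 2 and the union is 4.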

Results

Environmental Impact

Carbon emissions from training and inference can be estimated with the Machine Learning Impact calculator, which takes the hardware type, hours used, cloud provider, and compute region as inputs. Of these, only the hardware is documented in this card (see Compute Infrastructure).
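The underlying arithmetic is simple: energy is power multiplied by time, and emissions are energy multiplied by the carbon intensity of the electricity grid. The sketch below shows that calculation; the function name and every input value in the example are hypothetical placeholders, not measurements for this model.

```python
def estimate_emissions_kg(power_watts, hours, grid_kg_per_kwh, pue=1.0):
    """Estimate CO2-equivalent emissions in kilograms.

    power_watts: average power draw of the hardware.
    hours: total run time.
    grid_kg_per_kwh: carbon intensity of the local electricity grid.
    pue: power usage effectiveness of the facility (1.0 means no overhead).
    """
    energy_kwh = power_watts / 1000.0 * hours * pue
    return energy_kwh * grid_kg_per_kwh


# Placeholder inputs: a 130 W draw for 10 hours on a 0.4 kg/kWh grid
# uses 1.3 kWh and emits about 0.52 kg CO2-equivalent.
print(round(estimate_emissions_kg(130, 10, 0.4), 2))
```

Replace the placeholders with the measured power draw, the actual training time, and the carbon intensity published for your region.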

Technical Specifications

Model Architecture and Objective

The model architecture is based on the YOLO (You Only Look Once) framework, which enables efficient real-time object detection and segmentation. The objective is to accurately localize and segment defects on fabric surfaces.

Compute Infrastructure

Hardware

- GPU: NVIDIA RTX 3050

Software

- Framework: PyTorch, CUDA

- Dependencies: Python