Upload folder using huggingface_hub

- LICENSE +23 -0

- README.md +137 -0

- feature_extractor/preprocessor_config.json +27 -0

- figures/collage_2.jpg +0 -0

- figures/collage_4.jpg +0 -0

- figures/comparison.png +0 -0

- figures/controlnet-canny.jpg +0 -0

- figures/controlnet-face.jpg +0 -0

- figures/controlnet-paint.jpg +0 -0

- figures/fernando.jpg +0 -0

- figures/fernando_original.jpg +0 -0

- figures/image-to-image-example-rodent.jpg +0 -0

- figures/image-variations-example-headset.jpg +0 -0

- figures/model-overview.jpg +0 -0

- figures/original.jpg +0 -0

- figures/reconstructed.jpg +0 -0

- figures/text-to-image-example-penguin.jpg +0 -0

- image_encoder/config.json +23 -0

- image_encoder/model.safetensors +3 -0

- model_index.json +30 -0

- prior/config.json +61 -0

- prior/diffusion_pytorch_model.safetensors +3 -0

- scheduler/scheduler_config.json +6 -0

- text_encoder/config.json +25 -0

- text_encoder/model.safetensors +3 -0

- tokenizer/merges.txt +0 -0

- tokenizer/special_tokens_map.json +30 -0

- tokenizer/tokenizer.json +0 -0

- tokenizer/tokenizer_config.json +30 -0

- tokenizer/vocab.json +0 -0

LICENSE

ADDED

|

@@ -0,0 +1,23 @@

|

|

|

|

|

|

|

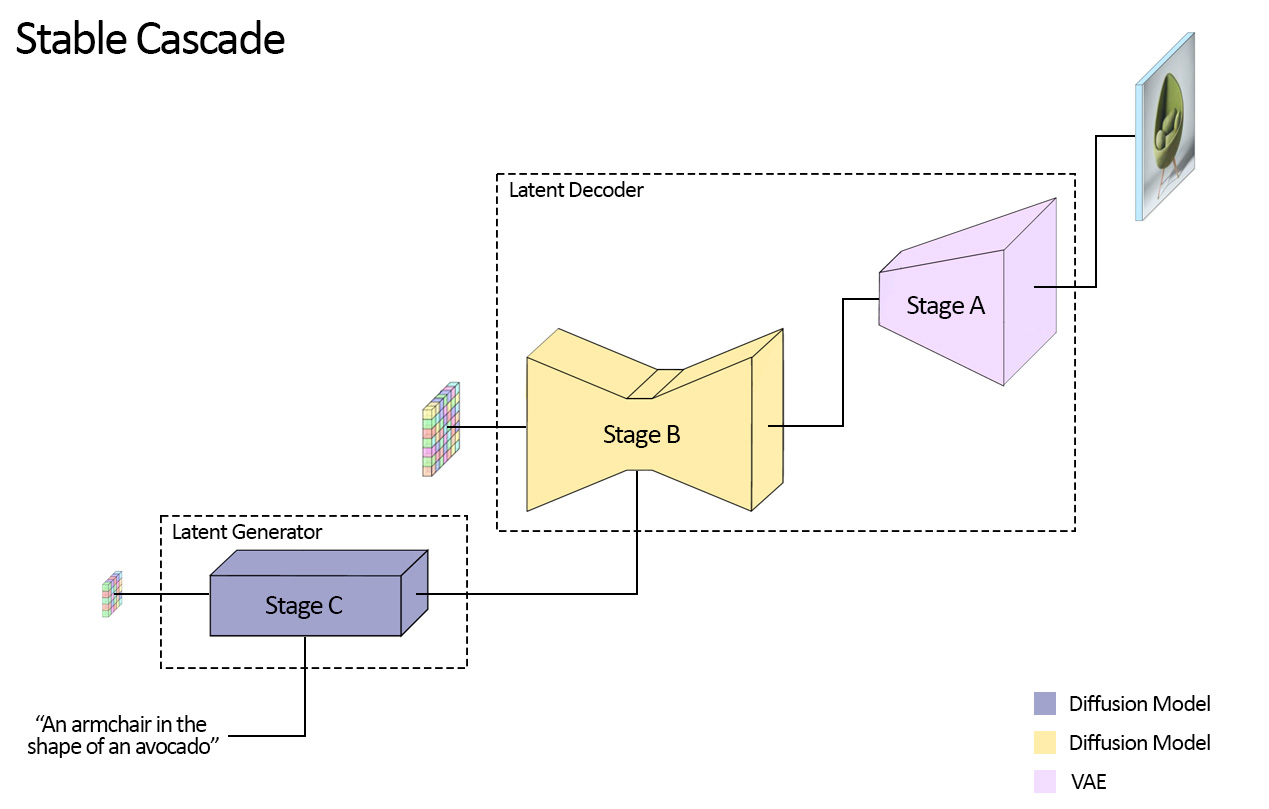

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

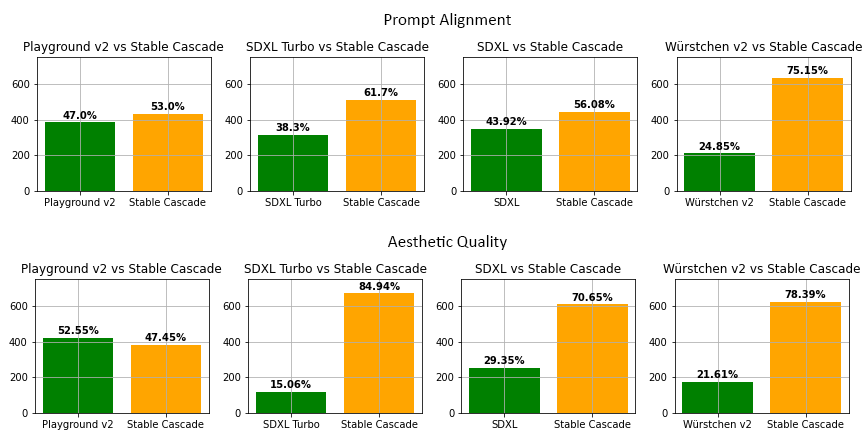

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Stable Cascade NON-COMMERCIAL COMMUNITY LICENSE AGREEMENT

|

| 2 |

+

Dated: February 10, 2024

|

| 3 |

+

|

| 4 |

+

“AUP” means the Stability AI Acceptable Use Policy available at https://stability.ai/use-policy, as may be updated from time to time.

|

| 5 |

+

|

| 6 |

+

"Agreement" means the terms and conditions for use, reproduction, distribution and modification of the Software Products set forth herein.

|

| 7 |

+

"Derivative Work(s)” means (a) any derivative work of the Software Products as recognized by U.S. copyright laws and (b) any modifications to a Model, and any other model created which is based on or derived from the Model or the Model’s output. For clarity, Derivative Works do not include the output of any Model.

|

| 8 |

+

“Documentation” means any specifications, manuals, documentation, and other written information provided by Stability AI related to the Software.

|

| 9 |

+

"Licensee" or "you" means you, or your employer or any other person or entity (if you are entering into this Agreement on such person or entity's behalf), of the age required under applicable laws, rules or regulations to provide legal consent and that has legal authority to bind your employer or such other person or entity if you are entering in this Agreement on their behalf.

|

| 10 |

+

"Stability AI" or "we" means Stability AI Ltd.

|

| 11 |

+

"Software" means, collectively, Stability AI’s proprietary models and algorithms, including machine-learning models, trained model weights and other elements of the foregoing, made available under this Agreement.

|

| 12 |

+

“Software Products” means Software and Documentation.

|

| 13 |

+

By using or distributing any portion or element of the Software Products, you agree to be bound by this Agreement.

|

| 14 |

+

License Rights and Redistribution.

|

| 15 |

+

Subject to your compliance with this Agreement, the AUP (which is hereby incorporated herein by reference), and the Documentation, Stability AI grants you a non-exclusive, worldwide, non-transferable, non-sublicensable, revocable, royalty free and limited license under Stability AI’s intellectual property or other rights owned by Stability AI embodied in the Software Products to reproduce, distribute, and create Derivative Works of the Software Products for purposes other than commercial or production use.

|

| 16 |

+

b. If you distribute or make the Software Products, or any Derivative Works thereof, available to a third party, the Software Products, Derivative Works, or any portion thereof, respectively, will remain subject to this Agreement and you must (i) provide a copy of this Agreement to such third party, and (ii) retain the following attribution notice within a "Notice" text file distributed as a part of such copies: "Stable Cascade is licensed under the Stable Cascade Research License, Copyright (c) Stability AI Ltd. All Rights Reserved.” If you create a Derivative Work of a Software Product, you may add your own attribution notices to the Notice file included with the Software Product, provided that you clearly indicate which attributions apply to the Software Product and you must state in the NOTICE file that you changed the Software Product and how it was modified.

|

| 17 |

+

2. Disclaimer of Warranty. UNLESS REQUIRED BY APPLICABLE LAW, THE SOFTWARE PRODUCTS AND ANY OUTPUT AND RESULTS THEREFROM ARE PROVIDED ON AN "AS IS" BASIS, WITHOUT WARRANTIES OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, WITHOUT LIMITATION, ANY WARRANTIES OF TITLE, NON-INFRINGEMENT, MERCHANTABILITY, OR FITNESS FOR A PARTICULAR PURPOSE. YOU ARE SOLELY RESPONSIBLE FOR DETERMINING THE APPROPRIATENESS OF USING OR REDISTRIBUTING THE SOFTWARE PRODUCTS AND ASSUME ANY RISKS ASSOCIATED WITH YOUR USE OF THE SOFTWARE PRODUCTS AND ANY OUTPUT AND RESULTS.

|

| 18 |

+

3. Limitation of Liability. IN NO EVENT WILL STABILITY AI OR ITS AFFILIATES BE LIABLE UNDER ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, TORT, NEGLIGENCE, PRODUCTS LIABILITY, OR OTHERWISE, ARISING OUT OF THIS AGREEMENT, FOR ANY LOST PROFITS OR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, INCIDENTAL, EXEMPLARY OR PUNITIVE DAMAGES, EVEN IF STABILITY AI OR ITS AFFILIATES HAVE BEEN ADVISED OF THE POSSIBILITY OF ANY OF THE FOREGOING.

|

| 19 |

+

3. Intellectual Property.

|

| 20 |

+

a. No trademark licenses are granted under this Agreement, and in connection with the Software Products, neither Stability AI nor Licensee may use any name or mark owned by or associated with the other or any of its affiliates, except as required for reasonable and customary use in describing and redistributing the Software Products.

|

| 21 |

+

Subject to Stability AI’s ownership of the Software Products and Derivative Works made by or for Stability AI, with respect to any Derivative Works that are made by you, as between you and Stability AI, you are and will be the owner of such Derivative Works.

|

| 22 |

+

If you institute litigation or other proceedings against Stability AI (including a cross-claim or counterclaim in a lawsuit) alleging that the Software Products or associated outputs or results, or any portion of any of the foregoing, constitutes infringement of intellectual property or other rights owned or licensable by you, then any licenses granted to you under this Agreement shall terminate as of the date such litigation or claim is filed or instituted. You will indemnify and hold harmless Stability AI from and against any claim by any third party arising out of or related to your use or distribution of the Software Products in violation of this Agreement.

|

| 23 |

+

4. Term and Termination. The term of this Agreement will commence upon your acceptance of this Agreement or access to the Software Products and will continue in full force and effect until terminated in accordance with the terms and conditions herein. Stability AI may terminate this Agreement if you are in breach of any term or condition of this Agreement. Upon termination of this Agreement, you shall delete and cease use of the Software Products. Sections 2-4 shall survive the termination of this Agreement.

|

README.md

ADDED

|

@@ -0,0 +1,137 @@


| 1 |

+

---

|

| 2 |

+

pipeline_tag: text-to-image

|

| 3 |

+

license: other

|

| 4 |

+

license_name: stable-cascade-nc-community

|

| 5 |

+

license_link: LICENSE

|

| 6 |

+

---

|

| 7 |

+

|

| 8 |

+

# Stable Cascade Prior

|

| 9 |

+

|

| 10 |

+

<!-- Provide a quick summary of what the model is/does. -->

|

| 11 |

+

<img src="figures/collage_1.jpg" width="800">

|

| 12 |

+

|

| 13 |

+

This model is built upon the [Würstchen](https://openreview.net/forum?id=gU58d5QeGv) architecture and its main

|

| 14 |

+

difference from other models such as Stable Diffusion is that it works in a much smaller latent space. Why is this

|

| 15 |

+

important? The smaller the latent space, the **faster** you can run inference and the **cheaper** the training becomes.

|

| 16 |

+

How small is the latent space? Stable Diffusion uses a compression factor of 8, resulting in a 1024x1024 image being

|

| 17 |

+

encoded to 128x128. Stable Cascade achieves a compression factor of 42, meaning that it is possible to encode a

|

| 18 |

+

1024x1024 image to 24x24, while maintaining crisp reconstructions. The text-conditional model is then trained in the

|

| 19 |

+

highly compressed latent space. Previous versions of this architecture achieved a 16x cost reduction over Stable

|

| 20 |

+

Diffusion 1.5. <br> <br>

|

| 21 |

+

Therefore, this kind of model is well suited to use cases where efficiency is important. Furthermore, all known extensions

|

| 22 |

+

like finetuning, LoRA, ControlNet, IP-Adapter, LCM etc. are possible with this method as well.

|
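
The compression arithmetic in the paragraph above can be checked with a few lines of Python. This is a minimal sketch, not part of the released code: the helper name `latent_side` is hypothetical, rounding up is an assumption, and the factor 42.67 is taken from the `resolution_multiple` value in this commit's `model_index.json`.

```python
import math


def latent_side(image_side: int, compression_factor: float) -> int:
    """Side length of the latent grid for a square image (rounded up)."""
    return math.ceil(image_side / compression_factor)


sd_side = latent_side(1024, 8)       # Stable Diffusion: factor 8
sc_side = latent_side(1024, 42.67)   # Stable Cascade: factor ~42

# How many times fewer latent positions Stable Cascade works with.
position_ratio = (sd_side ** 2) / (sc_side ** 2)

print(sd_side, sc_side, round(position_ratio, 1))
```

Under these assumptions a 1024 x 1024 image maps to a 128 x 128 grid at factor 8 and a 24 x 24 grid at factor 42.67, so the text-conditional model sees far fewer spatial positions.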

| 23 |

+

|

| 24 |

+

## Model Details

|

| 25 |

+

|

| 26 |

+

### Model Description

|

| 27 |

+

|

| 28 |

+

Stable Cascade is a diffusion model trained to generate images given a text prompt.

|

| 29 |

+

|

| 30 |

+

- **Developed by:** Stability AI

|

| 31 |

+

- **Funded by:** Stability AI

|

| 32 |

+

- **Model type:** Generative text-to-image model

|

| 33 |

+

|

| 34 |

+

### Model Sources

|

| 35 |

+

|

| 36 |

+

For research purposes, we recommend our `StableCascade` GitHub repository (https://github.com/Stability-AI/StableCascade).

|

| 37 |

+

|

| 38 |

+

- **Repository:** https://github.com/Stability-AI/StableCascade

|

| 39 |

+

- **Paper:** https://openreview.net/forum?id=gU58d5QeGv

|

| 40 |

+

|

| 41 |

+

### Model Overview

|

| 42 |

+

Stable Cascade consists of three models: Stage A, Stage B and Stage C, representing a cascade to generate images,

|

| 43 |

+

hence the name "Stable Cascade".

|

| 44 |

+

Stages A and B are used to compress images, similar to the job of the VAE in Stable Diffusion.

|

| 45 |

+

However, with this setup, a much higher compression of images can be achieved. While the Stable Diffusion models use a

|

| 46 |

+

spatial compression factor of 8, encoding an image with resolution of 1024 x 1024 to 128 x 128, Stable Cascade achieves

|

| 47 |

+

a compression factor of 42. This encodes a 1024 x 1024 image to 24 x 24, while being able to accurately decode the

|

| 48 |

+

image. This comes with the great benefit of cheaper training and inference. Furthermore, Stage C is responsible

|

| 49 |

+

for generating the small 24 x 24 latents given a text prompt. The following picture shows this visually.

|

| 50 |

+

|

| 51 |

+

<img src="figures/model-overview.jpg" width="600">

|

| 52 |

+

|

| 53 |

+

For this release, we are providing two checkpoints for Stage C, two for Stage B and one for Stage A. Stage C comes with

|

| 54 |

+

a 1 billion and 3.6 billion parameter version, but we highly recommend using the 3.6 billion version, as most work was

|

| 55 |

+

put into its finetuning. The two versions for Stage B amount to 700 million and 1.5 billion parameters. Both achieve

|

| 56 |

+

great results; however, the 1.5 billion version excels at reconstructing small and fine details. Therefore, you will achieve the

|

| 57 |

+

best results if you use the larger variant of each. Lastly, Stage A contains 20 million parameters and is fixed due to

|

| 58 |

+

its small size.

|
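
To get a feel for what these parameter counts mean on disk, the sketch below estimates checkpoint sizes. It assumes the weights are stored in bfloat16 at 2 bytes per parameter (the configs in this commit list `"torch_dtype": "bfloat16"` for the CLIP encoders; applying it to every stage is an assumption), and the helper name `weight_bytes` is hypothetical.

```python
BYTES_PER_PARAM_BF16 = 2  # assumption: weights stored as bfloat16


def weight_bytes(num_params: float) -> int:
    """Approximate size of the raw weights in bytes."""
    return int(num_params * BYTES_PER_PARAM_BF16)


# Parameter counts quoted in the model overview.
stage_sizes = {
    "Stage C (3.6B)": weight_bytes(3.6e9),
    "Stage C (1B)": weight_bytes(1.0e9),
    "Stage B (1.5B)": weight_bytes(1.5e9),
    "Stage B (700M)": weight_bytes(700e6),
    "Stage A (20M)": weight_bytes(20e6),
}

# Size of prior/diffusion_pytorch_model.safetensors as listed in this commit.
prior_file_bytes = 7178377816
implied_params_billion = prior_file_bytes / BYTES_PER_PARAM_BF16 / 1e9

for name, size in stage_sizes.items():
    print(f"{name}: ~{size / 1e9:.2f} GB")
print(f"prior file implies ~{implied_params_billion:.2f}B parameters")
```

The estimate for the 3.6 billion parameter Stage C is close to the size of the prior weights file in this commit, which suggests this repository holds the larger Stage C variant.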

| 59 |

+

|

| 60 |

+

## Evaluation

|

| 61 |

+

<img height="300" src="figures/comparison.png"/>

|

| 62 |

+

According to our evaluation, Stable Cascade performs best in both prompt alignment and aesthetic quality in almost all

|

| 63 |

+

comparisons. The above picture shows the results from a human evaluation using a mix of parti-prompts (link) and

|

| 64 |

+

aesthetic prompts. Specifically, Stable Cascade (30 inference steps) was compared against Playground v2 (50 inference

|

| 65 |

+

steps), SDXL (50 inference steps), SDXL Turbo (1 inference step) and Würstchen v2 (30 inference steps).

|

| 66 |

+

|

| 67 |

+

## Code Example

|

| 68 |

+

```shell

|

| 69 |

+

# Install `diffusers` from this branch while the PR is a work in progress

|

| 70 |

+

pip install git+https://github.com/kashif/diffusers.git@wuerstchen-v3

|

| 71 |

+

```

|

| 72 |

+

|

| 73 |

+

```python

|

| 74 |

+

import torch

|

| 75 |

+

from diffusers import StableCascadeDecoderPipeline, StableCascadePriorPipeline

|

| 76 |

+

|

| 77 |

+

device = "cuda"

|

| 78 |

+

dtype = torch.bfloat16

|

| 79 |

+

num_images_per_prompt = 2

|

| 80 |

+

|

| 81 |

+

prior = StableCascadePriorPipeline.from_pretrained("stabilityai/stable-cascade-prior", torch_dtype=dtype).to(device)

|

| 82 |

+

decoder = StableCascadeDecoderPipeline.from_pretrained("stabilityai/stable-cascade", torch_dtype=dtype).to(device)

|

| 83 |

+

|

| 84 |

+

prompt = "Anthropomorphic cat dressed as a pilot"

|

| 85 |

+

negative_prompt = ""

|

| 86 |

+

|

| 87 |

+

prior_output = prior(

|

| 88 |

+

prompt=prompt,

|

| 89 |

+

height=1024,

|

| 90 |

+

width=1024,

|

| 91 |

+

negative_prompt=negative_prompt,

|

| 92 |

+

guidance_scale=4.0,

|

| 93 |

+

num_images_per_prompt=num_images_per_prompt,

|

| 94 |

+

)

|

| 95 |

+

decoder_output = decoder(

|

| 96 |

+

image_embeddings=prior_output.image_embeddings,

|

| 97 |

+

prompt=prompt,

|

| 98 |

+

negative_prompt=negative_prompt,

|

| 99 |

+

guidance_scale=0.0,

|

| 100 |

+

output_type="pil",

|

| 101 |

+

).images

|

| 102 |

+

```

|

| 103 |

+

|

| 104 |

+

## Uses

|

| 105 |

+

|

| 106 |

+

### Direct Use

|

| 107 |

+

|

| 108 |

+

The model is intended for research purposes for now. Possible research areas and tasks include

|

| 109 |

+

|

| 110 |

+

- Research on generative models.

|

| 111 |

+

- Safe deployment of models which have the potential to generate harmful content.

|

| 112 |

+

- Probing and understanding the limitations and biases of generative models.

|

| 113 |

+

- Generation of artworks and use in design and other artistic processes.

|

| 114 |

+

- Applications in educational or creative tools.

|

| 115 |

+

|

| 116 |

+

Excluded uses are described below.

|

| 117 |

+

|

| 118 |

+

### Out-of-Scope Use

|

| 119 |

+

|

| 120 |

+

The model was not trained to produce factual or true representations of people or events,

|

| 121 |

+

and therefore using the model to generate such content is out-of-scope for the abilities of this model.

|

| 122 |

+

The model should not be used in any way that violates Stability AI's [Acceptable Use Policy](https://stability.ai/use-policy).

|

| 123 |

+

|

| 124 |

+

## Limitations and Bias

|

| 125 |

+

|

| 126 |

+

### Limitations

|

| 127 |

+

- Faces and people in general may not be generated properly.

|

| 128 |

+

- The autoencoding part of the model is lossy.

|

| 129 |

+

|

| 130 |

+

|

| 131 |

+

### Recommendations

|

| 132 |

+

|

| 133 |

+

The model is intended for research purposes only.

|

| 134 |

+

|

| 135 |

+

## How to Get Started with the Model

|

| 136 |

+

|

| 137 |

+

Check out https://github.com/Stability-AI/StableCascade

|

feature_extractor/preprocessor_config.json

ADDED

|

@@ -0,0 +1,27 @@


| 1 |

+

{

|

| 2 |

+

"crop_size": {

|

| 3 |

+

"height": 224,

|

| 4 |

+

"width": 224

|

| 5 |

+

},

|

| 6 |

+

"do_center_crop": true,

|

| 7 |

+

"do_convert_rgb": true,

|

| 8 |

+

"do_normalize": true,

|

| 9 |

+

"do_rescale": true,

|

| 10 |

+

"do_resize": true,

|

| 11 |

+

"image_mean": [

|

| 12 |

+

0.48145466,

|

| 13 |

+

0.4578275,

|

| 14 |

+

0.40821073

|

| 15 |

+

],

|

| 16 |

+

"image_processor_type": "CLIPImageProcessor",

|

| 17 |

+

"image_std": [

|

| 18 |

+

0.26862954,

|

| 19 |

+

0.26130258,

|

| 20 |

+

0.27577711

|

| 21 |

+

],

|

| 22 |

+

"resample": 3,

|

| 23 |

+

"rescale_factor": 0.00392156862745098,

|

| 24 |

+

"size": {

|

| 25 |

+

"shortest_edge": 224

|

| 26 |

+

}

|

| 27 |

+

}

|
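
The `do_rescale` and `do_normalize` settings above amount to simple per-channel arithmetic. The following sketch applies them to a single RGB pixel using only the values from this config; the function name `normalize_pixel` is hypothetical, and resizing and center cropping are left out.

```python
# Values copied from preprocessor_config.json.
IMAGE_MEAN = (0.48145466, 0.4578275, 0.40821073)
IMAGE_STD = (0.26862954, 0.26130258, 0.27577711)
RESCALE_FACTOR = 0.00392156862745098  # equal to 1 / 255


def normalize_pixel(rgb):
    """Rescale 0..255 channel values to 0..1, then standardize per channel."""
    return tuple(
        (channel * RESCALE_FACTOR - mean) / std
        for channel, mean, std in zip(rgb, IMAGE_MEAN, IMAGE_STD)
    )


white = normalize_pixel((255, 255, 255))
black = normalize_pixel((0, 0, 0))
print(white)
print(black)
```

A white pixel ends up above zero and a black pixel below zero in every channel, which is the zero-centered range the CLIP image encoder expects.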

figures/collage_2.jpg

ADDED

|

figures/collage_4.jpg

ADDED

|

figures/comparison.png

ADDED

|

figures/controlnet-canny.jpg

ADDED

|

figures/controlnet-face.jpg

ADDED

|

figures/controlnet-paint.jpg

ADDED

|

figures/fernando.jpg

ADDED

|

figures/fernando_original.jpg

ADDED

|

figures/image-to-image-example-rodent.jpg

ADDED

|

figures/image-variations-example-headset.jpg

ADDED

|

figures/model-overview.jpg

ADDED

|

figures/original.jpg

ADDED

|

figures/reconstructed.jpg

ADDED

|

figures/text-to-image-example-penguin.jpg

ADDED

|

image_encoder/config.json

ADDED

|

@@ -0,0 +1,23 @@


| 1 |

+

{

|

| 2 |

+

"_name_or_path": "StableCascade-prior/image_encoder",

|

| 3 |

+

"architectures": [

|

| 4 |

+

"CLIPVisionModelWithProjection"

|

| 5 |

+

],

|

| 6 |

+

"attention_dropout": 0.0,

|

| 7 |

+

"dropout": 0.0,

|

| 8 |

+

"hidden_act": "quick_gelu",

|

| 9 |

+

"hidden_size": 1024,

|

| 10 |

+

"image_size": 224,

|

| 11 |

+

"initializer_factor": 1.0,

|

| 12 |

+

"initializer_range": 0.02,

|

| 13 |

+

"intermediate_size": 4096,

|

| 14 |

+

"layer_norm_eps": 1e-05,

|

| 15 |

+

"model_type": "clip_vision_model",

|

| 16 |

+

"num_attention_heads": 16,

|

| 17 |

+

"num_channels": 3,

|

| 18 |

+

"num_hidden_layers": 24,

|

| 19 |

+

"patch_size": 14,

|

| 20 |

+

"projection_dim": 768,

|

| 21 |

+

"torch_dtype": "bfloat16",

|

| 22 |

+

"transformers_version": "4.38.0.dev0"

|

| 23 |

+

}

|
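
A few derived quantities follow directly from this config. The sketch below computes them; the only fact not stated in the file is that a CLIP vision transformer prepends one class token to the patch sequence.

```python
# Values copied from image_encoder/config.json.
image_size = 224
patch_size = 14
hidden_size = 1024
num_attention_heads = 16

patches_per_side = image_size // patch_size   # patches along each image edge
num_patches = patches_per_side ** 2           # patches per image
sequence_length = num_patches + 1             # plus one class token
head_dim = hidden_size // num_attention_heads # width of each attention head

print(patches_per_side, num_patches, sequence_length, head_dim)
```

So each 224 x 224 input becomes a sequence of 257 tokens of width 1024, which the projection head then maps to a 768-dimensional image embedding (`projection_dim`).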

image_encoder/model.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:e4b33d864f89a793357a768cb07d0dc18d6a14e6664f4110a0d535ca9ba78da8

|

| 3 |

+

size 607980488

|
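
The three lines above are a Git LFS pointer: the repository stores this small text stub while the actual weights live in LFS storage. A minimal sketch of reading such a pointer follows; the function name `parse_lfs_pointer` is hypothetical and the parser only handles the three keys shown in this commit.

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse a Git LFS pointer made of 'key value' lines."""
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    algorithm, digest = fields["oid"].split(":", 1)
    return {
        "version": fields["version"],
        "hash_algorithm": algorithm,
        "digest": digest,
        "size": int(fields["size"]),
    }


pointer_text = """\
version https://git-lfs.github.com/spec/v1
oid sha256:e4b33d864f89a793357a768cb07d0dc18d6a14e6664f4110a0d535ca9ba78da8
size 607980488
"""

pointer = parse_lfs_pointer(pointer_text)
print(pointer["hash_algorithm"], pointer["size"])
```

The `size` field is the byte length of the real file, and the `oid` digest can be compared against a SHA-256 hash of the downloaded weights to confirm the download is intact.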

model_index.json

ADDED

|

@@ -0,0 +1,30 @@


| 1 |

+

{

|

| 2 |

+

"_class_name": "StableCascadePriorPipeline",

|

| 3 |

+

"_diffusers_version": "0.26.0.dev0",

|

| 4 |

+

"_name_or_path": "StableCascade-prior/",

|

| 5 |

+

"feature_extractor": [

|

| 6 |

+

"transformers",

|

| 7 |

+

"CLIPImageProcessor"

|

| 8 |

+

],

|

| 9 |

+

"image_encoder": [

|

| 10 |

+

"transformers",

|

| 11 |

+

"CLIPVisionModelWithProjection"

|

| 12 |

+

],

|

| 13 |

+

"prior": [

|

| 14 |

+

"stable_cascade",

|

| 15 |

+

"StableCascadeUnet"

|

| 16 |

+

],

|

| 17 |

+

"resolution_multiple": 42.67,

|

| 18 |

+

"scheduler": [

|

| 19 |

+

"diffusers",

|

| 20 |

+

"DDPMWuerstchenScheduler"

|

| 21 |

+

],

|

| 22 |

+

"text_encoder": [

|

| 23 |

+

"transformers",

|

| 24 |

+

"CLIPTextModelWithProjection"

|

| 25 |

+

],

|

| 26 |

+

"tokenizer": [

|

| 27 |

+

"transformers",

|

| 28 |

+

"CLIPTokenizerFast"

|

| 29 |

+

]

|

| 30 |

+

}

|
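
In `model_index.json`, each pipeline component is recorded as a two-element list of library name and class name, while scalar entries hold pipeline settings. The sketch below separates the two using only the standard library. It illustrates the file layout and is not how `diffusers` itself loads a pipeline; the function name `list_components` is hypothetical.

```python
import json

# Contents of model_index.json from this commit.
MODEL_INDEX = """
{
  "_class_name": "StableCascadePriorPipeline",
  "_diffusers_version": "0.26.0.dev0",
  "_name_or_path": "StableCascade-prior/",
  "feature_extractor": ["transformers", "CLIPImageProcessor"],
  "image_encoder": ["transformers", "CLIPVisionModelWithProjection"],
  "prior": ["stable_cascade", "StableCascadeUnet"],
  "resolution_multiple": 42.67,
  "scheduler": ["diffusers", "DDPMWuerstchenScheduler"],
  "text_encoder": ["transformers", "CLIPTextModelWithProjection"],
  "tokenizer": ["transformers", "CLIPTokenizerFast"]
}
"""


def list_components(index: dict) -> dict:
    """Map component name -> (library, class) for two-element list entries."""
    return {
        name: tuple(value)
        for name, value in index.items()
        if isinstance(value, list) and len(value) == 2
    }


index = json.loads(MODEL_INDEX)
components = list_components(index)
for name, (library, class_name) in sorted(components.items()):
    print(f"{name}: {library}.{class_name}")
```

Each component name also matches a subfolder in this commit (`prior/`, `scheduler/`, `text_encoder/`, and so on), which is where the corresponding config and weights are stored.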

prior/config.json

ADDED

|

@@ -0,0 +1,61 @@


| 1 |

+

{

|

| 2 |

+

"_class_name": "StableCascadeUnet",

|

| 3 |

+

"_diffusers_version": "0.26.0.dev0",

|

| 4 |

+

"_name_or_path": "StableCascade-prior/prior",

|

| 5 |

+

"block_repeat": [

|

| 6 |

+

[

|

| 7 |

+

1,

|

| 8 |

+

1

|

| 9 |

+

],

|

| 10 |

+

[

|

| 11 |

+

1,

|

| 12 |

+

1

|

| 13 |

+

]

|

| 14 |

+

],

|

| 15 |

+

"blocks": [

|

| 16 |

+

[

|

| 17 |

+

8,

|

| 18 |

+

24

|

| 19 |

+

],

|

| 20 |

+

[

|

| 21 |

+

24,

|

| 22 |

+

8

|

| 23 |

+

]

|

| 24 |

+

],

|

| 25 |

+

"c_clip_img": 768,

|

| 26 |

+

"c_clip_seq": 4,

|

| 27 |

+

"c_clip_text": 1280,

|

| 28 |

+

"c_clip_text_pooled": 1280,

|

| 29 |

+

"c_cond": 2048,

|

| 30 |

+

"c_effnet": null,

|

| 31 |

+

"c_hidden": [

|

| 32 |

+

2048,

|

| 33 |

+

2048

|

| 34 |

+

],

|

| 35 |

+

"c_in": 16,

|

| 36 |

+

"c_out": 16,

|

| 37 |

+

"c_pixels": null,

|

| 38 |

+

"c_r": 64,

|

| 39 |

+

"dropout": [

|

| 40 |

+

0.1,

|

| 41 |

+

0.1

|

| 42 |

+

],

|

| 43 |

+

"kernel_size": 3,

|

| 44 |

+

"level_config": [

|

| 45 |

+

"CTA",

|

| 46 |

+

"CTA"

|

| 47 |

+

],

|

| 48 |

+

"nhead": [

|

| 49 |

+

32,

|

| 50 |

+

32

|

| 51 |

+

],

|

| 52 |

+

"patch_size": 1,

|

| 53 |

+

"self_attn": true,

|

| 54 |

+

"switch_level": [

|

| 55 |

+

false

|

| 56 |

+

],

|

| 57 |

+

"t_conds": [

|

| 58 |

+

"sca",

|

| 59 |

+

"crp"

|

| 60 |

+

]

|

| 61 |

+

}

|
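
Several widths in this config appear to line up with the CLIP encoder configs in the same commit. The sketch below checks that numerically. The pairing of fields (for example `c_clip_text` with the text encoder's `hidden_size`) is an assumption based on the names and matching values, not something this commit states, and the helper name `check_conditioning_widths` is hypothetical.

```python
# Values copied from prior/config.json, text_encoder/config.json
# and image_encoder/config.json in this commit.
prior_cfg = {"c_clip_text": 1280, "c_clip_text_pooled": 1280, "c_clip_img": 768}
text_encoder_cfg = {"hidden_size": 1280, "projection_dim": 1280}
image_encoder_cfg = {"hidden_size": 1024, "projection_dim": 768}


def check_conditioning_widths(prior: dict, text: dict, image: dict) -> dict:
    """Compare assumed field pairs; True means the widths agree."""
    return {
        "text_hidden": prior["c_clip_text"] == text["hidden_size"],
        "text_pooled": prior["c_clip_text_pooled"] == text["projection_dim"],
        "image_embed": prior["c_clip_img"] == image["projection_dim"],
    }


report = check_conditioning_widths(prior_cfg, text_encoder_cfg, image_encoder_cfg)
print(report)
```

A check like this can be useful when swapping in a different text or image encoder, since a width mismatch would surface before any weights are loaded.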

prior/diffusion_pytorch_model.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:44a4cd9540f327f2fb4ac09179e4e87912a01cdb1b3b86c79f0f853976fb4c98

|

| 3 |

+

size 7178377816

|

scheduler/scheduler_config.json

ADDED

|

@@ -0,0 +1,6 @@


| 1 |

+

{

|

| 2 |

+

"_class_name": "DDPMWuerstchenScheduler",

|

| 3 |

+

"_diffusers_version": "0.26.0.dev0",

|

| 4 |

+

"s": 0.008,

|

| 5 |

+

"scaler": 1.0

|

| 6 |

+

}

|
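
The value `s = 0.008` matches the small offset used in the widely known cosine noise schedule. The sketch below implements that standard schedule in plain Python. Reading `s` as this offset is an assumption, and the actual `DDPMWuerstchenScheduler` may differ in details such as clamping and how `scaler` is applied (with `scaler` equal to 1.0 it is ignored here).

```python
import math

S = 0.008  # offset copied from scheduler_config.json


def alpha_cumprod(t: float, s: float = S) -> float:
    """Cumulative signal level at time t in [0, 1] under a cosine schedule."""

    def f(u: float) -> float:
        return math.cos((u + s) / (1 + s) * math.pi / 2) ** 2

    # Dividing by f(0) makes the schedule start at exactly 1.
    return f(t) / f(0.0)


for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"t={t:.2f}  alpha_cumprod={alpha_cumprod(t):.4f}")
```

The signal level starts at 1 for `t = 0` (clean latents) and falls smoothly towards 0 at `t = 1` (pure noise); the offset keeps the earliest steps from changing too little.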

text_encoder/config.json

ADDED

|

@@ -0,0 +1,25 @@


| 1 |

+

{

|

| 2 |

+

"_name_or_path": "StableCascade-prior/text_encoder",

|

| 3 |

+

"architectures": [

|

| 4 |

+

"CLIPTextModelWithProjection"

|

| 5 |

+

],

|

| 6 |

+

"attention_dropout": 0.0,

|

| 7 |

+

"bos_token_id": 49406,

|

| 8 |

+

"dropout": 0.0,

|

| 9 |

+

"eos_token_id": 49407,

|

| 10 |

+

"hidden_act": "gelu",

|

| 11 |

+

"hidden_size": 1280,

|

| 12 |

+

"initializer_factor": 1.0,

|

| 13 |

+

"initializer_range": 0.02,

|

| 14 |

+

"intermediate_size": 5120,

|

| 15 |

+

"layer_norm_eps": 1e-05,

|

| 16 |

+

"max_position_embeddings": 77,

|

| 17 |

+

"model_type": "clip_text_model",

|

| 18 |

+

"num_attention_heads": 20,

|

| 19 |

+

"num_hidden_layers": 32,

|

| 20 |

+

"pad_token_id": 1,

|

| 21 |

+

"projection_dim": 1280,

|

| 22 |

+

"torch_dtype": "bfloat16",

|

| 23 |

+

"transformers_version": "4.38.0.dev0",

|

| 24 |

+

"vocab_size": 49408

|

| 25 |

+

}

|

text_encoder/model.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:260e0127aca3c89db813637ae659ebb822cb07af71fedc16cbd980e9518dfdcd

|

| 3 |

+

size 1389382688

|

tokenizer/merges.txt

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

tokenizer/special_tokens_map.json

ADDED

|

@@ -0,0 +1,30 @@


| 1 |

+

{

|

| 2 |

+

"bos_token": {

|

| 3 |

+

"content": "<|startoftext|>",

|

| 4 |

+

"lstrip": false,

|

| 5 |

+

"normalized": true,

|

| 6 |

+

"rstrip": false,

|

| 7 |

+

"single_word": false

|

| 8 |

+

},

|

| 9 |

+

"eos_token": {

|

| 10 |

+

"content": "<|endoftext|>",

|

| 11 |

+

"lstrip": false,

|

| 12 |

+

"normalized": false,

|

| 13 |

+

"rstrip": false,

|

| 14 |

+

"single_word": false

|

| 15 |

+

},

|

| 16 |

+

"pad_token": {

|

| 17 |

+

"content": "<|endoftext|>",

|

| 18 |

+

"lstrip": false,

|

| 19 |

+

"normalized": false,

|

| 20 |

+

"rstrip": false,

|

| 21 |

+

"single_word": false

|

| 22 |

+

},

|

| 23 |

+

"unk_token": {

|

| 24 |

+

"content": "<|endoftext|>",

|

| 25 |

+

"lstrip": false,

|

| 26 |

+

"normalized": false,

|

| 27 |

+

"rstrip": false,

|

| 28 |

+

"single_word": false

|

| 29 |

+

}

|

| 30 |

+

}

|

tokenizer/tokenizer.json

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

tokenizer/tokenizer_config.json

ADDED

|

@@ -0,0 +1,30 @@


| 1 |

+

{

|

| 2 |

+

"add_prefix_space": false,

|

| 3 |

+

"added_tokens_decoder": {

|

| 4 |

+

"49406": {

|

| 5 |

+

"content": "<|startoftext|>",

|

| 6 |

+

"lstrip": false,

|

| 7 |

+

"normalized": true,

|

| 8 |

+

"rstrip": false,

|

| 9 |

+

"single_word": false,

|

| 10 |

+

"special": true

|

| 11 |

+

},

|

| 12 |

+

"49407": {

|

| 13 |

+

"content": "<|endoftext|>",

|

| 14 |

+

"lstrip": false,

|

| 15 |

+

"normalized": false,

|

| 16 |

+

"rstrip": false,

|

| 17 |

+

"single_word": false,

|

| 18 |

+

"special": true

|

| 19 |

+

}

|

| 20 |

+

},

|

| 21 |

+

"bos_token": "<|startoftext|>",

|

| 22 |

+

"clean_up_tokenization_spaces": true,

|

| 23 |

+

"do_lower_case": true,

|

| 24 |

+

"eos_token": "<|endoftext|>",

|

| 25 |

+

"errors": "replace",

|

| 26 |

+

"model_max_length": 77,

|

| 27 |

+

"pad_token": "<|endoftext|>",

|

| 28 |

+

"tokenizer_class": "CLIPTokenizer",

|

| 29 |

+

"unk_token": "<|endoftext|>"

|

| 30 |

+

}

|
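
The special tokens and `model_max_length` above determine the shape of every prompt the text encoder sees. The sketch below shows the resulting layout on a list of token ids. It does not run the real tokenizer: the function name `pad_ids` and the example ids are hypothetical, and padding to the full length is an assumption about how the pipeline calls the tokenizer.

```python
BOS_ID = 49406   # <|startoftext|>
EOS_ID = 49407   # <|endoftext|>
PAD_ID = 49407   # pad_token is also <|endoftext|> in this config
MAX_LEN = 77     # model_max_length


def pad_ids(token_ids: list) -> list:
    """Wrap ids in BOS/EOS, truncate to MAX_LEN, and pad on the right."""
    body = token_ids[: MAX_LEN - 2]          # leave room for BOS and EOS
    ids = [BOS_ID] + body + [EOS_ID]
    return ids + [PAD_ID] * (MAX_LEN - len(ids))


short = pad_ids([320, 2368])                 # hypothetical ids for a two-token prompt
long = pad_ids(list(range(200)))             # longer than the limit, gets truncated
print(len(short), short[:5])
print(len(long), long[-1])
```

Because the pad token is the same as the end-of-text token, a padded sequence ends in a run of `49407` values; the first one marks the real end of the prompt.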

tokenizer/vocab.json

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|