metadata

title: YoloV3 on PASCAL VOC Dataset From Scratch (Slide for GradCam output)

emoji: 🚀

colorFrom: gray

colorTo: blue

sdk: gradio

sdk_version: 3.39.0

app_file: app.py

pinned: false

license: mit

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

GithubREPO

Training Procedure

- The model is trained on a Tesla T4 GPU (15 GB of GPU memory)

- Training is completed in two phases of 20 epochs each

- In the first phase the loss drops steadily; in the second phase it drops more slowly

- The two training runs are executed separately, and the only validation performed during training is the validation loss

- The model is evaluated afterwards to obtain the numbers in the Accuracy Report

- Lightning saves checkpoints in .ckpt format, so we extract the state dict and save it in plain PyTorch .pth format

- The following code does the conversion

best_model = torch.load(weights_path, map_location='cpu')

torch.save(best_model['state_dict'], 'best_model.pth')

litemodel = YOLOv3(num_classes=num_classes)

litemodel.load_state_dict(torch.load('best_model.pth', map_location='cpu'))

device = "cpu"

torch.save(litemodel.state_dict(), PATH)
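One thing to watch when moving weights out of a Lightning checkpoint: if the LightningModule holds the network in an attribute, every key in the state dict carries that attribute name as a prefix, and loading into a bare model fails on a key mismatch. Whether that applies here depends on how YOLOv3 is defined, which this README does not show. The sketch below is a hypothetical helper (`strip_prefix` and the `model.` prefix are illustrative names, not taken from the repository) that renames keys on a plain dict. The same function should work on a real state dict, since that is also a mapping from parameter names to tensors.

```python
from collections import OrderedDict


def strip_prefix(state_dict, prefix):
    """Return a copy of state_dict with `prefix` removed from matching keys.

    Keys that do not start with the prefix are kept unchanged.
    """
    cleaned = OrderedDict()
    for key, value in state_dict.items():
        new_key = key[len(prefix):] if key.startswith(prefix) else key
        cleaned[new_key] = value
    return cleaned


# Stand-in for checkpoint["state_dict"]; real values would be tensors.
example = OrderedDict([
    ("model.layers.0.conv.weight", "w0"),
    ("model.layers.0.bn.bias", "b0"),
])
print(list(strip_prefix(example, "model.").keys()))
```

If the keys already match the bare model, the helper returns them unchanged, so it is safe to apply unconditionally.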

- The model starts overfitting on the dataset after 30 epochs

- Future Improvements

- Train the model in a single run instead of two separate phases

- Use a larger batch size (basically, earn more money and buy a better GPU)

- Revisit the data transformations, since they play a vital role in the results

- Adjust the OneCycle LR range to find a better learning rate
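To make the last point concrete, here is a rough sketch of the shape of a one-cycle schedule: the learning rate climbs from a low starting value to a peak, then anneals down to a much smaller floor. This is a simplified pure-Python version with cosine interpolation, not a reproduction of `torch.optim.lr_scheduler.OneCycleLR`. The parameter names mirror that scheduler's arguments (`max_lr`, `pct_start`, `div_factor`, `final_div_factor`), but the values in the example are illustrative and are not the ones used in this training run.

```python
import math


def cosine_interp(start, end, fraction):
    """Cosine interpolation from start (fraction=0) to end (fraction=1)."""
    return end + (start - end) * (1 + math.cos(math.pi * fraction)) / 2


def one_cycle_lr(step, total_steps, max_lr, pct_start=0.3,
                 div_factor=25.0, final_div_factor=1e4):
    """Learning rate at `step` (0-based) for a simplified one-cycle policy."""
    initial_lr = max_lr / div_factor          # starting learning rate
    min_lr = initial_lr / final_div_factor    # floor reached at the last step
    warmup_steps = max(1, int(pct_start * total_steps))
    if step < warmup_steps:
        # Rising phase: initial_lr -> max_lr
        return cosine_interp(initial_lr, max_lr, step / warmup_steps)
    # Falling phase: max_lr -> min_lr
    decay_steps = max(1, total_steps - 1 - warmup_steps)
    fraction = min(1.0, (step - warmup_steps) / decay_steps)
    return cosine_interp(max_lr, min_lr, fraction)


# Illustrative values only: 100 steps with a peak of 1e-3.
for step in (0, 15, 30, 65, 99):
    print(step, one_cycle_lr(step, total_steps=100, max_lr=1e-3))
```

Changing `max_lr` moves the peak, while `div_factor` and `final_div_factor` set how far below the peak the schedule starts and ends, which is the range the bullet above refers to.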

Data Transformation

In addition to the transforms listed in the config file, we apply a mosaic transform to 75% of the images
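As a rough illustration of what a mosaic transform does, the sketch below tiles four images into a 2x2 grid and remaps their boxes, applying the transform with probability 0.75 to match the 75% figure above. It is a simplified stand-in, not the transform used in this project: real mosaic implementations usually pick a random centre point and crop, while this version simply halves each image by dropping every other pixel. Images are nested lists, boxes are assumed to be normalized `(x_center, y_center, width, height, class_id)` tuples, and `mosaic_2x2` and `maybe_mosaic` are hypothetical names.

```python
import random


def downsample(image):
    """Halve an image (list of pixel rows) by keeping every other pixel."""
    return [row[::2] for row in image[::2]]


def mosaic_2x2(samples):
    """Tile four (image, boxes) samples of equal size into one sample."""
    assert len(samples) == 4, "mosaic needs exactly four samples"
    tiles = [downsample(img) for img, _ in samples]
    top = [left + right for left, right in zip(tiles[0], tiles[1])]
    bottom = [left + right for left, right in zip(tiles[2], tiles[3])]
    boxes = []
    for index, (_, sample_boxes) in enumerate(samples):
        row, col = divmod(index, 2)  # grid position of this tile
        for x, y, w, h, cls in sample_boxes:
            # Each tile covers half the canvas, so shrink and shift the box.
            boxes.append(((x + col) / 2, (y + row) / 2, w / 2, h / 2, cls))
    return top + bottom, boxes


def maybe_mosaic(samples, p=0.75, rng=random):
    """Apply the mosaic with probability p, else return the first sample."""
    if rng.random() < p:
        return mosaic_2x2(samples)
    return samples[0]
```

Passing a seeded `random.Random` instance as `rng` makes the choice reproducible, which helps when debugging a data pipeline.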

Accuracy Report

Class accuracy is: 82.999725%

No obj accuracy is: 96.828300%

Obj accuracy is: 76.898473%

mAP: 0.29939851760864258

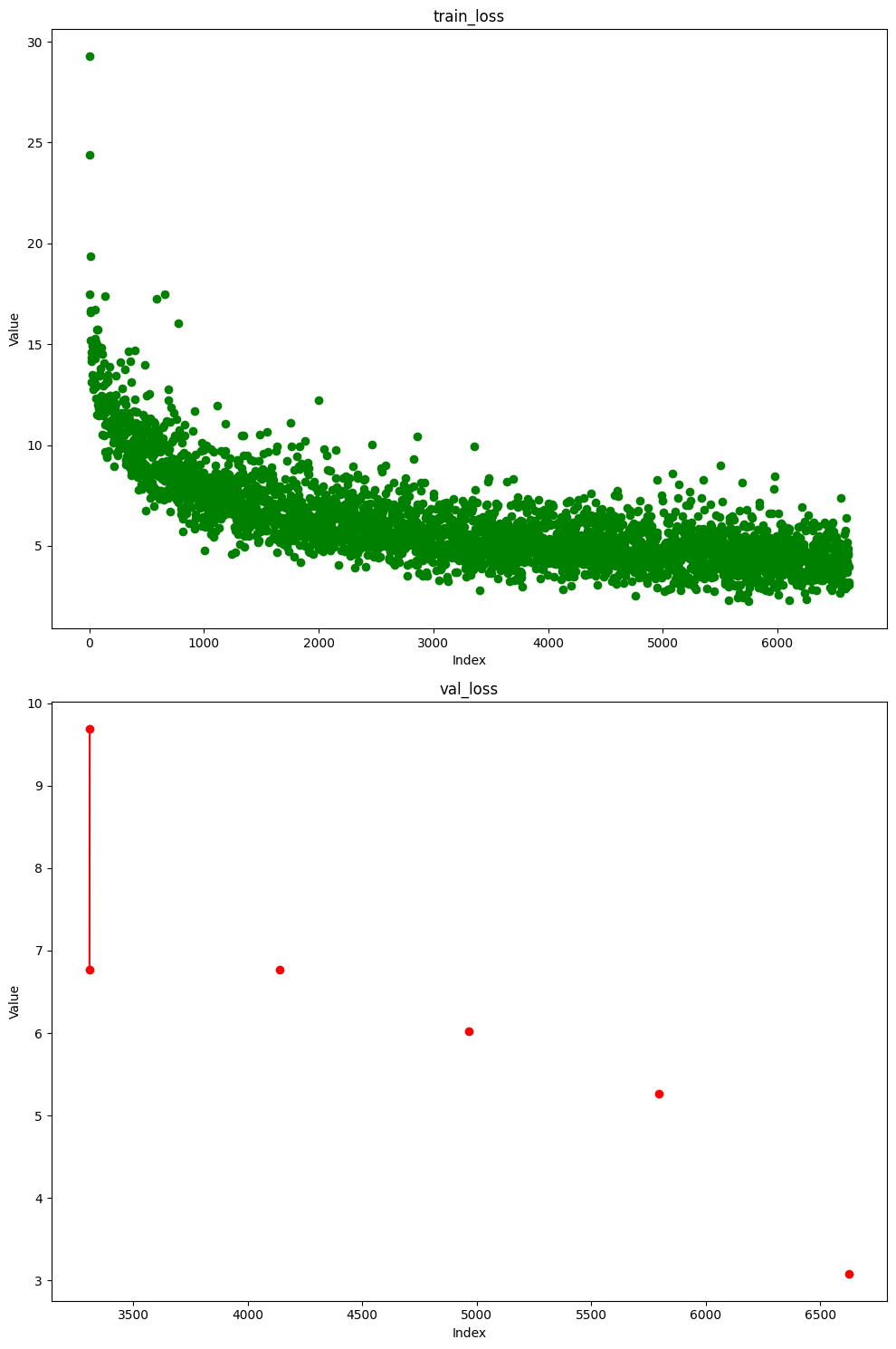
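The three accuracy figures above are not defined in this README. In many YOLOv3 training scripts they are computed per anchor cell: class accuracy over the cells that contain an object, object accuracy as the share of object cells whose predicted objectness exceeds a threshold, and no-object accuracy as the share of empty cells predicted at or below it. The sketch below assumes those definitions and a threshold of 0.5; the `Cell` record and the function name are hypothetical, and the project's own evaluation code may differ.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    has_object: bool   # ground truth: does this anchor cell hold an object?
    true_class: int    # ground-truth class (ignored when has_object is False)
    objectness: float  # predicted objectness probability in [0, 1]
    pred_class: int    # predicted class index


def accuracy_report(cells, threshold=0.5):
    """Return (class_acc, noobj_acc, obj_acc) as percentages."""
    obj_cells = [c for c in cells if c.has_object]
    noobj_cells = [c for c in cells if not c.has_object]

    def pct(hits, total):
        # NaN signals that the category had no cells to score.
        return 100.0 * hits / total if total else float("nan")

    class_hits = sum(c.pred_class == c.true_class for c in obj_cells)
    obj_hits = sum(c.objectness > threshold for c in obj_cells)
    noobj_hits = sum(c.objectness <= threshold for c in noobj_cells)
    return (pct(class_hits, len(obj_cells)),
            pct(noobj_hits, len(noobj_cells)),
            pct(obj_hits, len(obj_cells)))
```

Under these definitions, a high no-object accuracy is expected because empty cells vastly outnumber object cells, so it says little on its own about detection quality.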

Training Logs

To speed up training, validation runs only once during the first 20 epochs (at the end of epoch 20) and then every 5 epochs until epoch 40
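Read literally, the schedule above means validation happens at the end of epochs 20, 25, 30, 35 and 40. A minimal sketch of that rule, assuming 1-based epoch numbers; `should_validate` is a hypothetical helper and not part of the training code.

```python
def should_validate(epoch, first_phase_epochs=20, interval=5, total_epochs=40):
    """Return True if validation runs at the end of this 1-based epoch."""
    if epoch < 1 or epoch > total_epochs:
        return False
    if epoch <= first_phase_epochs:
        # First phase: a single validation pass at its final epoch.
        return epoch == first_phase_epochs
    # Second phase: validate every `interval` epochs.
    return (epoch - first_phase_epochs) % interval == 0


print([epoch for epoch in range(1, 41) if should_validate(epoch)])
```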

| | Unnamed: 0 | lr-Adam | step | train_loss | epoch | val_loss |
|---|---|---|---|---|---|---|
| 6576 | 6576 | NaN | 164299 | 4.186745 | 39.0 | NaN |
| 6577 | 6577 | 0.000132 | 164349 | NaN | NaN | NaN |
| 6578 | 6578 | NaN | 164349 | 2.936086 | 39.0 | NaN |
| 6579 | 6579 | 0.000132 | 164399 | NaN | NaN | NaN |
| 6580 | 6580 | NaN | 164399 | 4.777130 | 39.0 | NaN |
| 6581 | 6581 | 0.000132 | 164449 | NaN | NaN | NaN |
| 6582 | 6582 | NaN | 164449 | 3.139145 | 39.0 | NaN |
| 6583 | 6583 | 0.000132 | 164499 | NaN | NaN | NaN |
| 6584 | 6584 | NaN | 164499 | 4.596097 | 39.0 | NaN |
| 6585 | 6585 | 0.000132 | 164549 | NaN | NaN | NaN |
| 6586 | 6586 | NaN | 164549 | 5.587294 | 39.0 | NaN |
| 6587 | 6587 | 0.000132 | 164599 | NaN | NaN | NaN |
| 6588 | 6588 | NaN | 164599 | 4.592830 | 39.0 | NaN |
| 6589 | 6589 | 0.000132 | 164649 | NaN | NaN | NaN |
| 6590 | 6590 | NaN | 164649 | 3.914468 | 39.0 | NaN |
| 6591 | 6591 | 0.000132 | 164699 | NaN | NaN | NaN |
| 6592 | 6592 | NaN | 164699 | 3.180615 | 39.0 | NaN |
| 6593 | 6593 | 0.000132 | 164749 | NaN | NaN | NaN |
| 6594 | 6594 | NaN | 164749 | 5.772174 | 39.0 | NaN |
| 6595 | 6595 | 0.000132 | 164799 | NaN | NaN | NaN |
| 6596 | 6596 | NaN | 164799 | 2.894014 | 39.0 | NaN |
| 6597 | 6597 | 0.000132 | 164849 | NaN | NaN | NaN |
| 6598 | 6598 | NaN | 164849 | 4.473828 | 39.0 | NaN |
| 6599 | 6599 | 0.000132 | 164899 | NaN | NaN | NaN |
| 6600 | 6600 | NaN | 164899 | 6.397766 | 39.0 | NaN |
| 6601 | 6601 | 0.000132 | 164949 | NaN | NaN | NaN |
| 6602 | 6602 | NaN | 164949 | 3.789242 | 39.0 | NaN |
| 6603 | 6603 | 0.000132 | 164999 | NaN | NaN | NaN |
| 6604 | 6604 | NaN | 164999 | 5.182691 | 39.0 | NaN |
| 6605 | 6605 | 0.000132 | 165049 | NaN | NaN | NaN |
| 6606 | 6606 | NaN | 165049 | 4.845749 | 39.0 | NaN |
| 6607 | 6607 | 0.000132 | 165099 | NaN | NaN | NaN |
| 6608 | 6608 | NaN | 165099 | 3.672542 | 39.0 | NaN |
| 6609 | 6609 | 0.000132 | 165149 | NaN | NaN | NaN |
| 6610 | 6610 | NaN | 165149 | 4.230726 | 39.0 | NaN |
| 6611 | 6611 | 0.000132 | 165199 | NaN | NaN | NaN |
| 6612 | 6612 | NaN | 165199 | 4.625024 | 39.0 | NaN |
| 6613 | 6613 | 0.000132 | 165249 | NaN | NaN | NaN |
| 6614 | 6614 | NaN | 165249 | 4.549682 | 39.0 | NaN |
| 6615 | 6615 | 0.000132 | 165299 | NaN | NaN | NaN |
| 6616 | 6616 | NaN | 165299 | 4.040627 | 39.0 | NaN |
| 6617 | 6617 | 0.000132 | 165349 | NaN | NaN | NaN |
| 6618 | 6618 | NaN | 165349 | 4.857126 | 39.0 | NaN |
| 6619 | 6619 | 0.000132 | 165399 | NaN | NaN | NaN |
| 6620 | 6620 | NaN | 165399 | 3.081895 | 39.0 | NaN |
| 6621 | 6621 | 0.000132 | 165449 | NaN | NaN | NaN |
| 6622 | 6622 | NaN | 165449 | 3.945353 | 39.0 | NaN |
| 6623 | 6623 | 0.000132 | 165499 | NaN | NaN | NaN |
| 6624 | 6624 | NaN | 165499 | 3.203420 | 39.0 | NaN |
| 6625 | 6625 | NaN | 165519 | NaN | 39.0 | 3.081895 |
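The log above looks like a CSV export in which the learning rate and the training loss land on separate rows for the same step, so each row is half empty. Here is a minimal sketch for collapsing such rows by `step`, using only the standard library. The inline CSV text copies three rows from the log, and `merge_by_step` is a hypothetical helper name.

```python
import csv
import io

CSV_TEXT = """lr-Adam,step,train_loss,epoch,val_loss
,164299,4.186745,39.0,
0.000132,164349,,,
,164349,2.936086,39.0,
"""


def merge_by_step(rows):
    """Merge rows sharing a step, keeping the first non-empty value per column."""
    merged = {}
    for row in rows:
        record = merged.setdefault(int(row["step"]), {})
        for column, value in row.items():
            is_missing = value in ("", "NaN")
            if column != "step" and not is_missing and column not in record:
                record[column] = float(value)
    return merged


rows = csv.DictReader(io.StringIO(CSV_TEXT))
for step, record in sorted(merge_by_step(rows).items()):
    print(step, record)
```

After merging, each step has at most one record holding whichever of the learning rate, training loss, epoch and validation loss were logged for it, which is easier to plot than the alternating rows.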