
sashavor committed 1833b56 • Parent(s): ece8ba5

adding stuff

- app.py +50 -4
- images/averagefaces/CEO.png +0 -0
- images/averagefaces/hairdresser.png +0 -0
- images/averagefaces/jailer.png +0 -0
- images/averagefaces/janitor.png +0 -0
- images/averagefaces/manicurist.png +0 -0
- images/averagefaces/postal_worker.png +0 -0
- images/examples/bus driver (SD v2).png +0 -0
- images/examples/carpenter (SD v1.4).png +0 -0
- images/examples/fitness instructor (SD v2).png +0 -0
- images/examples/pilot (Dall-E 2).png +0 -0
- images/examples/scientist (Dall-E 2).png +0 -0
- images/examples/security guard (SD v1.4).png +0 -0
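The example images appear to encode the profession and the generating model in the filename, e.g. `bus driver (SD v2).png` or `pilot (Dall-E 2).png`. Assuming every example follows that `profession (model).png` pattern, a minimal sketch like the one below could split a name into its two parts, for instance to build gallery captions. `parse_example_name` is a hypothetical helper, not something app.py defines.

```python
import os
import re

# Hypothetical helper: assumes names look like "<profession> (<model>).png",
# as in the images/examples files listed above.
EXAMPLE_NAME = re.compile(r"^(?P<profession>.+?) \((?P<model>[^()]+)\)$")


def parse_example_name(filename):
    """Return (profession, model) for an example filename, or None if it doesn't match."""
    stem, _ext = os.path.splitext(os.path.basename(filename))
    match = EXAMPLE_NAME.match(stem)
    if match is None:
        return None
    return match.group("profession"), match.group("model")


for name in ["bus driver (SD v2).png", "carpenter (SD v1.4).png", "CEO.png"]:
    print(name, "->", parse_example_name(name))
```

Names without a parenthesised model, such as the average-face files, simply return `None`.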

app.py

CHANGED

|

@@ -2,9 +2,11 @@ import gradio as gr

|

|

| 2 |

from PIL import Image

|

| 3 |

import os

|

| 4 |

|

| 5 |

-

def

|

| 6 |

-

|

| 7 |

-

|

|

|

|

|

|

|

| 8 |

|

| 9 |

with gr.Blocks() as demo:

|

| 10 |

gr.Markdown("""

|

|

@@ -13,6 +15,9 @@ with gr.Blocks() as demo:

|

|

| 13 |

gr.HTML('''

|

| 14 |

<p style="margin-bottom: 10px; font-size: 94%">This is the demo page for the "Stable Bias" paper, which aims to explore and quantify social biases in text-to-image systems. <br> This work was done by <a href='https://huggingface.co/sasha' style='text-decoration: underline;' target='_blank'> Alexandra Sasha Luccioni (Hugging Face) </a>, <a href='https://huggingface.co/cakiki' style='text-decoration: underline;' target='_blank'> Christopher Akiki (ScaDS.AI, Leipzig University)</a>, <a href='https://huggingface.co/meg' style='text-decoration: underline;' target='_blank'> Margaret Mitchell (Hugging Face) </a> and <a href='https://huggingface.co/yjernite' style='text-decoration: underline;' target='_blank'> Yacine Jernite (Hugging Face) </a> .</p>

|

| 15 |

''')

|

|

|

|

|

|

|

|

|

|

| 16 |

|

| 17 |

gr.HTML('''

|

| 18 |

<p style="margin-bottom: 14px; font-size: 100%"> As AI-enabled Text-to-Image systems are becoming increasingly used, characterizing the social biases they exhibit is a necessary first step to lowering their risk of discriminatory outcomes. <br> We propose a new method for exploring and quantifying social biases in these kinds of systems by directly comparing collections of generated images designed to showcase a system’s variation across social attributes — gender and ethnicity — and target attributes for bias evaluation — professions and gender-coded adjectives. <br> We compare three models: Stable Diffusion v.1.4, Stable Diffusion v.2., and Dall-E 2, and present some of our key findings below:</p>

|

|

@@ -57,5 +62,46 @@ humans have no inherent gender or ethnicity nor do they belong to socially-const

|

|

| 57 |

with gr.Row():

|

| 58 |

gr.HTML('''

|

| 59 |

<p style="margin-bottom: 14px; font-size: 100%"> TO DO: talk about what we see above. <br> Continue exploring the demo on your own to uncover other patterns! </p>''')

|

| 60 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 61 |

demo.launch(debug=True)

|

|

|

|

| 2 |

from PIL import Image

|

| 3 |

import os

|

| 4 |

|

| 5 |

+

def get_images(path):

|

| 6 |

+

images = [Image.open(os.path.join(path,im)) for im in os.listdir(path)]

|

| 7 |

+

paths = os.listdir(path)

|

| 8 |

+

return([(im, path) for im, path in zip(images,paths)])

|

| 9 |

+

|

| 10 |

|

| 11 |

with gr.Blocks() as demo:

|

| 12 |

gr.Markdown("""

|

|

|

|

| 15 |

gr.HTML('''

|

| 16 |

<p style="margin-bottom: 10px; font-size: 94%">This is the demo page for the "Stable Bias" paper, which aims to explore and quantify social biases in text-to-image systems. <br> This work was done by <a href='https://huggingface.co/sasha' style='text-decoration: underline;' target='_blank'> Alexandra Sasha Luccioni (Hugging Face) </a>, <a href='https://huggingface.co/cakiki' style='text-decoration: underline;' target='_blank'> Christopher Akiki (ScaDS.AI, Leipzig University)</a>, <a href='https://huggingface.co/meg' style='text-decoration: underline;' target='_blank'> Margaret Mitchell (Hugging Face) </a> and <a href='https://huggingface.co/yjernite' style='text-decoration: underline;' target='_blank'> Yacine Jernite (Hugging Face) </a> .</p>

|

| 17 |

''')

|

| 18 |

+

examples_path= "images/examples"

|

| 19 |

+

examples_gallery = gr.Gallery(get_images(examples_path),

|

| 20 |

+

label="Example images", show_label=False, elem_id="gallery").style(grid=[1,6], height="auto")

|

| 21 |

|

| 22 |

gr.HTML('''

|

| 23 |

<p style="margin-bottom: 14px; font-size: 100%"> As AI-enabled Text-to-Image systems are becoming increasingly used, characterizing the social biases they exhibit is a necessary first step to lowering their risk of discriminatory outcomes. <br> We propose a new method for exploring and quantifying social biases in these kinds of systems by directly comparing collections of generated images designed to showcase a system’s variation across social attributes — gender and ethnicity — and target attributes for bias evaluation — professions and gender-coded adjectives. <br> We compare three models: Stable Diffusion v.1.4, Stable Diffusion v.2., and Dall-E 2, and present some of our key findings below:</p>

|

|

|

|

| 62 |

with gr.Row():

|

| 63 |

gr.HTML('''

|

| 64 |

<p style="margin-bottom: 14px; font-size: 100%"> TO DO: talk about what we see above. <br> Continue exploring the demo on your own to uncover other patterns! </p>''')

|

| 65 |

+

|

| 66 |

+

with gr.Accordion("Comparing model generations", open=False):

|

| 67 |

+

gr.HTML('''

|

| 68 |

+

<p style="margin-bottom: 14px; font-size: 100%"> One of the goals of our study was allowing users to compare model generations across professions in an open-ended way, uncovering patterns and trends on their own. This is why we created the <a href='https://huggingface.co/spaces/society-ethics/DiffusionBiasExplorer' style='text-decoration: underline;' target='_blank'> Diffusion Bias Explorer </a> and the <a href='https://huggingface.co/spaces/society-ethics/Average_diffusion_faces' style='text-decoration: underline;' target='_blank'> Average Diffusion Faces </a> tools. <br> We show some of their functionalities below: </p> ''')

|

| 69 |

+

with gr.Row():

|

| 70 |

+

with gr.Column():

|

| 71 |

+

impath = "images/biasexplorer"

|

| 72 |

+

biasexplorer_gallery = gr.Gallery([os.path.join(impath,im) for im in os.listdir(impath)],

|

| 73 |

+

label="Bias explorer images", show_label=False, elem_id="gallery").style(grid=2, height="auto")

|

| 74 |

+

with gr.Column():

|

| 75 |

+

gr.HTML('''

|

| 76 |

+

<p style="margin-bottom: 14px; font-size: 100%"> Comparing generations both between two models and within a single model can help uncover trends and patterns that are hard to measure using quantitative approaches. </p>''')

|

| 77 |

+

with gr.Row():

|

| 78 |

+

impath = "images/averagefaces"

|

| 79 |

+

average_gallery = gr.Gallery([os.path.join(impath,im) for im in os.listdir(impath)],

|

| 80 |

+

label="Average Face images", show_label=False, elem_id="gallery").style(grid=3, height="auto")

|

| 81 |

+

gr.HTML('''

|

| 82 |

+

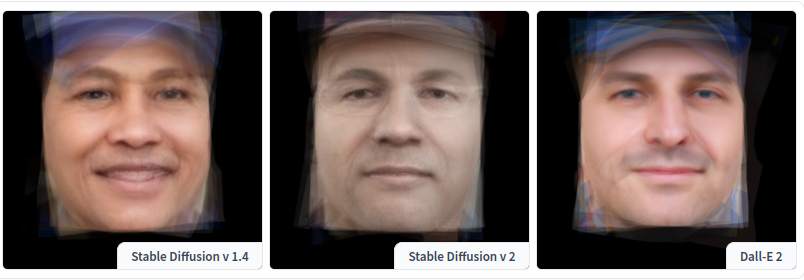

<p style="margin-bottom: 14px; font-size: 100%"> Looking at the average faces for a given profession across multiple models can help see the dominant characteristics of that profession, as well as how much variation there is (based on how fuzzy the image is). </p>''')

|

| 83 |

+

|

| 84 |

+

with gr.Accordion("Exploring the color space of generated images", open=False):

|

| 85 |

+

gr.HTML('''

|

| 86 |

+

<p style="margin-bottom: 14px; font-size: 100%"> TODO Chris </p> ''')

|

| 87 |

+

|

| 88 |

+

with gr.Accordion("Exploring the nearest neighbors of generated images", open=False):

|

| 89 |

+

gr.HTML('''

|

| 90 |

+

<p style="margin-bottom: 14px; font-size: 100%"> TODO Chris </p> ''')

|

| 91 |

+

|

| 92 |

+

gr.Markdown("""

|

| 93 |

+

### All of the tools created as part of this project:

|

| 94 |

+

""")

|

| 95 |

+

gr.HTML('''

|

| 96 |

+

<p style="margin-bottom: 10px; font-size: 94%">

|

| 97 |

+

<a href='https://huggingface.co/spaces/society-ethics/Average_diffusion_faces' style='text-decoration: underline;' target='_blank'> Average Diffusion Faces </a> <br>

|

| 98 |

+

<a href='https://huggingface.co/spaces/society-ethics/DiffusionBiasExplorer' style='text-decoration: underline;' target='_blank'> Diffusion Bias Explorer </a> <br>

|

| 99 |

+

<a href='https://huggingface.co/spaces/society-ethics/DiffusionClustering' style='text-decoration: underline;' target='_blank'> Diffusion Cluster Explorer </a>

|

| 100 |

+

<a href='https://huggingface.co/spaces/society-ethics/DiffusionFaceClustering' style='text-decoration: underline;' target='_blank'> Identity Representation Demo </a>

|

| 101 |

+

<a href='https://huggingface.co/spaces/tti-bias/identities-bovw-knn' style='text-decoration: underline;' target='_blank'> BoVW Nearest Neighbors Explorer </a> <br>

|

| 102 |

+

<a href='https://huggingface.co/spaces/tti-bias/professions-bovw-knn' style='text-decoration: underline;' target='_blank'> BoVW Professions Explorer </a> <br>

|

| 103 |

+

<a href='https://huggingface.co/spaces/tti-bias/identities-colorfulness-knn' style='text-decoration: underline;' target='_blank'> Colorfulness Profession Explorer </a> <br>

|

| 104 |

+

<a href='https://huggingface.co/spaces/tti-bias/professions-colorfulness-knn' style='text-decoration: underline;' target='_blank'> Colorfulness Identities Explorer </a> <br> </p>

|

| 105 |

+

''')

|

| 106 |

+

|

| 107 |

demo.launch(debug=True)
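In the hand-written tools list at the end of app.py, the "Diffusion Cluster Explorer" and "Identity Representation Demo" links have no trailing `<br>`, so they render inline with the link that follows. A minimal sketch, assuming the same inline styles, builds the list from (label, URL) pairs so every entry gets the same separator. `tools_html` and `TOOLS` are hypothetical names, and only a subset of the links is shown.

```python
from html import escape

# Hypothetical helper: renders (label, url) pairs as one <br>-separated paragraph,
# reusing the inline styles that app.py applies to its links.
LINK_STYLE = "text-decoration: underline;"


def tools_html(tools):
    links = [
        f"<a href='{escape(url, quote=True)}' style='{LINK_STYLE}' target='_blank'> {escape(label)} </a>"
        for label, url in tools
    ]
    # Joining with <br> guarantees a separator between every pair of links.
    return '<p style="margin-bottom: 10px; font-size: 94%">' + " <br>\n".join(links) + "</p>"


TOOLS = [
    ("Average Diffusion Faces", "https://huggingface.co/spaces/society-ethics/Average_diffusion_faces"),
    ("Diffusion Bias Explorer", "https://huggingface.co/spaces/society-ethics/DiffusionBiasExplorer"),
    ("Diffusion Cluster Explorer", "https://huggingface.co/spaces/society-ethics/DiffusionClustering"),
    ("Identity Representation Demo", "https://huggingface.co/spaces/society-ethics/DiffusionFaceClustering"),
]

print(tools_html(TOOLS))
```

The returned string could then be passed to `gr.HTML(tools_html(TOOLS))`, keeping labels and URLs in one place.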

images/averagefaces/CEO.png ADDED

images/averagefaces/hairdresser.png ADDED

images/averagefaces/jailer.png ADDED

images/averagefaces/janitor.png ADDED

images/averagefaces/manicurist.png ADDED

images/averagefaces/postal_worker.png ADDED

images/examples/bus driver (SD v2).png ADDED

images/examples/carpenter (SD v1.4).png ADDED

images/examples/fitness instructor (SD v2).png ADDED

images/examples/pilot (Dall-E 2).png ADDED

images/examples/scientist (Dall-E 2).png ADDED

images/examples/security guard (SD v1.4).png ADDED